Chapter 4. Health Probe

The Health Probe pattern indicates how an application can communicate its health state to Kubernetes. To be fully automatable, a cloud native application must be highly observable by allowing its state to be inferred so that Kubernetes can detect whether the application is up and whether it is ready to serve requests. These observations influence the lifecycle management of Pods and the way traffic is routed to the application.

Problem

Kubernetes regularly checks the container process status and restarts it if issues are detected. However, from practice, we know that checking the process status is not sufficient to determine the health of an application. In many cases, an application hangs, but its process is still up and running. For example, a Java application may throw an OutOfMemoryError and still have the JVM process running. Alternatively, an application may freeze because it runs into an infinite loop, deadlock, or some thrashing (cache, heap, process). To detect these kinds of situations, Kubernetes needs a reliable way to check the health of applications—that is, not to understand how an application works internally, but to check whether the application is functioning as expected and capable of serving consumers.

Solution

The software industry has accepted the fact that it is not possible to write bug-free code. Moreover, the chances for failure increase even more when working with distributed applications. As a result, the focus for dealing with failures has shifted from avoiding them to detecting faults and recovering. Detecting failure is not a simple task that can be performed uniformly for all applications, as everyone has different definitions of a failure. Also, various types of failures require different corrective actions. Transient failures may self-recover, given enough time, and some other failures may need a restart of the application. Let’s look at the checks Kubernetes uses to detect and correct failures.

Process Health Checks

A process health check is the simplest health check the Kubelet constantly performs on the container processes. If the container processes are not running, the container is restarted on the node to which the Pod is assigned. So even without any other health checks, the application becomes slightly more robust with this generic check. If your application is capable of detecting any kind of failure and shutting itself down, the process health check is all you need. However, for most cases, that is not enough, and other types of health checks are also necessary.

Liveness Probes

If your application runs into a deadlock, it is still considered healthy from the process health check’s point of view. To detect this kind of issue, and any other type of failure defined by your application’s business logic, Kubernetes has liveness probes: regular checks performed by the Kubelet agent that ask your container to confirm it is still healthy. It is important to have the health check performed from the outside rather than in the application itself, as some failures may prevent the application watchdog from reporting its failure. Regarding corrective action, this health check is similar to a process health check: if a failure is detected, the container is restarted. However, it offers more flexibility regarding which methods to use for checking the application health, as follows:

- HTTP probe: Performs an HTTP GET request to the container IP address and expects a successful HTTP response code between 200 and 399.
- TCP Socket probe: Assumes a successful TCP connection.
- Exec probe: Executes an arbitrary command in the container’s user and kernel namespace and expects a successful exit code (0).
- gRPC probe: Leverages gRPC’s intrinsic support for health checks.
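Each of these methods corresponds to one field in a probe definition. The fragment below is only a sketch to show the four variants side by side: the Pod name, container names, images, ports, paths, and the exec command are all hypothetical, and a real container would normally use just one of these actions for its liveness probe.

```yaml
# Sketch only: every name, image, port, and path here is an illustrative assumption.
apiVersion: v1
kind: Pod
metadata:
  name: probe-actions-sketch
spec:
  containers:
  - name: http-probed
    image: example.com/http-app:1.0        # hypothetical image
    livenessProbe:
      httpGet:                             # passes on a response code from 200 to 399
        path: /healthz
        port: 8080
  - name: tcp-probed
    image: example.com/tcp-app:1.0         # hypothetical image
    livenessProbe:
      tcpSocket:                           # passes if a TCP connection can be opened
        port: 5432
  - name: exec-probed
    image: example.com/worker:1.0          # hypothetical image
    livenessProbe:
      exec:                                # passes if the command exits with code 0
        command: ["cat", "/tmp/healthy"]
  - name: grpc-probed
    image: example.com/grpc-app:1.0        # hypothetical image
    livenessProbe:
      grpc:                                # application must implement the gRPC health checking protocol
        port: 9090
```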

In addition to the probe action, the health check behavior can be influenced with the following parameters:

- initialDelaySeconds: Specifies the number of seconds to wait until the first liveness probe is checked.
- periodSeconds: The interval in seconds between liveness probe checks.
- timeoutSeconds: The maximum time allowed for a probe check to return before it is considered to have failed.
- failureThreshold: Specifies how many times a probe check needs to fail in a row until the container is considered to be unhealthy and needs to be restarted.
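As a rough illustration of how these parameters combine, the following fragment sets all four on a single liveness probe. The endpoint and the 30-second initial delay are example values chosen for this sketch; the values shown for periodSeconds, timeoutSeconds, and failureThreshold happen to be the Kubernetes defaults, written out explicitly.

```yaml
# Fragment of a container spec; endpoint and values are illustrative assumptions.
livenessProbe:
  httpGet:
    path: /actuator/health
    port: 8080
  initialDelaySeconds: 30   # wait 30 s after container start before the first check
  periodSeconds: 10         # then check every 10 s
  timeoutSeconds: 1         # a check that takes longer than 1 s counts as failed
  failureThreshold: 3       # restart the container after 3 consecutive failures
```

With these values, a container that stops responding is restarted after three consecutive failed checks, that is, roughly 20 to 30 seconds after it hangs, depending on where in the 10-second cycle the failure begins.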

An example HTTP-based liveness probe is shown in Example 4-1.

Example 4-1. Container with a liveness probe

    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-with-liveness-check
    spec:
      containers:
      - image: k8spatterns/random-generator:1.0
        name: random-generator
        env:
        - name: DELAY_STARTUP
          value: "20"
        ports:
        - containerPort: 8080
          protocol: TCP
        livenessProbe:
          httpGet:
            path: /actuator/health
            port: 8080
          initialDelaySeconds: 30

Depending on the nature of your application, you can choose the method that is most suitable for you. It is up to your application to decide whether it considers itself healthy or not. However, keep in mind that the result of not passing a health check is that your container will restart. If restarting your container does not help, there is no benefit to having a failing health check as Kubernetes restarts your container without fixing the underlying issue.

Readiness Probes

Liveness checks help keep applications healthy by killing unhealthy containers and replacing them with new ones. But sometimes, when a container is not healthy, restarting it may not help. A typical example is a container that is still starting up and is not ready to handle any requests. Another example is an application that is still waiting for a dependency like a database to be available. Also, a container can be overloaded, increasing its latency, so you want it to shield itself from the additional load for a while and indicate that it is not ready until the load decreases.

For this kind of scenario, Kubernetes has readiness probes. The methods (HTTP, TCP, Exec, gRPC) and timing options for performing readiness checks are the same as for liveness checks, but the corrective action is different. Rather than restarting the container, a failed readiness probe causes the container to be removed from the service endpoint so that it does not receive any new traffic. Readiness probes signal when a container is ready so that it has some time to warm up before getting hit with requests from the service. They are also useful for shielding the container from traffic at later stages, as readiness probes are performed regularly, similarly to liveness checks. Example 4-2 shows how a readiness probe can be implemented by probing the existence of a file the application creates when it is ready for operations.

Example 4-2. Container with readiness probe

    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-with-readiness-check
    spec:
      containers:
      - image: k8spatterns/random-generator:1.0
        name: random-generator
        readinessProbe:
          exec:
            command: ["stat", "/var/run/random-generator-ready"]

Again, it is up to your implementation of the health check to decide when your application is ready to do its job and when it should be left alone. While process health checks and liveness checks are intended to recover from the failure by restarting the container, the readiness check buys time for your application and expects it to recover by itself. Keep in mind that when a container is shutting down, Kubernetes tries to stop it from receiving new requests once it has received a SIGTERM signal, regardless of whether the readiness check still passes.

In many cases, liveness and readiness probes are performing the same checks. However, the presence of a readiness probe gives your container time to start up. Only by passing the readiness check is a Deployment considered to be successful, so that, for example, Pods with an older version can be terminated as part of a rolling update.
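To make the difference in corrective action concrete, here is a minimal sketch that puts both probes on the same container. It simply combines the probe definitions from Example 4-1 and Example 4-2; the Pod name is a hypothetical one chosen for this sketch.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-with-liveness-and-readiness   # hypothetical name
spec:
  containers:
  - image: k8spatterns/random-generator:1.0
    name: random-generator
    livenessProbe:              # on failure: the container is restarted
      httpGet:
        path: /actuator/health
        port: 8080
      initialDelaySeconds: 30
    readinessProbe:             # on failure: no restart, but no new traffic from the service
      exec:
        command: ["stat", "/var/run/random-generator-ready"]
```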

For applications that need a very long time to initialize, it’s likely that failing liveness checks will cause your container to be restarted before the startup is finished. To prevent these unwanted shutdowns, you can use startup probes to indicate when the startup is finished.

Startup Probes

Liveness probes can also be used exclusively to allow for long startup times by stretching the check intervals, increasing the number of retries, and adding a longer delay for the initial liveness probe check. This strategy, however, is not optimal since these timing parameters will also apply for the post-startup phase and will prevent your application from quickly restarting when fatal errors occur.
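The drawback is easier to see with numbers. The fragment below sketches a liveness probe stretched to tolerate a startup of about 15 minutes; the endpoint and all values are illustrative assumptions.

```yaml
# Fragment of a container spec: a liveness probe stretched to cover a slow startup.
livenessProbe:
  httpGet:
    path: /health
    port: 9990
  initialDelaySeconds: 60   # first check after 60 s
  periodSeconds: 60         # one check per minute
  failureThreshold: 15      # tolerate 15 consecutive failures before restarting
```

The same thresholds stay in force after startup, so an application that deadlocks in normal operation would also need 15 consecutive failed checks, about a quarter of an hour, before it is restarted.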

When applications take minutes to start (for example, Jakarta EE application servers), Kubernetes provides startup probes.

Startup probes are configured with the same format as liveness probes but allow for different values for the probe action and the timing parameters. The periodSeconds and failureThreshold parameters are configured with much larger values compared to the corresponding liveness probes to factor in the longer application startup.

Liveness and readiness probes are called only after the startup probe reports success.

The container is restarted if the startup probe is not successful within the configured failure threshold.

While the same probe action can be used for liveness and startup probes, a successful startup is often indicated by a marker file that is checked for existence by the startup probe.

Example 4-4 is a typical example of a Jakarta EE application server that takes a long time to start.

Example 4-4. Container with a startup and liveness probe

    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-with-startup-check
    spec:
      containers:
      - image: quay.io/wildfly/wildfly
        name: wildfly
        startupProbe:
          exec:
            command: ["stat", "/opt/jboss/wildfly/standalone/tmp/startup-marker"]
          initialDelaySeconds: 60
          periodSeconds: 60
          failureThreshold: 15
        livenessProbe:
          httpGet:
            path: /health
            port: 9990
          periodSeconds: 10
          failureThreshold: 3

- image: JBoss WildFly Jakarta EE server that will take its time to start.
- startupProbe command: Marker file that is created by WildFly after a successful startup.
- startupProbe timing: Timing parameters that specify that the container should be restarted when it has not passed the startup probe after 15 minutes (a 60-second pause until the first check, then a maximum of 15 checks at 60-second intervals).
- livenessProbe timing: Timing parameters for the liveness probe are much smaller, resulting in a restart if subsequent liveness probes fail within 20 seconds (three retries with 10-second pauses between each).

The liveness, readiness, and startup probes are fundamental building blocks of the automation of cloud native applications. Application frameworks such as Quarkus SmallRye Health, Spring Boot Actuator, WildFly Swarm health check, Apache Karaf health check, or the MicroProfile spec for Java provide implementations for offering health probes.

Discussion

To be fully automatable, cloud native applications must be highly observable by providing a means for the managing platform to read and interpret the application health, and if necessary, take corrective actions. Health checks play a fundamental role in the automation of activities such as deployment, self-healing, scaling, and others. However, there are also other means through which your application can provide more visibility about its health.

The obvious and long-established method for this purpose is logging. It is a good practice for containers to log any significant events to standard output and standard error and to have these logs collected in a central location for further analysis. Logs are not typically used for taking automated actions but rather to raise alerts and prompt further investigation. A more useful aspect of logs is the postmortem analysis of failures and the detection of otherwise unnoticeable errors.

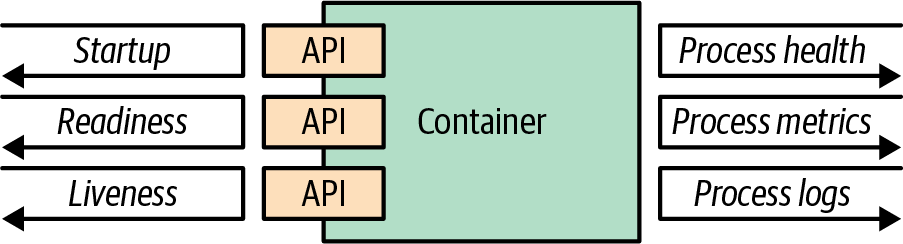

Apart from logging to standard streams, it is also a good practice to log the reason for exiting a container to /dev/termination-log. This location is the place where the container can state its last will before being permanently vanished.1 Figure 4-1 shows the possible options for how a container can communicate with the runtime platform.

Figure 4-1. Container observability options
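As a minimal sketch of the termination log in use, the Pod below writes a reason to /dev/termination-log and then exits with an error. The Pod name, container name, image, and message are assumptions made for this sketch; terminationMessagePath and terminationMessagePolicy are shown with their default values for clarity.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: termination-message-sketch        # hypothetical name
spec:
  restartPolicy: Never
  containers:
  - name: failing-task                    # hypothetical container
    image: busybox:1.36                   # assumed image providing a shell
    # Write the exit reason to the termination log, then fail.
    command: ["sh", "-c", "echo 'cannot reach database' > /dev/termination-log; exit 1"]
    terminationMessagePath: /dev/termination-log   # default location
    terminationMessagePolicy: File                 # default; FallbackToLogsOnError is the alternative
```

After the container exits, the message is reported as part of the container’s terminated state in the Pod status, which `kubectl describe pod` displays.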

Containers provide a unified way for packaging and running applications by treating them like opaque systems. However, any container that is aiming to become a cloud native citizen must provide APIs for the runtime environment to observe the container health and act accordingly. This support is a fundamental prerequisite for automation of the container updates and lifecycle in a unified way, which in turn improves the system’s resilience and user experience. In practical terms, that means, as a very minimum, your containerized application must provide APIs for the different kinds of health checks (liveness and readiness).

Even-better-behaving applications must also provide other means for the managing platform to observe the state of the containerized application by integrating with tracing and metrics-gathering libraries such as OpenTracing or Prometheus. Treat your application as an opaque system, but implement all the necessary APIs to help the platform observe and manage your application in the best way possible.

The next pattern, Managed Lifecycle, is also about communication between applications and the Kubernetes management layer, but coming from the other direction. It’s about how your application gets informed about important Pod lifecycle events.

¹ Alternatively, you could change the .spec.containers.terminationMessagePolicy field of a Pod to FallbackToLogsOnError, in which case the last line of the log is used for the Pod’s status message when it terminates.
