Chapter 1. Chaos Engineering Distilled

Want your system to be able to deal with the knocks and shakes of life in production? Want to find out where the weaknesses are in your infrastructure, platforms, applications, and even people, policies, practices, and playbooks before you’re in the middle of a full-scale outage? Want to adopt a practice where you proactively explore weaknesses in your system before your users complain? Welcome to chaos engineering.

Chaos engineering is an exciting discipline whose goal is to surface evidence of weaknesses in a system before those weaknesses become critical issues. Through tests, you experiment with your system to gain useful insights into how your system will respond to the types of turbulent conditions that happen in production.

This chapter takes you on a tour of what chaos engineering is, and what it isn’t, to get you in the right mind-set to use the techniques and tools that are the main feature of the rest of the book.

Chaos Engineering Defined

According to the Principles of Chaos Engineering:

Chaos Engineering is the discipline of experimenting on a system in order to build confidence in the system’s capability to withstand turbulent conditions in production.

Users of a system want it to be reliable. Many factors can affect reliability (see “Locations of Dark Debt”), and as chaos engineers we are able to focus on establishing evidence of how resilient our systems are in the face of these unexpected, but inevitable, conditions.

Chaos engineering’s sole purpose is to provide evidence of system weaknesses. Through scientific chaos engineering experiments, you can test for evidence of weaknesses in your system—sometimes called dark debt—that provides insights into how your system might respond to turbulent, production-like conditions.

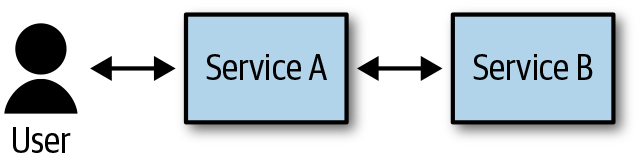

Take an example where you have two services that communicate with each other. In Figure 1-1, Service A is dependent on Service B.

Figure 1-1. A simple two-service system

What should happen if Service B dies? What will happen to Service A if Service B starts to respond slowly? What happens if Service B comes back after going away for a period of time? What happens if the connection between Service A and B becomes increasingly busy? What happens if the CPU that is being used by Service B is maxed out? And most importantly, what does this all mean to the user?

You might believe you’ve designed the services and the infrastructure perfectly to accommodate all of these cases, but how do you know? Even in such a simple system it is likely there might be some surprises—some dark debt—present. Chaos engineering provides a way of exploring these uncertainties to find out whether your assumptions of the system’s resiliency hold water in the real world.
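To make these questions concrete, here is a minimal sketch of the two-service system as an in-memory simulation. Everything in it is an assumption made for illustration: the `ServiceA` and `ServiceB` classes, the `timeout_s` budget, and the cached fallback are hypothetical and are not taken from any real system or tool. The point is only that each "what if" question becomes a condition you can switch on and observe.

```python
from dataclasses import dataclass
from typing import Optional


class ServiceBUnavailable(Exception):
    """Raised when the simulated Service B cannot be reached."""


@dataclass
class ServiceB:
    # Hypothetical stand-in for the real dependency.
    alive: bool = True
    latency_s: float = 0.01  # simulated response time; nothing actually sleeps

    def fetch(self, timeout_s: float) -> str:
        if not self.alive:
            raise ServiceBUnavailable("connection refused")
        if self.latency_s > timeout_s:
            raise TimeoutError("Service B did not answer in time")
        return "fresh data from B"


@dataclass
class ServiceA:
    dependency: ServiceB
    timeout_s: float = 0.5  # assumed time budget for calls to Service B
    cached: Optional[str] = "stale data from cache"

    def handle_request(self) -> str:
        """Serve the user, falling back to cached data if B is dead or slow."""
        try:
            return self.dependency.fetch(self.timeout_s)
        except (ServiceBUnavailable, TimeoutError):
            if self.cached is not None:
                return self.cached
            raise  # no fallback available: the failure reaches the user
```

Setting `alive = False` or raising `latency_s` above the timeout plays the role of the turbulent condition. Whether the user sees stale data or an error is exactly the kind of assumption an experiment is meant to check.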

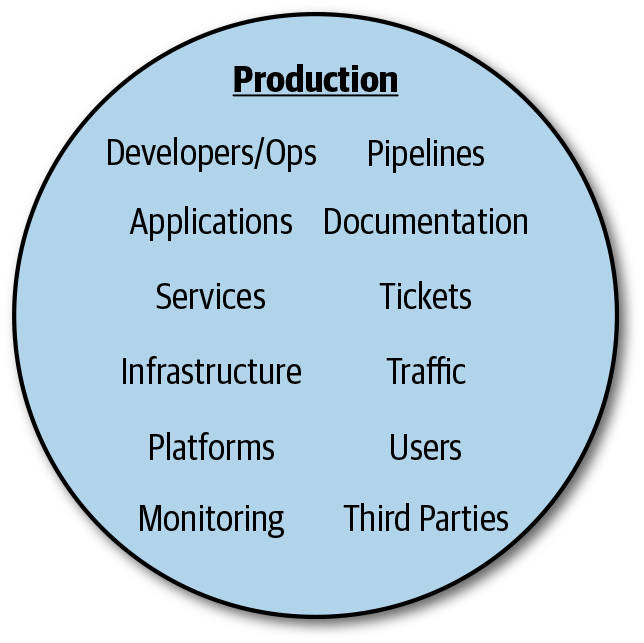

Chaos Engineering Addresses the Whole Sociotechnical System

Chaos engineering doesn’t just deal with the technical aspects of your software system; it also encourages exploration across the whole sociotechnical system (Figure 1-2).

Figure 1-2. Just some of the aspects involved in the entire sociotechnical system

To your organization, chaos engineering is about risk mitigation. A system outage can mean a huge loss of revenue. Even an internal system failing can mean people can’t get their jobs done, which is another form of production outage. Chaos engineering helps you explore the conditions of those outages before they happen, giving you a chance to overcome those weaknesses before they hit your organization’s bottom line.

The reality is that failure in production is “SNAFU.”1 It’s only in production that your software comes under the most hostile real-world stresses, and no amount of preplanning can completely avoid an outage. Chaos engineering takes a different approach. Instead of trying to avoid failure, chaos engineering embraces it.

As a chaos engineer, you build experiments that proactively establish trust and confidence in the resilience of your whole system in production by exploring failure in everything from the infrastructure to the people, processes, and practices involved in keeping the system running and evolving (hint: that’s everyone!).

Locations of Dark Debt

Dark debt can be present anywhere in a system, but the original chaos engineering tools tended to focus on a system’s infrastructure. Netflix’s Chaos Monkey, recognized as the first tool in the space, focuses on providing the capability to explore how a system would respond to the death of Amazon Web Services (AWS) EC2 virtual machines in a controlled and random way. Infrastructure, though, is not the only place where dark debt may be.

There are three further broad areas of failure to be considered when exploring your system’s potential weaknesses:

- Platform
- Applications
- People, practices, and processes

The infrastructure level encompasses the hardware, your virtual machines, your cloud provider’s Infrastructure-as-a-Service (IaaS) features, and the network. The platform level usually incorporates systems such as Kubernetes that work at a higher level of abstraction than infrastructure. Your own code inhabits the application level. Finally, we complete the sociotechnical system that is production by including the people, practices, and processes that work on it.

Dark debt may affect one or more of these areas, in isolation or as a compound effect. This is why you, as a chaos engineer, will consider all of these areas when looking to surface evidence of dark debt across the whole sociotechnical system.

The Process of Chaos Engineering

Chaos engineering begins by asking the question, “Do we know what the system might do in this case?” (Figure 1-3). This question could be prompted by a previous incident or might simply spring from the responsible team’s worries about one or more cases. Once the question has been asked and is understood to be an important risk to explore (see Chapter 2), the process of chaos engineering can begin.

Figure 1-3. The process of chaos engineering

Starting with your question, you then formulate a hypothesis as the basis for a chaos engineering Game Day or automated chaos experiment (more on this in the next section). The outcomes of those Game Days and chaos experiments will be a collection of observations that provide evidence that one or more weaknesses exist and should be considered as candidates for improvements.
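One way to picture the step from question to hypothesis to observations is as a small record that you fill in during a Game Day or an automated run. The sketch below is illustrative only: the `Hypothesis` and `ExperimentRecord` names, the single numeric metric, and the `tolerance_max` threshold are assumptions, not a format prescribed by this book or by any particular tool.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Hypothesis:
    question: str         # "Do we know what the system might do in this case?"
    expectation: str      # what the team believes will happen
    metric: str           # hypothetical metric used to judge the outcome
    tolerance_max: float  # largest value still counted as normal

    def holds(self, observed: float) -> bool:
        return observed <= self.tolerance_max


@dataclass
class ExperimentRecord:
    hypothesis: Hypothesis
    observations: List[float] = field(default_factory=list)

    def observe(self, value: float) -> None:
        self.observations.append(value)

    def weaknesses(self) -> List[str]:
        """Observations that contradict the hypothesis: candidates for improvement."""
        h = self.hypothesis
        return [
            f"{h.metric}={value} exceeded {h.tolerance_max}"
            for value in self.observations
            if not h.holds(value)
        ]
```

An empty list from `weaknesses()` does not prove the system is resilient; it only means this experiment found no evidence against the hypothesis.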

The Practices of Chaos Engineering

Chaos engineering most often starts by defining an experiment that can be run manually by the teams and supported by a chaos engineer. These manual chaos experiments are executed as a Game Day (see Chapter 3) where all the responsible teams and any interested parties can gather to assess how a failure is dealt with “in production” (in fact, when an organization is new to chaos engineering, Game Day experiments are more often executed against a safe staging environment rather than directly in production).

The advantage of Game Days is that they provide a low-technology-cost way to get started with chaos engineering. In terms of time and effort, however, Game Days represent a larger investment from the teams and quickly become unscalable when chaos engineering is done continuously.

You’ll want to run chaos experiments as frequently as possible because production is continuously changing, not least through new software deployments and changing user behavior (see Chapter 12). Throw in the fluidity of production running in the cloud, and failure-inducing conditions change from minute to minute, if not second to second! Production conditions aren’t called “turbulent” in the definition of chaos engineering for nothing!

Automated chaos engineering experiments come to the rescue here (see Chapter 5). Using your tool of choice, you can carefully automate your chaos experiments so that they can be executed with minimal, or even no, manual intervention. That means you can run them as frequently as you like, and the teams can get on with other work, such as dreaming up new areas of concern for new chaos experiments or even developing and delivering new features.
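As a rough sketch of what "automated" can mean, the function below runs one experiment without human intervention: it checks that the system looks normal, injects a turbulent condition, checks again, and always rolls back. The `run_experiment` function and its three callables are hypothetical names chosen for this example; real tools have their own experiment formats and far more safeguards.

```python
from typing import Callable, Dict


def run_experiment(
    steady_state: Callable[[], bool],
    inject: Callable[[], None],
    rollback: Callable[[], None],
) -> Dict[str, object]:
    """Run one automated chaos experiment and report what it found."""
    # If the system is already unhealthy, do not add turbulence to it.
    if not steady_state():
        return {"status": "aborted", "weakness_found": False}
    try:
        inject()
        still_ok = steady_state()
    finally:
        # Always try to undo the injected condition, even if a check raised.
        rollback()
    return {"status": "completed", "weakness_found": not still_ok}
```

Because the function takes no interactive input, it could be called from a scheduler or a delivery pipeline as often as you like, which is the property that manual Game Days lack.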

Sandbox/Staging or Production?

When an organization is in its early stages of maturity in adopting chaos engineering, the temptation to execute experiments against safer, isolated sandbox or staging environments will be strong. Such an approach is not “wrong,” but it is worth being aware of the trade-offs.

When considering whether an experiment can be executed in production, it is a good idea to limit its effect—called its Blast Radius—as much as possible so as to try to avoid causing a real production incident.3 The important point is that, regardless of the size of an experiment’s Blast Radius, it will not be completely safe. And in fact, it shouldn’t be.

Your chaos experiments are attempts to discover and surface new weaknesses. While it’s wise to limit the potential known impact of an experiment, the point is still to empirically build trust and confidence that there isn’t a weakness, and to do that you are deliberately taking a controlled risk that a weakness—even a big one—may be found.

A good practice is to start with a small–Blast Radius experiment somewhere safer, such as staging, and then grow its Blast Radius until you are confident the experiment has found no weaknesses in that environment. Then you dial back the Blast Radius again as you move the experiment to production so that you can begin to discover weaknesses there instead.
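The idea of growing a Blast Radius step by step can be sketched as follows. This is an illustration under stated assumptions: the Blast Radius is modeled as a fraction of instances, `pick_targets` and `grow_blast_radius` are hypothetical helpers, and the `experiment` callable is assumed to return `True` when it surfaces a weakness.

```python
import math
from typing import Callable, List, Optional, Sequence


def pick_targets(instances: Sequence[str], fraction: float) -> List[str]:
    """Select the share of instances an experiment is allowed to touch."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in the range (0, 1]")
    count = max(1, math.ceil(len(instances) * fraction))
    return list(instances[:count])


def grow_blast_radius(
    instances: Sequence[str],
    fractions: Sequence[float],
    experiment: Callable[[List[str]], bool],
) -> Optional[float]:
    """Try each fraction in order and stop at the first that finds a weakness.

    Returns that fraction, or None if no step surfaced a weakness.
    """
    for fraction in fractions:
        if experiment(pick_targets(instances, fraction)):
            return fraction
    return None
```

In staging you might pass a sequence such as `[0.1, 0.5, 1.0]`; when the same experiment moves to production, you would start again from the smallest fraction.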

Running a Game Day or an automated chaos experiment in staging or some other safe(r) environment has the upside of not interrupting the experience of the system’s real users should the experiment get out of control, but it has the downside of not discovering real evidence of the weakness being present in production. Evidence from production gives a chaos experiment’s findings their unignorable power to encourage improvements in the system’s resilience, and that power can be lost when a weakness is found “just in staging.”

Production Brings Learning Leverage

I try to encourage organizations to at least consider how they might, in as safe a way as possible, eventually graduate their experiments into production because of the need to make the findings from the chaos experiments as unignorable as possible. After all, in production everyone can hear you scream…

Chaos Engineering and Observability

While any system can benefit right away from applying chaos engineering in small ways, there is at least one important system property that your chaos engineering experiments will rely upon almost immediately: observability.

Charity Majors describes observability as a running system’s ability to be debugged. The ability to comprehend, interrogate, probe, and ask questions of a system while it is running is at the heart of this debuggability.

Chaos engineering—particularly automated chaos experiments—encourages and relies on the observability of the system so that you’re able to detect the evidence of your system’s reactions to the turbulent conditions caused by your experiments. Even if you do not have good system observability when you begin to adopt chaos engineering, you will quickly see the value and need for system debuggability in production. Thus, chaos engineering and observability frequently go hand in hand with each other, chaos engineering being one forcing factor to improve your system’s observability.

Is There a “Chaos Engineer”?

Chaos engineering is a technique that everyone in the team responsible for software in production will find useful. Just as writing tests is something everyone is responsible for, so thinking about experiments and conducting Game Days and writing automated chaos experiments is a job best done as part of the regular, day-to-day work of everyone in the team. In this way, everyone is a chaos engineer, and it is more of an additional skill than a full-time role.

Some large companies, like Netflix, do employ full-time chaos engineers—but their jobs are not quite what you’d expect. These individuals work with the software-owning teams to support them by doing chaos engineering through workshops, ideation, and tooling. Sometimes they also coordinate larger chaos experiments across multiple teams. What they don’t do is attack other people’s systems with surprise chaos experiments of their own. That wouldn’t be science; that would be sadism!

So while chaos engineering is a discipline that everyone can learn with practice, your company may have a dedicated set of chaos engineers supporting the teams, and even a dedicated resilience engineering group. The most important thing is that everyone is aware of, and has bought into, chaos experiments and has an opportunity to learn from their findings.

Summary

The goal of this chapter was to distill as much of the discipline of chaos engineering as possible so that you will be able to successfully begin creating, running, and learning from your own chaos engineering experiments. You’ve learned what chaos engineering is useful for and how to think like a chaos engineer.

You’ve also gotten an overview of the practices and techniques that comprise chaos engineering in Game Days and automated chaos experiments. Finally, you’ve learned how the role of chaos engineer successfully works within and alongside teams.

That’s enough about what chaos engineering is—now it’s time to take your first steps towards applying the discipline by learning how to source, capture, and prioritize your first set of chaos engineering experiment hypotheses.

1 Here I mean “Situation Normal,” but the full acronym also applies!

2 See the preface in Site Reliability Engineering, edited by Niall Richard Murphy et al. (O’Reilly), for the full story of how Margaret saved the Apollo moon landing. Once you’ve read it you’ll see why I argue that Margaret Hamilton was a great resilience engineer, and that Margaret’s daughter Lauren should be called the “World’s First Chaos Engineer!” Tip for parents: the story is also told in the wonderful book, Margaret and the Moon, by Dean Robbins (Knopf Books for Young Readers).

3 Adrian Hornsby, Senior Technology Evangelist at AWS, said at a talk that “Chaos Engineers should obsess about blast radius.”
