It’s one thing to create a language that prevents you from shooting yourself in the foot; it’s quite another to create one that prevents others from shooting you in the foot.

Encapsulation is a technique for hiding data and behavior within a class; it’s an important part of object-oriented design. It helps you write clean, modular software. In most languages, however, the visibility of data items is simply part of the relationship between the programmer and the compiler. It’s a matter of semantics, not an assertion about the actual security of the data in the context of the running program’s environment.

When Bjarne Stroustrup chose the keyword private to designate hidden members of classes in C++, he was probably thinking about shielding you from the messy details of a class developer’s code, not the issues of shielding that developer’s classes and objects from the onslaught of someone else’s viruses and Trojan horses. Arbitrary casting and pointer arithmetic in C or C++ make it trivial to violate access permissions on classes without breaking the rules of the language.

Consider the following code:

    // C++ code
    #include <cstdio>

    class Finances {
      private:
        char creditCardNumber[16];
        ...
    };

    int main( ) {
        Finances finances;
        // Forge a pointer to peek inside the class
        char *cardno = (char *)&finances;
        // The array has no room for a terminator, so print at most 16 characters
        printf("Card Number = %.16s\n", cardno);
    }

In this little C++ drama, we have written some code that violates the encapsulation of the Finances class and pulls out some secret information. This sort of shenanigan, abusing an untyped pointer, is not possible in Java. If this example seems unrealistic, consider how important it is to protect the foundation (system) classes of the runtime environment from similar kinds of attacks. If untrusted code can corrupt the components that provide access to real resources, such as the filesystem, the network, or the windowing system, it certainly has a chance at stealing your credit card numbers.
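For contrast, here is a minimal Java sketch of the same attempt. The class name FinancesDemo, the helper tryToPeek, and the placeholder digits are hypothetical, chosen only for illustration. Casting a Finances reference directly to char[] does not even compile; routing the reference through Object gets past the compiler, but the cast is then checked when it runs and fails.

```java
public class FinancesDemo {
    // Hypothetical stand-in for the C++ Finances class; the digits are a placeholder.
    static class Finances {
        private final char[] creditCardNumber = "0000000000000000".toCharArray();
    }

    // Tries to reinterpret an arbitrary object as a char[], the way the
    // C++ example reinterprets the address of a Finances object.
    static String tryToPeek(Object target) {
        try {
            // Writing (char[]) on a Finances-typed variable would be a compile-time error.
            // Going through Object compiles, but the cast is checked at run time.
            char[] cardno = (char[]) target;
            return new String(cardno);
        } catch (ClassCastException e) {
            return "ClassCastException";
        }
    }

    public static void main(String[] args) {
        System.out.println(tryToPeek(new Finances()));
    }
}
```

The only object that passes through tryToPeek unharmed is one that really is a char[]; there is no way to treat the memory of a Finances instance as something else.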

In Visual Basic, it’s also possible to compromise the system by peeking, poking, and, under DOS, installing interrupt handlers. Even some recent languages that have some commonalities with Java lack important security features. For example, the Apple Newton uses an object-oriented language called NewtonScript that is compiled into an interpreted byte-code format. However, NewtonScript has no concept of public and private members, so a Newton application has free rein to access any information it finds. General Magic’s Telescript language is another example of a device-independent language that does not fully address security concerns. The list goes on ...

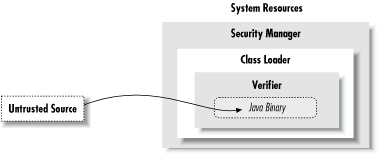

If a Java application is to dynamically download code from an untrusted source on the Internet and run it alongside applications that might contain confidential information, protection has to extend very deep. The Java security model wraps three layers of protection around imported classes, as shown in Figure 1.3.

At the outside, application-level security decisions are made by a security manager. A security manager controls access to system resources like the filesystem, network ports, and the windowing environment. A security manager relies on the ability of a class loader to protect basic system classes. A class loader handles loading classes from the network. At the inner level, all system security ultimately rests on the Java verifier, which guarantees the integrity of incoming classes.

The Java byte-code verifier is a fixed part of the Java runtime system. Class loaders and the security managers (or security policies to be more precise), however, are components that may be implemented differently by different applications that load byte-code, such as applet viewers and web browsers. All three of these pieces need to be functioning properly to ensure security in the Java environment.[3]

Java’s first line of defense is the byte-code verifier. The verifier reads byte-code modules before they are run and makes sure they are well-behaved and obey the basic rules of the Java language. A trusted Java compiler won’t produce code that does otherwise. However, it’s possible for a mischievous person to deliberately assemble bad code. It’s the verifier’s job to detect this.

Once code has been verified, it’s considered safe from certain inadvertent or malicious errors. For example, verified code can’t forge references or violate access permissions on objects. It can’t perform illegal casts or use objects in unintended ways. It can’t even cause certain types of internal errors, such as overflowing or underflowing the operand stack. These fundamental guarantees underlie all of Java’s security.

You might be wondering, isn’t this kind of safety implicit in lots of interpreted languages? Well, while it’s true that you shouldn’t be able to corrupt the interpreter with bogus BASIC code, remember that the protection in most interpreted languages happens at a higher level. Those languages are likely to have heavyweight interpreters that do a great deal of runtime work, so they are necessarily slower and more cumbersome.

By comparison, Java byte-code is a relatively light, low-level instruction set. The ability to statically verify the Java byte-code before execution lets the Java interpreter run at full speed with full safety, without expensive runtime checks. Of course, you are always going to pay the price of running an interpreter, but that’s not a serious problem with the speed of modern CPUs. Java byte-code can also be compiled on the fly to native machine code, which has even less runtime overhead.

The verifier is a type of theorem prover. It steps through the Java byte-code and applies simple, inductive rules to determine certain aspects of how the byte-code will behave. This kind of analysis is possible because compiled Java byte-code contains a lot more type information than the object code of other languages of this kind. The byte-code also has to obey a few extra rules that simplify its behavior. First, most byte-code instructions operate only on individual data types. For example, with stack operations, there are separate instructions for object references and for each of the numeric types in Java. Similarly, there is a different instruction for moving each type of value into and out of a local variable.

Second, the type of object resulting from any operation is always known in advance. There are no byte-code operations that consume values and produce more than one possible type of value as output. As a result, it’s always possible to look at the next instruction and its operands, and know the type of value that will result.

Because an operation always produces a known type, by looking at the starting state, it’s possible to determine the types of all items on the stack and in local variables at any point in the future. The collection of all this type information at any given time is called the type state of the stack; this is what Java tries to analyze before it runs an application. Java doesn’t know anything about the actual values of stack and variable items at this time, just what kind of items they are. However, this is enough information to enforce the security rules and to ensure that objects are not manipulated illegally.
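The idea of tracking types without values can be sketched in a few lines. The following is a toy model, not the real verifier: the class TypeStateSketch, its three value kinds, and its six-instruction set are assumptions made for illustration, loosely named after typed JVM opcodes. It walks a straight-line instruction sequence and records only the kind of each stack item.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.List;

public class TypeStateSketch {
    // Kinds of values the sketch tracks; real byte-code distinguishes more.
    enum Kind { INT, FLOAT, REF }

    // A toy instruction set; each instruction works on one fixed kind.
    enum Op { ICONST, FCONST, ACONST_NULL, IADD, FADD, I2F }

    // Returns the type state of the operand stack (top first) after the
    // given straight-line code, or throws if an operand has the wrong kind.
    static List<Kind> typeStateAfter(List<Op> code) {
        Deque<Kind> stack = new ArrayDeque<>();
        for (Op op : code) {
            switch (op) {
                case ICONST:      stack.push(Kind.INT);   break;
                case FCONST:      stack.push(Kind.FLOAT); break;
                case ACONST_NULL: stack.push(Kind.REF);   break;
                case IADD:
                    expect(stack, Kind.INT);
                    expect(stack, Kind.INT);
                    stack.push(Kind.INT);
                    break;
                case FADD:
                    expect(stack, Kind.FLOAT);
                    expect(stack, Kind.FLOAT);
                    stack.push(Kind.FLOAT);
                    break;
                case I2F:
                    expect(stack, Kind.INT);
                    stack.push(Kind.FLOAT);
                    break;
            }
        }
        return List.copyOf(stack);
    }

    // Pops one item and checks its kind; an empty stack counts as a mismatch.
    static void expect(Deque<Kind> stack, Kind wanted) {
        Kind actual = stack.poll();
        if (actual != wanted) {
            throw new IllegalStateException("expected " + wanted + " but found " + actual);
        }
    }

    public static void main(String[] args) {
        // Two ints are added and the sum converted: one FLOAT is left on the stack.
        System.out.println(typeStateAfter(List.of(Op.ICONST, Op.ICONST, Op.IADD, Op.I2F)));
        try {
            // IADD applied to a reference is rejected before anything "runs".
            typeStateAfter(List.of(Op.ICONST, Op.ACONST_NULL, Op.IADD));
        } catch (IllegalStateException e) {
            System.out.println("rejected: " + e.getMessage());
        }
    }
}
```

Note that the sketch never looks at an actual number or object. Because every instruction has a fixed input and output kind, the kinds alone are enough to accept the first sequence and reject the second.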

To make it feasible to analyze the type state of the stack, Java places an additional restriction on how Java byte-code instructions are executed: all paths to the same point in the code have to arrive with exactly the same type state.[4] This restriction makes it possible for the verifier to trace each branch of the code just once and still know the type state at all points. Thus, the verifier can ensure that instruction types and stack value types always correspond, without actually following the execution of the code. For a more thorough explanation of all of this, see The Java Virtual Machine, by Jon Meyer and Troy Downing (O’Reilly & Associates).
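The same-type-state rule can be illustrated with another toy model. The class MergeRuleSketch and its methods are hypothetical, and the check follows the rule exactly as stated here rather than any particular verifier implementation. It compares the type state at the entry of a loop with the state that flows back from the end of the loop body.

```java
import java.util.ArrayList;
import java.util.List;

public class MergeRuleSketch {
    // Kinds of values the sketch tracks.
    enum Kind { INT, FLOAT, REF }

    // Two paths may join only if they arrive with the same depth
    // and the same kind in every stack slot.
    static boolean canMerge(List<Kind> pathA, List<Kind> pathB) {
        return pathA.equals(pathB);
    }

    // Models a loop body that pushes one int and never pops it.
    static List<Kind> afterPushingBody(List<Kind> entry) {
        List<Kind> exit = new ArrayList<>(entry);
        exit.add(Kind.INT);
        return exit;
    }

    // Models a loop body that pushes one int and pops it again.
    static List<Kind> afterBalancedBody(List<Kind> entry) {
        List<Kind> exit = new ArrayList<>(entry);
        exit.add(Kind.INT);
        exit.remove(exit.size() - 1);
        return exit;
    }

    public static void main(String[] args) {
        List<Kind> entry = List.of(Kind.REF);
        // The back edge of the loop must match the state at the loop entry.
        System.out.println("balanced body: " + canMerge(entry, afterBalancedBody(entry)));
        System.out.println("pushing body:  " + canMerge(entry, afterPushingBody(entry)));
    }
}
```

A body that leaves the stack as it found it can be traced once and accepted. A body that grows the stack on every pass arrives back at the top of the loop with a different type state each time, which is the case the rule forbids.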

Java adds a second layer of security with a class loader. A class loader is responsible for bringing the byte-code for one or more Java classes into the interpreter. Every application that loads classes from the network must use a class loader to handle this task.

After a class has been loaded and passed through the verifier, it remains associated with its class loader. As a result, classes are effectively partitioned into separate namespaces based on their origin. When a loaded class references another class name, the location of the new class is provided by the original class loader. This means that classes retrieved from a specific source can be restricted to interact only with other classes retrieved from that same location. For example, a Java-enabled web browser can use a class loader to build a separate space for all the classes loaded from a given uniform resource locator (URL).

The search for classes always begins with the built-in Java system classes. These classes are loaded from the locations specified by the Java interpreter’s class path (see Chapter 3). Classes in the class path are loaded by the system only once and can’t be replaced. This means that it’s impossible for an applet to replace fundamental system classes with its own versions that change their functionality.
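You can observe the special status of the system classes from ordinary code. In the sketch below, the class name LoaderSketch and its helper are hypothetical. It relies on the documented behavior that Class.getClassLoader() may return null for classes loaded by the built-in bootstrap loader, which is what common JVMs do for core classes such as String.

```java
public class LoaderSketch {
    // Reports whether a class came from the JVM's built-in bootstrap loader.
    // Assumption: the JVM represents that loader as null, as common JVMs do.
    static boolean loadedByBootstrap(Class<?> type) {
        return type.getClassLoader() == null;
    }

    public static void main(String[] args) {
        // A core system class.
        System.out.println("String:       " + loadedByBootstrap(String.class));
        // This application class, loaded by an ordinary class loader.
        System.out.println("LoaderSketch: " + loadedByBootstrap(LoaderSketch.class));
    }
}
```

An application class always has some loader object associated with it, while the core classes do not, so code loaded later cannot slip its own String into the place of the real one.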

Finally, a security manager is responsible for making application-level security decisions. A security manager is an object that can be installed by an application to restrict access to system resources. The security manager is consulted every time the application tries to access items like the filesystem, network ports, external processes, and the windowing environment, so the security manager can allow or deny the request.

A security manager is most useful for applications that run untrusted code as part of their normal operation. Since a Java-enabled web browser can run applets that may be retrieved from untrusted sources on the Net, such a browser needs to install a security manager as one of its first actions. This security manager then restricts the kinds of access allowed after that point. This lets the application impose an effective level of trust before running an arbitrary piece of code. And once a security manager is installed, it can’t be replaced.

In Java 2, the security manager works in conjunction with an access controller that lets you implement security policies by editing a file. Access policies can be as simple or complex as a particular application warrants. Sometimes it’s sufficient simply to deny access to all resources or to general categories of services such as the filesystem or network. But it’s also possible to make sophisticated decisions based on high-level information. For example, a Java-enabled web browser could use an access policy that lets users specify how much an applet is to be trusted or that allows or denies access to specific resources on a case-by-case basis. Of course, this assumes that the browser can determine which applets it ought to trust. We’ll see how this problem is solved shortly.
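Access policies are built from permission objects, and the question the access controller asks is whether a granted permission implies the requested one. The sketch below shows that comparison in isolation using java.io.FilePermission; the class name PolicySketch, the helper allows, and the Unix-style paths are assumptions for illustration, and no security manager is installed.

```java
import java.io.FilePermission;

public class PolicySketch {
    // Asks whether a granted permission covers a requested file access.
    static boolean allows(FilePermission granted, String path, String actions) {
        return granted.implies(new FilePermission(path, actions));
    }

    public static void main(String[] args) {
        // Hypothetical grant: read access to the files directly inside /tmp.
        FilePermission granted = new FilePermission("/tmp/*", "read");

        System.out.println(allows(granted, "/tmp/notes.txt", "read"));  // covered by the grant
        System.out.println(allows(granted, "/tmp/notes.txt", "write")); // wrong action
        System.out.println(allows(granted, "/etc/passwd", "read"));     // wrong location
    }
}
```

A policy can therefore be as coarse as a single grant for a whole directory or as fine as one permission per file and action.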

The integrity of a security manager is based on the protection afforded by the lower levels of the Java security model. Without the guarantees provided by the verifier and the class loader, high-level assertions about the safety of system resources are meaningless. The safety provided by the Java byte-code verifier means that the interpreter can’t be corrupted or subverted, and that Java code has to use components as they are intended. This, in turn, means that a class loader can guarantee that an application is using the core Java system classes and that these classes are the only means of accessing basic system resources. With these restrictions in place, it’s possible to centralize control over those resources with a security manager.

[3] You may have seen reports about various security flaws in Java. While these weaknesses are real, it’s important to realize that they have been found in the implementations of various components, namely Sun’s byte-code verifier and Netscape’s class loader and security manager, not in the basic security model itself. One of the reasons Sun has released the source code for Java is to encourage people to search for weaknesses, so they can be removed.

[4] The implications of this rule are of interest mainly to compiler writers. The rule means that Java byte-code can’t perform certain types of iterative actions within a single frame of execution. A common example would be looping and pushing values onto the stack. This is not allowed because the path of execution would return to the top of the loop with a potentially different type state on each pass, and there is no way that a static analysis of the code can determine whether it obeys the security rules.
