Squeryl is an object-relational mapping library. It converts Scala classes into tables, rows, and columns in a relational database, and provides a way to write SQL-like queries that are type-checked by the Scala compiler. The Lift Squeryl Record module integrates Squeryl with Record, meaning your Lift application can use Squeryl to store and fetch data while making use of the features of Record, such as data validation.

The code in this chapter can be found at https://github.com/LiftCookbook/cookbook_squeryl.

Include the Squeryl-Record dependency in your build, and in Boot.scala, provide a database connection function to SquerylRecord.initWithSquerylSession.

For example, to configure Squeryl with PostgreSQL, modify build.sbt to add two dependencies, one for Squeryl-Record and one for the database driver:

libraryDependencies++={valliftVersion="2.5"Seq("net.liftweb"%%"lift-webkit"%liftVersion,"net.liftweb"%%"lift-squeryl-record"%liftVersion,"postgresql"%"postgresql"%"9.1-901.jdbc4"...)}

In Boot.scala, we define a connection and register it with Squeryl:

Class.forName("org.postgresql.Driver")defconnection=DriverManager.getConnection("jdbc:postgresql://localhost/mydb","username","password")SquerylRecord.initWithSquerylSession(Session.create(connection,newPostgreSqlAdapter))

All Squeryl queries need to run in the context of a transaction. One way to provide a transaction is to configure a transaction around all HTTP requests. This is also configured in Boot.scala:

importnet.liftweb.squerylrecord.RecordTypeMode._importnet.liftweb.http.Simportnet.liftweb.util.LoanWrapperS.addAround(newLoanWrapper{overridedefapply[T](f:=>T):T={valresult=inTransaction{try{Right(f)}catch{casee:LiftFlowOfControlException=>Left(e)}}resultmatch{caseRight(r)=>rcaseLeft(exception)=>throwexception}}})

This arranges for requests to be handled in the inTransaction scope. As Lift uses an exception for redirects, we catch this exception and throw it after the transaction completes, avoiding rollbacks after an S.redirectTo or similar.

You can use any JVM persistence mechanism with Lift. What Lift Record provides is a light interface around persistence with bindings to Lift’s CSS transforms, screens, and wizards. Squeryl-Record is a concrete implementation to connect Record with Squeryl. This means you can use standard Record objects, which are effectively your schema, with Squeryl and write queries that are validated at compile time.

Plugging into Squeryl means initialising Squeryl’s session management, which allows us to wrap queries in Squeryl’s transaction and inTransaction functions. The difference between these two calls is that inTransaction will start a new transaction if one doesn’t exist, whereas transaction always creates a new transaction.

By ensuring a transaction is available for all HTTP requests via addAround, we can write queries in Lift, and for the most part, do not have to establish transactions ourselves unless we want to. For example:

importnet.liftweb.squerylrecord.RecordTypeMode._valr=myTable.insert(MyRecord.createRecord.myField(aValue))

In this recipe, the PostgreSqlAdapter is used. Squeryl also supports: OracleAdapter, MySQLInnoDBAdapter and MySQLAdapter, MSSQLServer, H2Adapter, DB2Adapter, and DerbyAdapter.

The Squeryl Getting Started Guide links to more information about session management and configuration.

See Using a JNDI DataSource for configuring connections via Java Naming and Directory Interface (JNDI).

You want to use a Java Naming and Directory Interface (JNDI) data source for your Record-Squeryl Lift application.

In Boot.scala, call initWithSquerylSession with a DataSource looked up from the JNDI context:

importjavax.sql.DataSourcevalds=newInitialContext().lookup("java:comp/env/jdbc/mydb").asInstanceOf[DataSource]SquerylRecord.initWithSquerylSession(Session.create(ds.getConnection(),newMySQLAdapter))

Replace mydb with the name given to your database in your JNDI

configuration, and replace MySQLAdapter with the appropriate adapter

for the database you are using.

JNDI is a service provided by the web container (e.g., Jetty, Tomcat) that allows you to configure a database connection in the container and then refer to the connection by name in your application. One advantage of this is that you can avoid including database credentials to your Lift source base.

The configuration of JNDI is different for each container, and may vary with versions of the container you use. The “See Also” section next includes links to the documentation pages for popular containers.

Some environments may also require that you to reference the JNDI resource in your src/main/webapp/WEB-INF/web.xml file:

<resource-ref><res-ref-name>jdbc/mydb</res-ref-name><res-type>javax.sql.DataSource</res-type><res-auth>Container</res-auth></resource-ref>

Resources for JNDI configuration include:

- An example on the Lift wiki for Apache and Jetty configuration.

- The documentation for Jetty gives examples for various databases.

- For Tomcat, see the JNDI configuration guide.

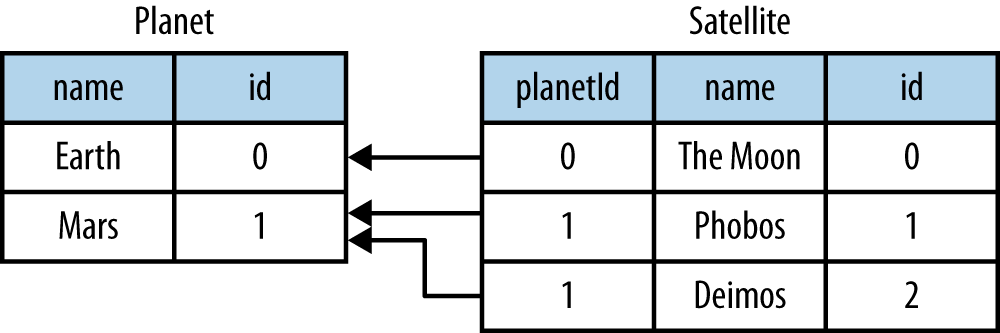

You want to model a one-to-many relationship, such as a satellite belonging to a single planet, but a planet possibly having many satellites.

Use Squeryl’s oneToManyRelation in your schema, and on your Lift model, include a reference from the satellite to the planet.

The objective is to model the relationship as shown in Figure 7-1.

In code:

packagecode.modelimportorg.squeryl.Schemaimportnet.liftweb.record.{MetaRecord,Record}importnet.liftweb.squerylrecord.KeyedRecordimportnet.liftweb.record.field.{StringField,LongField}importnet.liftweb.squerylrecord.RecordTypeMode._objectMySchemaextendsSchema{valplanets=table[Planet]valsatellites=table[Satellite]valplanetToSatellites=oneToManyRelation(planets,satellites).via((p,s)=>p.id===s.planetId)on(satellites){s=>declare(s.planetIddefineAsindexed("planet_idx"))}classPlanetextendsRecord[Planet]withKeyedRecord[Long]{overridedefmeta=PlanetoverridevalidField=newLongField(this)valname=newStringField(this,256)lazyvalsatellites=MySchema.planetToSatellites.left(this)}objectPlanetextendsPlanetwithMetaRecord[Planet]classSatelliteextendsRecord[Satellite]withKeyedRecord[Long]{overridedefmeta=SatelliteoverridevalidField=newLongField(this)valname=newStringField(this,256)valplanetId=newLongField(this)lazyvalplanet=MySchema.planetToSatellites.right(this)}objectSatelliteextendsSatellitewithMetaRecord[Satellite]}

This schema defines the two tables based on the Record classes, as table[Planet] and table[Satellite]. It establishes a oneToManyRelation based on (via) the planetId in the satellite table.

This gives Squeryl the information it needs to produce a foreign key to constrain the planetId to reference an existing record in the planet table. This can be seen in the schema generated by Squeryl. We can print the schema in Boot.scala with:

inTransaction{code.model.MySchema.printDdl}

which will print:

-- table declarations :createtablePlanet(namevarchar(256)notnull,idFieldbigintnotnullprimarykeyauto_increment);createtableSatellite(namevarchar(256)notnull,idFieldbigintnotnullprimarykeyauto_increment,planetIdbigintnotnull);-- indexes on Satellitecreateindexplanet_idxonSatellite(planetId);-- foreign key constraints :altertableSatelliteaddconstraintSatelliteFK1foreignkey(planetId)referencesPlanet(idField);

An index called planet_idx is declared on the planetId field to improve query performance during joins.

Finally, we make use of the planetToSatellites.left and right methods to establish lookup queries as Planet.satellites and Satellite.planet. We can demonstrate their use by inserting example data and running the queries:

inTransaction{code.model.MySchema.createimportcode.model.MySchema._valearth=planets.insert(Planet.createRecord.name("Earth"))valmars=planets.insert(Planet.createRecord.name("Mars"))// .save as a short-hand for satellite.insert when we don't need// to immediately reference the record (save returns Unit).Satellite.createRecord.name("The Moon").planetId(earth.idField.is).saveSatellite.createRecord.name("Phobos").planetId(mars.idField.is).savevaldeimos=satellites.insert(Satellite.createRecord.name("Deimos").planetId(mars.idField.is))println("Deimos orbits: "+deimos.planet.single.name.is)println("Moons of Mars are: "+mars.satellites.map(_.name.is))}

Running this code produces the output:

Deimos orbits: Mars Moons of Mars are: List(Phobos, Deimos)

In this example code, we’re calling deimos.planet.single, which returns one result or will throw an exception if the associated planet was not found. headOption is the safer way if there’s a chance the record will not be found, as it will evaluate to None or Some[Planet].

The planetToSatellites.left method is not a simple collection of Satellite objects. It’s a Squeryl Query[Satellite], meaning you can treat it like any other kind of Queryable[Satellite]. For example, we could ask for those satellites of a planet that are alphabetically after “E,” which for Mars would match “Phobos”:

mars.satellites.where(s=>s.namegt"E").map(_.name)

The left method result is also a OneToMany[Satellite] that adds the following methods:

-

assign - Adds a new relationship, but does not update the database

-

associate -

Similar to

assign, but updates the database -

deleteAll - Removes the relationships

The assign call gives the satellite the relationship to the planet:

valexpress=Satellite.createRecord.name("Mars Express")mars.satellites.assign(express)express.save

The next time we query mars.satellites, we will find the Mars Express orbiter.

A call to associate would go one step further for us, making Squeryl insert or update the satellite automatically:

valexpress=Satellite.createRecord.name("Mars Express")mars.satellites.associate(express)

The third method, deleteAll, does what it sounds like it should do. It would execute the following SQL and return the number of rows removed:

deletefromSatellite

The right side of the one-to-many also has additional methods added by ManyToOne[Planet] of assign and delete. Be aware that to delete the “one” side of a many-to-one, anything assigned to the record will need to have been deleted already to avoid a database constraint error that would arise from, for example, leaving satellites referencing nonexistent planets.

As left and right are queries, it means each time you use them you’ll be sending a new query to the database. Squeryl refers to these forms as stateless relations.

The stateful versions of left and right look like this:

classPlanetextendsRecord[Planet]withKeyedRecord[Long]{...lazyvalsatellites:StatefulOneToMany[Satellite]=MySchema.planetToSatellites.leftStateful(this)}classSatelliteextendsRecord[Satellite]withKeyedRecord[Long]{...lazyvalplanet:StatefulManyToOne[Planet]=MySchema.planetToSatellites.rightStateful(this)}

This change means the results of mars.satellites will be cached. Subsequent calls on that instance of a Planet won’t trigger a round trip to the database. You can still associate new records or deleteAll records, which will work as you expect, but if a relationship is added or changed elsewhere you’ll need to call refresh on the relation to see the change.

Which version should you use? That will depend on your application, but you can use both in the same record if you need to.

The Squeryl Relations page provides additional details.

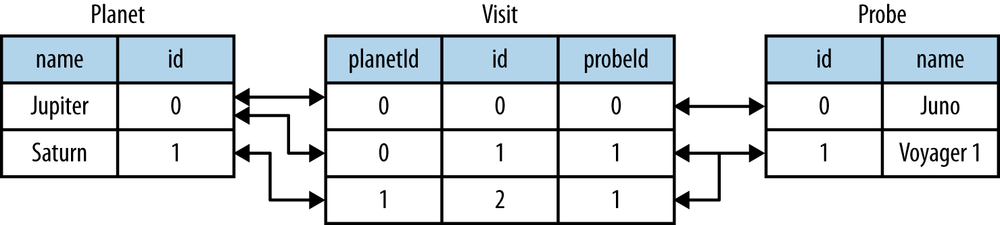

You want to model a many-to-many relationship, such as a planet being visited by many space probes, but a space probe also visiting many planets.

Use Squeryl’s manyToManyRelation in your schema, and implement a record to hold the join between the two sides of the relationship. Figure 7-2 shows the structure we will create in this recipe, where Visit is the record that will connect each many to the other many.

Figure 7-2. Many-to-many: Jupiter was visited by Juno and Voyager 1; Saturn was only visited by Voyager 1

The schema is defined in terms of two tables, one for planets and one for space probes, plus a relationship between the two based on a third class, called Visit:

packagecode.modelimportorg.squeryl.Schemaimportnet.liftweb.record.{MetaRecord,Record}importnet.liftweb.squerylrecord.KeyedRecordimportnet.liftweb.record.field.{IntField,StringField,LongField}importnet.liftweb.squerylrecord.RecordTypeMode._importorg.squeryl.dsl.ManyToManyobjectMySchemaextendsSchema{valplanets=table[Planet]valprobes=table[Probe]valprobeVisits=manyToManyRelation(probes,planets).via[Visit]{(probe,planet,visit)=>(visit.probeId===probe.id,visit.planetId===planet.id)}classPlanetextendsRecord[Planet]withKeyedRecord[Long]{overridedefmeta=PlanetoverridevalidField=newLongField(this)valname=newStringField(this,256)lazyvalprobes:ManyToMany[Probe,Visit]=MySchema.probeVisits.right(this)}objectPlanetextendsPlanetwithMetaRecord[Planet]classProbeextendsRecord[Probe]withKeyedRecord[Long]{overridedefmeta=ProbeoverridevalidField=newLongField(this)valname=newStringField(this,256)lazyvalplanets:ManyToMany[Planet,Visit]=MySchema.probeVisits.left(this)}objectProbeextendsProbewithMetaRecord[Probe]classVisitextendsRecord[Visit]withKeyedRecord[Long]{overridedefmeta=VisitoverridevalidField=newLongField(this)valplanetId=newLongField(this)valprobeId=newLongField(this)}objectVisitextendsVisitwithMetaRecord[Visit]}

In Boot.scala, we can print out this schema:

inTransaction{code.model.MySchema.printDdl}

which will produce something like this, depending on the database in use:

-- table declarations :createtablePlanet(namevarchar(256)notnull,idFieldbigintnotnullprimarykeyauto_increment);createtableProbe(namevarchar(256)notnull,idFieldbigintnotnullprimarykeyauto_increment);createtableVisit(idFieldbigintnotnullprimarykeyauto_increment,planetIdbigintnotnull,probeIdbigintnotnull);-- foreign key constraints :altertableVisitaddconstraintVisitFK1foreignkey(probeId)referencesProbe(idField);altertableVisitaddconstraintVisitFK2foreignkey(planetId)referencesPlanet(idField);

Notice that the visit table will hold a row for each relationship between a planetId and probeId.

Planet.probes and Probe.planets provide an associate method to establish a new relationship. For example, we can establish a set of planets and probes:

valjupiter=planets.insert(Planet.createRecord.name("Jupiter"))valsaturn=planets.insert(Planet.createRecord.name("Saturn"))valjuno=probes.insert(Probe.createRecord.name("Juno"))valvoyager1=probes.insert(Probe.createRecord.name("Voyager 1"))

and then connect them:

juno.planets.associate(jupiter)voyager1.planets.associate(jupiter)voyager1.planets.associate(saturn)

We can also use Probe.planets and Planet.probes as a query to look up the associations. To access all the probes that had visited each planet in a snippet, we can write this:

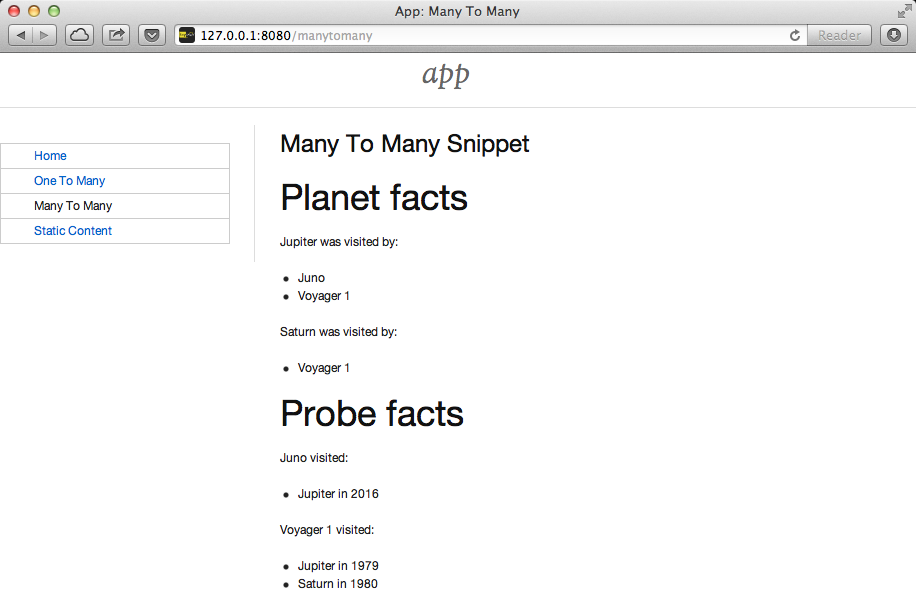

packagecode.snippetclassManyToManySnippet{defrender="#planet-visits"#>planets.map{planet=>".planet-name *"#>planet.name.is&".probe-name *"#>planet.probes.map(_.name.is)}}

The snippet could be combined with a template like this:

<divdata-lift="ManyToManySnippet"><h1>Planet facts</h1><divid="planet-visits"><p><spanclass="planet-name">Name will be here</span>was visited by:</p><ul><liclass="probe-name">Probe name goes here</li></ul></div></div>

The top half of Figure 7-3 gives an example of the output from this snippet and template.

The Squeryl DSL manyToManyRelation(probes, planets).via[Visit] is the core element here connecting our Planet, Probe, and Visit records together. It allows us to access the “left” and “right” sides of the relationship in our model as Probe.planets and Planet.probes.

As with One-to-Many Relationship for one-to-many relationships, the left and right sides are queries. When you ask for Planet.probes, the database is queried appropriately with a join on the Visit records:

SelectProbe.name,Probe.idFieldFromVisit,ProbeWhere(Visit.probeId=Probe.idField)and(Visit.planetId=?)

Also as described in One-to-Many Relationship, there are stateful variants of left and right to cache the query results.

In the data we inserted into the database, we did not have to mention Visit. The Squeryl manyToManyRelation has enough information to know how to insert a visit as the relationship. Incidentally, it doesn’t matter which way round we make the calls in a many-to-many relationship. The following two expressions are equivalent and result in the same database structure:

juno.planets.associate(jupiter)// ..or..jupiter.probes.associate(juno)

You might even wonder why we had to bother with defining a Visit record at all, but there are benefits in doing so. For example, you can attach additional information onto the join table, such as the year the probe visited a planet.

To do this, we modify the record to include the additional field:

classVisitextendsRecord[Visit]withKeyedRecord[Long]{overridedefmeta=VisitoverridevalidField=newLongField(this)valplanetId=newLongField(this)valprobeId=newLongField(this)valyear=newIntField(this)}

Visit is still a container for the planetId and probeId references, but we also have a plain integer holder for the year of the visit.

To record a visit year, we need the assign method provided by ManyToMany[T]. This will establish the relationship but not change the database. Instead, it returns the instance Visit, which we can change and then store in the database:

probeVisits.insert(voyager1.planets.assign(saturn).year(1980))

The return type of assign in this case is Visit, and Visit has a year field. Inserting the Visit record via probeVisits will create a row in the table for visits.

To access this extra information on the Visit object, you can make use of a couple of methods provided by ManyToMany[T]:

-

associations -

A query returning the

Visitobjects related to thePlanet.probesorProbe.planets -

associationMap -

A query returning pairs of

(Planet,Visit)or(Probe,Visit), depending on which side of the join you call it on (probesorplanets)

For example, in a snippet, we could list all the space probes and, for each probe, show the planet it visited and what year it was there. The snippet would look like this:

"#probe-visits"#>probes.map{probe=>".probe-name *"#>probe.name.is&".visit"#>probe.planets.associationMap.collect{case(planet,visit)=>".planet-name *"#>planet.name.is&".year"#>visit.year.is}}

We are using collect here rather than map just to match the (Planet,Visit) tuple and give the values meaningful names. You could also use (for { (planet, visit) <- probe.planets.associationMap } yield ...) if you prefer.

The lower half of Figure 7-3 demonstrates how this snippet would render when combined with the following template:

<h1>Probe facts</h1><divid="probe-visits"><p><spanclass="probe-name">Space craft name</span>visited:</p><ul><liclass="visit"><spanclass="planet-name">Name here</span>in<spanclass="year">n</span></li></ul></div>

To remove an association, use the dissociate or dissociateAll methods on the left or right queries. To remove a single association:

valnumRowsChanged=juno.planets.dissociate(jupiter)

This would be executed in SQL as:

deletefromVisitwhereprobeId=?andplanetId=?

To remove all the associations:

valnumRowsChanged=jupiter.probes.dissociateAll

The SQL for this is:

deletefromVisitwhereVisit.planetId=?

What you cannot do is delete a Planet or Probe if that record still has associations in the Visit relationship. What you’d get is a referential integrity exception thrown. Instead, you’ll need to dissociateAll first:

jupiter.probes.dissociateAllplanets.delete(jupiter.id)

However, if you do want cascading deletes, you can achieve this by overriding the default behaviour in your schema:

// To automatically remove probes when we remove planets:probeVisits.rightForeignKeyDeclaration.constrainReference(onDeletecascade)// To automatically remove planets when we remove probes:probeVisits.leftForeignKeyDeclaration.constrainReference(onDeletecascade)

This is part of the schema, in that it will change the table constraints, with printDdl producing this (depending on the database you use):

altertableVisitaddconstraintVisitFK1foreignkey(probeId)referencesProbe(idField)ondeletecascade;altertableVisitaddconstraintVisitFK2foreignkey(planetId)referencesPlanet(idField)ondeletecascade;

One-to-Many Relationship, on one-to-many relationships, discusses leftStateful and rightStateful relations, which are also applicable for many-to-many relationships.

Foreign keys and cascading deletes are described on the Squeryl Relations page.

You want to add validation to a field in your model, so that users are informed of missing fields or fields that aren’t acceptable to your application.

Override the validations method on your field and provide one or more validation functions.

As an example, imagine we have a database of planets and we want to ensure any new planets entered by users have names of at least five characters. We add this as a validation on our record:

classPlanetextendsRecord[Planet]withKeyedRecord[Long]{overridedefmeta=PlanetoverridevalidField=newLongField(this)valname=newStringField(this,256){overridedefvalidations=valMinLen(5,"Name too short")_::super.validations}}

To check the validation, in our snippet we call validate on the record, which will return all the errors for the record:

packagecodepackagesnippetimportnet.liftweb.http.{S,SHtml}importnet.liftweb.util.Helpers._importmodel.MySchema._classValidateSnippet{defrender={valnewPlanet=Planet.createRecorddefvalidateAndSave():Unit=newPlanet.validatematch{caseNil=>planets.insert(newPlanet)S.notice("Planet '%s' saved"formatnewPlanet.name.is)caseerrors=>S.error(errors)}"#planetName"#>newPlanet.name.toForm&"type=submit"#>SHtml.onSubmitUnit(validateAndSave)}}

When the snippet runs, we render the Planet.name field and wire up a submit button to call the validateAndSave method.

If the newPlanet.validate call indicates there are no errors (Nil), we can save the record and inform the user via a notice. If there are errors, we render all of them with S.error.

The corresponding template could be:

<html><head><title>Planet Name Validation</title></head><bodydata-lift-content-id="main"><divid="main"data-lift="surround?with=default;at=content"><h1>Add a planet</h1><divdata-lift="Msgs?showAll=false"><lift:notice_class>noticeBox</lift:notice_class></div><p>Planet names need to be at least 5 characters long.</p><formclass="ValidateSnippet?form"><div><labelfor="planetName">Planet name:</label><inputid="planetName"type="text"></input><spandata-lift="Msg?id=name_id&errorClass=error">Msg to appear here</span></div><inputtype="submit"></input></form></div></body></html>

In this template, the error message is shown next to the input field, styled with a CSS class of errorClass. The success notice

is shown near the top of the page, just below the <h1> heading, using a style called noticeBox.

-

valMinLen - Validates that a string is at least a given length, as shown previously

-

valMaxLen - Validates that a string is not above a given length

-

valRegex - Validates that a string matches the given pattern

An example of regular expression validation on a field would be:

importjava.util.regex.Patternvalurl=newStringField(this,1024){overridedefvalidations=valRegex(Pattern.compile("^https?://.*"),"URLs should start http:// or https://")_::super.validations}

The list of errors from validate are of type List[FieldError]. The S.error method accepts this list and registers each validation error message so it can be shown on the page. It does this by associating the message with an ID for the field, allowing you to pick out just the errors for an individual field, as we do in this recipe. The ID is stored on the field, and in the case of Planet.name, it is available as Planet.name.uniqueFieldId. It’s a Box[String] with a value of Full("name_id"). It is this name_id value that we used in the lift:Msg?id=name_id&errorClass=error markup to pick out just the error for this field.

You don’t have to use S.error to display validation messages. You can roll your own display code, making use of the FieldError directly. As you can see from the source for FieldError, the error is available as a msg property:

caseclassFieldError(field:FieldIdentifier,msg:NodeSeq){overridedeftoString=field.uniqueFieldId+" : "+msg}

BaseField.scala in the Lift source code contains the definition of the built-in StringValidators.

Chapter 3 describes form processing, notices, and errors.

Implement a function from the type of the field to

List[FieldError], and reference the function in the validations on the field.

Here’s an example: we have a database of planets, and when a user

enters a new planet, we want the name to be unique. The name of the planet

is a String, so we need to provide a function from String => List[FieldError].

With the validation function defined (valUnique, next), we include it in the list of validations on the

name field:

importnet.liftweb.util.FieldErrorclassPlanetextendsRecord[Planet]withKeyedRecord[Long]{overridedefmeta=PlanetoverridevalidField=newLongField(this)valname=newStringField(this,256){overridedefvalidations=valUnique("Planet already exists")_::super.validations}privatedefvalUnique(errorMsg:=>String)(name:String):List[FieldError]=Planet.unique_?(name)match{casetrue=>FieldError(this.name,errorMsg)::Nilcasefalse=>Nil}}objectPlanetextendsPlanetwithMetaRecord[Planet]{defunique_?(name:String)=from(planets){p=>where(lower(p.name)===lower(name))select(p)}.isEmpty}

The validation is triggered just like any other validation, as described in Adding Validation to a Field.

By convention, validation functions have two argument lists: the first for the error message, and the second to receive the value to validate. This allows you to easily reuse your validation function on other fields. For example, if you wanted to validate that satellites have a unique name, you could use exactly the same function but provide a different error message.

The FieldError you return needs to know the field it applies to as

well as the message to display. In the example, the field is name, but

we’ve used this.name to avoid confusion with the name parameter passed

into the valUnique function.

The example code has used text for the error message, but there is a variation of FieldError that

accepts NodeSeq. This allows you to produce safe markup as part of the error if you need to. For example:

FieldError(this.name,<p>Pleasesee<ahref="/policy">ournamepolicy</a></p>)

For internationalisation, you may prefer to pass in a key to the validation function, and

resolve it via S.?:

valname=newStringField(this,256){overridedefvalidations=valUnique("validation.planet")_::super.validations}// ...combined with...privatedefvalUnique(errorKey:=>String)(name:String):List[FieldError]=Planet.unique_?(name)match{casefalse=>FieldError(this.name,S?errorKey)::Nilcasetrue=>Nil}

Adding Validation to a Field discusses field validation and the built-in validations.

Text localisation is discussed on the Lift wiki.

You want to modify the value of a field before storing it (for example, to clean a value by removing leading and trailing whitespace).

Override setFilter and provide a list of functions to apply to the field.

To remove leading and trailing whitespace entered by the user, the field would use the trim filter:

valname=newStringField(this,256){overridedefsetFilter=trim_::super.setFilter}

The built-in filters are:

-

crop - Enforces the field’s min and max length by truncation

-

trim -

Applies

String.trimto the field value -

toUpperandtoLower - Change the case of the field value

-

removeRegExChars - Removes matching regular expression characters

-

notNull - Converts null values to an empty string

Filters are run before validation. This means if you have a minimum length validation and the trim filter, for example, users cannot pass the validation test by just including spaces on the end of the value they enter.

A filter for a String field would be of type String => String, and the setFilter function expects a List of these. Knowing this, it’s straightforward to write custom filters. For example, here’s is a filter that applies a simple form of title case on our name field:

deftitleCase(in:String)=in.split("\\s").map(_.toList).collect{casex::xs=>(Character.toUpperCase(x).toString::xs).mkString}.mkString(" ")

This function is splitting the input string on spaces, converting each word into a list of characters, converting the first character into uppercase, and then gluing the strings back together.

We install titleCase on a field like any other filter:

valname=newStringField(this,256){overridedefsetFilter=trim_::titleCase_::super.setFilter}

Now when a user enters “jaglan beta” as a planet name, it is stored in the database as “Jaglan Beta.”

The best place to understand the filters is the trait StringValidators in the source for BaseField.

If you really do need to apply title case to a value, the Apache Commons WordUtils class provides ready-made functions for this.

Use an in-memory database, and arrange for it to be set up before your test and destroyed after it.

There are three parts to this: including a database in your project and connecting to it in an in-memory mode; creating a reusable trait to set up the database; and then using the trait in your test.

The H2 database has an in-memory mode, meaning it won’t save data to disk. It needs to be included in build.sbt as a dependency. Whilst you are editing build.sbt, also disable SBT’s parallel test execution to prevent database tests from influencing each other:

libraryDependencies+="com.h2database"%"h2"%"1.3.170"parallelExecutioninTest:=false

Create a trait to initialise the database and create the schema:

packagecode.modelimportjava.sql.DriverManagerimportorg.squeryl.Sessionimportorg.squeryl.adapters.H2Adapterimportnet.liftweb.util.StringHelpersimportnet.liftweb.common._importnet.liftweb.http.{S,Req,LiftSession}importnet.liftweb.squerylrecord.SquerylRecordimportnet.liftweb.squerylrecord.RecordTypeMode._importorg.specs2.mutable.Aroundimportorg.specs2.execute.ResulttraitTestLiftSession{defsession=newLiftSession("",StringHelpers.randomString(20),Empty)definSession[T](a:=>T):T=S.init(Req.nil,session){a}}traitDBTestKitextendsLoggable{Class.forName("org.h2.Driver")Logger.setup=Full(net.liftweb.util.LoggingAutoConfigurer())Logger.setup.foreach{_.apply()}defconfigureH2()={SquerylRecord.initWithSquerylSession(Session.create(DriverManager.getConnection("jdbc:h2:mem:dbname;DB_CLOSE_DELAY=-1","sa",""),newH2Adapter))}defcreateDb(){inTransaction{try{MySchema.dropMySchema.create}catch{casee:Throwable=>logger.error("DB Schema error",e)throwe}}}}caseclassInMemoryDB()extendsAroundwithDBTestKitwithTestLiftSession{defaround[T<%Result](testToRun:=>T)={configureH2createDbinSession{inTransaction{testToRun}}}}

In summary, this trail provides an InMemoryDB context for Specs2. This context ensures that the database is configured, the schema created, and a transaction is supplied around your test.

Finally, mix the trait into your test and execute in the scope of the InMemoryDB context.

As an example, using the schema from One-to-Many Relationship, we can test that the planet Mars has two moons:

packagecode.modelimportorg.specs2.mutable._importnet.liftweb.squerylrecord.RecordTypeMode._importMySchema._classPlanetsSpecextendsSpecificationwithDBTestKit{sequential

"Planets">>{"know that Mars has two moons">>InMemoryDB(){valmars=planets.insert(Planet.createRecord.name("Mars"))Satellite.createRecord.name("Phobos").planetId(mars.idField.is).saveSatellite.createRecord.name("Deimos").planetId(mars.idField.is).savemars.satellites.sizemust_==2}}}

Running this with SBT’s test command would show a success:

> test [info] PlanetsSpec [info] [info] Planets [info] + know that Mars has two moons [info] [info] [info] Total for specification PlanetsSpec [info] Finished in 1 second, 274 ms [info] 1 example, 0 failure, 0 error [info] [info] Passed: : Total 1, Failed 0, Errors 0, Passed 1, Skipped 0 [success] Total time: 3 s, completed 03-Feb-2013 11:31:16

The DBTestKit trait has to do quite a lot of work for us. At the lowest level, it loads the H2 driver and configures Squeryl with an in-memory connection. The mem part of the JDBC connection string (jdbc:h2:mem:dbname;DB_CLOSE_DELAY=-1) means that H2 won’t try to persist the data to disk. The database just resides in memory, so there are no files in disk to maintain, and it runs quickly.

By default, when a connection is closed, the in-memory database is destroyed. In this recipe, we’ve disabled that by adding the DB_CLOSE_DELAY=-1, which will allow us to write unit tests that span connections if we want to.

The next step up from connection management is the creation of the database schema in memory. We do this in createDb by throwing away the schema and any data when we start a test, and create it afresh. If you have very common test datasets, this might be a good place to insert that data before your test runs.

These steps are brought together at the InMemoryDB class, which implements a Specs2 interface for code to run Around a test. We’ve also wrapped the test around a TestLiftSession. This provides an empty session, which is useful if you are accessing state-related code (such as the S object). It’s not necessary for running tests against Record and Squeryl, but it has been included here because you may want to do that at some point.

In our specification itself, we mix in the DBTestKit and reference the InMemoryDB context on the tests that access the database. You’ll note that we’ve used >> rather than Specs2’s should and in that you may have seen elsewhere. This is to avoid name conflicts between Specs2 and Squeryl that you might come across.

As we disabled parallel execution with SBT, we also disable parallel execution in Specs2 with sequential. We are doing this to prevent a situation where one test might be expecting data that another test is modifying at the same time.

If all the tests in a specification are going to use the database, you can use the Specs2 AroundContextExample[T] to avoid having to mention InMemoryDB on every test. To do that, mix in AroundContextExample[InMemoryDB] and define aroundContext:

packagecode.modelimportMySchema._importorg.specs2.mutable._importorg.specs2.specification.AroundContextExampleimportnet.liftweb.squerylrecord.RecordTypeMode._classAlternativePlanetsSpecextendsSpecificationwithAroundContextExample[InMemoryDB]{sequentialdefaroundContext=newInMemoryDB()"Solar System">>{"know that Mars has two moons">>{valmars=planets.insert(Planet.createRecord.name("Mars"))Satellite.createRecord.name("Phobos").planetId(mars.idField.is).saveSatellite.createRecord.name("Deimos").planetId(mars.idField.is).savemars.satellites.sizemust_==2}}}

All the tests in AlternativePlanetsSpec will now be run with an InMemoryDB around them.

We’ve used a database with an in-memory mode for the advantages of speed and no files to clean up. However, you could use any regular database: you’d need to change the driver and connection string.

See the H2 database website for more about H2’s in-memory database settings.

Unit Testing Record with MongoDB discusses unit testing with MongoDB, but the comments on SBT’s other testing commands and testing in an IDE would apply to this recipe, too.

Use UniqueIdField:

importnet.liftweb.record.field.UniqueIdFieldvalrandomId=newUniqueIdField(this,32){}

Note the {} in the example; this is required as UniqueIdField is an

abstract class.

The size value, 32, indicates how many random characters to create.

The UniqueIdField field is a kind of StringField and the default value for the field

comes from StringHelpers.randomString. The value is randomly generated, but not guaranteed to be unique in the database.

The database column backing the UniqueIdField in this recipe will be a varchar(32) not null or similar. The value stored will look like:

GOJFGQRLS5GVYGPH3L3HRNXTATG3RM5M

As the value is made up of just letters and numbers, it makes it easy to use in URLs as there are no characters to escape. For example, it could be used in a link to allow a user to validate her account when sent the link over email, which is one of the uses in ProtoUser.

If you need to change the value, the reset method on the field will generate a new random string for the field.

If you need an automatic value that is even more likely to be unique per-row, you can add a field that wraps a universally unique identifier (UUID):

importjava.util.UUIDvaluuid=newStringField(this,36){overridedefdefaultValue=UUID.randomUUID().toString}

This will automatically insert values of the form “6481a844-460a-a4e0-9191-c808e3051519” in records you create.

Java’s UUID support includes a link to RFC 4122, which defines UUIDs.

You want created and updated timestamps on your records and would like them automatically updated when a row is added or updated.

Define the following traits:

packagecode.modelimportjava.util.Calendarimportnet.liftweb.record.field.DateTimeFieldimportnet.liftweb.record.RecordtraitCreated[T<:Created[T]]extendsRecord[T]{self:T=>valcreated:DateTimeField[T]=newDateTimeField(this){overridedefdefaultValue=Calendar.getInstance}}traitUpdated[T<:Updated[T]]extendsRecord[T]{self:T=>valupdated=newDateTimeField(this){overridedefdefaultValue=Calendar.getInstance}defonUpdate=this.updated(Calendar.getInstance)}traitCreatedUpdated[T<:Updated[T]withCreated[T]]extendsUpdated[T]withCreated[T]{self:T=>}

Add the trait to the model. For example, we can modify a Planet record to include

the time the record was created and updated:

classPlanetprivate()extendsRecord[Planet]withKeyedRecord[Long]withCreatedUpdated[Planet]{overridedefmeta=Planet// field entries as normal...}

Finally, arrange for the updated field to be updated:

classMySchemaextendsSchema{...overridedefcallbacks=Seq(beforeUpdate[Planet]call{_.onUpdate})...

Although there is a built-in net.liftweb.record.LifecycleCallbacks

trait that allows you to trigger behaviour onUpdate, afterDelete, and so

on, it is only for use on individual fields, rather than records. As our

goal is to update the updated field when any part of the record

changes, we can’t use the LiftcycleCallbacks here.

Instead, the CreatedUpdated trait simplifies adding updated and

created fields to a record, but we do need to remember to add a hook

into the schema to ensure the updated value is changed when a record

is modified. This is why we set the callbacks on the Schema.

The schema for records with CreatedUpdated mixed in will include two additional columns:

updatedtimestampnotnull,createdtimestampnotnull

The timestamp is used for the H2 database. For other databases, the type may be different.

The values can be accessed like any other record field. Using the example data from One-to-Many Relationship, we could run the following:

valupdated:Calendar=mars.updated.idvalcreated:Calendar=mars.created.is

If you only need created time, or updated time, just mix in the Created[T] or Updated[T] trait instead of CreatedUpdated[T].

It should be noted that onUpdate is called only on full updates and

not on partial updates with Squeryl. A full update is when the object is

altered and then saved; a partial update is where you attempt to alter objects via a query.

If you’re interested in other automations for Record, the Squeryl schema callbacks support these triggered behaviours:

-

beforeInsertandafterInsert -

afterSelect -

beforeUpdateandafterUpdate -

beforeDeleteandafterDelete

Full and partial updates are described in Insert, Update, and Delete.

Add the following any time you have a Squeryl season, such as just before your query:

org.squeryl.Session.currentSession.setLogger(s=>println(s))

By providing a String => Unit function to setLogger, Squeryl will

execute that function with the SQL it runs. In this example, we are

simply printing the SQL to the console.

You’ll probably want to use the logging facilities in Lift to capture SQL. For example:

packagecode.snippetimportnet.liftweb.common.Loggableimportorg.squeryl.SessionclassMySnippetextendsLoggable{defrender={Session.currentSession.setLogger(s=>logger.info(s))// ...your snippet code here...}}

This will log queries according to the settings for the logging system, typically the Logback project configured in src/resources/props/default.logback.xml.

It can be inconvenient to have to enable logging in each snippet during development. To trigger logging for all snippets, you can modify the addAround call in Boot.scala (Configuring Squeryl and Record) to include a setLogger call while inTransaction:

S.addAround(newLoanWrapper{overridedefapply[T](f:=>T):T={valresult=inTransaction{Session.currentSession.setLogger(s=>logger.info(s))// ... rest of addAround as normal

You can learn about logging in Lift from the Logging wiki page.

Use Squeryl’s dbType in your schema:

objectMySchemaextendsSchema{on(mytable)(t=>declare(t.mycolumndefineAsdbType("MEDIUMTEXT")))}

This schema setting will give you the correct column type in MySQL:

createtablemytable(mycolumnMEDIUMTEXTnotnull);

On the record you can use StringField as usual.

This recipe points towards the flexibility available with Squeryl’s schema definition DSL. The column attribute in this example is just one of a variety of adjustments you can make to the default choices that Squeryl uses.

For example, you can use the syntax to chain column attributes for a single column, and also define multiple columns at the same time:

objectMySchemaextendsSchema{on(mytable)(t=>declare(t.mycolumndefineAs(dbType("MEDIUMTEXT"),indexed),t.iddefinedAs(unique,named("MY_ID"))))}

The schema definition page for Squeryl gives examples of attributes you can apply to tables and columns.

Ensure that:

-

LiftRules.early.append(_.setCharacterEncoding("UTF-8"))is included in Boot.scala. -

?useUnicode=true&characterEncoding=UTF-8is included in your JDBC connections URL. - Your MySQL database has been created using a UTF-8 character set.

There are a number of interactions here that can impact characters going into, and coming out of, a MySQL database. The basic problem is that bytes transferred across networks have no meaning unless you know the encoding.

The setCharacterEncoding("UTF-8") call in Boot.scala is being applied to every HTTPRequest that ultimately, in a servlet container, is applied to a ServletRequest. This is how parameters in a request are going to be interpreted by the servlet container when received.

The flip side of this is that responses from Lift are encoded as UTF-8. You’ll see this in a number of places. For example, templates-hidden/default includes:

<metahttp-equiv="content-type"content="text/html; charset=UTF-8"/>

Also, the LiftResponse classes set the encoding as UTF-8.

Another aspect is how character data from Lift is sent to the database over the network. This is controlled by the parameters to the JDBC driver. The default for MySQL is to detect the encoding, but it seems from experience that this is not a great option, so we force the UTF-8 encoding.

Finally, the MySQL database itself needs to store the data as UTF-8. The default character encoding is not UTF-8, so you’ll need to specify the encoding when you create the database:

CREATEDATABASEmyDbCHARACTERSETutf8

Get Lift Cookbook now with the O’Reilly learning platform.

O’Reilly members experience books, live events, courses curated by job role, and more from O’Reilly and nearly 200 top publishers.