It’s high time now to begin programming. This chapter introduces all the essential concepts about modules and kernel programming. In these few pages, we build and run a complete module. Developing such expertise is an essential foundation for any kind of modularized driver. To avoid throwing in too many concepts at once, this chapter talks only about modules, without referring to any specific device class.

All the kernel items (functions, variables, header files, and macros) that are introduced here are described in a reference section at the end of the chapter.

For the impatient reader, the following code is a complete “Hello, World” module (which does nothing in particular). This code will compile and run under Linux kernel versions 2.0 through 2.4.[4]

#define MODULE

#include <linux/module.h>

int init_module(void) { printk("<1>Hello, world\n"); return 0; }

void cleanup_module(void) { printk("<1>Goodbye cruel world\n"); }

The printk function is defined in the Linux kernel and behaves similarly to the standard C library function printf. The kernel needs its own printing function because it runs by itself, without the help of the C library. The module can call printk because, after insmod has loaded it, the module is linked to the kernel and can access the kernel’s public symbols (functions and variables, as detailed in the next section). The string <1> is the priority of the message. We’ve specified a high priority (low cardinal number) in this module because a message with the default priority might not show on the console, depending on the kernel version you are running, the version of the klogd daemon, and your configuration. You can ignore this issue for now; we’ll explain it in Section 4.1.1 in Chapter 4.
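To make the format of the priority prefix concrete, the following user-space sketch extracts the level from a message string. It is not kernel code: demo_parse_level and DEMO_DEFAULT_LEVEL are names invented for this illustration, and the default value is a placeholder rather than a statement about any particular kernel version.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical placeholder used when a message carries no "<n>" prefix. */
#define DEMO_DEFAULT_LEVEL 4

/*
 * Return the priority encoded at the start of msg ("<0>" through "<7>"),
 * or DEMO_DEFAULT_LEVEL if no prefix is present. If body is non-NULL,
 * *body is set to the text that follows the prefix.
 */
int demo_parse_level(const char *msg, const char **body)
{
    int level = DEMO_DEFAULT_LEVEL;
    const char *text = msg;

    assert(msg != NULL);
    if (msg[0] == '<' && msg[1] >= '0' && msg[1] <= '7' && msg[2] == '>') {
        level = msg[1] - '0';   /* lower number means higher priority */
        text = msg + 3;         /* skip the three prefix characters */
    }
    if (body != NULL)
        *body = text;
    return level;
}
```

Given the string used in hello.c, the function reports level 1 and leaves the body pointing at the text that starts with "Hello"; a string with no prefix falls back to the placeholder default.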

You can test the module by calling insmod and rmmod, as shown in the screen dump that follows. Note that only the superuser can load and unload a module.

The source file shown earlier can be loaded and unloaded as shown only if the running kernel has module version support disabled; however, most distributions preinstall versioned kernels (versioning is discussed in Section 11.3 in Chapter 11). Although older modutils allowed loading nonversioned modules to versioned kernels, this is no longer possible. To solve the problem with hello.c, the source in the misc-modules directory of the sample code includes a few more lines to be able to run both under versioned and nonversioned kernels. However, we strongly suggest you compile and run your own kernel (without version support) before you run the sample code.[5]

root# gcc -c hello.c
root# insmod ./hello.o
Hello, world
root# rmmod hello
Goodbye cruel world
root#

Depending on the mechanism your system uses to deliver the message lines, your output may be different. In particular, the previous screen dump was taken from a text console; if you are running insmod and rmmod from an xterm, you won’t see anything on your TTY. Instead, the messages may go to one of the system log files, such as /var/log/messages (the name of the actual file varies between Linux distributions). The mechanism used to deliver kernel messages is described in Section 4.1.2 in Chapter 4.

As you can see, writing a module is not as difficult as you might expect. The hard part is understanding your device and how to maximize performance. We’ll go deeper into modularization throughout this chapter and leave device-specific issues to later chapters.

Before we go further, it’s worth underlining the various differences between a kernel module and an application.

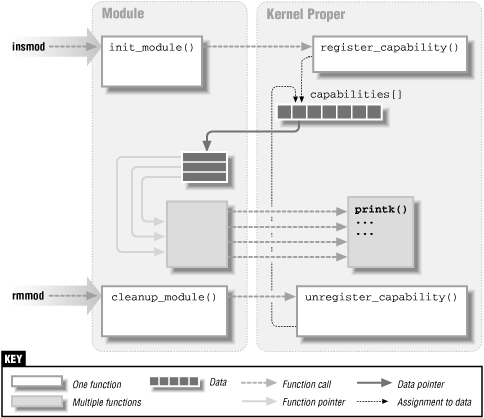

Whereas an application performs a single task from beginning to end, a module registers itself in order to serve future requests, and its “main” function terminates immediately. In other words, the task of the function init_module (the module’s entry point) is to prepare for later invocation of the module’s functions; it’s as though the module were saying, “Here I am, and this is what I can do.” The second entry point of a module, cleanup_module, gets invoked just before the module is unloaded. It should tell the kernel, “I’m not there anymore; don’t ask me to do anything else.” The ability to unload a module is one of the features of modularization that you’ll most appreciate, because it helps cut down development time; you can test successive versions of your new driver without going through the lengthy shutdown/reboot cycle each time.
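The register-then-return pattern can be imitated in ordinary C. The sketch below is a user-space analogy only: the "kernel" is a single pointer, and every name in it (demo_ops, demo_init, demo_cleanup, demo_kernel_request) is hypothetical rather than part of any kernel interface.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for the set of operations a module offers. */
struct demo_ops {
    int (*handle)(int request);
};

/* Hypothetical stand-in for the kernel's record of who is registered. */
static const struct demo_ops *demo_registered;

/* "Kernel" side: pass a request to whoever is registered, if anyone. */
int demo_kernel_request(int request)
{
    if (demo_registered == NULL)
        return -1;                      /* nobody is there to serve it */
    assert(demo_registered->handle != NULL);
    return demo_registered->handle(request);
}

/* Module side: the real work, reached only through the pointer above. */
static int demo_handle(int request)
{
    return request * 2;
}

static const struct demo_ops demo_module_ops = { .handle = demo_handle };

/* Plays the role of init_module: announce the module, then return. */
int demo_init(void)
{
    if (demo_registered != NULL)
        return -1;                      /* the slot is already taken */
    demo_registered = &demo_module_ops;
    return 0;
}

/* Plays the role of cleanup_module: withdraw the registration. */
void demo_cleanup(void)
{
    demo_registered = NULL;
}
```

Before demo_init runs, and again after demo_cleanup, a request finds nobody to serve it; in between, it reaches demo_handle through the registered pointer, even though demo_init itself returned long before.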

As a programmer, you know that an application can call functions it doesn’t define: the linking stage resolves external references using the appropriate library of functions. printf is one of those callable functions and is defined in libc. A module, on the other hand, is linked only to the kernel, and the only functions it can call are the ones exported by the kernel; there are no libraries to link to. The printk function used in hello.c earlier, for example, is the version of printf defined within the kernel and exported to modules. It behaves similarly to the original function, with a few minor differences, the main one being lack of floating-point support.[6]

Figure 2-1 shows how function calls and function pointers are used in a module to add new functionality to a running kernel.

Because no library is linked to modules, source files should never include the usual header files. Only functions that are actually part of the kernel itself may be used in kernel modules.

Anything related to the kernel is declared in headers found in include/linux and include/asm inside the kernel sources (usually found in /usr/src/linux). Older distributions (based on libc version 5 or earlier) used to carry symbolic links from /usr/include/linux and /usr/include/asm to the actual kernel sources, so your libc include tree could refer to the headers of the actual kernel source you had installed. These symbolic links made it convenient for user-space applications to include kernel header files, which they occasionally need to do.

Even though user-space headers are now separate from kernel-space headers, sometimes applications still include kernel headers, either because an old library is used or because new information is needed that is not available in the user-space headers. However, many of the declarations in the kernel header files are relevant only to the kernel itself and should not be seen by user-space applications. These declarations are therefore protected by #ifdef __KERNEL__ blocks. That’s why your driver, like other kernel code, will need to be compiled with the __KERNEL__ preprocessor symbol defined.
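The effect of such a guard can be seen in a header fragment of the following shape. This is a sketch with invented names (demo_request, demo_private, demo_sees_kernel_decls), not an excerpt from the kernel sources; the probe function at the end exists only so the fragment can be compiled and checked on its own.

```c
/* demo_iface.h: hypothetical header shared by a driver and its tools */
#ifndef DEMO_IFACE_H
#define DEMO_IFACE_H

/* Visible to everyone: data exchanged with applications. */
struct demo_request {
    int channel;
    int value;
};

#ifdef __KERNEL__
/* Kernel-only part: internal state that applications should not see. */
struct demo_private {
    struct demo_request last;
    int open_count;
};
#endif /* __KERNEL__ */

#endif /* DEMO_IFACE_H */

/* Reports which view of the header this translation unit received. */
int demo_sees_kernel_decls(void)
{
#ifdef __KERNEL__
    return (int)(sizeof(struct demo_private) > 0);
#else
    return 0;
#endif
}
```

Compiled as an ordinary program, the fragment exposes only struct demo_request; compiled with the symbol defined (for instance, by passing -D__KERNEL__ to gcc), it exposes struct demo_private as well.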

The role of individual kernel headers will be introduced throughout the book as each of them is needed.

Developers working on any large software system (such as the kernel) must be aware of and avoid namespace pollution. Namespace pollution is what happens when there are many functions and global variables whose names aren’t meaningful enough to be easily distinguished. The programmer who is forced to deal with such an application expends much mental energy just to remember the “reserved” names and to find unique names for new symbols. Namespace collisions can create problems ranging from module loading failures to bizarre failures—which, perhaps, only happen to a remote user of your code who builds a kernel with a different set of configuration options.

Developers can’t afford to fall into such an error when writing kernel code because even the smallest module will be linked to the whole kernel.

The best approach for preventing namespace pollution is to declare all your symbols as static and to use a prefix that is unique within the kernel for the symbols you leave global. Also note that you, as a module writer, can control the external visibility of your symbols, as described in Section 2.3 later in this chapter.[7]
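As a small illustration of this advice, the sketch below keeps its helper and its counter static and gives the one global function a prefix. The hello_ prefix and all three names are chosen only for this example.

```c
#include <assert.h>

/* Private to this file: static keeps the name out of the global namespace. */
static int hello_count;

/* Also private; the prefix is optional here, but it can help when debugging. */
static int hello_clamp(int value, int max)
{
    return value > max ? max : value;
}

/* Left global on purpose, so it carries the module's hello_ prefix. */
int hello_record_event(int weight)
{
    assert(weight >= 0);
    hello_count += hello_clamp(weight, 10);
    return hello_count;
}
```

Only hello_record_event is visible to the linker; a second file could define its own count or clamp helper without any collision.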

Using the chosen prefix for private symbols within the module may be a good practice as well, as it may simplify debugging. While testing your driver, you could export all the symbols without polluting your namespace. Prefixes used in the kernel are, by convention, all lowercase, and we’ll stick to the same convention.

The last difference between kernel programming and application programming is in how each environment handles faults: whereas a segmentation fault is harmless during application development and a debugger can always be used to trace the error to the problem in the source code, a kernel fault is fatal at least for the current process, if not for the whole system. We’ll see how to trace kernel errors in Chapter 4, in Section 4.4.

A module runs in the so-called kernel space, whereas applications run in user space. This concept is at the base of operating systems theory.

The role of the operating system, in practice, is to provide programs with a consistent view of the computer’s hardware. In addition, the operating system must account for independent operation of programs and protection against unauthorized access to resources. This nontrivial task is only possible if the CPU enforces protection of system software from the applications.

Every modern processor is able to enforce this behavior. The chosen approach is to implement different operating modalities (or levels) in the CPU itself. The levels have different roles, and some operations are disallowed at the lower levels; program code can switch from one level to another only through a limited number of gates. Unix systems are designed to take advantage of this hardware feature, using two such levels. All current processors have at least two protection levels, and some, like the x86 family, have more levels; when several levels exist, the highest and lowest levels are used. Under Unix, the kernel executes in the highest level (also called supervisor mode), where everything is allowed, whereas applications execute in the lowest level (the so-called user mode), where the processor regulates direct access to hardware and unauthorized access to memory.

We usually refer to the execution modes as kernel space and user space. These terms encompass not only the different privilege levels inherent in the two modes, but also the fact that each mode has its own memory mapping—its own address space—as well.

Unix transfers execution from user space to kernel space whenever an application issues a system call or is suspended by a hardware interrupt. Kernel code executing a system call is working in the context of a process—it operates on behalf of the calling process and is able to access data in the process’s address space. Code that handles interrupts, on the other hand, is asynchronous with respect to processes and is not related to any particular process.

The role of a module is to extend kernel functionality; modularized code runs in kernel space. Usually a driver performs both the tasks outlined previously: some functions in the module are executed as part of system calls, and some are in charge of interrupt handling.

One way in which device driver programming differs greatly from (most) application programming is the issue of concurrency. An application typically runs sequentially, from the beginning to the end, without any need to worry about what else might be happening to change its environment. Kernel code does not run in such a simple world and must be written with the idea that many things can be happening at once.

There are a few sources of concurrency in kernel programming. Naturally, Linux systems run multiple processes, more than one of which can be trying to use your driver at the same time. Most devices are capable of interrupting the processor; interrupt handlers run asynchronously and can be invoked at the same time that your driver is trying to do something else. Several software abstractions (such as kernel timers, introduced in Chapter 6) run asynchronously as well. Moreover, of course, Linux can run on symmetric multiprocessor (SMP) systems, with the result that your driver could be executing concurrently on more than one CPU.

As a result, Linux kernel code, including driver code, must be reentrant—it must be capable of running in more than one context at the same time. Data structures must be carefully designed to keep multiple threads of execution separate, and the code must take care to access shared data in ways that prevent corruption of the data. Writing code that handles concurrency and avoids race conditions (situations in which an unfortunate order of execution causes undesirable behavior) requires thought and can be tricky. Every sample driver in this book has been written with concurrency in mind, and we will explain the techniques we use as we come to them.
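One aspect of reentrancy, keeping the data of separate callers apart, can be shown in plain user-space C. The sketch below contrasts a function that hides its state in a static buffer with one that works only on storage supplied by the caller. Both function names are hypothetical, and the example deliberately leaves out locking, which shared data also requires.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>

/* NOT reentrant: every caller shares the same hidden static buffer. */
const char *demo_label(int id)
{
    static char buf[32];

    snprintf(buf, sizeof(buf), "dev%d", id);
    return buf;
}

/* Reentrant: the caller owns the storage, so contexts never overlap. */
const char *demo_label_r(int id, char *buf, size_t len)
{
    assert(buf != NULL && len > 0);
    snprintf(buf, len, "dev%d", id);
    return buf;
}
```

Two calls to demo_label return the same pointer, so the second silently overwrites the result of the first; two calls to demo_label_r with separate buffers leave both results intact, no matter how the calls interleave.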

A common mistake made by driver programmers is to assume that concurrency is not a problem as long as a particular segment of code does not go to sleep (or “block”). It is true that the Linux kernel is nonpreemptive; with the important exception of servicing interrupts, it will not take the processor away from kernel code that does not yield willingly. In past times, this nonpreemptive behavior was enough to prevent unwanted concurrency most of the time. On SMP systems, however, preemption is not required to cause concurrent execution.

If your code assumes that it will not be preempted, it will not run properly on SMP systems. Even if you do not have such a system, others who run your code may have one. In the future, it is also possible that the kernel will move to a preemptive mode of operation, at which point even uniprocessor systems will have to deal with concurrency everywhere (some variants of the kernel already implement it). Thus, a prudent programmer will always program as if he or she were working on an SMP system.

Although kernel modules don’t execute sequentially as applications do, most actions performed by the kernel are related to a specific process. Kernel code can know the current process driving it by accessing the global item current, a pointer to struct task_struct, which as of version 2.4 of the kernel is declared in <asm/current.h>, included by <linux/sched.h>. The current pointer refers to the user process currently executing. During the execution of a system call, such as open or read, the current process is the one that invoked the call. Kernel code can use process-specific information by using current, if it needs to do so. An example of this technique is presented in Section 5.6, in Chapter 5.

Actually, current is no longer a true global variable, as it was in the first Linux kernels. The developers optimized access to the structure describing the current process by hiding it in the stack page. You can look at the details of current in <asm/current.h>. While the code you’ll look at might seem hairy, we must keep in mind that Linux is an SMP-compliant system, and a global variable simply won’t work when you are dealing with multiple CPUs. The details of the implementation remain hidden to other kernel subsystems though, and a device driver can just include <linux/sched.h> and refer to the current process.

From a module’s point of view, current is just like the external reference printk. A module can refer to current wherever it sees fit. For example, the following statement prints the process ID and the command name of the current process by accessing certain fields in struct task_struct:

printk("The process is \"%s\" (pid %i)\n",
       current->comm, current->pid);

The command name stored in current->comm is the base name of the program file that is being executed by the current process.

[4] This example, and all the others presented in this book, is available on the O’Reilly FTP site, as explained in Chapter 1.

[5] If you are new to building kernels, Alessandro has posted an article at http://www.linux.it/kerneldocs/kconf that should help you get started.

[6] The implementation found in Linux 2.0 and 2.2 has no support for the L and Z qualifiers. They were introduced in 2.4, though.

[7] Most versions of insmod (but not all of them) export all non-static symbols if they find no specific instruction in the module; that’s why it’s wise to declare as static all the symbols you are not willing to export.
