Chapter 4. System Management Using NIS

We’ve seen how NIS operates on master servers, slave servers, and clients, and how clients get map information from the servers. Just knowing how NIS works, however, does not lead to its efficient use. NIS servers must be configured so that map information remains consistent on all servers, and the number of servers and the load on each server should be evaluated so that there is not a user-noticeable penalty for referring to the NIS maps.

Ideally, NIS streamlines system administration tasks by allowing you to update configuration files on many machines by making changes on a single host. When designing a network to use NIS, you must ensure that its performance cost, measured by all users doing “normal” activities, does not exceed its advantages. This chapter explains how to design an NIS network, update and distribute NIS map data, manage multiple NIS domains, and integrate NIS hostname services with the Domain Name Service.

NIS network design

At this point, you should be able to set up NIS on master and slave servers and have a good understanding of how map changes are propagated from master to slave servers. Before creating a new NIS network, you should think about the number of domains and servers you will need. NIS network design entails deciding the number of domains, the number of servers for each domain, and the domain names. Once the framework has been established, installation and ongoing maintenance of the NIS servers is fairly straightforward.

Dividing a network into domains

The number of NIS domains that you need depends upon the division of your computing resources. Use a separate NIS domain for each group of systems that has its own system administrator. The job of maintaining a system also includes maintaining its configuration information, wherever it may exist.

Large groups of users sharing network resources may warrant a separate NIS domain if the users may be cleanly separated into two or more groups. The degree to which users in the groups share information should determine whether you should split them into different NIS domains. These large groups of users usually correspond very closely to the organizational groups within your company, and the level of information sharing within the group and between groups is fairly well defined.

A good example is that of a large university, where the physics and chemistry departments have their own networked computing environments. Information sharing within each department will be common, but interdepartment sharing is minimal. The physics department isn’t that interested in the machine names used by the chemistry department. The two departments will almost definitely be in two distinct NIS domains if they do not have the same system administrator (each probably gets one of its graduate students to assume this job). Assume, though, that they share an administrator — why create two NIS domains? The real motivation is to clearly mark the lines along which information is commonly shared. Setting up different NIS domains also keeps users in one department from using machines in another department.

Conversely, the need to create splinter groups of a few users for access to some machines should not warrant an independent NIS domain. Netgroups are better suited to handle this problem, because they create subsets of a domain, rather than an entirely new domain. A good example of a splinter group is the system administration staff — they may be given logins on central servers, while the bulk of the user community is not. Putting the system administrators in another domain generally creates more problems than the new domain was intended to solve.

Domain names

Choosing domain names is not nearly as difficult as gauging the number of domains needed. Just about any naming convention can be used provided that domain names are unique. You can choose to apply the name of the group as the NIS domain name; for example, you could use history, politics, and comp-sci to name the departments in a university.

If you are setting up multiple NIS domains that are based on hierarchical divisions, you may want to use a multilevel naming scheme with dot-separated name components:

    cslab.comp-sci
    staff.comp-sci
    profs.history
    grad.history

The first two domain names would apply to the “lab” machines and the departmental staff machines in the computer science department, while the two .history domain names separate the professors and graduate students in that department.

Multilevel domain names are useful if you will be using an Internet Domain Name Service. You can assign NIS domain names based on the name service domain names, so that every domain name is unique and also identifies how the additional name service is related to NIS. Integration of Internet name services and NIS is covered at the end of this chapter.

Number of NIS servers per domain

The number of servers per NIS domain is determined by the size of the domain and the aggregate service requirements for it, the level of failure protection required, and any physical network constraints that might affect client binding patterns. As a general rule, there should be at least two servers per domain: one master and one slave. The dual-server model offers basic protection if one server crashes, since clients of that server will rebind to the second server. With a solitary server, the operation of the network hinges on the health of the NIS server, creating both a performance bottleneck and a single point of failure in the network.

Increasing the number of NIS servers per domain reduces the impact of any one server crashing. With more servers, each one is likely to have fewer clients binding to it, assuming that the clients are equally likely to bind to any server. When a server crashes, fewer clients will be affected. Spreading the load out over several hosts may also reduce the number of domain rebindings that occur during unusually long server response times. If the load is divided evenly, this should level out variations in the NIS server response time due to server crashes and reboots.

There is no golden rule for allocating a certain number of servers for every n NIS clients. The total NIS service load depends on the type of work done on each machine and the relative speeds of client and server. A faster machine generates more NIS requests in a given time window than a slower one, if both machines are doing work that makes equal use of NIS. Some interactive usage patterns generate more NIS traffic than work that is CPU-intensive. A user who is continually listing files, compiling source code, and reading mail will make more use of password file entries and mail aliases than one who runs a text editor most of the time.

The bottom line is that very few types of work generate endless streams of NIS requests; most work makes casual references to the NIS maps separated by at most several seconds (compare this to disk accesses, which are usually separated by milliseconds). Generally, 30-40 NIS clients per server is an upper limit if the clients and servers are roughly the same speed. Faster clients need a lower client/server ratio, while a server that is faster than its clients might support 50 or more NIS clients. The best way to gauge server usage is to watch for ypbind requests for domain bindings, indicating that clients are timing out waiting for NIS service. Methods for observing binding requests are discussed in Section 13.4.2.
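The client-to-server ratio above lends itself to a quick calculation. The following is a minimal sketch, not a sizing tool: the function name nis_servers_needed is made up for illustration, and the ratio you pass in is your own estimate for your mix of client and server speeds. It rounds up and never returns fewer than the two servers recommended earlier.

```sh
#!/bin/sh
# nis_servers_needed CLIENTS CLIENTS_PER_SERVER   (hypothetical helper)
# Prints ceil(CLIENTS / CLIENTS_PER_SERVER), but never fewer than 2
# (one master plus one slave, the minimum suggested in the text).
nis_servers_needed() {
    clients=$1
    per_server=$2
    # Integer ceiling division: add (divisor - 1) before dividing.
    n=$(( ($clients + $per_server - 1) / $per_server ))
    # Enforce the two-server floor.
    [ "$n" -lt 2 ] && n=2
    echo "$n"
}
```

For instance, nis_servers_needed 100 40 asks how many servers 100 clients need at the upper end of the 30-40 range. Treat the result as a starting point and adjust it after watching for rebinding activity.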

Finally, the number of servers required may depend on the physical structure of the network. If you have decided to use four NIS servers, for example, and have two network segments with equal numbers of clients, joined by a bridge or router, make sure you divide the NIS servers equally on both sides of the network-partitioning hardware. If you put only one NIS server on one side of a bridge or router, then clients on that side will almost always bind to this server. The delay experienced by NIS requests in traversing the bridge approaches any server-related delay, so that the NIS server on the same side of the bridge will answer a client’s request before a server on the opposite side of the bridge, even if the closer server is more heavily loaded than the one across the bridge. With this configuration, you have undone the benefits of multiple NIS servers, since clients on the one-server side of the bridge bind to the same server in most cases. Locating lopsided NIS server bindings is discussed in Section 13.4.2.

Managing map files

Keeping map files updated on all servers is essential to the proper operation of NIS. There are two mechanisms for updating map files: using make and the NIS Makefile, which pushes maps from the master server to the slave servers, and the ypxfr utility, which pulls maps from the master server. This section starts with a look at how map file updates are made and how they get distributed to slave servers.

Having a single point of administration makes it easier to propagate configuration changes through the network, but it also means that you may have more than one person changing the same file. If there are several system administrators maintaining the NIS maps, they need to coordinate their efforts, or you will find that one person removes NIS map entries added by another. Using a source code control system, such as SCCS or RCS, in conjunction with NIS often solves this problem. In the second part of this section, we’ll see how to use alternate map source files and source code control systems with NIS.

Map distribution

Master and slave servers are distinguished by their ability to effect permanent changes to NIS maps. Changes may be made to an NIS map on a slave server, but the next map transfer from the master will overlay this change. Modify maps only on the master server, and push them from the master server to its slave servers. On the NIS master server, edit the source file for the map using your favorite text editor. Source files for NIS maps are listed in Table 3-1. Then go to the NIS map directory and build the new map using make, as shown here:

    # vi /etc/hosts
    # cd /var/yp
    # make
    ...New hosts map is built and distributed...

Without any arguments, make builds all maps that are out-of-date with respect to their ASCII source files. When more than one map is built from the same ASCII file, for example the passwd.byname and passwd.byuid maps built from /etc/passwd, they are all built when make is invoked.

When a map is rebuilt, the yppush utility is used to check the order number of the same map on each NIS server. If the maps are out-of-date, yppush transfers the map to the slave servers, using the server names in the ypservers map. Scripts to rebuild maps and push them to slave servers are part of the NIS Makefile, which is covered in Section 4.2.3.

Map transfers done on demand after source file modifications may not always complete successfully. The NIS slave server may be down, or the transfer may time out due to severe congestion or server host loading. To ensure that maps do not remain out-of-date for a long time (until the next NIS map update), NIS uses the ypxfr utility to transfer a map to a slave server. The slave transfers the map after checking the timestamp on its copy; if the master’s copy has been modified more recently, the slave server will replace its copy of the map with the one it transfers from the master. It is possible to force a map transfer to a slave server, ignoring the slave’s timestamp, which is useful if a map gets corrupted and must be replaced. Under Solaris, an additional master server daemon called ypxfrd is used to speed up map transfer operations, but the map distribution utilities resort to the old method if they cannot reach ypxfrd on the master server.

The map transfer, both in yppush and in ypxfr, is performed by requesting that the slave server walk through all keys in the modified map and build a map containing these keys. This seems quite counterintuitive, since you would hope that a map transfer amounts to nothing more than the master server sending the map to the slave server. However, NIS was designed to be used in a heterogeneous environment, so the master server’s DBM file format may not correspond to that used by the slave server. DBM files are tightly tied to the byte ordering and file block allocation rules of the server system, and a DBM file must be created on the system that indexes it. Slave servers, therefore, have to enumerate the entries in an NIS map and rebuild the map from them, using their own local conventions for DBM file construction. Indeed, it is theoretically possible to have an NIS server implementation that does not use DBM. When the slave server has rebuilt the map, it replaces its existing copy of the map with the new one. Schedules for transferring maps to slave servers and scripts to be run out of cron are provided in the next section.

Regular map transfers

Relying on demand-driven updates is overly optimistic, since a server may be down when the master is updated. NIS includes the ypxfr tool to perform periodic transfers of maps to slave servers, keeping them synchronized with the master server even if they miss an occasional yppush. The ypxfr utility will transfer a map only if the slave’s copy is out-of-date with respect to the master’s map.

Unlike yppush, ypxfr runs on the slave. ypxfr contacts the master server for a map, enumerates the entries in the map, and rebuilds a private copy of the map. If the map is built successfully, ypxfr replaces the slave server’s copy of the map with the newly created one. Note that doing a yppush from the NIS master essentially involves asking each slave server to perform a ypxfr operation if the slave’s copy of the map is out-of-date. The difference between yppush and ypxfr (besides the servers on which they are run) is that ypxfr retrieves a map even if the slave server does not have a copy of it, while yppush requires that the slave server have the map in order to check its modification time.
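The transfer decision described above can be sketched as a small comparison. This is only an illustration of the logic, not how ypxfr is implemented: the function name map_needs_transfer is hypothetical, and it assumes each copy of a map carries an integer order number that grows with every rebuild (on many systems yppoll reports such a number, but check your own documentation). An empty second argument stands for a slave that has no copy at all, the case that ypxfr handles and yppush does not.

```sh
#!/bin/sh
# map_needs_transfer MASTER_ORDER [SLAVE_ORDER]   (hypothetical helper)
# Exit status 0 means the slave should fetch the map.
map_needs_transfer() {
    master_order=$1
    slave_order=${2:-}
    # No local copy: ypxfr retrieves the map anyway.
    [ -z "$slave_order" ] && return 0
    # Otherwise transfer only when the master's copy is newer.
    [ "$master_order" -gt "$slave_order" ]
}
```

A forced transfer, as mentioned earlier, simply skips this check.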

ypxfr map updates should be scheduled out of cron based on how often the maps change. The passwd and aliases maps change most frequently, and could be transferred once an hour. Other maps, like the services and rpc maps, tend to be static and can be updated once a day. The standard mechanism for invoking ypxfr out of cron is to create two or more scripts based on transfer frequency, and to call ypxfr from the scripts. The maps included in the ypxfr_1perhour script are those that are likely to be modified several times during the day, while those in ypxfr_2perday and ypxfr_1perday may change once every few days:

ypxfr_1perhour script:
    /usr/lib/netsvc/yp/ypxfr passwd.byuid
    /usr/lib/netsvc/yp/ypxfr passwd.byname

ypxfr_2perday script:
    /usr/lib/netsvc/yp/ypxfr hosts.byname
    /usr/lib/netsvc/yp/ypxfr hosts.byaddr
    /usr/lib/netsvc/yp/ypxfr ethers.byaddr
    /usr/lib/netsvc/yp/ypxfr ethers.byname
    /usr/lib/netsvc/yp/ypxfr netgroup
    /usr/lib/netsvc/yp/ypxfr netgroup.byuser
    /usr/lib/netsvc/yp/ypxfr netgroup.byhost
    /usr/lib/netsvc/yp/ypxfr mail.aliases

ypxfr_1perday script:
    /usr/lib/netsvc/yp/ypxfr group.byname
    /usr/lib/netsvc/yp/ypxfr group.bygid
    /usr/lib/netsvc/yp/ypxfr protocols.byname
    /usr/lib/netsvc/yp/ypxfr protocols.bynumber
    /usr/lib/netsvc/yp/ypxfr networks.byname
    /usr/lib/netsvc/yp/ypxfr networks.byaddr
    /usr/lib/netsvc/yp/ypxfr services.byname
    /usr/lib/netsvc/yp/ypxfr ypservers

crontab entry:
    0 * * * * /usr/lib/netsvc/yp/ypxfr_1perhour
    0 0,12 * * * /usr/lib/netsvc/yp/ypxfr_2perday
    0 0 * * * /usr/lib/netsvc/yp/ypxfr_1perday

ypxfr logs its activity on the slave servers if the log file /var/yp/ypxfr.log exists when ypxfr starts.
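Because logging depends only on the file being present, turning it on amounts to creating an empty file on each slave. A minimal sketch follows; the function name enable_ypxfr_log is made up here, and the optional directory argument exists only so the helper can be tried somewhere other than /var/yp.

```sh
#!/bin/sh
# enable_ypxfr_log [YPDIR]   (hypothetical helper)
# Creates an empty ypxfr.log in YPDIR (default /var/yp) if none exists.
# An existing log is left untouched so earlier entries are not lost.
enable_ypxfr_log() {
    ypdir=${1:-/var/yp}
    log=$ypdir/ypxfr.log
    [ -f "$log" ] || : > "$log"
}
```

Presumably removing the file turns logging off again, since ypxfr checks for it each time it starts.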

Map file dependencies

Dependencies of NIS maps on ASCII source files are maintained by the NIS Makefile, located in the NIS directory /var/yp on the master server. The Makefile dependencies are built around timestamp files named after their respective source files. For example, the timestamp file for the NIS maps built from the password file is passwd.time, and the timestamp for the hosts maps is kept in hosts.time.

The timestamp files are empty because only their modification dates are of interest. The make utility is used to build maps according to the rules in the Makefile, and make compares file modification times to determine which targets need to be rebuilt. For example, make compares the timestamp on the passwd.time file and that of the ASCII /etc/passwd file, and rebuilds the NIS passwd map if the ASCII source file was modified since the last time the NIS passwd map was built.
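The comparison that make performs can be imitated by hand when you want to know whether a map is due for a rebuild without running make. The sketch below is an approximation under stated assumptions: the function name source_is_newer is hypothetical, and it relies on the standard find -newer test rather than on anything in the NIS Makefile itself.

```sh
#!/bin/sh
# source_is_newer SOURCE STAMP   (hypothetical helper)
# Exit status 0 if SOURCE was modified after STAMP, or if STAMP does
# not exist (a missing timestamp file always forces a rebuild).
source_is_newer() {
    src=$1
    stamp=$2
    [ -e "$stamp" ] || return 0
    # find prints SOURCE only when it is newer than STAMP.
    [ -n "$(find "$src" -newer "$stamp" 2>/dev/null)" ]
}
```

On a master server you might call it as source_is_newer /etc/passwd /var/yp/passwd.time before deciding whether a rebuild is pending.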

After editing a map source file, building the map (and any other maps that may depend on it) is done with make:

    # cd /var/yp
    # make passwd        Rebuilds only password map.
    # make               Rebuilds all maps that are out-of-date.

If the source file has been modified more recently than the timestamp file, make notes that the dependency in the Makefile is not met and executes the commands to regenerate the NIS map. In most cases, map regeneration requires that the ASCII file be stripped of comments, fed to makedbm for conversion to DBM format, and then pushed to all slave servers using yppush.

Be careful when building a few selected maps; if other maps depend on the modified map, then you may distribute incomplete map information. For example, Solaris uses the netid map to combine password and group information. The netid map is used by login shells to determine user credentials: for every user, it lists all of the groups that user is a member of. The netid map depends on both the /etc/passwd and /etc/group files, so when either one is changed, the netid map should be rebuilt.

But let’s say you make a change to the /etc/group file, and decide to just rebuild and distribute the group map:

    nismaster# cd /var/yp
    nismaster# make group

The commands in this example do not update the netid map, because the netid map doesn’t depend on the group map at all. The netid map depends on the /etc/group file — as does the group map — but in the previous example, you would have instructed make to build only the group map. If you build the group map without updating the netid map, users will become very confused about their group memberships: their login shells will read netid and get old group information, even though the NIS map source files appear correct.

The best solution to this problem is to build all maps that are out-of-date by using make with no arguments:

    nismaster# cd /var/yp
    nismaster# make
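If you do want to name targets explicitly, it helps to write down which maps hang off each source file. The lookup below is a sketch covering only the two files discussed in this section; the function name targets_for_source is hypothetical, and it assumes your NIS Makefile provides passwd, group, and netid targets, which you should confirm before relying on it.

```sh
#!/bin/sh
# targets_for_source FILE   (hypothetical helper)
# Prints the make targets that depend on FILE, per the discussion above.
# Returns nonzero for files this sketch does not know about.
targets_for_source() {
    case $1 in
        /etc/passwd) echo "passwd netid" ;;
        /etc/group)  echo "group netid" ;;
        *)           return 1 ;;
    esac
}
```

After editing /etc/group, the targets it prints can be handed to make in /var/yp so that the netid map is rebuilt along with the group map.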

Once the map is built, the NIS Makefile distributes it, using yppush, to the slave servers named in the ypservers map. yppush walks through the list of NIS servers and performs an RPC call to each slave server to check the timestamp on the map to be transferred. If the map is out-of-date, yppush uses another RPC call to the slave server to initiate a transfer of the map.

A map that is corrupted or was not successfully transferred to all slave servers can be explicitly rebuilt and repushed by removing its timestamp file on the master server:

    master# cd /var/yp
    master# rm hosts.time
    master# make hosts

This procedure should be used if a map was built when the NIS master server’s time was set incorrectly, creating a map that becomes out-of-date when the time is reset. If you need to perform a complete reconstruction of all NIS maps, for any reason, remove all of the timestamp files and run make:

    master# cd /var/yp
    master# rm *.time
    master# make

This extreme step is best reserved for testing the map distribution mechanism, or recovering from corruption of the NIS map directory.

Password file updates

One exception to the yppush push-on-demand strategy is the passwd map. Users need to be able to change their passwords without system manager intervention. The hosts file, for example, is changed by the superuser and then pushed to other servers when it is rebuilt. In contrast, when you change your password, you (as a nonprivileged user) modify the local password file. To change a password in an NIS map, the change must be made on the master server and distributed to all slave servers in order to be seen back on the client host where you made the change.

yppasswd is a user utility that is similar to the passwd program, but it changes the user’s password in the original source file on the NIS master server. yppasswd usually forces the password map to be rebuilt, although at sites choosing not to rebuild the map on demand, the new password will not be distributed until the next map transfer. yppasswd is used like passwd, but it reports the server name on which the modifications are made. Here is an example:

    [wahoo]% yppasswd
    Changing NIS password for stern on mahimahi.
    Old password:
    New password:
    Retype new password:
    NIS entry changed on mahimahi

Some versions of passwd (such as Solaris 2.6 and higher) check to see if the password file is managed by NIS, and invoke yppasswd if this is the case. Check your vendor’s documentation for procedures particular to your system.

NIS provides read-only access to its maps. There is nothing in the NIS protocol that allows a client to rewrite the data for a key. To accept changes to maps, a server distinct from the NIS server is required that modifies the source file for the map and then rebuilds the NIS map from the modified ASCII file. To handle incoming yppasswd change requests, the master server must run the yppasswdd daemon (note the second “d” in the daemon’s name). This RPC daemon gets started in the /usr/lib/netsvc/yp/ypstart boot script on the master NIS server only:

    if [ "$master" = "$hostname" -a X$YP_SERVER = "XTRUE" ]; then
        ...
        if [ -x $YPDIR/rpc.yppasswdd ]; then
            PWDIR=`grep "^PWDIR" /var/yp/Makefile 2> /dev/null` \
            && PWDIR=`expr "$PWDIR" : '.*=[ ]*\([^ ]*\)'`
            if [ "$PWDIR" ]; then
                if [ "$PWDIR" = "/etc" ]; then
                    unset PWDIR
                else
                    PWDIR="-D $PWDIR"
                fi
            fi
            $YPDIR/rpc.yppasswdd $PWDIR -m \
            && echo ' rpc.yppasswdd\c'
        fi
        ...
    fi

The host making a password map change locates the master server by asking for the master of the NIS passwd map, and the yppasswdd daemon acts as a gateway between the user’s host and a passwd-like utility on the master server. The location of the master server’s password file and options to build a new map after each update are given as command-line arguments to yppasswdd, as shown in the previous example.
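The PWDIR handling in the ypstart fragment is easier to follow when traced in isolation. The sketch below restates it as two small functions; the names parse_pwdir and yppasswdd_args are made up for illustration, and the sample directory /var/yp/src used afterward is hypothetical. It shows only how the argument list is assembled and does not start the daemon.

```sh
#!/bin/sh
# parse_pwdir LINE   (hypothetical helper)
# Extracts the value from a Makefile line such as "PWDIR = /etc",
# using the same expr pattern as the ypstart fragment.
parse_pwdir() {
    expr "$1" : '.*=[ ]*\([^ ]*\)'
}

# yppasswdd_args PWDIR   (hypothetical helper)
# Prints the arguments ypstart would hand to rpc.yppasswdd:
# -D is added only when PWDIR is set and is not the default /etc.
yppasswdd_args() {
    pwdir=$1
    if [ -n "$pwdir" ] && [ "$pwdir" != "/etc" ]; then
        echo "-D $pwdir -m"
    else
        echo "-m"
    fi
}
```

With the password source left in /etc the daemon is started with -m alone; pointing PWDIR at a directory such as /var/yp/src adds the -D argument described next.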

The -D argument specifies the name of the master server’s source for the password map; it may be the default /etc/passwd or it may point to an alternative password file.[1] The -m option specifies that make is to be performed in the NIS directory on the master server. You can optionally specify arguments after -m that are passed to make. With a default setup, the fragment in ypstart would cause yppasswdd to invoke make as:

    # ( cd /var/yp; make )

after each change to the master’s password source file. Since it is likely only the password file will have changed, only the password maps get rebuilt and pushed. You can ensure that only the password maps get pushed by changing the yppasswdd line in ypstart to:

    $YPDIR/rpc.yppasswdd $PWDIR -m passwd \
    && echo ' rpc.yppasswdd\c'

Source code control for map files

With multiple system administrators and a single point of administration, it is possible for conflicting or unexplained changes to NIS maps to wreak havoc with the network. The best way to control modifications to maps and to track the change history of map source files is to put them under a source code control system such as SCCS.

Source code files usually contain the SCCS headers in a comment or in a global string that gets compiled into an executable. Putting SCCS keywords into comments in the /etc/hosts and /etc/aliases files allows you to track the last version and date of edit:

header to be added to file:
    # /etc/hosts header
    # %M% %I% %H% %T%
    # %W%

keywords filled in after getting file from SCCS:
    # /etc/hosts header
    # hosts 1.32 12/29/90 16:37:52
    # @(#)hosts 1.32

Once the headers have been added to the map source files, put them under SCCS administration:

    nismaster# cd /etc
    nismaster# mkdir SCCS
    nismaster# /usr/ccs/bin/sccs admin -ialiases aliases
    nismaster# /usr/ccs/bin/sccs admin -ihosts hosts
    nismaster# /usr/ccs/bin/sccs get aliases hosts

The copies of the files that are checked out of SCCS control are read-only. Someone making a casual change to a map is forced to go and check it out of SCCS properly before doing so. Using SCCS, each change to a file is documented before the file gets put back under SCCS control. If you always return a file to SCCS before it is converted into an NIS map, the SCCS control file forms an audit trail for configuration changes:

    nismaster# cd /etc
    nismaster# sccs prs hosts
    D 1.31 00/05/22 08:52:35 root 31 30 00001/00001/00117
    MRs:
    COMMENTS:
    added new host for info-center group

    D 1.30 00/06/04 07:19:04 root 30 29 00001/00001/00117
    MRs:
    COMMENTS:
    changed bosox-fddi to jetstar-fddi

    D 1.29 90/11/08 11:03:47 root 29 28 00011/00011/00107
    MRs:
    COMMENTS:
    commented out the porting lab systems.

If a change to the hosts or aliases file breaks something, SCCS can be used to find the exact lines that were changed and the time the change was made (to confirm that the modification caused the network problems).
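The check-out, edit, and check-in cycle that produces such an audit trail might be wrapped in a helper like the one below. This is a sketch under stated assumptions: the names run, edit_map_source, SCCS_CMD, and DRY_RUN are all invented for illustration, it must be run from the directory holding the SCCS subdirectory (here /etc), and by default it only prints the commands so you can review them before letting it execute anything.

```sh
#!/bin/sh
# Hypothetical settings: path to sccs (as used earlier in this section)
# and a dry-run switch that defaults to printing instead of executing.
SCCS_CMD=${SCCS_CMD:-/usr/ccs/bin/sccs}
DRY_RUN=${DRY_RUN:-1}

# run CMD [ARGS...]: print the command in dry-run mode, else execute it.
run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# edit_map_source FILE COMMENT   (hypothetical helper)
edit_map_source() {
    file=$1
    comment=$2
    run "$SCCS_CMD" edit "$file"                 # check out a writable copy
    run "${EDITOR:-vi}" "$file"                  # make the change
    run "$SCCS_CMD" delget -y"$comment" "$file"  # record delta, get read-only copy
}
```

Setting DRY_RUN=0 makes the helper execute the steps; the comment you supply is what later appears under COMMENTS in the prs listing. Rebuilding the NIS map with make remains a separate step.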

The two disadvantages to using SCCS for NIS maps are that all changes must be made as root and that it won’t work for the password file. The superuser must perform all file checkouts and modifications, unless the underlying file permissions are changed to make the files writable by nonprivileged users. If all changes are made by root, then the SCCS logs do not contain information about the user making the change. The password file falls outside of SCCS control because its contents will be modified by users changing their passwords, without being able to check the file out of SCCS first. Also, some files, such as /etc/group, have no comment lines, so you cannot use SCCS keywords in them.

Using alternate map source files

You may decide to use nonstandard source files for various NIS maps on the master server, especially if the master server is not going to be an NIS client. Alternatively, you may need to modify the standard NIS Makefile to build your own NIS maps. Approaches to both of these problems are discussed in this section.

Some system administrators prefer to build the NIS password map from a file other than /etc/passwd, giving them finer control over access to the server. Separating the host’s and the NIS password files is also advantageous if there are password file entries on the server (such as those for dial-in UUCP) that shouldn’t be made available on all NIS clients. To avoid distributing UUCP password file entries to all NIS clients, the NIS password file should be kept separately from /etc/passwd on the master server. The master can include private UUCP password file entries and can embed the entire NIS map file via nsswitch.conf.

If you de-couple the NIS password map from the master server’s password file, then the NIS Makefile should be modified to reflect the new dependency. Refer back to the procedure described in Section 3.2.2.

Advanced NIS server administration

Once NIS is installed and running, you may find that you need to remove or rearrange your NIS servers to accommodate an increased load on one server. For example, if you attach several printers to an NIS server and use it as a print server, it may no longer make a good NIS server if most of its bandwidth is used for driving the printers. If this server is your master NIS server, you may want to assign NIS master duties to another host. We’ll look at these advanced administration problems in this section.

Removing an NIS slave server

If you decommission an NIS slave server, or decide to stop running NIS on it because the machine is loaded by other functions, you need to remove it from the ypservers map and turn off NIS. If a host is listed in the ypservers map but is not running ypserv, then attempts to push maps to this host will fail. This will not cause any data corruption or NIS service failures. It will, however, significantly increase the time required to push the NIS maps because yppush times out waiting for the former server to respond before trying the next server.

There is no explicit “remove” procedure in the NIS maintenance tools, so you have to do this manually. Start by rebuilding the ypservers map on the NIS master server:

    master# cd /var/yp
    master# ypcat -k ypservers | grep -v servername | makedbm - /var/yp/`domainname`/ypservers

The ypcat command line prints the entries in the current ypservers map, then removes the entry for the desired server using grep -v. This shortened list of servers is given to makedbm, which rebuilds the ypservers map. If the decommissioned server is not being shut down permanently, make sure you remove the NIS maps in /var/yp on the former server so that the machine doesn’t start ypserv on its next boot and provide out-of-date map information to the network. Many strange problems result if an NIS server is left running with old maps: the server will respond to requests, but may provide incorrect information to the client. After removing the maps and rebuilding ypservers, reboot the former NIS server and check to make sure that ypserv is not running. You may also want to force a map distribution at this point to test the new ypservers map. The yppush commands used in the map distribution should not include the former NIS server.
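One caution about the grep -v step: it drops every line that contains the server name anywhere, so removing a host called wahoo would also remove a host called wahoo2. A stricter filter that compares the whole key is sketched below. The function name drop_server is hypothetical, and it assumes the ypcat -k output puts the server name in the first whitespace-separated field.

```sh
#!/bin/sh
# drop_server NAME   (hypothetical helper)
# Reads "key value" lines on stdin and prints every line whose first
# field is not exactly NAME. Partial matches are kept.
drop_server() {
    awk -v name="$1" '$1 != name'
}
```

It slots into the same pipeline in place of grep -v, between ypcat -k ypservers and makedbm.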

Changing NIS master servers

The procedure described in the previous section works only for slave servers. There are some additional dependencies on the master server that must be removed before an NIS master can be removed. To switch NIS master service to another host, you must rebuild all NIS maps to reflect the name of the new master host, update the ypservers map if the old master is being taken out of service, and distribute the new maps (with the new master server record) to all slave servers.

Here are the steps used to change master NIS servers:

Build the new master host as a slave server, initializing its domain directory and filling it with copies of the current maps. Each map must be rebuilt on the new master, which requires the NIS Makefile and map source files from the old master. Copy the source files and the NIS Makefile to the new master, and then rebuild all of the maps — but do not attempt to push them to other slave servers:

newmaster#

cd /var/ypnewmaster#rm *.timenewmaster#make NOPUSH=1Removing all of the timestamp files forces every map to be rebuilt; passing NOPUSH=1 to make prevents the maps from being pushed to other servers. At this point, you have NIS maps that contain master host records pointing to the new NIS master host.

Install copies of the new master server’s maps on the old master server. Transferring the new maps to existing NIS servers is made more difficult because of the process used by yppush: when a map is pushed to a slave server via the transfer-map NIS RPC call, the slave server consults its own copy of the map to determine the master server from which it should load a new copy. This is an NIS security feature: it prevents someone from creating an NIS master server and forcing maps onto the valid slave servers using yppush. The slave servers will look to their current NIS master server for map data, rather than accepting it from the renegade NIS master server.

In the process of changing master servers, the slave servers’ maps will point to the old master server. To work around yppush, first move the new maps to the old master server using ypxfr:

oldmaster#

/usr/lib/netsvc/yp/ypxfr -h newmaster -f passwd.byuidoldmaster#/usr/lib/netsvc/yp/ypxfr -h newmaster -f passwd.bynameoldmaster#/usr/lib/netsvc/yp/ypxfr -h newmaster -f hosts.byname...include all NIS maps...The -h newmaster option tells the old master server to grab the map from the new master server, and the -f flag forces a transfer even if the local version is not out of order with the new map. Every NIS map must be transferred to the old master server. When this step is complete, the old master server’s maps all point to the new master server.

On the old master server, distribute copies of the new maps to all NIS slave servers using yppush:

    oldmaster# /usr/lib/netsvc/yp/yppush passwd.byuid
    oldmaster# /usr/lib/netsvc/yp/yppush passwd.byname
    oldmaster# /usr/lib/netsvc/yp/yppush hosts.byname
    ...include all NIS maps...

yppush forces the slave servers to look at their old maps, find the master server (still the old master), and copy the current map from the master server. Because the map itself contains the pointer record to the master server, transferring the entire map automatically updates the slave servers’ maps to point to the new master server.
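Once the maps have been pushed, it is worth confirming that none of them still names the old master. The following is a minimal sketch, not part of the original procedure: the function name maps_not_mastered_by is hypothetical, and it assumes that ypwhich -m prints one "mapname master" pair per line, which you should check against your own system's output.

```shell
#!/bin/sh
# Hypothetical helper: read "mapname master" pairs on stdin (the format
# assumed here for `ypwhich -m` output) and print every map whose
# recorded master differs from the expected host.
maps_not_mastered_by() {
    expected=$1
    while read -r map master; do
        [ -n "$map" ] || continue          # skip blank lines
        if [ "$master" != "$expected" ]; then
            echo "$map"
        fi
    done
}

# Usage on a live NIS client (not run here):
#   ypwhich -m | maps_not_mastered_by newmaster
```

An empty result suggests that every map seen by the server this client is bound to now points to the new master; any map names printed are candidates for another ypxfr and yppush pass.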

If the old master server is being removed from NIS service, rebuild the ypservers map.

Many of these steps can be automated using shell scripts or simple rule additions to the NIS Makefile, requiring less effort than it might seem. For example, you can merge steps 2 and 3 in a single shell script that transfers maps from the new master to the old master, and then pushes each map to all of the slave servers. Run this script on the old master server:

    #! /bin/sh
    # Pull each map from the new master, then push it to the slave servers.
    MAPS="passwd.byuid passwd.byname hosts.byname ..."
    NEWMASTER=newmaster

    for map in $MAPS
    do
        echo moving $map
        /usr/lib/netsvc/yp/ypxfr -h $NEWMASTER -f $map
        /usr/lib/netsvc/yp/yppush $map
    done

The alternative to this method is to rebuild the entire NIS system from scratch, starting with the master server. In the process of building the system, NIS service on the network will be interrupted as slave servers are torn down and rebuilt with new maps.
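Returning to the transfer script: because every map must be moved, a hand-maintained MAPS list is easy to get wrong. One possible refinement, sketched here under the assumption that each map in the domain directory is stored as a NAME.dir and NAME.pag pair, is to derive the list from the directory itself. The function name list_maps is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print the map names found in an NIS domain
# directory, assuming each map is stored as NAME.dir plus NAME.pag.
list_maps() {
    dir=$1
    for f in "$dir"/*.dir; do
        [ -f "$f" ] || continue      # the glob matched nothing
        basename "$f" .dir
    done
}

# Usage on the old master (not run here):
#   DOMAIN=`domainname`
#   MAPS=`list_maps /var/yp/$DOMAIN`
```

This only finds maps that already exist on the host where it runs, so a map that is new on the new master would still have to be added by hand.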

Managing multiple domains

A single NIS server may be a slave of more than one master server, if it is providing service to multiple domains. In addition, a server may be a master for one domain and a slave of another. Multimaster relationships are set up when NIS is installed on each of the master servers. In the course of building the ypservers map, the slave servers handling multiple domains are named in the ypservers map for each domain.

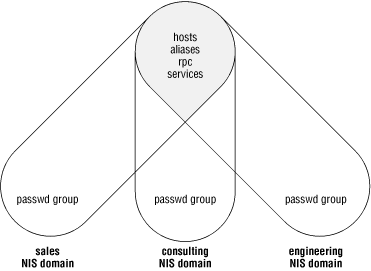

When multiple domains are used with independent NIS servers (each serving only one domain), it is sometimes necessary to keep one or more of the maps in these domains in perfect synchronization. Domains with different password and group files, for example, might still want to share global alias and host maps to simplify administration. Adding a new user to either domain would make the user’s mail aliases appear in the global alias file, to be shared by both domains. Figure 4-1 shows three NIS domains that share some maps and keep private copies of others.

The hosts and aliases maps are shared between the NIS domains so that any changes to them are reflected on all NIS clients in all domains. The passwd and group files are managed on a per-domain basis so that new users or groups in one domain do not automatically appear in the other domains. This gives the system administrators fine control over user access to machines and files in each NIS domain.

A much simpler case is the argument for having a single /etc/rpc file and an /etc/services file across all domains in an organization. As locally developed or third-party software that relies on these additional services is distributed to new networks, the required configuration changes will be in place. This scenario is most common when multiple NIS domains are run on a single network with less than one system administrator per domain.

Sharing maps across domains involves setting up a master/slave relationship between the two NIS master servers. The map transfer can be done periodically out of cron on the “slave” master server, or the true master server for the map can push the modified source file to the secondary master after each modification. The latter method offers the advantages of keeping the map source files synchronized and keeping the NIS maps current as soon as changes are made, but it requires that the superuser have remote execution permissions on the secondary NIS master server.

To force a source file to be pushed to another domain, modify the NIS Makefile to copy the source file to the secondary master server, and rebuild the map there:

    hosts.time:
            ....
            rebuild hosts.byname and hosts.byaddr
            @touch hosts.time;
            @echo "updated hosts";
            @if [ ! $(NOPUSH) ]; then $(YPPUSH) -d $(DOM) hosts.byname; fi
            @if [ ! $(NOPUSH) ]; then $(YPPUSH) -d $(DOM) hosts.byaddr; fi
            @if [ ! $(NOPUSH) ]; then echo "pushed hosts"; fi
            @echo "copying hosts file to NIS server ono"
            @rcp /etc/hosts ono:/etc/hosts
            @echo "updating NIS maps on ono"
            @rsh ono "( cd /var/yp; make hosts )"

The commands in the Makefile are preceded by at signs (@) to suppress command echo when make is executing them. rcp moves the file over to the secondary master server, and the script invoked by rsh rebuilds the maps on server ono.
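The NOPUSH guard in these rules relies on a small quirk of the shell's test command. After make expands $(NOPUSH), the shell sees either [ ! ] or [ ! 1 ]. With a single argument, test succeeds whenever that argument is a non-empty string, so [ ! ] is true and the push happens; with NOPUSH=1 the expression is a negated non-empty string, which is false. The sketch below reproduces that behavior outside of make; the function name push_decision is made up for illustration.

```shell
#!/bin/sh
# Mirrors the Makefile guard `if [ ! $(NOPUSH) ]`: the shell sees either
# `[ ! ]` (true, so push) or `[ ! 1 ]` (false, so skip).
push_decision() {
    NOPUSH=$1
    # $NOPUSH is deliberately left unquoted, as in the Makefile.
    if [ ! $NOPUSH ]; then
        echo "push"
    else
        echo "skip"
    fi
}

push_decision ""    # plain `make`: the maps would be pushed
push_decision 1     # `make NOPUSH=1`: the push is skipped
```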

Superuser privileges are not always extended from one NIS server to another, and this scheme works only if the rsh and rcp commands can be executed. In order to get the maps copied to the secondary master server, you need to be able to access that server as root. You might justifiably be concerned about the security implications, since the rcp and rsh commands work without password prompts. One alternative is to leave the source files out-of-date and simply move the map file to the secondary master and have it distributed to slave servers in the second domain. Another alternative is to use Kerberos V5 versions of rcp and rsh or to use the secure shell (ssh). Kerberos V5 and ssh are available as free software or in commercial form. Your vendor might even provide one or both. For Solaris 2.6 and upward, you can get the Sun Enterprise Authentication Mechanism (SEAM) product from Sun, which has Kerberos V5, including rcp and rsh using Kerberos V5 security (see Section 12.5.5.2). If you use SEAM, you’ll want to prefix rcp and rsh in the Makefile with /usr/krb5/bin/.
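If you choose the secure shell route, the copy-and-rebuild step might look something like the following sketch. It assumes that scp and ssh are installed and that root on the primary master can reach the secondary master with key-based authentication; neither is guaranteed by anything above. The run and push_hosts helpers and the DRY_RUN variable are hypothetical, and with DRY_RUN left at its default of 1 the commands are only printed, not executed.

```shell
#!/bin/sh
# Sketch: the rcp/rsh step from the Makefile, expressed with scp and ssh.
# DRY_RUN=1 (the default here) prints the commands instead of running them.
DRY_RUN=${DRY_RUN:-1}

run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# push_hosts HOST: copy the hosts source file to HOST, then rebuild there.
# The rebuild is attempted only if the copy succeeded.
push_hosts() {
    host=$1
    run scp /etc/hosts "$host:/etc/hosts" &&
    run ssh "$host" "cd /var/yp && make hosts"
}

push_hosts ono
```

Chaining the two commands with && differs slightly from the Makefile rule: the remote rebuild is skipped when the copy fails, so the secondary master is not rebuilt from a stale source file.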

The following script can be run out of cron on the secondary master server to pick up the host maps from NIS server mahimahi, the master server for domain nesales:

    #! /bin/sh
    /usr/lib/netsvc/yp/ypxfr -h mahimahi -s nesales hosts.byname
    /usr/lib/netsvc/yp/ypxfr -h mahimahi -s nesales hosts.byaddr
    /usr/lib/netsvc/yp/yppush -d `domainname` hosts.byname
    /usr/lib/netsvc/yp/yppush -d `domainname` hosts.byaddr

The ypxfr commands get the maps from the primary master server, and then the yppush commands distribute them in the local, secondary NIS domain. The -h option to ypxfr specifies the hostname from which to initiate the transfer, and overrides the map’s master record. The -s option indicates the domain from which the map is to be taken. Note that in this approach, the hosts map points to mahimahi as the master in both domains. If the rcp-based transfer is used, then the hosts map in each domain points to the master server in that domain. The master server record in the map always indicates the host containing a source file from which the map can be rebuilt.
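The cron script pushes the maps whether or not the transfers worked. A more defensive variant, sketched below, pushes a map only after its transfer reports success. This assumes that ypxfr exits with a nonzero status on failure, which you should confirm on your platform before relying on it. The sync_map function is hypothetical, and the YPXFR and YPPUSH variables exist only so the paths can be overridden.

```shell
#!/bin/sh
# Sketch: push a map into the local domain only if fetching it succeeded.
# Assumes ypxfr exits nonzero on failure; verify this on your platform.
YPXFR=${YPXFR:-/usr/lib/netsvc/yp/ypxfr}
YPPUSH=${YPPUSH:-/usr/lib/netsvc/yp/yppush}

# sync_map MASTER SRCDOMAIN LOCALDOMAIN MAP
sync_map() {
    if "$YPXFR" -h "$1" -s "$2" "$4"; then
        "$YPPUSH" -d "$3" "$4"
    else
        echo "transfer of $4 from $1 failed; not pushing" >&2
        return 1
    fi
}

# Usage from the cron script (not run here):
#   DOMAIN=`domainname`
#   for map in hosts.byname hosts.byaddr; do
#       sync_map mahimahi nesales "$DOMAIN" "$map"
#   done
```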
