Chapter 1. Design, Build, and Specify APIs

You will be presented with many options when designing and building APIs. It is incredibly fast to build a service with modern technologies and frameworks, but creating a durable approach requires careful thought and consideration. In this chapter we will explore REST and RPC to model the producer and consumer relationships in the case study.

You will discover how standards can help to shortcut design decisions and navigate away from potential compatibility issues. You will look at OpenAPI Specifications, the practical uses for teams, and the importance of versioning.

RPC-based interactions are specified using a schema; to compare and contrast with a REST approach, we will explore gRPC. With both REST and gRPC in mind, we will look at the different factors to consider in how we model exchanges. We will look at the possibility of providing both a REST and RPC API in the same service and whether this is the right thing to do.

Case Study: Designing the Attendee API

In the Introduction we decided to migrate our legacy conference system and move toward a more API-driven architecture. As a first step to making this change, we are going to create a new Attendee service, which will expose a matching Attendee API. We also provided a narrow definition of an API. In order to design effectively, we need to consider more broadly the exchange between the producer and consumer, and more importantly who the producer and consumer are. The producer is owned by the attendee team. This team maintains two key relationships:

- The attendee team owns the producer, and the legacy conference team owns the consumer. There is a close relationship between these two teams and any changes in structure are easily coordinated. A strong cohesion between the producer/consumer services is possible to achieve.

- The attendee team owns the producer, and the external CFP system team owns the consumer. There is a relationship between the teams, but any changes need to be coordinated to not break the integration. A loose coupling is required and breaking changes would need to be carefully managed.

We will compare and contrast the approaches to designing and building the Attendee API throughout this chapter.

Introduction to REST

REpresentational State Transfer (REST) is a set of architectural constraints, most commonly applied using HTTP as the underlying transport protocol. Roy Fielding’s dissertation “Architectural Styles and the Design of Network-based Software Architectures” provides a complete definition of REST. From a practical perspective, to be considered RESTful your API must ensure that:

- A producer-to-consumer interaction is modeled where the producer models resources the consumer can interact with.

- Requests from consumer to producer are stateless, meaning that the producer doesn’t cache details of a previous request. In order to build up a chain of requests on a given resource, the consumer must send any required information to the producer for processing.

- Responses are cacheable, meaning the producer can provide hints to the consumer where this is appropriate. In HTTP these hints are often carried in the response headers.

- A uniform interface is conveyed to the consumer. You will explore the use of verbs, resources, and other patterns shortly.

- It is a layered system, abstracting away the complexity of systems sitting behind the REST interface. For example, the consumer should not know or care if they’re interacting with a database or other services.

Introduction to REST and HTTP by Example

Let’s see an example of REST over HTTP. The following exchange is a GET request, where GET represents the method or verb. A verb such as GET describes the action to take on a particular resource; in this example, we consider the attendees resource. An Accept header is passed to define the type of content the consumer would like to retrieve. REST defines the notion of a representation in the body and allows for representation metadata to be defined in the headers.

In the examples in this chapter, we represent a request above the --- separator and a response below:

GET http://mastering-api.com/attendees

Accept: application/json

---

200 OK

Content-Type: application/json

{

"displayName": "Jim",

"id": 1

}

The response includes the status code and message from the server, which enables the consumer to interrogate the result of the operation on the server-side resource.

The status code of this request was a 200 OK, meaning the request was successfully processed by the producer.

In the response body a JSON representation containing the conference attendees is returned.

Many content types are valid for return from a REST API; however, it is important to consider if the content type is parsable by the consumer.

For example, returning application/pdf is valid but would not represent an exchange that could easily be used by another system.

We will explore approaches to modeling content types, primarily looking at JSON, later in this chapter.

Note

REST is relatively straightforward to implement because the client and server relationship is stateless, meaning no client state is persisted by the server. The client must pass the context back to the server in subsequent requests; for example, a request for http://mastering-api.com/attendees/1 would retrieve more information on a specific attendee.
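The GET exchange shown earlier can be sketched from the consumer side using the HTTP client that ships with Java 11 and later. This is a minimal illustration rather than a definitive client: the class and method names are hypothetical, and the requests are only built, not sent, so no running server is assumed.

```java
import java.net.URI;
import java.net.http.HttpRequest;

// Illustrative sketch only: builds (but does not send) requests against the
// Attendee API. Class and method names are hypothetical.
final class AttendeeRequests {
    // Base URL taken from the chapter's examples.
    static final String BASE_URL = "http://mastering-api.com";

    // GET /attendees, asking for a JSON representation via the Accept header.
    static HttpRequest listAttendees() {
        return HttpRequest.newBuilder()
                .uri(URI.create(BASE_URL + "/attendees"))
                .header("Accept", "application/json")
                .GET()
                .build();
    }

    // GET /attendees/{id}: the consumer supplies the context (the ID) on
    // every call, because the producer keeps no client state between requests.
    static HttpRequest getAttendee(int id) {
        return HttpRequest.newBuilder()
                .uri(URI.create(BASE_URL + "/attendees/" + id))
                .header("Accept", "application/json")
                .GET()
                .build();
    }
}
```

A request built this way could be sent with `HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString())`, after which the consumer would inspect the status code and body as described above.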

The Richardson Maturity Model

Speaking at QCon in 2008, Leonard Richardson presented his experiences of reviewing many REST APIs. Richardson found levels of adoption that teams apply to building APIs from a REST perspective. Martin Fowler also covered Richardson’s maturity heuristics on his blog. Table 1-1 explores the different levels represented by Richardson’s maturity heuristics and their application to RESTful APIs.

| Level | Description |
| --- | --- |
| Level 0 - HTTP/RPC | Establishes that the API is built using HTTP and has the notion of a single URI. Taking our preceding example of |
| Level 1 - Resources | Establishes the use of resources and starts to bring in the idea of modeling resources in the context of the URI. In our example, if we added |
| Level 2 - Verbs (Methods) | Starts to introduce the correct modeling of multiple resource URIs accessed by different request methods (also known as HTTP verbs) based on the effect of the resources on the server. An API at level 2 can make guarantees around |
| Level 3 - Hypermedia Controls | This is the epitome of REST design and involves navigable APIs by the use of HATEOAS (Hypermedia As The Engine Of Application State). In our example, when we call |

When designing API exchanges, the different levels of Richardson Maturity are important to consider. Moving toward level 2 will enable you to project an understandable resource model to the consumer, with appropriate actions available against the model. In turn, this reduces coupling and hides the full detail of the backing service. Later we will also see how this abstraction is applied to versioning.

If the consumer is the CFP team, modeling an exchange with low coupling and projecting a RESTful model would be a good starting point. If the consumer is the legacy conference team, we may still choose to use a RESTful API, but there is also another option with RPC. In order to start to consider this type of traditionally east–west modeling, we will explore RPC.

Introduction to Remote Procedure Call (RPC) APIs

A Remote Procedure Call (RPC) involves calling a method in one process but having it execute code in another process. While REST can project a model of the domain and provides an abstraction from the underlying technology to the consumer, RPC involves exposing a method from one process and allows it to be called directly from another.

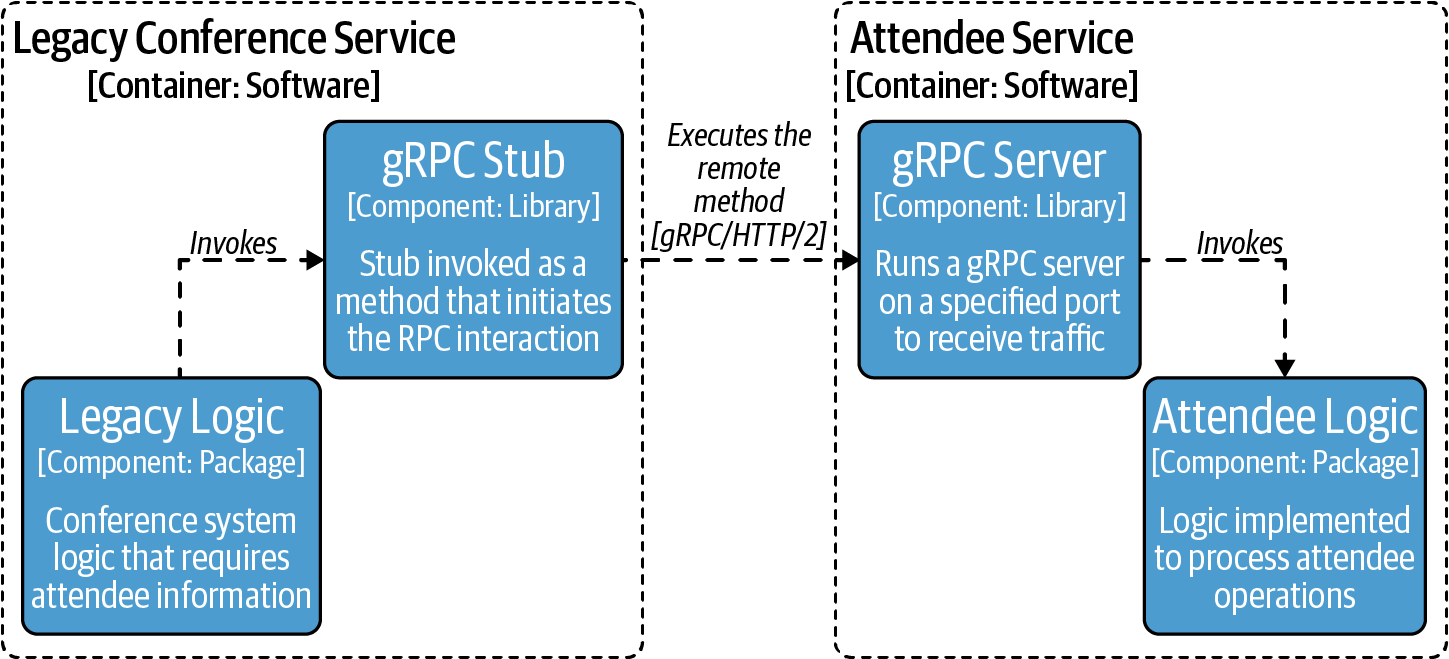

gRPC is a modern, open source, high-performance RPC framework. gRPC is under stewardship of the Linux Foundation and is the de facto standard for RPC across most platforms. Figure 1-1 describes an RPC call in gRPC, which involves the legacy conference service invoking the remote method on the Attendee service. The gRPC Attendee service starts and exposes a gRPC server on a specified port, allowing methods to be invoked remotely. On the client side (the legacy conference service), a stub is used to abstract the complexity of making the remote call into the library. gRPC requires a schema to fully cover the interaction between producer and consumer.

Figure 1-1. Example C4 component diagram using gRPC

A key difference between REST and RPC is state. REST is by definition stateless; with RPC, state depends on the implementation. RPC-based integrations in certain situations can also build up state as part of the exchange. This buildup of state has the convenience of high performance at the potential cost of reliability and routing complexities. With RPC the model tends to convey the exact functionality at a method level that is required from a secondary service. This optionality in state can lead to an exchange that is potentially more coupled between producer and consumer. Coupling is not always a bad thing, especially in east–west services where performance is a key consideration.

A Brief Mention of GraphQL

Before we explore REST and RPC styles in detail, we would be remiss not to mention GraphQL and where it fits into the API world. RPC offers access to a series of individual functions provided by a producer but does not usually extend a model or abstraction to the consumer. REST, on the other hand, extends a resource model for a single API provided by the producer. It is possible to offer multiple APIs on the same base URL using API gateways. We will explore this notion further in Chapter 3. If we offer multiple APIs in this way, the consumer will need to query sequentially to build up state on the client side. The consumer also needs to understand the structure of all services involved in the query. This approach is wasteful if the consumer is only interested in a subset of fields on the response. Mobile devices are constrained by smaller screens and network availability, so GraphQL is excellent in this scenario.

GraphQL introduces a technology layer over existing services, datastores, and APIs that provides a query language to query across multiple sources. The query language allows the client to ask for exactly the fields required, including fields that span across multiple APIs. GraphQL uses the GraphQL schema language to specify the types in individual APIs and how APIs combine. One major advantage of introducing a GraphQL schema in your system is the ability to provide a single version across all APIs, removing the need for potentially complex version management on the consumer side.

GraphQL excels when a consumer requires uniform API access over a wide range of interconnected services. The schema provides the connection and extends the domain model, allowing the customer to specify exactly what is required on the consumer side. This works extremely well for modeling a user interface and also reporting systems or data warehousing–style systems. In systems where vast amounts of data are stored across different subsystems, GraphQL can provide an ideal solution to abstracting away internal system complexity.

It is possible to place GraphQL over existing legacy systems and use this as a facade to hide away the complexity, though providing GraphQL over a layer of well-designed APIs often means the facade is simpler to implement and maintain. GraphQL can be thought of as a complementary technology and should be considered when designing and building APIs. GraphQL can also be thought of as a complete approach to building up an entire API ecosystem.

GraphQL shines in certain scenarios and we would encourage you to take a look at Learning GraphQL (O’Reilly) and GraphQL in Action (O’Reilly) for a deeper dive into this topic.

REST API Standards and Structure

REST has some very basic rules, but for the most part the implementation and design is left as an exercise for the developer. For example, what is the best way to convey errors? How should pagination be implemented? How do you avoid accidentally building an API where compatibility frequently breaks? At this point, it is useful to have a more practical definition around APIs to provide uniformity and expectations across different implementations. This is where standards or guidelines can help; however, there are a variety of sources to choose from.

For the purposes of discussing design, we will use the Microsoft REST API Guidelines, which represent a series of internal guidelines that have been open sourced. The guidelines use RFC-2119, which defines terminology for standards such as MUST, SHOULD, SHOULD NOT, MUST NOT, etc., allowing the developer to determine whether requirements are optional or mandatory.

Tip

As REST API standards are evolving, an open list of API standards is available on the book’s GitHub page. Please contribute via pull request any open standards you think would be useful for other readers to consider.

Let’s consider the design of the Attendee API using the Microsoft REST API Guidelines and introduce an endpoint to create a new attendee.

If you are familiar with REST, the thought will immediately be to use POST:

POST http://mastering-api.com/attendees

{

"displayName": "Jim",

"givenName": "James",

"surname": "Gough",

"email": "jim@mastering-api.com"

}

---

201 CREATED

Location: http://mastering-api.com/attendees/1

The Location header reveals the location of the new resource created on the server, and in this API we are modeling a unique ID for the user. It is possible to use the email field as a unique ID; however, the Microsoft REST API Guidelines recommend in section 7.9 that personally identifiable information (PII) should not be part of the URL.

Warning

The reason for removing sensitive data from the URL is that paths or query parameters might be inadvertently cached in the network, for example, in server logs or elsewhere.

Another aspect of APIs that can be difficult is naming. As we will discuss in “API Versioning”, something as simple as changing a name can break compatibility. There is a short list of standard names that should be used in the Microsoft REST API Guidelines; however, teams should expand this to have a common domain data dictionary to supplement the standards. In many organizations it is incredibly helpful to proactively investigate the requirements around data design and in some cases governance. Organizations that provide consistency across all APIs offered by a company present a uniformity that enables consumers to understand and connect responses. In some domains there may already be widely known terminology; use it!

Collections and Pagination

It seems reasonable to model the GET /attendees request as a response containing a raw array.

The following source snippet shows an example of what that might look like as a response body:

GET http://mastering-api.com/attendees

---

200 OK

[

{

"displayName": "Jim",

"givenName": "James",

"surname": "Gough",

"email": "jim@mastering-api.com",

"id": 1

},

...

]

Let’s consider an alternative model to the GET /attendees request that nests the array of attendees inside an object.

It may seem strange that an array response is returned in an object; however, this allows us to model bigger collections and pagination.

Pagination involves returning a partial result, while providing instructions for how the consumer can request the next set of results.

This is reaping the benefits of hindsight; adding pagination later and converting from an array to an object in order to add a @nextLink (as recommended by the standards) would break compatibility:

GET http://mastering-api.com/attendees

---

200 OK

{

"value": [

{

"displayName": "Jim",

"givenName": "James",

"surname": "Gough",

"email": "jim@mastering-api.com",

"id": 1

}

],

"@nextLink": "{opaqueUrl}"

}
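The object-wrapped response above can be sketched on the producer side as a small page type. This is only an illustration under stated assumptions: the class name, the use of display names as stand-in items, and the offset-based link format are all hypothetical, and the guidelines treat the next link as opaque to the consumer.

```java
import java.util.ArrayList;
import java.util.List;

// Illustrative sketch: one page of a collection, wrapped in an object so that
// a next link can travel alongside the items. All names are hypothetical.
final class AttendeePage {
    final List<String> value;  // items in this page (display names, for brevity)
    final String nextLink;     // null when there are no further pages

    private AttendeePage(List<String> value, String nextLink) {
        this.value = value;
        this.nextLink = nextLink;
    }

    // Returns the page starting at 'offset' containing at most 'pageSize' items.
    static AttendeePage of(List<String> all, int offset, int pageSize) {
        if (offset < 0 || offset > all.size() || pageSize <= 0) {
            throw new IllegalArgumentException("invalid offset or page size");
        }
        int end = Math.min(offset + pageSize, all.size());
        List<String> slice = new ArrayList<>(all.subList(offset, end));
        // Assumption: an offset query parameter; consumers should simply
        // follow the link rather than construct it themselves.
        String next = end < all.size()
                ? "http://mastering-api.com/attendees?offset=" + end
                : null;
        return new AttendeePage(slice, next);
    }
}
```

Because the items already live inside an object, the next link is an additive field, which is why starting with the wrapped form avoids a breaking change later.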

Filtering Collections

Our conference is looking a little lonely with only one attendee; however, when collections grow in size we may need to add filtering in addition to pagination.

The filtering standard provides an expression language within REST to standardize how filter queries should behave, based upon the OData Standard.

For example, we could find all attendees with the displayName Jim using:

GET http://mastering-api.com/attendees?$filter=displayName eq 'Jim'

It is not necessary to complete all filtering and searching features from the start. However, designing an API in line with the standards will allow the developer to support an evolving API architecture without breaking compatibility for consumers. Filtering and querying is a feature that GraphQL is really good at, especially if querying and filtering across many of your services becomes relevant.
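The filter expression shown earlier contains spaces and quotes, which a client would normally percent-encode before sending. The following is a minimal sketch of building that URL; the class and method names are hypothetical, and doubling an embedded single quote follows the OData convention for string literals rather than anything stated in this chapter.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

// Illustrative sketch: builds the $filter URL for an exact displayName match.
// Class and method names are hypothetical.
final class AttendeeFilter {
    static URI byDisplayName(String displayName) {
        // Assumption: OData-style string literal, with an embedded single
        // quote escaped by doubling it.
        String expression = "displayName eq '" + displayName.replace("'", "''") + "'";
        // URLEncoder produces form encoding ('+' for spaces); switch to %20
        // so the value is unambiguous in a URL query.
        String encoded = URLEncoder.encode(expression, StandardCharsets.UTF_8)
                .replace("+", "%20");
        return URI.create("http://mastering-api.com/attendees?$filter=" + encoded);
    }
}
```

Calling `AttendeeFilter.byDisplayName("Jim")` yields a URI whose decoded query is the same `$filter=displayName eq 'Jim'` expression used in the request above.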

Error Handling

An important consideration when extending APIs to consumers is defining what should happen in various error scenarios. Error standards are useful to define upfront and share with producers to provide consistency. It is important that errors describe to the consumer exactly what has gone wrong with the request, as this will avoid increasing the support required for the API.

The guidelines state “For non-success conditions, developers SHOULD be able to write one piece of code that handles errors consistently.”

An accurate status code must be provided to the consumer, because often consumers will build logic around the status code provided in the response.

We have seen many APIs that return errors in the body along with a 2xx type of response, which is used to indicate success.

3xx status codes for redirects are actively followed by some consuming library implementations, enabling providers to relocate and access external sources.

4xx usually indicates a client-side error; at this point the content of the message field is extremely useful to the developer or end user.

5xx usually indicates a failure on the server side and some client libraries will retry on these types of failures.

It is important to consider and document what happens in the service based on an unexpected failure. For example, in a payment system does a 500 mean the payment has gone through or not?
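Consumer logic built around status codes, as described above, might look something like the following sketch. The names are hypothetical, and the retry rule is an assumption made for illustration: it treats only 5xx responses as retry candidates and says nothing about whether the original operation is safe to repeat.

```java
// Illustrative sketch: maps a status code to the broad classes discussed
// above. All names are hypothetical.
final class StatusCodes {
    enum Category { SUCCESS, REDIRECT, CLIENT_ERROR, SERVER_ERROR, OTHER }

    static Category categorize(int status) {
        switch (status / 100) {
            case 2: return Category.SUCCESS;
            case 3: return Category.REDIRECT;
            case 4: return Category.CLIENT_ERROR;
            case 5: return Category.SERVER_ERROR;
            default: return Category.OTHER; // 1xx informational or out of range
        }
    }

    // Assumption for this sketch: only server-side failures are considered
    // for an automatic retry; client errors need the request to be corrected.
    static boolean isRetryCandidate(int status) {
        return categorize(status) == Category.SERVER_ERROR;
    }
}
```

Retrying blindly is risky for operations such as the payment example, which is why the behavior behind a 500 needs to be documented by the producer.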

Warning

Ensure that the error messages sent back to an external consumer do not contain stack traces and other sensitive information. This information can help a hacker aiming to compromise the system. The error structure in the Microsoft guidelines has the concept of an InnerError, which could be a useful place for more detailed stack traces/descriptions of issues. This would be incredibly helpful for debugging but must be stripped before the response reaches an external consumer.

We have just scratched the surface on building REST APIs, but clearly there are many important decisions to be made when beginning to build an API. If we combine the desire to present intuitive APIs that are consistent and allow for an evolving and compatible API, it is worth adopting an API standard early.

ADR Guideline: Choosing an API Standard

To make your decision on API standards, the guideline in Table 1-2 lists important topics to consider. There is a range of guidelines to choose from, including the Microsoft guidelines discussed in this section, and finding one that best matches the styles of APIs being produced is a key decision.

| Decision | Which API standard should we adopt? |
| --- | --- |
| Discussion Points | Does the organization already have other standards within the company? Can we extend those standards to external consumers? Are we using any third-party APIs that we will need to expose to a consumer (e.g., Identity Services) that already have a standard? What does the impact of not having a standard look like for our consumers? |
| Recommendations | Pick an API standard that best matches the culture of the organization and formats of APIs you may already have in the inventory. Be prepared to evolve and add to a standard any domain/industry-specific amendments. Start with something early to avoid having to break compatibility later for consistency. Be critical of existing APIs. Are they in a format that consumers would understand or is more effort required to offer the content? |

Specifying REST APIs Using OpenAPI

As we’re beginning to see, the design of an API is fundamental to the success of an API platform. The next consideration we’ll discuss is sharing the API with developers consuming our APIs.

API marketplaces provide a public or private listing of APIs available to a consumer. A developer can browse documentation and quickly try out an API in the browser to explore the API behavior and functionality. Public and private API marketplaces have placed REST APIs prominently into the consumer space. The success of REST APIs has been driven by both the technical landscape and the low barrier to entry for both the client and server.

As the number of APIs grew, it quickly became necessary to have a mechanism to share the shape and structure of APIs with consumers. This is why the OpenAPI Initiative was formed by API industry leaders to construct the OpenAPI Specification (OAS). Swagger was the original reference implementation of the OpenAPI Specifications, but most tooling has now converged on using OpenAPI.

The OpenAPI Specifications are JSON- or YAML-based representations of the API that describe the structure, the domain objects exchanged, and any security requirements of the API. In addition to the structure, they also convey metadata about the API, including any legal or licensing requirements, and also carry documentation and examples that are useful to developers consuming the API. OpenAPI Specifications are an important concept surrounding modern REST APIs, and many tools and products have been built around its usage.

Practical Application of OpenAPI Specifications

Once an OAS is shared, the power of the specification starts to become apparent. OpenAPI.Tools documents a full range of available open and closed source tools. In this section we will explore some of the practical applications of tools based on their interaction with the OpenAPI Specification.

In the situation where the CFP team is the consumer, sharing the OAS enables the team to understand the structure of the API. Using some of the following practical applications can help both improve the developer experience and ensure the health of the exchange.

Code Generation

Perhaps one of the most useful features of an OAS is allowing the generation of client-side code to consume the API. As discussed earlier, we can include the full details of the server, security, and of course the API structure itself. With all this information we can generate a series of model and service objects that represent and invoke the API. The OpenAPI Generator project supports a wide range of languages and toolchains. For example, in Java you can choose to use Spring or JAX-RS and in TypeScript you can choose a combination of TypeScript with your favorite framework. It is also possible to generate the API implementation stubs from the OAS.

This raises an important question about what should come first: the specification or the server-side code? In Chapter 2, we discuss “contract testing,” which presents a behavior-driven approach to testing and building APIs. The challenge with OpenAPI Specifications is that alone they only convey the shape of the API. OpenAPI Specifications do not fully model the semantics (or expected behavior) of the API under different conditions. If you are going to present an API to external users, it is important that the range of behaviors is modeled and tested to help avoid having to drastically change the API later.

APIs should be designed from the perspective of the consumer and consider the requirement to abstract the underlying representation to reduce coupling. It is important to be able to freely refactor components behind the scenes without breaking API compatibility, otherwise the API abstraction loses value.

OpenAPI Validation

OpenAPI Specifications are useful for validating the content of an exchange to ensure the request and response match the expectations of the specification. At first it might not seem apparent where this would be useful. If code is generated, surely the exchange will always be right? One practical application of OpenAPI validation is in securing APIs and API infrastructure. In many organizations a zonal architecture is common, with a notion of a demilitarized zone (DMZ) used to shield a network from inbound traffic. A useful feature is to interrogate messages in the DMZ and terminate the traffic if the specification does not match. We will cover security in more detail in Chapter 6.

Atlassian, for example, open sourced a tool called the swagger-request-validator, which is capable of validating JSON REST content.

The project also has adapters that integrate with various mocking and testing frameworks to help ensure that API Specifications are conformed to as part of testing.

The tool has an OpenApiInteractionValidator, which is used to create a ValidationReport on an exchange.

The following code demonstrates building a validator from the specification, including any basePathOverrides, which may be necessary if deploying an API behind infrastructure that alters the path.

The validation report is generated from analyzing the request and response at the point where validation is executed:

// Using the location of the specification, create an interaction validator.
// The base path override is useful if the validator will be used
// behind a gateway/proxy.
final OpenApiInteractionValidator validator = OpenApiInteractionValidator
    .createForSpecificationUrl(specUrl)
    .withBasePathOverride(basePathOverride)
    .build();

// Request and Response objects can be converted or created using a builder
final ValidationReport report = validator.validate(request, response);

if (report.hasErrors()) {
    // Capture or process error information
}

Examples and Mocking

The OAS can provide example responses for the paths in the specification. Examples, as we’ve discussed, are useful for documentation to help developers understand the expected API behavior. Some products have started to use examples to allow the user to query the API and return example responses from a mock service. This can be really useful in features such as a developer portal, which allows developers to explore documentation and invoke APIs. Another useful feature of mocks and examples is the ability to share ideas between the producer and consumer ahead of committing to build the service. Being able to “try out” the API is often more valuable than trying to review if a specification would meet your requirements.

Examples can potentially introduce an interesting problem, which is that this part of the specification is essentially a string (in order to model XML/JSON, etc.).

openapi-examples-validator validates that an example matches the OAS for the corresponding request/response component of the API.

API Versioning

We have explored the advantages of sharing an OAS with a consumer, including the speed of integration. Consider the case where multiple consumers start to operate against the API. What happens when there is a change to the API or one of the consumers requests the addition of new features to the API?

Let’s take a step back and think about if this was a code library built into our application at compile time. Any changes to the library would be packaged as a new version and until the code is recompiled and tested against the new version, there would be no impact to production applications. As APIs are running services, we have a few upgrade options that are immediately available to us when changes are requested:

- Release a new version and deploy in a new location.

  Older applications continue to operate against the older version of the APIs. This is fine from a consumer perspective, as the consumer only upgrades to the new location and API if they need the new features. However, the owner of the API needs to maintain and manage multiple versions of the API, including any patching and bug fixing that might be necessary.

- Release a new version of the API that is backward compatible with the previous version of the API.

  This allows additive changes without impacting existing users of the API. There are no changes required by the consumer, but we may need to consider downtime or availability of both old and new versions during the upgrade. If there is a small bug fix that changes something as small as an incorrect field name, this would break compatibility.

- Break compatibility with the previous API and all consumers must upgrade code to use the new API.

  This seems like an awful idea at first, as that would result in things breaking unexpectedly in production. However, a situation may present itself where we cannot avoid breaking compatibility with older versions. This type of change can trigger a whole-system lockstep change that requires coordination of downtime.

The challenge is that all of these different upgrade options offer advantages but also drawbacks either to the consumer or the producer. The reality is that we want to be able to support a combination of all three options. In order to do this we need to introduce rules around versioning and how versions are exposed to the consumer.

Semantic Versioning

Semantic versioning offers an approach that we can apply to REST APIs to give us a combination of the preceding upgrade options. Semantic versioning defines a numerical representation attributed to an API release. That number is based on the change in behavior in comparison to the previous version, using the following rules:

- A major version introduces noncompatible changes with previous versions of the API. In an API platform, upgrading to a new major version is an active decision by the consumer. There is likely going to be a migration guide and tracking as consumers upgrade to the new API.

- A minor version introduces a backward compatible change with the previous version of the API. In an API service platform, it is acceptable for consumers to receive minor versions without making an active change on the client side.

- A patch version does not change or introduce new functionality but is used for bug fixes on an existing Major.Minor version of functionality.

Formatting for semantic versioning can be represented as Major.Minor.Patch.

For example, 1.5.1 would represent major version 1, minor version 5, with patch upgrade of 1.

In Chapter 5 you will explore how semantic versioning connects with the concept of API lifecycle and releases.
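The versioning rules above can be sketched as a small value type. This is a simplified illustration, not a full implementation of semantic versioning: it handles only plain Major.Minor.Patch strings, and the class and method names are hypothetical.

```java
// Illustrative sketch: parses Major.Minor.Patch and applies the rules above.
// Pre-release and build metadata are deliberately not handled.
final class SemanticVersion {
    final int major;
    final int minor;
    final int patch;

    private SemanticVersion(int major, int minor, int patch) {
        this.major = major;
        this.minor = minor;
        this.patch = patch;
    }

    static SemanticVersion parse(String text) {
        String[] parts = text.split("\\.");
        if (parts.length != 3) {
            throw new IllegalArgumentException("expected Major.Minor.Patch: " + text);
        }
        return new SemanticVersion(
                Integer.parseInt(parts[0]),
                Integer.parseInt(parts[1]),
                Integer.parseInt(parts[2]));
    }

    // True when a consumer built against this version could receive
    // 'candidate' without changing client code: same major version, and the
    // candidate is not older than the current version.
    boolean canUpgradeTransparentlyTo(SemanticVersion candidate) {
        if (candidate.major != major) {
            return false; // a major change is an active decision by the consumer
        }
        if (candidate.minor != minor) {
            return candidate.minor > minor;
        }
        return candidate.patch >= patch;
    }
}
```

For example, a consumer on 1.5.1 could receive 1.6.0 without changes, whereas moving to 2.0.0 would be a deliberate migration.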

OpenAPI Specification and Versioning

Now that we have explored versioning we can look at examples of breaking changes and nonbreaking changes using the Attendee API specification. There are several tools to choose from to compare specifications, and in this example we will use openapi-diff from OpenAPITools.

We will start with a breaking change: we will change the name of the givenName field to firstName. This is a breaking change because consumers will be expecting to parse givenName, not firstName. We can run the diff tool from a docker container using the following command:

$ docker run --rm -t \

-v $(pwd):/specs:ro \

openapitools/openapi-diff:latest /specs/original.json /specs/first-name.json

==========================================================================

...

- GET /attendees

Return Type:

- Changed 200 OK

Media types:

- Changed */*

Schema: Broken compatibility

Missing property: [n].givenName (string)

--------------------------------------------------------------------------

-- Result --

--------------------------------------------------------------------------

API changes broke backward compatibility

--------------------------------------------------------------------------

We can try to add a new attribute to the /attendees return type to add an additional field called age.

Adding new fields does not break existing behavior and therefore does not break compatibility:

$ docker run --rm -t \
    -v $(pwd):/specs:ro \
    openapitools/openapi-diff:latest --info /specs/original.json /specs/age.json

==========================================================================

...

- GET /attendees

Return Type:

- Changed 200 OK

Media types:

- Changed */*

Schema: Backward compatible

--------------------------------------------------------------------------

-- Result --

--------------------------------------------------------------------------

API changes are backward compatible

--------------------------------------------------------------------------

It is worth trying this out to see which changes would be compatible and which would not. Introducing this type of tooling as part of the API pipeline is going to help avoid unexpected noncompatible changes for consumers. OpenAPI Specifications are an important part of an API program, and when combined with tooling, versioning, and lifecycle, they are invaluable.
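The two results reported by the diff tool follow from how a typical consumer reads the payload. The sketch below assumes a hypothetical consumer that needs id, givenName, surname, and email and ignores any field it does not recognize. The class name, the map-based payloads, and the age value are illustrative assumptions and are not part of the Attendee service.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Sketch of a consumer-side check; payloads are plain maps standing in for parsed JSON. */
class ConsumerCompatibilitySketch {
    /** Fields this hypothetical consumer reads from each attendee. */
    static final List<String> REQUIRED_FIELDS = List.of("id", "givenName", "surname", "email");

    /** Returns the required fields that are absent; extra fields are simply ignored. */
    static List<String> missingFields(Map<String, Object> attendee) {
        List<String> missing = new ArrayList<>();
        for (String field : REQUIRED_FIELDS) {
            if (!attendee.containsKey(field)) {
                missing.add(field);
            }
        }
        return missing;
    }

    /** The attendee as described by the original specification. */
    static Map<String, Object> original() {
        Map<String, Object> attendee = new LinkedHashMap<>();
        attendee.put("id", 1);
        attendee.put("givenName", "Jim");
        attendee.put("surname", "Gough");
        attendee.put("email", "gough@mail.com");
        return attendee;
    }

    /** Nonbreaking change: an additional field (the value 42 is made up). */
    static Map<String, Object> withAge() {
        Map<String, Object> attendee = original();
        attendee.put("age", 42);
        return attendee;
    }

    /** Breaking change: givenName renamed to firstName. */
    static Map<String, Object> renamed() {
        Map<String, Object> attendee = original();
        attendee.put("firstName", attendee.remove("givenName"));
        return attendee;
    }

    public static void main(String[] args) {
        System.out.println("original missing: " + missingFields(original()));
        System.out.println("with age missing: " + missingFields(withAge()));
        System.out.println("renamed missing:  " + missingFields(renamed()));
    }
}
```

The added field leaves the list of missing fields empty, while the rename reports givenName as missing, which mirrors the "Missing property" line in the diff output.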

Implementing RPC with gRPC

East–west services such as Attendee tend to be higher traffic and can be implemented as microservices used across the architecture. gRPC may be a more suitable tool than REST for east–west services, owing to the smaller data transmission and speed within the ecosystem. Any performance decision should be informed by measurement.

Let’s explore using a Spring Boot Starter to rapidly create a gRPC server.

The following .proto file models the same attendee object that we explored in our OpenAPI Specification example.

As with OpenAPI Specifications, generating code from a schema is quick and supported in multiple languages.

The attendees .proto file defines an empty request and returns a repeated Attendee response.

In protocols used for binary representations, it is important to note that the position and order of fields is critical, as they govern the layout of the message.

Adding a new service or new method is backward compatible as is adding a field to a message, but care is required.

Any new fields that are added must not be mandatory fields, otherwise backward compatibility would break.

Removing a field or renaming a field will break compatibility, as will changing the data type of a field. Changing the field number is also an issue as field numbers are used to identify fields on the wire. The restrictions of encoding with gRPC mean the definition must be very specific. REST and OpenAPI are quite forgiving as the specification is only a guide. Extra fields and ordering do not matter in OpenAPI, and therefore versioning and compatibility are even more important when it comes to gRPC:

syntax = "proto3";
option java_multiple_files = true;
package com.masteringapi.attendees.grpc.server;

message AttendeesRequest {
}

message Attendee {
  int32 id = 1;
  string givenName = 2;
  string surname = 3;
  string email = 4;
}

message AttendeeResponse {
  repeated Attendee attendees = 1;
}

service AttendeesService {
  rpc getAttendees(AttendeesRequest) returns (AttendeeResponse);
}
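To make the role of field numbers concrete, the following hand-rolled sketch encodes a single Attendee using the protobuf wire rules, where each field is prefixed with a tag computed as (field number << 3) | wire type. This is not the generated gRPC code: the class and method names are illustrative, and the sketch assumes non-negative ids and skips details such as proto3 omitting default values.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;

/** Hand-written sketch of the wire encoding for the Attendee message. */
class AttendeeWireSketch {
    static final int VARINT = 0;            // wire type used for int32
    static final int LENGTH_DELIMITED = 2;  // wire type used for string

    /** Writes a non-negative value as a base-128 varint, low-order group first. */
    static void writeVarint(ByteArrayOutputStream out, long value) {
        while ((value & ~0x7FL) != 0) {
            out.write((int) ((value & 0x7F) | 0x80));
            value >>>= 7;
        }
        out.write((int) value);
    }

    /** The tag combines the field number from the .proto file with the wire type. */
    static void writeTag(ByteArrayOutputStream out, int fieldNumber, int wireType) {
        writeVarint(out, ((long) fieldNumber << 3) | wireType);
    }

    static void writeInt32(ByteArrayOutputStream out, int fieldNumber, int value) {
        writeTag(out, fieldNumber, VARINT);
        writeVarint(out, value);
    }

    static void writeString(ByteArrayOutputStream out, int fieldNumber, String value) {
        byte[] utf8 = value.getBytes(StandardCharsets.UTF_8);
        writeTag(out, fieldNumber, LENGTH_DELIMITED);
        writeVarint(out, utf8.length);
        out.write(utf8, 0, utf8.length);
    }

    /** Field numbers 1 to 4 match the Attendee message in the .proto file. */
    static byte[] encodeAttendee(int id, String givenName, String surname, String email) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        writeInt32(out, 1, id);
        writeString(out, 2, givenName);
        writeString(out, 3, surname);
        writeString(out, 4, email);
        return out.toByteArray();
    }

    static String hex(byte[] bytes) {
        StringBuilder sb = new StringBuilder();
        for (byte b : bytes) {
            sb.append(String.format("%02x ", b));
        }
        return sb.toString().trim();
    }

    public static void main(String[] args) {
        byte[] wire = encodeAttendee(1, "Jim", "Gough", "gough@mail.com");
        System.out.println(wire.length + " bytes: " + hex(wire));
    }
}
```

Field names never appear in the output; only the numbers do. This is why renumbering a field changes the bytes on the wire even when the name and type stay the same.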

The following Java code demonstrates a simple structure for implementing the behavior on the generated gRPC server classes:

@GrpcService
public class AttendeesServiceImpl extends AttendeesServiceGrpc.AttendeesServiceImplBase {

    @Override
    public void getAttendees(AttendeesRequest request,
                             StreamObserver<AttendeeResponse> responseObserver) {
        AttendeeResponse.Builder responseBuilder = AttendeeResponse.newBuilder();

        // populate response

        responseObserver.onNext(responseBuilder.build());
        responseObserver.onCompleted();
    }
}

You can find the Java service modeling this example on this book’s GitHub page.

gRPC cannot be queried directly from a browser without additional libraries; however, you can install gRPC UI to use the browser for testing.

grpcurl provides a command-line alternative:

$ grpcurl -plaintext localhost:9090 \

com.masteringapi.attendees.grpc.server.AttendeesService/getAttendees

{

"attendees": [

{

"id": 1,

"givenName": "Jim",

"surname": "Gough",

"email": "gough@mail.com"

}

]

}

gRPC gives us another option for querying our service and defines a specification for the consumer to generate code. gRPC has a stricter specification than OpenAPI and requires methods/internals to be understood by the consumer.

Modeling Exchanges and Choosing an API Format

In the Introduction we discussed the concept of traffic patterns and the difference between requests originating from outside the ecosystem and requests within the ecosystem. Traffic patterns are an important factor in determining the appropriate format of API for the problem at hand. When we have full control over the services and exchanges within our microservices-based architecture, we can start to make compromises that we would not be able to make with external consumers.

It is important to recognize that performance characteristics are likely to matter more for an east–west service than for a north–south service. In a north–south exchange, traffic originating from outside the producer’s environment will generally travel over the internet. The internet introduces a high degree of latency, and an API architecture should always consider the compounding effects of each service. In a microservices-based architecture it is likely that one north–south request will involve multiple east–west exchanges. High-volume east–west traffic needs to be efficient to avoid cascading slowdowns propagating back to the consumer.

High-Traffic Services

In our example, Attendees is a central service.

In a microservices-based architecture, components will keep track of an attendeeId.

APIs offered to consumers will potentially retrieve data stored in the Attendee service, and at scale it will be a high-traffic component.

If the exchange frequency is high between services, the cost of network transfer due to payload size and limitations of one protocol versus another will be more profound as usage increases.

The cost can present itself in either monetary costs of each transfer or the total time taken for the message to reach the destination.

Large Exchange Payloads

Large payload sizes may also become a challenge in API exchanges and are susceptible to decreasing transfer performance across the wire. JSON over REST is human readable and will often be more verbose than a fixed or binary representation, fuelling an increase in payload sizes.
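A rough size comparison illustrates the point. The sketch below measures a compact JSON rendering of one attendee against an estimate of its protobuf-style binary size. The class name and the 5,000 requests-per-second figure are hypothetical, and the binary estimate assumes one tag byte per field, an id below 128, and strings shorter than 128 bytes.

```java
import java.nio.charset.StandardCharsets;

/** Back-of-the-envelope payload comparison; figures are illustrative, not measurements. */
class PayloadSizeSketch {
    static int utf8Length(String text) {
        return text.getBytes(StandardCharsets.UTF_8).length;
    }

    /** Size of a compact JSON object with no whitespace; assumes values need no escaping. */
    static int jsonSize(int id, String givenName, String surname, String email) {
        String json = "{\"id\":" + id
                + ",\"givenName\":\"" + givenName + "\""
                + ",\"surname\":\"" + surname + "\""
                + ",\"email\":\"" + email + "\"}";
        return utf8Length(json);
    }

    /** Estimated binary size: tag byte plus value byte for id, tag plus length byte per string. */
    static int binarySize(int id, String givenName, String surname, String email) {
        if (id < 0 || id > 127) {
            throw new IllegalArgumentException("sketch only handles ids from 0 to 127");
        }
        int size = 2; // id: one tag byte and a single-byte varint
        for (String value : new String[] {givenName, surname, email}) {
            size += 2 + utf8Length(value); // tag byte + length byte + string bytes
        }
        return size;
    }

    public static void main(String[] args) {
        int json = jsonSize(1, "Jim", "Gough", "gough@mail.com");
        int binary = binarySize(1, "Jim", "Gough", "gough@mail.com");
        long requestsPerSecond = 5_000; // hypothetical traffic level
        System.out.println("JSON bytes per attendee:   " + json);
        System.out.println("Binary bytes per attendee: " + binary);
        System.out.println("Bytes saved per second:    " + (json - binary) * requestsPerSecond);
    }
}
```

For a single small record the difference is modest, but it is paid on every exchange, so it is worth measuring real payloads before drawing conclusions.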

Tip

A common misconception is that “human readability” is a primary reason to use JSON in data transfers. With modern tracing tools, the number of times a developer will need to read a message does not make a strong case when weighed against the performance consideration. It is also rare that large JSON files will be read from beginning to end. Better logging and error handling can mitigate the human-readable argument.

Another factor in large payload exchanges is the time it takes components to parse the message content into language-level domain objects. The time taken to parse data formats varies greatly depending on the language a service is implemented in. Many traditional server-side languages can struggle with JSON compared to a binary representation, for example. It is worth exploring the impact of parsing and including that consideration when choosing an exchange format.

HTTP/2 Performance Benefits

Using HTTP/2-based services can help to improve the performance of exchanges through binary framing and header compression. The binary framing layer is transparent to the developer, but behind the scenes it splits each message into smaller frames. The advantage of binary framing is that it allows full request and response multiplexing over a single connection. Consider a service that processes a list and needs to retrieve 20 different attendees; retrieving these as individual HTTP/1 requests could incur the overhead of creating up to 20 new TCP connections. Multiplexing allows us to perform 20 individual requests over a single HTTP/2 connection.
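As a sketch of the 20-attendee scenario, the JDK's built-in java.net.http client (Java 11 and later) can be asked to prefer HTTP/2 and send requests asynchronously, so that requests to the same origin can share a connection when the server negotiates HTTP/2. The host name and the /attendees/{id} path below are hypothetical, and the class name is illustrative.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;

/** Sketch of issuing many requests through one HTTP/2-preferring client. */
class MultiplexedFetchSketch {
    /** Hypothetical endpoint; replace with a real HTTP/2-capable service. */
    static final String BASE_URL = "https://attendees.example.com/attendees/";

    static HttpClient http2Client() {
        return HttpClient.newBuilder()
                .version(HttpClient.Version.HTTP_2) // falls back to HTTP/1.1 if not supported
                .build();
    }

    /** Builds one GET request per attendee id, from 1 to count. */
    static List<HttpRequest> requestsFor(int count) {
        List<HttpRequest> requests = new ArrayList<>();
        for (int id = 1; id <= count; id++) {
            requests.add(HttpRequest.newBuilder(URI.create(BASE_URL + id)).GET().build());
        }
        return requests;
    }

    /** Starts all requests before waiting on any of them, then collects the bodies. */
    static List<String> fetchAll(HttpClient client, List<HttpRequest> requests) {
        List<CompletableFuture<HttpResponse<String>>> inFlight = new ArrayList<>();
        for (HttpRequest request : requests) {
            inFlight.add(client.sendAsync(request, HttpResponse.BodyHandlers.ofString()));
        }
        List<String> bodies = new ArrayList<>();
        for (CompletableFuture<HttpResponse<String>> future : inFlight) {
            bodies.add(future.join().body());
        }
        return bodies;
    }

    public static void main(String[] args) {
        List<HttpRequest> requests = requestsFor(20);
        System.out.println("Prepared " + requests.size() + " requests, first: " + requests.get(0).uri());
        // Calling fetchAll(http2Client(), requests) needs a reachable server, so it is not run here.
    }
}
```

Whether the requests actually share one connection depends on the server and on protocol negotiation, so this is worth confirming with tracing rather than assuming.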

gRPC uses HTTP/2 by default and reduces the size of exchange by using a binary protocol. If bandwidth or cost is a concern, then gRPC will provide an advantage, in particular as content payloads increase significantly in size. gRPC may be beneficial compared to REST if payload bandwidth is a cumulative concern or the service exchanges large volumes of data. If large volumes of data exchanges are frequent, it is also worth considering some of the asynchronous capabilities of gRPC.

Tip

HTTP/3 is on the way and it will change everything. HTTP/3 uses QUIC, a transport protocol built on UDP. You can find out more in HTTP/3 explained.

Vintage Formats

Not all services in an architecture will be based on a modern design. In Chapter 8 we will look at how to isolate and evolve vintage components, as older components will be an active consideration for evolving architectures. It is important that those involved with an API architecture understand the overall performance impact of introducing vintage components.

Guideline: Modeling Exchanges

When the consumer is the legacy conference system team, the exchange is typically an east–west relationship. When the consumer is the CFP team, the exchange is typically a north–south relationship. The difference in coupling and performance requirements will require the teams to consider how the exchange is modeled. You will see some aspects for consideration in the guideline shown in Table 1-3.

Decision | What format should we use to model the API for our service? |

Discussion Points | Is the exchange a north–south or east–west exchange? Are we in control of the consumer code? Is there a strong business domain across multiple services or do we want to allow consumers to construct their own queries? What versioning considerations do we need to have? What is the deployment/change frequency of the underlying data model? Is this a high-traffic service where bandwidth or performance concerns have been raised? |

Recommendations | If the API is consumed by external users, REST is a low barrier to entry and provides a strong domain model. External users also usually means that a service with loose coupling and low dependency is desirable. If the API is interacting between two services under close control of the producer or the service is proven to be high traffic, consider gRPC. |

Multiple Specifications

In this chapter we have explored a variety of API formats to consider in an API architecture, and perhaps the final question is “Can we provide all formats?” The answer is yes, we can support an API that has a RESTful presentation, a gRPC service, and connections into a GraphQL schema. However, it is not going to be easy and may not be the right thing to do. In this final section, we will explore some of the options available for a multiformat API and the challenges it can present.

Does the Golden Specification Exist?

The .proto file for attendees and the OpenAPI Specification do not look too dissimilar; they contain the same fields and both have data types.

Is it possible to generate a .proto file from an OAS using the openapi2proto tool?

Running openapi2proto --spec spec-v2.json will output the .proto file with fields ordered alphabetically by default.

This is fine until we add a new, backward compatible field to the OAS and suddenly the field numbers of the existing fields change, breaking backward compatibility.

The following sample .proto file shows that adding a_new_field would be alphabetically added to the beginning, changing the binary format and breaking existing services:

message Attendee {
  string a_new_field = 1;
  string email = 2;
  string givenName = 3;
  int32 id = 4;
  string surname = 5;
}
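The effect of this renumbering can be sketched by taking bytes written with the original field numbers and labeling them with the regenerated ones. The decoder below is deliberately simplified and hypothetical: it assumes single-byte tags, single-byte varints, and strings shorter than 128 bytes, and it only attaches names to values instead of enforcing types as a real protobuf parser would.

```java
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;

/** Sketch: the same bytes read against the original and the regenerated field numbering. */
class RenumberedSchemaSketch {
    /** One Attendee encoded with the original numbering: id=1, givenName=2, surname=3, email=4. */
    static final byte[] WIRE = {
        0x08, 0x01,                                   // field 1, varint: 1
        0x12, 0x03, 'J', 'i', 'm',                    // field 2, string: "Jim"
        0x1a, 0x05, 'G', 'o', 'u', 'g', 'h',          // field 3, string: "Gough"
        0x22, 0x0e, 'g', 'o', 'u', 'g', 'h', '@', 'm', 'a', 'i', 'l', '.', 'c', 'o', 'm'
    };

    static final Map<Integer, String> ORIGINAL =
            Map.of(1, "id", 2, "givenName", 3, "surname", 4, "email");

    /** Numbering after a_new_field is inserted alphabetically. */
    static final Map<Integer, String> REGENERATED =
            Map.of(1, "a_new_field", 2, "email", 3, "givenName", 4, "id", 5, "surname");

    /** Reads field number to value pairs; values are kept as strings for display. */
    static Map<Integer, String> decode(byte[] wire) {
        Map<Integer, String> fields = new LinkedHashMap<>();
        int pos = 0;
        while (pos < wire.length) {
            int tag = wire[pos++];
            int fieldNumber = tag >>> 3;
            int wireType = tag & 0x07;
            if (wireType == 0) {            // varint (single byte only in this sketch)
                fields.put(fieldNumber, Integer.toString(wire[pos++]));
            } else if (wireType == 2) {     // length-delimited
                int length = wire[pos++];
                fields.put(fieldNumber, new String(wire, pos, length, StandardCharsets.UTF_8));
                pos += length;
            } else {
                throw new IllegalArgumentException("Unsupported wire type: " + wireType);
            }
        }
        return fields;
    }

    /** Attaches the schema's field names to the decoded values. */
    static Map<String, String> label(Map<Integer, String> decoded, Map<Integer, String> schema) {
        Map<String, String> named = new LinkedHashMap<>();
        for (Map.Entry<Integer, String> entry : decoded.entrySet()) {
            named.put(schema.getOrDefault(entry.getKey(), "unknown#" + entry.getKey()), entry.getValue());
        }
        return named;
    }

    public static void main(String[] args) {
        Map<Integer, String> decoded = decode(WIRE);
        System.out.println("original schema:    " + label(decoded, ORIGINAL));
        System.out.println("regenerated schema: " + label(decoded, REGENERATED));
    }
}
```

Under the regenerated numbering the value "Jim" is attached to email and the email address to id, which shows how existing producers and consumers would silently disagree about the message.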

Note

Other tools are available to solve the specification conversion problem; however, it is worth noting that some tools only support OpenAPI Specification version 2. The time taken to move between versions 2 and 3 in some of the tools built around OpenAPI has led to many products needing to support both versions of the OAS.

An alternative option is grpc-gateway, which generates a reverse proxy providing a REST facade in front of the gRPC service.

The reverse proxy is generated at build time against the .proto file and will produce a best-effort mapping to REST, similar to openapi2proto.

You can also supply extensions within the .proto file to map the RPC methods to a nice representation in the OAS:

import "google/api/annotations.proto";

//...

service AttendeesService {
  rpc getAttendees(AttendeesRequest) returns (AttendeeResponse) {
    option (google.api.http) = {
      get: "/attendees"
    };
  }
}

Using grpc-gateway gives us another option for presenting both a REST and gRPC service. However, grpc-gateway involves several commands and a setup that would only be familiar to developers who work with the Go language or build environment.

Challenges of Combined Specifications

It’s important to take a step back here and consider what we are trying to do. When converting from OpenAPI we are effectively trying to convert our RESTful representation into a gRPC series of calls. We are trying to convert an extended hypermedia domain model into a lower-level function-to-function call. This is a potential conflation of the difference between RPC and APIs and is likely going to result in wrestling with compatibility.

With converting gRPC to OpenAPI we have a similar issue; the objective is trying to take gRPC and make it look like a REST API. This is likely going to create a difficult series of issues when evolving the service.

Once specifications are combined or generated from one another, versioning becomes a challenge. It is important to be mindful of how both the gRPC and OpenAPI Specifications maintain their individual compatibility requirements. An active decision should be made as to whether coupling the REST domain to an RPC domain makes sense and adds overall value.

Rather than generate RPC for east–west from north–south, what makes more sense is to carefully design the microservices-based architecture (RPC) communication independently from the REST representation, allowing both APIs to evolve freely. This is the choice we have made for the conference case study and would be recorded as an ADR in the project.

Summary

In this chapter we have covered how to design, build, and specify APIs and the different circumstances under which you may choose REST or gRPC. It is important to remember that it is not REST versus gRPC, but rather which is the most appropriate choice for modeling the exchange in a given situation. The key takeaways are:

-

The barrier to building REST- and RPC-based APIs is low in most technologies. Carefully considering the design and structure is an important architectural decision.

-

When choosing between REST and RPC models, consider the Richardson Maturity Model and the degree of coupling between the producer and consumer.

-

REST is a fairly loose standard. When building APIs, conforming to an agreed API standard ensures your APIs are consistent and have the expected behavior for your consumers. API standards can also help to short-circuit potential design decisions that could lead to an incompatible API.

-

OpenAPI Specifications are a useful way of sharing API structure and automating many coding-related activities. You should actively select OpenAPI features and choose what tooling or generation features will be applied to projects.

-

Versioning is an important topic that adds complexity for the producer but is necessary to ease API usage for the consumer. Not planning for versioning in APIs exposed to consumers is dangerous. Versioning should be an active decision in the product feature set and a mechanism to convey versioning to consumers should be part of the discussion.

-

gRPC performs incredibly well in high-bandwidth exchanges and is an ideal option for east–west exchanges. Tooling for gRPC is powerful and provides another option when modeling exchanges.

-

Modeling multiple specifications starts to become quite tricky, especially when generating from one type of specification to another. Versioning complicates matters further but is an important factor to avoid breaking changes. Teams should think carefully before combining RPC representations with RESTful API representations, as there are fundamental differences in terms of usage and control over the consumer code.

The challenge for an API architecture is to meet the requirements from a consumer business perspective, to create a great developer experience around APIs, and to avoid unexpected compatibility issues. In Chapter 2 you will explore testing, which is essential in ensuring services meet these objectives.
