Chapter 4. Rightsizing Your Microservices: Finding Service Boundaries

One of the most challenging aspects of building a successful microservices system is the identification of proper microservice boundaries. It makes intuitive sense that breaking up a large codebase into smaller, simpler, more loosely coupled parts improves maintainability, but how do we decide where and how to split the code into parts to achieve those desired properties? What rules do we use to know where one service ends and another one starts? Answering these fundamental questions is challenging. A lot of teams new to microservices stumble at them. Drawing the microservice boundaries incorrectly can significantly diminish the benefits of using microservices, or in some cases even derail the entire effort. It is then not surprising that the most frequent, most pressing question microservices practitioners ask is: how can a bigger application be properly sliced into a collection of microservices?

In this chapter, we look deep into the leading methodology for the effective analysis, modeling, and decomposition of large domains (Domain-Driven Design), explain the efficiency benefits of using Event Storming for domain analysis, and close by introducing the Universal Sizing Formula, which offers unique guidance for the effective sizing of microservices.

Why Boundaries Matter, When They Matter, and How to Find Them

Right in the title of the architectural pattern, we have the word micro—the architecture we are designing is that of “micro” services! But how “micro” should our services be? We are obviously not measuring the physical length of something and assuming that micro means one-millionth of a meter (i.e., of the base unit of length in the International System of Units). So what does micro mean for our purposes? How are we supposed to slice up our larger problem into smaller services to achieve the promised benefits of “micro” services? Maybe we could print our source code on paper, glue everything together, and measure the literal length of that? Or jokes aside, should we go by the number of lines in our source code—keeping that number small to ensure each of our microservices is also small enough? What is “enough,” however? Maybe we just arbitrarily declare that each microservice must have no greater than 500 lines of code? We could also draw boundaries at the familiar, functional edges of our source code and say that each granular capability represented by a function in the source code of our system is a microservice. This way we could build our entire application with, say, serverless functions, declaring each such function to be a microservice. Clean and easy! Right? Maybe not.

In practice, each of these simplistic approaches has indeed been tried and they all have significant drawbacks. While source lines of code (SLOC) has historically enjoyed some usage as a measure of effort/complexity, it has since been widely acknowledged to be a poor measurement for determining the complexity or the true size of any code and one that can be easily manipulated. Therefore, even if our goal were to create “small” services with the hope of keeping them simple, lines of code would be a poor measurement.

Drawing boundaries at functional edges is even more tempting. And it has become even more tempting with the increase in popularity of serverless functions such as Amazon Web Services’ Lambda functions. Building on top of the productivity and wide adoption of AWS Lambdas, many teams have rushed into declaring those functions “microservices.” There are a number of significant problems if you go down this road, the most important of which are:

- Drawing boundaries based on technical needs is an anti-pattern

Per Lewis and Fowler, microservices should be “organized around business capabilities,” not technical needs. Similarly, Parnas, in an article from 1972, recommends decomposing systems based on modular encapsulation of design changes over time. Neither approach necessarily aligns strongly with the boundaries of serverless functions.

- Too much granularity, too soon

An explosive level of granularity early in the microservices project life cycle can introduce crushing levels of complexity that will stop the microservices effort in its tracks, even before it has a chance to take off and succeed.

In Chapter 1 we stated the primary goal of a microservices architecture: it is primarily about minimization of coordination costs, in a complex, multiteam environment, to achieve harmony between speed and safety, at scale. Therefore, services should be designed in a way that minimizes coordination needs between the teams working on different microservices. However, if we break code up into functions in a way that does not necessarily lead to minimized coordination, we will end up with incorrectly sized microservices. Just assuming that any way of organizing code into serverless functions will reduce coordination is misguided.

Earlier we stated that an important reason for avoiding a size-based or functions-aligned approach when splitting an application into microservices is the danger of premature optimization—having too many services that are too small too early in your microservices journey. Early adopters of microservices, such as Netflix, SoundCloud, Amazon, and others, eventually found themselves having a lot of microservices! That, however, does not mean that these companies started with hundreds of very granular microservices on day one. Rather, a large number of microservices is what they optimized for after years of development, after having achieved the operational maturity capable of handling the level of complexity associated with the high granularity of microservices.

Avoid Creating Too Many Microservices Too Early

The sizing of services in a microservices architecture is most certainly a journey that should unfold in time. A sure way to sabotage the entire effort is to attempt designing an overly granular system early in that journey.

Whether you are working on a greenfield project or decomposing an existing monolith, the approach should absolutely be to start with only a handful of services and slowly increase the number of microservices over time. If this leads to some of your microservices initially being larger than in their target state, it is totally OK. You can split them up later.

Even if we are starting with just a few microservices, taking it slow, we need some reliable methodology to determine how to size microservices. Next, we will explore best practices successfully used in the industry.

Domain-Driven Design and Microservice Boundaries

At the onset of figuring out microservices design best practices, Sam Newman introduced some foundational ground rules in his book Building Microservices (O’Reilly). He suggested that when drawing service boundaries, we should strive for such a design that the resulting services are:

- Loosely coupled

Services should be fairly unaware and independent of each other, so that a code modification in one of them doesn’t result in ripple effects in others. We’ll also probably want to limit the number of different types of runtime calls from one service to another since, beyond the potential performance problem, chatty communications can also lead to a tight coupling of components. Taking our “coordination minimization” approach, the benefit of the loose coupling of the services is quite obvious.

- Highly cohesive

Features present in a service should be highly related, while unrelated features should be encapsulated elsewhere. This way, if you need to change a logical unit of functionality, you should be able to change it in one place, minimizing time to releasing that change (an important metric). In contrast, if we had to change the code in a number of services, we would have to release lots of different services at the same time to deliver that change. That would require significant levels of coordination, especially if those services are “owned” by multiple teams, and it would directly compromise our goal of minimizing coordination costs.

- Aligned with business capabilities

Since most requests for the modification or extension of functionality are driven by business needs, if our boundaries are closely aligned with the boundaries of business capabilities, it would naturally follow that the first and second design requirements, above, are more easily satisfied. During the days of monolith architectures, software engineers often tried to standardize on “canonical data models.” However, the practice demonstrated, over and over again, that detailed data models for modeling reality do not last for long—they change quite often and standardizing on them leads to frequent rework. Instead, what is more durable is a set of business capabilities that your subsystems provide. An accounting module will always be able to provide the desired set of capabilities to your larger system, regardless of how its inner workings may evolve over time.

These design principles have proven to be very useful and received wide adoption among microservices practitioners. However, they are fairly high-level, aspirational principles and arguably do not provide the specific service-sizing guidance needed by day-to-day practitioners. In search of a more practical methodology, many turned to Domain-Driven Design.

The software design methodology known as Domain-Driven Design (DDD) significantly predates microservices architecture. It was introduced by Eric Evans in 2003, in his seminal book of the same name, Domain-Driven Design: Tackling Complexity in the Heart of Software (Addison-Wesley). The main premise of the methodology is the assertion that, when analyzing complex systems, we should avoid seeking a single unified domain model representing the entire system. Rather, as Evans said in his book:

Multiple models coexist on big projects, and this works fine in many cases. Different models apply in different contexts.

Once Evans established that a complex system is fundamentally a collection of multiple domain models, he made the critical additional step of introducing the notion of bounded context. Specifically, he stated that:

A Bounded Context defines the range of applicability of each model.

Bounded contexts allow implementation and runtime execution of different parts of the larger system to occur without corrupting the independent domain models present in that system. After defining bounded contexts, Evans went on to provide a formula for identifying the optimal edges of a bounded context by establishing the concept of Ubiquitous Language.

To understand the meaning of Ubiquitous Language, it is important to observe that a well-defined domain model first and foremost provides a common vocabulary of defined terms and notions, a common language for describing the domain, that subject-matter experts and engineers develop together in close collaboration, balancing the business requirements and implementation considerations. This common language, or shared vocabulary, is what in DDD we call Ubiquitous Language. The importance of this observation lies in acknowledging that the same words may carry different meanings in different bounded contexts. A classic example of this is shown in Figure 4-1. The term account carries significantly different meanings in the identity and access management, customer management, and financial accounting contexts of an online reservation system.

Figure 4-1. Depending on the domain where it appears, “account” can have different meanings

Indeed, for an identity and access management context, an account is a set of credentials used for authentication and authorization. For a customer management bounded context, an account is a set of demographic and contact attributes, while for a financial accounting context, it’s probably payment information and a list of past transactions. We can see that the same basic English word is used with significantly different meanings in different contexts, and that is OK because we only need to agree on the ubiquitous meaning of the terms (the Ubiquitous Language) within the bounded context of a specific domain model. According to DDD, by observing edges across which terms change their meaning, we can identify the boundaries of the contexts.
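To make the point concrete, here is a minimal Python sketch of how the three contexts might each model their own “account.” The class and field names are our illustrative assumptions, not taken from any real system; what matters is that each context owns a separate model and nothing forces the three to converge on one shared definition.

```python
from dataclasses import dataclass, field
from decimal import Decimal
from typing import List


# Identity and access management context:
# an account is a set of credentials (hypothetical fields).
@dataclass
class IamAccount:
    username: str
    password_hash: str
    roles: List[str] = field(default_factory=list)


# Customer management context:
# an account is demographic and contact attributes (hypothetical fields).
@dataclass
class CustomerAccount:
    full_name: str
    email: str
    postal_address: str


# Financial accounting context:
# an account is payment information plus past transactions (hypothetical fields).
@dataclass
class FinancialAccount:
    payment_method: str
    transactions: List[Decimal] = field(default_factory=list)

    def balance(self) -> Decimal:
        # Sum of all recorded transaction amounts.
        return sum(self.transactions, Decimal("0"))
```

If we tried to merge these into a single `Account` class, every team would have to coordinate on every change to it, which is exactly the cost that bounded contexts are meant to avoid.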

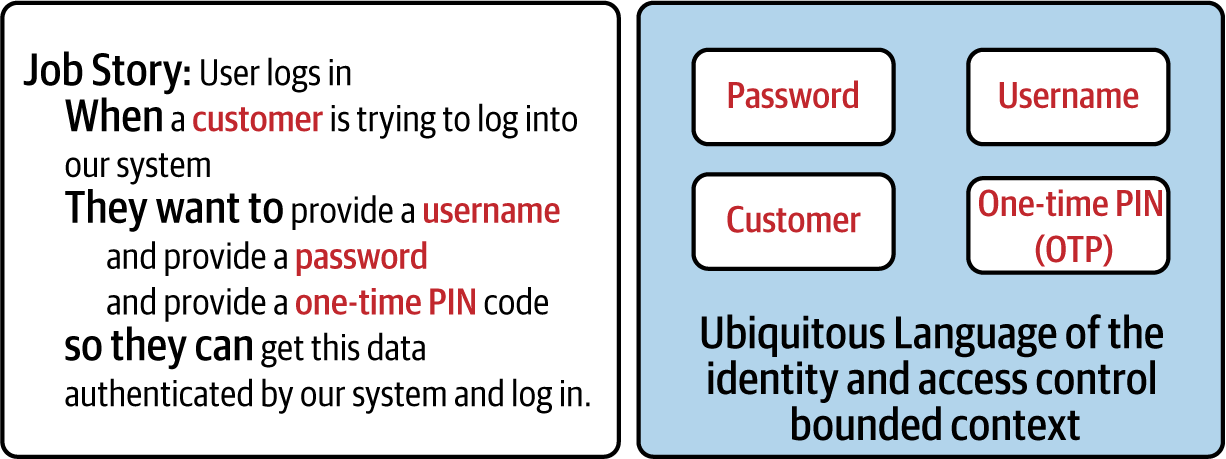

In DDD, not all terms that come to mind when discussing a domain model make it into the corresponding Ubiquitous Language. Concepts in a bounded context that are core to the context’s primary purpose are part of the team’s Ubiquitous Language; all others should be left out. These core concepts can be discovered from the set of jobs to be done (JTBDs) that you create for the bounded context. As an example, let’s look at Figure 4-2.

Figure 4-2. Using Job Story syntax to identify key terms of a Ubiquitous Language

In this example, we are using the Job Story format that we introduced in Chapter 3 and applying it to a job from the identity and access control bounded context. We can see that key nouns, highlighted in Figure 4-2, correspond to the terms in the related Ubiquitous Language. We highly recommend the technique of using key nouns from well-written Job Stories in the identification of the vocabulary terms relevant to your Ubiquitous Language.

Now that we have discussed some key concepts of DDD, let’s also look at something that can be very useful in designing microservice interactions properly: context mapping. We will explore key aspects of context mapping in the next section.

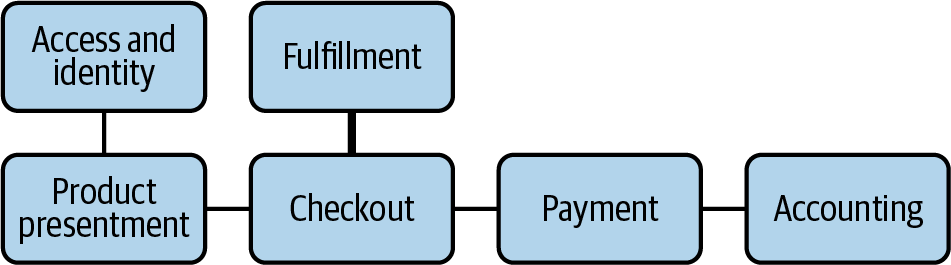

Context Mapping

In DDD, we do not attempt to describe a complex system with a single domain model. Rather, we design multiple independent models that coexist in the system. These subdomains typically communicate with each other using published interface descriptions. The representation of various domains in a larger system and the way they collaborate with each other is called a context map. Consequently, the act of identifying and describing said collaborations is known as context mapping, as shown in Figure 4-3.

Figure 4-3. Context mapping

DDD identifies several major types of collaboration interactions when mapping bounded contexts. The most basic type is known as a shared kernel. It occurs when two domains are developed largely independently and, almost by accident, they end up overlapping on some subset of each other’s domains (see Figure 4-4). Two parties may agree to collaborate on this shared kernel, which may also include shared code and data model, as well as the domain description.

While tempting on the surface of things (after all, the desire for collaboration is one of the most human of instincts), the shared kernel is a problematic pattern, especially when used for microservices architectures. By definition, a shared kernel immediately requires a high degree of coordination between two independent teams to even jump-start the relationship, and keeps requiring coordination for any further modifications. Sprinkling your microservices architecture with shared kernels will introduce many points of tight coordination. In cases when you do have to use a shared kernel in a microservices ecosystem, it’s advised that one team is designated as the primary owner/curator, and everybody else is a contributor.

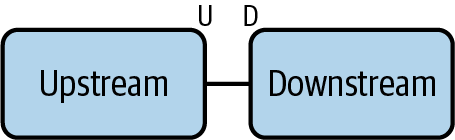

Alternatively, two bounded contexts can engage in what DDD calls an Upstream–Downstream kind of relationship. In this type of relationship, the Upstream acts as the provider of some capability, and the Downstream is the consumer of said capability. Since domain definitions and implementations do not overlap, this type of relationship is more loosely coupled than a shared kernel (see Figure 4-5).

Figure 4-5. Upstream–Downstream relationship

Depending on the type of coordination and coupling, an Upstream–Downstream mapping can be introduced in several forms:

- Customer–Supplier

In a customer–supplier scenario, Upstream (supplier) provides functionality to the Downstream (customer). As long as the provided functionality is valuable, everybody is happy; however, Upstream carries the overhead of backwards compatibility. When Upstream modifies their service, they need to ensure that they do not break anything for the customer. More dramatically, the Downstream (customer) carries the risk of the Upstream intentionally or unintentionally breaking something for it, or ignoring the customer’s future needs.

- Conformist

An extreme case of the risks for a customer–supplier relationship is the conformist relationship. It’s a variation on Upstream–Downstream, when the Upstream explicitly does not or cannot care about the needs of its Downstream. It’s a use-at-your-own-risk kind of relationship. The Upstream provides some valuable capability that the Downstream is interested in using, but given that the Upstream will not cater to its needs, the Downstream needs to constantly conform to the changes in the Upstream.

Conformist relationships often occur in large organizations and systems when a much larger subsystem is used by a smaller one. Imagine developing a small, new capability inside an airline reservation system and needing to use, say, an enterprise payments system. Such a large enterprise system is unlikely to give the time of day to some small, new initiative, but you also cannot just reimplement a whole payments system on your own. Either you will have to become a conformist, or another viable solution may be to go separate ways. The latter doesn’t always mean that you will implement similar functionality yourself. Something like a payments system is complex enough that no small team should implement it as a side job of another goal, but you might be able to go outside the confines of your enterprise and use a commercially available payments vendor instead, if your company allows it.

In addition to becoming a conformist or going separate ways, the Downstream has a few more DDD-sanctioned ways of protecting itself from the negligence of its Upstream: an anti-corruption layer and using Upstreams that provide open host interfaces.

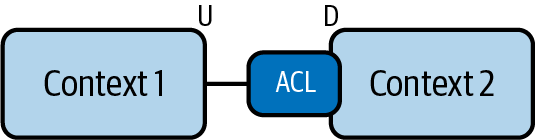

- Anti-corruption layer

In this scenario, the Downstream creates a translation layer called an anti-corruption layer (ACL) between its and the Upstream’s Ubiquitous Languages, to guard itself from future breaking changes in the Upstream’s interface. Creating an ACL is an effective, sometimes necessary, measure of protection, but teams should keep in mind that in the long term this can be quite expensive for the Downstream to maintain (see Figure 4-6).

Figure 4-6. Anti-corruption layer

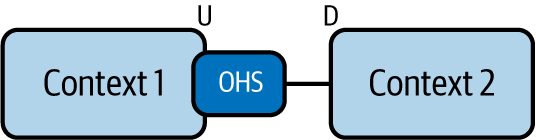

- Open host service

When the Upstream knows that multiple Downstreams may be using its capabilities, instead of trying to coordinate the needs of its many current and future consumers, it should define and publish a standard interface, which all consumers will need to adopt. In DDD, such Upstreams are known as open host services. By providing an open, easy protocol for all authorized parties to integrate with, and maintaining said protocol’s backwards compatibility or providing clear and safe versioning for it, the open host can scale its operations without much drama. Practically all public services (APIs) use this approach. For example, when you are using the APIs of a public cloud provider (AWS, Google, Azure, etc.), they usually don’t know or cater to you specifically as they have millions of customers, but they are able to provide and evolve a useful service by operating as an open host (see Figure 4-7).

Figure 4-7. Open host service
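Returning to the anti-corruption layer for a moment, the following Python sketch shows the kind of translation it performs. We assume a hypothetical enterprise payments Upstream that returns a dictionary with terse field names (`merchantRef`, `amtMinorUnits`, `ccy`, `respCode`); none of these names come from a real payments API, and the success codes and the two-decimal currency assumption are invented for illustration.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import Any, Dict


@dataclass(frozen=True)
class Payment:
    """Downstream model, expressed in the Downstream's own Ubiquitous Language."""
    reservation_id: str
    amount: Decimal
    currency: str
    succeeded: bool


class PaymentsAntiCorruptionLayer:
    """Translates the (hypothetical) Upstream payload into the Downstream model."""

    # Assumed Upstream response codes that mean "approved".
    _SUCCESS_CODES = {"00", "APPROVED"}

    def to_payment(self, upstream: Dict[str, Any]) -> Payment:
        return Payment(
            reservation_id=str(upstream["merchantRef"]),
            # Assumption: amounts arrive in minor units of a two-decimal currency.
            amount=Decimal(upstream["amtMinorUnits"]) / 100,
            currency=upstream["ccy"].upper(),
            succeeded=upstream["respCode"] in self._SUCCESS_CODES,
        )
```

The rest of the Downstream code only ever sees `Payment`. If the Upstream renames a field or changes its response codes, the change is absorbed in this one class, which is also where the long-term maintenance cost mentioned above accumulates.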

In addition to relation types between domains, context mappings can also differentiate based on the integration types used between bounded contexts.

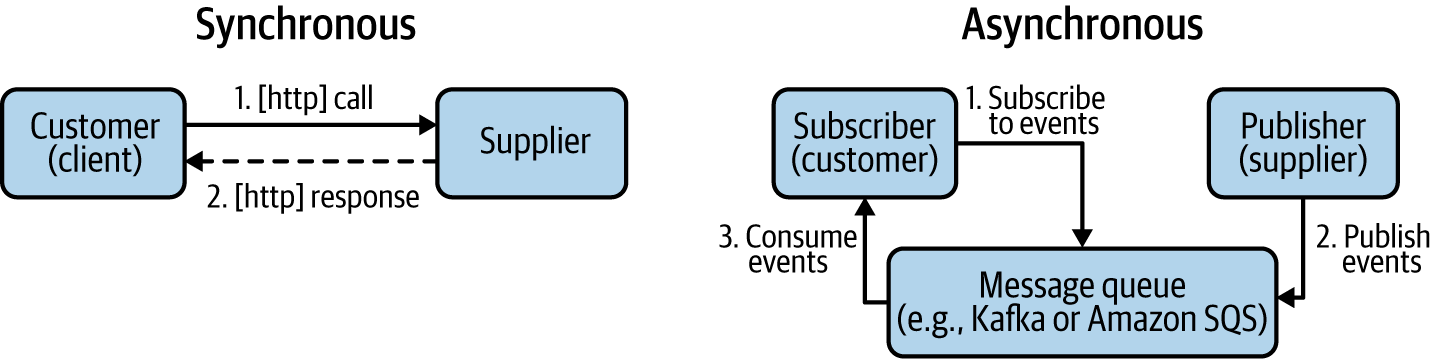

Synchronous Versus Asynchronous Integrations

Integration interfaces between bounded contexts can be synchronous or asynchronous, as shown in Figure 4-8. None of the integration patterns fundamentally assumes one or the other style.

Common patterns for synchronous integrations between contexts are RESTful APIs deployed over HTTP, gRPC services using binary formats such as protobuf, and more recently services using GraphQL interfaces.

On the asynchronous side, publish–subscribe types of interactions lead the way. In this interaction pattern, the Upstream can generate events, and Downstream services have workers able and interested in processing those, as depicted in Figure 4-8.

Figure 4-8. Synchronous and asynchronous integrations

Publish–subscribe interactions are more complex to implement and debug, but they can provide a superior level of scalability, resilience, and flexibility: multiple receivers, even if implemented with heterogeneous tech stacks, can subscribe to the same events using a uniform approach and implementation.
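The shape of a publish–subscribe interaction can be sketched in a few lines of Python. This is a toy, in-process stand-in for a message broker, with hypothetical class and event names; delivery here is synchronous and in memory, whereas a real broker would queue events so that the Upstream and its Downstreams are also decoupled in time.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, Dict, List

Handler = Callable[[Dict[str, Any]], None]


class EventBus:
    """Toy in-process event bus (illustrative only, not a real broker)."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        # Any number of Downstream workers can register for the same event type.
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: Dict[str, Any]) -> int:
        # The Upstream does not know who is listening; it only emits the event.
        handlers = list(self._subscribers.get(event_type, []))
        for handler in handlers:
            handler(payload)
        return len(handlers)  # number of subscribers notified
```

A short usage example, with made-up subscribers:

```python
bus = EventBus()
bus.subscribe("ReservationBooked", lambda e: print("send email for", e["id"]))
bus.subscribe("ReservationBooked", lambda e: print("award points for", e["id"]))
bus.publish("ReservationBooked", {"id": "R-1"})
```

Adding a third subscriber requires no change to the publisher, which is the flexibility described above.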

To wrap up the discussion of Domain-Driven Design’s key concepts, we should explore the concept of an aggregate. We discuss it in the next section.

A DDD Aggregate

In DDD, an aggregate is a collection of related domain objects that can be viewed as a single unit by external consumers. Those external consumers only reference a single entity in the aggregate, and that entity is known in DDD as an aggregate root. Aggregates allow a domain to hide its internal complexities and expose only the information and capabilities (interface) that are “interesting” to an external consumer. For instance, in the Upstream–Downstream mappings that we discussed earlier, the Downstream does not have to, and typically will not want to, know about every single domain object within the Upstream. Instead, it will view the Upstream as an aggregate, or a collection of aggregates.
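As a minimal sketch of this idea in Python, consider a hypothetical `Reservation` aggregate whose root hides a list of flight segments. The names and the “segments must connect” rule are our assumptions for illustration; the point is that external consumers talk only to the root, which keeps the internal objects consistent.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Segment:
    """Internal domain object; not referenced directly by external consumers."""
    origin: str
    destination: str


class Reservation:
    """Aggregate root: the single entity that outsiders reference."""

    def __init__(self, reservation_id: str) -> None:
        self.reservation_id = reservation_id
        self._segments: List[Segment] = []  # internal detail of the aggregate

    def add_segment(self, origin: str, destination: str) -> None:
        # The root enforces the aggregate's invariant (an assumed rule):
        # each new segment must start where the previous one ended.
        if self._segments and self._segments[-1].destination != origin:
            raise ValueError("segments must connect")
        self._segments.append(Segment(origin, destination))

    def itinerary(self) -> Tuple[str, ...]:
        # Expose only what is "interesting" to a consumer: the list of stops.
        if not self._segments:
            return ()
        first = self._segments[0].origin
        return (first,) + tuple(s.destination for s in self._segments)
```

A consumer can call `add_segment` and `itinerary` without ever learning that a `Segment` class exists.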

We will see the notion of an aggregate resurface in the next section, when we discuss Event Storming, a powerful methodology that can greatly streamline the process of domain-driven analysis and turn it into a much faster and more fun exercise.

Introduction to Event Storming

Domain-Driven Design is a powerful methodology for analyzing both the whole-system-level (called “strategic” in DDD) and the in-depth (called “tactical”) composition of your large, complex systems. We have also seen that DDD analysis can help us identify fairly autonomous subcomponents, loosely coupled across bounded contexts of their respective domains.

It’s very easy to jump to the conclusion that in order to fully learn how to properly size microservices, we just need to become really good at domain-driven analysis; if we make our entire company also learn and fall in love with it (because DDD is certainly a team sport), we’ll be on our way to success!

In the early days of microservices architectures, DDD was so universally proclaimed as the one true way to size microservices that the rise of microservices gave a huge boost to the practice of DDD, as well—or at least more people became aware of it, and referenced it. Suddenly, many speakers were talking about DDD at all kinds of software conferences, and a lot of teams started claiming that they were employing it in their daily work. Alas, a close look easily uncovered that the reality was somewhat different and that DDD had become one of those “much-talked-about-less-practiced” things.

Don’t get us wrong: there were people using DDD way before microservices, and there are plenty using it now as well, but speaking specifically of using it as a tool for sizing microservices, it was more hype and vaporware than reality.

There are two primary reasons why more people talked about DDD than practiced it in earnest: it is complex and it is expensive. Practicing DDD requires quite a lot of knowledge and experience. Eric Evans’s original book on the subject is a hefty 520 pages long, and you would need to read at least a few more books to really get it, not to mention gain some experience actually implementing it on a number of projects. There simply were not enough people with the skills and experience and the learning curve was steep.

To exacerbate the problem, as we mentioned, DDD is a team sport, and a time-consuming one at that. It’s not enough to have a handful of technologists well-versed in DDD; you also need to sell your business, product, design, etc., teams on participating in long and intense domain-design sessions, not to mention explain to them at least the basics of what you are trying to achieve. Now, in the grand scheme of things, is it worth it? Very likely, yes: especially for large, risky, expensive systems, DDD can have many benefits. However, if you are just looking to move quickly and size some microservices, and you have already cashed in your political capital at work, selling everybody on the new thing called microservices—good luck also asking a whole bunch of busy people to give you enough time to size your services right! It was just not happening—too expensive and too time-consuming.

And then suddenly a fellow by the name of Alberto Brandolini, who had invested decades in understanding better ways for teams to collaborate, found a shortcut! He proposed a fun, lightweight, and inexpensive process called Event Storming, which is heavily based on and inspired by the concepts of DDD but can help you find bounded contexts in a matter of hours instead of weeks or months. The introduction of Event Storming was a breakthrough for inexpensive applicability of DDD specifically for the sake of service sizing. Of course, it’s not a full replacement, and it won’t give you all the benefits of formal DDD (otherwise it would be magic). But as far as the discovery of bounded contexts goes, to a good approximation, it is indeed magical!

Event Storming is a highly efficient exercise that helps identify the bounded contexts of a domain in a streamlined, fun manner, typically much faster than more traditional, full DDD. It is a pragmatic approach that lowers the cost of DDD analysis enough to make it viable in situations in which DDD would not be affordable otherwise. Let’s see how this “magic” of Event Storming is actually executed.

The Event-Storming Process

The beauty of Event Storming is in its ingenious simplicity. In physical spaces (preferred, when possible), all you need to hold a session of Event Storming is a very long wall (the longer the better), a bunch of supplies, mostly stickies and Sharpies, and four to five hours of time from well-represented members of your team. For a successful Event Storming session, it is critical that participants are not only engineers. Broad participation from such groups as product, design, and business stakeholders makes a significant difference. You can also host virtual Event Storming sessions using digital collaboration tools that can mimic the physical process described here.

The process of hosting physical Event Storming sessions starts by purchasing the supplies. To make things easier, we’ve created an Amazon shopping list that we use for Event Storming sessions (see Figure 4-9). It consists of:

- A large number of stickies of different colors, most importantly, orange and blue, and then several other colors for various object types. You need a lot of those. (Stores never had enough for me, so I got in the habit of buying online.)

- A roll of 1/2-inch white artist tape.

- A long roll of paper (e.g., IKEA Mala Drawing Paper) that we are going to hang on the wall using the artist tape. Go ahead and create multiple “lanes.”

- At least as many Sharpies as the number of session participants. Everybody needs to have their own!

- Did we already mention a long, unobstructed wall that we can tape the roll of paper to?

Figure 4-9. Required supplies for an Event Storming session

During Event Storming sessions, broad participation, e.g., from subject-matter experts, product owners, and interaction designers, is very valuable. Event Storming sessions are short enough (just several hours rather than analysis requiring days or weeks) that, considering the value of their outcomes, the clarity they bring for all represented groups and the time they save in the long term, they are time well-invested for all participants. An Event Storming session that is limited to just software engineers is mostly useless, since it happens in a bubble and cannot lead to the cross-functional conversations necessary for desired outcomes.

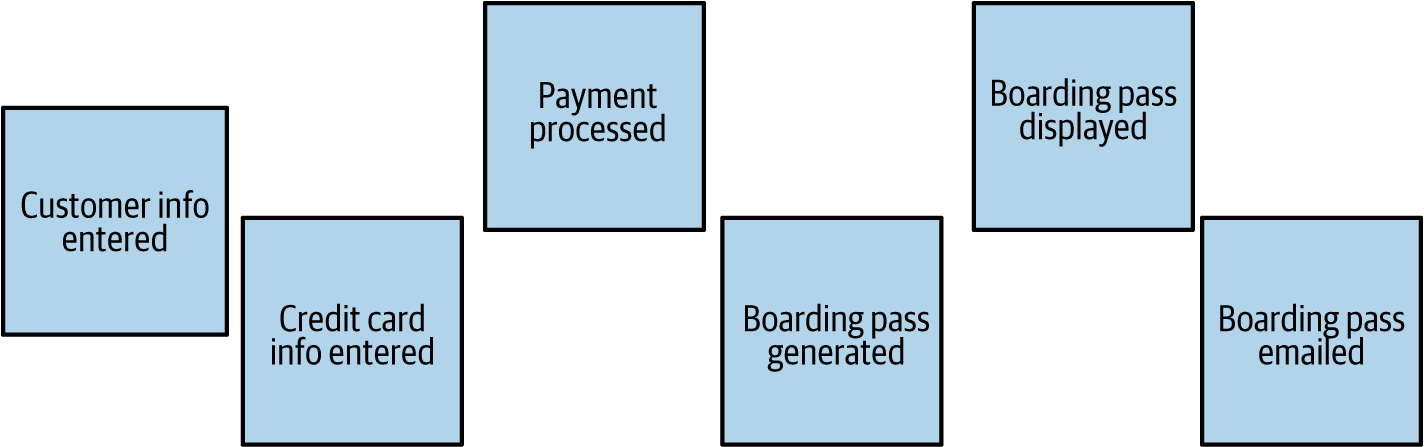

Once we have the supplies, the large room with a wide-open wall with a roll of paper we have taped to it, and all the required people, we (the facilitator) ask everybody to grab a bunch of orange stickies and a personal Sharpie. Then we give them a simple assignment: to write the key events of the domain being analyzed as orange sticky notes (one event per one note), expressed in a verb in the past tense, and place the notes along a timeline on the paper taped to the wall to create a “lane” of time, as shown in Figure 4-10.

Figure 4-10. An event timeline with sticky notes

Participants should not obsess about the exact sequence of events, and at this stage there should be no coordination of events among participants. The only thing they are asked is to individually think of as many events as possible and put the events they think occur earlier in time to the left, and put the later events more to the right. It is not their job to weed out duplicates. At least, not yet. This phase of the assignment usually takes 30 minutes to an hour, depending on the size of the problem and the number of participants. Usually, you want to see at least 100 event sticky notes generated before you can call it a success.

In the second phase of the exercise, the group is asked to look at the resulting set of notes on the wall, and with the help of the facilitator, to start arranging them into a more coherent timeline, identifying and removing duplicates. Given enough time, it is very helpful for the participants to start creating a “storyline,” walking through the events in an order that creates something like a “user journey.” In this phase, the team may have some questions or confusion; we don’t try to solve these issues, but rather capture them as “hotspots”—differently colored sticky notes (typically purple) that have the questions on them. Hotspots will need to be answered offline, in follow-ups. This phase can likewise take 30 to 60 minutes.

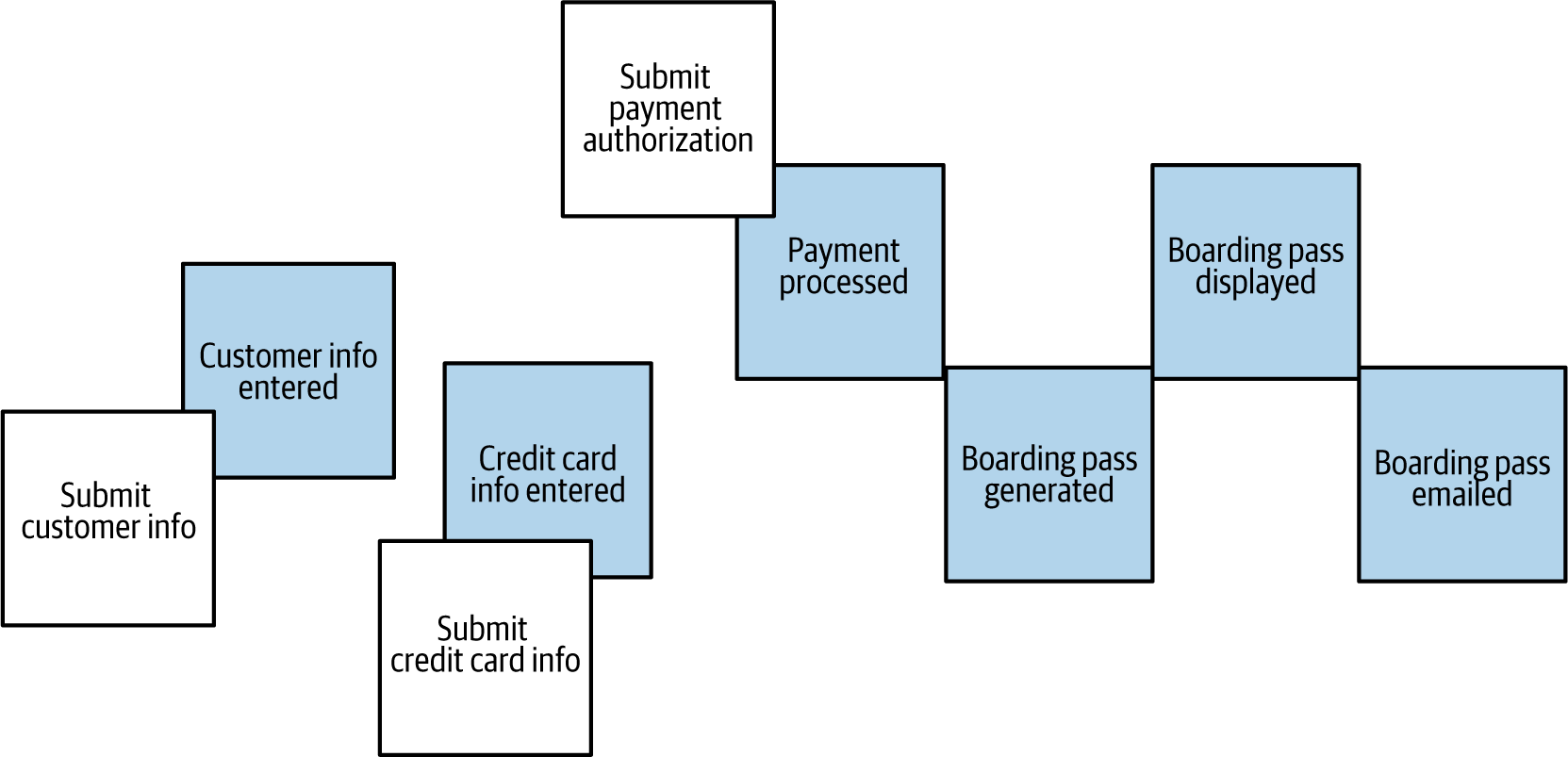

In the third stage, we create what in Event Storming is known as a reverse narrative. Basically, we walk the timeline backward, from the end to the start, and identify commands: things that caused the events. We use sticky notes of a different color (typically blue) for the commands. At this stage your storyboard may look something like Figure 4-11.

Figure 4-11. Introducing commands to the Event Storming timeline

Be aware that a lot of commands will have a one-to-one relationship with an event. It will feel redundant, like the same thing worded in the past versus the present tense. Indeed, if you look at the previous figure, the first two commands are like that. It often confuses people new to Event Storming. Just ignore it! We don’t pass judgment during Event Storming, and while some commands may be 1:1 with events, some will not be. For example, the “Submit payment authorization” command triggers a whole bunch of events. Just capture what you know/think happens in real life and don’t worry about making things “pretty” or “neat.” The real world you are modeling is also usually messy.

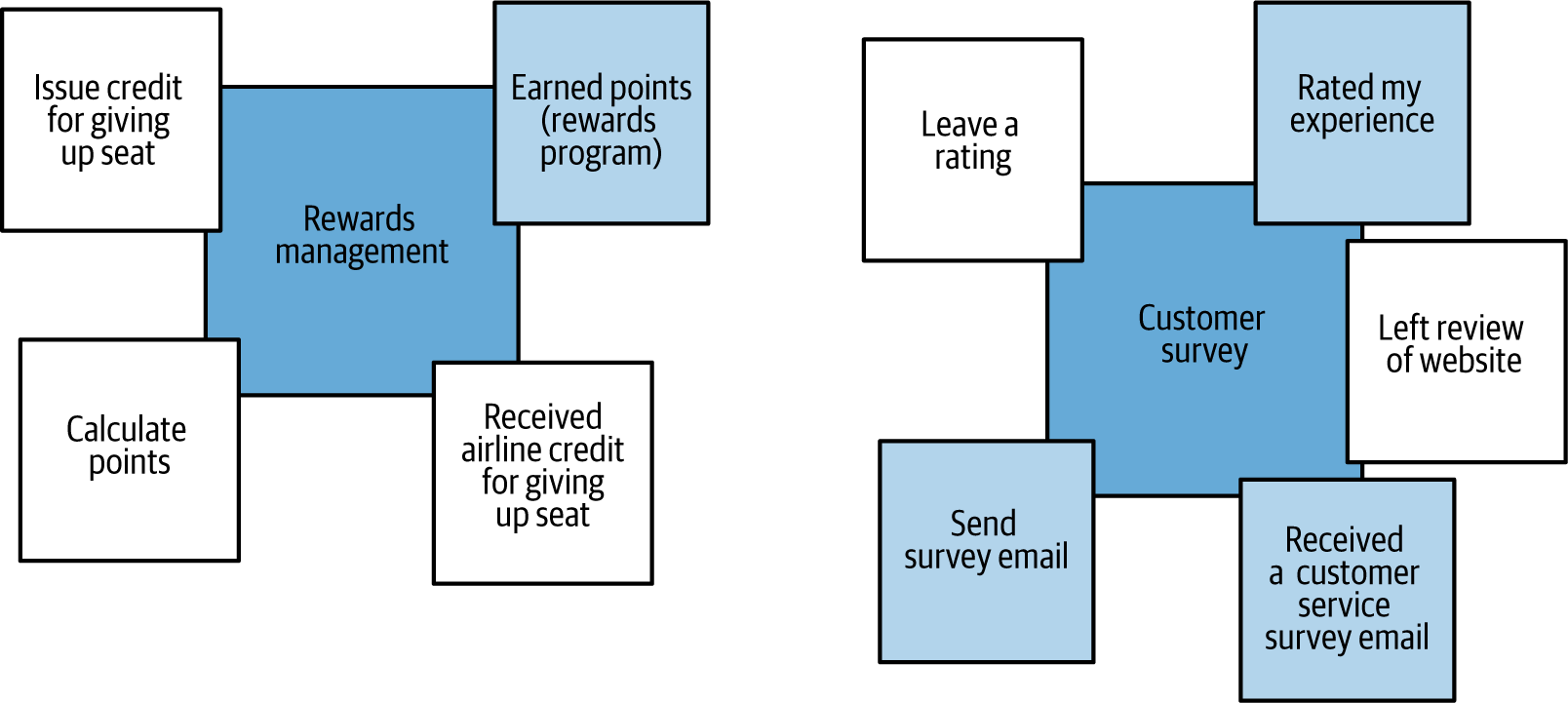

In the next phase, we acknowledge that commands do not produce events directly. Rather, special types of domain entities accept commands and produce events. In Event Storming, these entities are called aggregates (yes, the name is inspired by the similar notion in DDD). What we do in this stage is rearrange our commands and events, breaking the timeline when needed, such that the commands that go to the same aggregate are grouped around that aggregate and the events “fired” by that aggregate are also moved to it. You can see an example of this stage of Event Storming in Figure 4-12.

Figure 4-12. Aggregates on an Event Storming timeline

This phase of the exercise can take 15 to 25 minutes. Once we are done with it, we should find that our wall now looks less like a timeline of events and more like a set of clusters of events and commands grouped around aggregates.

Guess what? These clusters are the bounded contexts we were looking for.
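The regrouping step can be pictured as a simple group-by. The sketch below is an illustration under stated assumptions, not part of the Event Storming method itself: the `Note` type, the aggregate names (“Payment,” “Reservation”), and most note texts are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Note:
    kind: str       # "command" or "event"
    text: str       # what is written on the sticky note
    aggregate: str  # aggregate that accepts the command or fires the event


def cluster_by_aggregate(notes):
    """Group commands and events around the aggregate they belong to."""
    clusters = defaultdict(lambda: {"commands": [], "events": []})
    for note in notes:
        if note.kind not in ("command", "event"):
            raise ValueError(f"unknown note kind: {note.kind!r}")
        clusters[note.aggregate][note.kind + "s"].append(note.text)
    return dict(clusters)


# Hypothetical wall contents; order follows the original timeline.
wall = [
    Note("command", "Select seat", "Reservation"),
    Note("event", "Seat selected", "Reservation"),
    Note("command", "Submit payment authorization", "Payment"),
    Note("event", "Payment authorized", "Payment"),
]

clusters = cluster_by_aggregate(wall)
```

Each key of `clusters` corresponds to one candidate bounded context; the timeline order survives only within each cluster, which mirrors how the wall stops looking like a single timeline.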

The only thing left is to classify the various contexts by their level of priority (similar to “core,” “supporting,” and “generic” subdomains in DDD). To do this, we create a matrix of bounded contexts/subdomains and rank them across two properties: difficulty (the effort required) and competitive edge (the advantage gained). In each category, we use T-shirt sizes (S, M, or L) to rank accordingly. In the end, the decision about where to invest effort is based on the following guidelines:

- Large competitive advantage/large effort: these are the contexts to design and implement in-house and spend the most time on.
- Small advantage/large effort: buy!
- Small advantage/small effort: great assignments for trainees.
- Other combinations are a coin toss and require a judgment call.
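The guidelines above can be written down as a small lookup. This is only a sketch: the function name `recommend` and the returned labels are our own shorthand, and anything not explicitly covered by the guidelines (including every “M” ranking) falls through to a judgment call.

```python
SIZES = {"S", "M", "L"}


def recommend(advantage: str, effort: str) -> str:
    """Map T-shirt-size rankings of a bounded context to a rough recommendation."""
    if advantage not in SIZES or effort not in SIZES:
        raise ValueError("rankings must be one of 'S', 'M', 'L'")
    if advantage == "L" and effort == "L":
        return "build in-house"
    if advantage == "S" and effort == "L":
        return "buy"
    if advantage == "S" and effort == "S":
        return "assign to trainees"
    # Every other combination is a coin toss in the guidelines.
    return "judgment call"
```

For example, `recommend("S", "L")` returns `"buy"`, while `recommend("M", "L")` returns `"judgment call"`.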

Note

This last phase, the “competitive analysis,” is not part of Brandolini’s original Event Storming process, and was proposed by Greg Young for prioritizing domains in DDD in general. We find it to be a useful and fun exercise when done with an adequate level of humor.

The entire process is very interactive, requires the involvement of all participants, and usually ends up being fun. It will require an experienced facilitator to keep things moving smoothly, but the good news is that being a good facilitator doesn’t take the same effort as becoming a rocket scientist (or DDD expert). After reading this book and facilitating some mock sessions for practice, you can easily become a world-class Event Storming facilitator!

As a facilitator, it is a good idea to watch the time and have a plan for your session. For a four-hour session, a rough allocation of time would look like this:

- Phase 1 (~30 min): Discover domain events
- Phase 2 (~45 min): Enforce the timeline
- Phase 3 (~60 min): Reverse narrative and command identification
- Phase 4 (~30 min): Identify aggregates/bounded contexts
- Phase 5 (~15 min): Competitive analysis

If you noticed that these times do not add up to four hours, keep in mind that you will want to give people some breaks in the middle, as well as leave yourself time to prepare the space and provide guidance in the beginning.
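As a quick check of the arithmetic, the sketch below sums the approximate phase durations from the list above and computes what is left of a four-hour session for breaks, setup, and the introduction. The variable names are ours.

```python
# Approximate phase durations in minutes, taken from the allocation above.
phase_minutes = {
    "Discover domain events": 30,
    "Enforce the timeline": 45,
    "Reverse narrative and command identification": 60,
    "Identify aggregates/bounded contexts": 30,
    "Competitive analysis": 15,
}

session_minutes = 4 * 60
scheduled_minutes = sum(phase_minutes.values())
slack_minutes = session_minutes - scheduled_minutes
```

The phases account for 180 minutes, which leaves 60 minutes of the session unscheduled.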

Introducing the Universal Sizing Formula

Bounded contexts are a fantastic starting point for rightsizing microservices. We have to be cautious, however, not to assume that microservice boundaries are synonymous with the bounded contexts from DDD or Event Storming. They are not. As a matter of fact, microservice boundaries cannot be assumed to be constant over time. They evolve, and they tend toward increasing granularity as the organizations and applications they are part of mature. For example, Adrian Cockcroft noted that this was a repeating trend he had observed during his time at Netflix.

Nobody Gets Microservice Boundaries Perfectly at the Outset

In successful cases of microservices adoption, teams do not start with hundreds of microservices. They start with a much smaller number, closely aligned with bounded contexts. As time goes by, teams split microservices when they run into coordination dependencies that they need to eliminate. This also means that teams are not expected to get service boundaries “right” out of the gate. Instead, boundaries evolve over time, with a general direction of increased granularity.

It is worth noting that it’s typically easier to split a service than to merge several services back together, or to move a capability from one service to another. This is another reason why we recommend starting with a coarse-grained design and waiting until we learn more about the domain and have enough complexity before we split and increase service granularity.

We have found that there are three principles that work well together when thinking about the granularity of microservices. We call these principles the Universal Sizing Formula for microservices.

The Universal Sizing Formula

To achieve a reasonable sizing of microservices, you should:

-

Start with just a few microservices, possibly using bounded contexts.

-

Keep splitting as your application and services grow, being guided by the needs of coordination avoidance.

-

Be on the right trajectory for decreasing coordination. This is vastly more important than the current state of how “perfectly” you get service sizing.

Summary

In this chapter we addressed head-on the critical question of how to properly size microservices. We looked at Domain-Driven Design, a popular methodology for modeling decomposition in complex systems; explained the process of conducting a highly efficient domain analysis with the Event Storming methodology; and introduced the Universal Sizing Formula, which offers unique guidance for the effective sizing of microservices.

In the following chapters we will go deeper into implementation, showing how to manage data in a loosely coupled, componentized microservices environment. We also will walk you through a sample implementation for our demo project: an online reservation system.
