Our ears let us know approximately which direction sounds are coming from. Some sounds, like echoes, are not always informative, and there is a mechanism for filtering them out.

A major purpose of audition is telling where things are. There’s an analogy used by auditory neuroscientists that gives a good impression of just how hard a job this is. The information bottleneck for the visual system is the ganglion cells that connect the eyes to the brain [[Hack #13]]. There are about a million in each eye, so, in your vision, there are about two million channels of information available to determine where something is. In contrast, the bottleneck in hearing involves just two channels: one eardrum in each ear. Trying to locate sounds using the vibrations reaching the ears is like trying to say how many boats are out on a lake and where they are, just by looking at the ripples in two channels cut out from the edge of the lake. It’s pretty difficult stuff.

Your brain uses a number of cues to solve this problem. A sound will reach the near ear before the far ear, with the time difference depending on the position of the sound’s source. This cue is known as the interaural (between the ears) time difference. A sound will also be more intense at the near ear than at the far ear. This cue is known as the interaural level difference. Both cues are used to locate sounds on the horizontal plane: the time difference (delay) for low-frequency sounds and the level difference (intensity) for high-frequency sounds (this is known as the Duplex Theory of sound localization). To locate sounds on the vertical plane, other cues in the spectrum of the sound (spectral cues) are used. The direction a sound comes from affects the way it is reflected by the outer ear (the ears we all see and think of as ears, but which auditory neuroscientists call pinnae). Depending on the sound’s direction, different frequencies in the sound are amplified or attenuated. Spectral cues are further enhanced by the fact that our two ears are slightly different shapes, so each distorts the sound vibrations differently.
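To get a feel for how small the time-difference cue is, here is a minimal sketch. It assumes a simplified geometry that is not from the text: the ears are two points about 0.18 m apart, the source is far away, and sound travels at 343 m/s. The function name `interaural_time_difference` and both constants are illustrative assumptions.

```python
import math

SPEED_OF_SOUND = 343.0   # m/s in air at roughly 20 degrees C (assumed value)
EAR_SEPARATION = 0.18    # m, rough adult ear-to-ear distance (assumed value)

def interaural_time_difference(azimuth_deg: float) -> float:
    """Return the interaural time difference in seconds for a distant source.

    azimuth_deg is 0 straight ahead and +90 directly to the right.
    Uses the simple path-difference model d * sin(azimuth) / c, which
    ignores the way sound bends around the head.
    """
    return EAR_SEPARATION * math.sin(math.radians(azimuth_deg)) / SPEED_OF_SOUND

# Print the delay for a few source directions on the horizontal plane.
for angle in (0, 15, 45, 90):
    itd_us = interaural_time_difference(angle) * 1e6
    print(f"{angle:>2} deg -> {itd_us:6.1f} microseconds")
```

Under these assumptions, even a sound directly to one side produces a difference of only about half a millisecond, which gives some idea of the timing precision the auditory system needs.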

The main cue is the interaural time difference. This cue dominates the others if they conflict. The spectral cues, providing elevation (up-down) information, aren’t as accurate and are often misleading.

Echoes are a further misleading factor, and seeing how we cope with them is a good way to really feel the complexity of the job of sound localization. Most environments—not just cavernous halls but the rooms in your house too—produce echoes. It’s hard enough to work out where a single sound is coming from, let alone having to distinguish between original sounds and their reverberations, all of which come at you from different directions. The distraction of these anomalous locations is mitigated by a special mechanism in the auditory system.

Those echoes that arrive at your ears within a very short interval are grouped together with the original sound, which arrives earliest. The brain takes only the first part of the sound to place the whole group. This is noticeable in a phenomenon known as the Haas Effect, also called the principle of first arrival or precedence effect.

The Haas Effect operates below a threshold of about 30–50 milliseconds between one sound and the next. Now, if the sounds are far enough apart, above the threshold, then you’ll hear them as two sounds from two locations, just as you should. That’s what we traditionally call echoes. By making echoes yourself and moving from an above-threshold delay to beneath it, you can hear the mechanism that deals with echoes come into play.

You can demonstrate the Haas Effect by clapping at a large wall.[1] Stand about 10 meters from the wall and clap your hands. At this distance, the echo of your hand clap will reach your ears more than 50 milliseconds after the original sound of your clap. You hear two sounds.

Now try walking toward the wall, clapping at every pace. At about 5 meters, where the echo reaches your ears less than 50 milliseconds after the original sound of the clap, you stop hearing sound coming from two locations. The location of the echo has merged with that of the original sound; both now appear to come as one sound from the direction of your original clap. This is the precedence effect in action, just one of many mechanisms that exist to help you make sense of the location of sounds.
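The distances in the clapping demonstration follow from simple arithmetic: the echo has to travel to the wall and back. The sketch below works this through, assuming a speed of sound of 343 m/s and using 50 milliseconds, the upper end of the quoted range, as the threshold. The function names are made up for illustration.

```python
SPEED_OF_SOUND = 343.0  # m/s in air (assumed value)

def echo_delay_ms(distance_to_wall_m: float) -> float:
    """Delay between a clap and its echo, in milliseconds.

    The sound covers the distance to the wall twice: out and back.
    """
    return 2.0 * distance_to_wall_m / SPEED_OF_SOUND * 1000.0

def hears_separate_echo(distance_to_wall_m: float, threshold_ms: float = 50.0) -> bool:
    """True if the echo arrives later than the assumed fusion threshold."""
    return echo_delay_ms(distance_to_wall_m) > threshold_ms

# Walk toward the wall and see where the echo should merge with the clap.
for distance in (10, 8, 5, 2):
    delay = echo_delay_ms(distance)
    verdict = "two sounds" if hears_separate_echo(distance) else "one fused sound"
    print(f"{distance:>2} m: echo after {delay:5.1f} ms -> {verdict}")
```

With these numbers the echo at 10 meters arrives a little over 58 milliseconds after the clap, and at 5 meters a little over 29 milliseconds, which is under even the lower 30-millisecond end of the threshold range.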

The initial computations used in locating sounds occur in the brainstem, in a peculiarly named region called the superior olive. Because the business of localization begins in the brainstem, surprising sounds are able to quickly produce a turn of the head or body to allow us to bring our highest resolution sense, vision, to bear in figuring out what is going on. The rapidity of this response wouldn’t be possible if the information from the two ears were integrated only later in processing.

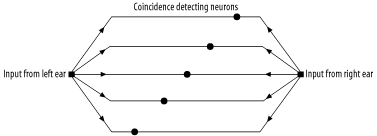

The classic model for how interaural time differences are processed is called the Jeffress Model, and it works as shown in Figure 4-1. Cells in the midbrain indicate a sound’s position by increasing their firing in response to sound, and each cell takes sound input from both ears. The cell that fires most is the one that receives a signal from both ears simultaneously. Because these cells are most active when the inputs from both sides are synchronized, they’re known as coincidence-detector neurons.

Figure 4-1. Neurons used in computing sound position fire when inputs from the left and right ear arrive simultaneously. Differences in time delays along the connecting lines mean that different arrival times between signals at the left and right ear trigger different neurons.

Now imagine a sound that comes from your left, reaching your right ear only after a tiny delay. If a cell is going to receive both signals simultaneously, it must be because the left-ear signal has been slowed down, somewhere in the brain, to exactly compensate for the delay. The Jeffress Model posits that the brain contains an array of coincidence-detector cells, each with its own particular delays for the signals from either side. By this means, each possible location can be represented by the activity of the neurons with the appropriate delays built in.
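The idea of an array of coincidence detectors can be illustrated with a toy simulation. This is only a sketch of the principle, not a model of real neurons: delays are whole numbers of samples, "coincidence" is approximated by multiplying the two inputs and summing, and the names `delay` and `coincidence_responses` are hypothetical.

```python
import random

def delay(signal, samples):
    """Delay a signal by a whole number of samples, padding with silence."""
    if samples == 0:
        return list(signal)
    return [0.0] * samples + list(signal[:-samples])

def coincidence_responses(left, right, max_delay):
    """Response of each detector in a Jeffress-style array.

    Detector k delays the left input by k samples when k > 0, or the
    right input by -k samples when k < 0, then sums the product of the
    two inputs as a crude measure of how often they coincide.
    """
    responses = {}
    for k in range(-max_delay, max_delay + 1):
        delayed_left = delay(left, max(k, 0))
        delayed_right = delay(right, max(-k, 0))
        responses[k] = sum(a * b for a, b in zip(delayed_left, delayed_right))
    return responses

random.seed(0)  # fixed seed so the noise burst is reproducible
source = [random.gauss(0.0, 1.0) for _ in range(2000)]

# A sound from the left: the right ear hears the same signal 3 samples later.
left_ear = source
right_ear = delay(source, 3)

responses = coincidence_responses(left_ear, right_ear, max_delay=8)
best = max(responses, key=responses.get)
print("most active detector delays the left input by", best, "samples")
```

The most active detector is the one whose built-in delay on the left input matches the delay the sound picked up on its way to the right ear, so the identity of that detector stands in for the position of the source.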

Note

The Jeffress Model may not be entirely correct. Most of the neurobiological evidence for it comes from work with barn owls, which can strike prey in complete darkness. Evidence from small mammals suggests other mechanisms also operate.[2]

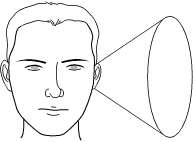

An ambiguity of localization comes with using interaural time difference, because sounds need not be on the horizontal plane—they could be in front, behind, above, or below. A sound that comes in from your front right, on the same level as you, at an angle of 33° will sound, in terms of the interaural differences in timing and intensity, just like the same sound coming from your back right at an angle of 33° or from above right at an angle of 33°. Thus, there is a “cone of confusion” as to where you place a sound, and that is what is shown in Figure 4-2. Normally you can use the other cues, such as the distortion introduced by your ears (spectral cues) to reduce the ambiguity.
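The cone of confusion drops out of a simple path-difference model, in which the time difference depends only on how far the source direction leans toward one ear. The sketch below assumes that model, an ear separation of 0.18 m, and a speed of sound of 343 m/s; none of these come from the text, and the function names are illustrative. It reads the 33° in the example as the angle away from the midline toward the right.

```python
import math

SPEED_OF_SOUND = 343.0   # m/s in air (assumed value)
EAR_SEPARATION = 0.18    # m, rough adult ear-to-ear distance (assumed value)

def direction(lateral_deg, around_deg):
    """Unit vector (x toward the right ear, y forward, z up).

    lateral_deg: angle away from the midline plane toward the right ear.
    around_deg:  rotation around the ear-to-ear axis;
                 0 is in front, 90 is above, 180 is behind.
    """
    lat = math.radians(lateral_deg)
    rot = math.radians(around_deg)
    x = math.sin(lat)
    y = math.cos(lat) * math.cos(rot)
    z = math.cos(lat) * math.sin(rot)
    return (x, y, z)

def itd_seconds(direction_vec):
    """Path-difference time delay: only the ear-to-ear component matters."""
    x, _, _ = direction_vec
    return EAR_SEPARATION * x / SPEED_OF_SOUND

# Three quite different directions that all lie on the same cone.
for label, around in (("front right", 0), ("above right", 90), ("back right", 180)):
    itd_us = itd_seconds(direction(33, around)) * 1e6
    print(f"{label:>11}: {itd_us:.1f} microseconds")
```

All three directions print the same delay, because rotating a source around the ear-to-ear axis leaves its sideways component unchanged. That is why the timing cue alone cannot tell them apart.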

The more information in a sound, the easier it is to localize. Noise containing many different frequencies is easier to localize than a pure tone. This is why white noise, which contains all frequencies in equal proportions, is now added to the siren sounds of emergency vehicles,[3] in place of the pure tones that have historically been used alone.

If you are wearing headphones, you don’t get spectral cues from the pinnae, so you can’t localize sounds on the up-down dimension. You also don’t have the information to decide if a sound is coming from in front or from behind.

Even without headphones, our localization in the up-down dimension is pretty poor. This is why a sound from down and behind you (if you are on a balcony, for example) can sound right behind you. By default, we localize sounds to either the center left or center right of our ears—this broad conclusion is enough to tell us which way to turn our heads, despite the ambiguity that prevents more precise localization.

This ambiguity in hearing is the reason we cock our heads when listening. By taking multiple readings of a sound source, we overlap the ambiguities and build up a composite, interpolated set of evidence on where a sound might be coming from. (And if you watch a bird cocking its head, looking at the ground, it’s listening to a worm and doing the same.[4])

Still, hearing provides a rough, quick idea of where a sound is coming from. It’s enough to allow us to turn toward the sound or to process sounds differently depending on where they come from [[Hack #54]].

[1] Wall and clapping idea thanks to Geoff Martin, from his web site at http://www.tonmeister.ca/main/textbook/.

[2] McAlpine, D., & Grothe, B. (2003). Sound localization and delay lines: Do mammals fit the model? Trends in Neurosciences, 26(7), 347–350.

[3] “Siren Sounds: Do They Actually Contribute to Traffic Accidents?” (http://www.soundalert.com/pdfs/impact.pdf).

[4] Montgomerie, R., & Weatherhead, P. J. (1997). How robins find worms. Animal Behaviour, 54, 137–143.
