Chapter 8. Mining the Semantically Marked-Up Web: Extracting Microformats, Inferencing over RDF, and More

While the previous chapters attempted to provide an overview of some popular websites from the social web, this chapter connects that discussion with a highly pragmatic discussion about the semantic web. Think of the semantic web as a version of the Web much like the one that you are already experiencing, except that it has evolved to the point that machines are routinely extracting vast amounts of information that they are able to reason over and making automated decisions based upon that information.

The semantic web is a topic worthy of a book in itself, and this chapter is not intended to be any kind of proper technical discussion about it. Rather, it is framed as a technically oriented “cocktail discussion” and attempts to connect the highly pragmatic social web with the more grandiose vision for a semantic web. It is considerably more hypothetical than the chapters before it, but there is still some important technology covered in this chapter.

One of the primary topics that you’ll learn about in this chapter from a practitioner’s standpoint is microformats, a relatively simple technique for embedding unambiguous structured data in web pages that machines can rather trivially parse out and use for various kinds of automated reasoning. As you’re about to learn, microformats are an exciting chapter in the Web’s evolution and are being actively pursued by the IndieWeb community, as well as by a number of powerful corporations through an effort called Schema.org. The implications of microformats for social web mining are vast.

Before wrapping up the chapter, we’ll bridge the microformats discussion with the more visionary hopes for the semantic web by taking a brief look at some technology for inferencing over collections of facts with inductive logic to answer questions. By the end of the chapter, you should have a pretty good idea of where the mainstream Web stands with respect to embedding semantic metadata into web pages, and how that compares with something a little closer to a state-of-the-art implementation.

Note

Always get the latest bug-fixed source code for this chapter (and every other chapter) online at http://bit.ly/MiningTheSocialWeb2E. Be sure to also take advantage of this book’s virtual machine experience, as described in Appendix A, to maximize your enjoyment of the sample code.

Overview

As mentioned, this chapter is a bit more hypothetical than the chapters before it and provides some perspective that may be helpful as you think about the future of the Web. In this chapter you’ll learn about:

Common types of microformats

How to identify and manipulate common microformats from web pages

The current utility of microformats in the Web

A brief overview of the semantic web and semantic web technology

Performing inference on semantic web data with a Python toolkit called FuXi

Microformats: Easy-to-Implement Metadata

In terms of the Web’s ongoing evolution, microformats are quite an important step forward because they provide an effective mechanism for embedding “smarter data” into web pages and are easy for content authors to implement. Put succinctly, microformats are simply conventions for unambiguously embedding metadata into web pages in an entirely value-added way. This chapter begins by briefly introducing the microformats landscape and then digs right into some examples involving specific uses of geo, hRecipe, and hResume microformats. As we’ll see, some of these microformats build upon constructs from other, more fundamental microformats—such as hCard—that we’ll also investigate.

Although it might seem like somewhat of a stretch to call data decorated with microformats like geo or hRecipe “social data,” it’s still interesting and inevitably plays an increased role in social data mashups. At the time the first edition of this book was published back in early 2011, nearly half of all web developers reported some use of microformats, the microformats.org community had just celebrated its fifth birthday, and Google reported that 94% of the time, microformats were involved in rich snippets. Since then, Google’s rich snippets initiative has continued to gain momentum, and Schema.org has emerged to try to ensure a shared vocabulary across vendors implementing semantic markup with comparable technologies such as microformats, HTML5 microdata, and RDFa. Accordingly, you should expect to see continued growth in semantic markup overall, although not all semantic markup initiatives may continue to grow at the same pace. As with almost anything else, market dynamics, corporate politics, and technology trends all play a role.

Note

Web Data Commons extracts structured data such as microformats from the Common Crawl web corpus and makes it available to the public for study and consumption. In particular, the detailed results from the August 2012 corpus provide some intriguing statistics. It appears that the combined initiatives by Schema.org have been significant.

The story of microformats is largely one of narrowing the gap between a fairly ambiguous Web primarily based on the human-readable HTML 4.01 standard and a more semantic Web in which information is much less ambiguous and friendlier to machine interpretation. The beauty of microformats is that they provide a way to embed structured data that’s related to aspects of life and social activities such as calendaring, résumés, food, products, and product reviews, and they exist within HTML markup right now in an entirely backward-compatible way. Table 8-1 provides a synopsis of a few popular microformats and related initiatives you’re likely to encounter if you look around on the Web. For more examples, see http://bit.ly/1a1oKLV.

| Technology | Purpose | Popularity | Markup specification | Type |
| --- | --- | --- | --- | --- |
| XFN | Representing human-readable relationships in hyperlinks | Widely used by blogging platforms in the early 2000s to implicitly represent social graphs. Rapidly declined in popularity after the retirement of Google’s Social Graph API but is now finding new life in IndieWeb’s RelMeAuth initiative for web sign-in. | Semantic HTML, XHTML | Microformat |
| geo | Embedding geocoordinates for people and objects | Widely used, especially by sites such as OpenStreetMap and Wikipedia. | Semantic HTML, XHTML | Microformat |
| hCard | Identifying people, companies, and other contact info | Widely used and included in other popular microformats such as hResume. | Semantic HTML, XHTML | Microformat |
| hCalendar | Embedding iCalendar data | Continuing to steadily grow. | Semantic HTML, XHTML | Microformat |
| hResume | Embedding résumé and CV information | Widely used by sites such as LinkedIn, which presents public résumés in hResume format for its more than 200 million worldwide users. | Semantic HTML, XHTML | Microformat |
| hRecipe | Identifying recipes | Widely used by niche food sites such as subdomains on about.com (e.g., thaifood.about.com). | Semantic HTML, XHTML | Microformat |
| Microdata | Embedding name/value pairs into web pages authored in HTML5 | A technology that emerged as part of HTML5 and has steadily gained traction, especially because of Google’s rich snippets initiative and Schema.org. | HTML5 | W3C initiative |
| RDFa | Embedding unambiguous facts into XHTML pages according to specialized vocabularies created by subject-matter experts | The basis of Facebook’s Open Graph protocol and Open Graph concepts, which have rapidly grown in popularity. Otherwise, somewhat hit-or-miss depending on the particular vocabulary. | XHTML[a] | W3C initiative |
| Open Graph protocol | Embedding profiles of real-world things into XHTML pages | Has seen rapid growth and still has tremendous potential given Facebook’s more than 1 billion users. | XHTML (RDFa-based) | Facebook platform initiative |

[a] Embedding RDFa into semantic markup and HTML5 continues to be an active effort at the time of this writing. See the W3C HTML+RDFa 1.1 Working Draft.

If you know much about the short history of the Web, you’ll recognize that innovation is rampant and that the highly decentralized way in which the Web operates is not conducive to overnight revolutions; rather, change seems to happen continually, fluidly, and in an evolutionary way. For example, as of mid-2013 XFN (the XHTML Friends Network) seems to have lost most of its momentum in representing social graphs, due to the declining popularity of blogrolls and because large social web properties such as Facebook, Twitter, and others have not adopted it and instead have pursued their own initiatives for socializing data. Significant technological investments such as Google’s Social Graph API used XFN as a foundation and contributed to its overall popularity. However, the retirement of Google’s Social Graph API back in early 2012 seems to have had a comparable dampening effect. It appears that many implementers of social web technologies were building directly on Google’s Social Graph API and effectively using it as a proxy for microformats such as XFN rather than building on XFN directly.

Whereas the latter would have been a safer bet looking back, the convenience of the former took priority and somewhat ironically led to a “soft reset” of XFN. However, XFN is now finding a new and exciting life as part of an IndieWeb initiative known as RelMeAuth, the technology behind a web sign-in that proposes an intriguing open standard for using your own website or other social profiles for authentication.
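To make the idea of relationships encoded in hyperlinks a little more concrete, here is a minimal sketch that collects XFN-style rel values from anchor tags using only the standard library. The markup, the example.com URLs, and the XFNLinkParser class are hypothetical and exist purely for illustration, and XFN_VALUES is only a subset of the vocabulary (plus the "me" value that RelMeAuth relies on).

```python
from html.parser import HTMLParser

# A subset of XFN relationship values, chosen for illustration
XFN_VALUES = {"friend", "acquaintance", "contact", "met", "co-worker",
              "colleague", "neighbor", "kin", "spouse", "me"}


class XFNLinkParser(HTMLParser):
    """Collects (href, [rel values]) pairs from <a> tags that carry XFN values."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attrs = dict(attrs)
        # rel is a space-separated list; keep only the values we recognize
        rels = [r for r in (attrs.get("rel") or "").split() if r in XFN_VALUES]
        if rels and attrs.get("href"):
            self.links.append((attrs["href"], rels))


# Hypothetical blogroll markup
html = '''
<ul>
  <li><a href="http://example.com/alice" rel="friend met">Alice</a></li>
  <li><a href="http://example.com/bob" rel="co-worker">Bob</a></li>
  <li><a href="http://example.com/about">About</a></li>
  <li><a href="http://example.org/me" rel="me nofollow">My other profile</a></li>
</ul>
'''

parser = XFNLinkParser()
parser.feed(html)
for link in parser.links:
    print(link)
```

Each resulting pair is effectively an edge in a social graph: the page being parsed is the source, the href is the target, and the rel values label the relationship.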

Note

In the same way that XFN is fluidly evolving to meet new needs of the Web, microformats in general have evolved to fill the voids of “intelligent data” on the Web and serve as a particularly good example of bridging existing technology with emerging technology for everyone’s mutual benefit.

There are many other microformats that you’re likely to encounter. Although a good rule of thumb is to watch what the bigger fish in the pond—such as Google, Yahoo!, Bing, and Facebook—are doing, you should also keep an eye on what is happening in communities that define and shape open standards. The more support a microformat or other semantic markup initiative gets from a player with significant leverage, the more likely it will be to succeed and become useful for data mining, but never underestimate the natural and organic effects of motivated community leaders who genuinely seek to serve the greater good with allegiance to no particular corporate entity. The collaborative efforts of providers involved with Schema.org should be of particular interest to watch over the near term, but so should IndieWeb and W3C initiatives.

Note

See HTML, XML, and XHTML for a brief aside about semantic markup as it relates to HTML, XML, and XHTML.

Geocoordinates: A Common Thread for Just About Anything

The implications of using microformats are subtle yet somewhat profound: while a human might be reading an article about a place like Franklin, Tennessee and intuitively know that a dot on a map on the page denotes the town’s location, a robot could not reach the same conclusion easily without specialized logic that targets various pattern-matching possibilities. Such page scraping is a messy proposition, and typically just when you think you have all of the possibilities figured out, you find that you’ve missed one. Embedding proper semantics into the page that effectively tag unstructured data in a way that even Robby the Robot could understand removes ambiguity and lowers the bar for crawlers and developers. It’s a win-win situation for the producer and the consumer, and hopefully the net effect is increased innovation for everyone.

Although it’s certainly true that standalone geodata isn’t particularly social, important but nonobvious relationships often emerge from disparate data sets that are tied together with a common geographic context.

Geodata is ubiquitous. It plays a powerful part in too many social mashups to name, because a particular point in space can be used as the glue for clustering people together. The divide between “real life” and life on the Web continues to close, and just about any kind of data becomes social the moment that it is tied to a particular individual in the real world. For example, there’s an awful lot that you might be able to tell about people based on where they live and what kinds of food they like. This section works through some examples of finding, parsing, and visualizing geo-microformatted data.

One of the simplest and most widely used microformats that embeds geolocation information into web pages is appropriately called geo. The specification is inspired by a property with the same name from vCard, which provides a means of specifying a location. There are two possible means of embedding a microformat with geo. The following HTML snippet illustrates the two techniques for describing Franklin, Tennessee:

    <!-- The multiple class approach -->
    <span style="display: none" class="geo">
      <span class="latitude">36.166</span>
      <span class="longitude">-86.784</span>
    </span>

    <!-- When used as one class, the separator must be a semicolon -->
    <span style="display: none" class="geo">36.166; -86.784</span>

As you can see, this microformat simply wraps latitude and longitude values in tags with corresponding class names, and packages them both inside a tag with a class of geo. A number of popular sites, including Wikipedia and OpenStreetMap, use geo and other microformats to expose structured data in their pages.
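As a quick offline illustration of the two forms, the following sketch parses a fragment like the one shown earlier with the standard library's ElementTree. It assumes the markup is well formed, which real web pages often are not, so treat parse_geo and has_class as hypothetical helpers for experimenting rather than as a replacement for a forgiving HTML parser.

```python
import xml.etree.ElementTree as ET


def has_class(element, name):
    # The class attribute is a space-separated list of names
    return name in element.get("class", "").split()


def parse_geo(fragment):
    """Return (latitude, longitude) string pairs for each geo element found."""
    # Wrap the fragment so that it has a single root element
    root = ET.fromstring("<root>" + fragment + "</root>")
    results = []
    for el in root.iter():
        if not has_class(el, "geo"):
            continue
        children = list(el)
        if children:
            # Multiple class approach: nested latitude/longitude elements
            lat = next(c.text for c in children if has_class(c, "latitude"))
            lon = next(c.text for c in children if has_class(c, "longitude"))
        else:
            # Single class approach: "latitude; longitude" as text
            lat, lon = el.text.split(";")
        results.append((lat.strip(), lon.strip()))
    return results


snippet = '''
<span style="display: none" class="geo">
  <span class="latitude">36.166</span>
  <span class="longitude">-86.784</span>
</span>
<span style="display: none" class="geo">36.166; -86.784</span>
'''

coords = parse_geo(snippet)
print(coords)
```

Both forms yield the same pair of strings, which is the point of the convention: a consumer does not need to care which of the two styles a content author preferred.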

Note

A common practice with geo is to hide the information that’s encoded from the user. There are two ways that you might do this with traditional CSS: style="display: none" and style="visibility: hidden". The former removes the element’s placement on the page entirely so that the layout behaves as though it is not there at all. The latter hides the content but reserves the space it takes up on the page.

Example 8-1 illustrates a simple program that parses geo-microformatted data from a Wikipedia page to show how you could extract coordinates from content implementing the geo microformat. Note that Wikipedia’s terms of use define a bot policy that you should review prior to attempting to retrieve any content with scripts such as the following. The gist is that you’ll need to download data archives that Wikipedia periodically updates as opposed to writing bots to pull nontrivial volumes of data from the live site. (It’s fine for us to yank a web page here for educational purposes.)

Warning

As should always be the case, carefully review a website’s terms of service to ensure that any scripts you run against it comply with its latest guidelines.

    import requests # pip install requests
    from BeautifulSoup import BeautifulSoup # pip install BeautifulSoup

    # XXX: Any URL containing a geo microformat...

    URL = 'http://en.wikipedia.org/wiki/Franklin,_Tennessee'

    # In the case of extracting content from Wikipedia, be sure to
    # review its "Bot Policy," which is defined at
    # http://meta.wikimedia.org/wiki/Bot_policy#Unacceptable_usage

    req = requests.get(URL, headers={'User-Agent' : "Mining the Social Web"})
    soup = BeautifulSoup(req.text)

    # Find the first tag of any name that carries the geo class
    geoTag = soup.find(True, 'geo')

    if geoTag and len(geoTag) > 1:
        # Multiple class approach: nested latitude/longitude tags
        lat = geoTag.find(True, 'latitude').string
        lon = geoTag.find(True, 'longitude').string
        print('Location is at %s %s' % (lat, lon))
    elif geoTag and len(geoTag) == 1:
        # Single class approach: "latitude; longitude" as text
        (lat, lon) = geoTag.string.split(';')
        (lat, lon) = (lat.strip(), lon.strip())
        print('Location is at %s %s' % (lat, lon))
    else:
        print('No location found')

The following sample results illustrate that the output is just a set of coordinates, as expected:

Location is at 35.92917 -86.85750

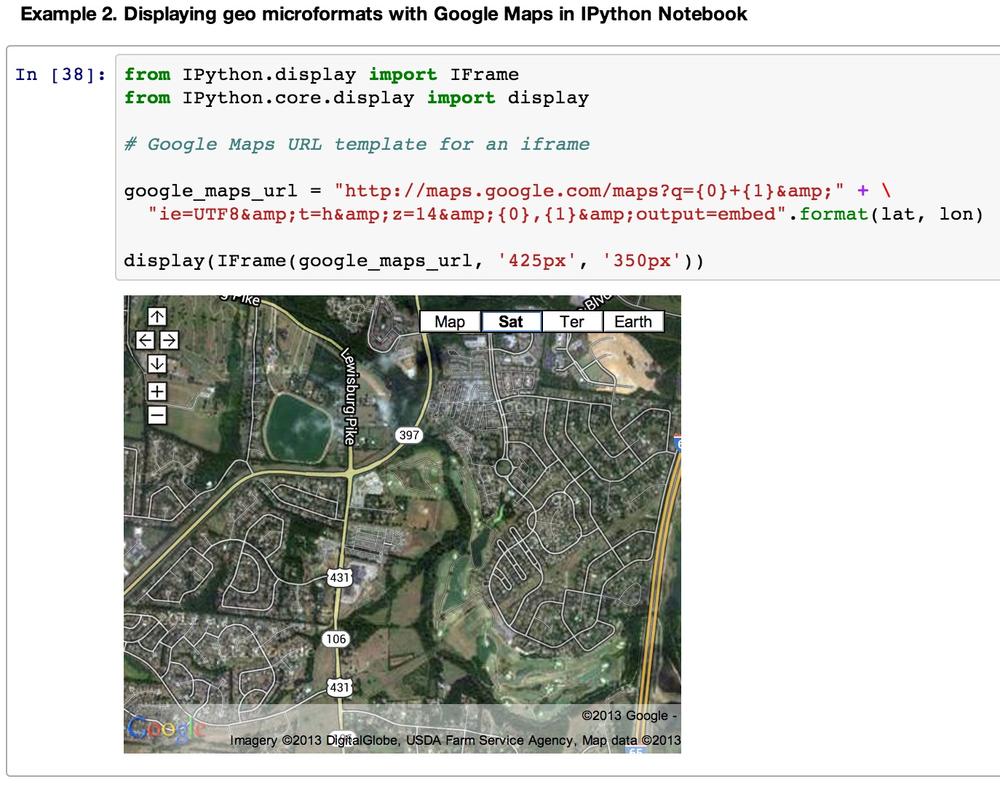

To make the output a little bit more interesting, however, you could display the results directly in IPython Notebook with an inline frame, as shown in Example 8-2.

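    from IPython.display import IFrame
    from IPython.core.display import display

    # Google Maps URL template for an iframe. The parentheses ensure that
    # format() applies to the whole template rather than only to the
    # second string literal.

    google_maps_url = ("http://maps.google.com/maps?q={0}+{1}&"
                       "ie=UTF8&t=h&z=14&{0},{1}&output=embed").format(lat, lon)

    display(IFrame(google_maps_url, '425px', '350px'))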

Sample results after executing this call in IPython Notebook are shown in Figure 8-1.

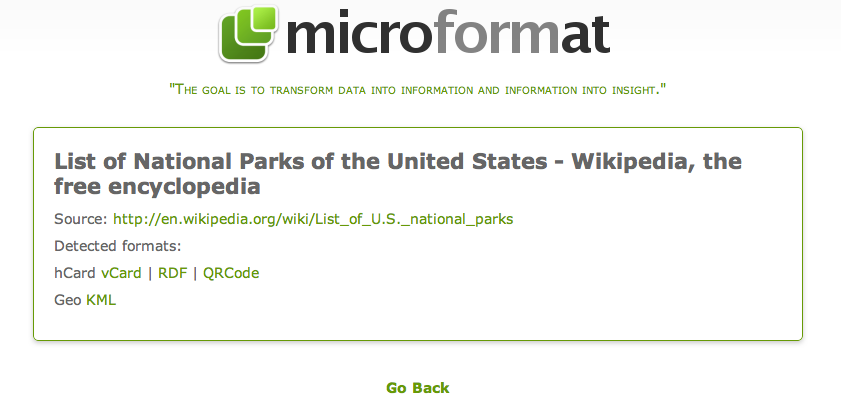

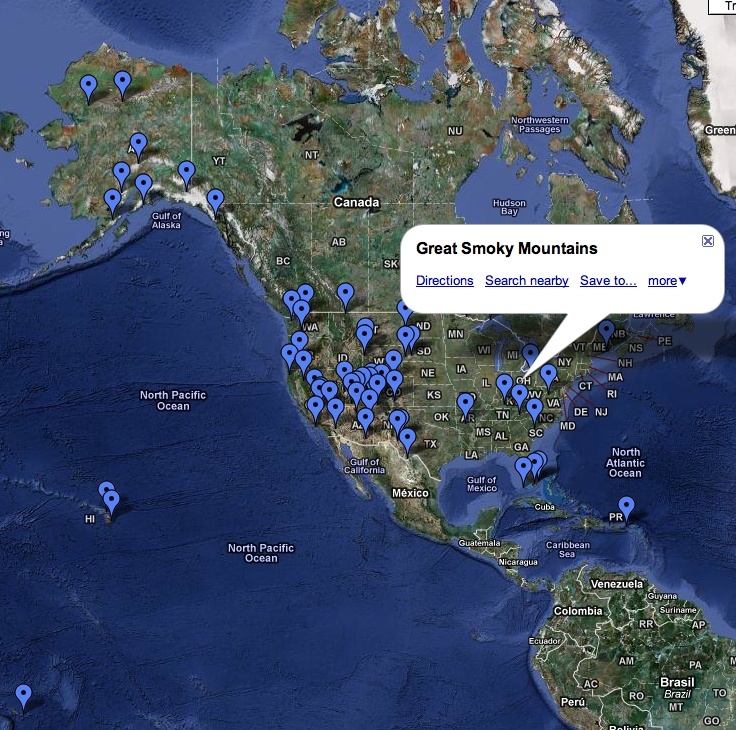

The moment you find a web page with compelling geodata embedded, the first thing you’ll want to do is visualize it. For example, consider the “List of National Parks of the United States” Wikipedia article. It displays a nice tabular view of the national parks and marks them up with geoformatting, but wouldn’t it be nice to quickly load the data into an interactive tool for visual inspection? A terrific little web service called microform.at extracts several types of microformats from a given URL and passes them back in a variety of useful formats. It exposes multiple options for detecting and interacting with microformat data in web pages, as shown in Figure 8-2.

If you’re given the option, KML (Keyhole Markup Language) output is one of the more ubiquitous ways to visualize geodata. You can either download Google Earth and load the KML file locally, or type a URL containing KML data directly into the Google Maps search bar to bring it up without any additional effort required. In the results displayed for microform.at, clicking on the “KML” link triggers a file download that you can use in Google Earth, but you can also copy the link address to the clipboard via a right-click and paste it into Google Maps.
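If you ever need to produce KML yourself from coordinates you have already extracted, a minimal sketch with the standard library might look like the following. The to_kml helper is hypothetical and emits only a bare-bones document with one Placemark per location. The coordinates are the ones shown in the sample output for Example 8-1.

```python
import xml.etree.ElementTree as ET

KML_NS = "http://www.opengis.net/kml/2.2"


def to_kml(places):
    """Build a KML document string from (name, lat, lon) tuples."""
    # Serialize KML elements in the default namespace (no prefix)
    ET.register_namespace("", KML_NS)
    kml = ET.Element("{%s}kml" % KML_NS)
    doc = ET.SubElement(kml, "{%s}Document" % KML_NS)
    for name, lat, lon in places:
        placemark = ET.SubElement(doc, "{%s}Placemark" % KML_NS)
        ET.SubElement(placemark, "{%s}name" % KML_NS).text = name
        point = ET.SubElement(placemark, "{%s}Point" % KML_NS)
        # KML orders coordinates as longitude,latitude
        coords = ET.SubElement(point, "{%s}coordinates" % KML_NS)
        coords.text = "%s,%s" % (lon, lat)
    return ET.tostring(kml, encoding="unicode")


kml_text = to_kml([("Franklin, Tennessee", "35.92917", "-86.85750")])
print(kml_text)
```

Note the ordering inside the coordinates element: KML expects longitude first, which is the reverse of how the geo microformat is usually read aloud and an easy source of markers that land in the wrong hemisphere.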

Figure 8-3 displays the Google Maps visualization of the KML results for the aforementioned Wikipedia article. The URL used is http://microform.at/?type=geo&url=http%3A%2F%2Fen.wikipedia.org%2Fwiki%2FList_of_U.S._national_parks, which is just the base URL http://microform.at with type and url query string parameters.
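A URL of this shape can be assembled programmatically rather than by hand. The sketch below uses the standard library to percent-encode the target page; the microformat_url helper is a hypothetical convenience, and only the type and url parameters shown in the text are assumed to exist.

```python
from urllib.parse import urlencode

BASE_URL = "http://microform.at/"


def microformat_url(page_url, mf_type="geo"):
    """Build a microform.at request URL for the given page and microformat type."""
    # urlencode percent-encodes the page URL so it survives as a parameter value
    return BASE_URL + "?" + urlencode([("type", mf_type), ("url", page_url)])


kml_url = microformat_url(
    "http://en.wikipedia.org/wiki/List_of_U.S._national_parks")
print(kml_url)
```

Encoding the inner URL matters because its own colon and slashes would otherwise be ambiguous inside a query string.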

The ability to start with a Wikipedia article containing semantic markup such as geodata and trivially visualize it is a powerful analytical capability because it delivers insight quickly for so little effort. Browser extensions such as the Firefox Operator add-on aim to minimize the effort even further. Only so much can be said in one chapter, but a neat way to spend an hour or so would be to mash up the national park data from this section with contact information from your LinkedIn professional network to discover how you might be able to have a little bit more fun on your next (possibly contrived) business trip. (See Visualizing geographic clusters with Google Earth for an example of how to harvest and analyze geodata by applying the k-means technique for finding clusters and computing centroids for those clusters.)

Using Recipe Data to Improve Online Matchmaking

Since Google’s rich snippets initiative took off, there’s been an ever-increasing awareness of microformats, and many of the most popular foodie websites have made solid progress in exposing recipes and reviews with hRecipe and hReview. Consider the potential for a fictitious online dating service that crawls blogs and other social hubs, attempting to pair people together for dinner dates. One could reasonably expect that having access to enough geo and hRecipe information linked to specific people would make a profound difference in the “success rate” of first dates.

People could be paired according to two criteria: how close they live to each other and what kinds of foods they eat. For example, you might expect a dinner date between two individuals who prefer to cook vegetarian meals with organic ingredients to go a lot better than a date between a BBQ lover and a vegan. Dining preferences and whether specific types of allergens or organic ingredients are used could be useful clues to power the right business idea. While we won’t be trying to launch a new online dating service, we’ll get the ball rolling in case you decide to take this idea and move forward with it.
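As a rough sketch of how those two criteria might be combined, the following code computes the great-circle distance between two people with the haversine formula and the overlap in their food preferences with the Jaccard index. Everything here is an assumption made for illustration: the people, their preference tags, the 50 km cutoff, and the match_score function itself. The two coordinate pairs are simply the ones that appear earlier in this chapter.

```python
from math import radians, sin, cos, asin, sqrt

EARTH_RADIUS_KM = 6371.0


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometers between two points in degrees."""
    lat1, lon1, lat2, lon2 = map(radians, (lat1, lon1, lat2, lon2))
    a = (sin((lat2 - lat1) / 2) ** 2 +
         cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * asin(sqrt(a))


def jaccard(a, b):
    """Size of the intersection divided by the size of the union."""
    a, b = set(a), set(b)
    return len(a & b) / len(a | b) if (a | b) else 0.0


def match_score(p1, p2, max_km=50.0):
    """Food-preference overlap, or 0.0 if the pair lives too far apart."""
    distance = haversine_km(p1["lat"], p1["lon"], p2["lat"], p2["lon"])
    if distance > max_km:
        return 0.0
    return jaccard(p1["foods"], p2["foods"])


# Hypothetical people with hypothetical preference tags
alice = {"lat": 35.92917, "lon": -86.85750,
         "foods": ["vegetarian", "organic"]}
bob = {"lat": 36.166, "lon": -86.784,
       "foods": ["vegetarian", "organic", "thai"]}
carol = {"lat": 36.166, "lon": -86.784, "foods": ["bbq"]}

print(match_score(alice, bob))
print(match_score(alice, carol))
```

A real service would want something more nuanced than a hard distance cutoff and an unweighted overlap, but even this crude score separates the vegetarian pair from the vegetarian and BBQ pair.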

About.com is one of the more prevalent online sites that’s really embracing microformat initiatives for the betterment of the entire Web, exposing recipe information in the hRecipe microformat and using the hReview microformat for reviews of the recipes; epicurious and many other popular sites have followed suit, due to the benefits afforded by Schema.org initiatives that take advantage of this information for web searches. This section briefly demonstrates how search engines (or you) might parse out the structured data from recipes and reviews contained in web pages for indexing or analyzing. An adaptation of Example 8-1 that parses out hRecipe-formatted data is shown in Example 8-3.

Warning

Although the spec is well defined, microformat implementations may vary subtly. Consider the following code samples that parse web pages to be more of a starting template than a robust, full-spec parser. A microformats parser implemented in Node.js, however, emerged on GitHub in early 2013 and may be worthy of consideration if you are seeking a more robust solution for parsing web pages with microformats.

    import requests
    import json
    import BeautifulSoup

    # Pass in a URL containing hRecipe...

    URL = 'http://britishfood.about.com/od/recipeindex/r/applepie.htm'

    # Parse out some of the pertinent information for a recipe.
    # See http://microformats.org/wiki/hrecipe.

    def parse_hrecipe(url):
        req = requests.get(url)

        soup = BeautifulSoup.BeautifulSoup(req.text)

        hrecipe = soup.find(True, 'hrecipe')

        if hrecipe and len(hrecipe) > 1:
            fn = hrecipe.find(True, 'fn').string
            author = hrecipe.find(True, 'author').find(text=True)
            ingredients = [i.string
                            for i in hrecipe.findAll(True, 'ingredient')
                                if i.string is not None]

            # Instructions may be a mix of nested tags and bare text nodes
            instructions = []
            for i in hrecipe.find(True, 'instructions'):
                if type(i) == BeautifulSoup.Tag:
                    s = ''.join(i.findAll(text=True)).strip()
                elif type(i) == BeautifulSoup.NavigableString:
                    s = i.string.strip()
                else:
                    continue

                if s != '':
                    instructions.append(s)

            return {
                    'name': fn,
                    'author': author,
                    'ingredients': ingredients,
                    'instructions': instructions,
                   }
        else:
            return {}

    recipe = parse_hrecipe(URL)
    print(json.dumps(recipe, indent=4))

For a sample URL such as a popular apple pie recipe, you should get something like the following (abbreviated) results:

    {
        "instructions": [
            "Method",
            "Place the flour, butter and salt into a large clean bowl...",
            "The dough can also be made in a food processor by mixing the flour...",
            "Heat the oven to 425°F/220°C/gas 7.",
            "Meanwhile simmer the apples with the lemon juice and water..."
        ],
        "ingredients": [
            "Pastry",
            "7 oz/200g all purpose/plain flour",
            "pinch of salt",
            "1 stick/ 110g butter, cubed or an equal mix of butter and lard",
            "2-3 tbsp cold water",
            "Filling",
            "1 ½ lbs/700g cooking apples, peeled, cored and quartered",
            "2 tbsp lemon juice",
            "½ cup/ 100g sugar",
            "4 - 6 tbsp cold water",
            "1 level tsp ground cinnamon ",
            "¼ stick/25g butter",
            "Milk to glaze"
        ],
        "name": "\t\t\t\t\t\t\tTraditional Apple Pie Recipe\t\t\t\t\t",
        "author": "Elaine Lemm"
    }

Aside from space and time, food may be the next most fundamental thing that brings people together, and exploring the opportunities for social analysis and data analytics involving people, food, space, and time could really be quite interesting and lucrative. For example, you might analyze variations of the same recipe to see whether there are any correlations between the appearance or lack of certain ingredients and ratings/reviews for the recipes. You could then try to use this as the basis for better reaching a particular target audience with recommendations for products and services, or possibly even for prototyping that dating site that hypothesizes that a successful first date might highly correlate with a successful first meal together.

Pull down a few different apple pie recipes to determine which ingredients are common to all recipes and which are less common. Can you correlate the appearance or lack of different ingredients to a particular geographic region? Do British apple pies typically contain ingredients that apple pies cooked in the southeast United States do not, and vice versa? How might you use food preferences and geographic information to pair people?
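One way to get started on these questions is to count how many recipes each ingredient appears in. The sketch below assumes you have already reduced the free-text ingredient lines (such as "7 oz/200g all purpose/plain flour") to simple names, which is a nontrivial step of its own. The recipe names and ingredient lists are made up for illustration and are not drawn from any real site.

```python
from collections import Counter


def ingredient_frequencies(recipes):
    """Count the number of recipes in which each ingredient appears."""
    counts = Counter()
    for ingredients in recipes.values():
        # Use a set so that an ingredient counts once per recipe
        counts.update(set(ingredients))
    return counts


# Hypothetical, hand-normalized ingredient lists
recipes = {
    "recipe_a": ["flour", "butter", "apples", "sugar", "cinnamon", "lard"],
    "recipe_b": ["flour", "butter", "apples", "sugar", "cinnamon", "nutmeg"],
    "recipe_c": ["flour", "butter", "apples", "sugar", "raisins"],
}

counts = ingredient_frequencies(recipes)
common = sorted(i for i, n in counts.items() if n == len(recipes))
rare = sorted(i for i, n in counts.items() if n == 1)

print(common)
print(rare)
```

Tagging each recipe with a region and grouping the counts by that tag would be a natural next step toward the geographic comparison.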

The next section introduces an additional consideration for constructing an online matchmaking service like the one we’ve discussed.

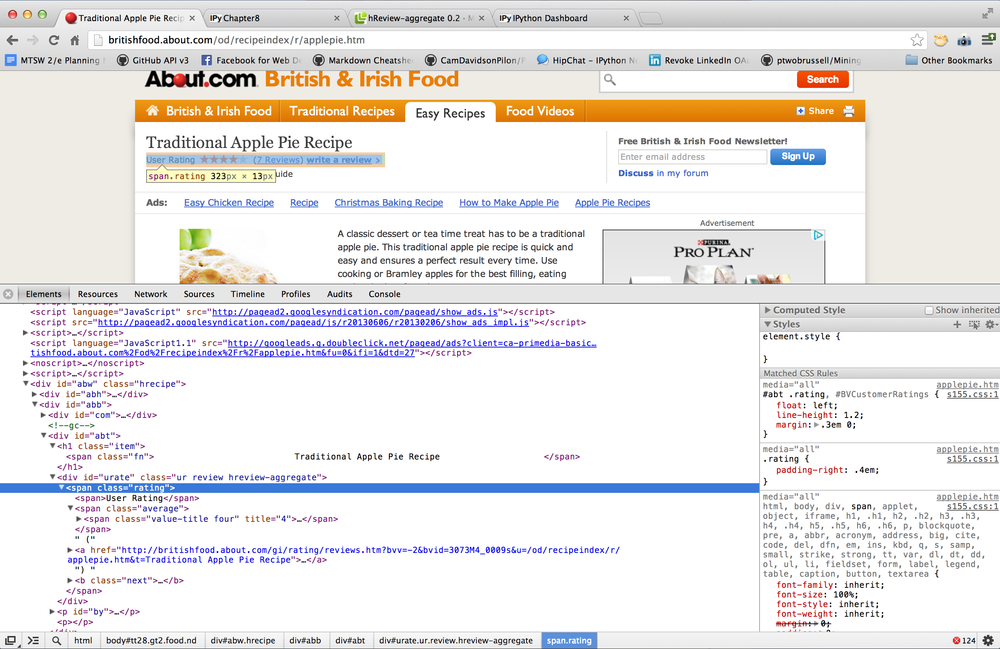

Retrieving recipe reviews

This section concludes our all-too-short survey of microformats by briefly introducing hReview-aggregate, a variation of the hReview microformat that exposes the aggregate rating about something through structured data that’s easily machine parseable. About.com’s recipes implement hReview-aggregate so that the ratings for recipes can be used to prioritize search results and offer a better experience for users of the site. Example 8-4 demonstrates how to extract hReview information.

    import requests
    import json
    from BeautifulSoup import BeautifulSoup

    # Pass in a URL that contains hReview-aggregate info...

    URL = 'http://britishfood.about.com/od/recipeindex/r/applepie.htm'

    def parse_hreview_aggregate(url, item_type):

        req = requests.get(url)

        soup = BeautifulSoup(req.text)

        # Find the hRecipe or whatever other kind of parent item encapsulates
        # the hReview (a required field).

        item_element = soup.find(True, item_type)
        item = item_element.find(True, 'item').find(True, 'fn').text

        # And now parse out the hReview

        hreview = soup.find(True, 'hreview-aggregate')

        # Required field

        rating = hreview.find(True, 'rating').find(True, 'value-title')['title']

        # Optional fields: find() returns None when a tag is missing, so
        # accessing .text raises AttributeError

        try:
            count = hreview.find(True, 'count').text
        except AttributeError: # optional
            count = None
        try:
            votes = hreview.find(True, 'votes').text
        except AttributeError: # optional
            votes = None

        try:
            summary = hreview.find(True, 'summary').text
        except AttributeError: # optional
            summary = None

        return {
            'item': item,
            'rating': rating,
            'count': count,
            'votes': votes,
            'summary' : summary
        }

    # Find hReview aggregate information for an hRecipe

    reviews = parse_hreview_aggregate(URL, 'hrecipe')

    print(json.dumps(reviews, indent=4))

Here are truncated sample results for Example 8-4:

    {
        "count": "7",
        "item": "Traditional Apple Pie Recipe",
        "votes": null,
        "summary": null,
        "rating": "4"
    }
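Notice that the rating and count come back as strings and that optional fields may be missing altogether. If you wanted to use such results to prioritize recipes, a first step might be to normalize the values and sort on them, as in the following sketch. The normalize_review and rank helpers and the two extra records are hypothetical, and the tie-breaking rule (rating first, then number of reviews) is just one plausible choice.

```python
def to_number(value, cast):
    """Convert a scraped string to a number, or None if that isn't possible."""
    try:
        return cast(value)
    except (TypeError, ValueError):
        return None


def normalize_review(review):
    """Keep the item name and convert rating/count to numeric values."""
    return {
        "item": review.get("item"),
        "rating": to_number(review.get("rating"), float),
        "count": to_number(review.get("count"), int),
    }


def rank(reviews):
    """Sort by rating, then by review count, highest first."""
    normalized = [normalize_review(r) for r in reviews]
    return sorted(normalized,
                  key=lambda r: (r["rating"] or 0.0, r["count"] or 0),
                  reverse=True)


reviews = [
    # Shaped like the sample results shown above
    {"item": "Traditional Apple Pie Recipe", "rating": "4", "count": "7",
     "votes": None, "summary": None},
    # Hypothetical records for comparison
    {"item": "Hypothetical Recipe A", "rating": "4.5", "count": "3"},
    {"item": "Hypothetical Recipe B", "rating": "4", "count": None},
]

ranked = rank(reviews)
for r in ranked:
    print(r)
```

Treating a missing count as zero pushes unreviewed items to the bottom of a tie, which seems like a reasonable default when the goal is to surface recipes that many people have vouched for.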

There’s no limit to the innovation that can happen when you combine geeks and food data, as evidenced by the popularity of the much-acclaimed Cooking for Geeks, also from O’Reilly. As the capabilities of food sites evolve to provide additional APIs, so will the innovations that we see in this space. Figure 8-4 displays a screenshot of the underlying HTML source for a sample web page that displays its hReview-aggregate implementation for those who might be interested in viewing the source of a page.

Note

Most modern browsers now implement CSS query selectors natively, and you can use document.querySelectorAll to poke around in the developer console for your particular browser to review microformats in JavaScript. For example, run document.querySelectorAll(".hrecipe") to query for any nodes that have the hrecipe class applied, per the specification.

Accessing LinkedIn’s 200 Million Online Résumés

LinkedIn implements hResume (which itself extensively builds on top of the hCard and hCalendar microformats) for its 200 million users, and this section provides a brief example of the rich data that it makes available for search engines and other machines to consume as structured data. hResume is particularly rich in that you may be able to discover contact information, professional career experience, education, affiliations, and publications, with much of this data being composed as embedded hCalendar and hCard information.

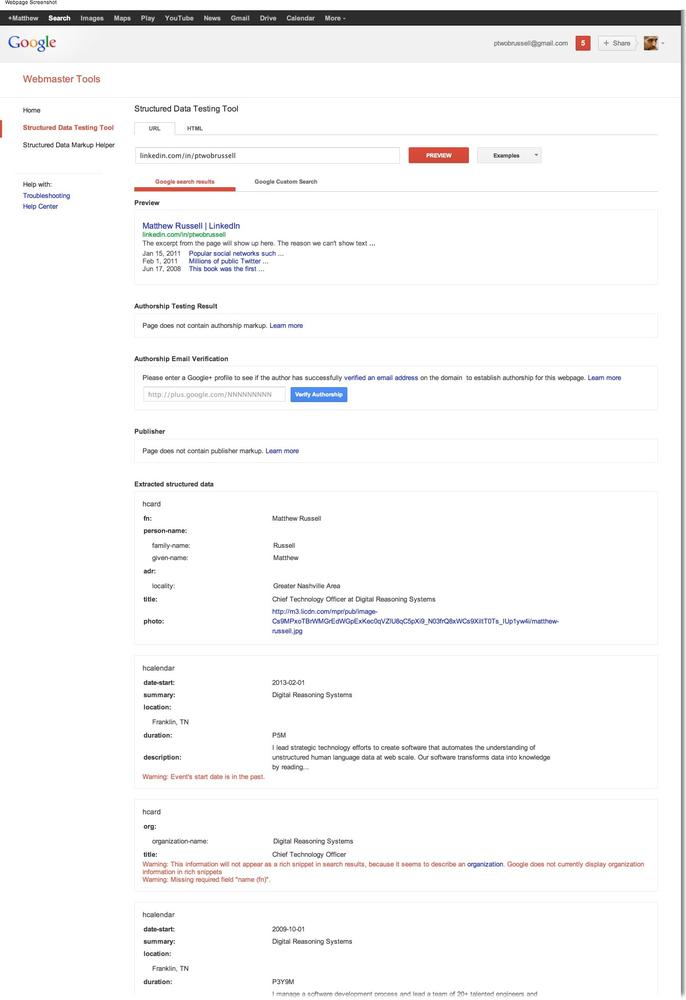

Given that LinkedIn’s implementation is rather extensive and that our scope here is not to write a robust microformats parser for the general case, we’ll opt to take a look at Google’s Structured Data Testing Tool instead of implementing another Python parser in this short section. Google’s tool allows you to plug in any URL, and it’ll extract the semantic markup that it finds and even show you a preview of what Google might display in its search results. Depending on the amount of information in the web page, the results can be extensive. For example, a fairly thorough LinkedIn profile produces multiple screen lengths of results that are broken down by all of the aforementioned fields that are available. LinkedIn’s hResume implementation is thorough, to be sure, as the sample results in Figure 8-5 illustrate. Given the sample code from earlier in this chapter, you’ll have a good starting template to parse out hResume data should you choose to accept this challenge.
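If you do take up the challenge, a reasonable first milestone is pulling the embedded hCard out of an hResume. The sketch below works on a deliberately simplified and hypothetical fragment, assumes well-formed markup so that the standard library's ElementTree can parse it, and looks only at the fn, title, and org properties. Real profiles are far messier and would call for a forgiving parser like the one used in the earlier examples.

```python
import xml.etree.ElementTree as ET


def classes(el):
    # The class attribute is a space-separated list of names
    return el.get("class", "").split()


def first_with_class(root, name):
    """Return the first element at or beneath root carrying the class name."""
    return next((el for el in root.iter() if name in classes(el)), None)


def text_of(el):
    """Concatenate all text beneath an element, or return None."""
    return "".join(el.itertext()).strip() if el is not None else None


def parse_hresume_contact(fragment):
    """Extract a few hCard properties from the contact info of an hResume."""
    root = ET.fromstring(fragment)
    resume = first_with_class(root, "hresume")
    card = first_with_class(resume, "vcard") if resume is not None else None
    if card is None:
        return {}
    return {prop: text_of(first_with_class(card, prop))
            for prop in ("fn", "title", "org")}


# Hypothetical, simplified hResume fragment
snippet = '''
<div class="hresume">
  <div class="contact vcard">
    <span class="fn">Jane Example</span>
    <span class="title">Data Analyst</span>
    <span class="org">Example Corp</span>
  </div>
  <p class="summary">Analyst with an interest in semantic markup.</p>
</div>
'''

contact = parse_hresume_contact(snippet)
print(contact)
```

The same pattern of finding a container by class and then reading properties inside it extends to the experience and education sections, which are expressed with embedded hCalendar events.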

Note

You can use Google’s Structured Data Testing Tool on any arbitrary URL to see what structured data may be tucked beneath the surface. In fact, this would be a good first step to take before implementing a parser, and a good help in debugging your implementation, which is part of the underlying intention behind the tool.

From Semantic Markup to Semantic Web: A Brief Interlude

To bring the discussion back full circle before transitioning into coverage of the semantic web, let’s briefly reflect on what we’ve explored so far. Basically, we’ve learned that there are active initiatives under way that aim to make it possible for machines to extract the structured content in web pages for many ubiquitous things such as résumés, recipes, and geocoordinates. Content authors can feed search engines with machine-readable data that can be used to enhance relevance rankings, provide more informative displays to users, and otherwise reach consumers in increasingly useful ways right now. The last two words of the previous sentence are important, because the grandiose vision of a semantic web, which tends to be defined in a somewhat ungrounded manner, and the individual initiatives that might get us there are two quite different things.

For all of the limitations with microformats, the reality is that they are one important step in the Web’s evolution. Content authors and consumers are both benefitting from them, and the heightened awareness of both parties is likely to lead to additional evolutions that will surely manifest in even greater things to come.

Although it is quite reasonable to question exactly how scalable this approach is for the longer haul, it is serving a relevant purpose for the current Web as we know it, and we should be grateful that corporate politicos and Open Web advocates have been able to cooperate to the point that the Web continues to evolve in a healthy direction. However, it’s quite all right if you are not satisfied with the idea that the future of the Web might depend on small armies of content providers carefully publishing metadata in pages (or writing finely tuned scripts to publish metadata in pages) so that machines can better understand them. It will take many more years to see the fruition of it all, but keep in mind from previous chapters that technologies such as natural language processing (NLP) continue to receive increasing amounts of attention from academia and industry alike.

Eventually, through the gradual evolution of technologies that can understand human language, we’ll one day realize a Web filled with robots that can understand human language data in the context in which it is used, and in nonsuperficial ways. In the meantime, contributing efforts of any kind are necessary and important for the Web’s continued growth and betterment. A Web in which bots are able to consume human language data that is not laden with gobs of structured metadata that describe it and to effectively coerce it into the kind of structured data that can be reasoned over is one we should all be excited about, but unfortunately, it doesn’t exist yet. Hence, it’s not difficult to make a strong case that the automated understanding of natural language data is among the worthiest problems of the present time, given the enormous impact it could have on virtually all aspects of life.

The Semantic Web: An Evolutionary Revolution

Semantic web can mean different things to different people, so let’s start out by dissecting the term. Given that the Web is all about sharing information and that a working definition of semantics is “enough meaning to result in an action,”[29] it seems reasonable to assert that the semantic web is generally about representing knowledge in a meaningful way—but for whom to consume? Let’s take what may seem like a giant leap of faith and not assume that it’s a human who is consuming the information that’s represented. Let’s instead consider the possibilities that could be realized if information were shared in a fully machine-understandable way—a way that is unambiguous enough that a reasonably sophisticated user agent like a web robot could extract, interpret, and use the information to make important decisions.

Some steps have been made in this direction: for instance, we discussed how microformats already make this possible for limited contexts earlier in this chapter, and in Chapter 2 we looked at how Facebook is aggressively bootstrapping an explicit graph construct into the Web with its Open Graph protocol (see Understanding the Open Graph Protocol). It may be helpful to reflect on how we’ve arrived at this point.

The Internet is just a network of networks,[30] and what’s fascinating about it from a technical standpoint is how layers of increasingly higher-level protocols build on top of lower-level protocols to ultimately produce a fault-tolerant worldwide computing infrastructure. In our online activity, we rely on dozens of protocols every single day, without even thinking about it. However, there is one ubiquitous protocol that is hard not to think about explicitly from time to time: HTTP, the prefix of just about every URL that you type into your browser and the enabling protocol for the extensive universe of hypertext documents (HTML pages) and the links that glue them all together into what we know as the Web. But as you’ve known for a long time, the Web isn’t just about hypertext; it includes various embedded technologies such as JavaScript, Flash, and emerging HTML5 assets such as audio and video streams.

The notion of a cyberworld of documents, platforms, and applications that we can interact with via modern-day browsers (including ones on mobile or tablet devices) over HTTP is admittedly fuzzy, but it’s probably pretty close to what most people think of when they hear the term “the Web.” To a degree, the motivation behind the Web 2.0 idea that emerged back in 2004 was to more precisely define the increasingly blurry notion of exactly what the Web was and what it was becoming. Along those lines, some folks think of the Web as it existed from its inception until the present era of highly interactive web applications and user collaboration as Web 1.0, the era of emergent rich Internet applications (RIAs) and collaboration as Web 2.x, and the era of semantic karma that’s yet to come as Web 3.0 (see Table 8-2).

At present, there’s no real consensus about what Web 3.0 really means, but most discussions of the subject generally include the phrase semantic web and the notion of information being consumed and acted upon by machines in ways that are not yet possible at web scale. It’s still difficult for machines to extract and make inferences about the facts contained in documents available online. Keyword searching and heuristics can certainly provide listings of relevant search results, but human intelligence is still required to interpret and synthesize the information in the documents themselves. Whether Web 3.0 and the semantic web are really the same thing is open for debate; however, it’s generally accepted that the term semantic web refers to a web that’s much like the one we already know and love, but that has evolved to the point where machines can extract and act on the information contained in documents at a granular level.

In that regard, we can look back on the movement with microformats and see how that kind of evolutionary progress really could one day become revolutionary.

| Manifestation/era | Characteristics |
| --- | --- |
| Internet | Application protocols such as SMTP, FTP, BitTorrent, HTTP, etc. |
| Web 1.0 | Mostly static HTML pages and hyperlinks |
| Web 2.0 | Platforms, collaboration, rich user experiences |
| Social web (Web 2.x) | People and their virtual and real-world social connections and activities |
| Semantically marked-up web (Web 2.x) | Increasing amounts of machine-readable content such as microformats, RDFa, and microdata |
| Web 3.0 (the semantic web) | Prolific amounts of machine-understandable content |

Man Cannot Live on Facts Alone

The semantic web’s fundamental construct for representing knowledge is called a triple, which is a highly intuitive and natural way of expressing a fact. As an example, the sentence we’ve considered on many previous occasions—“Mr. Green killed Colonel Mustard in the study with the candlestick”—expressed as a triple might be something like (Mr. Green, killed, Colonel Mustard), where the constituent pieces of that triple refer to the subject, predicate, and object of the sentence.
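To make the idea of a triple concrete, here is a minimal sketch in plain Python that stores a few triples as tuples and answers a question by pattern matching. The predicate names (`usedWeapon`, `wasIn`) and the way the sentence is decomposed are assumptions made purely for illustration; they do not come from any standard vocabulary.

```python
from typing import List, Optional, Tuple

Triple = Tuple[str, str, str]

# Hypothetical decomposition of the example sentence into triples.
# The predicate names are invented for this sketch.
TRIPLES: List[Triple] = [
    ("MrGreen", "killed", "ColonelMustard"),
    ("MrGreen", "usedWeapon", "Candlestick"),
    ("MrGreen", "wasIn", "Study"),
]


def match(triples: List[Triple],
          subject: Optional[str] = None,
          predicate: Optional[str] = None,
          obj: Optional[str] = None) -> List[Triple]:
    """Return the triples that agree with every argument that is not None."""
    return [
        (s, p, o)
        for (s, p, o) in triples
        if subject in (None, s) and predicate in (None, p) and obj in (None, o)
    ]


# Who killed Colonel Mustard?
killers = [s for (s, _, _) in match(TRIPLES, predicate="killed", obj="ColonelMustard")]
print(killers)  # ['MrGreen']
```

Leaving an argument as `None` acts as a wildcard, which is roughly how pattern-based queries over triples tend to be phrased.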

The Resource Description Framework (RDF) is the semantic web’s model for defining and enabling the exchange of triples. RDF is highly extensible in that while it provides a basic foundation for expressing knowledge, it can also be used to define specialized vocabularies called ontologies that provide precise semantics for modeling specific domains. More than a passing mention of specific semantic web technologies such as RDF, RDFa, RDF Schema, and OWL would be well out of scope here at the eleventh hour, but we will work through a high-level example that attempts to provide some context for the semantic web in general.

As you read through the remainder of this section, keep in mind that RDF is just a way to express knowledge. It may have been manufactured by a nontrivial amount of human labor that has interlaced valuable metadata into web pages, or by a small army of robots (such as web agents) that perform natural language processing and automatically extract tuples of information from human language data. Regardless of the means involved, RDF provides a basis for modeling knowledge, and it lends itself to naturally being expressed as a graph that can be inferenced upon for the purposes of answering questions. We’ll illustrate this idea with some working code in the next section.
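As a rough illustration of the graph view, the following sketch turns a handful of triples into an adjacency structure and searches it for a chain of facts linking two resources. The triples and the helper names (`build_graph`, `find_path`) are hypothetical, and a breadth-first search is only a stand-in for the richer reasoning a real RDF toolkit provides.

```python
from collections import defaultdict, deque

# Hypothetical triples; subjects, predicates, and objects are illustrative only.
TRIPLES = [
    ("MrGreen", "killed", "ColonelMustard"),
    ("ColonelMustard", "owned", "Candlestick"),
    ("Candlestick", "foundIn", "Study"),
]


def build_graph(triples):
    """Map each subject to a list of (predicate, object) outgoing edges."""
    graph = defaultdict(list)
    for s, p, o in triples:
        graph[s].append((p, o))
    return graph


def find_path(graph, start, goal):
    """Breadth-first search; return the triples linking start to goal, or None."""
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for p, o in graph.get(node, []):
            if o not in seen:
                seen.add(o)
                queue.append((o, path + [(node, p, o)]))
    return None


graph = build_graph(TRIPLES)
path = find_path(graph, "MrGreen", "Study")
print(path)
```

Each triple becomes a labeled, directed edge, so a question such as "how is Mr. Green connected to the study?" reduces to finding a path through the graph.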

Open-world versus closed-world assumptions

One interesting difference between inference in logic programming languages such as Prolog[31] and inference in other technologies, such as the RDF stack, is whether the system makes open-world or closed-world assumptions about the universe. Logic programming languages such as Prolog and most traditional database systems assume a closed world, while RDF technology generally assumes an open world.

In a closed world, everything that you haven’t been explicitly told about the universe should be considered false, whereas in an open world, everything you don’t know is arguably more appropriately handled as being undefined (another way of saying “unknown”). The distinction is that reasoners who assume an open world will not rule out interpretations that include facts that are not explicitly stated in a knowledge base, whereas reasoners who assume the closed world of the Prolog programming language or most database systems will rule out such facts. Furthermore, in a system that assumes a closed world, merging contradictory knowledge would generally trigger an error, while a system assuming an open world may try to make new inferences that somehow reconcile the contradictory information. As you might imagine, open-world systems are quite flexible and can lead to some conundrums; the potential can become especially pronounced when disparate knowledge bases are merged.
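The difference can be sketched with two tiny query functions over the same set of facts. This is a simplification under stated assumptions: the function names are hypothetical, the "Miss Scarlet" triple is invented for the example, and Python's `None` stands in for "unknown."

```python
from typing import Optional, Set, Tuple

Triple = Tuple[str, str, str]

# A one-fact knowledge base.
KNOWN: Set[Triple] = {("MrGreen", "killed", "ColonelMustard")}


def ask_closed_world(kb: Set[Triple], triple: Triple) -> bool:
    """Closed world: anything that is not stated is treated as false."""
    return triple in kb


def ask_open_world(kb: Set[Triple], triple: Triple) -> Optional[bool]:
    """Open world: anything that is not stated is unknown (None), not false."""
    return True if triple in kb else None


# A hypothetical fact that the knowledge base says nothing about.
question = ("MrGreen", "killed", "MissScarlet")
print(ask_closed_world(KNOWN, question))  # False
print(ask_open_world(KNOWN, question))    # None
```

Under the closed-world reading, the missing triple is a definite "no"; under the open-world reading, the system simply declines to conclude anything.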

Intuitively, you might think of it like this: systems predicated upon closed-world reasoning assume that the data they are given is complete, and it is typically not the case that every previous fact (explicit or inferred) will still hold when new ones are added. In contrast, open-world systems make no such assumption about the completeness of their data and are monotonic. As you might imagine, there is substantial debate about the merits of making one assumption versus the other. As someone interested in the semantic web, you should at least be aware of the issue. As the matter specifically relates to RDF, official guidance from the W3C documentation states:[32]

To facilitate operation at Internet scale, RDF is an open-world framework that allows anyone to make statements about any resource. In general, it is not assumed that complete information about any resource is available. RDF does not prevent anyone from making assertions that are nonsensical or inconsistent with other statements, or the world as people see it. Designers of applications that use RDF should be aware of this and may design their applications to tolerate incomplete or inconsistent sources of information.

You might also check out Peter Patel-Schneider and Ian Horrocks’s “Position Paper: A Comparison of Two Modelling Paradigms in the Semantic Web” if you’re interested in pursuing this topic further. Whether or not you decide to dive into this topic right now, keep in mind that the data that’s available on the Web is incomplete, and that making a closed-world assumption (i.e., considering all unknown information emphatically false) will entail severe consequences sooner rather than later.

Inferencing About an Open World

Foundational languages such as RDF Schema and OWL are designed so that precise vocabularies can be used to express facts such as the triple (Mr. Green, killed, Colonel Mustard) in a machine-readable way, and this is a necessary (but not sufficient) condition for the semantic web to be fully realized. Generally speaking, once you have a set of facts, the next step is to perform inference over the facts and draw conclusions that follow from the facts. The concept of formal inference dates back to at least ancient Greece with Aristotle’s syllogisms, and the obvious connection to how machines can take advantage of it has not gone unnoticed by researchers interested in artificial intelligence for the past 50 or so years. The Java-based landscape that’s filled with enterprise-level options such as Jena and Sesame certainly seems to be where most of the heavyweight action resides, but fortunately, we do have a couple of solid options to work with in Python.

One of the best Pythonic options capable of inference that you’re likely to encounter is FuXi. FuXi is a powerful logic-reasoning system for the semantic web that uses a technique called forward chaining to deduce new information from existing information by starting with a set of facts, deriving new facts from the known facts by applying a set of logical rules, and repeating this process until a particular conclusion can be proved or disproved, or there are no more new facts to derive. The kind of forward chaining that FuXi delivers is said to be both sound (because any new facts that are produced are true) and complete (because any facts that are true can eventually be proven). A full-blown discussion of propositional and first-order logic could easily fill a book; if you’re interested in digging deeper, the now-classic textbook Artificial Intelligence: A Modern Approach, by Stuart Russell and Peter Norvig (Prentice Hall), is probably the most comprehensive resource.

To demonstrate the kinds of inferencing capabilities a system such as FuXi can provide, let’s consider the famous example of Aristotle’s syllogism[33] in which you are given a knowledge base that contains the facts “Socrates is a man” and “All men are mortal,” which allows you to deduce that “Socrates is mortal.” While this problem may seem too trivial, keep in mind that the deterministic algorithms that produce the new fact that “Socrates is mortal” work the same way when there are significantly more facts available—and those new facts may produce additional new facts, which produce additional new facts, and so on. For example, consider a slightly more complex knowledge base containing a few additional facts:

Socrates is a man.

All men are mortal.

Only gods live on Mt. Olympus.

All mortals drink whisky.

Chuck Norris lives on Mt. Olympus.

If presented with the given knowledge base and then posed the question, “Does Socrates drink whisky?” you must first infer an intermediate fact before you can definitively answer the question: you would have to deduce that “Socrates is mortal” before you could conclusively affirm the follow-on fact that “Socrates drinks whisky.” To illustrate how all of this would work in code, consider the same knowledge base now expressed in Notation3 (N3), a simple yet powerful syntax that expresses facts and rules in RDF, as shown here:

#Assign a namespace for logic predicates

@prefix log: <http://www.w3.org/2000/10/swap/log#> .

#Assign a namespace for the vocabulary defined in this document

@prefix : <MiningTheSocialWeb#> .

#Socrates is a man

:Socrates a :Man.

@forAll :x .

#All men are mortal: Man(x) => Mortal(x)

{ :x a :Man } log:implies { :x a :Mortal } .

#Only gods live at Mt Olympus: Lives(x, MtOlympus) <=> God(x)

{ :x :lives :MtOlympus } log:implies { :x a :god } .

{ :x a :god } log:implies { :x :lives :MtOlympus } .

#All mortals drink whisky: Mortal(x) => Drinks(x, whisky)

{ :x a :Mortal } log:implies { :x :drinks :whisky } .

#Chuck Norris lives at Mt Olympus: Lives(ChuckNorris, MtOlympus)

:ChuckNorris :lives :MtOlympus .

While there are many different formats for expressing RDF, many semantic web tools choose N3 because its readability and expressiveness make it accessible. Skimming down the file, we see some namespaces that are set up to ground the symbols in the vocabulary that is used, and a few assertions that were previously mentioned. Let’s see what happens when you run FuXi from the command line and tell it to parse the facts from the sample knowledge base that was just introduced and to accumulate additional facts about it with the following command:

$ FuXi --rules=chuck-norris.n3 --ruleFacts --naive

Note

If you are installing FuXi on your own machine for the first time, your simplest and quickest option for installation may be to follow these instructions. Of course, if you are following along with the IPython Notebook as part of the virtual machine experience for this book, this installation dependency (like all others) is already taken care of for you, and the sample code in the corresponding IPython Notebook for this chapter should “just work.”

You should see output similar to the following if you run FuXi from the command line against a file named chuck-norris.n3 containing the preceding N3 knowledge base:

('Parsing RDF facts from ', 'chuck-norris.n3')

('Time to calculate closure on working memory: ', '1.66392326355 milli seconds')

<Network: 3 rules, 6 nodes, 3 tokens in working memory, 3 inferred tokens>

@prefix : <file:///.../ipynb/resources/ch08-semanticweb/MiningTheSocialWeb#> .

@prefix iw: <http://inferenceweb.stanford.edu/2004/07/iw.owl#> .

@prefix log: <http://www.w3.org/2000/10/swap/log#> .

@prefix rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#> .

@prefix rdfs: <http://www.w3.org/2000/01/rdf-schema#> .

@prefix skolem: <http://code.google.com/p/python-dlp/wiki/SkolemTerm#> .

@prefix xml: <http://www.w3.org/XML/1998/namespace> .

@prefix xsd: <http://www.w3.org/2001/XMLSchema#> .

:ChuckNorris a :god .

:Socrates a :Mortal ;

:drinks :whisky .

The output of the program tells us a few things that weren’t explicitly stated in the initial knowledge base:

Chuck Norris is a god.

Socrates is a mortal.

Socrates drinks whisky.

Although deriving these facts may seem obvious to most human beings, it’s quite another story for a machine to have derived them—and that’s what makes things exciting. Also keep in mind that the facts that are given or deduced obviously don’t need to make sense in the world as we know it in order to be logically inferred from the initial information contained in the knowledge base.
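For readers who want to see the mechanics without installing anything, the following is a minimal sketch of naive forward chaining in plain Python over the same knowledge base as stated in prose. It is not FuXi's implementation and makes no claim about how FuXi works internally. The rule encoding is an assumption made for brevity: each rule has a single premise and a single conclusion, and the only variable is the subject, written `?x`.

```python
# Facts are (subject, predicate, object) tuples; "a" means "is a".
FACTS = {
    ("Socrates", "a", "Man"),
    ("ChuckNorris", "lives", "MtOlympus"),
}

# Each rule is (premise, conclusion). The subject of both is the variable ?x.
RULES = [
    (("?x", "a", "Man"), ("?x", "a", "Mortal")),
    (("?x", "lives", "MtOlympus"), ("?x", "a", "god")),
    (("?x", "a", "god"), ("?x", "lives", "MtOlympus")),
    (("?x", "a", "Mortal"), ("?x", "drinks", "whisky")),
]


def forward_chain(facts, rules):
    """Apply every rule to every known fact until no new facts appear."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for (_, prem_p, prem_o), (_, concl_p, concl_o) in rules:
            # Iterate over a snapshot because the set grows during the pass.
            for (s, p, o) in list(known):
                if (p, o) == (prem_p, prem_o):
                    new_fact = (s, concl_p, concl_o)
                    if new_fact not in known:
                        known.add(new_fact)
                        changed = True
    return known


inferred = forward_chain(FACTS, RULES) - FACTS
for fact in sorted(inferred):
    print(fact)
# ('ChuckNorris', 'a', 'god')
# ('Socrates', 'a', 'Mortal')
# ('Socrates', 'drinks', 'whisky')
```

The loop stops once a full pass adds nothing new, which is the "no more new facts to derive" condition described earlier. Note that "Socrates drinks whisky" only appears after "Socrates is a mortal" has been derived, so the intermediate fact really is required.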

Warning

Careless assertions about Chuck Norris (even in an educational context involving a fictitious universe) could prove harmful to your computer’s health, or possibly even your own health.[34]

If this simple example excites you, by all means, dig further into FuXi and the potential the semantic web holds. The example that was just provided barely scratches the surface of what it is capable of doing. There are numerous data sets available for mining, and vast technology toolchains that are a part of an entirely new realm of exciting technologies that you may not have previously encountered. The semantic web is arguably a much more advanced and complex topic than the social web, and investigating it is certainly a worthy pursuit—especially if you’re excited about the possibilities that inference brings to social data. It seems pretty clear that the future of the semantic web is largely undergirded by social data and many, many evolutions of technology involving social data along the way. Whether or not the semantic web is a journey or a destination, however, is up to us.

Closing Remarks

This chapter has been an attempt to entertain you with a sort of cocktail discussion about the somewhat slippery topic of the semantic web. Volumes could be written on the history of the Web, various visions for the semantic web, and competing philosophies around all of the technologies involved; alas, this chapter has been a mere sketch intended to pique your interest and introduce you to some of the fundamentals that you can pursue as part of your ongoing professional and entrepreneurial endeavors.

If you take nothing else from this chapter, remember that microformats are a way of decorating markup with metadata to expose specific types of structured information such as recipes, contact information, and other kinds of ubiquitous data. Microformats have vast potential to further the vision for the semantic web because they allow content publishers to take existing content and ensure that it conforms to a shared standard that can be understood and reasoned over by machines. Expect to see significant growth and interest in microformats in the months and years to come. Initiatives by communities of practice such as the microformats and IndieWeb communities are also notable and worth following.

While the intentional omission of semantic web discussion prior to this chapter may have created the impression of an arbitrary and rigid divide between the social and semantic webs, the divide is actually quite blurry and constantly in flux. It is likely that the combination of the undeniable proliferation of social data on the Web; initiatives such as the microformats published by parties ranging from Wikipedia to About.com, LinkedIn, Facebook’s Open Graph protocol, and the open standards communities; and the creative efforts of data hackers is greatly accelerating the realization of a semantic web that may not be all that different from the one that’s been hyped for the past 20 years.

The proliferation of social data has the potential to be a great catalyst for the development of a semantic web that will enable agents to take nontrivial actions on our behalf. We’re not there yet, but be hopeful and heed the wise words of Epicurus, who perhaps said it best: “Don’t spoil what you have by desiring what you don’t have; but remember that what you now have was once among the things only hoped for.”

Note

The source code outlined for this chapter and all other chapters is available at GitHub in a convenient IPython Notebook format that you’re highly encouraged to try out from the comfort of your own web browser.

Recommended Exercises

Go hang out on the #microformats IRC (Internet Relay Chat) channel and become involved in its community.

Watch some excellent video slides that present an overview on microformats by Tantek Çelik, a microformats community leader.

Review the examples in the wild on the microformats wiki and consider making some of your own contributions.

Explore some websites that implement microformats and poke around in the HTML source of the pages with your browser’s developer console and the built-in document.querySelectorAll function.

Review and follow along with a more comprehensive FuXi tutorial online.

Review RelMeAuth and attempt to implement and use web sign-in. You might find the Python-based GitHub project relmeauth helpful as a starting point.

Take a look at PaySwarm, an open initiative for web payments. (It’s somewhat off topic, but an important open web initiative involving money, which is an inherently social entity.)

Begin a survey of the semantic web as part of an independent research project.

Take a look at DBPedia, an initiative that extracts structured data from Wikipedia for purposes of semantic reasoning. What can you do with FuXi and DBPedia data?

Online Resources

The following list of links from this chapter may be useful for review:

[29] As defined in Programming the Semantic Web (O’Reilly).

[30] Inter-net literally implies “mutual or cooperating networks.”

[31] You’re highly encouraged to check out a bona fide logic-based programming language like Prolog that’s written in a paradigm designed specifically so that you can represent knowledge and deduce new information from existing facts. GNU Prolog is a fine place to start.

[33] In modern parlance, a syllogism is more commonly called an implication.
