Chapter 4. Working with .NET Components

Having seen the language-integration examples in the previous chapter, you now know that all .NET assemblies are essentially binary components.[1] You can treat each .NET assembly as a component that you can plug into another component or application, without the need for source code, since all the metadata for the component is stored inside the .NET assembly. While you have to write a ton of plumbing code to build a component in COM, creating a component in .NET involves no extra work, as all .NET assemblies are components by nature.

In this chapter, we examine the more advanced topics, including component deployment, distributed components, and enterprise services, such as transaction management, object pooling, role-based security, and message queuing.

Deployment Options

For a simple program like hello.exe that we built in Chapter 2, deployment is easy: copy the assembly into a directory, and it’s ready to run. When you want to uninstall it, remove the file from the directory. However, when you want to share components with other applications, you’ve got to do some work.

In COM, you must store activation and marshaling[2] information in the registry for components to interoperate; as a result, any COM developer can discuss at length the pain and suffering inherent in COM and the system registry. In .NET, the system registry is no longer necessary for component integration.

In the .NET environment, components can be private, meaning that they are unpublished and used by known clients, or shared, meaning that they are published and can be used by any clients. This section discusses several options for deploying private and shared components.

Private Components

If you have private components that are used only by specific clients, you have two deployment options. You can store the private components and the clients that use these components in the same directory, or you can store the components in a component-specific directory that the client can access. Since these clients use the exact private components that they referenced at build time, the CLR doesn’t support version checking or enforce version policies on private components.

To install your applications in either of these cases, perform a simple xcopy of your application files from the source installation directory to the destination directory. When you want to remove the application, remove these directories. You don’t have to write code to store information into the registry, so there’s no worrying about whether you’ve missed inserting a registry setting for correct application execution. In addition, because nothing is stored in the registry, you don’t have to worry about registry residues.

One-directory deployment

For simplicity, you can place supporting assemblies in the same directory as the client application. For example, in Chapter 3, we placed the vehicle, car, and plane assemblies in the same directory as the client application, drive.exe. Since both the client application and supporting assemblies are stored within the same directory, the CLR has no problem resolving this reference at runtime (i.e., find and load plane.dll and activate the Plane class). If you move any of the DLLs to a different directory (e.g., put vehicle.dll in c:\temp), you will get an exception when you execute drive.exe. This is because the CLR looks for the vehicle assembly in the following order:

1. It looks for a file called vehicle.dll within the same directory as drive.exe.

2. Assuming that the CLR hasn’t found vehicle.dll, it looks for vehicle.dll in a subdirectory with the same name as the assembly name (i.e., vehicle).

3. Assuming that the CLR hasn’t found the vehicle assembly, it looks for a file called vehicle.exe in the same directory as drive.exe.

4. Assuming that the CLR hasn’t found vehicle.exe, it looks for vehicle.exe in a subdirectory with the same name as the assembly name (i.e., vehicle).

5. At this point, the CLR throws an exception, indicating that it has failed to find the vehicle assembly.

The search for other supporting assemblies, such as car and plane, follows the same order. In steps 2 and 4, the CLR looks for the supporting assemblies in specific subdirectories. Let’s investigate an example of this in the following section, which we’ve called multiple-directory deployment.
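The search order described above can be sketched in a few lines of C#. This is only an illustration of the order in which candidate files are tried, not the CLR's actual loader code; the ProbeSketch class and its method names are hypothetical, and the sketch is written in current C# rather than the 1.x dialect used elsewhere in this chapter.

```csharp
using System;
using System.Collections.Generic;
using System.IO;

public static class ProbeSketch
{
    // Lists the candidate paths in the order described in the text:
    // name.dll, name\name.dll, name.exe, name\name.exe.
    public static List<string> CandidatePaths(string appDir, string assemblyName)
    {
        var candidates = new List<string>();
        foreach (string ext in new[] { ".dll", ".exe" })
        {
            // Same directory as the client application
            candidates.Add(Path.Combine(appDir, assemblyName + ext));
            // Subdirectory named after the assembly
            candidates.Add(Path.Combine(appDir, assemblyName, assemblyName + ext));
        }
        return candidates;
    }

    // Returns the first candidate that exists on disk, or null if none does
    // (the point at which the CLR would throw an exception).
    public static string Resolve(string appDir, string assemblyName)
    {
        foreach (string path in CandidatePaths(appDir, assemblyName))
        {
            if (File.Exists(path)) return path;
        }
        return null;
    }
}
```

Calling ProbeSketch.Resolve with the directory that holds drive.exe and the name vehicle returns the first of the four candidate paths that exists, which makes it easy to see why moving vehicle.dll to an unrelated directory such as c:\temp leaves nothing for the search to find.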

Multiple-directory deployment

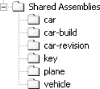

Instead of storing all assemblies in the same directory as your client application, you can also use multiple, private subdirectories to segregate your assemblies so that they are easier to find and manage. For example, we will separate the vehicle, car, and plane assemblies into their own private directories, as shown in Figure 4-1. We will leave the drive.exe application in the top directory, MultiDirectories.

When you build the vehicle assembly, you don’t have to do anything special, as it doesn’t reference or use any third-party assemblies. However, when you build the car or plane assembly, you must refer to the correct vehicle component (i.e., the one in the vehicle directory). For example, to build the plane assembly successfully, you must explicitly refer to vehicle.dll using a specific or relative path, as shown in the following command (cd to the plane directory):

csc /r:..\vehicle\vehicle.dll /t:library /out:plane.dll plane.cs

You can build the car assembly the same way you build the plane assembly. To compile your client application, you must also refer to your dependencies using the correct paths (cd to the main directory, MultiDirectories, before you type this command all on one line):

vjc /r:vehicle\vehicle.dll;car\car.dll;plane\plane.dll /t:exe /out:drive.exe drive.jsl

Based on the previously discussed search algorithm, the CLR can find the supporting assemblies within the appropriate subdirectories.

Shared Components

Unlike application-private assemblies, shared assemblies—ones that can be used by any client application—must be published or registered in the system Global Assembly Cache (GAC). When you register your assemblies against the GAC, they act as system components, such as a system DLL that every process in the system can use. A prerequisite for GAC registration is that the component must possess originator and version information. In addition to other metadata, these two items allow multiple versions of the same component to be registered and executed on the same machine. Again, unlike COM, you don’t have to store any information in the system registry for clients to use these shared assemblies.

There are three general steps to registering your shared assemblies against the GAC:

1. Use the Shared Name (sn.exe) utility to obtain a public/private key pair. This utility generates a random key pair for you and saves the key information in an output file—for example, originator.key.

2. Build your assembly with an assembly version number and the key information from originator.key.

3. Use the .NET Global Assembly Cache Utility (gacutil.exe) to register your assembly in the GAC. This assembly is now a shared assembly and can be used by any client.

The commands that we use in this section refer to relative paths, so if you’re following along, make sure that you create the directory structure, as shown in Figure 4-2. The vehicle, plane, and car directories hold their appropriate assemblies, and the key directory holds the public/private key pair that we will generate in a moment. The car-build directory holds a car assembly with a modified build number, and the car-revision directory holds a car assembly with a modified revision number.

Generating a random key pair

We will perform the first step once and reuse the key pair for all shared assemblies that we build in this section. We’re doing this only for brevity; you can use different key information for each assembly, or even each version, that you build. Here’s how to generate a random key pair (be sure to issue this command in the key directory):

sn -k originator.key

The -k option generates a random key pair and saves the key information into the originator.key file. We will use this file as input when we build our shared assemblies. Let’s now examine steps 2 and 3 of registering your shared assemblies against the GAC.

Making the vehicle component a shared assembly

In order to add version and key information into the vehicle component (developed using Managed C++), we need to make some minor modifications to vehicle.cpp, as follows:

    #using <mscorlib.dll>
    using namespace System;
    using namespace System::Reflection;

    [assembly:AssemblyVersion("1.0.0.0")];
    [assembly:AssemblyKeyFile("..\\key\\originator.key")];

    public __gc __interface ISteering
    {
        void TurnLeft( );
        void TurnRight( );
    };

    public __gc class Vehicle : public ISteering
    {
    public:
        virtual void TurnLeft( )
        {
            Console::WriteLine("Vehicle turns left.");
        }
        virtual void TurnRight( )
        {
            Console::WriteLine("Vehicle turns right.");
        }
        virtual void ApplyBrakes( ) = 0;
    };

The using namespace System::Reflection directive indicates that we’re using the Reflection namespace, which defines the attributes that the compiler will intercept to inject the correct information into our assembly manifest. (For a discussion of attributes, see Section 4.3.1 later in this chapter.) We use the AssemblyVersion attribute to indicate the version of this assembly, and we use the AssemblyKeyFile attribute to indicate the file containing the key information that the compiler should use to derive the public-key-token value, to be revealed in a moment.

Once you’ve done this, you can build this assembly using the following commands, which you’ve seen before:

cl /CLR /c vehicle.cpp

link -dll /out:vehicle.dll vehicle.obj

After you’ve built the assembly, you can use the .NET GAC Utility to register this assembly into the GAC, as follows:

gacutil.exe /i vehicle.dll

Successful registration against the cache turns this component into a shared assembly. A version of this component is copied into the GAC so that even if you delete this file locally, you will still be able to run your client program.[3]

Making the car component a shared assembly

In order to add version and key information into the car component, we need to make some minor modifications to car.vb, as follows:

    Imports System
    Imports System.Reflection

    <Assembly:AssemblyVersion("1.0.0.0")>
    <assembly:AssemblyKeyFile("..\\key\\originator.key")>

    Public Class Car
        Inherits Vehicle

        Overrides Public Sub TurnLeft( )
            Console.WriteLine("Car turns left.")
        End Sub

        Overrides Public Sub TurnRight( )
            Console.WriteLine("Car turns right.")
        End Sub

        Overrides Public Sub ApplyBrakes( )
            Console.WriteLine("Car trying to stop.")
            Console.WriteLine("ORIGINAL VERSION - 1.0.0.0.")
            throw new Exception("Brake failure!")
        End Sub
    End Class

Having done this, you can now build it with the following command:

vbc /r:..\vehicle\vehicle.dll /t:library /out:car.dll car.vb

Once you’ve built this component, you can register it against the GAC:

gacutil /i car.dll

At this point, you can delete car.dll in the local directory because it has been registered in the GAC.

Making the plane component a shared assembly

In order to add version and key information into the plane component, we need to make some minor modifications to plane.cs, as follows:

    using System;
    using System.Reflection;

    [assembly:AssemblyVersion("1.0.0.0")]
    [assembly:AssemblyKeyFile("..\\key\\originator.key")]

    public class Plane : Vehicle
    {
        override public void TurnLeft( )
        {
            Console.WriteLine("Plane turns left.");
        }
        override public void TurnRight( )
        {
            Console.WriteLine("Plane turns right.");
        }
        override public void ApplyBrakes( )
        {
            Console.WriteLine("Air brakes being used.");
        }
    }

Having done this, you can build the assembly with the following commands:

csc /r:..\vehicle\vehicle.dll /t:library /out:plane.dll plane.cs

gacutil /i plane.dll

Of course, the last line in this snippet simply registers the component into the GAC.

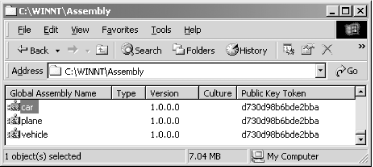

Viewing the GAC

Now that we’ve registered all our components into the GAC, let’s see what the GAC looks like. Microsoft has shipped a shell extension, the Shell Cache Viewer, to make it easier for you to view the GAC. On our machines, the Shell Cache Viewer appears when we navigate to C:\WINDOWS\Assembly, as shown in Figure 4-3.[4]

As you can see, the Shell Cache Viewer shows that all our components have the same version number because we used 1.0.0.0 as the version number when we built our components. Additionally, it shows that all our components have the same public-key-token value because we used the same key file, originator.key.

Building and testing the drive.exe

You should copy the previous drive.jsl source-code file into the Shared Assemblies directory, the root of the directory structure (shown in Figure 4-2) we are working with in this section. Having done this, you can build this component as follows (remember to type everything on one line):

vjc /r:vehicle\vehicle.dll;car\car.dll;plane\plane.dll /t:exe /out:drive.exe drive.jsl

Once you’ve done this, you can execute the drive.exe component, which will use the vehicle.dll, car.dll, and plane.dll assemblies registered in the GAC. You should see the following as part of your output:

ORIGINAL VERSION - 1.0.0.0.

To uninstall these shared components (assuming that you have administrative privileges), select the appropriate assemblies and press the Delete key (but if you do this now, you must reregister these assemblies because we’ll need them in the upcoming examples). When you do this, you’ve taken all the residues of these components out of the GAC. All that’s left is to delete any files that you’ve copied over from your installation diskette—typically, all you really have to do is recursively remove the application directory.

Adding new versions

Unlike private assemblies, shared assemblies can take advantage of the rich versioning policies that the CLR supports. Unlike earlier OS-level infrastructures, the CLR enforces versioning policies during the loading of all shared assemblies. By default, the CLR loads the assembly with which your application was built, but by providing an application configuration file, you can command the CLR to load the specific assembly version that your application needs. Inside an application configuration file, you can specify the rules or policies that the CLR should use when loading shared assemblies on which your application depends.

Let’s make some code changes to our car component to demonstrate the default versioning support. Remember that Version 1.0.0.0 of our car component’s ApplyBrakes( ) method throws an exception, as follows:

Overrides Public Sub ApplyBrakes( )

Console.WriteLine("Car trying to stop.")

Console.WriteLine("ORIGINAL VERSION - 1.0.0.0.")

throw new Exception("Brake failure!")

End Sub

Let’s create a different build to remove this exception. To do this, make the following changes to the ApplyBrakes( ) method (store this source file in the car-build directory):

Overrides Public Sub ApplyBrakes( )

Console.WriteLine("Car trying to stop.")

Console.WriteLine("BUILD NUMBER change - 1.0.1.0.")

End Sub

In addition, you need to change the build number in your code as follows:

<Assembly:AssemblyVersion("1.0.1.0")>

Now build this component, and register it using the following commands:

vbc /r:..\vehicle\vehicle.dll /t:library /out:car.dll car.vb

gacutil /i car.dll

Notice that we’ve specified that this version is 1.0.1.0, meaning that it’s compatible with Version 1.0.0.0. After registering this assembly with the GAC, execute your drive.exe application, and you will see the following statement as part of the output:

ORIGINAL VERSION - 1.0.0.0.

This is the default behavior—the CLR will load the version of the assembly with which your application was built. And just to prove this statement further, suppose that you provide Version 1.0.1.1 by making the following code changes (store this version in the car-revision directory):

Overrides Public Sub ApplyBrakes( )

Console.WriteLine("Car trying to stop.")

Console.WriteLine("REVISION NUMBER change - 1.0.1.1.")

End Sub

<Assembly:AssemblyVersion("1.0.1.1")>

This time, instead of changing the build number, you’re changing the revision number, which should still be compatible with the previous two versions. If you build this assembly, register it against the GAC, and execute drive.exe again, you will get the following statement as part of your output:

ORIGINAL VERSION - 1.0.0.0.

Again, the CLR chooses the version with which your application was built.
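To make the four-part version numbers used above more concrete, here is a minimal sketch built on the System.Version class, which splits a version string into major, minor, build, and revision parts. The VersionSketch class and its method names are hypothetical, and the SameMajorMinor( ) check only mirrors this chapter's informal statement that versions differing in build or revision are compatible; it is not a rule that the CLR applies when it loads an assembly.

```csharp
using System;

public static class VersionSketch
{
    // Splits a four-part version string into its named components.
    public static string Describe(string text)
    {
        Version v = new Version(text);
        return string.Format("major={0} minor={1} build={2} revision={3}",
                             v.Major, v.Minor, v.Build, v.Revision);
    }

    // True when two versions differ at most in build and revision
    // (the chapter's informal notion of "compatible"; an assumption).
    public static bool SameMajorMinor(string a, string b)
    {
        Version va = new Version(a);
        Version vb = new Version(b);
        return va.Major == vb.Major && va.Minor == vb.Minor;
    }

    // True when version a is strictly newer than version b.
    public static bool IsNewer(string a, string b)
    {
        return new Version(a).CompareTo(new Version(b)) > 0;
    }
}
```

For the three car assemblies in this section, VersionSketch.Describe("1.0.1.1") reports a build of 1 and a revision of 1, and IsNewer("1.0.1.1", "1.0.1.0") is true, yet the client still runs against 1.0.0.0 because that is the version it was built with.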

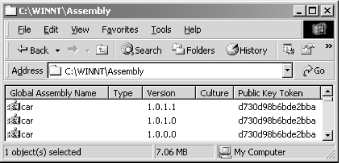

As shown in Figure 4-4, you can use the Shell Cache Viewer to verify that all three versions exist on the system simultaneously. This implies that the support exists for side-by-side execution—which terminates DLL Hell in .NET.

If you want your program to use a different, compatible version of the car assembly, you have to provide an application configuration file. The name of an application configuration file is composed of the physical executable name and “.config” appended to it. For example, since our client program is named drive.exe, its configuration file must be named drive.exe.config.

Here’s a drive.exe.config file that allows you to tell the CLR to load Version 1.0.1.0 of the car assembly for you (instead of loading the default version, 1.0.0.0). The oldVersion and newVersion attributes of the bindingRedirect element say that although we built our client with Version 1.0.0.0 (oldVersion) of the car assembly, load 1.0.1.0 (newVersion) for us when we run drive.exe.

<?xml version ="1.0"?>

<configuration>

<runtime>

<assemblyBinding xmlns="urn:schemas-microsoft-com:asm.v1">

<dependentAssembly>

<assemblyIdentity name="car"

publicKeyToken="D730D98B6BDE2BBA"

culture="" />

<bindingRedirect oldVersion="1.0.0.0"

newVersion="1.0.1.0" />

</dependentAssembly>

</assemblyBinding>

</runtime>

</configuration>

In this configuration file, the name attribute of the assemblyIdentity tag indicates the shared assembly’s human-readable name that is stored in the GAC. Although the name value can be anything, you must replace the publicKeyToken value appropriately in order to execute drive.exe. The publicKeyToken attribute records the public-key-token value, which is an 8-byte hash of the public key used to build this component. There are several ways to get this 8-byte hash: you can copy it from the Shell Cache Viewer, you can copy it from the IL dump of your component, or you can use the Shared Name utility to get it, as follows:

sn -T car.dll
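If you prefer to read the token from code, the following sketch uses AssemblyName.GetAssemblyName( ) and GetPublicKeyToken( ) to report the same information for an assembly file. The TokenSketch class and its method names are hypothetical, and the sketch is written in current C# rather than the 1.x dialect used elsewhere in this chapter.

```csharp
using System;
using System.Reflection;
using System.Text;

public static class TokenSketch
{
    // Formats the token bytes as the hex digits used in publicKeyToken.
    // Returns an empty string when the assembly carries no token.
    public static string ToHex(byte[] token)
    {
        if (token == null) return "";
        StringBuilder sb = new StringBuilder();
        foreach (byte b in token)
        {
            sb.Append(b.ToString("X2"));
        }
        return sb.ToString();
    }

    // Reads the name, version, and public-key token from an assembly file.
    public static string Describe(string path)
    {
        AssemblyName name = AssemblyName.GetAssemblyName(path);
        return string.Format("{0}, Version={1}, PublicKeyToken={2}",
                             name.Name, name.Version,
                             ToHex(name.GetPublicKeyToken()));
    }
}
```

Passing the path of car.dll to TokenSketch.Describe( ) should yield a 16-digit token that matches the value shown by the Shell Cache Viewer; an assembly built without a key file yields an empty token.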

Once you create the previously shown configuration file (stored in the same directory as the drive.exe executable) and execute drive.exe, you will see the following as part of your output:

BUILD NUMBER change - 1.0.1.0.

If you change the configuration file to newVersion="1.0.1.1" and execute drive.exe again, you will see the following as part of your output:

REVISION NUMBER change - 1.0.1.1.

Having gone over all these examples, you should realize that you have full control over which dependent assembly versions the CLR should load for your applications. It doesn’t matter which version was built with your application: you can choose different versions at runtime merely by changing a few attributes in the application configuration file.
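As a rough illustration of how such a redirect is expressed, the following sketch parses a configuration document and reports which version a given request would be redirected to. It is only a simplified reading of the file, not the CLR's binding logic: the RedirectSketch class is hypothetical, it matches names and versions by exact string comparison, and it assumes that oldVersion holds a single version rather than a range.

```csharp
using System;
using System.Xml.Linq;

public static class RedirectSketch
{
    // Namespace used by the assemblyBinding element and its children
    private static readonly XNamespace Asm = "urn:schemas-microsoft-com:asm.v1";

    // Returns the newVersion of the first redirect that matches the
    // assembly name and built-with version, or builtVersion unchanged
    // when no redirect applies (the default behavior described above).
    public static string FindRedirect(string configXml,
                                      string assemblyName,
                                      string builtVersion)
    {
        XDocument doc = XDocument.Parse(configXml);
        foreach (XElement dep in doc.Descendants(Asm + "dependentAssembly"))
        {
            XElement id = dep.Element(Asm + "assemblyIdentity");
            XElement redirect = dep.Element(Asm + "bindingRedirect");
            if (id == null || redirect == null) continue;

            if ((string)id.Attribute("name") == assemblyName &&
                (string)redirect.Attribute("oldVersion") == builtVersion)
            {
                return (string)redirect.Attribute("newVersion");
            }
        }
        return builtVersion;
    }
}
```

Given the contents of drive.exe.config shown earlier, FindRedirect(xml, "car", "1.0.0.0") returns 1.0.1.0, while a request for an assembly with no matching dependentAssembly entry comes back unchanged.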

Distributed Components

A component technology should support distributed computing, allowing you to activate and invoke remote services, as well as services in another application domain.[5] Distributed COM, or DCOM, is the wire protocol that provides support for distributed computing using COM. Although DCOM is fine for distributed computing, it is inappropriate for global cyberspace because it doesn’t work well in the face of firewalls and NAT software. Some other shortcomings of DCOM are expensive lifecycle management, protocol negotiation, and binary formats.

To eliminate or at least mitigate these shortcomings, .NET provides several kinds of support for distributed computing. The Remoting API in .NET allows you to use a variety of channels, such as TCP and HTTP (which uses SOAP by default), for distributed computing. It even permits you to plug in your own custom channels, should you require this functionality. Best of all, since the framework is totally object-oriented, distributed computing in .NET couldn’t be easier. To show you how simple it is to write a distributed application in .NET, let’s look at an example using sockets, otherwise known as the TCP channel in .NET.

Distributed Hello Server

In this example, we’ll write a distributed Hello application, which outputs a line of text to the console whenever a client invokes its exposed method, SayHello( ). Since we’re using the TCP channel, we’ll tell the compiler that we need the definitions in the System.Runtime.Remoting and System.Runtime.Remoting.Channels.Tcp namespaces.

Note that this class, CoHello, derives from MarshalByRefObject.[6] This is the key to distributed computing in .NET because it gives this object a distributed identity, allowing the object to be referenced across application domains, or even process and machine boundaries. A marshal-by-reference object requires a proxy to be set up on the client side and a stub to be set up on the server side, but since both of these are automatically provided by the infrastructure, you don’t have to do any extra work. Your job is to derive from MarshalByRefObject to get all the support for distributed computing:

    using System;
    using System.Runtime.Remoting;
    using System.Runtime.Remoting.Channels;
    using System.Runtime.Remoting.Channels.Tcp;

    public class CoHello : MarshalByRefObject
    {
        public static void Main( )
        {
            TcpChannel channel = new TcpChannel(4000);
            ChannelServices.RegisterChannel(channel);

            RemotingConfiguration.RegisterWellKnownServiceType
            (
                typeof(CoHello),                // Type name
                "HelloDotNet",                  // URI
                WellKnownObjectMode.Singleton   // SingleCall or Singleton
            );

            System.Console.WriteLine("Hit <enter> to exit . . . ");
            System.Console.ReadLine( );
        }

        public void SayHello( )
        {
            Console.WriteLine("Hello, Universe of .NET");
        }
    }

The SayHello( ) method is public, meaning that any external client can call this method. As you can see, this method is very simple, but the interesting thing is that a remote client application (which we’ll develop shortly) can call it because the Main( ) function uses the TcpChannel class. Look carefully at Main( ), and you’ll see that it instantiates a TcpChannel, passing in the port number on which the server will listen for incoming requests.[7]

Once we have created a channel object, we then register the channel to the ChannelServices, which supports channel registration and object resolution. Having done this, you must then register your object with the RemotingConfiguration so that it can be activated—you do this by calling the RegisterWellKnownServiceType( ) method of the RemotingConfiguration class. When you call this method, you must pass in the class name, a URI, and an object-activation mode. The URI is important because it’s a key element that the client application will use to refer specifically to this registered object. The object-activation mode can be either Singleton, which means that the same object will service many calls, or SingleCall, which means an object will service at most one call.

Here’s how to build this distributed application:

csc server.cs

Once you’ve done this, you can start the server program, which will wait endlessly until you hit the Enter key. The server is now ready to service client requests.

Remote Hello Client

Now that we have a server waiting, let’s develop a client to invoke the remote SayHello( ) method. Instead of registering an object with the remoting configuration, we need to activate a remote object. So let’s jump into the code now to see how this works. As you examine the following program, note these items:

We’re using types in the System.Runtime.Remoting and System.Runtime.Remoting.Channels.Tcp namespaces, since we want to use the TCP channel.

Our Client class doesn’t need to derive from anything because it’s not a server-side object that needs to have a distributed identity.

Since we’re developing a client application, we don’t need to specify a client port when we instantiate the TcpChannel.

Other than these items, the key thing to note is object activation, performed by the call to Activator.GetObject( ) in the following code. To invoke remote methods, you must first activate the remote object and obtain an associated proxy on the client side. To activate the object and get a reference to the associated proxy, you call the GetObject( ) method of the Activator class. When you do this, you must pass along the remote class name and its fully qualified location, including the complete URI. Once you’ve successfully done this, you can then invoke remote methods.

using System;

using System.Runtime.Remoting;

using System.Runtime.Remoting.Channels;

using System.Runtime.Remoting.Channels.Tcp;

public class Client

{

public static void Main( )

{

try

{

TcpChannel channel = new TcpChannel( );

ChannelServices.RegisterChannel(channel);

CoHello h = (CoHello) Activator.GetObject(

typeof(CoHello), // Remote type

"tcp://127.0.0.1:4000/HelloDotNet" // Location

);

h.SayHello( );

}

catch(Exception e)

{

Console.WriteLine(e.ToString( ));

}

}

}

To build this client application, you must include references to the server.exe assembly:

csc /r:Server.exe Client.cs

If you’re familiar with DCOM, you must be relieved to find that it’s relatively simple to write distributed applications in .NET.[8]

Distributed Garbage Collector

Because the .NET distributed garbage collector is different from that of DCOM, we must briefly cover this facility. Instead of using DCOM’s delta pinging, which requires fewer network packets than normal pinging (but still too many for a distributed protocol), .NET remoting uses leases to manage object lifetimes. If you’ve ever renewed the lease to an IP address on your Dynamic Host Configuration Protocol (DHCP) network, you’ve pretty much figured out this mechanism because it’s based on similar concepts.

In .NET, distributed objects give out leases instead of relying on reference counting (as in COM) for lifetime management. An application domain where the remote objects reside has a special object called the lease manager, which manages all the leases associated with these remote objects. When a lease expires, the lease manager contacts a sponsor, telling the sponsor that the lease has expired. A sponsor is simply a client that has previously registered itself with the lease manager during an activation call, indicating to the lease manager that it wants to know when a lease expires. If the lease manager can contact the sponsor, the sponsor may then renew the lease. If the sponsor refuses to renew the lease or if the lease manager can’t contact the sponsor after a configurable timeout period, the lease manager will void the lease and remove the object. There are two other ways in which a lease can be renewed: implicitly, via each call to the remote object, or explicitly, by calling the Renew( ) method of the ILease interface.
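The lease mechanism can be pictured with a small toy model. The following sketch is not the Remoting implementation and does not use the real ILease or ISponsor interfaces; the LeaseModel class, its members, and the way it treats renewal are all assumptions made for illustration. It models the three renewal paths described above: implicit renewal on each call, explicit renewal, and renewal by a sponsor when the lease manager finds the lease expired.

```csharp
using System;

// Toy model of a lease; NOT the Remoting ILease interface.
public class LeaseModel
{
    private readonly TimeSpan renewOnCall;

    public DateTime Expiry { get; private set; }

    public LeaseModel(DateTime now, TimeSpan initial, TimeSpan renewOnCall)
    {
        Expiry = now + initial;
        this.renewOnCall = renewOnCall;
    }

    public bool IsExpired(DateTime now)
    {
        return now >= Expiry;
    }

    // Explicit renewal: make sure at least 'span' remains from 'now'.
    // The lease is never shortened.
    public void Renew(DateTime now, TimeSpan span)
    {
        if (now + span > Expiry) Expiry = now + span;
    }

    // Implicit renewal: every call to the object extends the lease.
    public void OnCall(DateTime now)
    {
        Renew(now, renewOnCall);
    }

    // What a lease manager might do on each check: if the lease has
    // expired, ask the sponsor (if any) for more time. Returns false
    // when the lease is void and the object should be removed.
    public bool Check(DateTime now, Func<TimeSpan> sponsor)
    {
        if (!IsExpired(now)) return true;
        TimeSpan extra = (sponsor != null) ? sponsor() : TimeSpan.Zero;
        if (extra <= TimeSpan.Zero) return false;
        Renew(now, extra);
        return true;
    }
}
```

In this model, a sponsor that returns a positive TimeSpan keeps the object alive, while a missing sponsor or one that returns zero lets the lease lapse. The timeout that applies when a sponsor cannot be reached is left out for brevity.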

COM+ Services in .NET

COM programming requires lots of housekeeping and infrastructure-level code to build large-scale, enterprise applications. To make it easier to develop and deploy transactional and scalable COM applications, Microsoft released Microsoft Transaction Server (MTS). MTS allows you to share resources, thereby increasing the scalability of an application. COM+ Services were the natural evolution of MTS. While MTS was just another library on top of COM, COM+ Services were subsumed into the COM library, thus combining both COM and MTS into a single runtime.

COM+ Services have been very valuable to the development shops using the COM model to build applications that take advantage of transactions, object pooling, role-based security, etc. If you develop enterprise .NET applications, the COM+ Services in .NET are a must.

In the following examples, rather than feeding you more principles, we’ll show you examples for using major COM+ Services in .NET, including examples on transactional programming, object pooling, and role-based security. But before you see these examples, let’s talk about the key element—attributes—that enables the use of these services in .NET.

Attribute-Based Programming

Attributes are the key element that helps you write less code and allows an infrastructure to automatically inject the necessary code for you at runtime. If you’ve used IDL (Interface Definition Language) before, you have seen the in or out attributes, as in the following example:

HRESULT SetAge([in] short age);

HRESULT GetAge([out] short *age);

IDL allows you to add these attributes so that the marshaler will know how to optimize the use of the network. Here, the in attribute tells the marshaler to send the contents from the client to the server, and the out attribute tells the marshaler to send the contents from the server to the client. In the SetAge( ) method, passing age from the server to the client will just waste bandwidth. Similarly, there’s no need to pass age from the client to the server in the GetAge( ) method.

Developing custom attributes

While in and out are built-in attributes the MIDL compiler supports, .NET allows you to create your own custom attributes by deriving from the System.Attribute class. Here’s an example of a custom attribute:

using System;

public enum Skill { Guru, Senior, Junior }

[AttributeUsage(AttributeTargets.Class |

AttributeTargets.Field |

AttributeTargets.Method |

AttributeTargets.Property |

AttributeTargets.Constructor|

AttributeTargets.Event)]

public class AuthorAttribute : System.Attribute

{

public AuthorAttribute(Skill s)

{

level = s;

}

public Skill level;

}

The AttributeUsage attribute that we’ve applied to our AuthorAttribute class specifies the rules for using AuthorAttribute.[9] Specifically, it says that AuthorAttribute can prefix or describe a class or any class member.

Using custom attributes

Given that we have this attribute, we can write a simple class to make use of it. To apply our attribute to a class or a member, we simply make use of the attribute’s available constructors. In our case, we have only one and it’s AuthorAttribute( ), which takes an author’s skill level. Although you can use AuthorAttribute( ) to instantiate this attribute, .NET allows you to drop the Attribute suffix for convenience, as shown in the following code listing:

[Author(Skill.Guru)]

public class Customer

{

[Author(Skill.Senior)]

public void Add(string strName)

{

}

[Author(Skill.Junior)]

public void Delete(string strName)

{

}

}

You’ll notice that we’ve applied the Author attribute to the Customer class, telling the world that a guru wrote this class definition. This code also shows that a senior programmer wrote the Add( ) method and that a junior programmer wrote the Delete( ) method.

Inspecting attributes

You won’t see the full benefits of attributes until you write a simple interceptor-like program, which looks for special attributes and provides additional services appropriate for these attributes. Real interceptors include marshaling, transaction, security, pooling, and other services in MTS and COM+.

Here’s a simple interceptor-like program that uses the Reflection API to look for AuthorAttribute and provide additional services. You’ll notice that we can ask a type, Customer in this case, for all of its custom attributes. In our code, we ensure that the Customer class has attributes and that the first attribute is AuthorAttribute before we output the appropriate messages to the console. In addition, we look for all members that belong to the Customer class and check whether they have custom attributes. If they do, we ensure that the first attribute is an AuthorAttribute before we output the appropriate messages to the console.

    using System;
    using System.Reflection;

    public class interceptor
    {
      public static void Main( )
      {
        // Ask the Customer type for its own (non-inherited) custom attributes.
        Object[] attrs = typeof(Customer).GetCustomAttributes(false);
        if ((attrs.Length > 0) && (attrs[0] is AuthorAttribute))
        {
          Console.WriteLine("Class [{0}], written by a {1} programmer.",
            typeof(Customer).Name, ((AuthorAttribute)attrs[0]).level);
        }

        // Repeat the check for each public method of Customer.
        MethodInfo[] mInfo = typeof(Customer).GetMethods( );
        for ( int i=0; i < mInfo.Length; i++ )
        {
          attrs = mInfo[i].GetCustomAttributes(false);
          if ((attrs.Length > 0) && (attrs[0] is AuthorAttribute))
          {
            AuthorAttribute a = (AuthorAttribute)attrs[0];
            Console.WriteLine("Method [{0}], written by a {1} programmer.",
              mInfo[i].Name, (a.level));

            // The "special service" that this attribute triggers.
            if (a.level == Skill.Junior)
            {
              Console.WriteLine("***Performing automatic " +
                "review of {0}'s code***", a.level);
            }
          }
        }
      }
    }

It is crucial to note that when this program sees a piece of code written by a junior programmer, it automatically performs a rigorous review of the code. If you compile and run this program, it will output the following to the console:

    Class [Customer], written by a Guru programmer.
    Method [Add], written by a Senior programmer.
    Method [Delete], written by a Junior programmer.
    ***Performing automatic review of Junior's code***

Although our interceptor-like program doesn’t intercept any object-creation and method invocations, it does show how a real interceptor can examine attributes at runtime and provide necessary services stipulated by the attributes. Again, the key here is the last line of the output, which represents a special service that the interceptor provides as a result of attribute inspection.
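The interceptor listing checks only the first custom attribute on each class or method. As a hedged variation, the following sketch asks reflection for AuthorAttribute by type, so the check doesn't depend on attribute order. The Skill enum and AuthorAttribute class are redefined here only to keep the sketch self-contained; they are simplified stand-ins and may not match the chapter's own definitions exactly. AuthorScanner and Describe( ) are hypothetical names.

```csharp
using System;
using System.Collections.Generic;
using System.Reflection;

// Assumed, simplified stand-ins for the chapter's Skill and AuthorAttribute types.
public enum Skill { Guru, Senior, Junior }

[AttributeUsage(AttributeTargets.Class | AttributeTargets.Method)]
public class AuthorAttribute : Attribute
{
  public Skill level;
  public AuthorAttribute(Skill level) { this.level = level; }
}

[Author(Skill.Guru)]
public class Customer
{
  [Author(Skill.Senior)]
  public void Add(string strName) { }

  [Author(Skill.Junior)]
  public void Delete(string strName) { }
}

public static class AuthorScanner
{
  // Hypothetical helper: returns one line per attributed class or method.
  public static List<string> Describe(Type t)
  {
    List<string> lines = new List<string>();

    // Ask only for AuthorAttribute, wherever it sits in the attribute list.
    object[] attrs = t.GetCustomAttributes(typeof(AuthorAttribute), false);
    if (attrs.Length > 0)
    {
      lines.Add(String.Format("Class [{0}]: {1}",
        t.Name, ((AuthorAttribute)attrs[0]).level));
    }

    // Look only at public instance methods declared on t itself.
    BindingFlags flags = BindingFlags.Public | BindingFlags.Instance |
                         BindingFlags.DeclaredOnly;
    foreach (MethodInfo m in t.GetMethods(flags))
    {
      attrs = m.GetCustomAttributes(typeof(AuthorAttribute), false);
      if (attrs.Length > 0)
      {
        lines.Add(String.Format("Method [{0}]: {1}",
          m.Name, ((AuthorAttribute)attrs[0]).level));
      }
    }
    return lines;
  }

  public static void Main()
  {
    foreach (string line in Describe(typeof(Customer)))
    {
      Console.WriteLine(line);
    }
  }
}
```

Reflection doesn't promise any particular order for the methods it returns, so code that consumes these lines shouldn't rely on Add appearing before Delete.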

Transactions

In this section, we’ll show you that it’s easy to write a .NET class to take advantage of the transaction support that COM+ Services provide. All you need to supply at development time are a few attributes, and your .NET components are automatically registered against the COM+ catalog the first time they are used. Put differently, not only do you get easier programming, but you also get just-in-time and automatic registration of your COM+ application.[10]

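To develop a .NET class that supports transactions, here’s what must happen:

Your class must derive from the ServicedComponent class to take advantage of COM+ Services.

Your class must carry an appropriate Transaction attribute, such as Transaction(TransactionOption.Required), which means that instances of the class must run within a transaction.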

Besides these two requirements, you can use the ContextUtil class (which is a part of the System.EnterpriseServices namespace) to obtain information about the COM+ object context. This class exposes the major functionality found in COM+, including methods such as SetComplete( ), SetAbort( ), and IsCallerInRole( ), and properties such as IsInTransaction and MyTransactionVote.

In addition, while it’s not necessary to specify any COM+ application installation options, you should do so because you get to specify what you want, including the name of your COM+ application, its activation setting, its version, and so on. For example, in the following code listing, if you don’t specify the ApplicationName attribute, .NET will use the module name as the COM+ application name, displayed in the Component Services Explorer (or COM+ Explorer): if the name of the module is crm.dll, the name of your COM+ application will be crm. Other than this attribute, we also use the ApplicationActivation attribute to specify that this component will be installed as a library application, meaning that the component will be activated in the creator’s process:

    using System;
    using System.Reflection;
    using System.EnterpriseServices;

    [assembly: ApplicationName(".NET Framework Essentials CRM")]
    [assembly: ApplicationActivation(ActivationOption.Library)]
    [assembly: AssemblyKeyFile("originator.key")]
    [assembly: AssemblyVersion("1.0.0.0")]

The rest should look extremely familiar. In the Add( ) method, we simply call SetComplete( ) when we’ve successfully added the new customer into our databases. If something has gone wrong during the process, we will vote to abort this transaction by calling SetAbort( ).

    [Transaction(TransactionOption.Required)]
    public class Customer : ServicedComponent
    {
      public void Add(string strName)
      {
        try
        {
          Console.WriteLine("New customer: {0}", strName);

          // Add the new customer into the system
          // and make appropriate updates to
          // several databases.

          ContextUtil.SetComplete( );
        }
        catch(Exception e)
        {
          Console.WriteLine(e.ToString( ));
          ContextUtil.SetAbort( );
        }
      }
    }

Instead of calling SetComplete( ) and SetAbort( ) yourself, you can also use the AutoComplete attribute, as in the following code, which is conceptually equivalent to the previously shown Add( ) method:

    [AutoComplete]
    public void Add(string strName)
    {
      Console.WriteLine("New customer: {0}", strName);

      // Add the new customer into the system
      // and make appropriate updates to
      // several databases.
    }

Here’s how you build this assembly:

csc /t:library /out:crm.dll crm.cs

Since this is a shared assembly, remember to register it against the GAC by using the GAC utility:

gacutil /i crm.dll
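Before moving on to the client, it may help to make the earlier claim about AutoComplete concrete. The following is a minimal sketch in plain C#, with no dependency on System.EnterpriseServices, of the behavior we understand AutoComplete to provide: a normal return votes to commit, and an exception votes to abort. ITransactionVote, RecordingVote, and AutoCompleteSketch are hypothetical names invented for this illustration. They aren't part of COM+ or .NET, and this isn't how the runtime implements the attribute.

```csharp
using System;

// Hypothetical stand-in for the two votes exposed by ContextUtil.
public interface ITransactionVote
{
  void SetComplete();
  void SetAbort();
}

// Records the most recent vote so that we can inspect it.
public class RecordingVote : ITransactionVote
{
  public string Last = "none";
  public void SetComplete() { Last = "complete"; }
  public void SetAbort() { Last = "abort"; }
}

public static class AutoCompleteSketch
{
  // Runs body and votes on its behalf, the way an interceptor might.
  public static void Run(Action body, ITransactionVote vote)
  {
    try
    {
      body();
      vote.SetComplete();   // Normal return: vote to commit.
    }
    catch
    {
      vote.SetAbort();      // Exception: vote to abort...
      throw;                // ...and let the caller see the failure.
    }
  }

  public static void Main()
  {
    RecordingVote vote = new RecordingVote();

    Run(() => Console.WriteLine("New customer: John Osborn"), vote);
    Console.WriteLine("Vote: {0}", vote.Last);

    try
    {
      Run(() => { throw new InvalidOperationException("database offline"); }, vote);
    }
    catch (InvalidOperationException)
    {
      Console.WriteLine("Vote: {0}", vote.Last);
    }
  }
}
```

One difference from the hand-written Add( ) method is worth noting: that method catches and logs the exception itself, whereas in this sketch the exception still reaches the caller after the abort vote has been recorded.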

At this point, the assembly has not been registered as a COM+ application, but we don’t need to register it manually. Instead, .NET automatically registers and hosts this component for us in a COM+ application the first time we use this component. So, let’s write a simple client program that uses this component at this point. As you can see in the following code, we instantiate a Customer object and add a new customer:

    using System;

    public class Client
    {
      public static void Main( )
      {
        try
        {
          Customer c = new Customer( );
          c.Add("John Osborn");
        }
        catch(Exception e)
        {
          Console.WriteLine(e.ToString( ));
        }
      }
    }

We can build this program as follows:

csc /r:crm.dll /t:exe /out:client.exe client.cs

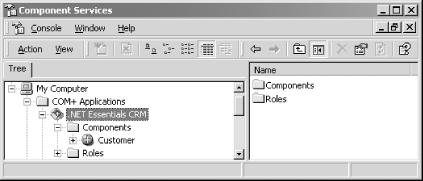

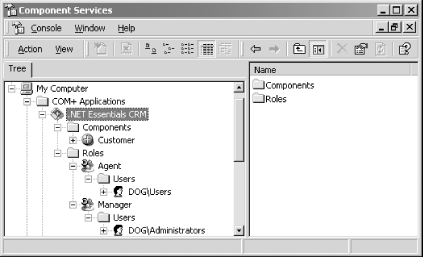

When we run this application, COM+ Services automatically create a COM+ application called .NET Framework Essentials CRM to host our crm.dll .NET assembly, as shown in Figure 4-5. In addition to adding our component to the created COM+ application, .NET also inspects our metadata for provided attributes and configures the associated services in the COM+ catalog.

Object Pooling

A pool is a technical term that refers to a group of resources, such as connections, threads, and objects. Putting a few objects into a pool allows hundreds of clients to share these few objects (you can make the same assertion for threads, connections, and other objects). Pooling is, therefore, a technique that minimizes the use of system resources, improves performance, and helps system scalability.

Missing in MTS, object pooling is a nice feature in COM+ that allows you to pool objects that are expensive to create. Similar to providing support for transactions, if you want to support object pooling in a .NET class, you need to derive from ServicedComponent, override any of the Activate( ), Deactivate( ), and CanBePooled( ) methods, and specify the object-pooling requirements in an ObjectPooling attribute, as shown in the following example:[11]

    using System;
    using System.Reflection;
    using System.EnterpriseServices;

    [assembly: ApplicationName(".NET Framework Essentials CRM")]
    [assembly: ApplicationActivation(ActivationOption.Library)]
    [assembly: AssemblyKeyFile("originator.key")]
    [assembly: AssemblyVersion("1.0.0.0")]

    [Transaction(TransactionOption.Required)]
    [ObjectPooling(MinPoolSize=1, MaxPoolSize=5)]
    public class Customer : ServicedComponent
    {
      public Customer( )
      {
        Console.WriteLine("Some expensive object construction.");
      }

      [AutoComplete]
      public void Add(string strName)
      {
        Console.WriteLine("Add customer: {0}", strName);

        // Add the new customer into the system
        // and make appropriate updates to
        // several databases.
      }

      override protected void Activate( )
      {
        Console.WriteLine("Activate");

        // Pooled object is being activated.
        // Perform the appropriate initialization.
      }

      override protected void Deactivate( )
      {
        Console.WriteLine("Deactivate");

        // Object is about to be returned to the pool.
        // Perform the appropriate clean up.
      }

      override protected bool CanBePooled( )
      {
        Console.WriteLine("CanBePooled");
        return true; // Return the object to the pool.
      }
    }

Take advantage of the Activate( ) and Deactivate( ) methods to perform appropriate initialization and cleanup. The CanBePooled( ) method lets you tell COM+ whether your object can be pooled when this method is called. You need to provide the expensive object-creation functionality in the constructor, as shown in the constructor of this class.

Given this Customer class that supports both transaction and object pooling, you can write the following client-side code to test object pooling. For brevity, we will create only two objects, but you can change this number to anything you like so that you can see the effects of object pooling. Just to ensure that you have the correct configuration, delete the current .NET Framework Essentials CRM COM+ application from the Component Services Explorer before running the following code:

    for (int i=0; i<2; i++)
    {
      Customer c = new Customer( );
      c.Add(i.ToString( ));
    }

Running this code produces the following results:

    Some expensive object construction.
    Activate
    Add customer: 0
    Deactivate
    CanBePooled
    Activate
    Add customer: 1
    Deactivate
    CanBePooled

We’ve created two objects, but since we’ve used object pooling, only one object is really needed to support our calls, and that’s why you see only one output statement that says Some expensive object construction. In this case, COM+ creates only one Customer object, but activates and deactivates it twice to support our two calls. After each call, it puts the object back into the object pool. When a new call arrives, it picks the same object from the pool to service the request.
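The lifecycle visible in that output (construct once, then activate, call, deactivate, and return to the pool for each request) can be imitated with a tiny in-process pool. The sketch below is only an illustration of the idea, not a description of how COM+ implements pooling. IPoolable, PooledCustomer, TinyPool, and PoolDemo are hypothetical names, and the pool is single-threaded with no minimum or maximum size.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical counterpart of the three ServicedComponent hooks.
public interface IPoolable
{
  void Activate();
  void Deactivate();
  bool CanBePooled();
}

public class PooledCustomer : IPoolable
{
  // Collects the messages so that the order of events can be inspected.
  public static List<string> Log = new List<string>();

  public PooledCustomer() { Log.Add("Some expensive object construction."); }
  public void Add(string strName) { Log.Add("Add customer: " + strName); }
  public void Activate() { Log.Add("Activate"); }
  public void Deactivate() { Log.Add("Deactivate"); }
  public bool CanBePooled() { Log.Add("CanBePooled"); return true; }
}

// A deliberately tiny pool; a real one needs locking and size limits.
public class TinyPool<T> where T : class, IPoolable, new()
{
  private readonly Stack<T> idle = new Stack<T>();

  public void Use(Action<T> call)
  {
    // Reuse an idle instance if there is one; construct only when empty.
    T obj = idle.Count > 0 ? idle.Pop() : new T();
    obj.Activate();
    try
    {
      call(obj);
    }
    finally
    {
      obj.Deactivate();
      // Only objects that agree to be pooled go back for reuse.
      if (obj.CanBePooled()) idle.Push(obj);
    }
  }
}

public static class PoolDemo
{
  public static void Main()
  {
    TinyPool<PooledCustomer> pool = new TinyPool<PooledCustomer>();
    for (int i = 0; i < 2; i++)
    {
      string name = i.ToString();
      pool.Use(c => c.Add(name));
    }
    foreach (string line in PooledCustomer.Log) Console.WriteLine(line);
  }
}
```

Run as written, the log contains a single construction message followed by two activate, add, deactivate, and CanBePooled sequences, the same order as in the COM+ output above.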

Role-Based Security

Role-based security in MTS and COM+ has drastically simplified the development and configuration of security for business applications, because it abstracts away the complicated details of dealing with access control lists (ACLs) and security identifiers (SIDs). All .NET components that are hosted in a COM+ application can take advantage of role-based security. You can fully configure role-based security using the Component Services Explorer, but you can also manage role-based security in your code to provide fine-grained security support that’s missing from the Component Services Explorer.

Configuring role-based security

In order to demonstrate role-based security, let’s add two roles to our COM+ application, .NET Framework Essentials CRM. The first role, Agent, represents users who can use the Customer class in every way but can’t delete customers. You should create this role and add to it the local Users group, as shown in Figure 4-6. The second role, Manager, represents users who can use the Customer class in every way, including deleting customers. Create this role and add to it the local Administrators group.

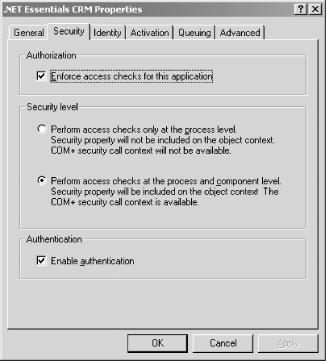

Once you create these roles, you need to enable access checks for the .NET Framework Essentials CRM COM+ application. Launch the COM+ application’s Properties sheet (by selecting .NET Framework Essentials CRM and pressing Alt-Enter), and select the Security tab. Enable access checks to your COM+ application by providing the options, as shown in Figure 4-7.

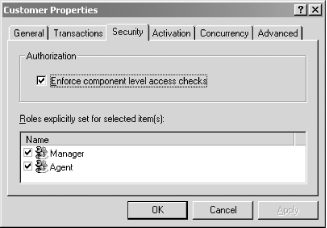

Once you have enabled access checks at the application level, you need to enforce access checks at the class level, too. To do this, launch Customer’s Properties sheet, and select the Security tab. Enable access checks to this .NET class by providing the options shown in Figure 4-8. Here, we’re saying that no one can access the Customer class except for those that belong to the Manager or Agent role.

Now, if you run the client application developed in the last section, everything will work because you are a user on your machine. But if you uncheck both the Manager[12] and Agent roles in Figure 4-8 and rerun the client application, you get the following message as part of your output:

System.UnauthorizedAccessException: Access is denied.

You’re getting this exception because you’ve removed yourself from the roles that have access to the Customer class. Once you’ve verified this, put the configuration back to what is shown in Figure 4-8 to prepare the environment for the next test that we’re about to illustrate.
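The access-check settings made by hand above can, as far as we know, also be requested declaratively through attributes in the System.EnterpriseServices namespace, so that they are applied when the assembly is registered. The fragment below is a hedged sketch rather than a tested replacement for the steps in Figures 4-7 and 4-8. It assumes the role names Manager and Agent used in this section, and it is meant to be merged into crm.cs alongside the assembly attributes shown earlier. Adding the local Users and Administrators groups to the roles would still be done in the Component Services Explorer.

```csharp
using System;
using System.EnterpriseServices;

// Ask COM+ to enable access checks for the whole application.
[assembly: ApplicationAccessControl(true)]

// Ask COM+ to enable access checks for this class and to
// associate the Manager and Agent roles with it.
[ComponentAccessControl]
[SecurityRole("Manager")]
[SecurityRole("Agent")]
public class Customer : ServicedComponent
{
  // Methods as shown elsewhere in this chapter.
}
```

Keeping these settings in code documents the intended security configuration next to the class it protects, but an administrator can still change the deployed configuration afterward.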

Programming role-based security

We’ve allowed anyone in the Agent and Manager roles to access our class, but let’s invent a rule allowing only users under the Manager role to delete a customer from the system (for lack of a better example). So let’s add a new method to the Customer class, which we’ll call Delete( ), as shown in the following code. Anyone belonging to the Agent or Manager role can invoke this method, so we’ll first output to the console the user account that invokes this method. After doing this, we’ll check to ensure that this user belongs to the Manager role. If so, we allow the call to go through; otherwise, we throw an exception indicating that only managers can perform a deletion. Believe it or not, this is the basic premise for programming role-based security:

    [AutoComplete]
    public void Delete(string strName)
    {
      try
      {
        // Obtain the security context of the current call.
        SecurityCallContext sec;
        sec = SecurityCallContext.CurrentCall;

        // Report which account is making the call.
        string strCaller = sec.DirectCaller.AccountName;
        Console.WriteLine("Caller: {0}", strCaller);

        // Only callers in the Manager role may delete.
        bool bInRole = sec.IsCallerInRole("Manager");
        if (!bInRole)
        {
          throw new Exception ("Only managers can delete customers.");
        }

        Console.WriteLine("Delete customer: {0}", strName);

        // Delete the customer from the system
        // and make appropriate updates to
        // several databases.
      }
      catch(Exception e)
      {
        Console.WriteLine(e.ToString( ));
      }
    }

Here’s the client code that includes a call to the Delete( ) method:

    using System;

    public class Client
    {
      public static void Main( )
      {
        try
        {
          Customer c = new Customer( );
          c.Add("John Osborn");

          // Success depends on the role
          // under which this method
          // is invoked.
          c.Delete("Jane Smith");
        }
        catch(Exception e)
        {
          Console.WriteLine(e.ToString( ));
        }
      }
    }

Once you’ve built this program, you can test it using an account that belongs to the local Users group, since we added this group to the Agent role earlier. On Windows 2000 or XP, you can use the following command to launch a command window using a specific account:

runas /user:DEVTOUR\student cmd

Of course, you should replace DEVTOUR and student with your own machine name and user account, respectively. After running this command, you will need to type in the correct password, and a new command window will appear. Execute the client under this user account, and you’ll see the following output:

    Add customer: John Osborn
    Caller: DEVTOUR\student
    System.Exception: Only managers can delete customers.
       at Customer.Delete(String strName)

You’ll notice that the Add( ) operation went through successfully, but the Delete( ) operation failed, because we executed the client application under an account that’s missing from the Manager role.

To remedy this, we need to use a user account that belongs to the Manager role; any account that belongs to the Administrators group will do. So, start another command window using a command similar to the following:

runas /user:DEVTOUR\instructor cmd

Execute the client application again, and you’ll get the following output:

    Add customer: John Osborn
    Caller: DEVTOUR\instructor
    Delete customer: Jane Smith

As you can see, since we’ve executed the client application using an account that belongs to the Manager role, the Delete( ) operation went through without problems.

Message Queuing

In addition to providing support for COM+ Services, .NET also supports message queuing. If you’ve used Microsoft Message Queuing (MSMQ) services before, you’ll note that the basic programming model is the same but the classes in the System.Messaging namespace make it extremely easy to develop message-queuing applications. The System.Messaging namespace provides support for basic functionality, such as connecting to a queue, opening a queue, sending messages to a queue, receiving messages from a queue, and peeking for messages on the queue. To demonstrate how easy it is to use the classes in System.Messaging, let’s build two simple applications: one to enqueue messages onto a private queue on the local computer and another to dequeue these messages from the same queue.[13]

Enqueue

Here’s a simple program that enqueues a Customer object onto a private queue on the local computer. Notice first that we need to include the System.Messaging namespace because it contains the classes that we want to use:

    using System;
    using System.Messaging;

While the following Customer structure is very simple, it can be as complex as you want because it will be serialized into an XML-formatted buffer by default before it’s placed into the queue:

    public struct Customer
    {
      public string Last;
      public string First;
    }

Our program first checks whether a private queue on the local computer exists. If this queue is missing, the program will create it. Next, we instantiate a MessageQueue class, passing in the target queue name. Once we have this MessageQueue object, we invoke its Send( ) method, passing in the Customer object, as shown in the following code. This will put our customer object into our private queue:

    public class Enqueue
    {
      public static void Main( )
      {
        try
        {
          string path = ".\\PRIVATE$\\NFE_queue";
          if(!MessageQueue.Exists(path))
          {
            // Create our private queue.
            MessageQueue.Create(path);
          }

          // Initialize the queue.
          MessageQueue q = new MessageQueue(path);

          // Create our object.
          Customer c = new Customer( );
          c.Last = "Osborn";
          c.First = "John";

          // Send it to the queue.
          q.Send(c);
        }
        catch(Exception e)
        {
          Console.WriteLine(e.ToString( ));
        }
      }
    }

Use the following command to build this program:

csc /t:exe /out:enqueue.exe enqueue.cs

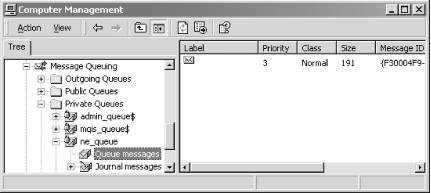

Execute this program, examine the Computer Management console, and you will see your message in the private queue called nfe_queue, as shown in Figure 4-9.
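To get a feel for the XML-formatted body mentioned earlier, the following sketch serializes the same Customer structure with XmlSerializer and reads it back, without touching a queue. We assume here that the default message formatter produces XML of broadly this shape; the exact bytes placed in the queue may differ. BodyPreview, ToXml( ), and FromXml( ) are hypothetical names used only for this illustration.

```csharp
using System;
using System.IO;
using System.Xml.Serialization;

public struct Customer
{
  public string Last;
  public string First;
}

public static class BodyPreview
{
  // Serializes a Customer to XML text.
  public static string ToXml(Customer c)
  {
    XmlSerializer serializer = new XmlSerializer(typeof(Customer));
    using (StringWriter writer = new StringWriter())
    {
      serializer.Serialize(writer, c);
      return writer.ToString();
    }
  }

  // Rebuilds a Customer from XML text.
  public static Customer FromXml(string xml)
  {
    XmlSerializer serializer = new XmlSerializer(typeof(Customer));
    using (StringReader reader = new StringReader(xml))
    {
      return (Customer)serializer.Deserialize(reader);
    }
  }

  public static void Main()
  {
    Customer c = new Customer();
    c.Last = "Osborn";
    c.First = "John";

    // Show the XML text, then prove that it round-trips.
    string xml = ToXml(c);
    Console.WriteLine(xml);

    Customer copy = FromXml(xml);
    Console.WriteLine("Customer: {0}, {1}", copy.Last, copy.First);
  }
}
```

Because the public fields become XML elements, the sender and receiver only need to agree on the shape of the type, which is why the dequeuing program can declare its own copy of the Customer structure.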

Dequeue

Now that there’s a message in our private message queue, let’s write a program to dequeue and examine the message. After ensuring that the private queue we want exists, we initialize it by instantiating a MessageQueue class, passing in the path to our private queue. Next, we tell the MessageQueue object that the type of object we want to dequeue is Customer. To actually dequeue the object, we need to invoke the Receive( ) method, passing in a timeout in terms of a TimeSpan object, whose parameters stand for hours, minutes, and seconds, respectively. Finally, we cast the body of the received Message object into a Customer object and output its contents:

    using System;
    using System.Messaging;
    using System.Runtime.Serialization;

    public struct Customer
    {
      public string Last;
      public string First;
    }

    public class Dequeue
    {
      public static void Main( )
      {
        try
        {
          string strQueuePath = ".\\PRIVATE$\\NFE_queue";

          // Ensure that the queue exists.
          if (!MessageQueue.Exists(strQueuePath))
          {
            throw new Exception(strQueuePath + " doesn't exist!");
          }

          // Initialize the queue.
          MessageQueue q = new MessageQueue(strQueuePath);

          // Specify the types we want to get back, as
          // "type name, assembly name" (this assumes the
          // program is built as dequeue.exe).
          string[] types = {"Customer, dequeue"};
          ((XmlMessageFormatter)q.Formatter).TargetTypeNames = types;

          // Receive the message (5-second timeout).
          Message m = q.Receive(new TimeSpan(0,0,5));

          // Convert the body into the type we want.
          Customer c = (Customer) m.Body;
          Console.WriteLine("Customer: {0}, {1}", c.Last, c.First);
        }
        catch(Exception e)
        {
          Console.WriteLine(e.ToString( ));
        }
      }
    }

Compile and execute this program, look at the Computer Management console, press F5 to refresh the screen, and you will see that the previous message is no longer there.
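As a small aside on the timeout passed to Receive( ), the three-argument TimeSpan constructor takes hours, minutes, and seconds, in that order. The following sketch, in which TimeoutDemo and FiveSeconds( ) are hypothetical names, confirms that new TimeSpan(0,0,5) is the same five-second interval that TimeSpan.FromSeconds(5) would produce:

```csharp
using System;

public static class TimeoutDemo
{
  // Builds the same five-second timeout used in the Dequeue listing.
  public static TimeSpan FiveSeconds()
  {
    return new TimeSpan(0, 0, 5);   // hours, minutes, seconds
  }

  public static void Main()
  {
    TimeSpan timeout = FiveSeconds();
    Console.WriteLine(timeout.TotalSeconds);                // prints 5
    Console.WriteLine(timeout == TimeSpan.FromSeconds(5));  // prints True
  }
}
```

Some readers may find TimeSpan.FromSeconds( ) easier to read at the call site; either form yields the same value.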

Summary

In this chapter, we’ve touched on many aspects of component-oriented programming, including deployment strategies, distributed computing, and enterprise services such as transaction management, object pooling, role-based security, and message queuing. We have to give due credit to Microsoft for making componentization easier in the .NET Framework. Case in point: without .NET, it would be impossible for us to show the complete code for all of these programs in a single chapter of a book.[14]

[1] Remember, as we explained in Chapter 1, we’re using the term “component” as a binary, deployable unit, not as a COM class.

[2] Distributed applications require a communication layer to assemble and disassemble application data and network streams. This layer is formally known as a marshaler in Microsoft terminology. Assembling and disassembling an application-level protocol network buffer are formally known as marshaling and unmarshaling, respectively.

[3] However, don’t delete the file now because we need it to build the car and plane assemblies.

[4] This path is entirely dependent upon the %windir% setting on your machine.

[5] Each Windows process requires its own memory address space, making it fairly expensive to run multiple Windows processes. An application domain is a lightweight or virtual process. All application domains of a given Windows process can use the same memory address space.

[6] If you fail to do this, your object will not have a distributed identity since the default is marshal-by-value, which means that a copy of the remote object is created on the client side.

[7] Believe it or not, all you really have to do is replace TcpChannel with HttpChannel to take advantage of HTTP and SOAP as the underlying communication protocols.

[8] In fact, if you have a copy of Learning DCOM (O’Reilly) handy, compare these programs with their DCOM counterparts in Appendix D, and you will see what we mean.

[9] You don’t have to postfix your attribute class name with the word “Attribute”, but this is a standard naming convention that Microsoft uses. C# lets you name your attribute class any way you like; for example, Author is a valid class name for your attribute.

[10] Automatic registration is nice during development, but don’t use this feature in a production environment, because not all clients will have the administrative privilege to set up COM+ applications.

[11] Mixing transactions and object pooling should be done with care. See COM and .NET Component Services, by Juval Löwy (O’Reilly).

[12] Since you’re a developer, you’re probably an administrator on your machine, so you need to uncheck the Manager role, too, in order to see an access violation in the test that we’re about to illustrate.

[13] To execute these programs, you must have Message Queuing installed on your system. You can verify this by launching the Computer Management console, as shown in Figure 4-9.

[14] For additional information on programming .NET component-based applications, see O’Reilly’s Programming .NET Components, by Juval Löwy.
