Chapter 1. Organizing Data: Vantage, Domain, Action, and Validity

Security analysis is the process of applying data to make security decisions. Security decisions are disruptive and restrictive: disruptive because you're fixing something, restrictive because you're constraining behavior. Effective security analysis requires making the right decision and convincing a skeptical audience that this is the right decision. The foundations of these decisions are quality data and quality reasoning; in this chapter, I address both.

Security monitoring on a modern network requires working with multiple sensors that generate different kinds of data and are created by many different people for many different purposes. A sensor can be anything from a network tap to a firewall log; it is something that collects information about your network and can be used to make judgment calls about your network's security.

I want to pull out and emphasize a very important point here: quality source data is integral to good security analysis. Furthermore, the effort spent acquiring a consistent source of quality data will pay off further down the analysis pipeline: you can use simpler (and faster) algorithms to identify phenomena, you'll have an easier time verifying results, and you'll spend less time cross-correlating and double-checking information.

So, now that you're raring to go get some quality data, the question obviously pops up: what is quality data? The answer is that security data collection is a trade-off between expressiveness and speed. Packet capture (pcap) data collected from a span port can tell you if someone is scanning your network, but it's also going to produce terabytes of unreadable traffic from the HTTPS server you're watching. Logs from the HTTPS server will tell you about file accesses, but nothing about the FTP interactions going on as well. The questions you ask will also be situational: how you decide to deal with an advanced persistent threat (APT) is a function of how much risk you face, and how much risk you face will change over time.

That said, there are some basic goals we can establish about security data. We would like the data to express as much information with as small a footprint as possible, so data should be in a compact format, and if different sensors report the same event, we would like those descriptions to not be redundant. We want the data to be as accurate as possible as to the time of observation, so information that is transient (such as the relationships between IP addresses and domain names) should be recorded at the time of collection. We also would like the data to be expressive; that is, we would like to reduce the amount of time and effort an analyst needs to spend cross-referencing information. Finally, we would like any inferences or decisions in the data to be accountable; for example, if an alert is raised because of a rule, we want to know the rule's history and provenance.

While we can't optimize for all of these criteria, we can use them as guidance for balancing these requirements. Effective monitoring will require juggling multiple sensors of different types, which treat data differently. To aid with this, I classify sensors along three attributes:

- Vantage

  The placement of sensors within a network. Sensors with different vantages will see different parts of the same event.

- Domain

  The information the sensor provides, whether that's at the host, a service on the host, or the network. Sensors with the same vantage but different domains provide complementary data about the same event. For some events, you might only get information from one domain. For example, host monitoring is the only way to find out if a host has been physically accessed.

- Action

  How the sensor decides to report information. It may just record the data, provide events, or manipulate the traffic that produces the data. Sensors with different actions can potentially interfere with each other.

This categorization serves two purposes. First, it provides a way to break down and classify sensors by how they deal with data. Domain is a broad characterization of where and how the data is collected. Vantage informs us of how the sensor placement affects collection. Action details how the sensor actually fiddles with data. Second, taken together, these attributes provide a way to define the challenges data collection poses to the validity of an analyst's conclusions.
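As a rough illustration of this classification, the sketch below records each sensor's vantage, domain, and action in a small data structure. The domain and action values anticipate the categories described later in this chapter; the sensor names and vantage labels (`pcap_tap`, `web-server`, and so on) are hypothetical and exist only for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Domain(Enum):
    NETWORK = "network"
    SERVICE = "service"
    HOST = "host"
    ACTIVE = "active"


class Action(Enum):
    REPORT = "report"
    EVENT = "event"
    CONTROL = "control"


@dataclass(frozen=True)
class Sensor:
    name: str      # hypothetical label, not from the text
    vantage: str   # hypothetical placement label
    domain: Domain
    action: Action


def complementary(a: Sensor, b: Sensor) -> bool:
    """Same vantage, different domain: complementary views of one event."""
    return a.vantage == b.vantage and a.domain != b.domain


def may_interfere(upstream: Sensor) -> bool:
    """A control sensor alters traffic, so sensors behind it may see less."""
    return upstream.action is Action.CONTROL


# Hypothetical inventory used only to exercise the helpers above.
pcap_tap = Sensor("pcap_tap", "web-server", Domain.NETWORK, Action.REPORT)
http_log = Sensor("http_log", "web-server", Domain.SERVICE, Action.REPORT)
ips = Sensor("ips", "border", Domain.NETWORK, Action.CONTROL)
```

Keeping an inventory like this makes it easier to ask, for any pair of sensors, whether they complement each other or whether one might hide traffic from the other.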

Validity is an idea from experimental design, and refers to the strength of an argument. A valid argument is one where the conclusion follows logically from the premise; weak arguments can be challenged on multiple axes, and experimental design focuses on identifying those challenges. The reason security people should care about it goes back to my point in the introduction: security analysis is about convincing an unwilling audience to reasonably evaluate a security decision and choose whether or not to make it. Understanding the validity and challenges to it produces better results and more realistic analyses.

Domain

We will now examine domain, vantage, and action in more detail. A sensor's domain refers to the type of data that the sensor generates and reports. Because sensors include antivirus (AV) and similar systems, where the line of reasoning leading to a message may be opaque, the analyst needs to be aware that these tools import their own biases.

Table 1-1 breaks down the four major domain classes used in this book. The table characterizes each domain by its data sources, its timing (event model), and the identifiers the sensor uses, with further description following.

| Domain | Data sources | Timing | Identity |
|---|---|---|---|
| Network | PCAP, NetFlow | Real-time, packet-based | IP, MAC |
| Service | Logs | Real-time, event-based | IP, service-based IDs |
| Host | System state, signature alerts | Asynchronous | IP, MAC, UUID |
| Active | Scanning | User-driven | IP, service-based IDs |

Sensors operating in the network domain derive all of their data from some form of packet capture. This may be straight pcap, packet headers, or constructs such as NetFlow. Network data gives the broadest view of a network, but it also has the smallest amount of useful data relative to the volume of data collected. Network domain data must be interpreted, it must be readable, and it must be meaningful; network traffic contains a lot of garbage.

Sensors in the service domain derive their data from services. Examples of services include server applications like nginx or Apache (HTTP daemons), as well as internal processes like syslog and the processes that are moderated by it. Service data provides you with information on what actually happened, but this is done by interpreting data and providing an event model that may be only tangentially related to reality. In addition, to collect service data, you need to know the service exists, which can be surprisingly difficult to find out, given the tendency for hardware manufacturers to shove web servers into every open port.

Sensors in the host domain collect information on the host's state. For our purposes, these types of tools fit into two categories: systems that provide information on system state such as disk space, and host-based intrusion detection systems such as file integrity monitoring or antivirus systems. These sensors will provide information on the impact of actions on the host, but are also prone to timing issues: many of the state-based systems provide alerts at fixed intervals, and the intrusion-based systems often use huge signature libraries that get updated sporadically.

Finally, the active domain consists of sensing controlled by the analyst. This includes scanning for vulnerabilities, mapping tools such as traceroute, or even something as simple as opening a connection to a new web server to find out what the heck it does. Active data also includes beaconing and other information that is sent out to ensure that we know something is happening.

Vantage

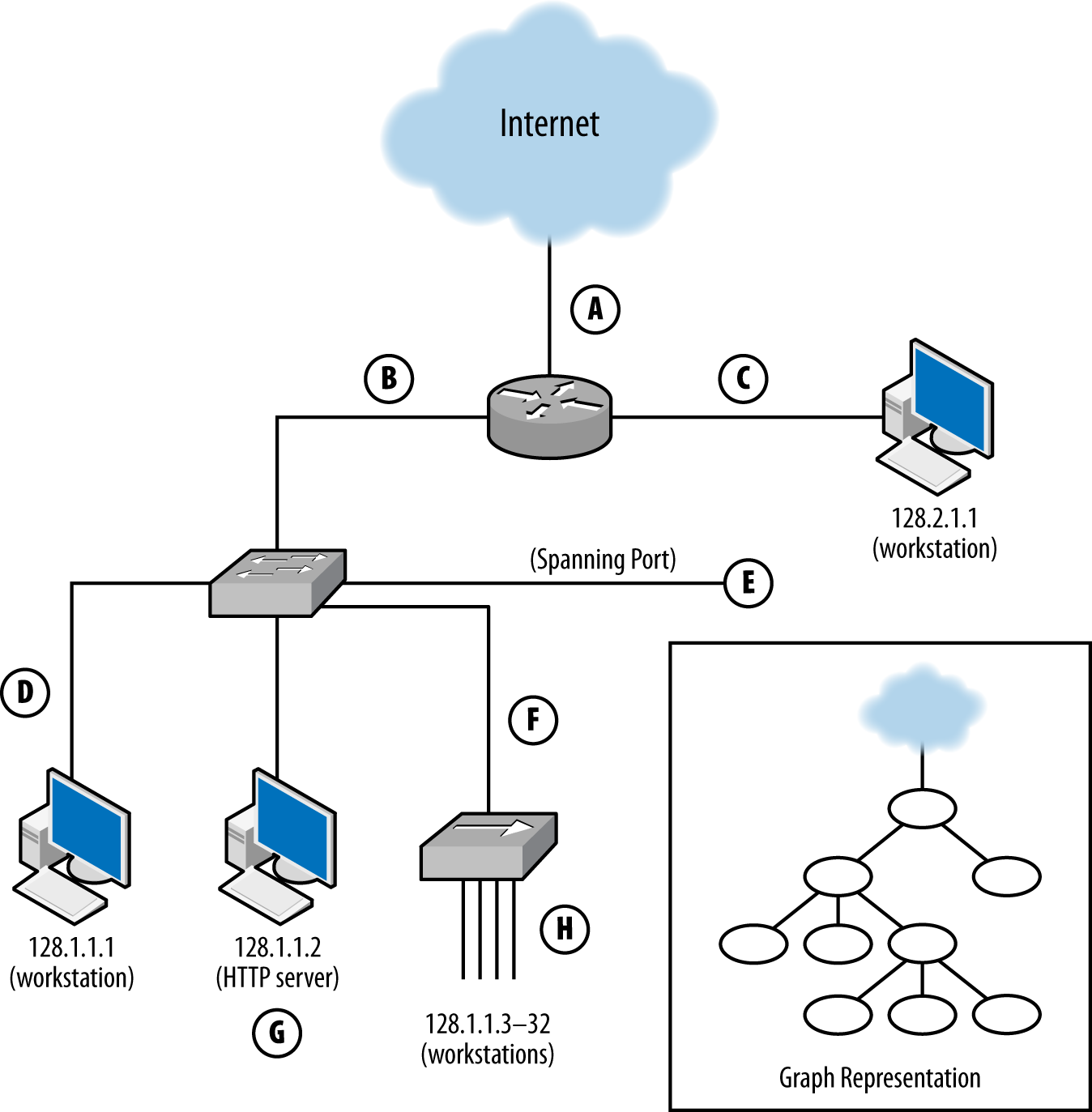

A sensor's vantage describes the packets that sensor will be able to observe. Vantage is determined by an interaction between the sensor's placement and the routing infrastructure of a network. In order to understand the phenomena that impact vantage, look at Figure 1-1. This figure describes a number of unique potential sensors differentiated by capital letters. In order, they are:

- A

  Monitors the interface that connects the router to the internet.

- B

  Monitors the interface that connects the router to the switch.

- C

  Monitors the interface that connects the router to the host with IP address 128.2.1.1.

- D

  Monitors host 128.1.1.1.

- E

  Monitors a spanning port operated by the switch. A spanning port records all traffic that passes the switch (see "Network Layers and Vantage" for more information on spanning ports).

- F

  Monitors the interface between the switch and the hub.

- G

  Collects HTTP log data on host 128.1.1.2.

- H

  Sniffs all TCP traffic on the hub.

Figure 1-1. Vantage points of a simple network and a graph representation

Each of these sensors has a different vantage, and will see different traffic based on that vantage. You can approximate the vantage of a sensor by converting the network into a simple node-and-link graph (as seen in the corner of Figure 1-1) and then tracing the links crossed between nodes. A sensor on a link can record any traffic that crosses that link en route to a destination. For example, in Figure 1-1:

- The sensor at position A sees only traffic that moves between the network and the internet; it will not, for example, see traffic between 128.1.1.1 and 128.2.1.1.

- The sensor at B sees any traffic that originates from or ends up at one of the addresses "beneath it," as long as the other address is 128.2.1.1 or the internet.

- The sensor at C sees only traffic that originates from or ends at 128.2.1.1.

- The sensor at D, like the sensor at C, only sees traffic that originates or ends at 128.1.1.1.

- The sensor at E sees any traffic that moves between the switch's ports: traffic from 128.1.1.1 to anything else, traffic from 128.1.1.2 to anything else, and any traffic from 128.1.1.3 to 128.1.1.32 that communicates with anything outside that hub.

- The sensor at F sees a subset of what the sensor at E sees, seeing only traffic from 128.1.1.3 to 128.1.1.32 that communicates with anything outside that hub.

- G is a special case because it is an HTTP log; it sees only HTTP/S traffic (port 80 and 443) where 128.1.1.2 is the server.

- Finally, H sees any traffic where one of the addresses between 128.1.1.3 and 128.1.1.32 is an origin or a destination, as well as traffic between those hosts.

Note that no single sensor provides complete coverage of this network. Furthermore, instrumentation will require dealing with redundant traffic. For instance, if I instrument H and E, I will see any traffic from 128.1.1.3 to 128.1.1.1 twice. Choosing the right vantage points requires striking a balance between complete coverage of traffic and not drowning in redundant data.
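The graph-tracing approach can be sketched in a few lines. The code below is a minimal approximation, assuming my reading of the topology in Figure 1-1: link sensors (A, B, C, D, F) see a flow when its path crosses their link, and the span port (E) and hub sniffer (H) see a flow when its path passes through their device. Only two hub hosts are listed for brevity, D is modeled as the link to 128.1.1.1, and G is omitted because it is a service log rather than a packet sensor.

```python
from collections import deque

# Undirected links in the graph; the value is the sensor on that link, if any.
LINKS = {
    ("internet", "router"): "A",
    ("router", "switch"): "B",
    ("router", "128.2.1.1"): "C",
    ("switch", "128.1.1.1"): "D",
    ("switch", "128.1.1.2"): None,
    ("switch", "hub"): "F",
    ("hub", "128.1.1.3"): None,
    ("hub", "128.1.1.4"): None,
}
# Sensors that observe everything passing through a device.
NODE_SENSORS = {"switch": "E", "hub": "H"}


def path(src, dst):
    """Return the list of nodes on the shortest path (breadth-first search)."""
    prev = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            break
        for a, b in LINKS:
            if node in (a, b):
                nxt = b if node == a else a
                if nxt not in prev:
                    prev[nxt] = node
                    queue.append(nxt)
    if dst not in prev:
        raise ValueError(f"no route from {src} to {dst}")
    hops = []
    node = dst
    while node is not None:
        hops.append(node)
        node = prev[node]
    return hops[::-1]


def observers(src, dst):
    """Return the set of sensors that would see traffic between src and dst."""
    hops = path(src, dst)
    seen = set()
    for a, b in zip(hops, hops[1:]):
        sensor = LINKS.get((a, b)) or LINKS.get((b, a))
        if sensor:
            seen.add(sensor)
    for node in hops:
        if node in NODE_SENSORS:
            seen.add(NODE_SENSORS[node])
    return seen
```

Calling `observers("128.1.1.3", "128.1.1.1")` returns both E and H (along with F and D), which is the redundancy described above, while `observers("128.1.1.3", "128.1.1.4")` returns only H. A graph like this is a first guess; it ignores routing details that can change what a real sensor sees.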

Choosing Vantage

When instrumenting a network, determining vantage is a three-step process: acquiring a network map, determining the potential vantage points, and then determining the optimal coverage.

The first step involves acquiring a map of the network and how it's connected together, as well as a list of potential instrumentation points. Figure 1-1 is a simplified version of such a map.

The second step, determining the vantage of each point, involves identifying every potentially instrumentable location on the network and then determining what that location can see. This value can be expressed as a range of IP address/port combinations. Table 1-2 provides an example of such an inventory for Figure 1-1. A graph can be used to make a first guess at what vantage points will see, but a truly accurate model requires more in-depth information about the routing and networking hardware. For example, when dealing with routers it is possible to find points where the vantage is asymmetric (note that the traffic in Table 1-2 is all symmetric). Refer to "The Basics of Network Layering" for more information.

| Vantage point | Source IP range | Destination IP range |
|---|---|---|
| A | internet | 128.1.1.1–32, 128.2.1.1 |
| | 128.1.1.1–32, 128.2.1.1 | internet |
| B | 128.1.1.1–32 | 128.2.1.1, internet |
| | 128.2.1.1, internet | 128.1.1.1–32 |
| C | 128.2.1.1 | 128.1.1.1–32, internet |
| | 128.1.1.1–32, internet | 128.2.1.1 |
| D | 128.1.1.1 | 128.1.1.2–32, 128.2.1.1, internet |
| | 128.1.1.2–32, 128.2.1.1, internet | 128.1.1.1 |
| E | 128.1.1.1 | 128.1.1.2–32, 128.2.1.1, internet |
| | 128.1.1.2 | 128.1.1.1, 128.1.1.3–32, 128.2.1.1, internet |
| | 128.1.1.3–32 | 128.1.1.1–2, 128.2.1.1, internet |
| F | 128.1.1.3–32 | 128.1.1.1–2, 128.2.1.1, internet |
| | 128.1.1.1–32, 128.2.1.1, internet | 128.1.1.3–32 |
| G | 128.1.1.1–32, 128.2.1.1, internet | 128.1.1.2:tcp/80 |
| | 128.1.1.2:tcp/80 | 128.1.1.1–32, 128.2.1.1 |
| H | 128.1.1.3–32 | 128.1.1.1–32, 128.2.1.1, internet |
| | 128.1.1.1–32, 128.2.1.1, internet | 128.1.1.3–32 |

The final step is to pick the optimal vantage points shown by the worksheet. The goal is to choose a set of points that provide monitoring with minimal redundancy. For example, sensor E provides a superset of the data provided by sensor F, meaning that there is no reason to include both. Choosing vantage points almost always involves dealing with some redundancy, which can sometimes be limited by using filtering rules. For example, in order to instrument traffic between the hosts 128.1.1.3–32, point H must be instrumented, and that traffic will pop up again and again at points E, F, B, and A. If the sensors at those points are configured to not report traffic from 128.1.1.3–32, the redundancy problem is moot.
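One way to make this selection step concrete is to treat each vantage point's coverage as a set and look for supersets and a small covering set. The sketch below is an illustration, not the method prescribed here: it uses a simplified version of the worksheet in which the hub hosts are collapsed into a single `hub` endpoint, it omits G, and it uses a greedy set-cover heuristic that I have swapped in as a stand-in for "optimal" (greedy selection is not guaranteed to find the smallest set).

```python
from itertools import combinations

ENDPOINTS = ["internet", "128.2.1.1", "128.1.1.1", "128.1.1.2", "hub"]
LOWER = {"128.1.1.1", "128.1.1.2", "hub"}  # addresses beneath the switch


def flow(a, b):
    """An unordered pair of endpoints; flow('hub', 'hub') is intra-hub traffic."""
    return frozenset((a, b))


ALL_FLOWS = {flow(a, b) for a, b in combinations(ENDPOINTS, 2)} | {flow("hub", "hub")}


def touching(endpoint):
    return {f for f in ALL_FLOWS if endpoint in f}


# Simplified coverage per vantage point, following the prose description.
COVERAGE = {
    "A": touching("internet"),
    "B": {f for f in ALL_FLOWS if f & LOWER and f & {"internet", "128.2.1.1"}},
    "C": touching("128.2.1.1"),
    "D": touching("128.1.1.1"),
    "E": {f for f in ALL_FLOWS if f & LOWER and len(f) == 2},
    "F": {f for f in touching("hub") if len(f) == 2},
    "H": touching("hub"),
}


def subsumed(coverage):
    """Pairs (small, big) where big sees everything small sees, and more."""
    return {(s, b) for s in coverage for b in coverage
            if s != b and coverage[s] < coverage[b]}


def greedy_cover(coverage, wanted):
    """Repeatedly pick the sensor that adds the most uncovered flows."""
    remaining, chosen = set(wanted), []
    while remaining:
        best = max(sorted(coverage), key=lambda s: len(coverage[s] & remaining))
        if not coverage[best] & remaining:
            break  # nothing left can be covered
        chosen.append(best)
        remaining -= coverage[best]
    return chosen
```

With this model, `subsumed(COVERAGE)` includes `("F", "E")`, matching the observation that E is a superset of F, and `greedy_cover(COVERAGE, ALL_FLOWS)` selects E first and then adds points for the traffic E cannot see.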

Actions: What a Sensor Does with Data

A sensor's action describes how the sensor interacts with the data it collects. Depending on the domain, there are a number of discrete actions a sensor may take, each of which has different impacts on the validity of the output:

- Report

  A report sensor simply provides information on all phenomena that the sensor observes. Report sensors are simple and important for baselining. They are also useful for developing signatures and alerts for phenomena that control sensors haven't yet been configured to recognize. Report sensors include NetFlow collectors, tcpdump, and server logs.

- Event

  An event sensor differs from a report sensor in that it consumes multiple data sources to produce an event that summarizes some subset of that data. For example, a host-based intrusion detection system (IDS) might examine a memory image, find a malware signature in memory, and send an event indicating that its host was compromised by malware. At their most extreme, event sensors are black boxes that produce events in response to internal processes developed by experts. Event sensors include IDS and antivirus (AV) sensors.

- Control

  A control sensor, like an event sensor, consumes multiple data sources and makes a judgment about that data before reacting. Unlike an event sensor, a control sensor modifies or blocks traffic when it sends an event. Control sensors include intrusion prevention systems (IPSs), firewalls, antispam systems, and some antivirus systems.

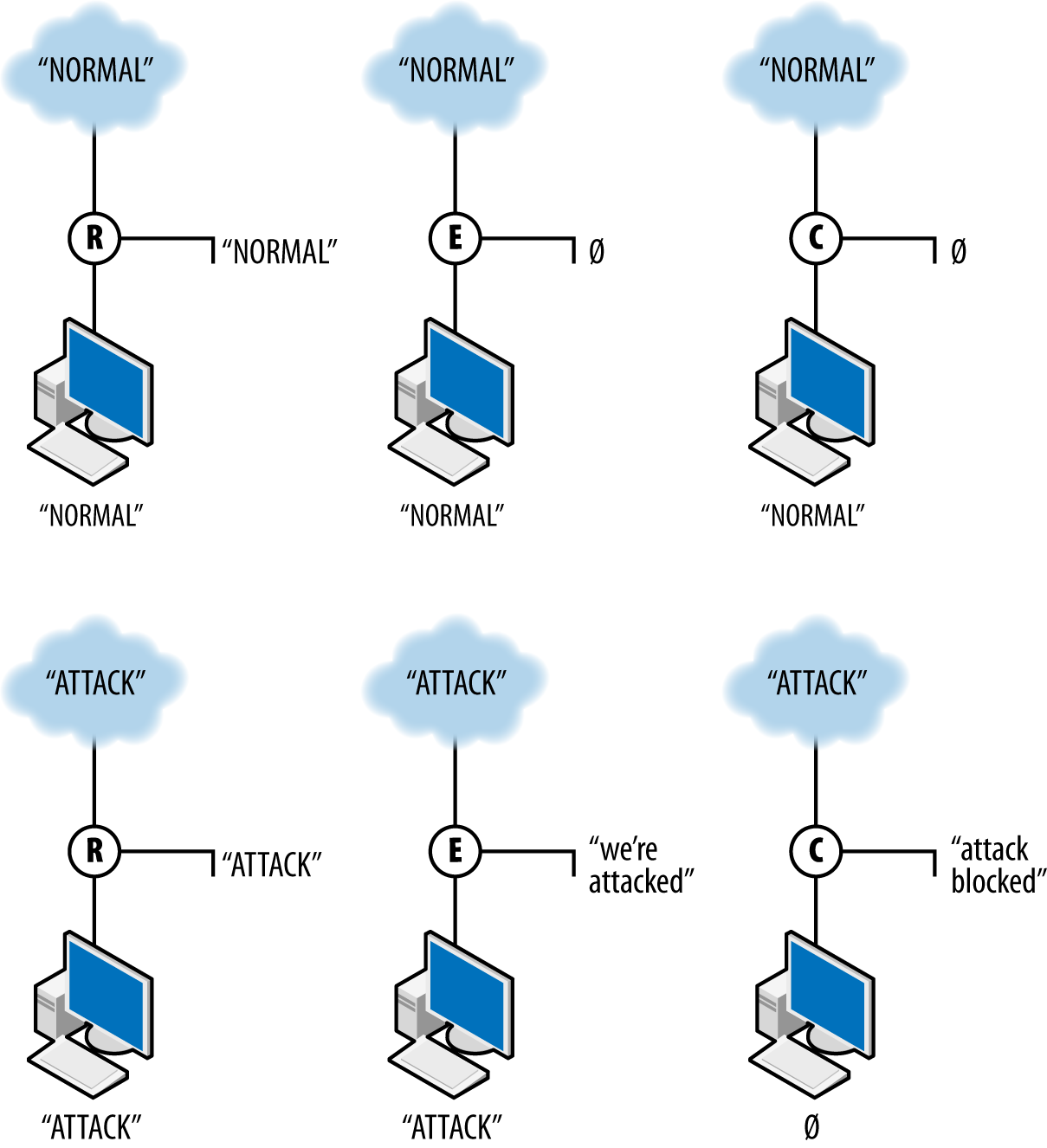

A sensor's action not only affects how the sensor reports data, but also how it interacts with the data it's observing. Control sensors can modify or block traffic. Figure 1-2 shows how sensors with these three different types of action interact with data. The figure shows the work of three sensors: R, a report sensor; E, an event sensor; and C, a control sensor. The event and control sensors are signature matching systems that react to the string ATTACK. Each sensor is placed between the internet and a single target.

R, the reporter, simply reports the traffic it observes. In this case, it reports both normal and attack traffic without affecting the traffic and effectively summarizes the data observed. E, the event sensor, does nothing in the presence of normal traffic but raises an event when attack traffic is observed. E does not stop the traffic; it just sends an event. C, the controller, sends an event when it sees attack traffic and does nothing to normal traffic. In addition, however, C blocks the aberrant traffic from reaching the target. If another sensor is further down the route from C, it will never see the traffic that C blocks.

Figure 1-2. Three different sensor actions
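The behavior of R, E, and C can be mimicked with three small functions. This is a toy sketch, assuming packets are plain strings and that a signature match is a simple substring test; each function returns what the sensor emits and what traffic continues on toward the target.

```python
SIGNATURE = "ATTACK"


def report(packets):
    """R: record everything observed; traffic passes through untouched."""
    return [f"seen: {p}" for p in packets], list(packets)


def event(packets):
    """E: raise an event on a signature match; traffic still passes through."""
    alerts = [f"alert: {p}" for p in packets if SIGNATURE in p]
    return alerts, list(packets)


def control(packets):
    """C: raise an event on a match and block the matching traffic."""
    alerts = [f"alert: {p}" for p in packets if SIGNATURE in p]
    forwarded = [p for p in packets if SIGNATURE not in p]
    return alerts, forwarded


traffic = ["GET /index.html", "ATTACK payload", "GET /about.html"]

# A report sensor placed behind the control sensor never sees blocked traffic.
_, after_control = control(traffic)
downstream_log, _ = report(after_control)
```

Here `downstream_log` contains two entries rather than three, which is the interference effect described above: the order in which sensors are placed changes what each of them can report.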

Validity and Action

Validity, as I'm going to discuss it, is a concept used in experimental design. The validity of an argument refers to the strength of that argument: how reasonably the premise of an argument leads to the conclusion. Valid arguments have a strong link; weakly valid arguments are easily challenged.

For security analysts, validity is a good jumping-off point for identifying the challenges your analysis will face (and you will be challenged). Are you sure the sensor's working? Is this a real threat? Why do we have to patch this mission-critical system? Security in most enterprises is a cost center, and you have to be able to justify the expenses you're about to impose. If you can't answer challenges internally, you won't be able to externally.

This section is a brief overview of validity. I will return to this topic throughout the book, identifying specific challenges within context. Initially, I want to establish a working vocabulary, starting with the four major categories used in research. I will introduce these briefly here, then explore them further in the subsections that follow. The four types of validity we will consider are:

- Internal

  The internal validity of an argument refers to cause and effect. If we describe an experiment as an "If I do A, then B happens" statement, then internal validity is concerned with whether or not A is related to B, and whether or not there are other things that might affect the relationship that I haven't addressed.

- External

  The external validity of an argument refers to the generalizability of an experiment's results to the outside world as a whole. An experiment has strong external validity if the data and the treatment reflect the outside world.

- Statistical

  The statistical validity of an argument refers to the use of proper statistical methodology and technique in interpreting the gathered data.

- Construct

  A construct is a formal system used to describe a behavior, something that can be tested or challenged. For example, if I want to establish that someone is transferring files across a network, I might use the volume of data transferred as a construct. Construct validity is concerned with whether the constructs are meaningful: if they are accurate, if they can be reproduced, if they can be challenged.

In experimental construction, validity is not proven, but challenged. It's incumbent on the researcher to demonstrate that validity has been addressed. This is true whether the researcher is a scientist conducting an experiment, or a security analyst explaining a block decision. Figuring out the challenges to validity is a problem of expertise: validity is a living problem, and different fields have identified different threats to validity since the development of the concept.

For example, sociologists have expanded on the category of external validity to further subdivide it into population and ecological validity. Population validity refers to the generalizability of a sampled population to the world as a whole, and ecological validity refers to the generalizability of the testing environment to reality. As security personnel, we must consider similar challenges to the validity of our data, imposed by the perversity of attackers.

Internal Validity

The internal validity of an argument refers to the cause/effect relationship in an experiment. An experiment has strong internal validity if it is reasonable to believe that the effect was caused by the experimenter's hypothesized cause. In the case of internal validity, the security analyst should particularly consider the following issues:

- Timing

  Timing, in this case, refers to the process of data collection and how it relates to the observed phenomenon. Correlating security and event data requires a clear understanding of how and when the data is collected. This is particularly problematic when comparing data such as NetFlow (where the timing of a flow is impacted by cache management issues for the flow collector), or sampled data such as system state. Addressing these issues of timing begins with record-keeping: not only understanding how the data is collected, but ensuring that timing information is coordinated and consistent across the entire system.

- Instrumentation

  Proper analysis requires validating that the data collection systems are collecting useful data (which is to say, data that can be meaningfully correlated with other data), and that they're collecting data at all. Regularly testing and auditing your collection systems is necessary to differentiate actual attacks from glitches in data collection.

- Selection

  Problems of selection refer to the impact that choosing the target of a test can have on the entire test. For security analysts, this involves questions of the mission of a system (is it for research? marketing?), the placement of the system on the network (before a DMZ, outward facing, inward facing?), and questions of mobility (desktop? laptop? embedded?).

- History

  Problems of history refer to events that affect an analysis while that analysis is taking place. For example, if an analyst is studying the impact of spam filtering when, at the same time, a major spam provider is taken down, then she has to consider whether her results are due to the filter or a global effect.

- Maturation

  Maturation refers to the long-term effects a test has on the test subject. In particular, when dealing with long-running analyses, the analyst has to consider the impact that dynamic allocation has on identity: if you are analyzing data on a DHCP network, you can expect IP addresses to change their relationship to assets when leases expire. Round robin DNS allocation or content distribution networks (CDNs) will result in different relationships between individual HTTP requests.
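The DHCP case under maturation lends itself to a short example. The sketch below assumes you have kept a history of leases (the `Lease` record, the addresses, and the MAC values are all hypothetical) and resolves an IP address to an asset as of the time an event was observed, rather than as of the time of analysis.

```python
from datetime import datetime, timezone
from typing import NamedTuple, Optional


class Lease(NamedTuple):
    ip: str
    mac: str          # stands in for the asset identity
    start: datetime   # inclusive, UTC
    end: datetime     # exclusive, UTC


def asset_at(leases, ip: str, when: datetime) -> Optional[str]:
    """Return the MAC that held `ip` at time `when`, or None if unknown."""
    for lease in leases:
        if lease.ip == ip and lease.start <= when < lease.end:
            return lease.mac
    return None


def utc(day: int, hour: int) -> datetime:
    """Helper for building example timestamps in January 2024, UTC."""
    return datetime(2024, 1, day, hour, tzinfo=timezone.utc)


# Hypothetical lease history: the same address moves between two assets.
LEASES = [
    Lease("192.0.2.10", "00:00:5e:00:53:01", utc(1, 8), utc(1, 16)),
    Lease("192.0.2.10", "00:00:5e:00:53:02", utc(1, 16), utc(2, 0)),
]
```

Two events from 192.0.2.10 a few hours apart can belong to different machines, so a long-running analysis that keys only on IP address risks attributing behavior to the wrong asset. Storing timestamps in a single time zone (UTC here) also addresses part of the timing concern above.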

External Validity

External validity is concerned with the ability to draw general conclusions from the results of an analysis. If a result has strong external validity, then the result is generalizable to broader classes than the sample group. For security analysis, external validity is particularly problematic because we lack a good understanding of general network behavior, a problem that has been ongoing for decades.

The basic mechanism for addressing external validity is to ensure that the data selected is representative of the target population as a whole, and that the treatments are consistent across the set (e.g., if you're running a study on students, you have to account for income, background, education, etc., and deliver the same test). However, until the science of network traffic advances to develop quality models for describing normal network behavior, determining whether models represent a realistic sample is infeasible. The best mechanism for accommodating this right now is to rely on additional corpora. There's a long tradition in computer science of collecting datasets for analyses; while they're not necessarily representative, they're better than nothing.

All of this is predicated on the assumption that you need a general result. If the results only need to apply to one network (e.g., the one you're watching), then external validity is much less problematic.

Construct Validity

When you conduct an analysis, you develop some formal structure to describe what you're looking for. That formal structure might be a survey ("Tell me on a scale of 1–10 how messed up your system is"), or it might be a measurement (bytes/second going to http://www.evilland.com). This formal structure, the construct, is how you evaluate your analysis.

Clear and well-defined constructs are critical for communicating the meaning of your results. While this might seem simple, it's amazing how quickly construct disagreements can turn into significant scientific or business decisions. For example, consider the question of "how big is a botnet?" A network security person might decide that a botnet consists of everything that communicates with a particular command and control (C&C) server. A forensics person may argue that a botnet is characterized by the same malware hash present on different machines. A law enforcement person would say it's all run by the same crime syndicate.
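To see how much the choice of construct matters, consider counting the same set of observations two ways. The records below are invented purely for illustration (the hosts, server labels, and hash labels are hypothetical); the point is only that the network construct and the forensic construct give different answers for the same data.

```python
# Hypothetical observations: which C&C server a host contacted (if any)
# and which malware hash was found on it.
OBSERVATIONS = [
    {"host": "10.0.0.1", "contacted": "c2-a", "malware_hash": "hash-1"},
    {"host": "10.0.0.2", "contacted": "c2-a", "malware_hash": "hash-2"},
    {"host": "10.0.0.3", "contacted": "c2-b", "malware_hash": "hash-1"},
    {"host": "10.0.0.4", "contacted": None, "malware_hash": "hash-1"},
]


def botnet_size(records, field, value):
    """Count distinct hosts whose `field` matches `value`."""
    return len({r["host"] for r in records if r[field] == value})


# Network construct: everything that talks to one C&C server.
size_by_c2 = botnet_size(OBSERVATIONS, "contacted", "c2-a")
# Forensic construct: everything carrying the same malware hash.
size_by_hash = botnet_size(OBSERVATIONS, "malware_hash", "hash-1")
```

Neither number is wrong; they answer different questions. Stating which construct produced a figure is what lets someone else reproduce or challenge it.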

Statistical Validity

Statistical conclusion validity is about using statistical tools correctly. This will be covered in depth in Chapter 11.

Attacker and Attack Issues

Finally, we have to consider the unique impact of security experimentation. Security experimentation and analysis has a distinct headache in that the subject of our analysis hates us and wants us to fail. To that end, we should consider challenges to the validity of the system that come from the attacker. These include issues of currency, resources and timing, and the detection system:

- Currency

  When evaluating a defensive system, you should be aware of whether the defense is a reasonable defense against current or foreseeable attacker strategies. There are an enormous number of vulnerabilities in the Common Vulnerabilities and Exposures list (CVE; see Chapter 7), but the majority of exploits in the wild draw from a very small pool of those vulnerabilities. By maintaining a solid awareness of the current threat environment (see Chapter 17), you can focus on the more germane strategies.

- Resources and timing

  Questions of resources and timing are focused on whether or not a detection system or test can be evaded if the attacker slows down, speeds up, or otherwise splits the attack among multiple hosts. For example, if your defensive system assumes that the attacker communicates with one outside address, what happens if the attacker rotates among a pool of addresses? If your defense assumes that the attacker transfers a file quickly, what happens if the attacker takes his time, over hours or maybe days?

- Detection

  Finally, questions about the detection system involve asking how an attacker can attack or manipulate your detection system itself. For example, if you are using a training set to calibrate a detector, have you accounted for attacks within the training set? If your system is relying on some kind of trust (IP address, passwords, credential files), what are the implications of that trust being compromised? Can the attacker launch a DDoS attack or otherwise overload your detection system, and what are the implications if he does?
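The resources-and-timing question can be tested directly against a detector. The sketch below assumes a deliberately naive detector that alerts when the bytes transferred within a sliding time window reach a threshold; the window, threshold, and transfer sizes are arbitrary values chosen for the example.

```python
def volume_alert(transfers, window, threshold):
    """Return True if any `window`-second span carries >= `threshold` bytes.

    `transfers` is a list of (timestamp_seconds, byte_count) tuples sorted
    by time; `window` must be positive.
    """
    start = 0   # index of the oldest transfer still inside the window
    total = 0   # bytes currently inside the window
    for t, size in transfers:
        total += size
        # Drop transfers that have fallen out of the window ending at t.
        while transfers[start][0] <= t - window:
            total -= transfers[start][1]
            start += 1
        if total >= threshold:
            return True
    return False


CHUNK = 10_000_000  # 10 MB per transfer, 100 MB in total either way

# Same file, two pacing strategies: one chunk per second vs. one per hour.
fast = [(i, CHUNK) for i in range(10)]
slow = [(i * 3600, CHUNK) for i in range(10)]

fast_detected = volume_alert(fast, window=60, threshold=50_000_000)
slow_detected = volume_alert(slow, window=60, threshold=50_000_000)
```

The fast transfer trips the detector and the slow one does not, even though the same 100 MB leaves the network. Running this kind of check against your own thresholds is one way to answer the "what happens if the attacker takes his time" challenge before someone else raises it.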

Further Reading

- Two generally excellent resources for computer security experimentation are the proceedings of the USENIX CSET (Computer Security Experimentation and Test) and LASER (Learning from Authoritative Security Experiment Results) workshops. Pointers to the CSET Workshop proceedings are at https://www.usenix.org/conferences/byname/135, while LASER proceedings are accessible at http://www.laser-workshop.org/workshops/.

- R. Lippmann et al., "Evaluating Intrusion Detection Systems: The 1998 DARPA Off-Line Intrusion Detection Evaluation," Proceedings of the 2000 DARPA Information Survivability Conference and Exposition, Hilton Head, SC, 2000.

- J. McHugh, "Testing Intrusion Detection Systems: A Critique of the 1998 and 1999 DARPA Intrusion Detection System Evaluations as Performed by Lincoln Laboratory," ACM Transactions on Information and System Security 3:4 (2000): 262–294.

- S. Axelsson, "The Base-Rate Fallacy and the Difficulty of Intrusion Detection," ACM Transactions on Information and System Security 3:3 (2000): 186–205.

- R. Fisher, "Mathematics of a Lady Tasting Tea," in The World of Mathematics, vol. 3, ed. J. Newman (New York, NY: Simon & Schuster, 1956).

- W. Shadish, T. Cook, and D. Campbell, Experimental and Quasi-Experimental Designs for Generalized Causal Inference (Boston, MA: Cengage Learning, 2002).

- R. Heuer, Jr., Psychology of Intelligence Analysis (Military Bookshop, 2010), available at http://bit.ly/1lY0nCR.
