Chapter 4. Data in the Service Domain

This chapter is concerned with the practical problem of collecting data moderated by a service, which is to say service domain data. At the conclusion of this chapter, you will be able to identify the sources for service domain data and understand how vantage impacts this data and the challenges to its validity.

What and Why

Practically speaking, service domain data consists of log data generated by various services operating on a host. Service domain data is characterized by the serviceâs moderation of thingsâwhere network data deals in packets which may or may not do anything, service data deals in events that are defined by the service. While data in the host domain consists of the hostâs current state, the service domain contains the events that caused a state change.

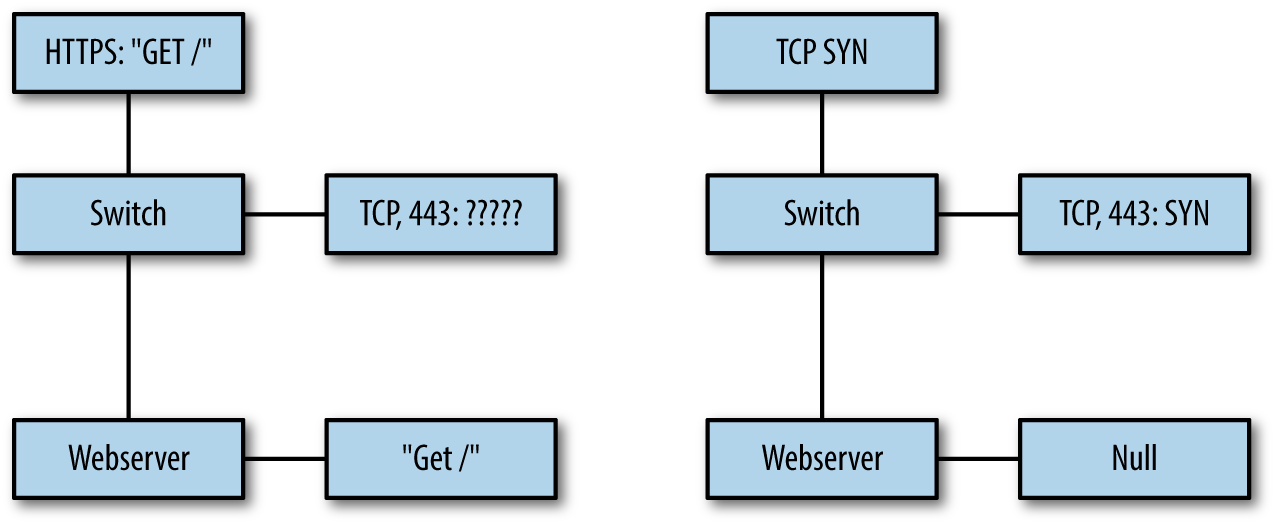

Service data is therefore distinguished from the other categories by the impact of a service moderating the information you receive. To understand how this moderation impacts use, consider the scenario shown in Figure 4-1. This figure shows how data in two domains is generated for two different phenomena.

Figure 4-1. Comparing service and network domain data

On the left is a simple GET request via HTTPS. In this example, data is collected from two points: a span port on a switch collecting raw pcap, and the logs from the web server. Since the HTTPS packets are encrypted, the only information the span port can provide is header-basedâports, protocol, and nothing else. Meanwhile, the web server can decrypt and process the data, recording that information.

While encryption is the most obvious challenge to the validity of inferences using packet capture, all network-based instrumentation faces challenges to its internal validity because TCP/IP design is built on the end-to-end principle. Systems designed around this principle push more functionality to the endpoints, while keeping the network itself relatively simple.

The practical impact of the end-to-end principle on network monitoring is that nothing seen on the network can be taken at face value, especially once you allow for perverse and creative packet crafting. For example, an attacker can send perfectly valid DNS request packets or a realistic-looking half of a TCP session, and from the network traffic alone, the observer cannot guarantee that this wasnât real traffic. In the network domain, the best the defender can do is guess something happened, while in the service domain, the defender can find out what happened.

Instrumenting at the service domain is not a panacea, however, as shown in the righthand side of the figure. In this case, a scanner is hitting the network with half-open scans, and the pcap sensor can see this information. But the web server has never received an HTTPS session from this, and doesnât record the information.

The net result of this is that no single collection point will address all of the potential phenomena you can face. Understanding the strength of each domain, and the data you can effectively collect in each case, is critical to determining what data to collect.

Logfiles as the Basis for Service Data

The foundational data you deal with when working with service information is service-specific logfiles. A service-specific logfile is a record of the transactions that the service engages in.

The concept of a transaction is critical here. A transaction is an interaction with the service as defined by the service. Example transactions may include fetching a web page (for a web server), sending out a DNS query (for a DNS server), or listing all the mail in a folder (for an IMAP server). Transactions are service-specific, and determining what transactions the service provides will require diving into its documentation and relevant standards.

Accessing and Manipulating Logfiles

Operating systems have dozens of processes generating log data at any time. In Unix systems, these logfiles are usually stored as text files in the /var/log directory. Example 4-1 shows this directory for macOS (the ellipses indicate where lines were removed for clarity).

Example 4-1. A /var/log directory from a macOS system

drwxr-xr-x 2 _uucp wheel 68 Jun 20 2012 uucp ... drwxr-xr-x 2 root wheel 68 Dec 9 2012 apache2 drwxr-xr-x 2 root wheel 68 Jan 7 01:47 ppp drwxr-xr-x 3 root wheel 102 Mar 12 12:43 performance ... -rw-r--r-- 1 root wheel 332 Jun 1 05:30 monthly.out -rw-r----- 1 root admin 6957 Jun 5 00:30 system.log.7.bz2 -rw-r----- 1 root admin 5959 Jun 6 00:30 system.log.6.bz2 -rw-r----- 1 root admin 5757 Jun 7 00:30 system.log.5.bz2 -rw-r----- 1 root admin 5059 Jun 8 00:30 system.log.4.bz2 -rw-r--r-- 1 root wheel 870 Jun 8 03:15 weekly.out -rw-r----- 1 root admin 10539 Jun 9 00:30 system.log.3.bz2 -rw-r----- 1 root admin 8476 Jun 10 00:30 system.log.2.bz2 -rw-r----- 1 root admin 5345 Jun 11 00:31 system.log.1.bz2 -rw-r--r-- 1 root wheel 131984 Jun 11 18:57 vnetlib drwxrwx--- 33 root admin 1122 Jun 12 00:23 DiagnosticMessages -rw-r----- 1 root admin 8546 Jun 12 00:30 system.log.0.bz2 -rw-r--r-- 1 root wheel 108840 Jun 12 03:15 daily.out -rw-r--r-- 1 root wheel 22289 Jun 12 04:51 fsck_hfs.log -rw-r----- 1 root admin 899464 Jun 12 20:11 install.log

Note several features of this directory. The system.log files are started daily at 00:30 and are differentiated numerically. There are a number of subdirectories for handling various services. Check the configuration of each individual service you want to acquire logfiles for, but itâs not uncommon for Unix systems to dump them to a subdirectory of /var/log by default.

Unix logfiles are almost always plain text. For example, a brief snippet of a system log reads as follows:

$ cat system.log

Jun 19 07:24:49 local-imac.home loginwindow[58]: in pam_sm_setcred(): Done

getpwnam()

Jun 19 07:24:49 local-imac.home loginwindow[58]: in pam_sm_setcred(): Done

setegid() & seteuid()

Jun 19 07:24:49 local-imac.home loginwindow[58]: in pam_sm_setcred():

pam_sm_setcred: krb5 user admin doesn't have a principal

Jun 19 07:24:49 local-imac.home loginwindow[58]: in pam_sm_setcred(): Done

cleanup3

The majority of Unix system logs are text messages created by filling in templates with specific event information. This kind of templated text is easy to read, but doesnât scale very well.

As of Vista, Windows has extensively revamped its logging structure. Windows logs are further subdivided into several classes, notably:

- Application log

-

Contains messages from individual applications. Note that services such as IIS may use auxiliary logs to contain additional information.

- Security log

-

Contains security events, such as logon attempts and audit policy changes.

- System log

-

Contains messages about system status, such as driver failures.

- Forwarded events log

-

Stores events from remote hosts.

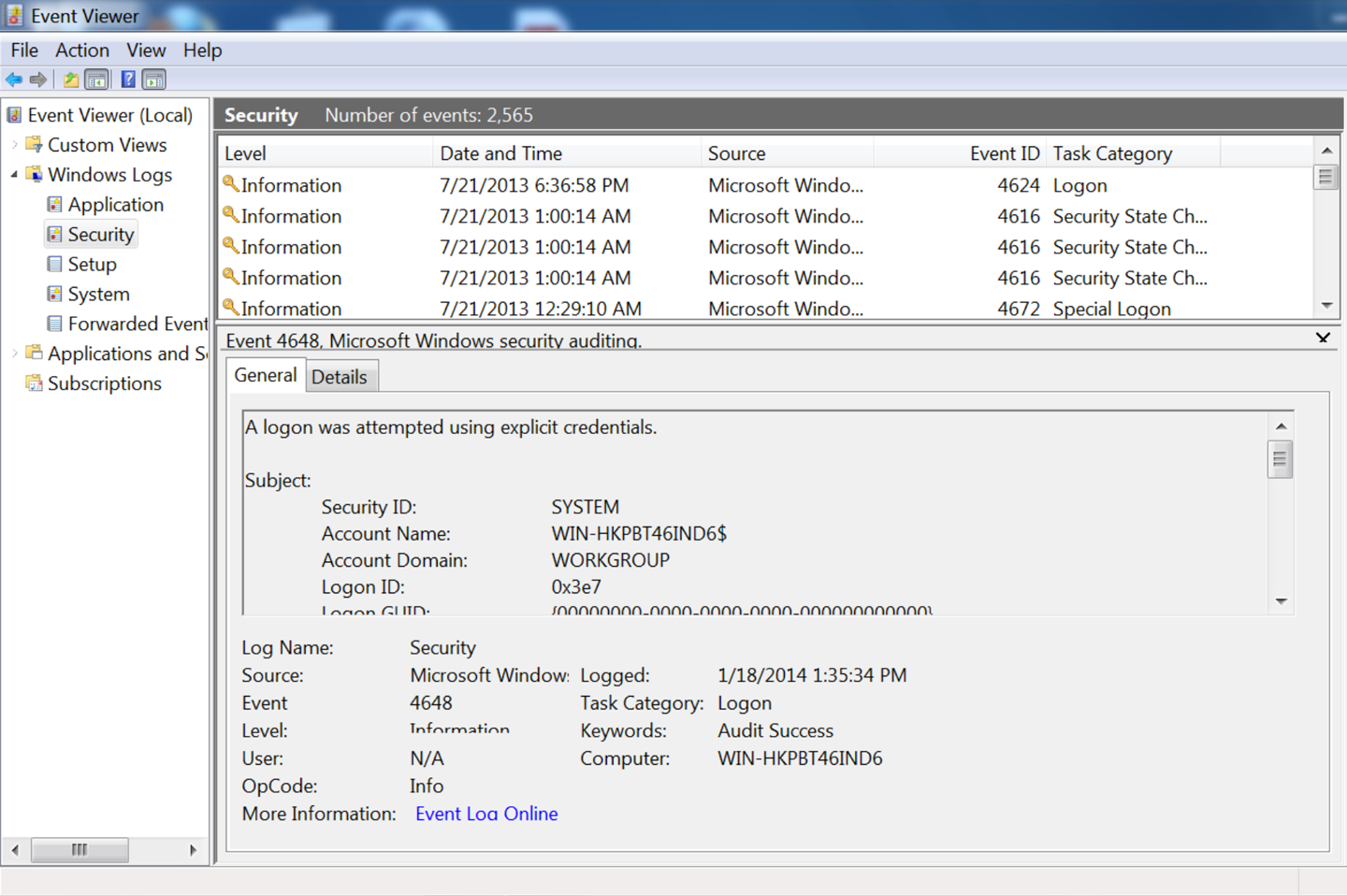

These logs are recorded in %SystemRoot%\System32\Config by default on most Windows installs; however, the more effective mechanism for accessing and reading the files is to use the Windows Event Viewer, as seen in Figure 4-2.

Note the use of the event ID in Figure 4-2; as with Unix systems, the Windows event messages are templated text, though Windows explicitly identifies the type of event using a unique numeric code. These messages are accessible from Microsoftâs website.

Figure 4-2. The Windows event log

On Linux, application logfiles are much less consistently located. As seen in the /var/log directory, administrative structure may be set up to record a logfile in a fixed location, but almost every application has the ability to move around logfiles as necessary. When working with a particular application, consult its documentation to find out where it drops logs.

The Contents of Logfiles

Logs are usually designed to provide debugging and troubleshooting information for an administrator on the host. Because of this, you will often find that host-based logs require both some degree of parsing and some degree of reorganization to make them satisfactory security logs. In this section, we discuss mechanisms for interpreting, troubleshooting, and converting host log data.

The Characteristics of a Good Log Message

Before discussing how to convert a log message, and before complaining about how bad most log messages are, it behooves us to describe what a good security message should look like. A good security log should be descriptive, it should be relatable to other data, and it should be complete.

A descriptive message is one that contains enough information for an analyst to identify all necessary accessible resources for the event described by the message. For example, if a host log records that a user attempted to illegally access a file, it should contain the userâs ID and the file accessed. A host log recording a change in group permissions for a user needs to record the user and the group. A log recording a failed remote login attempt should include the ID that attempted the login and the address that attempted the login.

For example, consider a log message about a failed login attempt on host 192.168.2.2, local name myhost. A nondescriptive message would look like this:

Mar 29 11:22:45.221 myhost sshd[213]: Failed login attempt

This message doesnât tell me anything about why the failure occurred and doesnât provide any information to differentiate between this and any other failed login attempts. I have no information on the target of the attack; is it against the admin account or some user? Analysts with only this information will have to reconstruct the attempt solely from timing data, and they canât even be sure what host was contacted because the name of the host is nondescriptive and there is no addressing information.

A more descriptive message would look like this:

Mar 29 11:22:45.221 myhost (192.168.2.2) sshd[213]: Failed

login attempt from host 192.168.3.1 as 'admin',

incorrect password

A good mental exercise for building a descriptive message is to fall back to the âfive Ws and one Hâ approach from investigation and journalism: who, what, when, where, why, and how. The nondescriptive log message provides the what (failed login) and when, and a partial answer to where (myhost). The descriptive log message provides the who (192.168.3.1 as admin), why, and how (incorrect password), and a better where.

A relatable message is one where the event is easily related to information from other sources. For host-based events this requires IP address and timing information, including whether an event was remote or physically local, the IP address and port of the event if it was remote, and the IP address and port of the host. Relatability is a particular headache when dealing with service logs, as these types of logs often introduce additional addressing schemes on top of IP. For example, hereâs an unrelatable mail log message:

Mar 29 11:22:45.221 myhost (192.168.2.2) myspamapp[213]:

Message <21394.283845@spam.com> title 'Herbal Remedies and Tiny Cars'

from 'spammer@spam.com' rejected due to unsolicited commercial content

The message has a lot of information, but thereâs no way to relate the message sent back to the particular IP address that sent the message. When looking at log messages, consider how you will relate this information to other sources, particularly network traffic. A more relatable message would be as follows:

Mar 29 11:22:45.221 myhost (192.168.2.2) myspamapp[213]:

Message <21394.283845@spam.com> title 'Herbal Remedies and Tiny Cars'

from 'spammer@spam.com' at SMTP host 192.168.3.1:2034 rejected due

to unsolicited commercial content

This example includes client port and addressing information, so we can now relate it to network traffic.

A complete log message is one that contains all the information about a particular event within that single log message. Completeness reduces the number of records an analyst has to search through and provides the analyst with a clear indicator that there is no further information to acquire from this process. Incomplete messages are usually a function of complicated process. For example, an antispam tool might run several different filters on a message, with each filter and the final decision being a separate log line:

Mar 29 11:22:45.221 myhost (192.168.2.2) myspamapp[213]:

Received Message <21394.283845@spam.com> title

'Herbal Remedies and Tiny Cars' from 'spammer@spam.com' at

SMTP host 192.168.3.1:2034

Mar 29 11:22:45.321 myhost (192.168.2.2) myspamapp[213]:

Message <21394.283845@spam.com> passed reputation filter

Mar 29 11:22:45.421 myhost (192.168.2.2) myspamapp[213]:

Message <21394.283845@spam.com> FAILED Bayesian filter

Mar 29 11:22:45.521 myhost (192.168.2.2) myspamapp[213]:

Message <21394.283845@spam.com> Dropped

With incomplete messages, you have to track state across multiple messages, each of which gives a snippet of information and which youâre going to have to group together to do any useful analysis. Consequently, I prefer the message to be aggregated at the start, like this:

Mar 29 11:22:45.521 myhost (192.168.2.2) myspamapp[213]:

Received Message <21394.283845@spam.com> title

'Herbal Remedies and Tiny Cars' from 'spammer@spam.com' at

SMTP host 192.168.3.1:2034 reputation=pass Bayesian=FAIL decision=DROP

Log messages are often only minimally modifiable directly. Instead, to build an effective message you might have to write some kind of logging shim. For example, if the log system outputs syslog messages, you can receive and parse those messages, convert them to a friendlier format, and then forward them on. When considering converting logfiles, in addition to the rules just mentioned, consider the following:

- Convert time to epoch time

-

Almost all record correlation involves identifying the same phenomenon from different sensors, meaning that you need to look for records that are close in time. Converting all time values to epoch time reduces parsing complexity, throws out the nightmare of time zones and daylight saving time, and ensures a consistent treatment for a consistent value.

- Make sure sensors are synchronized

-

A corollary to the first note, make sure that when sensors report the same event, they are reporting the same time. Trying to correct for this after the fact is terribly difficult, so make sure that all the sensors are coordinated, that they all report the same time, and that the clocks are corrected and resynchronized regularly.

- Include addressing information

-

Wherever possible, include the flow five-tuple (source IP, destination IP, source port, destination port, protocol). If some of the values can be inferred from the record (e.g., HTTP servers are running TCP), they can be dropped.

- Ensure that delimiters are understood by the logger

-

On several occasions, I have encountered helpful administrators reconfiguring HTTP logs to use pipes rather than spaces as delimiters. A worthy sentiment, except when the logging module doesnât know to escape the pipe when it occurs in text. If the logger can change its delimiter and understands that the change requires escaping the character, let the logger do it.

- Use error codes rather than text if possible

-

Text doesnât scale wellâitâs bulky, difficult to parse, and often repetitive. Logging systems that generate template messages can also include an error code of some kind as a compact representation of the message. Use this rather than text to save space.

Existing Logfiles and How to Manipulate Them

We can break logfiles into three major categories: columnar, templated, or stateful. Columnar logs record records in discrete columns that are distinguishable by delimiters or fixed text width. Templated logfiles look like English text, but the text comes from a set of document templates and is enumerable. Stateful logfiles use multiple text records to describe a single event.

Columnar data, such as NCSA HTTPdâs CLF format, records one message per event. This message is a summary of the entire event, and consists of a fixed set of fields in columnar format. Columnar logs are relatively easy to deal with as the fields are cleanly delineated and the format is rigid; every message has the same columns and the same information.

When dealing with columnar data, keep in mind the following:

-

Is the data delimited or fixed-width? If itâs fixed-width, are there fields that could conceivably exceed that width, and if so, are the results truncated or is the column expanded?

-

If the data is delimited, is the delimiter escaped when used in the fields? Customizable formats (such as HTTP logs) may use a default delimiter and automatically escape it; if you decide to use your own delimiter, it probably wonât be automatically escaped.

-

Is there a maximum record length? If there is a maximum record length, you may encounter truncated messages with missing fields.

ELF and CLF logfiles, discussed in the next chapter, are good examples of columnar formats.

Templated text messages record one message per event, but the events are recorded as unformatted English text. The messages are templated in the sense that they come from a fixed and enumerable set of templates. Where possible, itâs best to convert templated text messages into some kind of indexed numeric format. In the best case, this is at least partly done. For example, the Windows event log shown in Figure 4-2 has an event ID that describes the type of event and can be used to determine the other arguments that will be provided.

When dealing with templated text, keep in mind the following:

-

Can you get a complete list of the log messages? As an example, consider the Windows logfile in Figure 4-2. Each of these messages is text, but it has a unique integer ID for the message. Check the documentation for a list of all potential log messages.

Stateful Logfiles

In a stateful logfile, a single transaction is split across multiple messages.1 Stateful logfiles are extremely frustrating for operational analysisâyou spend time and cycles reconstructing the event that happened.

Again, some kind of shim is the usual solution here. In general, a shim for a stateful logfile will be some kind of cache holding partially formed records. As it receives signals that a new transaction is happening, it pushes a new record into the cache. When it receives a signal that the transaction completed, or it times out (a critical step), it removes the relevant records from the cache. In between, it will fill each record with the appropriate messages, and by doing so converts the logfiles into a stateless format. In practice, building these shims requires managing a number of different failure modes based around when the message terminates.

This is the kind of work where, to generate a quality logfile, youâre going to have to run the system through its paces and see whether there are hidden errors. To help prep for these errors, consider stateful logs in three categories: stateful with delimiters, stateful with identifiers, and interleaved.

If a logfile is stateful with identifiers, then the messages have individual ID numbers that link together the transaction. The following truncated IronPort example is a good example of a stateful message with IDs:

Thu Mar 24 16:58:31 2016 Info: Start MID 452 ICID 98

Thu Mar 24 16:58:31 2016 Info: MID 452 ICID 98 From: <alerts@fakecompany.org>

Thu Mar 24 16:58:31 2016 Info: MID 452 ICID 98 RID 0 To: \

<elvis.presley@graceland.int>

Thu Mar 24 16:58:31 2016 Info: MID 452 Subject 'Sent to elvis presley to test \

regexps in filtering'

Thu Mar 24 16:58:31 2016 Info: MID 452 ready 665 bytes from \

<alerts@fakecompany.org>

Thu Mar 24 16:58:31 2016 Info: MID 452 matched all recipients for per-recipient \

policy DEFAULT in the inbound table

Thu Mar 24 16:58:31 2016 Info: Delivery start DCID 151 MID 452 to RID [0]

Thu Mar 24 16:58:31 2016 Info: Message done DCID 151 MID 452 to RID [0] \

[('x-customheader', '192.168.1.1')]

Thu Mar 24 16:58:31 2016 Info: MID 452 RID [0] Response 'Ok'

Thu Mar 24 16:58:31 2016 Info: Message finished MID 452 done

Thu Mar 24 16:58:33 2016 Info: Start MID 453 ICID 98

When working with these types of logs, check for the following pitfalls:

-

Determine the format of the identifiers, and how they are assigned. If identifiers are assigned linearly, (e.g., ID 1, ID 2, ID 3), be prepared for gaps in ID numbers and have contingencies ready, such as a secondary alert.

-

Determine messages for creating and destroying the identifiers. Expect to have a timeout or other mechanism for dealing with messages that donât close.

-

When creating a shim, fill in all the fields you will report on with a default unreported value, then update those fields as you encounter their values in the logfile. This way you can ship the record even if you donât have all the fields, and it will be clear which fields were omitted.

A logfile is stateful with delimiters if it uses some kind of

discrete begin/end messages to indicate the beginning and ending of a

transaction, and the entire transaction is contained within those begin and end

messages. Example 4-2 shows a representative

format; as this example shows, each individual event is prefaced with

a timestamp, and then there are a variable number of fields. Also of

note is that there is no explicit delimiter for ending the messageâthe Request-Authenticator field may be a delimiter, but given the

differences in the fields shown in both entries, it canât be verified.

Example 4-2. An example of a delimited log: GNU RADIUS packets2

Fri Dec 15 18:00:24 2000

Acct-Session-Id = "2193976896017"

User-Name = "e2"

Acct-Status-Type = Start

Acct-Authentic = RADIUS

Service-Type = Framed-User

Framed-Protocol = PPP

Framed-IP-Address = 11.10.10.125

Calling-Station-Id = "+15678023561"

NAS-IP-Address = 11.10.10.11

NAS-Port-Id = 8

Acct-Delay-Time = 0

Timestamp = 976896024

Request-Authenticator = Unverified

Fri Dec 15 18:32:09 2000

Acct-Session-Id = "2193976896017"

User-Name = "e2"

Acct-Status-Type = Stop

Acct-Authentic = RADIUS

Acct-Output-Octets = 5382

Acct-Input-Octets = 7761

Service-Type = Framed-User

Framed-Protocol = PPP

Framed-IP-Address = 11.10.10.125

Acct-Session-Time = 1905

NAS-IP-Address = 11.10.10.11

NAS-Port-Id = 8

Acct-Delay-Time = 0

Timestamp = 976897929

Request-Authenticator = UnverifiedWhen working with log data, expect to run into common problems, in particular with how log messages are delimited. From experience, I recommend checking the following:

-

Verify that logs have both start and end delimiters. Itâs not uncommon for these log formats to explicitly start a log message, and then never end it.

-

Verify that a single transaction happens between delimiters. If at all possible, test this empirically by running a long transaction and, while that is running, running a simultaneous short transaction. If messages overlap, you have an interleaved log.

-

Determine the format of starting and ending messages, and be prepared to deal with incomplete messages.

The most problematic cases are stateful interleaved logfiles; these are logfiles where events take multiple lines, and multiple events happen simultaneously. In an interleaved logfile, you will see messages from event A, messages from event B, and then more messages from event A.

Stateful interleaved logfiles have serious internal validity problems and should, when encountered, be considered a secondary source of dataâacceptable for debugging, terrible for forensics. Your best solution when encountering this type of message is to keep it in the original format, since you canât trust that youâre seeing events in sequence

Further Reading

-

The OSSEC Log Samples page.

-

A. Chuvakin and K. Schmidt, Logging and Log Management: The Authoritative Guide to Dealing with Syslog, Audit Logs, Alerts, and other IT âNoiseâ (Rockland, MA: Syngress Publishing, 2012).

-

J. Turnbull, The Art of Monitoring, available at http://www.artofmonitoring.com.

1 In the previous edition of the book, I referred to stateful logfiles as annotative. Iâve switched in order to focus on the major source of trouble in dealing with these files: state.

2 From https://www.gnu.org/software/radius/manual/html_mono/radius.html#SEC182

Get Network Security Through Data Analysis, 2nd Edition now with the O’Reilly learning platform.

O’Reilly members experience books, live events, courses curated by job role, and more from O’Reilly and nearly 200 top publishers.