Three issues are driving the increasing use of parallel processing in database environments:

- The need for increased speed or performance

Database sizes are increasing, queries are becoming more complex—especially in data warehouse systems—and the database software must somehow cope with the increasing demands that result from this complexity.

- The need for scalability

This requirement goes hand-in-hand with performance. Databases often grow rapidly, and companies need a way to easily and cost-effectively scale their systems to match that growth.

- The need for high availability

High availability refers to the need to keep a database up and running with minimal or no downtime. With the increasing use of the Internet, companies need to accommodate users at all hours of the day and night.

Database sizes have been increasing steadily, and it’s now quite common to find data warehouses holding several hundred gigabytes of data. Some databases, referred to as Very Large Databases (VLDBs), even hold several terabytes of data. Complex queries are run on these data warehouses to gather business intelligence and to aid in decision making. Such queries require a lot of processing time to execute. By executing these queries in parallel, you can reduce the elapsed time while still providing the required processor time.

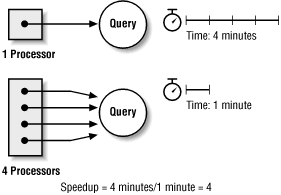

Speedup is defined as the ratio between the runtime with one processor and the runtime using multiple processors. It measures the performance improvement gained using multiple processors instead of a single processor and is calculated using the following formula:

$$\text{Speedup} = \frac{\text{Time}_1}{\text{Time}_m}$$

$\text{Time}_1$ is the time it takes to execute a task using only one processor, while $\text{Time}_m$ is the time it takes to execute that same task using $m$ processors.

Figure 1.1 shows a query that takes four minutes to complete using one processor, but that takes only one minute to complete using four processors.

Plugging the values from Figure 1.1 into the speedup formula yields the following results:

$$\text{Speedup} = \frac{4}{1} = 4.0$$

In this case, the speedup is 4. Multiplying the number of processors by 4 caused the query to finish in one-fourth the time. Unfortunately, such an ideal result is seldom achieved in real life.

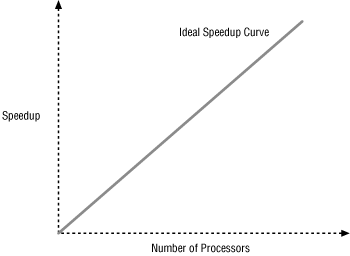

In an ideal world, the parallel processing speedup would track with the number of processors used for any given task. In other words, the ideal speedup curve is a 45-degree line like the one you see in Figure 1.2.

The ideal speedup curve is rarely reached because parallelism entails a certain amount of overhead. The inherent parallelism of the application also plays an important role in the amount of speedup you can achieve. Some tasks are easily divided into parts that can be processed in parallel. The join of two large tables, for example, can be done in parallel. Other tasks, however, cannot be divided. A nonpartitioned index scan is one such example. If an application has little or no inherent parallelism, then little or no speedup will be achieved.

Efficiency is the speedup divided by the number of processors used. In our example, the number of processors is 4, and the speedup achieved is also 4. The efficiency then is 100%, which represents an ideal case.
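The speedup and efficiency definitions above can be checked with a few lines of Python. This is only a sketch: the function names `speedup` and `efficiency` are chosen here for illustration, and the two-minute run in the second example is a hypothetical, less-than-ideal case that does not come from Figure 1.1.

```python
def speedup(time_one: float, time_m: float) -> float:
    """Ratio of the single-processor runtime to the m-processor runtime."""
    if time_one <= 0 or time_m <= 0:
        raise ValueError("runtimes must be positive")
    return time_one / time_m


def efficiency(time_one: float, time_m: float, processors: int) -> float:
    """Speedup divided by the number of processors used."""
    if processors < 1:
        raise ValueError("processors must be at least 1")
    return speedup(time_one, time_m) / processors


# Figure 1.1: four minutes on one processor, one minute on four processors.
print(speedup(4, 1))        # 4.0
print(efficiency(4, 1, 4))  # 1.0, that is, 100%

# Hypothetical non-ideal run: the same query takes two minutes on four processors.
print(speedup(4, 2))        # 2.0
print(efficiency(4, 2, 4))  # 0.5, that is, 50%
```

An efficiency below 1.0 means that part of the added processing capacity was lost to overhead or to work that could not be divided.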

Scalability is the ability to maintain performance levels as the workload increases by incrementally adding more system capacity (adding more processors and disks). On a single-processor system, it is very difficult to achieve scalability beyond a certain point. Parallel systems provide better scalability.

In many applications, the number of database users and the transaction volume are both likely to increase over time. The demand for added processing power to handle the increased load, without the loss of response time, can be met by using parallel systems. For example, to handle a higher load, an SMP system with four processors can be augmented to eight processors with additional memory and disk capacity.

In situations in which several thousand users are expected to use a database, the processing and memory requirements may be beyond the capacity of a single SMP system. In such a situation, you’ll want to consider using parallel database systems. These allow you to have several nodes, each with its own copy of the database server software and memory structures, working together on a single, shared database. Such parallel systems can be clusters or MPP systems. As the user population grows, the number of nodes in the cluster or MPP system may be increased as needed to handle the additional load.

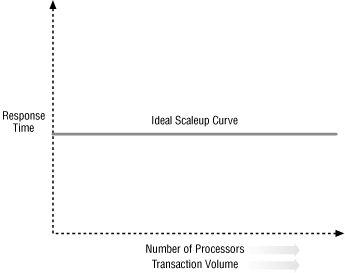

Scaleup is the ability of an application to maintain response time as the job size or the transaction volume increases, by adding processors and disks. The term scalability is often used in reference to scaleup.

In database applications, scaleup can be either batch or transactional. With batch scaleup, larger batch jobs can be supported without a loss of response time. With transaction scaleup, larger numbers of transactions can be supported without loss of response time. In both cases, response time is maintained by the addition of more processors. For example, a 4-processor system handling a workload of 400 transactions per minute can provide the same response time as a single-processor system handling a workload of 100 transactions per minute.

There is a key difference between scaleup and speedup: when calculating speedup, the problem size is kept fixed, whereas scaleup is calculated by increasing the problem size or transaction volume. Scaleup is measured in terms of how much the transaction volume can be increased by adding more processors while still maintaining a constant response time. Scaleup is calculated using the following formula:

$$\text{Scaleup} = \frac{\text{Volume}_m}{\text{Volume}_1}$$

$\text{Volume}_m$ is the transaction volume executed in a given amount of time using $m$ processors, while $\text{Volume}_1$ is the transaction volume executed in the same time using one processor. For our previous example:

$$\text{Scaleup} = \frac{400}{100} = 4$$

This scaleup of 4 is achieved with 4 processors. This is an example of ideal (linear) scaleup. Figure 1.3 shows a graph of an ideal transaction scaleup. Notice that the response time remains constant because the number of processors is increased along with the transaction volume.

The curve, or flat line, really, in Figure 1.3 represents an ideal. In reality, after a certain point, the response time increases for higher transaction volumes even if additional processors are added.
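The scaleup calculation can be sketched the same way. The helper names `scaleup` and `is_linear` are illustrative, and the 8-processor figure of 640 transactions per minute is a made-up value used only to show what a sub-linear result looks like.

```python
import math


def scaleup(volume_m: float, volume_one: float) -> float:
    """Transaction volume on m processors relative to one processor,
    both measured over the same time and at the same response time."""
    if volume_one <= 0:
        raise ValueError("single-processor volume must be positive")
    return volume_m / volume_one


def is_linear(volume_m: float, volume_one: float, processors: int) -> bool:
    """True when the scaleup matches the number of processors (the ideal case)."""
    return math.isclose(scaleup(volume_m, volume_one), processors)


# Example from the text: 400 transactions per minute on 4 processors
# versus 100 transactions per minute on 1 processor.
print(scaleup(400, 100))       # 4.0
print(is_linear(400, 100, 4))  # True

# Hypothetical: 8 processors sustain only 640 transactions per minute.
print(scaleup(640, 100))       # 6.4
print(is_linear(640, 100, 8))  # False
```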

Databases are used in mission-critical applications in organizations such as stock exchanges, banks, and airlines. Many database applications are expected to be available 24 hours a day, 7 days a week, all year round. The availability of such databases (along with other system components) is crucial to the success of these organizations. With an e-commerce application, for example, customers may log in any time of the day or night to request products and services. Database downtime causes loss of revenue and customer dissatisfaction. As the negative impact of database downtime has increased for many applications, high availability requirements have become an important factor in the design of database software.

Running parallel databases on a multinode parallel system is one way to provide high availability. Other high availability options include maintaining standby databases and replicated databases. With a parallel database, when one node goes down, it affects only the subset of users connected to the failed node; moreover, users of the failed node still can access the database after switching to one of the surviving nodes.

Economics is another driver toward parallel computing. It costs money to make processors faster. After a certain limit, increasing the processing power on a single CPU system becomes technically very difficult. Once that limit has been reached, SMP systems often provide better performance for the price. Likewise, when the scalability limit of SMP systems is reached, clusters or MPP systems may provide better price/performance ratios.

Database applications can exploit two types of parallelism in a parallel computing environment: inter-query parallelism and intra-query parallelism. While inter-query parallelism has been around for many years, database vendors recently have started to implement intra-query parallelism as well.

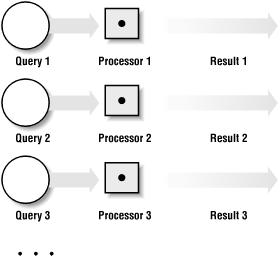

Inter-query parallelism is the ability to use multiple processors to execute several independent queries simultaneously. Figure 1.4 illustrates inter-query parallelism, showing how three independent queries can be performed simultaneously by three processors. Inter-query parallelism does not provide speedup, because each query is still executed by only one processor.

In online transaction processing (OLTP) applications, each query is independent and takes a relatively short time to execute. As the number of OLTP users increases, more queries are generated. Without inter-query parallelism, all queries will be performed by a single processor in a time-shared manner. This slows down response time. With inter-query parallelism, queries generated by OLTP users can be distributed over multiple processors. Since the queries are performed simultaneously by multiple processors, response time remains satisfactory.
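The dispatch pattern behind inter-query parallelism can be illustrated with a small Python sketch. Everything here is an assumption made for illustration: the in-memory `ORDERS` list stands in for a database table, the three query functions are hypothetical, and a thread pool stands in for separate processors. CPython threads do not give true CPU parallelism for this kind of work, so the sketch shows only how whole queries are handed to separate workers, not a real performance gain.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical in-memory "table" standing in for a database.
ORDERS = [
    {"id": 1, "customer": "acme", "amount": 120},
    {"id": 2, "customer": "zenith", "amount": 75},
    {"id": 3, "customer": "acme", "amount": 30},
]


def total_for_customer(customer):
    """Query 1: total order amount for one customer."""
    return sum(row["amount"] for row in ORDERS if row["customer"] == customer)


def count_orders_over(threshold):
    """Query 2: number of orders above a threshold."""
    return sum(1 for row in ORDERS if row["amount"] > threshold)


def largest_order_id():
    """Query 3: id of the order with the largest amount."""
    return max(ORDERS, key=lambda row: row["amount"])["id"]


def run_independent_queries(queries, workers=3):
    """Hand each whole query to its own worker; no query is split up."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(fn, *args) for fn, args in queries]
        # Collect results in submission order.
        return [future.result() for future in futures]


results = run_independent_queries([
    (total_for_customer, ("acme",)),
    (count_orders_over, (50,)),
    (largest_order_id, ()),
])
print(results)  # [150, 2, 1]
```

Each query still runs on a single worker from start to finish, which is why this form of parallelism improves throughput rather than the speed of any individual query.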

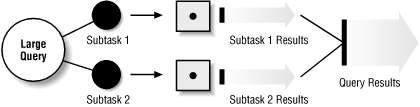

Intra-query parallelism is the ability to break a single query into subtasks and to execute those subtasks in parallel using a different processor for each. The result is a decrease in the overall elapsed time needed to execute a single query. Intra-query parallelism is very beneficial in decision support system (DSS) applications, which often have complex, long-running queries. As DSS systems have become more widely used, database vendors have been increasing their support for intra-query parallelism.

Figure 1.5 shows how one large query may be decomposed into two subtasks, which then are executed simultaneously using two processors. The results of the subtasks then are merged to generate a result for the original query. Intra-query parallelism is useful not only with queries, but also with other tasks such as data loading, index creation, and so on. Chapter 3 and Chapter 4 provide detailed information on Oracle's support of intra-query parallelism.
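The decompose, execute, and merge pattern of Figure 1.5 can be sketched in Python as follows. This is not how Oracle implements it. The names `partition`, `partial_sum`, and `parallel_total` are hypothetical, the "query" is a simple sum over a list of amounts, and a thread pool stands in for the two processors.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(rows, parts):
    """Split rows into `parts` contiguous chunks of near-equal size."""
    size, extra = divmod(len(rows), parts)
    chunks, start = [], 0
    for i in range(parts):
        # The first `extra` chunks each take one additional row.
        end = start + size + (1 if i < extra else 0)
        chunks.append(rows[start:end])
        start = end
    return chunks


def partial_sum(chunk):
    """Subtask: aggregate one partition of the data."""
    return sum(chunk)


def parallel_total(rows, workers=2):
    """Decompose one aggregate query into subtasks, run them, merge the results."""
    chunks = partition(rows, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(partial_sum, chunks))
    # Merge step: combine the partial results into the final answer.
    return sum(partials)


amounts = list(range(1, 101))
print(parallel_total(amounts))             # 5050
print(parallel_total(amounts, workers=4))  # 5050
```

The merge step is cheap here because sums combine trivially. Operations such as sorts or joins need a more involved merge, which is one source of the overhead that keeps real speedup below the ideal.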
