Chapter 1. What Problems Are You Solving and Are You Ready?

Modern businesses have to adapt to a changing infrastructure landscape to achieve their growth goals. Part of that transition has been the enterprise shift toward tools such as containers and Kubernetes. These new tools, while offering many benefits, come with a high degree of uncertainty too. The pressure to use new technology to stay competitive constantly vies with other needs of the business, such as operational reliability. A new deployment system and infrastructure has to solve more problems than it introduces.

How can an enterprise harmonize Kubernetes adoption with the need to protect itself, to preserve existing value, and to ensure compliance? In this piece, we’ll discuss what you need to prepare for adopting Kubernetes in your business.

Is the Business Ready?

Why would you be interested in using Kubernetes in the first place? The constructs Kubernetes provides allow us to move faster, deploy more software, and quickly roll back changes in the event of a buggy build. This workflow is beneficial to developers because it can free them from laborious infrastructure tasks and allow them to focus on shipping more code.

Some folks on your team might need some convincing before jumping in to Kubernetes with both feet. After all, there’s a whirlwind of buzzwords and hype out there, and even for experts, it’s hard to keep up. When articulating the value of adopting Kubernetes, especially in the enterprise, you need to meet your audience where their needs are. That includes addressing topics such as security, safe migration, and organizational challenges for rolling out Kubernetes.

Questions Kubernetes opens up include:

- Who is going to handle what responsibilities in the migration and day-to-day operations?
- What about teams with special hardware or security needs?
- How and where should we deploy Kubernetes?

All of these questions and their counterparts are the source of a lot of angst. That’s what makes clearly articulating the business value of a container orchestration tool like Kubernetes vital. You’ll need to be prepared to address a lot of objections.

In addition to addressing objections, you also want to proactively demonstrate what’s great about Kubernetes. Kubernetes can enable teams to deploy incredibly rapidly compared to how teams managed their code rollouts in the past. Not only can it allow for that rapid rollout, but it has the primitives to make that scalable and address concerns about monitoring and availability.

Instead of spending time fretting over configuration of the infrastructure, your team can define their applications using code and track them in version control, so everyone can easily follow what’s going on. Kubernetes then serves as the beating heart of your deployment infrastructure, absorbing those definitions and handling many of the scheduling hassles your engineering team would otherwise be ensnared with. Painting a vivid picture of life in greener pastures will go a long way towards successfully making a case.
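To make "define their applications using code" concrete, here is a minimal sketch that builds a Kubernetes Deployment manifest as a Python dictionary and serializes it to JSON, which can be committed to version control like any other file. The application name `hello-web` and the image reference are hypothetical placeholders, and the helper `build_deployment` is an illustration rather than part of any Kubernetes tooling.

```python
import json


def build_deployment(name: str, image: str, replicas: int = 2) -> dict:
    """Return a Kubernetes Deployment manifest as a plain dictionary."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


# Hypothetical application name and image, for illustration only.
manifest = build_deployment(
    "hello-web", "registry.example.com/hello-web:1.0.0", replicas=3
)
manifest_json = json.dumps(manifest, indent=2, sort_keys=True)
print(manifest_json)
```

Saved to a file and reviewed like any other change, a definition along these lines can then be handed to the cluster with `kubectl apply -f`, which accepts JSON as well as YAML.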

Finally, once your organization is in alignment about the benefits of adopting Kubernetes, you’ll want to manage expectations clearly. If the business is under the impression that there’ll be an easy lift-and-shift from existing code running in virtual machines (VMs) to Kubernetes resulting in instant benefit, there will be a lot of disappointment. While Kubernetes is often presented as a panacea for a variety of problems, the truth is that your managers and engineers need to be prepared to do a lot of refactoring of existing apps to get them working properly on Kubernetes. If a migration is handled improperly, it can have catastrophic results for the business.

Are the People Ready?

Your company might also need to be prepared to restructure some of the organization for maximum effectiveness while adopting Kubernetes. Organizations with a traditional structure often have a hierarchical system in which a few key architects have veto power. Such an organization might also put policies, design principles, and formal processes in place that everyone has to follow. This kind of rigidity can make it difficult for an individual or project to make a change. Businesses can begin to operate more effectively as they adopt Kubernetes if they move in small steps from a strict and hierarchical model to a more egalitarian one with a strong culture of ownership.

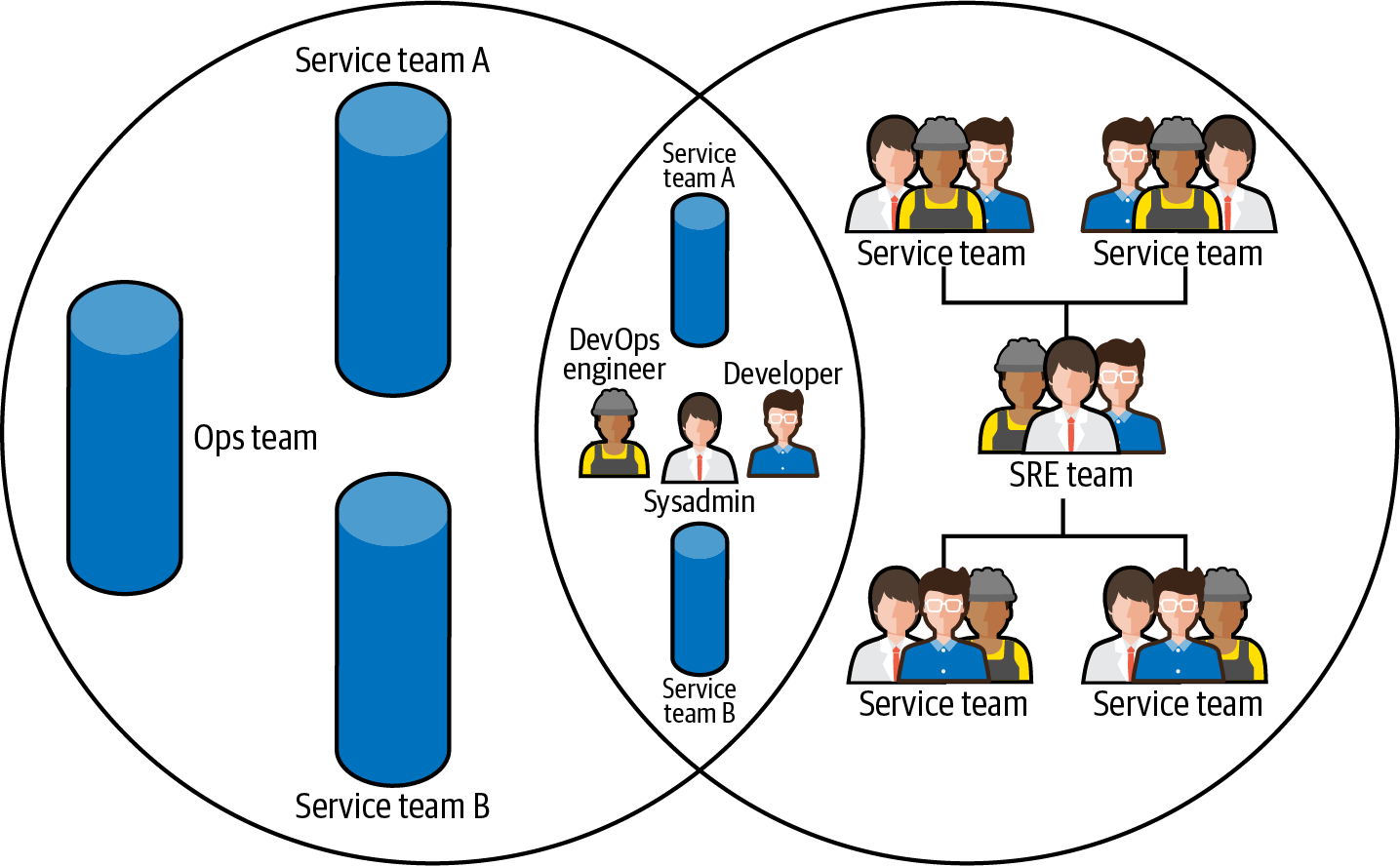

One way to help promote such a change is by developing a site reliability engineering (SRE) team. Instead of working on one specific service or subsystem, the site reliability team in your organization can focus on developing solutions that benefit everyone and improve application reliability across the board. The development of a site reliability engineering department can enable your development teams to breathe easier when it comes to production deployments as they know someone has their back when it comes to monitoring, infrastructure deployment, and Kubernetes best practices.

As you can see in Figure 1-1, teams can start small by spinning off operators or having bridge members that help them adopt cloud native practices without having to refactor the business to an edgy SRE model right away. These folks can be responsible for deploying software around or on top of Kubernetes.

What type of software will your SRE or pseudo-SRE team be deploying? One important addition is the introduction of a core control plane as a consolidated “single pane of glass.” Instead of each team rolling their own solutions, a common platform will serve as the interface point for the underlying infrastructure. While forcing standardization can cause slowdowns initially, it’s building equity by freeing the team to ship more code down the line. The SRE team can also be responsible for deployments of shared components for systems such as monitoring and tracing.

Figure 1-1. Enterprises and siloed organizations can learn from site reliability engineering practices slowly, as operators and developers from previously disparate departments work together on Kubernetes-related projects.

Once teams adapt to the addition of an SRE team and deployment standardization, they will be able to deploy more frequently and with less fear, and get better at bridging the gaps between the development and operations wings. Enabling a team to make smaller, more concise calls about what they need is the key to scaling operations teams more easily. Standardizing on a common underlying deployment platform (i.e., Kubernetes) as well as common tooling for interacting with it is key for success.

Are Your Systems Ready?

As we’ll discuss in more detail in Chapter 2, preparing for an effective Kubernetes rollout on the systems side requires a lot of careful thought and planning to ensure it is done correctly. Getting too lax about security, for instance, could come back to haunt you.

For example, you could take special care in configuring your ingress and networking, which we will discuss in Chapter 2. You can also meticulously review code changes, audit what’s happening within your Kubernetes cluster, and run a scanning system for your container images to catch vulnerabilities in your dependencies quickly.

You can also look at existing workflows within your company to see where you can start making changes in the quest to adopt Kubernetes, and establish whether your business is even ready for such a jump in the first place. Are you already automating common operational tasks, or are they still tedious and manual? Are you using Git or other version control systems? Are you taking advantage of existing virtualization or cloud systems? If you aren’t already taking these baby steps in the direction of cloud native, you will be in for a huge culture shock if you try to adopt Kubernetes. Take some small steps in that direction first if your systems aren’t already headed that way.

Modern Applications Initiative

To successfully adopt Kubernetes, you must prepare the applications that will run within it to follow the way of cloud native. Kubernetes is a solution that can make your infrastructure sing when everything is done its way, but might make everything wail with horrible cries when it’s not. Often, net new apps are the best fit to run on it, as they don’t have to unlearn the old ways of the world they were originally coded for.

Kubernetes won’t magically be able to take a traditional application, such as a legacy monolith depending on a huge SQL database, and transform it into a fast modern app. To get ready for your applications to run on Kubernetes, you’ll have to perform a lot of prep work. While vendors might promise that lift-and-shift from an old model can be relatively straightforward, this couldn’t be farther from the truth. Adopting Kubernetes requires adopting a whole new mindset. Everything from secrets management, to dependency installation, to networking will be different from what you might be used to when working with legacy systems. Of course, that can also be a good thing—many of you are probably fed up with the old way of doing things and looking for change.

Being able to use modern cloud systems and tools built on top of Kubernetes will enable engineers to be more productive. In a slow-moving environment, access to certain legacy data systems might be impractical or impossible. Adapting to the new landscape with heavy lifting done by cloud, container platforms, and trusted virtual control planes in the data center will help companies to speed up.

We’ll discuss more on architecture and considerations for cloud native applications in Chapter 4.

Cloud Native Applications Are About “How”

Many folks have probably heard the term “cloud native” bandied about. It’s easy to get an incomplete picture of what it means, and you might be surprised to find out that it doesn’t just encompass the public cloud, but private clouds too. It’s a philosophy and system of design that can be leveraged to deploy applications that can fundamentally handle resiliency, scale, and several other concerns naturally. Adopting cloud native practices can help your team learn, grow, and deliver high-quality software.

To best benefit from what cloud native applications have to offer, you have to be continually asking yourself “how.” After all, it’s not magic behind the scenes with tools like Kubernetes, but a system that will do exactly what you tell it to do like any other computer. So it helps to understand how it will be able to deliver on its promises. For instance, how will you scale out apps when needed? This requires an understanding of not only Kubernetes concepts, but also how you will deliver the underlying hardware and resources to allow those apps breathing room. After all, the Kubernetes control plane will simply not schedule your apps if no node can satisfy their resource requests.
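The point about resources can be illustrated with a deliberately simplified model. The sketch below is not the real Kubernetes scheduler, which weighs many more factors; it only shows the basic idea that a pod whose CPU and memory requests fit on no node stays pending until more capacity is provided. The `Node` and `PodRequest` classes, node names, and all numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Node:
    name: str
    free_cpu_millicores: int
    free_memory_mib: int


@dataclass
class PodRequest:
    name: str
    cpu_millicores: int
    memory_mib: int


def pick_node(pod: PodRequest, nodes: List[Node]) -> Optional[Node]:
    """Return the first node with room for the pod, or None if nothing fits."""
    for node in nodes:
        if (node.free_cpu_millicores >= pod.cpu_millicores
                and node.free_memory_mib >= pod.memory_mib):
            return node
    return None


def schedule(pods: List[PodRequest],
             nodes: List[Node]) -> Tuple[Dict[str, str], List[str]]:
    """Place pods in order; return (pod -> node placements, pending pod names)."""
    placements: Dict[str, str] = {}
    pending: List[str] = []
    for pod in pods:
        node = pick_node(pod, nodes)
        if node is None:
            # No node has enough free capacity, so the pod stays unscheduled.
            pending.append(pod.name)
            continue
        # Reserve the requested resources on the chosen node.
        node.free_cpu_millicores -= pod.cpu_millicores
        node.free_memory_mib -= pod.memory_mib
        placements[pod.name] = node.name
    return placements, pending


# Hypothetical cluster capacity and workloads, for illustration only.
nodes = [Node("node-a", 2000, 4096), Node("node-b", 1000, 2048)]
pods = [
    PodRequest("api", 1500, 2048),
    PodRequest("worker", 1000, 1024),
    PodRequest("batch", 1000, 4096),
]
placements, pending = schedule(pods, nodes)
print(placements)  # {'api': 'node-a', 'worker': 'node-b'}
print(pending)     # ['batch']
```

In this toy run, `batch` is left pending because no node has enough free CPU and memory at the same time, which is the situation that planning for hardware and capacity is meant to prevent.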

What’s another “how” for cloud native apps? One thing you can’t take for granted is resiliency, i.e., how will you run Kubernetes with high availability, a stable networking configuration, and with the right trade-offs between flexibility and security? We’ll discuss more on those topics specifically in Chapter 4.

Likewise, how will you properly adapt your current applications to conform to the Kubernetes way? Kubernetes enforces a new model for application architecture, which requires extra thinking about how you will define your workloads. The blessing of highly automated and flexible systems like Kubernetes comes with responsibility, too.

Identifying the Low-Hanging Fruit of Apps

As you look for ways that your business can move forward with Kubernetes and cloud native, picking the right areas to start in will help you succeed. For instance, running stateful workloads such as databases on Kubernetes comes with a lot of obstacles and risks, so avoid those workloads as early picks. There’s also no need to jump in the deep end of the pool by migrating tier 1 workloads, a project that would be risky for teams without much container experience under their belt.

Instead, for your first choices to deploy on Kubernetes, it’s best to choose applications that are fairly simple, so they can be adapted with relative ease. There’s probably a lot of low-hanging fruit on tier 3, 4, 5, and beyond that your team can pluck. In terms of identifying good candidates, there are some common qualities that will help you assess them. If they are stateless, that’s a big win, because managing state on Kubernetes requires extra planning and caution.

The less pressure there is on the applications in their final environment, the better. Development use cases can excel, like allowing software engineers to run their forks on a test cluster to accelerate their workflow. Production apps that are only lightly relied upon (e.g., an internal best-effort app where no one needs to get paged if it goes down for a while) also can make for a great fit—they offer some of the excitement of deploying something to production, with low risks and an opportunity to experiment and learn what works well and what doesn’t.
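The selection criteria above can be turned into a rough first-pass filter over an application inventory. This is only a sketch under stated assumptions: the `App` fields, the tier threshold of 3, and the sample applications are all hypothetical, and a real assessment would weigh more factors than these three.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class App:
    name: str
    tier: int            # 1 = most critical; larger numbers = less critical
    stateless: bool      # True if the app keeps no state of its own
    pages_on_call: bool  # True if an outage would page someone


def is_early_candidate(app: App) -> bool:
    """Stateless, lower-tier apps that nobody is paged for are good first picks."""
    return app.stateless and app.tier >= 3 and not app.pages_on_call


# Hypothetical inventory, for illustration only.
apps: List[App] = [
    App("billing-db", tier=1, stateless=False, pages_on_call=True),
    App("internal-wiki", tier=4, stateless=True, pages_on_call=False),
    App("checkout-api", tier=1, stateless=True, pages_on_call=True),
    App("dev-preview", tier=5, stateless=True, pages_on_call=False),
]

candidates = [app.name for app in apps if is_early_candidate(app)]
print(candidates)  # ['internal-wiki', 'dev-preview']
```

A filter like this is only a starting point for discussion; the resulting shortlist still needs review by the teams that own each application.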

All in all, it’s best to walk before you run—and before you know it, you’ll be sprinting around the cloud native landscape like a pro.
