Chapter 4. Understanding Cause and Effect

When problems occur we often assume that all we need to do is reason about the options, select one, and then execute it. This assumes that causality is determinable and therefore that we have a valid means of eliminating options. We believe that if we take a certain action we can predict the resulting effect, or that given an effect we can determine the cause.

However, in IT systems this is not always the case. As practitioners we must acknowledge that there are systems in which we can determine cause and effect and systems in which we cannot.

In Chapter 8 we will see an example that illustrates how little value identifying a cause provides, compared with the many lessons and action items that surface when we move our focus away from cause and deeper into the phases of the incident lifecycle.

Cynefin

The Cynefin (pronounced kun-EV-in) complexity framework is one way to describe the true nature of a system, as well as appropriate approaches to managing systems. This framework first differentiates between ordered and unordered systems. If knowledge exists from previous experience and can be leveraged, we categorize the system as “ordered.” If the problem has not been experienced before, we treat it as an “unordered system.”

Cynefin, a Welsh word for habitat, has been popularized within the DevOps community as a vehicle for helping us to analyze behavior and decide how to act or make sense of the nature of complex systems. Broad system categories and examples include:

- Ordered: Complicated systems, such as a vehicle. Complicated systems can be broken down and understood given enough time and effort. The system is “knowable.”
- Unordered: Complex systems, such as traffic on a busy highway. Complex systems are unpredictable, emergent, and only understood in retrospect. The system is “unknowable.”

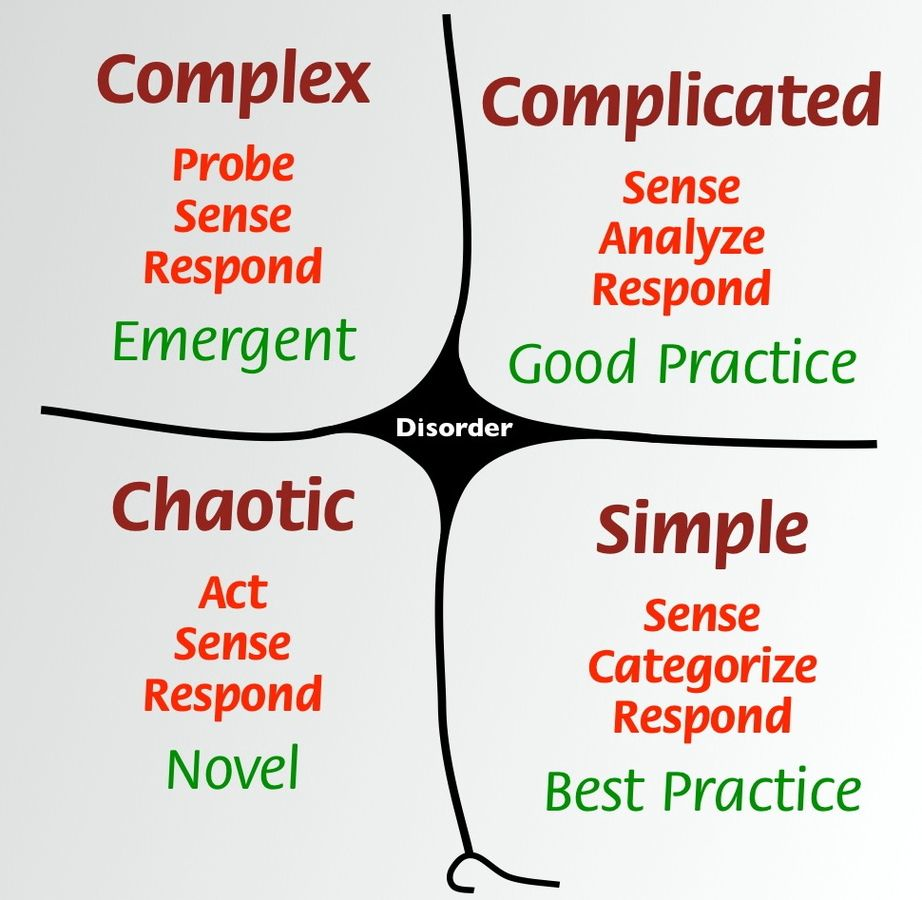

As Figure 4-1 shows, we can then go one step further and break down the categorization of systems into five distinct domains that provide a bit more description and insight into the appropriate behaviors and methods of interaction.

We can conceptualize the domains as follows:

- Simple: known knowns
- Complicated: known unknowns
- Complex: unknown unknowns
- Chaotic: unknowable unknowns
- Disorder: yet to be determined

Ordered systems are highly constrained; their behavior is predictable, and the relationship between cause and effect is either obvious from experience or can be determined by analysis. If the cause is obvious then we have a simple system, and if it is not obvious but can be determined through analysis we say it is a complicated system, as cause and effect (or determination of the cause) are separated by time.1

Figure 4-1. Cynefin offers five contexts or “domains” of decision-making: simple, complicated, complex, chaotic, and a center of disorder. (Source: Snowden, https://commons.wikimedia.org/w/index.php?curid=53504988)

Tip

IT systems and the work required to operate and support them fall into the complicated, complex, and at times chaotic domains, but rarely into the simple one.

Within the realm of unordered systems, we find that some of these systems are in fact stable and that their constraints and behavior evolve along with system changes. Causality of problems can only be determined in hindsight, and analysis will provide no path toward helping us to predict (and prevent) the state or behavior of the system. This is known as a “complex” system and is a subcategory of unordered systems.

Other aspects of the Cynefin complexity framework help us to see the emergent behavior of complex systems and how known “best practices” apply only to simple systems. They also show that when dealing with a chaotic domain, your best first step is to “act,” then to “sense,” and finally to “respond” in order to find the correct path out of the chaotic realm.
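The relationship between cause and effect described above can be sketched as a small lookup. This is a minimal illustration rather than part of the framework itself: the `Causality` labels, the `classify` function, and the `is_ordered` helper are hypothetical names, and treating the chaotic domain as having no discernible cause-and-effect relationship is an assumption drawn from common descriptions of Cynefin, not from this chapter.

```python
from enum import Enum


class Causality(Enum):
    """How well cause and effect can be related (hypothetical labels)."""
    OBVIOUS = "obvious from experience"
    ANALYZABLE = "determinable through analysis"
    HINDSIGHT_ONLY = "determinable only in hindsight"
    NOT_DISCERNIBLE = "no discernible relationship"  # assumption for chaotic
    UNDETERMINED = "not yet assessed"


class Domain(Enum):
    """The five domains, with the shorthand used in the list above."""
    SIMPLE = "known knowns"
    COMPLICATED = "known unknowns"
    COMPLEX = "unknown unknowns"
    CHAOTIC = "unknowable unknowns"
    DISORDER = "yet to be determined"


# Mapping from what we can say about causality to a domain.
_DOMAIN_BY_CAUSALITY = {
    Causality.OBVIOUS: Domain.SIMPLE,
    Causality.ANALYZABLE: Domain.COMPLICATED,
    Causality.HINDSIGHT_ONLY: Domain.COMPLEX,
    Causality.NOT_DISCERNIBLE: Domain.CHAOTIC,
    Causality.UNDETERMINED: Domain.DISORDER,
}


def classify(causality: Causality) -> Domain:
    """Return the domain that matches the given cause-and-effect relationship."""
    return _DOMAIN_BY_CAUSALITY[causality]


def is_ordered(domain: Domain) -> bool:
    """Simple and complicated are ordered; disorder is not yet categorized."""
    return domain in (Domain.SIMPLE, Domain.COMPLICATED)
```

For example, `classify(Causality.HINDSIGHT_ONLY)` yields `Domain.COMPLEX`, and `is_ordered` returns `False` for it, matching the description of complex systems as a subcategory of unordered systems.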

From Sense-Making to Explanation

Humans, being an inquisitive species, seek explanations for the events that unfold in our lives. It’s unnerving not to know what made a system fail. Many will want to begin investing time and energy into implementing countermeasures and enhancements, or perhaps behavioral adjustments will be deemed necessary to avoid the same kind of trouble. Just don’t fall victim to your own or others’ tendencies toward bias or to seeking out punishment, shaming, retribution, or blaming.

Cause is something we construct, not find.

Sidney Dekker, The Field Guide to Understanding Human Error

By acknowledging the complexity of systems and their state we accept that it can be difficult, if not impossible, to distinguish between mechanical and human contributions. And if we look hard enough, we will construct a cause for the problem. How we construct that cause depends on the accident model we apply.2

Evaluation Models

Choosing a model helps us to determine what to look for as we seek to understand cause and effect and, as part of our systems thinking approach, suggests ways to explain the relationships between the many factors contributing to the problem. Three kinds of model are regularly applied to post-incident reviews:3

- Sequence of events model: Suggests that one event causes another, which causes another, and so on, much like a set of dominoes where a single event kicks off a series of events leading to the failure.
- Epidemiological model: Sees problems in the system as latent. Hardware and software as well as managerial and procedural issues hide throughout.
- Systemic model: Takes the stance that problems within systems come from the “normal” behavior and that the state of the system itself is a “systemic by-product of people and organizations trying to pursue success with imperfect knowledge and under the pressure of other resource constraints (scarcity, competition, time limits).”4

The last of these, the systemic model, is represented by today’s post-incident review process and is what most modern IT organizations apply in their efforts to “learn from failure.”

In order to understand systems in their normal state, we examine details by constructing a timeline of events. This positions us to ask questions about how things happened, which in turn helps to reduce the likelihood of poor decisions or an individual’s explicit actions being presumed to have caused the problems. Asking and understanding how someone came to a decision helps to avoid hindsight bias or a skewed perspective on whether responders are competent to remediate issues.
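As a rough sketch of what constructing a timeline might look like in practice, the snippet below orders recorded events chronologically and turns each one into a “how” question rather than a judgment. Everything here is illustrative: the `TimelineEvent` fields, the function names, and the sample events are hypothetical and not taken from any particular tool or incident.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class TimelineEvent:
    at: datetime   # when the event was observed
    actor: str     # person or system involved
    action: str    # what was done, phrased as a verb clause


def build_timeline(events):
    """Return events in chronological order without mutating the input."""
    return sorted(events, key=lambda e: e.at)


def how_questions(timeline):
    """Phrase each event as a question about how it came about."""
    return [
        f"{e.at:%H:%M} How did {e.actor} come to {e.action}?"
        for e in timeline
    ]


# Hypothetical events, recorded out of order as they were recalled.
events = [
    TimelineEvent(datetime(2024, 1, 1, 10, 12),
                  "the on-call engineer", "restart the service"),
    TimelineEvent(datetime(2024, 1, 1, 10, 5),
                  "the monitoring system", "raise a latency alert"),
]

timeline = build_timeline(events)
for question in how_questions(timeline):
    print(question)
```

Framing each entry as a question keeps the discussion on how a decision made sense at the time, which is the point of the timeline exercise described above.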

In modern IT systems, failures will occur. However, when we take a systemic approach to analyzing what took place, we can accept that in reality, the system performed as expected. Nothing truly went wrong, in the sense that what happened was part of the “normal” operation of the system. This method allows us to discard linear cause/effect relationships and remain “closer to the real complexity behind system success and failure.”5

Note

You’ve likely picked up on a common thread regarding “sequence of events” modeling (a.k.a. root cause analysis) in this book. These exercises and subsequent reports are still prevalent throughout the IT world. In many cases, organizations do submit that there was more than one cause to a system failure and set out to identify the multitude of factors that played a role. Out of habit, teams often label these factors “root causes” of the incident. They correctly point out that many things conspired at once to “cause” the problem, yet the label unintentionally sends the misleading signal that one single element was the sole true reason for the failure. Most importantly, by following this path we miss an opportunity to learn.

Within modern IT systems, the accumulation and growth of interconnected components, each with their own ways of interacting with other parts of the system, means that distilling problems down to a single entity is theoretically impossible.

Post-accident attribution [of the] accident to a ‘root cause’ is fundamentally wrong. Because overt failure requires multiple faults, there is no isolated “cause” of an accident. There are multiple contributors to accidents. Each of these is [necessarily] insufficient in itself to create an accident. Only jointly are these causes sufficient to create an accident.

Richard I. Cook, MD, How Complex Systems Fail
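The logic of that quotation can be illustrated with a toy model. This is only a sketch under a strong simplifying assumption: that an accident occurs exactly when every contributor in a fixed set is present. The contributor names and the `accident_occurs` function are hypothetical.

```python
# Hypothetical contributors; in this toy model all must be present at once.
CONTRIBUTORS = frozenset({
    "expired certificate",
    "disabled alert",
    "untested failover",
})


def accident_occurs(present):
    """True only when every contributor is present (toy assumption)."""
    return CONTRIBUTORS <= set(present)


# Each contributor on its own is insufficient to create the accident.
alone = {c: accident_occurs({c}) for c in CONTRIBUTORS}

# Only jointly are the contributors sufficient.
jointly = accident_occurs(CONTRIBUTORS)

# Under this model, removing any single contributor also averts the accident,
# so no one of them can be singled out as "the" root cause.
averted_by_removing = {
    c: not accident_occurs(CONTRIBUTORS - {c}) for c in CONTRIBUTORS
}
```

Here every value in `alone` is `False`, `jointly` is `True`, and every value in `averted_by_removing` is `True`. Real systems are far messier than a fixed set of conditions, but the sketch shows why naming one contributor as the cause misrepresents how the failure came about.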

Instead of obsessing about prediction and prevention of system failures, we can begin to create a future state that helps us to be ready and prepared for the reality of working with “unknown unknowns.”

This will begin to make sense in Chapter 8, when we see how improvements to the detection, response, and remediation phases of an incident are examined more deeply during the post-incident review process, further improving our readiness by enabling us to be prepared, informed, and rehearsed to quickly respond to failures as they occur.

To be clear, monitoring and trend analysis are important. Monitoring and logging of various metrics is critical to maintaining visibility into a system’s health and operability. Unless teams discover and address problems early, they may not have the capacity and data to handle problems at the rate at which they could happen. Balancing proactive monitoring with readiness for inevitable failure provides the best holistic approach.

The case study of CSG International at the end of Chapter 9 serves as an illustration of this point. They weren’t thinking holistically; a post-incident review provided them with a clearer vision of the work actually performed, leading to the opening up of much-needed additional resources.

This is not to say RCAs can’t provide value. They can play a role in improving the overall health of the system.

Corrective actions can effectively implement fixes to the system and restore service. However, when we identify something within our systems that has broken and we instinctively act to identify and rectify the cause, we miss out on the real value of analyzing incidents retrospectively. We miss the opportunity to learn when we focus solely on returning our systems to their state prior to failure rather than investing effort in making them even better than before.

We waste a chance to create adaptable, flexible, or even perhaps self-healing systems. We miss the opportunity to spot ways in which the system can start to evolve with the service we are providing. As demand for our services and resources changes in unexpected ways, we must design our systems to be able to respond in unison.

Think back to the huddled group discussing the events surrounding the botched migration I was involved in. Had we performed an analysis focused solely on identifying cause—who was to blame and what needed to be fixed—we would have left a lot of amazing information on the table.

Instead, we discovered a huge amount about the system that can now be categorized as “known” by a greater number of people in the organization, nudging our sense of certainty about the “normal” behavior and state of our system further in our favor.
