Chapter 4. Iteration and Flow Control

Having covered the essential aspects of ES6 in Chapter 2, and symbols in Chapter 3, we're now in great shape to understand promises, iterators, and generators. Promises offer a different way of attacking asynchronous code flows. Iterators dictate how an object is iterated, producing the sequence of values that gets iterated over. Generators can be used to write code that looks sequential but works asynchronously, in the background, as we'll learn toward the end of the chapter.

To kick off the chapter, we'll start by discussing promises. Promises have existed in user-land for a long time, but they're a native part of the language starting in ES6.

4.1 Promises

Promises can be vaguely defined as "a proxy for a value that will eventually become available." While we can write synchronous code inside promises, promise-based code flows in a strictly asynchronous manner. Promises can make asynchronous flows easier to reason about, once you've mastered promises, that is.

4.1.1 Getting Started with Promises

As an example, let's take a look at the new fetch API for the browser. This API is a simplification of XMLHttpRequest. It aims to be super simple to use for the most basic use cases: making a GET request against an HTTP resource. It provides an extensive API that caters to advanced use cases, but that's not our focus for now. In its most basic incarnation, you can make a GET /items HTTP request using a piece of code like the following.

```javascript
fetch('/items')
```

The fetch('/items') statement doesn't seem all that exciting. It makes a "fire and forget" GET request against /items, meaning you ignore the response and whether the request succeeded. The fetch method returns a Promise. You can chain a callback using the .then method on that promise, and that callback will be executed once the /items resource finishes loading, receiving a response object parameter.

```javascript
fetch('/items').then(response => {
  // do something
})
```

The following bit of code displays the promise-based API with which fetch is actually implemented in browsers. Calls to fetch return a Promise object. Much like with events, you can bind as many reactions as you'd like, using the .then and .catch methods.

```javascript
const p = fetch('/items')
p.then(res => {
  // handle response
})
p.catch(err => {
  // handle error
})
```

Reactions passed to .then can be used to handle the fulfillment of a promise, which is accompanied by a fulfillment value; and reactions passed to .catch are executed with a rejection reason that can be used when handling rejections. You can also register a reaction to rejections in the second argument passed to .then. The previous piece of code could also be expressed as the following.

```javascript
const p = fetch('/items')
p.then(
  res => {
    // handle response
  },
  err => {
    // handle error
  }
)
```

Another alternative is to omit the fulfillment reaction in .then(fulfillment, rejection), just as you can omit the rejection reaction when calling .then. Using .then(null, rejection) is equivalent to .catch(rejection), as shown in the following snippet of code.

```javascript
const p = fetch('/items')
p.then(res => {
  // handle response
})
p.then(null, err => {
  // handle error
})
```
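To observe the equivalence without a network request, the following sketch uses Promise.reject as a stand-in for a failed fetch call (an assumption made purely for illustration). Per the specification, .catch(onRejected) simply calls .then(undefined, onRejected), so both reactions receive the same rejection reason.

```javascript
// A rejected promise stands in for a failed fetch('/items') call
const failure = Promise.reject(new Error('boom'))

// Both forms register a rejection reaction on the same promise
const viaCatch = failure.catch(err => `catch: ${err.message}`)
const viaThen = failure.then(null, err => `then: ${err.message}`)

const results = Promise.all([viaCatch, viaThen])
results.then(values => console.log(values))
// logs ['catch: boom', 'then: boom']
```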

When it comes to promises, chaining is a major source of confusion. In an event-based API, chaining is made possible by having the .on method attach the event listener and then returning the event emitter itself. Promises are different. The .then and .catch methods return a new promise every time. That's important because chaining can have wildly different results depending on where you append a .then or a .catch call.
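A quick way to convince yourself that every call returns a new promise is to compare the promises by identity. In this sketch the variable names are arbitrary; note how .catch on a fulfilled promise simply passes the fulfillment value through.

```javascript
// Every .then and .catch call returns a brand new promise
const base = Promise.resolve(1)
const doubled = base.then(x => x * 2)
const tripled = base.then(x => x * 3)
const handled = base.catch(() => 0)

console.log(doubled === base, tripled === doubled, handled === base)
// logs: false false false

Promise.all([doubled, tripled, handled]).then(values => console.log(values))
// logs [2, 3, 1]: .catch passes the fulfillment value through untouched
```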

A promise is created by passing the Promise constructor a resolver that decides how and when the promise is settled, by calling either a resolve function that will settle the promise in fulfillment or a reject function that'd settle the promise as a rejection. Until the promise is settled by calling either function, it'll be in a pending state and any reactions attached to it won't be executed. The following snippet of code creates a promise from scratch where we'll wait for a second before randomly settling the promise with a fulfillment or rejection result.

```javascript
new Promise(function (resolve, reject) {
  setTimeout(function () {
    if (Math.random() > 0.5) {
      resolve('random success')
    } else {
      reject(new Error('random failure'))
    }
  }, 1000)
})
```
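The pending state becomes observable if resolve is captured outside the resolver. Storing resolve in an outer variable is only a demonstration device here, and the names settle and log are arbitrary: the sketch shows that a reaction attached while the promise is pending doesn't run until after the promise settles and the current synchronous code finishes.

```javascript
// Capture resolve so the promise can be settled from outside the resolver
let settle
const pending = new Promise(resolve => { settle = resolve })
const log = []

pending.then(value => log.push(value)) // registered now, runs only after settling
log.push('still pending')

settle('settled')
// Reactions run asynchronously, in registration order
pending.then(() => console.log(log))
// logs ['still pending', 'settled']
```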

Promises can also be created using Promise.resolve and Promise.reject. These methods create promises that will immediately settle with a fulfillment value and a rejection reason, respectively.

```javascript
Promise
  .resolve({ result: 123 })
  .then(data => console.log(data.result))
// <- 123
```

When a promise p is fulfilled, reactions registered with p.then are executed. When p is rejected, reactions registered with p.catch are executed. Those reactions can, in turn, result in three different situations depending on whether they return a value, return a promise or thenable, or throw an error. Thenables are promise-like objects (objects with a then method) that can be cast into a Promise using Promise.resolve, as we'll see in Section 4.1.3: Creating a Promise from Scratch.

A reaction may return a value, which would cause the promise returned by .then to become fulfilled with that value. In this sense, promises can be chained to transform the fulfillment value of the previous promise over and over, as shown in the following snippet of code.

```javascript
Promise
  .resolve(2)
  .then(x => x * 7)
  .then(x => x - 3)
  .then(x => console.log(x))
// <- 11
```

A reaction may return a promise. In contrast with the previous piece of code, the promise returned by the first .then call in the following snippet will be blocked until the one returned by its reaction settles, which takes two seconds because of the setTimeout call.

```javascript
Promise
  .resolve(2)
  .then(x => new Promise(function (resolve) {
    setTimeout(() => resolve(x * 1000), x * 1000)
  }))
  .then(x => console.log(x))
// <- 2000
```

A reaction may also throw an error, which would cause the promise returned by .then to become rejected and thus follow the .catch branch, using said error as the rejection reason. The following example shows how we attach a fulfillment reaction to the fetch operation. Once the fetch is fulfilled the reaction will throw an error and cause the rejection reaction attached to the promise returned by .then to be executed.

```javascript
const p = fetch('/items')
  .then(res => { throw new Error('unexpectedly') })
  .catch(err => console.error(err))
```
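The three cases can be compared side by side without fetch. The outcome helper below is hypothetical, written only for this sketch: it attaches both reactions and reports how the promise returned by each .then call ended up settling.

```javascript
// Hypothetical helper: turns a promise's outcome into a plain object
function outcome(promise) {
  return promise.then(
    value => ({ status: 'fulfilled', value }),
    reason => ({ status: 'rejected', reason: reason.message })
  )
}

const start = Promise.resolve(2)

const results = Promise.all([
  outcome(start.then(x => x * 7)),                       // returns a value
  outcome(start.then(x => Promise.resolve(x * 1000))),   // returns a promise
  outcome(start.then(() => { throw new Error('boom') })) // throws an error
])

results.then(list => console.log(list))
// logs three objects: fulfilled 14, fulfilled 2000, rejected 'boom'
```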

Let's take a step back and pace ourselves, walking over more examples in each particular use case.

4.1.2 Promise Continuation and Chaining

In the previous section we established that you can chain any number of .then calls, each returning its own new promise, but how exactly does this work? What is a good mental model of promises, and what happens when an error is raised?

When an error happens in a promise resolver, you can catch that error using p.catch as shown next.

```javascript
new Promise((resolve, reject) => reject(new Error('oops')))
  .catch(err => console.error(err))
```

A promise will settle as a rejection when the resolver calls reject, but also if an exception is thrown inside the resolver, as demonstrated by the next snippet.

```javascript
new Promise((resolve, reject) => { throw new Error('oops') })
  .catch(err => console.error(err))
```

Errors that occur while executing a fulfillment or rejection reaction behave in the same way: they result in a promise being rejected, the one returned by the .then or .catch call that was passed the reaction where the error originated. It's easier to explain this with code, such as the following piece.

```javascript
Promise
  .resolve(2)
  .then(x => { throw new Error('failed') })
  .catch(err => console.error(err))
```

It might be easier to decompose that series of chained method calls into variables, as shown next. The following piece of code might help you visualize the fact that, if you attached the .catch reaction to p1, you wouldn't be able to catch the error originating in the .then reaction. While p1 is fulfilled, p2 (a different promise than p1, resulting from calling p1.then) is rejected due to the error being thrown. That error could be caught, instead, if we attached the rejection reaction to p2.

```javascript
const p1 = Promise.resolve(2)
const p2 = p1.then(x => { throw new Error('failed') })
const p3 = p2.catch(err => console.error(err))
```
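Recording which rejection reactions actually run makes the branch structure visible. In this sketch the calls array is an illustrative addition: a .catch attached to the fulfilled p1 never fires, while the one attached to p2 does.

```javascript
const calls = []
const p1 = Promise.resolve(2)
const p2 = p1.then(x => { throw new Error('failed') })

// p1 is fulfilled, so this rejection reaction never runs
p1.catch(err => calls.push('p1.catch'))
// p2 is the promise that was rejected, so this one does
const p3 = p2.catch(err => calls.push(`p2.catch: ${err.message}`))

p3.then(() => console.log(calls))
// logs ['p2.catch: failed']
```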

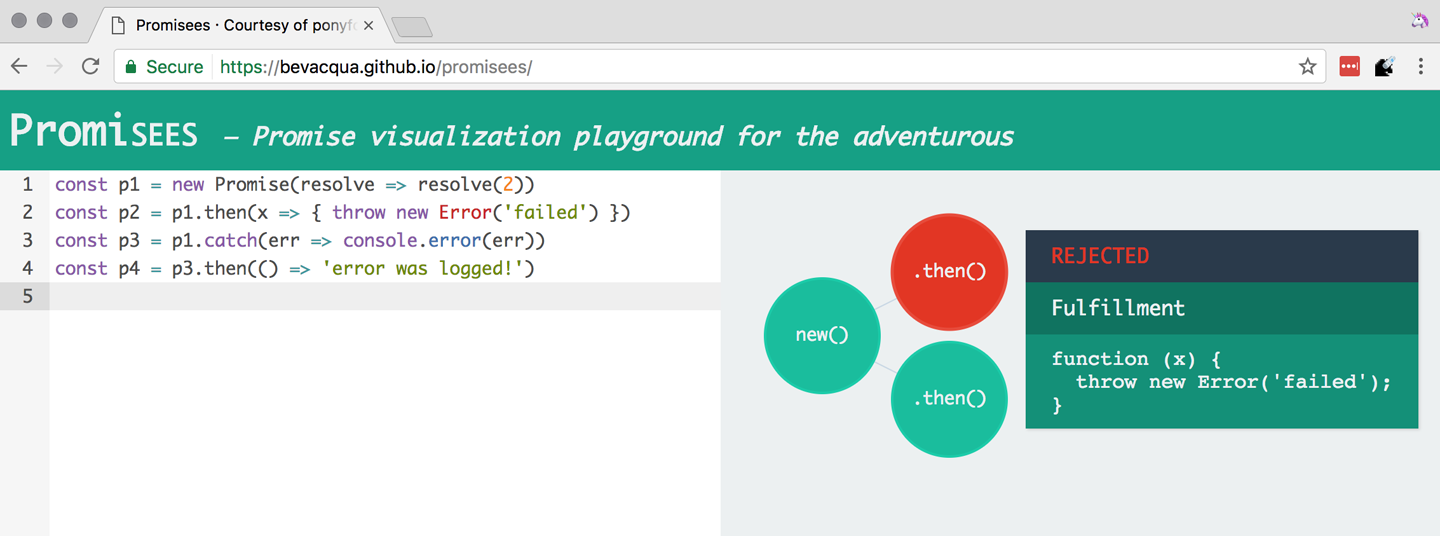

Here is another situation where it might help you to think of promises as a tree-like data structure. In Figure 4-2 it becomes clear that, given the error originates in the p2 node, we couldn't notice it by attaching a rejection reaction to p1.

Figure 4-2. Understanding the tree structure of promises reveals that rejection reactions can only catch errors that arise in a given branch of promise-based code.

In order for the reaction to handle the rejection in p2, we'd have to attach the reaction to p2 instead, as shown in Figure 4-3.

Figure 4-3. By attaching a rejection handler on the branch where an error is produced, we're able to handle the rejection.

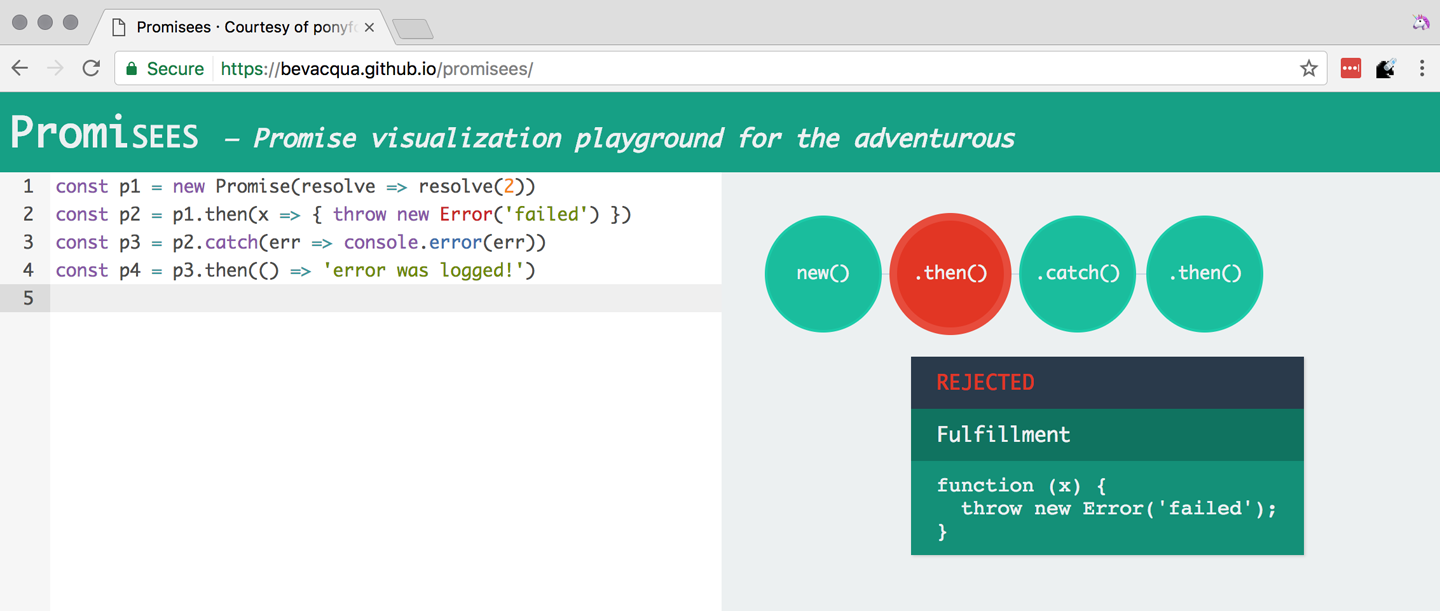

We've established that the promise you attach your reactions onto is important, as it determines what errors it can capture and what errors it cannot. It's also worth noting that as long as an error remains uncaught in a promise chain, a rejection handler will be able to capture it. In the following example we've introduced an intermediary .then call in between p2, where the error originated, and p4, where we attach the rejection reaction. When p2 settles with a rejection, p3 becomes settled with a rejection, as it depends on p2 directly. When p3 settles with a rejection, the rejection handler in p4 fires.

```javascript
const p1 = Promise.resolve(2)
const p2 = p1.then(x => { throw new Error('failed') })
const p3 = p2.then(x => x * 2)
const p4 = p3.catch(err => console.error(err))
```

Typically, promises like p4 fulfill because the rejection handler in .catch doesn't raise any errors. That means a fulfillment handler attached with p4.then would be executed afterwards. The following example shows how you could print a statement to the browser console by creating a p4 fulfillment handler that depends on p3 to settle successfully with fulfillment.

```javascript
const p1 = Promise.resolve(2)
const p2 = p1.then(x => { throw new Error('failed') })
const p3 = p2.catch(err => console.error(err))
const p4 = p3.then(() => console.log('crisis averted'))
```
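Rejection handlers can also recover by returning a value of their own. In this sketch the string 'fallback' is an arbitrary placeholder: the intermediate .then reaction is skipped because its parent was rejected, and the promise returned by .catch fulfills with whatever the handler returns.

```javascript
const recovered = Promise.resolve(2)
  .then(x => { throw new Error('failed') })
  .then(x => x * 2)          // skipped: its parent promise was rejected
  .catch(err => 'fallback')  // handles the rejection and returns a value
  .then(value => {
    console.log(value)       // logs: fallback
    return value
  })
```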

Similarly, if an error occurred in the p3 rejection handler, we could capture that one as well using .catch. The next piece of code shows how an exception being thrown in p3 could be captured using p3.catch just like with any other errors arising in previous examples.

```javascript
const p1 = Promise.resolve(2)
const p2 = p1.then(x => { throw new Error('failed') })
const p3 = p2.catch(err => { throw new Error('oops') })
const p4 = p3.catch(err => console.error(err))
```

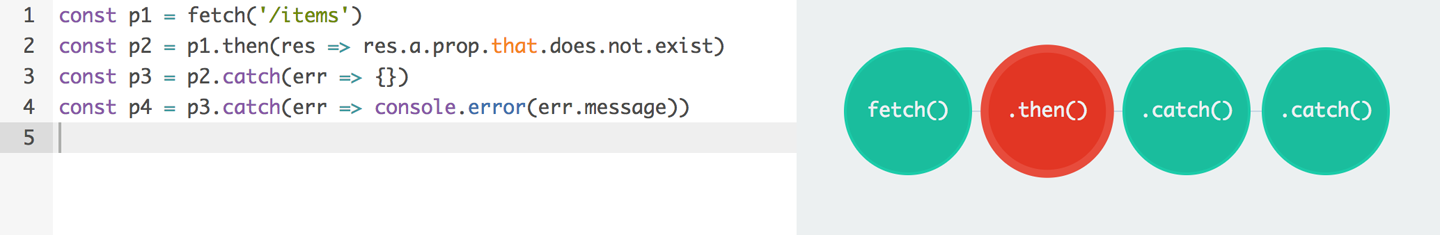

The following example prints err.message once instead of twice. That's because no errors happened in the first .catch, so the rejection branch for that promise wasn't executed.

```javascript
fetch('/items')
  .then(res => res.a.prop.that.does.not.exist)
  .catch(err => console.error(err.message))
  .catch(err => console.error(err.message))
// <- 'Cannot read property "prop" of undefined'
```

In contrast, the next snippet will print err.message twice. It works by saving a reference to the promise returned by .then, and then tacking two .catch reactions onto it. The second .catch in the previous example was capturing errors produced in the promise returned from the first .catch, while in this case both rejection handlers branch off of p.

```javascript
const p = fetch('/items').then(res =>
  res.a.prop.that.does.not.exist
)
p.catch(err => console.error(err.message))
p.catch(err => console.error(err.message))
// <- 'Cannot read property "prop" of undefined'
// <- 'Cannot read property "prop" of undefined'
```
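The difference between the two shapes can be measured by counting handler calls. This sketch replaces the failing fetch reaction with Promise.reject (an assumption so it runs anywhere), and countHandlerCalls is a hypothetical helper written for the comparison.

```javascript
// A rejected promise stands in for the failing .then reaction on fetch
function countHandlerCalls(shape) {
  let calls = 0
  const handler = () => { calls++ }
  const failing = Promise.reject(new Error('nope'))

  if (shape === 'chained') {
    // The second .catch branches off the first .catch, which fulfills
    return failing.catch(handler).catch(handler).then(() => calls)
  }
  // 'branched': both .catch reactions branch off the same rejected promise
  return Promise
    .all([failing.catch(handler), failing.catch(handler)])
    .then(() => calls)
}

countHandlerCalls('chained').then(n => console.log('chained:', n))   // chained: 1
countHandlerCalls('branched').then(n => console.log('branched:', n)) // branched: 2
```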

We should observe, then, that promises can be chained arbitrarily. As we just saw, you can save a reference to any point in the promise chain and then append more promises on top of it. This is one of the fundamental points to understanding promises.

Let's use the following snippet as a crutch to enumerate the sequence of events that arise from creating and chaining a few promises. Take a moment to inspect the following bit of code.

```javascript
const p1 = fetch('/items')
const p2 = p1.then(res => res.a.prop.that.does.not.exist)
const p3 = p2.catch(err => {})
const p4 = p3.catch(err => console.error(err.message))
```

Here is an enumeration of what is going on as that piece of code is executed:

- fetch returns a brand new p1 promise.
- p1.then returns a brand new p2 promise, which will react if p1 is fulfilled.
- p2.catch returns a brand new p3 promise, which will react if p2 is rejected.
- p3.catch returns a brand new p4 promise, which will react if p3 is rejected.
- When p1 is fulfilled, the p1.then reaction is executed.
- Afterwards, p2 is rejected because of an error in the p1.then reaction.
- Since p2 was rejected, p2.catch reactions are executed, and the p2.then branch is ignored.
- The p3 promise from p2.catch is fulfilled, because it doesn't produce an error or result in a rejected promise.
- Because p3 was fulfilled, the p3.catch branch is never followed. The p3.then branch would've been used instead.
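The sequence above can be traced by logging from each reaction. This sketch assumes Promise.resolve({}) as a stand-in for fetch('/items'): an object without an a property, so reading res.a.prop throws a TypeError just as in the example.

```javascript
const log = []
// Stand-in for fetch('/items'): a "response" lacking an `a` property
const p1 = Promise.resolve({})
const p2 = p1.then(res => {
  log.push('p1.then reaction')
  return res.a.prop.that.does.not.exist // throws a TypeError
})
const p3 = p2.catch(err => { log.push('p2.catch reaction') })
const p4 = p3.catch(err => { log.push('p3.catch reaction') })

p4.then(() => console.log(log))
// logs ['p1.then reaction', 'p2.catch reaction']
```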

You should think of promises as a tree structure. This bears repetition: you should think of promises as a tree structure.1 Let's reinforce this concept with Figure 4-4.

Figure 4-4. Given the tree structure, we realize that p3 is fulfilled, as it neither produces an exception nor is rejected. For that reason, p4 can never follow the rejection branch, given its parent was fulfilled.

It all starts with a single promise, which we'll next learn how to construct. Then you add branches with .then or .catch. You can tack as many .then or .catch calls as you want onto each branch, creating new branches and so on.

4.1.3 Creating a Promise from Scratch

We already know that promises can be created using a function such as fetch, Promise.resolve, Promise.reject, or the Promise constructor function. We've already used fetch extensively to create promises in previous examples. Let's take a more nuanced look at the other three ways we can create a promise.

Promises can be created from scratch by using new Promise(resolver). The resolver parameter is a function that will be used to settle the promise. The resolver takes two arguments: a resolve function and a reject function.

The pair of promises shown in the next snippet are settled in fulfillment and rejection, respectively. Here we're settling the first promise with a fulfillment value of 'result', and rejecting the second promise with an Error object, specifying 'reason' as its message.

```javascript
new Promise(resolve => resolve('result'))
new Promise((resolve, reject) => reject(new Error('reason')))
```

Resolving and rejecting promises without a value is possible, but not that useful. Usually promises will fulfill with a result such as the response from an Ajax call, as we've seen with fetch. You'll definitely want to state the reason for your rejections, typically wrapping them in an Error object so that you can report back a stack trace.

As you may have guessed, there's nothing inherently synchronous about promise resolvers. Settlement can be completely asynchronous for fulfillment and rejection alike. Even if the resolver calls resolve right away, the result won't trickle down to reactions until the next tick. That's the whole point of promises! The following example creates a promise that becomes fulfilled after two seconds elapse.

```javascript
new Promise(resolve => setTimeout(resolve, 2000))
```
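The "next tick" behavior can be verified by recording execution order. In this sketch the order array and its labels are illustrative: the resolver runs synchronously and resolves immediately, yet the reaction still runs only after the surrounding synchronous code has finished.

```javascript
const order = []
const p = new Promise(resolve => {
  order.push('resolver')   // the resolver runs synchronously, right away
  resolve('value')         // settles immediately...
})
p.then(() => order.push('reaction')) // ...but reactions are still deferred
order.push('after .then')

// Promise reactions run before this timer callback fires
setTimeout(() => console.log(order), 0)
// logs ['resolver', 'after .then', 'reaction']
```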

Note that only the first call made to one of these functions will have an impact: once a promise is settled its outcome can't change. The following code snippet creates a promise that's fulfilled after the provided delay or rejected after a three-second timeout. We're taking advantage of the fact that calling either of these functions after a promise has been settled has no effect, in order to create a race condition where the first call to be made will be the one that sticks.

```javascript
function resolveUnderThreeSeconds(delay) {
  return new Promise(function (resolve, reject) {
    setTimeout(resolve, delay)
    setTimeout(reject, 3000)
  })
}
resolveUnderThreeSeconds(2000) // becomes fulfilled after 2s
resolveUnderThreeSeconds(7000) // becomes rejected after 3s
```
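The same "first call sticks" rule holds even when every call happens synchronously inside the resolver, as this minimal sketch shows: the later reject and resolve calls are silently ignored.

```javascript
const p = new Promise((resolve, reject) => {
  resolve('first')
  reject(new Error('ignored'))   // no effect: p is already resolved
  resolve('also ignored')        // no effect either
})

p.then(
  value => console.log(value),   // logs: first
  err => console.log('never runs')
)
```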

When creating a new promise p1, you could call resolve with another promise p2, besides calling resolve with nonpromise values. In those cases, p1 will be resolved but blocked on the outcome of p2. Once p2 settles, p1 will be settled with its value and outcome. The following bit of code is, thus, effectively the same as simply doing fetch('/items').

```javascript
new Promise(resolve => resolve(fetch('/items')))
```

Note that this behavior is only possible when using resolve. If you try to replicate the same behavior with reject you'll find that the p1 promise is rejected with the p2 promise as the rejection reason. While resolve may result in a promise being fulfilled or rejected, reject always results in the promise being rejected. If you resolve to a rejected promise or a promise that's eventually rejected, then your promise will be rejected as well. The opposite isn't true for rejections. If you reject in a resolver, the promise will be rejected no matter what value is passed into reject.
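The asymmetry between resolve and reject can be checked directly. In this sketch a pre-fulfilled inner promise stands in for p2: resolve adopts its value, while reject uses the promise object itself as the rejection reason.

```javascript
const inner = Promise.resolve('inner value')

// resolve() adopts the state of the promise it's given
const resolved = new Promise(resolve => resolve(inner))
// reject() uses whatever it's given, even a promise, as the reason
const rejected = new Promise((resolve, reject) => reject(inner))

resolved.then(value => console.log(value))              // logs: inner value
rejected.catch(reason => console.log(reason === inner)) // logs: true
```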

In some cases you'll know beforehand about a value you want to settle a promise with. In these cases you could create a promise from scratch, as shown next. This can be convenient when you want to take advantage of promise chaining, but don't otherwise have a clear initiator that returns a Promise, such as a call to fetch.

```javascript
new Promise(resolve => resolve(12))
```

That could prove to be too verbose when you don't need anything other than a pre-settled promise. You could use Promise.resolve instead, as a shortcut. The following statement is equivalent to the previous one. The differences between the two are purely syntactic: you avoid declaring a resolver function, and the syntax is friendlier to promise continuation and chaining when it comes to readability.

```javascript
Promise.resolve(12)
```

Like in the resolve(fetch) case we saw earlier, you could use Promise.resolve as a way of wrapping another promise or casting a thenable into a proper promise. The following piece of code shows how you could use Promise.resolve to cast a thenable into a proper promise and then consume it as if it were any other promise.

```javascript
Promise
  .resolve({ then: resolve => resolve(12) })
  .then(x => console.log(x))
// <- 12
```
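Wrapping and casting differ in one observable way, sketched below: when Promise.resolve receives a native promise it returns that very same promise, whereas a thenable is wrapped in a brand new, real Promise. The thenable object here is a made-up example.

```javascript
const native = Promise.resolve(12)
// A native promise passed to Promise.resolve is returned as-is
console.log(Promise.resolve(native) === native) // logs: true

// A thenable is wrapped in a brand new, real promise
const thenable = { then(resolve) { resolve(12) } }
const cast = Promise.resolve(thenable)
console.log(cast === thenable, cast instanceof Promise) // logs: false true
cast.then(x => console.log(x)) // logs: 12
```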

When you already know the rejection reason for a promise, you can use Promise.reject. The following piece of code creates a promise that's going to settle into a rejection along with the specified reason. You can use Promise.reject within a reaction as a dynamic alternative to throw statements. Another use for Promise.reject is as an implicit return value for an arrow function, something that can't be done with a throw statement.

```javascript
Promise.reject(reason)
fetch('/items').then(() => Promise.reject(new Error('arbitrarily')))
fetch('/items').then(() => { throw new Error('arbitrarily') })
```
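Both reactions produce the same kind of rejection, which a quick comparison confirms. This sketch swaps fetch('/items') for Promise.resolve() so it runs offline (an assumption); note how only the Promise.reject version fits in an expression-bodied arrow function.

```javascript
// Promise.resolve() stands in for fetch('/items')
const viaReject = Promise.resolve().then(() => Promise.reject(new Error('arbitrarily')))
const viaThrow = Promise.resolve().then(() => { throw new Error('arbitrarily') })

const messages = Promise.all([
  viaReject.catch(err => err.message),
  viaThrow.catch(err => err.message)
])
messages.then(list => console.log(list))
// logs ['arbitrarily', 'arbitrarily']
```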

Presumably, you won't be calling new Promise directly very often. The promise constructor is often invoked internally by libraries that support promises or native functions like fetch. Given that .then and .catch provide tree structures that unfold beyond the original promise, a single call to new Promise in the entry point to an API is often sufficient. Regardless, understanding promise creation is essential when leveraging promise-based control flows.

4.1.4 Promise States and Fates

Promises can be in three distinct states: pending, fulfilled, and rejected. Pending is the default state. A promise can then transition into either fulfillment or rejection.

A promise can be resolved or rejected exactly once. Attempting to resolve or reject a promise for a second time won't have any effect.

When a promise is resolved with a nonpromise, nonthenable value, it settles in fulfillment. When a promise is rejected, it's also considered to be settled.

A promise p1 that's resolved to another promise or thenable p2 stays in the pending state, but is nevertheless resolved: it can't be resolved again nor rejected. When p2 settles, its outcome is forwarded to p1, which becomes settled as well.
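JavaScript doesn't offer a standard method for reading a promise's state, but the "resolved yet pending" situation can still be observed. The stateOf helper below is hypothetical, not a built-in: it races the promise against an already-fulfilled sentinel, relying on the fact that a settled promise listed first gets its reaction queued first.

```javascript
// Hypothetical helper, not a built-in: races the promise against an
// already-fulfilled sentinel. A settled promise's reaction is queued
// first and wins; a pending promise loses to the sentinel.
function stateOf(promise) {
  const sentinel = {}
  return Promise.race([promise, sentinel]).then(
    value => (value === sentinel ? 'pending' : 'fulfilled'),
    () => 'rejected'
  )
}

let resolveInner
const inner = new Promise(resolve => { resolveInner = resolve })
// outer is resolved to inner, so it can't be resolved or rejected again,
// yet it remains pending until inner settles
const outer = new Promise(resolve => resolve(inner))

stateOf(outer).then(state => console.log('before:', state)) // before: pending

setTimeout(() => {
  resolveInner('done')
  setTimeout(() => {
    stateOf(outer).then(state => console.log('after:', state)) // after: fulfilled
  }, 0)
}, 0)
```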

Once a promise is fulfilled, reactions that were attached with p.then will be executed as soon as possible. The same goes for rejected promises and p.catch reactions. Reactions attached after a promise is settled are also executed as soon as possible.

The contrived example shown next could be used to explain how you can make a fetch request, and create a second fetch promise in a .then reaction to the first request. The second request will only begin when and if the first promise settles in fulfillment. The console.log statement will only begin when and if the second promise settles in fulfillment, printing done to the console.

```javascript
fetch('/items')
  .then(() => fetch('/item/first'))
  .then(() => console.log('done'))
```

A less contrived example would involve other steps. In the following piece of code we use the outcome of the first fetch request in order to construct the second request. To do that, we use the res.json method, which returns a promise that resolves to the object from parsing a JSON response. Then we use that object to construct the endpoint we want to request in our second call to fetch, and finally we print the item object from the second response to the console.

```javascript
fetch('/items')
  .then(res => res.json())
  .then(items => fetch(`/item/${ items[0].slug }`))
  .then(res => res.json())
  .then(item => console.log(item))
```
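To run the same dependent flow without a server, the following sketch replaces fetch plus res.json with a hypothetical getJSON function backed by in-memory data. The responses object, its contents, and the 10ms delay are all made up for illustration; the point is that the second request only starts once the first one has fulfilled.

```javascript
// Hypothetical in-memory data standing in for the /items endpoints
const responses = {
  '/items': [{ slug: 'first' }, { slug: 'second' }],
  '/item/first': { slug: 'first', title: 'The first item' }
}

// Hypothetical stand-in for fetch(url).then(res => res.json())
function getJSON(url) {
  return new Promise((resolve, reject) => {
    setTimeout(() => {
      if (url in responses) {
        resolve(responses[url])
      } else {
        reject(new Error(`Not found: ${url}`))
      }
    }, 10)
  })
}

const firstItem = getJSON('/items')
  // the second request can't start until the first one fulfills
  .then(items => getJSON(`/item/${items[0].slug}`))
  .then(item => {
    console.log(item.title) // logs: The first item
    return item
  })
```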

We're not limited to returning promises or thenables. We could also return values from .then and .catch reactions. Those values would be passed to the next reaction in the chain. In this sense, a reaction can be regarded as the transformation of input from the previous reaction in the chain into the input for the next reaction in the chain. The following example starts by creating a promise fulfilled with [1, 2, 3]. Then there's a reaction that maps those values into [2, 4, 6]. Those values are then printed to the console in the following reaction in the chain.

```javascript
Promise
  .resolve([1, 2, 3])
  .then(values => values.map(value => value * 2))
  .then(values => console.log(values))
// <- [2, 4, 6]
```

Note that you can transform data in rejection branches as well. Keep in mind that, as we first learned in Section 4.1.2: Promise Continuation and Chaining, when a .catch reaction executes without errors and doesn't return a rejected promise either, it will fulfill, following .then reactions.
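As a sketch of a transformation in a rejection branch, the following turns a rejection into ordinary data so the rest of the chain can continue. Promise.reject stands in for a failed request, and the { items, error } shape is an arbitrary choice for illustration.

```javascript
// Promise.reject stands in for a failed request
const result = Promise.reject(new Error('network down'))
  // turn the rejection into ordinary data so the chain can continue
  .catch(err => ({ items: [], error: err.message }))
  // this .then runs because the .catch reaction fulfilled its promise
  .then(data => {
    console.log(data.items.length, data.error) // logs: 0 network down
    return data
  })
```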

4.1.5 Promise#finally Proposal

There's a TC39 proposal2 for a Promise#finally method, which would invoke a reaction when a promise settles, regardless of whether it was fulfilled or rejected.

We can think of the following bit of code as a rough ponyfill for Promise#finally. We pass the reaction callback to p.then as both a fulfillment reaction and a rejection reaction.

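```javascript
// `finally` is a reserved word, so it can't name a function declaration
function promiseFinally(p, fn) {
  return p.then(fn, fn)
}
```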

There are a few semantic differences involved. For one, reactions passed to Promise#finally don't receive any arguments, since the promise could've settled as either a fulfillment value or a rejection reason. Typically, Promise#finally variants in user-land are used for use cases such as hiding a loading spinner that was shown before a fetch request and other cleanup, where we don't need access to the promise's settlement value. The following snippet has an updated ponyfill which doesn't pass any arguments to either reaction.

```javascript
// Named promiseFinally because `finally` is a reserved word
function promiseFinally(p, fn) {
  return p.then(() => fn(), () => fn())
}
```

The promise returned by Promise#finally resolves to the result of the parent promise, not to the value returned by the reaction.

```javascript
const p1 = Promise.resolve('value')
const p2 = p1.finally(() => {})
const p3 = p2.then(data => console.log(data))
// <- 'value'
```

This is unlike p.then(fn, fn), which would produce a new fulfillment value unless it's explicitly forwarded in the reaction, as shown next.

```javascript
const p1 = Promise.resolve('value')
const p2 = p1.then(() => {}, () => {})
const p3 = p2.then(data => console.log(data))
// <- undefined
```

The following code listing has a complete ponyfill for Promise#finally.

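```javascript
// Named promiseFinally because `finally` is a reserved word
function promiseFinally(p, fn) {
  return p.then(
    // run fn, wait for it, then forward the original fulfillment value
    result => Promise.resolve(fn()).then(() => result),
    // run fn, wait for it, then reject again with the original reason
    err => Promise.resolve(fn()).then(() => Promise.reject(err))
  )
}
```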

Note that if the reaction passed to Promise#finally is rejected or throws, then the promise returned by Promise#finally will settle with that rejection reason, as shown next.

```javascript
const p1 = Promise.resolve('value')
const p2 = p1.finally(() => Promise.reject('oops'))
const p3 = p2.catch(err => console.log(err))
// <- 'oops'
```

As we can observe after carefully reading the code for our ponyfill, if either reaction results in an exception being thrown then the promise would be rejected. At the same time, returning a rejected promise via Promise.reject or some other means would imply Promise.resolve(fn()) results in a rejected promise, which won't follow the .then reactions used to return the original settlement value of the promise we're calling .finally on.
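The behaviors described above can be exercised with a runnable copy of the complete ponyfill. Since finally is a reserved word, this sketch names it promiseFinally (a hypothetical name), and it uses a made-up spinnerVisible flag as the cleanup target, echoing the loading spinner use case.

```javascript
// Complete ponyfill, named promiseFinally because `finally` is reserved
function promiseFinally(p, fn) {
  return p.then(
    result => Promise.resolve(fn()).then(() => result),
    err => Promise.resolve(fn()).then(() => Promise.reject(err))
  )
}

// Hypothetical loading-spinner state, cleaned up regardless of the outcome
let spinnerVisible = true
const hideSpinner = () => { spinnerVisible = false }

// The original fulfillment value survives the cleanup reaction
const kept = promiseFinally(Promise.resolve('value'), hideSpinner)
// A rejected cleanup reaction overrides the original value
const overridden = promiseFinally(Promise.resolve('value'), () => Promise.reject('oops'))

kept.then(value => console.log(value, spinnerVisible)) // logs: value false
overridden.catch(reason => console.log(reason))        // logs: oops
```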

4.1.6 Leveraging Promise.all and Promise.race

When writing asynchronous code flows, there are pairs of tasks where one of them depends on the outcome of another, so they must run in series. There are also pairs of tasks that donât need to know the outcome of each other in order to run, so they can be executed concurrently. Promises already excel at asynchronous series flows, as a single promise can trigger a chain of events that happen one after another. Promises also offer a couple of solutions for concurrent tasks, in the form of two API methods: Promise.all and Promise.race.

In most cases you'll want code that can be executed concurrently to take advantage of that, as it could make your code run much faster. Suppose you wanted to pull the description of two products in your catalog, using two distinct API calls, and then print out both of them to the console. The following piece of code would run both operations concurrently, but it would need separate print statements. In the case of printing to the console, that wouldn't make much of a difference, but if we needed to make a single function call passing in both products, we couldn't do that with two separate fetch requests.

```javascript
fetch('/products/chair')
  .then(r => r.json())
  .then(p => console.log(p))
fetch('/products/table')
  .then(r => r.json())
  .then(p => console.log(p))
```

The Promise.all method takes an array of promises and returns a single promise p. When all promises passed to Promise.all are fulfilled, p becomes fulfilled as well with an array of results in the same order as the provided promises, regardless of the order in which they settled. If a single promise becomes rejected, p settles with its rejection reason immediately. The following example uses Promise.all to fetch both products and print them to the console using a single console.log statement.

```javascript
Promise
  .all([
    fetch('/products/chair'),
    fetch('/products/table')
  ])
  .then(products => console.log(products[0], products[1]))
```

Given that the results are provided as an array, its indices have no semantic meaning to our code. Using parameter destructuring to pull out variable names for each product might make more sense when reading the code. The following example uses destructuring to clean that up. Keep in mind that even though there's a single argument, destructuring forces us to use parentheses in the arrow function parameter declaration.

```javascript
Promise
  .all([
    fetch('/products/chair'),
    fetch('/products/table')
  ])
  .then(([chair, table]) => console.log(chair, table))
```
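The ordering guarantee is easy to check with timers instead of network requests. In this sketch delay is a hypothetical stand-in for a request: the "table" request finishes first, yet destructuring still receives the results in input order.

```javascript
// Hypothetical stand-in for a request: resolves with `value` after `ms`
const delay = (ms, value) =>
  new Promise(resolve => setTimeout(() => resolve(value), ms))

// The table "request" finishes first, but results follow the input order
const products = Promise
  .all([delay(50, 'chair'), delay(10, 'table')])
  .then(([chair, table]) => {
    console.log(chair, table) // logs: chair table
    return [chair, table]
  })
```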

The following example shows how if a single promise is rejected, p will be rejected as well. It's important to understand that, as a single rejected promise might prevent an otherwise fulfilled array of promises from fulfilling p. In the example, rather than wait until p2 and p3 settle, p becomes immediately rejected.

```javascript
const p1 = Promise.reject('failed')
const p2 = fetch('/products/chair')
const p3 = fetch('/products/table')
const p = Promise
  .all([p1, p2, p3])
  .catch(err => console.log(err))
  // <- 'failed'
```

In summary, Promise.all has three possible outcomes:

- Settle with all fulfillment results as soon as all of its dependencies are fulfilled
- Settle with a single rejection reason as soon as one of its dependencies is rejected
- Stay in a pending state because at least one dependency stays in pending state and no dependencies are rejected
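The three outcomes fall naturally out of a hand-written version. The all function below is a simplified sketch of Promise.all's behavior for arrays, not the specification algorithm, which also handles arbitrary iterables and subclassing.

```javascript
// A simplified sketch of Promise.all's behavior for arrays
function all(promises) {
  return new Promise((resolve, reject) => {
    const results = new Array(promises.length)
    let remaining = promises.length
    if (remaining === 0) {
      resolve(results) // nothing to wait for
      return
    }
    promises.forEach((item, index) => {
      // Promise.resolve lets plain values and thenables participate too
      Promise.resolve(item).then(value => {
        results[index] = value // keep input order, not settlement order
        remaining--
        if (remaining === 0) resolve(results) // every dependency fulfilled
      }, reject) // first rejection wins; later calls have no effect
    })
    // if some dependency never settles, neither does this promise
  })
}

all([1, Promise.resolve(2)]).then(values => console.log(values)) // logs [1, 2]
```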

The Promise.race method is similar to Promise.all, except the first dependency to settle will "win" the race, and its result will be passed along to the promise returned by Promise.race.

```javascript
Promise
  .race([
    new Promise(resolve => setTimeout(() => resolve(1), 1000)),
    new Promise(resolve => setTimeout(() => resolve(2), 2000))
  ])
  .then(result => console.log(result))
  // <- 1
```

Rejections will also finish the race, and the resulting promise will be rejected. Using Promise.race could be useful in scenarios where we want to time out a promise we otherwise have no control over. For instance, in the following piece of code thereâs a race between a fetch request and a promise that becomes rejected after a five-second timeout. If the request takes more than five seconds, the race will be rejected.

```javascript
function timeout(delay) {
  return new Promise(function (resolve, reject) {
    setTimeout(() => reject('timeout'), delay)
  })
}
Promise
  .race([
    fetch('/large-resource-download'),
    timeout(5000)
  ])
  .then(res => console.log(res))
  .catch(err => console.log(err))
```
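A reusable variant can wrap this race in a helper. The withTimeout function below is hypothetical: unlike the timeout function in the previous example, it rejects with an Error object and clears its timer once the race is decided, so a fast promise doesn't leave a pending timer behind. The fast and never promises stand in for requests.

```javascript
// Hypothetical helper built on Promise.race
function withTimeout(promise, ms) {
  let timer
  const timeout = new Promise((resolve, reject) => {
    timer = setTimeout(() => reject(new Error('timeout')), ms)
  })
  // clear the timer whichever way the race ends
  return Promise.race([promise, timeout]).then(
    value => { clearTimeout(timer); return value },
    err => { clearTimeout(timer); throw err }
  )
}

const fast = new Promise(resolve => setTimeout(() => resolve('fast'), 10))
const never = new Promise(() => {}) // never settles

withTimeout(fast, 100).then(value => console.log(value))     // logs: fast
withTimeout(never, 20).catch(err => console.log(err.message)) // logs: timeout
```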

4.2 Iterator Protocol and Iterable Protocol

JavaScript gets two new protocols in ES6: iterators and iterables. These two protocols are used to define iteration behavior for any object. We'll start by learning about how to turn an object into an iterable sequence. Later, we'll look into laziness and how iterators can define infinite sequences. Lastly, we'll go over practical considerations while defining iterables.

4.2.1 Understanding Iteration Principles

Any object can adhere to the iterable protocol by assigning a function to the Symbol.iterator property for that object. Whenever an object needs to be iterated, its iterable protocol method, assigned to Symbol.iterator, is called once.

The spread operator was first introduced in Chapter 2, and it's one of a few language features in ES6 that leverage iteration protocols. When using the spread operator on a hypothetical iterable object, as shown in the following code snippet, Symbol.iterator would be asked for an object that adheres to the iterator protocol. The returned iterator will be used to obtain values out of the object.

```javascript
const sequence = [...iterable]
```

As you might remember, symbol properties can't be directly embedded into object literal keys. The following bit of code shows how you'd add a symbol property using pre-ES6 language semantics.

```javascript
const example = {}
example[Symbol.iterator] = fn
```

We could, however, use a computed property name to fit the symbol key in the object literal, avoiding an extra statement like the one in the previous snippet, as demonstrated next.

```javascript
const example = {
  [Symbol.iterator]: fn
}
```

The method assigned to Symbol.iterator must return an object that adheres to the iterator protocol. That protocol defines how to get values out of an iterable sequence. The protocol dictates iterators must be objects with a next method. The next method takes no arguments and should return an object with the following two properties:

- value is the current item in the sequence
- done is a Boolean indicating whether the sequence has ended

Let's use the following piece of code as a crutch to understand the concepts behind iteration protocols. We're turning the sequence object into an iterable by adding a Symbol.iterator property. The iterable returns an iterator object. Each time next is asked for the following value in the sequence, an element from the items array is provided. When i goes beyond the last index on the items array, we return done: true, indicating the sequence has ended.

```javascript
const items = ['i', 't', 'e', 'r', 'a', 'b', 'l', 'e']
const sequence = {
  [Symbol.iterator]() {
    let i = 0
    return {
      next() {
        const value = items[i]
        i++
        const done = i > items.length
        return { value, done }
      }
    }
  }
}
```
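To see how a consumer drives this protocol, the following sketch repeats the sequence object so it runs on its own and adds a hypothetical collect function. It's a simplified model of what for..of and spread do: ask for an iterator once, then call next until it reports done: true. The real constructs also call the iterator's return method when exiting early, which this sketch omits.

```javascript
const items = ['i', 't', 'e', 'r', 'a', 'b', 'l', 'e']
const sequence = {
  [Symbol.iterator]() {
    let i = 0
    return {
      next() {
        const value = items[i]
        i++
        const done = i > items.length
        return { value, done }
      }
    }
  }
}

// Simplified model of how for..of and spread consume an iterable
function collect(iterable) {
  const iterator = iterable[Symbol.iterator]() // called once per iteration
  const values = []
  let step = iterator.next()
  while (!step.done) {
    values.push(step.value)
    step = iterator.next()
  }
  return values
}

console.log(collect(sequence).join('')) // logs: iterable
```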

JavaScript is a progressive language: new features are additive, and they practically never break existing code. For that reason, iterables can't be taken advantage of in existing constructs such as forEach and for..in. In ES6, there are a few ways to go over iterables: for..of, the ... spread operator, and Array.from.

The for..of iteration method can be used to loop over any iterable. The following example demonstrates how we could use for..of to loop over the sequence object we put together in the previous example, because it is an iterable object.

for (const item of sequence) {
  console.log(item)
  // <- 'i'
  // <- 't'
  // <- 'e'
  // <- 'r'
  // <- 'a'
  // <- 'b'
  // <- 'l'
  // <- 'e'
}

Regular objects can be made iterable with Symbol.iterator, as we've just learned. Under the ES6 paradigm, constructs like Array, String, NodeList in the DOM, and arguments are all iterable by default, giving for..of increased usability. To get an array out of any iterable sequence of values, you could use the spread operator, spreading every item in the sequence onto an element in the resulting array. We could also use Array.from to the same effect. Array.from can also cast array-like objects, those with a length property and items in zero-based integer properties, into arrays.

console.log([...sequence])
// <- ['i', 't', 'e', 'r', 'a', 'b', 'l', 'e']
console.log(Array.from(sequence))
// <- ['i', 't', 'e', 'r', 'a', 'b', 'l', 'e']
console.log(Array.from({ 0: 'a', 1: 'b', 2: 'c', length: 3 }))
// <- ['a', 'b', 'c']
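The difference between array-likes and iterables is easy to check. In this sketch, arrayLike is an illustrative object with a length and zero-based keys but no Symbol.iterator method, so Array.from accepts it while the spread operator throws a TypeError.

```javascript
// An array-like: length plus zero-based integer keys, but no Symbol.iterator.
const arrayLike = { 0: 'a', 1: 'b', length: 2 }

console.log(typeof arrayLike[Symbol.iterator])
// <- 'undefined'

// Array.from falls back to length and indices when there's no iterator.
console.log(Array.from(arrayLike))
// <- ['a', 'b']

// Spread only understands the iterable protocol, so it throws here.
try {
  console.log([...arrayLike])
} catch (error) {
  console.log(error instanceof TypeError)
  // <- true
}
```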

As a recap, the sequence object adheres to the iterable protocol by assigning a method to [Symbol.iterator]. That means that the object is iterable: it can be iterated. Said method returns an object that adheres to the iterator protocol. The iterator method is called once whenever we need to start iterating over the object, and the returned iterator is used to pull values out of sequence. To iterate over iterables, we can use for..of, the spread operator, or Array.from.

In essence, the selling point of these protocols is that they provide expressive ways to effortlessly iterate over collections and array-likes. Having the ability to define how any object may be iterated is huge, because it enables libraries to converge under a protocol the language natively understands: iterables. The upside is that implementing the iterator protocol carries little cost because, due to its additive nature, it won't break existing behavior.

For example, jQuery and document.querySelectorAll both return array-likes. If jQuery implemented the iterator protocol on their collection's prototype, then you could iterate over collection elements using the native for..of construct.

for (const element of $('li')) {
  console.log(element)
  // <- a <li> in the jQuery collection
}
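A minimal sketch of what that could look like, using a hypothetical Collection class as a stand-in for a library's array-like wrapper. Rather than writing an iterator from scratch, it borrows Array.prototype[Symbol.iterator], which only relies on length and integer-keyed properties and therefore works on array-likes too.

```javascript
// Hypothetical array-like collection, standing in for a library wrapper.
class Collection {
  constructor(...items) {
    items.forEach((item, index) => {
      this[index] = item
    })
    this.length = items.length
  }
}

// The array iterator is generic: it reads length and integer keys,
// so any array-like can reuse it.
Collection.prototype[Symbol.iterator] = Array.prototype[Symbol.iterator]

const list = new Collection('first', 'second', 'third')
for (const item of list) {
  console.log(item)
  // <- 'first'
  // <- 'second'
  // <- 'third'
}
```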

Iterable sequences aren't necessarily finite. They may have an infinite number of elements. Let's delve into that topic and its implications.

4.2.2 Infinite Sequences

Iterators are lazy in nature. Elements in an iterator sequence are generated one at a time, even when the sequence is finite. Note that infinite sequences couldn't be represented without this laziness. An infinite sequence can't be represented as an array, meaning that using the spread operator or Array.from to cast such a sequence into an array would crash JavaScript execution, as we'd go into an infinite loop.

The following example shows an iterator that represents an infinite sequence of random floating-point numbers between 0 and 1. Note how items returned by next never have a done property set to true, which would signal that the sequence has ended. It uses a pair of arrow functions that implicitly return objects. The first one returns the iterator object used to loop over the infinite sequence of random numbers. The second arrow function is used to pull each individual value in the sequence, using Math.random.

const random = {
  [Symbol.iterator]: () => ({
    next: () => ({ value: Math.random() })
  })
}

Attempting to cast the iterable random object into an array using either Array.from(random) or [...random] would crash our program, since the sequence never ends. We must be very careful with these types of sequences as they can easily crash and burn our browser and Node.js server processes.

There are a few different ways you can access a sequence safely, without risking an infinite loop. The first option is to use destructuring to pull values in specific positions of the sequence, as shown in the following piece of code.

const [one, another] = random
console.log(one)
// <- 0.23235511826351285
console.log(another)
// <- 0.28749457537196577

Destructuring infinite sequences doesn't scale very well, particularly if we want to apply dynamic conditions, such as pulling the first i values out of the sequence or pulling values until we find one that doesn't match a condition. In those cases we're better off using for..of, where we're better able to define conditions that prevent infinite loops while taking as many elements as we need, in a programmatic fashion. The next example loops over our infinite sequence using for..of, but it breaks the loop as soon as a value is higher than 0.8. Given that Math.random produces values anywhere between 0 and 1, the loop will eventually break.

for (const value of random) {
  if (value > 0.8) {
    break
  }
  console.log(value)
}

It can be hard to understand code like that when reading it later, as a lot of the code is focused on how the sequence is iterated, printing values from random until one of those values is large enough. Abstracting away part of the logic into another method might make the code more readable.

As another example, a common pattern when extracting values from an infinite or very large sequence is to "take" the first few elements in the sequence. While you could accommodate that use case through for..of and break, you'd be better off abstracting it into a take method. The following example shows a potential implementation of take. It receives a sequence parameter and the amount of entries you'd like to take from the sequence. It returns an iterable object, and whenever that object is iterated it constructs an iterator for the provided sequence. The next method defers to the original sequence while the amount is at least 1, and then ends the sequence.

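function take(sequence, amount) {
  return {
    [Symbol.iterator]() {
      const iterator = sequence[Symbol.iterator]()
      // per-iteration counter, so the returned iterable can be iterated more than once
      let remaining = amount
      return {
        next() {
          if (remaining-- < 1) {
            return { done: true }
          }
          return iterator.next()
        }
      }
    }
  }
}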

Our implementation works great on infinite sequences because it provides them with a constant exit condition: whenever the amount counter is depleted, the sequence returned by take ends. Instead of looping to pull values out of random, you can now write a piece of code like the following.

[...take(random, 2)]
// <- [0.304253100650385, 0.5851333604659885]

This pattern allows you to reduce any infinite sequence into a finite one. If your desired finite sequence wasn't just "the first N values," but rather our original "all values before the first one larger than 0.8," you could easily adapt take by changing its exit condition. The range function shown next has a low parameter that defaults to 0, and a high parameter defaulting to 1. Whenever a value in the sequence is out of bounds, we stop pulling values from it.

function range(sequence, low = 0, high = 1) {
  return {
    [Symbol.iterator]() {
      const iterator = sequence[Symbol.iterator]()
      return {
        next() {
          const item = iterator.next()
          if (item.value < low || item.value > high) {
            return { done: true }
          }
          return item
        }
      }
    }
  }
}

Now, instead of breaking in the for..of loop because we fear that the infinite sequence will never end, we've guaranteed that the sequence ends as soon as it produces a value outside of our desired range. This way, your code becomes less concerned with how the sequence is generated, and more concerned with what the sequence will be used for. As shown in the following example, you won't even need a for..of loop here, because the escape condition now resides in the intermediary range function.

const low = [...range(random, 0, 0.8)]
// <- [0.68912092433311, 0.059788614744320, 0.09396195202134]

This sort of abstraction of complexity into another function often helps keep code focused on its intent, while avoiding a for..of loop when all we wanted was to produce a derived sequence. It also shows how sequences can be composed and piped into one another. In this case, we first created a multipurpose and infinite random sequence, and then piped it through a range function that returns a derived sequence that ends when it meets values that are below or above a desired range. An important aspect of iterators is that despite having been composed, the iterators produced by the range function can be lazily iterated as well, effectively meaning you can compose as many iterators as you need into mapping, filtering, and exit condition helpers.
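To illustrate that composability, here's a sketch of lazy map and filter helpers written in the same style as take and range. The names map, filter, and naturals are illustrative, not part of any library, and take is repeated so the block runs on its own.

```javascript
// Lazily applies `fn` to each value pulled from `sequence`.
function map(sequence, fn) {
  return {
    [Symbol.iterator]() {
      const iterator = sequence[Symbol.iterator]()
      return {
        next() {
          const item = iterator.next()
          if (item.done) {
            return item
          }
          return { value: fn(item.value), done: false }
        }
      }
    }
  }
}

// Lazily skips values that don't satisfy `predicate`.
function filter(sequence, predicate) {
  return {
    [Symbol.iterator]() {
      const iterator = sequence[Symbol.iterator]()
      return {
        next() {
          while (true) {
            const item = iterator.next()
            if (item.done || predicate(item.value)) {
              return item
            }
          }
        }
      }
    }
  }
}

// Exit condition helper: ends the sequence after `amount` values.
function take(sequence, amount) {
  return {
    [Symbol.iterator]() {
      const iterator = sequence[Symbol.iterator]()
      let remaining = amount
      return {
        next() {
          if (remaining-- < 1) {
            return { value: undefined, done: true }
          }
          return iterator.next()
        }
      }
    }
  }
}

// An infinite sequence of natural numbers: 0, 1, 2, ...
const naturals = {
  [Symbol.iterator]() {
    let n = 0
    return { next: () => ({ value: n++, done: false }) }
  }
}

const evenSquares = take(map(filter(naturals, n => n % 2 === 0), n => n * n), 4)
console.log([...evenSquares])
// <- [0, 4, 16, 36]
```

Only take makes the pipeline finite: filter over an infinite sequence with a predicate that never matches would keep looping inside next, so an exit condition helper still belongs at the end of the chain.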

Besides the technical implications of creating iterable objects, let's go over a couple of practical examples of how we can benefit from iterators.

4.2.3 Iterating Object Maps as Key/Value Pairs

There's an abundance of practical situations that benefit from turning an object into an iterable. Object maps, pseudoarrays that are meant to be iterated, the random number generator we came up with in Section 4.2.2: Infinite Sequences, and classes or plain objects with properties that are often iterated could all benefit from following the iterable protocol.

Oftentimes, JavaScript objects are used to represent a map between string keys and arbitrary values. In the next snippet, as an example, we have a map of color names and the hexadecimal RGB representation of each color. There are cases when you'd welcome the ability to effortlessly loop over the different color names, hexadecimal representations, or key/value pairs.

const colors = {
  green: '#0e0',
  orange: '#f50',
  pink: '#e07'
}

The following code snippet implements an iterable that produces a [key, value] sequence for each color in the colors map. Given that the method is assigned to the Symbol.iterator property, we'd be able to go over the list with minimal effort.

const colors = {
  green: '#0e0',
  orange: '#f50',
  pink: '#e07',
  [Symbol.iterator]() {
    const keys = Object.keys(colors)
    return {
      next() {
        const done = keys.length === 0
        const key = keys.shift()
        return { done, value: [key, colors[key]] }
      }
    }
  }
}

When we want to pull out all the key/value pairs, we can use the ... spread operator as shown in the following bit of code.

console.log([...colors])
// <- [['green', '#0e0'], ['orange', '#f50'], ['pink', '#e07']]

The fact that we're polluting our previously tiny colors map with a large iterable definition could represent a problem, as the iterable behavior has little to do with the concern of storing pairs of color names and codes. A good way of decoupling the two aspects of colors would be to extract the logic that attaches a key/value pair iterator into a reusable function, keyValueIterable. This way, we could eventually move keyValueIterable somewhere else in our codebase and leverage it for other use cases as well.

function keyValueIterable(target) {
  target[Symbol.iterator] = function () {
    const keys = Object.keys(target)
    return {
      next() {
        const done = keys.length === 0
        const key = keys.shift()
        return { done, value: [key, target[key]] }
      }
    }
  }
  return target
}

We could then call keyValueIterable passing in the colors object, turning colors into an iterable object. You could in fact use keyValueIterable on any object where you want to iterate over key/value pairs, as the iteration behavior doesn't make assumptions about the object. Once we've attached a Symbol.iterator behavior, we'll be able to treat the object as an iterable. In the next code snippet, we iterate over the key/value pairs and print only the color codes.

const colors = keyValueIterable({
  green: '#0e0',
  orange: '#f50',
  pink: '#e07'
})
for (const [, color] of colors) {
  console.log(color)
  // <- '#0e0'
  // <- '#f50'
  // <- '#e07'
}

4.2.4 Building Versatility Into Iterating a Playlist

Imagine you were developing a song player where a playlist could be played once and then stopped, or put on repeat and played indefinitely. Whenever you have a use case of looping through a list indefinitely, you could leverage the iterable protocol as well.

Suppose a human adds a few songs to her library, and they are stored in an array as shown in the next bit of code.

const songs = [
  'Bad moon rising - Creedence',
  'Don’t stop me now - Queen',
  'The Scientist - Coldplay',
  'Somewhere only we know - Keane'
]

We could create a playlist function that returns a sequence, representing all the songs that will be played by our application. This function would take the songs provided by the human as well as the repeat value, which indicates how many times she wants the songs to be played in a loop (once, twice, or Infinity times) before coming to an end.

The following piece of code shows how we could implement playlist. We use an index number to track where in the song list we are positioned. We return the next song in the list by incrementing the index, until there aren't any songs left in the current loop. At this point we decrement the repeat counter and reset the index. The sequence ends when there aren't any songs left and repeat reaches zero.

function playlist(songs, repeat) {
  return {
    [Symbol.iterator]() {
      let index = 0
      return {
        next() {
          if (index >= songs.length) {
            repeat--
            index = 0
          }
          if (repeat < 1) {
            return { done: true }
          }
          const song = songs[index]
          index++
          return { done: false, value: song }
        }
      }
    }
  }
}

The following bit of code shows how the playlist function can take an array and produce a sequence that goes over the provided array the specified number of times. If we specified Infinity, the resulting sequence would be infinite; otherwise it'd be finite.

console.log([...playlist(['a', 'b'], 3)])
// <- ['a', 'b', 'a', 'b', 'a', 'b']

To iterate over the playlist we'd probably come up with a player function. Assuming a playSong function that plays a song and invokes a callback when the song ends, our player implementation could look like the following function, where we asynchronously loop over the iterator obtained from the sequence, requesting new songs as previous ones finish playback. Given that there's always a considerable waiting period in between g.next calls (while the songs are actually playing inside playSong), there's little risk of being stuck in an infinite loop that'd crash the runtime, even when the sequence produced by playlist is infinite.

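function player(sequence) {
  // sequence is an iterable object, so we ask it for an iterator
  const g = sequence[Symbol.iterator]()
  more()
  function more() {
    const item = g.next()
    if (item.done) {
      return
    }
    playSong(item.value, more)
  }
}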

Putting everything together, the music library would play a song list on repeat with a few lines of code, as presented in the next code snippet.

const songs = [
  'Bad moon rising - Creedence',
  'Don’t stop me now - Queen',
  'The Scientist - Coldplay',
  'Somewhere only we know - Keane'
]
const sequence = playlist(songs, Infinity)
player(sequence)

A change allowing the human to shuffle her playlist wouldn't be complicated to introduce. We'd have to tweak the playlist function to include a shuffle flag, and if that flag is present we'd shuffle the song list at random.

function playlist(inputSongs, repeat, shuffle) {
  const songs = shuffle ? shuffleSongs(inputSongs) : inputSongs
  return {
    [Symbol.iterator]() {
      let index = 0
      return {
        next() {
          if (index >= songs.length) {
            repeat--
            index = 0
          }
          if (repeat < 1) {
            return { done: true }
          }
          const song = songs[index]
          index++
          return { done: false, value: song }
        }
      }
    }
  }
}
function shuffleSongs(songs) {
  return songs.slice().sort(() => Math.random() > 0.5 ? 1 : -1)
}

Lastly, we'd have to pass in the shuffle flag as true if we wanted to shuffle songs in the playlist. Otherwise, songs would be played in the original order provided by the user. Note that the list is shuffled only once, when playlist is called, so every repetition plays the songs in the same shuffled order. Here again we've abstracted away something that would usually involve many lines of code deciding what song comes next, moving it into a neatly decoupled function that's only concerned with producing a sequence of songs to be played by a song player.

console.log([...playlist(['a', 'b'], 3, true)])
// <- ['b', 'a', 'b', 'a', 'b', 'a'] (one possible outcome: the order is shuffled once, then repeated)

You may have noticed how the playlist function doesn't necessarily need to concern itself with the sort order of the songs passed to it. A better design choice may well be to extract shuffling into the calling code. If we kept the original playlist function without a shuffle parameter, we could still use a snippet like the following to obtain a shuffled song collection.

function shuffleSongs(songs) {
  return songs.slice().sort(() => Math.random() > 0.5 ? 1 : -1)
}
console.log([...playlist(shuffleSongs(['a', 'b']), 3)])
// <- ['b', 'a', 'b', 'a', 'b', 'a'] (one possible outcome)
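The random comparator in shuffleSongs is a common shortcut, but it isn't a uniform shuffle: sort expects a consistent comparator, so with a random one the resulting order depends on the engine's sorting algorithm and some permutations come up more often than others. Here's a sketch of a drop-in replacement using the Fisher-Yates shuffle (a swapped-in technique, not the one used above); it keeps the same shuffleSongs(songs) signature and still returns a copy, so playlist wouldn't need to change.

```javascript
// Fisher-Yates shuffle: returns a shuffled copy and leaves `songs` untouched.
function shuffleSongs(songs) {
  const shuffled = songs.slice()
  for (let i = shuffled.length - 1; i > 0; i--) {
    // Pick a random index in [0, i] and swap it into position i.
    const j = Math.floor(Math.random() * (i + 1))
    const temp = shuffled[i]
    shuffled[i] = shuffled[j]
    shuffled[j] = temp
  }
  return shuffled
}

console.log(shuffleSongs(['a', 'b', 'c', 'd']))
// The order varies from run to run
```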

Iterators are an important tool in ES6 that help us not only to decouple code, but also to come up with constructs that were previously harder to implement, such as the ability to deal with a sequence of songs in the same way regardless of whether the sequence is finite or infinite. This indifference is, in part, what makes writing code leveraging the iterator protocol more elegant. It also makes it risky to cast an unknown iterable into an array (with, say, the ... spread operator), as you're risking crashing your program due to an infinite loop.

Generators are an alternative way of creating functions that return an iterable object, without explicitly declaring an object literal with a Symbol.iterator method. They make it easier to implement functions, such as the range or take functions in Section 4.2.2: Infinite Sequences, while also allowing for a few more interesting use cases.

4.3 Generator Functions and Generator Objects

Generators are a new feature in ES6. The way they work is that you declare a generator function that returns generator objects g. Those g objects can then be iterated using any of Array.from(g), [...g], or for..of loops. Generator functions allow you to declare a special kind of iterator. These iterators can suspend execution while retaining their context.

4.3.1 Generator Fundamentals

We already examined iterators in the previous section, learning how their .next() method is called one at a time to pull values from a sequence. Instead of returning each value from a next method, generators use the yield keyword to add values into the sequence.

Here is an example generator function. Note the * after function. That's not a typo: that's how you mark a function as a generator.

function* abc() {
  yield 'a'
  yield 'b'
  yield 'c'
}

Generator objects conform to both the iterable protocol and the iterator protocol:

- A generator object chars is built using the abc function
- Object chars is an iterable because it has a Symbol.iterator method
- Object chars is also an iterator because it has a .next method
- The iterator for chars is itself

The same statements can also be demonstrated using JavaScript code.

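const chars = abc()
typeof chars[Symbol.iterator] === 'function' // <- true
typeof chars.next === 'function' // <- true
chars[Symbol.iterator]() === chars // <- true
console.log(Array.from(chars))
// <- ['a', 'b', 'c']
// chars is exhausted at this point, so spread a fresh generator object
console.log([...abc()])
// <- ['a', 'b', 'c']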

When you create a generator object, you'll get an iterator that uses the generator function to produce an iterable sequence. Whenever a yield expression is reached, its value is emitted by the iterator and generator function execution becomes suspended.

The following example shows how iteration can trigger side effects within the generator function. The console.log statement after each yield statement will be executed when the generator is asked for the next element in the sequence and execution resumes.

function* numbers() {
  yield 1
  console.log('a')
  yield 2
  console.log('b')
  yield 3
  console.log('c')
}

Suppose you created a generator object for numbers, spread its contents onto an array, and printed it to the console. Taking into account the side effects in numbers, can you guess what the console output would look like for the following piece of code? Given that the spread operator iterates over the sequence to completion in order to give you an array, all side effects would be executed while constructing the array via spread, before the console.log statement printing the array is ever reached.

console.log([...numbers()])
// <- 'a'
// <- 'b'
// <- 'c'
// <- [1, 2, 3]

If we now used a for..of loop instead, we'd be able to preserve the order declared in the numbers generator function. In the next example, elements in the numbers sequence are printed one at a time in a for..of loop. The first time the generator function is asked for a number, it yields 1 and execution becomes suspended. The second time, execution is unsuspended where the generator left off, 'a' is printed to the console as a side effect, and 2 is yielded. The third time, 'b' is the side effect, and 3 is yielded. The fourth time, 'c' is a side effect and the generator signals that the sequence has ended.

for (const number of numbers()) {
  console.log(number)
  // <- 1
  // <- 'a'
  // <- 2
  // <- 'b'
  // <- 3
  // <- 'c'
}

Besides iterating over a generator object using spread, for..of, and Array.from, we could use the generator object directly, and iterate over that. Let's investigate how that'd work.

4.3.2 Iterating over Generators by Hand

Generator iteration isn't limited to for..of, Array.from, or the spread operator. Just like with any iterable object, you can use its Symbol.iterator to pull values on demand using .next, rather than in a strictly synchronous for..of loop or all at once with Array.from or spread. Given that a generator object is both iterable and iterator, you won't need to call g[Symbol.iterator]() to get an iterator: you can use g directly because it's the same object as the one returned by the Symbol.iterator method.

Assuming the numbers generator function we created earlier, the following example shows how you could iterate over it by hand using a generator object and a while loop. Remember that any items returned by an iterator need a done property that indicates whether the sequence has ended, and a value property indicating the current value in the sequence.

const g = numbers()
while (true) {
  const item = g.next()
  if (item.done) {
    break
  }
  console.log(item.value)
}

Using iterators to loop over a generator might look like a complicated way of implementing a for..of loop, but it also allows for some interesting use cases. Particularly: for..of is always a synchronous loop, whereas with iterators we're in charge of deciding when to invoke g.next. In turn, that translates into additional opportunities such as running an asynchronous operation and then calling g.next once we have a result.
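Here's a minimal sketch of that idea, assuming a hypothetical pace helper (not part of any library) that uses setTimeout to wait between next calls instead of looping synchronously. The countdown generator is only there for illustration.

```javascript
// Hypothetical helper: pulls one value at a time from `iterable`, waiting
// `delay` milliseconds between next() calls, which for..of can't do.
function pace(iterable, delay, onValue, onDone) {
  const iterator = iterable[Symbol.iterator]()
  function step() {
    const { value, done } = iterator.next()
    if (done) {
      onDone()
      return
    }
    onValue(value)
    // Schedule the next pull instead of looping synchronously.
    setTimeout(step, delay)
  }
  step()
}

// Illustrative generator used to exercise pace.
function* countdown() {
  yield 3
  yield 2
  yield 1
}

pace(countdown(), 100, value => console.log(value), () => console.log('liftoff'))
// Logs 3, 2, 1, then 'liftoff', roughly 100ms apart
```

The generator itself has no idea that it's being consumed slowly; all the timing decisions live in the code calling next.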

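Whenever .next() is called on a generator, there are four different kinds of "events" that can suspend or end execution in the generator:

- A yield expression returns the next value in the sequence, as { value, done: false }
- A return statement returns the last value in the sequence, as { value, done: true }
- A throw statement halts execution in the generator entirely, and the error propagates to the caller of .next()
- Reaching the end of the generator function signals { done: true }, as the function implicitly returns undefined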

Once the g generator finishes iterating over a sequence, subsequent calls to g.next() will have no effect and just return { value: undefined, done: true }. The following code snippet demonstrates the idempotence we can observe when calling g.next repeatedly once a sequence has ended.

function* generator() {
  yield 'only'
}
const g = generator()
console.log(g.next())
// <- { value: 'only', done: false }
console.log(g.next())
// <- { value: undefined, done: true }
console.log(g.next())
// <- { value: undefined, done: true }
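Generators can also end with an explicit return statement. Here's a quick sketch using an illustrative withReturn generator: the returned value shows up on the first result with done: true, but consumers such as spread and for..of stop as soon as they see done: true, so they never include it.

```javascript
function* withReturn() {
  yield 'a'
  // The return value is attached to the first { done: true } result.
  return 'b'
}

const g = withReturn()
console.log(g.next())
// <- { value: 'a', done: false }
console.log(g.next())
// <- { value: 'b', done: true }
console.log(g.next())
// <- { value: undefined, done: true }

// Spread stops at done: true, so 'b' is discarded.
console.log([...withReturn()])
// <- ['a']
```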

4.3.3 Mixing Generators into Iterables

Let's do a quick recap of generators. Generator functions return generator objects when invoked. A generator object has a next method, which returns the next element in the sequence. The next method returns objects with a { value, done } shape.

The following example shows an infinite Fibonacci number generator. We then instantiate a generator object and read the first eight values in the sequence.

function* fibonacci() {
  let previous = 0
  let current = 1
  while (true) {
    yield current
    const next = current + previous
    previous = current
    current = next
  }
}
const g = fibonacci()
console.log(g.next())
// <- { value: 1, done: false }
console.log(g.next())
// <- { value: 1, done: false }
console.log(g.next())
// <- { value: 2, done: false }
console.log(g.next())
// <- { value: 3, done: false }
console.log(g.next())
// <- { value: 5, done: false }
console.log(g.next())
// <- { value: 8, done: false }
console.log(g.next())
// <- { value: 13, done: false }
console.log(g.next())
// <- { value: 21, done: false }

Iterables follow a similar pattern. The iterable protocol dictates that the Symbol.iterator method should return an object with a next method. That method should return sequence elements following a { value, done } shape. The following example shows a fibonacci iterable that's a rough equivalent of the generator we were just looking at.

const fibonacci = {
  [Symbol.iterator]() {
    let previous = 0
    let current = 1
    return {
      next() {
        const value = current
        const next = current + previous
        previous = current
        current = next
        return { value, done: false }
      }
    }
  }
}
const sequence = fibonacci[Symbol.iterator]()
console.log(sequence.next())
// <- { value: 1, done: false }
console.log(sequence.next())
// <- { value: 1, done: false }
console.log(sequence.next())
// <- { value: 2, done: false }
console.log(sequence.next())
// <- { value: 3, done: false }
console.log(sequence.next())
// <- { value: 5, done: false }
console.log(sequence.next())
// <- { value: 8, done: false }
console.log(sequence.next())
// <- { value: 13, done: false }
console.log(sequence.next())
// <- { value: 21, done: false }

Let's reiterate. An iterable's Symbol.iterator method should return an object with a next method: generator functions do just that. The next method should return objects with a { value, done } shape: generator functions do that too. What happens if we change the fibonacci iterable to use a generator function for its Symbol.iterator property? As it turns out, it just works.

The following example shows the iterable fibonacci object using a generator function to define how it will be iterated. Note how that iterable has the exact same contents as the fibonacci generator function we saw earlier. We can use yield and yield*, and all of the semantics found in generator functions hold.

const fibonacci = {
  *[Symbol.iterator]() {
    let previous = 0
    let current = 1
    while (true) {
      yield current
      const next = current + previous
      previous = current
      current = next
    }
  }
}
const g = fibonacci[Symbol.iterator]()
console.log(g.next())
// <- { value: 1, done: false }
console.log(g.next())
// <- { value: 1, done: false }
console.log(g.next())
// <- { value: 2, done: false }
console.log(g.next())
// <- { value: 3, done: false }
console.log(g.next())
// <- { value: 5, done: false }
console.log(g.next())
// <- { value: 8, done: false }
console.log(g.next())
// <- { value: 13, done: false }
console.log(g.next())
// <- { value: 21, done: false }

Meanwhile, the iterable protocol also holds up. To verify that, you might use a construct like for..of, instead of manually creating the generator object. The following example uses for..of and introduces a circuit breaker to prevent an infinite loop from crashing the program.

for (const value of fibonacci) {
  console.log(value)
  if (value > 20) {
    break
  }
}
// <- 1
// <- 1
// <- 2
// <- 3
// <- 5
// <- 8
// <- 13
// <- 21

Moving on to more practical examples, let's see how generators can help us iterate tree data structures concisely.

4.3.4 Tree Traversal Using Generators

Algorithms to work with tree structures can be tricky to understand, often involving recursion. Consider the following bit of code, where we define a Node class that can hold a value and an arbitrary number of child nodes.

class Node {
  constructor(value, ...children) {
    this.value = value
    this.children = children
  }
}

Trees can be traversed using depth-first search, where we always try to go deeper into the tree structure, and when we can't, we move on to the next child in the list. In the following tree structure, a depth-first search algorithm would visit the nodes in 1, 2, 3, 4, 5, 6, 7, 8, 9, 10 order.

const root = new Node(1,
  new Node(2),
  new Node(3,
    new Node(4,
      new Node(5,
        new Node(6)
      ),
      new Node(7)
    )
  ),
  new Node(8,
    new Node(9),
    new Node(10)
  )
)

One way of implementing depth-first traversal for our tree would be using a generator function that yields the current node's value, and then iterates over its children yielding every item in their sequences using the yield* operator as a way of composing the recursive component of the iterator.

function* depthFirst(node) {
  yield node.value
  for (const child of node.children) {
    yield* depthFirst(child)
  }
}
console.log([...depthFirst(root)])
// <- [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]

A slightly different way of declaring the traversal algorithm would be to make the Node class iterable using the depthFirst generator. The following piece of code also takes advantage of the fact that child is a Node instance, and thus an iterable, using yield* to yield the iterable sequence for that child as part of the sequence for its parent node.

class Node {
  constructor(value, ...children) {
    this.value = value
    this.children = children
  }
  *[Symbol.iterator]() {
    yield this.value
    for (const child of this.children) {
      yield* child
    }
  }
}
// root must be built from this version of Node for the spread to work
console.log([...root])
// <- [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]

If we wanted to change traversal to a breadth-first algorithm, we could change the iterator into an algorithm like the one in the following piece of code. Here, we use a first-in first-out queue to keep a buffer of nodes we haven't visited yet. In each step of the iteration, starting with the root node, we yield the current node's value and push its children onto the queue. Children are always added to the end of the queue, but we pull items from the beginning of the queue. That means we'll always go through all the nodes at any given depth before going deeper into the tree structure.

class Node {
  constructor(value, ...children) {
    this.value = value
    this.children = children
  }
  *[Symbol.iterator]() {
    const queue = [this]
    while (queue.length) {
      const node = queue.shift()
      yield node.value
      queue.push(...node.children)
    }
  }
}
console.log([...root])
// <- [1, 2, 3, 8, 4, 9, 10, 5, 7, 6]

Generators are useful due to their expressiveness, while the iterator protocol allows us to define a sequence we can iterate at our own pace, which comes in handy when a tree has thousands of nodes and we need to throttle iteration for performance reasons.
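To make the throttling idea concrete, here's a sketch built on a hypothetical nextBatch helper that pulls at most a fixed number of values per call; a caller could process one batch, then schedule the next one with setTimeout. The Node class repeats the depth-first version from above so the block stands alone.

```javascript
// Hypothetical helper: pulls at most `size` values from an iterator and
// reports done: true once the iterator itself has signaled the end.
function nextBatch(iterator, size) {
  const values = []
  while (values.length < size) {
    const { value, done } = iterator.next()
    if (done) {
      return { values, done: true }
    }
    values.push(value)
  }
  return { values, done: false }
}

// Depth-first iterable tree, as in the earlier Node example.
class Node {
  constructor(value, ...children) {
    this.value = value
    this.children = children
  }
  *[Symbol.iterator]() {
    yield this.value
    for (const child of this.children) {
      yield* child
    }
  }
}

const tree = new Node(1, new Node(2), new Node(3, new Node(4)), new Node(5))
const iterator = tree[Symbol.iterator]()
console.log(nextBatch(iterator, 2))
// <- { values: [1, 2], done: false }
console.log(nextBatch(iterator, 2))
// <- { values: [3, 4], done: false }
console.log(nextBatch(iterator, 2))
// <- { values: [5], done: true }
```

Because the generator only advances when nextBatch asks for another value, nodes that haven't been reached yet cost nothing until the caller decides to continue.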

4.3.5 Consuming Generator Functions for Flexibility

Thus far in the chapter we've talked about generators in terms of constructing a consumable sequence. Generators can also be presented as an interface to a piece of code that decides how the generator function is to be iterated over.

In this section, we'll be writing a generator function that gets passed to a method, which loops over the generator consuming elements of its sequence. Even though you might think that writing code like this is unconventional at first, most libraries built around generators have their users write the generators while the library retains control over the iteration.

The following bit of code could be used as an example of how we'd like modelProvider to work. The consumer provides a generator function that yields crumbs to different parts of a model, getting back the relevant part of the model each time. The code consuming a generator object can pass results back into the generator function by way of g.next(result). When it does, the yield expression where the generator is suspended evaluates to result.

modelProvider(function* () {
  const items = yield 'cart.items'
  const item = items.reduce((left, right) =>
    left.price > right.price ? left : right
  )
  const details = yield `products.${item.id}`
  console.log(details)
})
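The two-way channel is easier to see in isolation. In this sketch, conversation is an illustrative generator driven by hand: each argument given to g.next becomes the value of the yield expression where the generator was paused, while the argument to the very first g.next call is ignored because no yield is waiting yet.

```javascript
function* conversation() {
  // The first next() call runs up to this yield; any argument it gets is ignored.
  const name = yield 'What is your name?'
  const color = yield `Hello, ${name}! Favorite color?`
  return `${name} likes ${color}`
}

const g = conversation()
console.log(g.next().value)
// <- 'What is your name?'
console.log(g.next('Ada').value)
// <- 'Hello, Ada! Favorite color?'
console.log(g.next('teal'))
// <- { value: 'Ada likes teal', done: true }
```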

Whenever a resource is yielded by the user-provided generator, execution in the generator function is suspended until g.next is called again, which may even happen asynchronously behind the scenes. The next code snippet implements a modelProvider function that iterates over paths yielded by the generator. Note also how we're passing data to g.next().

const model = {
  cart: {
    items: [item1, …, itemN]
  },
  products: {
    product1: { … },
    productN: { … }
  }
}
function modelProvider(paths) {
  const g = paths()
  pull()
  function pull(data) {
    const { value, done } = g.next(data)
    if (done) {
      return
    }
    const crumbs = value.split('.')
    // a new name is needed: redeclaring the `data` parameter with const is a SyntaxError
    const result = crumbs.reduce(followCrumbs, model)
    pull(result)
  }
}
function followCrumbs(data, crumb) {
  if (!data || !data.hasOwnProperty(crumb)) {
    return null
  }
  return data[crumb]
}

The largest benefit of asking consumers to provide a generator function is that providing them with the yield keyword opens up a world of possibilities where execution in their code may be suspended while the iterator performs an asynchronous operation in between g.next calls. Let's explore more asynchronous uses of generators in the next section.

4.3.6 Dealing with Asynchronous Flows

Going back to the example where we call modelProvider with a user-provided generator, let's consider what would change about our code if the model parts were to be provided asynchronously. The beauty of generators is that if the way we iterate over the sequence of paths were to become asynchronous, the user-provided function wouldn't have to change at all. We already have the ability to suspend execution in the generator while we fetch a piece of the model, and all it'd take would be to ask a service for the answer to the current path, return that value via an intermediary yield statement or in some other way, and then call g.next on the generator object.

Let's assume we're back at the following usage of modelProvider.

modelProvider(function* () {
  const items = yield 'cart.items'
  const item = items.reduce((left, right) =>
    left.price > right.price ? left : right
  )
  const details = yield `products.${ item.id }`
  console.log(details)
})

We'll be using fetch to make requests for each HTTP resource, which, as you may recall, returns a Promise. Note that in an asynchronous scenario we can't use for..of to go over the sequence, because for..of only supports synchronous iteration.

The next code snippet sends an HTTP request for each query to the model. The server is now in charge of producing the relevant bits of the model, and the client doesn't have to keep any state other than user authentication details such as cookies.

function modelProvider(paths) {
  const g = paths()
  pull()
  function pull(data) {
    const { value, done } = g.next(data)
    if (done) {
      return
    }
    fetch(`/model?query=${ encodeURIComponent(value) }`)
      .then(response => response.json())
      .then(data => pull(data))
  }
}
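To try the asynchronous version without a server, we can swap fetch for a stand-in. The sketch below assumes a hypothetical queryModel function that resolves a model path after a short setTimeout delay, much like fetch followed by response.json() would, and passes it to modelProvider as a second argument. The consumer's generator is exactly the same one used before; only the driver changed.

```javascript
// Hypothetical in-memory "server" standing in for the /model endpoint
const serverModel = {
  cart: { items: [{ id: 'p1', price: 10 }, { id: 'p2', price: 25 }] },
  products: { p1: { name: 'Notebook' }, p2: { name: 'Fountain pen' } }
}

// Resolves asynchronously, the way fetch(...).then(res => res.json()) would
function queryModel(path) {
  return new Promise(resolve => {
    setTimeout(() => {
      const part = path.split('.').reduce(
        (data, crumb) =>
          data != null && Object.prototype.hasOwnProperty.call(data, crumb)
            ? data[crumb]
            : null,
        serverModel
      )
      resolve(part)
    }, 10)
  })
}

// Same shape as the fetch-based modelProvider, with the service injected
function modelProvider(paths, query) {
  const g = paths()
  pull()
  function pull(data) {
    const { value, done } = g.next(data)
    if (done) {
      return
    }
    query(value).then(part => pull(part))
  }
}

const log = []
modelProvider(function* () {
  const items = yield 'cart.items'
  const item = items.reduce((left, right) =>
    left.price > right.price ? left : right
  )
  const details = yield `products.${ item.id }`
  log.push(details)
}, queryModel)
```

Right after modelProvider returns, log is still empty; it only fills in once both asynchronous queries have resolved.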

Always keep in mind that, while a yield expression is being evaluated, execution of the generator function is paused until the iterator requests the next item in the sequence (the next query for the model, in our example) by calling g.next. In this sense, code in a generator function looks and feels as if it were synchronous, even though yield pauses execution in the generator until g.next resumes it.
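The pause-and-resume behavior can be observed by recording the order in which driver code and generator code run. In this hypothetical sketch, the generator body doesn't start when queries() is called, only when g.next() is, and it stops again at each yield until the driver resumes it.

```javascript
// Records the order in which driver code and generator code run
const order = []

function* queries() {
  order.push('generator: started')
  const items = yield 'cart.items'
  order.push(`generator: received ${ items }`)
}

const g = queries()
order.push('driver: created generator')  // the body has not run yet
const { value } = g.next()               // runs the body up to the first yield
order.push(`driver: got query ${ value }`)
g.next('3 items')                        // resumes the paused yield expression
console.log(order)
```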

While generators let us write asynchronous code that appears synchronous, this introduces an inconvenience. How do we handle errors that arise in the iteration? If an HTTP request fails, for instance, how do we notify the generator and then handle the error notification in the generator function?

4.3.7 Throwing Errors at a Generator

Before we started thinking in terms of user-provided generators, which retain control of seemingly synchronous functions thanks to yield and suspension, we would've been hard pressed to find a use case for g.throw, a method that can be used to report errors that take place while the generator is suspended. Its applications become apparent when we think about the flow control code that runs in between yield expressions, where things could go wrong. When something goes wrong processing an item in the sequence, the code that's consuming the generator needs to be able to throw that error into the generator.

In the case of modelProvider, the iterator may experience network issues, or receive a malformed HTTP response, and fail to provide a piece of the model. In the following snippet of code, the fetch step was modified by adding an error callback that will be executed if the request fails or if parsing fails in response.json(), in which case we'll throw the exception at the generator function.

fetch(`/model?query=${ encodeURIComponent(value) }`)
  .then(response => response.json())
  .then(data => pull(data))
  .catch(err => g.throw(err))

When g.next is called, execution in generator code is unsuspended. The g.throw method also unsuspends the generator, but it causes an exception to be thrown at the location of the yield expression. An unhandled exception in a generator stops iteration, since the remaining yield expressions can no longer be reached. Generator code can wrap yield expressions in try/catch blocks to gracefully manage exceptions forwarded by iteration code, as shown in the following code snippet. This allows subsequent yield expressions to be reached, suspending the generator and putting the iterator in charge once again.

modelProvider(function* () {
  try {
    console.log('items in the cart:', yield 'cart.items')
  } catch (e) {
    console.error('uh oh, failed to fetch model.cart.items!', e)
  }
  try {
    console.log(`these are our products: ${ yield 'products' }`)
  } catch (e) {
    console.error('uh oh, failed to fetch model.products!', e)
  }
})
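The semantics of g.throw can be checked synchronously, without any network involved. In the sketch below (generator names and error messages are illustrative), a handled error lets the generator reach its next yield, so g.throw returns that next iterator result. An unhandled error propagates out of g.throw to the caller and finishes the generator.

```javascript
const events = []

function* guarded() {
  try {
    yield 'cart.items'
  } catch (e) {
    // g.throw(err) raises err right here, at the paused yield expression
    events.push(`caught: ${ e.message }`)
  }
  // Because the error was handled, this yield is still reachable
  yield 'products'
}

const g = guarded()
g.next()                                          // paused at yield 'cart.items'
const afterThrow = g.throw(new Error('network'))  // caught; now paused at 'products'
const finished = g.next()                         // runs to the end

function* unguarded() {
  yield 'cart.items'
  yield 'products' // never reached once the first yield throws
}

const u = unguarded()
u.next()
let escaped
try {
  u.throw(new Error('boom'))
} catch (e) {
  // Unhandled inside the generator, so the error comes back out of u.throw
  escaped = e.message
}
const afterUnhandled = u.next() // the generator is already finished
```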

Even though generator functions let us suspend execution and resume it asynchronously, we can use the same error handling semantics (try, catch, and throw) as with regular functions. Being able to use try/catch blocks in generator code lets us treat the code as if it were synchronous, even when there are HTTP requests sitting behind yield expressions, in iterator code.
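One detail worth noting about the fetch fragment shown earlier: it passes errors to g.throw but discards what g.throw returns. When the generator catches the error and reaches another yield, that iterator result holds the next query, so a driver that ignores it stalls. The following sketch, built on a hypothetical queryModel service that rejects every path except 'products', routes the results of both g.next and g.throw through the same handle function. It uses the two-argument form of .then so that errors thrown by the generator itself aren't fed back into g.throw; an error the generator doesn't catch surfaces as a rejected promise instead.

```javascript
// Hypothetical service: only 'products' resolves, every other path rejects
function queryModel(path) {
  return new Promise((resolve, reject) => {
    setTimeout(() => {
      if (path === 'products') {
        resolve(['notebook', 'pen'])
      } else {
        reject(new Error(`no model for ${ path }`))
      }
    }, 10)
  })
}

// Sketch of a driver that handles results from both g.next and g.throw
function modelProvider(paths, query) {
  const g = paths()
  handle(g.next())

  function handle({ value, done }) {
    if (done) {
      return
    }
    query(value).then(
      data => handle(g.next(data)), // resume the paused yield with the data
      err => handle(g.throw(err))   // raise the error at the paused yield
    )
  }
}

const log = []
modelProvider(function* () {
  try {
    const items = yield 'cart.items'
    log.push(['items in the cart:', items])
  } catch (e) {
    log.push(['failed to fetch model.cart.items', e.message])
  }
  try {
    const products = yield 'products'
    log.push(['products:', products])
  } catch (e) {
    log.push(['failed to fetch model.products', e.message])
  }
}, queryModel)
```

Even though the first query fails, the generator still gets to ask for 'products', because the iterator result returned by g.throw is handled like any other.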

4.3.8 Returning on Behalf of a Generator

Besides g.next and g.throw, generator objects have one more method at their disposal to determine how a generator sequence is iterated: g.return(value). This method unsuspends the generator function and executes return value at the location of yield, typically ending the sequence being iterated by the generator object. This is no different from what would occur if the generator function actually had a return statement in it.

function* numbers() {
  yield 1
  yield 2
  yield 3
}
const g = numbers()
console.log(g.next())
// <- { done: false, value: 1 }
console.log(g.return())
// <- { done: true }
console.log(g.next())
// <- { done: true }

Given that g.return(value) performs return value at the location of yield where the generator function was last suspended, a try/finally block could avoid immediate termination of the generated sequence, as statements in the finally block would be executed right before exiting. As shown in the following piece of code, that means yield expressions within the finally block can continue producing items for the sequence.

function* numbers() {
  try {
    yield 1
  } finally {
    yield 2
    yield 3
  }
  yield 4
  yield 5
}
const g = numbers()
console.log(g.next())
// <- { done: false, value: 1 }
console.log(g.return(-1))
// <- { done: false, value: 2 }
console.log(g.next())
// <- { done: false, value: 3 }
console.log(g.next())
// <- { done: true, value: -1 }

Let's now look at a simple generator function, where a few values are yielded and then a return statement is encountered.

function* numbers() {
  yield 1
  yield 2
  return 3
  yield 4
}

While you may place return value statements anywhere in a generator function, the returned value won't show up when iterating the generator using the spread operator or Array.from to build an array, nor when using for..of, as shown next.

console.log([...numbers()])
// <- [1, 2]
console.log(Array.from(numbers()))
// <- [1, 2]
for (const number of numbers()) {
  console.log(number)
  // <- 1
  // <- 2
}

This happens because the iterator result provided by executing g.return or a return statement contains the done: true signal, indicating that the sequence has ended. Even though that same iterator result also contains a sequence value, none of the previously shown methods take it into account when pulling a sequence from the generator. In this sense, return statements in generators should mostly be used as circuit-breakers and not as a way of providing the last value in a sequence.

One way of accessing the value returned from a generator is to step through it manually, calling g.next on a generator object and capturing the value of the iterator result even though done: true is present, as displayed in the following snippet.

const g = numbers()
console.log(g.next())
// <- { done: false, value: 1 }
console.log(g.next())
// <- { done: false, value: 2 }
console.log(g.next())
// <- { done: true, value: 3 }
console.log(g.next())
// <- { done: true }
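If you do need the return value, a small helper can step through the generator manually and keep the final value as well. The collect function below is a hypothetical helper, not a built-in. Delegating with yield* is another way to observe a return value, since a yield* expression evaluates to whatever the delegated generator returned.

```javascript
// Hypothetical helper: collects every yielded value plus the return value
function collect(generatorFunction) {
  const g = generatorFunction()
  const yielded = []
  let result = g.next()
  while (!result.done) {
    yielded.push(result.value)
    result = g.next()
  }
  // Once done is true, result.value holds whatever the generator returned
  return { yielded, returned: result.value }
}

function* numbers() {
  yield 1
  yield 2
  return 3
  yield 4 // unreachable
}

function* delegating() {
  // Re-yields 1 and 2, then evaluates to numbers' return value, 3
  const returned = yield* numbers()
  yield returned
}

console.log(collect(numbers))  // { yielded: [ 1, 2 ], returned: 3 }
console.log([...delegating()]) // [ 1, 2, 3 ]
```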

Due to the confusing differences between yield expressions and return statements, return in generators is best avoided except when a specific method wants to treat yield and return differently, the end goal always being to provide an abstraction in exchange for a simplified development experience.

In the following section, we'll build an iterator that leverages differences in yield versus return to perform both input and output based on the same generator function.

4.3.9 Asynchronous I/O Using Generators

The following piece of code shows a self-describing generator function where we indicate input sources and an output destination. This hypothetical method could be used to pull product information from the yielded endpoints, which could then be saved to the returned endpoint. An interesting aspect of this interface is that as a user you don't have to spend any time figuring out how to read and write information. You merely determine the sources and destination, and the underlying implementation figures out the rest.

saveProducts(function* () {
  yield '/products/modern-javascript'
  yield '/products/mastering-modular-javascript'
  return '/wishlists/books'
})

As a bonus, we'll have saveProducts return a promise that's fulfilled after the order is pushed to the returned endpoint, meaning the consumer will be able to execute callbacks after the order is filed. The generator function should also receive product data via the yield expressions, which can be passed into it by calling g.next with the associated product data.

saveProducts(function* () {
  const p1 = yield '/products/modern-javascript'
  const p2 = yield '/products/mastering-modular-javascript'
  return '/wishlists/books'
}).then(response => {
  // continue after storing the product list
})

Conditional logic could be used to allow saveProducts to target a user's shopping cart instead of one of their wish lists.

saveProducts(function* () {
  yield '/products/modern-javascript'
  yield '/products/mastering-modular-javascript'
  if (addToCart) {
    return '/cart'
  }
  return '/wishlists/books'
})

One of the benefits of taking this blanket "inputs and output" approach is that the implementation could be changed in a variety of ways, while keeping the API largely unchanged. The input resources could be pulled via HTTP requests or from a temporary cache, they could be pulled one by one or concurrently, or there could be a mechanism that combines all yielded resources into a single HTTP request. Other than semantic differences of pulling one value at a time versus pulling them all at the same time to combine them into a single request, the API would barely change in the face of significant changes to the implementation.
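As an illustration of that flexibility, here's a hypothetical concurrent variant. It drains the generator first to collect every input endpoint plus the output endpoint, then requests all inputs at once with Promise.all. The read and write functions are assumed stand-ins for HTTP calls, injected so the sketch runs without a network. Because the inputs are requested only after the generator has finished, its yield expressions evaluate to undefined here, which is exactly the semantic difference between pulling one value at a time and pulling them all together.

```javascript
// Hypothetical concurrent variant of saveProducts with injected I/O
function saveProductsConcurrently(productList, { read, write }) {
  const g = productList()
  const endpoints = []
  // Drain the generator: every yield is an input, the return is the output
  let item = g.next()
  while (!item.done) {
    endpoints.push(item.value)
    item = g.next()
  }
  const output = item.value
  // All inputs are requested at once; Promise.all keeps their order
  return Promise.all(endpoints.map(endpoint => read(endpoint)))
    .then(products => write(output, products))
}

// Hypothetical in-memory stand-ins for HTTP reads and writes
const saved = {}
const read = endpoint =>
  new Promise(resolve => setTimeout(() => resolve({ source: endpoint }), 10))
const write = (endpoint, products) => {
  saved[endpoint] = products
  return Promise.resolve({ saved: products.length })
}

const result = saveProductsConcurrently(function* () {
  yield '/products/modern-javascript'
  yield '/products/mastering-modular-javascript'
  return '/wishlists/books'
}, { read, write })

result.then(response => console.log(response)) // { saved: 2 }
```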

We'll go over an implementation of saveProducts bit by bit. First off, the following piece of code shows how we could combine fetch and its promise-based API to make an HTTP request for a JSON document about the first yielded product.

function saveProducts(productList) {
  const g = productList()
  const item = g.next()
  fetch(item.value)
    .then(res => res.json())
    .then(product => {
      // product holds the parsed JSON document for the first yielded endpoint
    })
}

In order to pull product data in series (asynchronously, but one at a time), we'll wrap the fetch call in a recursive function that gets invoked as we get responses about each product. At each step we fetch a product, call g.next to resume the generator function and ask for the next yielded item in the sequence, and then call more to fetch that item.

function saveProducts(productList) {
  const g = productList()
  more(g.next())
  function more(item) {
    if (item.done) {
      return
    }
    fetch(item.value)
      .then(res => res.json())
      .then(product => {
        more(g.next(product))
      })
  }
}

Thus far we're pulling all inputs and passing their details back to the generator via g.next(product), one item at a time. In order to leverage the return statement, we'll save the products in a temporary array and then POST the list to the output endpoint found on the iterator result once the sequence is marked as having ended.

function saveProducts(productList) {
  const products = []
  const g = productList()
  more(g.next())
  function more(item) {
    if (item.done) {
      save(item.value)
    } else {
      details(item.value)
    }
  }
  function details(endpoint) {
    fetch(endpoint)
      .then(res => res.json())
      .then(product => {
        products.push(product)
        more(g.next(product))
      })
  }
  function save(endpoint) {
    fetch(endpoint, {
      method: 'POST',
      body: JSON.stringify({ products })
    })
  }
}

At this point product descriptions are being pulled down, cached in the products array, forwarded to the generator body, and eventually saved in one fell swoop using the endpoint provided by the return statement.

In our original API design we suggested we'd return a promise from saveProducts so that callbacks could be chained and executed after the save operation. As we mentioned earlier, fetch returns a promise. By adding return statements all the way through our function calls, saveProducts returns the output of more, which returns the output of save or details, both of which return a promise created by a fetch call. In addition, each details call returns the result of calling more from inside its .then callback, so the promise returned by details won't settle until the promise returned by the next more call does. This chains the promises together, and the whole chain resolves only once the save call's POST request has completed and its response has been parsed.

function saveProducts(productList) {
  const products = []
  const g = productList()
  return more(g.next())
  function more(item) {
    if (item.done) {
      return save(item.value)
    }
    return details(item.value)
  }
  function details(endpoint) {
    return fetch(endpoint)
      .then(res => res.json())
      .then(product => {
        products.push(product)
        return more(g.next(product))
      })
  }
  function save(endpoint) {
    return fetch(endpoint, {
      method: 'POST',
      body: JSON.stringify({ products })
    })
      .then(res => res.json())
  }
}
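To exercise the final saveProducts without a server, the sketch below makes one assumption beyond the code above: fetch is injected as a fetchImpl parameter. The hypothetical fakeFetch mimics only the json() method of a Response, answering GET requests with a small document and POST requests with a count of stored products, and it records every request so we can check the order in which they happen.

```javascript
// saveProducts as above, except that fetch is injected as fetchImpl
function saveProducts(productList, fetchImpl) {
  const products = []
  const g = productList()
  return more(g.next())

  function more(item) {
    if (item.done) {
      return save(item.value)
    }
    return details(item.value)
  }

  function details(endpoint) {
    return fetchImpl(endpoint)
      .then(res => res.json())
      .then(product => {
        products.push(product)
        return more(g.next(product))
      })
  }

  function save(endpoint) {
    return fetchImpl(endpoint, {
      method: 'POST',
      body: JSON.stringify({ products })
    }).then(res => res.json())
  }
}

// Hypothetical stand-in for fetch that mimics only Response#json()
const requests = []
function fakeFetch(url, options = {}) {
  requests.push(`${ options.method || 'GET' } ${ url }`)
  const body = options.method === 'POST'
    ? { stored: JSON.parse(options.body).products.length }
    : { slug: url.split('/').pop() }
  return Promise.resolve({ json: () => Promise.resolve(body) })
}

const received = []
const result = saveProducts(function* () {
  const p1 = yield '/products/modern-javascript'
  const p2 = yield '/products/mastering-modular-javascript'
  received.push(p1, p2)
  return '/wishlists/books'
}, fakeFetch)

result.then(response => console.log(response)) // { stored: 2 }
```

The promise returned by saveProducts resolves only after both GET requests and the final POST have completed, which is the chaining behavior described above.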

As you may have noticed, the implementation doesn't hardcode any important aspects of the operation, which means you could use the inputs and output pattern generically whenever you have zero or more inputs you want to pipe into one output. The consumer ends up with an elegant-looking method that's easy to understand: they yield input stores and return an output store. Furthermore, our use of promises makes it easy to chain this operation with others. This way, we keep a potential tangle of conditional statements and flow control mechanisms in check by abstracting flow control away into the iteration mechanism underneath the saveProducts method.

We've looked into flow control mechanisms such as callbacks, events, promises, iterators, and generators. The following two sections delve into async/await, async iterators, and async generators, all of which build upon a mixture of the flow control mechanisms we've uncovered thus far in this chapter.

4.4 Async Functions