Take a moment to look back at what we’ve achieved so far. We started with a simple web application, with an infrastructure that was ready to fail. We took this app and used AWS services like Elastic Load Balancing and Auto Scaling to make it resilient, with certain components scaling out (and in) with traffic demand. We built an infrastructure (without any investment) that is capable of handling huge traffic at relatively low operational cost. Quite a feat!

Scaling beyond this amount of traffic requires more drastic changes in the application. We need to decouple, and for that we’ll use Amazon SimpleDB, Amazon Simple Notification Service (SNS), and Amazon Simple Queue Service (SQS), together with S3, which we have already seen in action. But these services are more versatile than just allowing us to scale. We can use the decoupling principle in other scenarios, either because we already have distinct components or because we can easily add functionality.

In this chapter, we’ll be presenting many different use cases. Some are already in production, others are planned or dreamed of. The examples are meant to show what you can do with these services and help you to develop your own by using code samples in various languages. We have chosen to use real-world applications that we are working with daily. The languages are Java, PHP, and Ruby, and the examples should be enough to get you going in other languages with libraries available.

In the SQS Developer Guide, you can read that “Amazon SQS is a distributed queue system that enables web service applications to quickly and reliably queue messages that one component in the application generates to be consumed by another component. A queue is a temporary repository for messages that are awaiting processing.”

And that’s basically all it is! You can have many writers hitting a queue at the same time. SQS does its best to preserve order, but the distributed nature makes it impossible to guarantee it. If you really need to preserve order, you can add your own identifier as part of the queued messages, but approximate order is probably enough to work with in most cases. A trade-off like this is necessary in massively scalable services like SQS. This is not very different from eventual consistency, as seen in S3 and (as we will show soon) in SimpleDB.
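The idea of carrying your own identifier can be sketched in a few lines of Ruby. This is only an illustration that makes no SQS calls: the message envelope and the field names `seq` and `payload` are assumptions made for the example, not part of any SQS API.

```ruby
require 'json'

# Producer side: wrap each payload with a sequence number we assign ourselves.
# The field names "seq" and "payload" are hypothetical, chosen for this sketch.
def wrap(seq, payload)
  JSON.generate({ 'seq' => seq, 'payload' => payload })
end

# Consumer side: bodies may arrive in any order, so sort on our own identifier.
def in_order(bodies)
  bodies.map { |body| JSON.parse(body) }.sort_by { |msg| msg['seq'] }
end

sent = [wrap(1, 'resize'), wrap(2, 'watermark'), wrap(3, 'publish')]
received = [sent[1], sent[2], sent[0]] # simulate out-of-order delivery
puts in_order(received).map { |msg| msg['payload'] }.inspect
```

Sorting only helps for a batch you have already collected; a consumer that must process strictly in order would also need to buffer until the gaps are filled.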

You can also have many readers, and SQS guarantees each message is delivered at least once. Reading a message is atomic—locks are used to keep multiple readers from processing the same message. Because in such a distributed system you can’t assume a message is not immediately deleted, SQS sets it to invisible. This invisibility has an expiration, called visibility timeout, that defaults to 30 seconds. If this is not enough, you can change it in the queue or per message, although the recommended way is to use different queues for different visibility timeouts. After processing the message, it must be deleted explicitly (if successful, of course).
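To make the receive, invisible, delete cycle concrete, here is a toy in-memory model in Ruby. It is a sketch of the semantics described above and not how SQS is implemented; the class `ToyQueue` and its methods are made up for this example, and time is passed in explicitly so the behavior is easy to follow.

```ruby
# A toy, in-memory model of receive/delete with a visibility timeout.
# ToyQueue is hypothetical; it only illustrates the semantics.
class ToyQueue
  Message = Struct.new(:id, :body, :invisible_until)

  def initialize(visibility_timeout = 30)
    @visibility_timeout = visibility_timeout
    @messages = []
    @next_id = 0
  end

  def send_message(body)
    @next_id += 1
    @messages << Message.new(@next_id, body, 0)
  end

  # Return the first visible message and hide it until now + timeout.
  def receive(now)
    msg = @messages.find { |m| m.invisible_until <= now }
    msg.invisible_until = now + @visibility_timeout if msg
    msg
  end

  # Deleting is explicit; a reader that crashes never gets here.
  def delete(msg)
    @messages.delete(msg)
  end
end

queue = ToyQueue.new(30)
queue.send_message('image-1.jpg')
first = queue.receive(0)    # reader A takes the message at t=0
hidden = queue.receive(10)  # reader B finds nothing visible at t=10
again = queue.receive(31)   # A never deleted it, so it reappears at t=31
queue.delete(again)
puts [first.body, hidden.inspect, again.body].inspect
```

The reappearing message is why processing should be safe to repeat: a slow or crashed reader leads to a second delivery, not to a lost message.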

You can have as many queues as you want, but leaving them inactive is a violation of intended use. We couldn’t figure out what the penalties are, but the principle of cloud computing is to minimize waste. Message size is variable, and the maximum is 64 KB. If you need to work with larger objects, the obvious place to store them is S3. In our examples, we use this combination as well.
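The combination of a size limit and S3 for larger objects can be sketched as follows. This is a minimal Ruby illustration under stated assumptions: `store` is a plain Hash standing in for S3, and the `s3-pointer:` prefix is a hypothetical convention invented here, not something SQS or S3 defines.

```ruby
MAX_BODY_BYTES = 64 * 1024 # the maximum message size quoted in the text

# Decide what goes on the queue: the payload itself, or a pointer to it.
# `store` stands in for S3 (a Hash in this sketch); the "s3-pointer:"
# prefix is a made-up convention so the reader can tell the two apart.
def queue_body(payload, key, store)
  return payload if payload.bytesize <= MAX_BODY_BYTES

  store[key] = payload
  "s3-pointer:#{key}"
end

store = {}
puts queue_body('small job description', 'jobs/1', store)
puts queue_body('x' * (MAX_BODY_BYTES + 1), 'jobs/2', store)
```

In the examples that follow we always pass the S3 URL, which avoids the size check entirely.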

One last important thing to remember is that messages are not retained indefinitely. Messages will be deleted after four days by default, but you can have your queue retain them for a maximum duration of two weeks.

We’ll show a number of interesting applications of SQS. For Kulitzer, we want more flexibility in image processing, so we’ve decided to decouple the web application from the image processing. For Marvia, we want to implement delayed PDF processing: users can choose to have their PDFs processed later, at a cheaper rate. And finally, we’ll use Decaf to have our phone monitor our queues and notify when they are out of bounds.

Remember how we handle image processing with Kulitzer? We basically have the web server spawn a background job for asynchronous processing, so the web server (and the user) can continue their business. The idea is perfect, and it works quite well. But there are emerging conversations within the team about adding certain features to Kulitzer that are not easily implemented in the current infrastructure.

Two ideas floating around are RAW images and video. For both these formats, we face the same problem: there is no ready-made solution to cater to our needs. We expect to have to build our service on top of multiple available free (and less free) solutions. Even though these features have not yet been requested and aren’t found on any road map, we feel we need to offer the flexibility for this innovation.

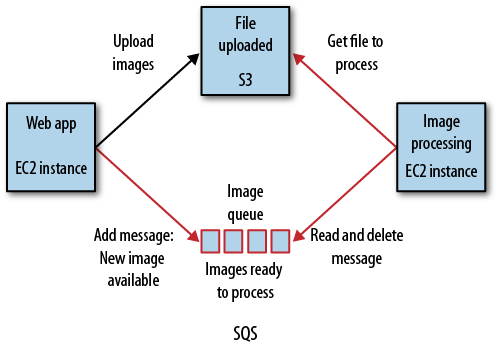

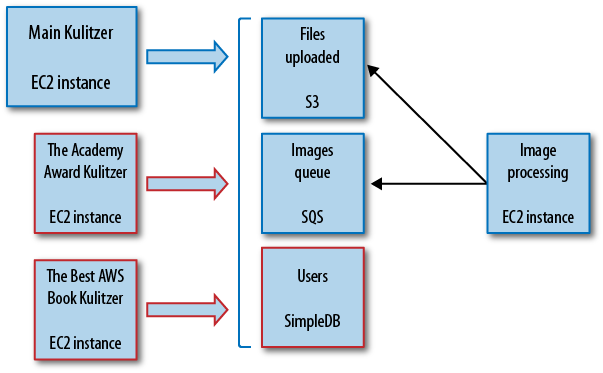

For the postprocessing of images (and video) to be more flexible, we need to separate this component from the web server. The idea is to implement a postprocessing component, picking up jobs from the SQS queue as they become available. The web server will handle the user upload, move the file to S3, and add this message to the queue to be processed. The images (thumbnails, watermarked versions, etc.) that are not yet available will be replaced by a “being processed” image (with an expiration header in the past). As soon as the images are available, the user will see them. Figure 4-1 shows how this could be implemented using a different EC2 instance for image processing in case scalability became a concern. The SQS image queue and the image processing EC2 instance are introduced in this change.

We already have the basics in our application, and we just need to separate them. We will move the copying of the image to S3 out of the background jobs, because if something goes wrong we need to be able to notify the user immediately. The user will wait until the image has been uploaded, so they can be notified on the spot if something went wrong (wrong file type, invalid image, etc.). This simplifies the app, making it easier to maintain. If the upload to S3 was successful, we add an entry to the images queue.

Note

SQS is great, but there are not many tools to work with. You can use the SQS Scratchpad, provided by AWS, to create your first queues, list queues, etc. It’s not a real app, but it’s valuable nonetheless. You can also write your own tools on top of the available libraries. If you work with Python, you can start the shell, load boto, and do the necessary work by hand.

This is all it takes to create a queue and add the image as a queue message. You can run this example with irb, using the right_aws Ruby gem from RightScale. Use images_queue.size to verify that your message has really been added to the queue:

```ruby
require 'rubygems'
require 'right_aws'

# get SQS service with AWS credentials
sqs = RightAws::SqsGen2.new("AKIAIGKECZXA7AEIJLMQ",
    "w2Y3dx82vcY1YSKbJY51GmfFQn3705ftW4uSBrHn")

# create the queue, if it doesn't exist, with a VisibilityTimeout of 120 (seconds)
images_queue = sqs.queue("images", true, 120)

# the only thing we need to pass is the URL to the image in S3
images_queue.send_message(
    "https://s3.amazonaws.com/media.kulitzer.com.production/212/24/replication.jpg")
```

That is more or less what we will do in the web application to add messages to the queue. Getting messages from the queue, which is part of our image processing component, is done in two steps. If you only get a message from the queue (receive), SQS will set that message to invisible for a certain time. After you process the message, you can delete it from the queue.

right_aws provides a method pop, which both receives and deletes the message in one operation. We encourage you not to use this, as it can lead to errors that are very hard to debug because you have many components in your infrastructure and a lot of them are transient (as instances can be terminated by Auto Scaling, for example). This is how we pick up, process, and, upon success, delete messages from the images_queue:

```ruby
require 'rubygems'
require 'right_aws'

sqs = RightAws::SqsGen2.new("AKIAIGKECZXA7AEIJLMQ",
    "w2Y3dx82vcY1YSKbJY51GmfFQn3705ftW4uSBrHn")

# create the queue, if it doesn't exist, with a VisibilityTimeout of 120 (seconds)
images_queue = sqs.queue("images", true, 120)

# now get the messages
message = images_queue.receive

# process the message, if any (receive returns nil when the queue is empty)
# the process method is application-specific
if message && process(message.body)
  message.delete
end
```

This is all it takes to decouple distinct components of your application. We only pass around the full S3 URL, because that is all we need for now. The different image sizes that are going to be generated will be put in the same directory in the same bucket, and are distinguishable by filename. Apart from being able to scale, we can also scale flexibly. We can use the queue as a buffer, for example, so we can run the processing app with fewer resources. There is no user waiting and we don’t have to be snappy in performance.

Tip

This is also the perfect moment to introduce reduced redundancy storage, which is less reliable than standard S3 storage but notifies you when a particular file is compromised. The notification can be configured to place a message in the queue we just created, so that our post-processing component recognizes it as a compromised file and regenerates it from the original.

Reduced redundancy storage is cheaper than standard S3 and can be enabled per file. Especially with large content repositories, you only require the original to be as redundant as S3 originally was. For all generated files, we don’t need that particular security.

Notification is through SNS, which we’ll see in action in a little bit. But it is relatively easy to have SNS forward the notification to the queue we are working with.

Marvia is a young Dutch company located in the center of Amsterdam. Even though it hasn’t been around for long, its applications are already very impressive. For example, it built the Speurders application for the Telegraaf (one of the largest Dutch daily newspapers), which allows anyone to place a classified ad in the print newspaper. No one from the Telegraaf bothers you, and you can decide exactly how your ad will appear in the newspaper.

But this is only the start. Marvia will expose the underlying technology of its products in what you can call an API. This means Marvia is creating a PDF cloud that will be available to anyone who has structured information that needs to be printed (or displayed) professionally.

One of the examples showing what this PDF cloud can do is Cineville.nl, a weekly film schedule that is distributed in print in and around Amsterdam. Cineville gets its schedule information from different cinemas and automatically has its PDF created. This PDF is then printed and distributed. Cineville has completely eradicated human intervention in this process, illustrating the extent of the new industrialization phase we are entering with the cloud.

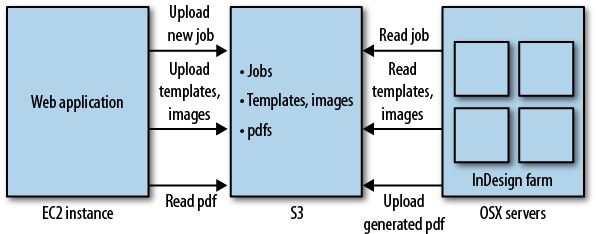

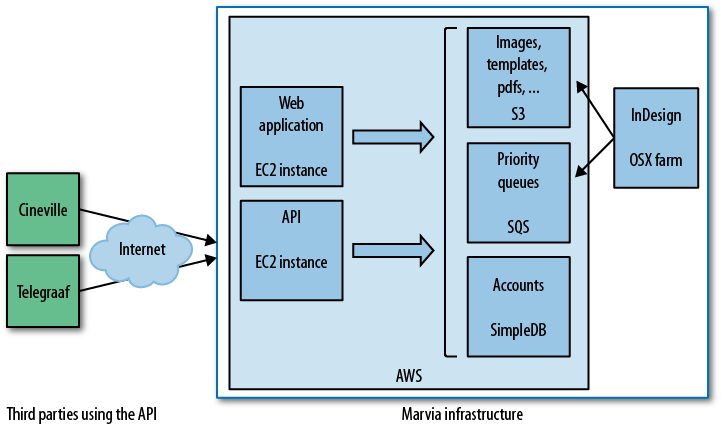

The Marvia infrastructure is already decoupled, and it consists of two distinct components. One component (including a web application) creates the different assets needed for generating a PDF: templates, images, text, etc. These are then sent to the second component, the InDesign farm. This is an OSX-based render farm, and since AWS does not (yet) support OSX, Marvia chose to build it on its own hardware. This architecture is illustrated in Figure 4-2.

The two components already communicate, by sharing files through S3. It works, but it lacks the flexibility to innovate the product. One of the ideas being discussed is to introduce the concept of quality of service. The quality of the PDFs is always the same—they’re very good because they’re generated with care, and care takes time. That’s usually just fine, but sometimes you’re in a hurry and you’re willing to pay something extra for preferential treatment.

Again, we built in flexibility. For now we are stuck with OSX on physical servers, but as soon as that is available in the cloud, we can easily start optimizing resources. We can use spot instances (see the Tip), for example, to generate PDFs with the lowest priority. The possibilities are interesting.

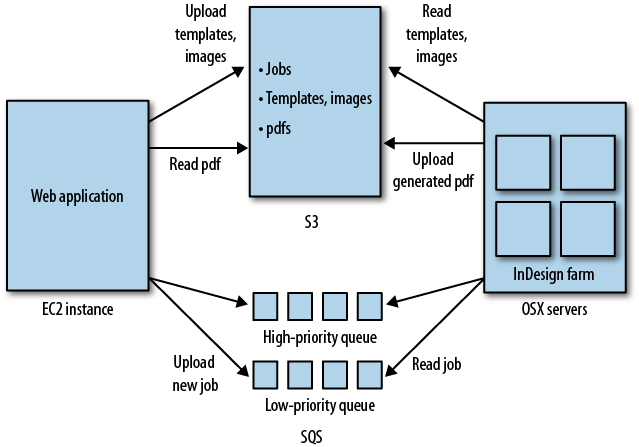

But let’s look at what is necessary to implement a basic version of quality of service. We want to create two queues: high-priority and normal. When there are messages in the high-priority queue, we serve those; otherwise, we work on the rest. If necessary, we could add more rules to prevent starvation of the low-priority queue. These changes are shown in Figure 4-3.
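The serving rule, including one possible guard against starvation, could look like the Ruby sketch below. It works on plain Arrays instead of SQS queues, and both the `PriorityPicker` class and the "serve one normal job after a streak of high-priority jobs" rule are assumptions made for illustration, not Marvia's actual policy.

```ruby
# Pick the next job from two in-memory queues (plain Arrays here).
# High-priority jobs win, except that after `max_streak` consecutive
# high-priority picks one normal job is served to avoid starvation.
# PriorityPicker and its rule are hypothetical, for illustration only.
class PriorityPicker
  def initialize(max_streak = 3)
    @max_streak = max_streak
    @streak = 0
  end

  # Returns the next job, or nil when both queues are empty.
  def next_job(high, normal)
    take_normal = high.empty? || (@streak >= @max_streak && !normal.empty?)
    if take_normal
      @streak = 0
      normal.shift
    else
      @streak += 1
      high.shift
    end
  end
end

high = %w[h1 h2 h3 h4]
normal = %w[n1 n2]
picker = PriorityPicker.new(3)
order = []
while (job = picker.next_job(high, normal))
  order << job
end
puts order.inspect # ["h1", "h2", "h3", "n1", "h4", "n2"]
```

With real queues, `high.shift` and `normal.shift` would become receive calls on the two SQS queues, and an empty receive on the high-priority queue would trigger the fallback.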

AWS has a full-fledged PHP library, complete with code samples and some documentation. It is relatively easy to install using PHP Extension and Application Repository (PEAR). To install the stable version of the AWS PHP library in your PHP environment, execute the following commands (for PHP 5.3, most required packages are available by default, but you do have to install the JSON library and cURL):

```
$ pear install pecl/json
$ apt-get install curl libcurl3 php5-curl
$ pear channel-discover pear.amazonwebservices.com
$ pear install aws/sdk
```

The first script receives messages that are posted with HTTP, and adds them to the right SQS queue. The appropriate queue is passed as a request parameter. The job description is fictional, and hardcoded for the purpose of the example. We pass the job description as an encoded JSON array. The queue is created if it doesn’t exist already:

Note

We have used the AWS PHP SDK as is, but the path to sdk.class.php might vary depending on your installation. The SDK reads definitions from a config file in your home directory or the directory of the script. We have included them in the script for clarity.

```php
<?php
require_once('/usr/share/php/AWSSDKforPHP/sdk.class.php');

define('AWS_KEY', 'AKIAIGKECZXA7AEIJLMQ');
define('AWS_SECRET_KEY', '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');
define('AWS_ACCOUNT_ID', '457964863276');

# get queue name
$queue_name = $_GET['queue'];

# construct the message
$job_description = array(
    'template' =>
        'https://s3-eu-west-1.amazonaws.com/production/templ_1.xml',
    'assets' =>
        'https://s3-eu-west-1.amazonaws.com/production/assets/223',
    'result' =>
        'https://s3-eu-west-1.amazonaws.com/production/pdfs/223');
$body = json_encode($job_description);

$sqs = new AmazonSQS();
$sqs->set_region($sqs::REGION_EU_W1);

$high_priority_jobs_queue = $sqs->create_queue($queue_name);
$high_priority_jobs_queue->isOK() or
    die('could not create queue high-priority-jobs');

# add the message to the queue
$response = $sqs->send_message(
    $high_priority_jobs_queue->body->QueueUrl(0),
    $body);

pr($response->body);

function pr($var) { print '<pre>'; print_r($var); print '</pre>'; }
?>
```

Below, you can see an example of what the output might look like (we would invoke it with something like http://<elastic_ip>/write.php?queue=high-priority):

```
CFSimpleXML Object
(
    [@attributes] => Array
        (
            [ns] => http://queue.amazonaws.com/doc/2009-02-01/
        )

    [SendMessageResult] => CFSimpleXML Object
        (
            [MD5OfMessageBody] => d529c6f7bfe37a6054e1d9ee938be411
            [MessageId] => 2ffc1f6e-0dc1-467d-96be-1178f95e691b
        )

    [ResponseMetadata] => CFSimpleXML Object
        (
            [RequestId] => 491c2cd1-210c-4a99-849a-dbb8767d0bde
        )

)
```

In this example, we read messages from the SQS queue, process them, and delete them afterward:

```php
<?php
require_once('/usr/share/php/AWSSDKforPHP/sdk.class.php');

define('AWS_KEY', 'AKIAIGKECZXA7AEIJLMQ');
define('AWS_SECRET_KEY', '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');
define('AWS_ACCOUNT_ID', '457964863276');

$queue_name = $_GET['queue'];

$sqs = new AmazonSQS();
$sqs->set_region($sqs::REGION_EU_W1);

$queue = $sqs->create_queue($queue_name);
$queue->isOK() or die('could not create queue ' . $queue_name);

$receive_response = $sqs->receive_message($queue->body->QueueUrl(0));

# process the message...

$delete_response = $sqs->delete_message($queue->body->QueueUrl(0),
    (string)$receive_response->body->ReceiptHandle(0));

$body = json_decode($receive_response->body->Body(0));
pr($body);

function pr($var) { print '<pre>'; print_r($var); print '</pre>'; }
?>
```

The output for this example would look like this (we would invoke it with something like http://<elastic_ip>/read.php?queue=high-priority):

```
stdClass Object
(
    [template] => https://s3-eu-west-1.amazonaws.com/production/templ_1.xml
    [assets] => https://s3-eu-west-1.amazonaws.com/production/assets/223
    [result] => https://s3-eu-west-1.amazonaws.com/production/pdfs/223
)
```

We are going to get a bit ahead of ourselves in this section. Operating apps using advanced services like SQS, SNS, and SimpleDB is the subject of Chapter 7. But we wanted to show how to use SQS from Java too. And, as we only consider real examples interesting (the only exception being Hello World, of course), we’ll show you excerpts of Java source from Decaf.

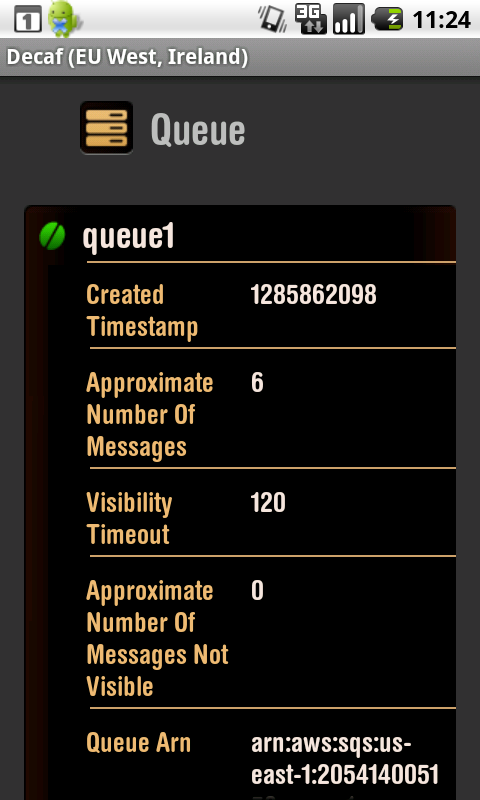

If you start using queues as the glue between the components of your applications, you are probably curious about the state. It is not terribly interesting how many messages are in the queue at any given time if your application is working. But if something goes wrong with the pool of readers or writers, you can experience a couple of different situations:

- The queue has more messages than normal.
- The queue has too many invisible messages (messages are being processed but not deleted).
- The queue doesn’t have enough messages.

We are going to add a simple SQS browser to Decaf. It shows the queues in a region, and you can see the state of a queue by inspecting its attributes. The attributes we are interested in are ApproximateNumberOfMessages and ApproximateNumberOfMessagesNotVisible. We already have all the mechanics in place to monitor certain aspects of your infrastructure automatically; we just need to add appropriate calls to watch the queues.
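The three situations map directly onto these two attributes. The Ruby sketch below classifies a queue from an attributes hash such as the one SQS returns (values arrive as strings). The function name `queue_state`, the symbols it returns, and the thresholds are all assumptions chosen for the example; sensible bounds depend entirely on your application.

```ruby
# Classify a queue from the two attributes named above.
# Attribute values arrive as strings; the thresholds are our own choice.
# queue_state and its return symbols are hypothetical names.
def queue_state(attrs, min_visible:, max_visible:, max_in_flight:)
  visible = Integer(attrs.fetch('ApproximateNumberOfMessages'))
  in_flight = Integer(attrs.fetch('ApproximateNumberOfMessagesNotVisible'))

  return :too_many_invisible if in_flight > max_in_flight
  return :too_many_messages if visible > max_visible
  return :too_few_messages if visible < min_visible

  :ok
end

attrs = {
  'ApproximateNumberOfMessages' => '1200',
  'ApproximateNumberOfMessagesNotVisible' => '3'
}
puts queue_state(attrs, min_visible: 0, max_visible: 1000, max_in_flight: 50)
```

Because both numbers are approximate, it is probably wise to alert only when a bound is exceeded on several consecutive checks.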

For using the services covered in this chapter, there is the AWS SDK for Android. To set up the SQS service, all you need to do is pass it your account credentials and set the right endpoint for the region you are working in. In the Decaf examples, we are using the us-east-1 region. If you are not interested in Android and just want to use plain Java, there is the AWS SDK for Java, which covers all the services. All the examples given in this book run with both sets of libraries.

In this example, we invoke the ListQueues action, which returns a list of queue URLs. Any other information about the queues (such as current number of messages, time when it was created, etc.) has to be retrieved in a separate call passing the queue URL, as we show in the next sections.

If you have many queues, it is possible to retrieve just some of them by passing a queue name prefix parameter. Only the queues with names starting with that prefix will be returned:

```java
import com.amazonaws.auth.BasicAWSCredentials;
import com.amazonaws.services.sqs.AmazonSQS;
import com.amazonaws.services.sqs.AmazonSQSClient;

// ...

// prepare the credentials
String accessKey = "AKIAIGKECZXA7AEIJLMQ";
String secretKey = "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";

// create the SQS service
AmazonSQS sqsService = new AmazonSQSClient(
        new BasicAWSCredentials(accessKey, secretKey));

// set the endpoint for us-east-1 region
sqsService.setEndpoint("https://sqs.us-east-1.amazonaws.com");

// get the current queues for this region
this.queues = sqsService.listQueues().getQueueUrls();
```

The attributes of a queue at the time of writing are:

- ApproximateNumberOfMessages: The approximate number of visible messages it contains. This number is approximate because of the distributed architecture on which SQS is implemented, but generally it should be very close to reality.
- ApproximateNumberOfMessagesNotVisible: The approximate number of messages that are not visible. These are messages that have been retrieved by some component to be processed but have not yet been deleted by that component, and the visibility timeout is not over yet.
- VisibilityTimeout: The visibility timeout. This is how long a message can be in invisible mode before SQS decides that the component responsible for it has failed and puts the message back in the queue.
- CreatedTimestamp: The timestamp when the queue was created.
- LastModifiedTimestamp: The timestamp when the queue was last updated.
- Policy: The permissions policy.
- MaximumMessageSize: The maximum message size. Messages larger than the maximum will be rejected by SQS.
- MessageRetentionPeriod: The message retention period. This is how long SQS will keep your messages if they are not deleted. The default is four days. After this period is over, the messages are automatically deleted.

You can change the visibility timeout, policy, maximum message size, and message retention period by invoking the SetQueueAttributes action.

You can indicate which attributes you want to list, or use All to get the whole list, as done here:

```java
import com.amazonaws.services.sqs.AmazonSQS;
import com.amazonaws.services.sqs.AmazonSQSClient;
import com.amazonaws.services.sqs.model.GetQueueAttributesRequest;

// ...

GetQueueAttributesRequest request = new GetQueueAttributesRequest();

// set the queue URL, which identifies the queue (hardcoded for this example)
String queueURL = "https://queue.amazonaws.com/205414005158/queue4";
request = request.withQueueUrl(queueURL);

// we want all the attributes of the queue
request = request.withAttributeNames("All");

// make the request to the service
this.attributes = sqsService.getQueueAttributes(request).getAttributes();
```

Figure 4-4 shows a screenshot of our Android application, listing attributes of a queue.

If we want to check the number of messages in a queue and trigger an alert (email message, Android notification, SMS, etc.), we can request the attribute ApproximateNumberOfMessages:

```java
import java.util.Map;

import com.amazonaws.auth.BasicAWSCredentials;
import com.amazonaws.services.sqs.AmazonSQS;
import com.amazonaws.services.sqs.AmazonSQSClient;
import com.amazonaws.services.sqs.model.GetQueueAttributesRequest;

// ...

// get the attribute ApproximateNumberOfMessages for this queue
GetQueueAttributesRequest request = new GetQueueAttributesRequest();
String queueURL = "https://queue.amazonaws.com/205414005158/queue4";
request = request.withQueueUrl(queueURL);
request = request.withAttributeNames("ApproximateNumberOfMessages");
Map<String, String> attrs = sqsService.getQueueAttributes(request).getAttributes();

// get the approximate number of messages in the queue
int messages = Integer.parseInt(attrs.get("ApproximateNumberOfMessages"));

// compare with max, the user's choice for maximum number of messages
if (messages > max) {
    // if number of messages exceeds maximum,
    // notify the user using Android notifications...
    // ...
}
```

Note

All the AWS web service calls can throw exceptions (AmazonServiceException, AmazonClientException, and so on) signaling different errors. For SQS calls you could get errors such as “Invalid attribute” and “Nonexistent queue.” In general, you could get other errors common to all web services such as “Authorization failure” or “Invalid request.” We are not showing the try-catch in these examples, but in your application you should make sure the different error conditions are handled.

AWS says that SimpleDB is “a highly available, scalable, and flexible nonrelational data store that offloads the work of database administration.” There you have it! In other words, you can store an extreme amount of structured information without worrying about security, data loss, and query performance. And you pay only for what you use.

SimpleDB is not a relational database, but to explain what it is, we will compare it to a relational database since that’s what we know best. SimpleDB is not a database server, so there is no such thing in SimpleDB as a database. In SimpleDB, you create domains to store related items. Items are collections of attributes, or key-value pairs. The attributes can have multiple values. An item can have 256 attributes and a domain can have one billion attributes; together, this may take up to 10 GB of storage.

You can compare a domain to a table, and an item to a record in that table. A traditional relational database imposes the structure by defining a schema. A SimpleDB domain does not require items to be all of the same structure. It doesn’t make sense to have all totally different items in one domain, but you can change the attributes you use over time. As a consequence, you can’t define indexes, but they are implicit: every attribute is indexed automatically for you.

Domains are distinct—they are on their own. Joins, which are the most powerful feature in relational databases, are not possible. You cannot combine the information in two domains with one single query. Joins were introduced to reconstruct normalized data, where normalizing data means ripping it apart to avoid duplication.

Because of the lack of joins, there are two different approaches to handling relations (previously handled by joins). You can either introduce duplication (e.g., store employees in the employer domain and vice versa), or you can use multiple queries and combine the data at the application level. If you have data duplication and if several applications write to your SimpleDB domains, each of them will have to be aware of this when you make changes or add items to maintain consistency. In the second case, each application that reads your data will need to aggregate information from different domains.
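The second approach, combining data at the application level, can be sketched in Ruby as follows. The two domains are modelled as plain Hashes of item name to attributes, and no SimpleDB call is made; the item names, attribute names, and the helper `employer_with_staff` are all hypothetical.

```ruby
# Two "domains" modelled as Hashes of item name => attributes.
# All names and values here are made up for the illustration.
EMPLOYERS = {
  'emp-1' => { 'name' => 'Acme', 'city' => 'Amsterdam' }
}.freeze

EMPLOYEES = {
  'p-1' => { 'name' => 'Alice', 'employer' => 'emp-1' },
  'p-2' => { 'name' => 'Bob', 'employer' => 'emp-1' },
  'p-3' => { 'name' => 'Carol', 'employer' => 'emp-2' }
}.freeze

# Query 1: fetch the employer. Query 2: select employees pointing at it.
# The application, not the data store, stitches the results together.
def employer_with_staff(employer_id)
  employer = EMPLOYERS.fetch(employer_id)
  staff = EMPLOYEES.values.select { |e| e['employer'] == employer_id }
  employer.merge('employees' => staff.map { |e| e['name'] })
end

puts employer_with_staff('emp-1').inspect
```

The price of this approach is one extra round trip per relation; the price of duplication is that every writer has to keep the copies in sync.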

There is one other aspect of SimpleDB that is important to understand. If you add or update an item, it does not have to be immediately available. SimpleDB reserves the right to take some time to process the operations you fire at it. This is what is called eventual consistency, and for many kinds of information, it is not a problem.

But in some cases, you need the latest, most up-to-date information. Think of an online auction website like eBay, where people bid for different items. At the moment a purchase is made, it’s important that the right—latest—price is read from the database. To address those situations, SimpleDB introduced two new features in early 2010: consistent read and conditional put/delete. A consistent read guarantees to return values that reflect all previously successful writes. Conditional put/delete guarantees that a certain operation is performed only when one of the attributes exists or has a particular value. With this, you can implement a counter, for example, or implement locking/concurrency.
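How a conditional put makes a counter safe can be shown with a toy in-memory model in Ruby. This is a sketch of the idea only: `ToyDomain`, `put_if`, and `increment` are hypothetical names and do not correspond to the SimpleDB API. Values are kept as strings because that is how SimpleDB stores attributes.

```ruby
# Toy model of a conditional put: the write succeeds only if the
# attribute still holds the value we expect. Not the SimpleDB API.
class ToyDomain
  def initialize
    @items = Hash.new { |hash, key| hash[key] = {} }
  end

  def get(item, attr)
    @items[item][attr]
  end

  # Returns true when the write was applied, false when the check failed.
  def put_if(item, attr, expected, value)
    return false unless @items[item][attr] == expected

    @items[item][attr] = value
    true
  end
end

# Increment a counter by retrying until our conditional put wins.
def increment(domain, item, attr)
  loop do
    current = domain.get(item, attr) # nil when the attribute is missing
    new_value = (current || '0').to_i + 1
    return new_value if domain.put_if(item, attr, current, new_value.to_s)
  end
end

domain = ToyDomain.new
increment(domain, 'page-1', 'hits')
puts increment(domain, 'page-1', 'hits') # 2
```

If another writer changes the value between the read and the put, the put is rejected and the loop simply reads again, so no increment is lost.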

We have to stress that SimpleDB is a service, and as such, it solves a number of problems for you. Indexing is one we already mentioned. High availability, performance, and infinite scalability are other benefits. You don’t have to worry about replicating your data to protect it against hardware failures, and you don’t have to think of what hardware you are using if you have more load, or how to handle peaks. Also, the software upgrades are taken care of for you.

But even though SimpleDB makes sure your data is safe and highly available by seamlessly replicating it in several data centers, Amazon itself doesn’t provide a way to manually make backups. So if you want to protect your data against your own mistakes and be able to revert back to previous versions, you will have to resort to third-party solutions that can back up SimpleDB data, for example to S3.

Table 4-1 highlights some of the differences between the SimpleDB data store and relational databases.

Tip

SimpleDB is not (yet) part of the AWS Console, but to get an idea of the API that SimpleDB provides, you can play around with the SimpleDB Scratchpad.

Table 4-1. SimpleDB data store versus relational databases

| Relational databases | SimpleDB |

|---|---|

| Tables are organized in databases | No databases; all domains are loose in your AWS account |

| Schemas to define table structure | No predefined structure; variable attributes |

| Tables, records, and columns | Domains, items, and attributes |

| Columns have only one value | Attributes can have multiple values |

| You define indexes manually | All attributes are automatically indexed |

| Data is normalized; broken down to its smallest parts | Data is not always normalized |

| Joins are used to denormalize | No joins; either duplicate data or multiple queries |

| Transactions are used to guarantee data consistency | Eventual consistency, consistent read, or conditional put/delete |

So what can you do with SimpleDB? It is different enough from traditional relational databases that you need to approach your problem from other angles, yet it is similar enough to make that extremely difficult.

AWS itself also seems to struggle with this. If you look at the featured use cases, they mention logging, online games, and metadata indexing. Logging is suitable for SimpleDB, but you do have to realize you can’t use SimpleDB for aggregate reporting: there are no aggregate functions such as SUM, AVERAGE, MIN, etc. Metadata indexing is a very good pattern of applying SimpleDB to your problem; you can have data stored in S3 and use SimpleDB domains to store pointers to S3 objects with more information about them (metadata). It is very quick and easy to search and query this metadata.
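Since there are no aggregate functions, any reporting over logged items has to happen in the client. A minimal Ruby sketch, assuming the items have already been fetched and that each carries a numeric value as a string; the attribute name `ms` and the helper `summarize` are made up for the example.

```ruby
# Log items as they might come back from a query: all values are strings.
# Aggregates such as SUM or AVERAGE have to be computed by the client.
# The attribute name "ms" and this helper are hypothetical.
def summarize(items, attr)
  values = items.map { |item| Float(item.fetch(attr)) }
  return nil if values.empty?

  { sum: values.sum, min: values.min, max: values.max,
    average: values.sum / values.size }
end

log_items = [
  { 'path' => '/upload', 'ms' => '120' },
  { 'path' => '/upload', 'ms' => '80' },
  { 'path' => '/upload', 'ms' => '100' }
]
puts summarize(log_items, 'ms').inspect
```

For large logs this means pulling every matching item over the wire, which is the practical reason aggregate reporting is a poor fit.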

Another class of problems SimpleDB is perfect for is sharing information between isolated components of your application (decoupling). Where we use SQS for communicating actions or messages, SimpleDB provides a way to share indexed information, i.e., information that can be searched. A SimpleDB item is limited in size, but you can use S3 for storing bigger objects, for example images and videos, and point to them from SimpleDB. You could call this metadata indexing.

Other classes of problems where SimpleDB is useful are:

- Loosely coupled systems

This is our favorite use of SimpleDB so far. Loosely coupled components share information, but are otherwise independent.

Suppose you have a big system and you suddenly realize you need to scale by separating components. You have to consider what to do with the data that is shared by the resulting components. SimpleDB has the advantage of having no setup or installation overhead, and it’s not necessary to define the structure of all your data in advance. If the data you have to share is not very complex, SimpleDB is a good choice. Your data will be highly available for all your components, and you will be able to retrieve it quickly (by selecting or searching) thanks to indexing.

- Fat clients

For years, everyone has been working on thin clients; the logic of a web application is located on the server side. With Web 2.0, the clients are getting a bit fatter, but still, the server is king. The explosion of smartphone apps and app stores shows that the fat client is making a comeback. These new, smarter apps do a lot themselves, but in the age of the cloud they can’t do everything, of course. SimpleDB is a perfect companion for this type of system: self-contained clients operating on cloud-based information. Not really thick, but certainly not thin: thick-thin-clients.

One advantage of SimpleDB here is that it’s ready to use right away. There is no setup or administration hassle, the data is secure, and, most importantly, SimpleDB provides access through web services that can be called easily from these clients. Typically, many applications take advantage of storing data in the cloud to build different kinds of clients—web, smartphone, desktop—accessing the same data.

- Large (linear) datasets

The obvious example of a large, simple dataset in the context of Amazon is books. A quick calculation shows that you can store 31,250,000 books in one domain, if 32 attributes is enough for one book. We couldn’t find the total number of different titles on Amazon.com, but Kindle was reported to offer 500,000 titles in April 2010. Store PDFs in S3, and you are seriously on your way to building a bookstore.

Other examples are music and film—much of the online movie database IMDb’s functionality could be implemented with SimpleDB. If you need to scale and handle variations in your load, SimpleDB makes sure you have enough resources, and guarantees good performance when searching through your data.
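
The book count quoted above follows from a simple division. As a minimal sketch, assuming a SimpleDB limit of 1 billion attributes per domain (a limit we take from the service documentation, not from the text above) and using a hypothetical helper named books_per_domain:

```ruby
# Assumed per-domain limit (not stated in the text): 1 billion attributes.
ATTRIBUTES_PER_DOMAIN = 1_000_000_000
# From the text: 32 attributes are enough to describe one book.
ATTRIBUTES_PER_BOOK = 32

# Hypothetical helper: how many items fit if each uses a fixed number
# of attributes.
def books_per_domain(attribute_limit, attributes_per_item)
  attribute_limit / attributes_per_item
end

puts books_per_domain(ATTRIBUTES_PER_DOMAIN, ATTRIBUTES_PER_BOOK)
```

If a book needed 64 attributes instead, the same calculation would halve the number of titles per domain.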

- Hyperpersonal

A new application of cloud-based structured storage (SimpleDB) can be called hyperpersonal storage. For example, saving the settings or preferences of your applications/devices or your personal ToDo list makes recovery or reinstallation very easy. Everyone has literally hundreds (perhaps even thousands) of these implicit or explicit little lists of personal information.

This is information that is normally not shared with others. It is very suitable for SimpleDB because there is no complex structure behind it.

- Scale

Not all applications are created equal, and “some are more equal than others.” Most public web applications would like to have as many users as Amazon.com, but the reality is different. If you are facing the problem of too much load, you have probably already optimized your systems fully. It is time to take more dramatic measures.

As described in Chapter 1, the most drastic thing you can do is eliminate joins. If you get rid of joins, you release part of your information; it becomes isolated and can be used independently of other information. You can then move this free information to SimpleDB, which takes over responsibilities such as scalability and availability while giving you good performance.

On the Web you can buy, sell, and steal, but you can’t usually organize a contest. That is why Kulitzer is one of a kind. Contests are everywhere. There are incredibly prestigious contests like the Cannes Film Festival or the Academy Awards. There are similar prizes in every country and every school. There are other kinds of contests like American Idol, and every year thousands of girls want to be the prom queen.

Kulitzer aims to be one platform for all. If you want to host a contest, you are more than welcome to. But some of these festivals require something special—they want their own Kulitzer. At this moment, we want to meet this demand, but we do not want to invest in developing something like Google Sites where you can just create your own customized contest environment. We will simply set up a separate Kulitzer environment, designed to the wishes of the client.

But the database of users is very important, and we want to keep it on our platform. We believe that, in the end, Kulitzer will be the brand that gives credibility to the contests it hosts. Kulitzer is the reputation system for everyone participating in contests. This means that our main platform and the specials are all built on one user base, with one reputation system, and a recognizable experience for the users (preferences are personal, for example; you take them with you). We are going to move the users to SimpleDB so they can be accessed both by the central Kulitzer and by the separate special Kulitzer environments. Figure 4-5 shows the new architecture. The new components are the SimpleDB users domain and the new EC2 instances for separate contests.

Note

Figure 4-5 does not show the RDS instance used for the database, which we introduced in Chapter 1. With this change, each new special Kulitzer would use an RDS instance of its own for its relational data. In fact, each Kulitzer environment would be run on a different AWS account. To simplify the figure, ELBs are not shown.

The solution of moving the users to SimpleDB falls into several of the classes of problems described above. It is a large linear dataset we need to scale, and we also deal with disparate (decoupled) systems.

In the examples below, we show how to create an item in a users domain, and then how to retrieve a user item using Ruby.

To add a user, all you need is something like the following. We use the RightAWS Ruby library. The attribute id will be the item name, which is always unique in SimpleDB. When using SimpleDB with RightAWS, we need an additional gem called uuidtools, which you can install with gem install uuidtools:

require 'rubygems'

require 'right_aws'

require 'sdb/active_sdb'

RightAws::ActiveSdb.establish_connection("AKIAIGKECZXA7AEIJLMQ",

"w2Y3dx82vcY1YSKbJY51GmfFQn3705ftW4uSBrHn")

# right_aws' simpledb is still alpha. it works, but it feels a bit

# experimental and does not resemble working with SQS. Anyway, you need

# to subclass Base to access a domain. The name of the class will be

# lowercased to domain name.

class Users < RightAws::ActiveSdb::Base

end

# create domain users if it doesn't exist yet,

# same as RightAws::ActiveSdb.create_domain("users")

Users.create_domain

# add Arjan

# note: id is used by right_aws sdb as the name, and names are unique

# in simpledb. but create is sort of idempotent, doesn't matter if you

# create it several times, it starts to act as an update.

Users.create(

'id' => 'arjanvw@gmail.com',

'login' => 'mushimushi',

'description' =>

'total calligraphy enthusiast ink in my veins!!',

'created_at' => '1241263900',

'updated_at' => '1283845902',

'admin' => '1',

'password' =>

'33a1c623d4f95b793c2d0fe0cadc34e14f27a664230061135',

'salt' => 'Koma659Z3Zl8zXmyyyLQ',

'login_count' => '22',

'failed_login_count' => '0',

'last_request_at' => '1271243580',

'active' => '1',

'agreed_terms' => '1')

Once you know who you want, it is easy and fast to get everything. This particular part of the RightScale SimpleDB Ruby implementation does not follow the SQS way. SQS is more object oriented and easier to read. But the example below is all it takes to get a particular item. If you want to perform more complex queries, it doesn’t get much more difficult. For example, getting all admins can be expressed with something like administrators = Users.find(:all, :conditions=> [ "['admin'=?]", "1"]):

require 'rubygems'

require 'right_aws'

require 'sdb/active_sdb'

RightAws::ActiveSdb.establish_connection("AKIAIGKECZXA7AEIJLMQ",

"w2Y3dx82vcY1YSKbJY51GmfFQn3705ftW4uSBrHn")

class Users < RightAws::ActiveSdb::Base

end

# get Arjan

arjan = Users.find('arjanvw@gmail.com')

# reload to get all the attributes

arjan.reload

puts arjan.inspect

Note

As we were writing this book, we found that there are not many alternatives to a good SimpleDB solution for Ruby. We chose to use RightAWS because of consistency with the SQS examples, and because we found it was the most comprehensive of the libraries we saw. The implementation of SimpleDB is a bit “Railsy”: it generates IDs automatically and uses those as the names for the items. You can overwrite that behavior, as we did, at your convenience.

With Marvia, we started to implement some of our ideas regarding quality of service, a finer-grained approach to product differentiation (Example 2: Priority PDF Processing for Marvia (PHP)). We created a priority lane for important jobs using SQS, giving the user the option of preferential treatment. We introduced flexibility at the job level, but we want to give more room for product innovation.

The current service is an all-you-can-eat plan for creating your PDFs. This is interesting, but for some users it is too much. In some periods, they generate tens or even hundreds of PDFs per week, but in other periods they only require the service occasionally. These users are more than willing to spend extra money during their busy weeks if they can lower their recurring costs.

We want to introduce this functionality gradually into our application, starting by implementing a fair-use policy on our entry-level products. For this, we need two things: we need to keep track of actual jobs (number of PDFs created), and we need a phone to inform customers if they are over the limit. If we know users consistently breach the fair use, it is an opportunity to upgrade. So for now we only need to start counting jobs.

At the same time, we are working toward a full-blown API that implements many of the features of the current application and that will be open for third parties to use. Extracting the activity per account information from the application and moving it to a place where it can be shared is a good first step in implementing the API. So the idea is to move accounts to SimpleDB. SimpleDB is easily accessible by different components of the application, and we don’t have to worry about scalability. Figure 4-6 shows the new Marvia ecosystem.

Adding an item to a domain in SimpleDB is just as easy as sending a message to a queue in SQS. An item always has a name, which is unique within its domain; in this case, we chose the ID of the account as the name. We are going to count the number of PDFs generated for all accounts, and we also want to share whether an account is restricted under the fair-use policy of Marvia.
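
One detail worth keeping in mind for the counter: SimpleDB stores and compares all values as strings, so numbers sort lexicographically unless they are padded to a fixed width. The following Ruby sketch illustrates the effect. The helper pad_counter is hypothetical, and the width of five digits is an assumption chosen to match the PDFs attribute in this example.

```ruby
# Hypothetical helper: store a counter as a fixed-width, zero-padded string.
def pad_counter(n, width = 5)
  format("%0#{width}d", n)
end

# Unpadded numbers, compared as strings, come out in the wrong order.
plain = %w[9 10 100].sort

# Zero-padded values sort the same way the numbers do.
padded = [9, 10, 100].map { |n| pad_counter(n) }.sort

puts plain.inspect
puts padded.inspect
```

The width has to be chosen up front: five digits covers counters up to 99,999, and a larger counter would need wider padding for every stored value.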

As with the SQS create_queue method, the create_domain method is idempotent (it doesn’t matter if you run it twice; nothing changes). We need to set the region because we want the lowest latency possible and the rest of our infrastructure is in Europe. And instead of having to use JSON to add structure to the message body, we can add multiple attributes as name/value pairs. We’ll have the attribute fair, indicating whether this user account is under the fair-use policy, and PDFs, containing the number of jobs done. We want both attributes to have a single value, so we have to specify that we don’t want to add, but rather replace, existing attribute/value pairs in the put_attributes method:

<?php

require_once('/usr/share/php/AWSSDKforPHP/sdk.class.php');

define('AWS_KEY', 'AKIAIGKECZXA7AEIJLMQ');

define('AWS_SECRET_KEY', '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');

define('AWS_ACCOUNT_ID', '457964863276');

# construct the message (use zero padding to handle

# simpledb's lexicographic ordering)

$account = array(

'fair' => 'yes',

'PDFs' => sprintf('%05d', '0'));

$sdb = new AmazonSDB();

$sdb->set_region($sdb::REGION_EU_W1);

$accounts = $sdb->create_domain('accounts');

$accounts->isOK() or

die('could not create domain accounts');

$response = $sdb->put_attributes('accounts',

'jurg@9apps.net', $account, true);

pr($response->body);

function pr($var) { print '<pre>'; print_r($var); print '</pre>'; }

?>

If you want to get all attributes of a specific item, you can use the get_attributes method. Just state the domain name and item name, and you will get a CFSimpleXML object with all attributes. This is supposed to be easy, but we don’t find it very straightforward. But if you understand it, you can parse XML with very brief statements, which is convenient compared to other ways of dealing with XML:

<?php

require_once('/usr/share/php/AWSSDKforPHP/sdk.class.php');

define('AWS_KEY', 'AKIAIGKECZXA7AEIJLMQ');

define('AWS_SECRET_KEY', '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');

define('AWS_ACCOUNT_ID', '457964863276');

$sdb = new AmazonSDB();

$sdb->set_region($sdb::REGION_EU_W1);

$accounts = $sdb->create_domain('accounts');

$accounts->isOK() or

die('could not create domain accounts');

$response = $sdb->get_attributes('accounts',

'jurg@9apps.net');

pr($response->body);

function pr($var) { print '<pre>'; print_r($var); print '</pre>'; }

?>

As output when invoking this script, we will see something like this:

CFSimpleXML Object

(

[@attributes] => Array

(

[ns] => http://sdb.amazonaws.com/doc/2009-04-15/

)

[GetAttributesResult] => CFSimpleXML Object

(

[Attribute] => Array

(

[0] => CFSimpleXML Object

(

[Name] => PDFs

[Value] => 00000

)

[1] => CFSimpleXML Object

(

[Name] => fair

[Value] => yes

)

)

)

[ResponseMetadata] => CFSimpleXML Object

(

[RequestId] => eec8b61d-4107-0f1c-45b2-219f5f0b895a

[BoxUsage] => 0.0000093282

)

)

Before the introduction of conditional puts and consistent reads, some things were impossible to guarantee: for example, atomically incrementing the value of an attribute. But with conditional puts, we can do just that. SimpleDB does not have an increment operator, so we have to first get the value of the attribute we want to increment. It is possible that someone else updated that particular attribute. We can try, but the conditional put will fail. Not a problem: we can just try again. (We just introduced a possible race condition, but we are not generating thousands of PDFs a second; if we do 100 a day per account, it is a lot.)
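
The read, conditional put, and retry cycle described here can be sketched without touching the service at all. The Ruby below uses an in-memory stand-in, FakeItem, which is hypothetical and not part of any AWS library; its put_if method only imitates the semantics of a conditional put (write only if the stored value still equals the expected one). The bound of five attempts is also our assumption.

```ruby
# In-memory stand-in for a SimpleDB item; not the real service or an SDK.
class FakeItem
  def initialize(attrs)
    @attrs = attrs.dup
  end

  # Stand-in for a consistent read of one attribute.
  def get(name)
    @attrs[name]
  end

  # Stand-in for a conditional put: write only if the current value
  # still equals `expected`, and report whether the write happened.
  def put_if(name, value, expected)
    return false unless @attrs[name] == expected
    @attrs[name] = value
    true
  end
end

# Read the counter, try to write counter + 1, and retry if another
# writer changed the value in between.
def increment(item, name, max_attempts = 5)
  max_attempts.times do
    current = item.get(name)
    updated = format('%05d', current.to_i + 1)
    return updated if item.put_if(name, updated, current)
  end
  raise "gave up incrementing #{name} after #{max_attempts} attempts"
end

item = FakeItem.new('PDFs' => '00041')
puts increment(item, 'PDFs')
```

Bounding the number of attempts is a design choice in this sketch: a loop that retries on every failure would also spin forever on errors that have nothing to do with a lost race, such as bad credentials.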

To be sure we have the most up-to-date value for this attribute, we do a consistent read. You can consider a consistent read as a flush for writes (puts)—using consistent reads forces all operations to be propagated over the replicated instances of your data. A regular read can get information from a replicated instance that does not yet have the latest updates in the system. A consistent read can be slower, especially if there are operations to be forced to propagate:

<?php

require_once('/usr/share/php/AWSSDKforPHP/sdk.class.php');

define('AWS_KEY', 'AKIAIGKECZXA7AEIJLMQ');

define('AWS_SECRET_KEY', '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');

define('AWS_ACCOUNT_ID', '457964863276');

$sdb = new AmazonSDB();

$sdb->set_region($sdb::REGION_EU_W1);

$accounts = $sdb->create_domain( 'accounts');

$accounts->isOK() or

die('could not create domain accounts');

# we have to be a bit persistent; even though it

# is unlikely someone else might have incremented

# during our operation

do {

$response = $sdb->get_attributes('accounts',

'jurg@9apps.net', 'PDFs', array( 'ConsistentRead' => 'true'));

$PDFs = (int)$response->body->Value(0);

$account = array('PDFs' => sprintf( '%05d', $PDFs + 1));

$response = $sdb->put_attributes(

'accounts',

'jurg@9apps.net',

$account,

true,

array(

'Expected.Name' => 'PDFs',

'Expected.Value' => sprintf( '%05d', $PDFs)));

} while($response->isOK() === FALSE);

?>

Decaf is a mobile tool, and it offers a unique way of interacting with your AWS applications. If your app is on AWS and you use SimpleDB, there are a couple of interesting things you can learn on the go.

Sometimes you’re not at the office, but you would like to search or browse your items in SimpleDB. Perhaps you’re at a party and you want to know if someone you meet is a Kulitzer user. Or perhaps you run into a customer at a networking event and you want to know how many PDFs have been generated by her.

Another example addresses the competitive thrill seeker in us. If we have accounts in SimpleDB, we might want to monitor the size of that domain. We want to know when we hit 1,000 and, after that, 10,000.

So that is what we are going to do here. We are going to implement a basic SimpleDB browser that can search for and monitor certain attributes.

Tip

If you use the Eclipse development environment for Java, you can try out the AWS Toolkit for Eclipse, which provides some nice graphical tools for managing SimpleDB. You can manage your domains and items, and make select queries in a Scratchpad.

Listing existing domains is simple. The ListDomains action returns a list of domain names. This list has, by default, a maximum of 100 elements (you can change that by adding a parameter in the request), and if there are more than the maximum, you get a token to get the next “page” of results:

import java.util.ArrayList;

import java.util.List;

import com.amazonaws.auth.BasicAWSCredentials;

import com.amazonaws.services.simpledb.AmazonSimpleDB;

import com.amazonaws.services.simpledb.AmazonSimpleDBClient;

import com.amazonaws.services.simpledb.model.ListDomainsRequest;

import com.amazonaws.services.simpledb.model.ListDomainsResult;

// ...

// prepare the credentials

String accessKey = "AKIAIGKECZXA7AEIJLMQ";

String secretKey = "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";

// create the SimpleDB service

AmazonSimpleDB sdbService = new AmazonSimpleDBClient(

new BasicAWSCredentials(accessKey, secretKey));

// set the endpoint for us-east-1 region

sdbService.setEndpoint("https://sdb.amazonaws.com");

String nextToken = null;

ListDomainsRequest request = new ListDomainsRequest();

List<String> domains = new ArrayList<String>();

// get the existing domains for this region

do {

if (nextToken != null) request = request.withNextToken(nextToken);

ListDomainsResult result = sdbService.listDomains(request);

nextToken = result.getNextToken();

domains.addAll(result.getDomainNames());

} while (nextToken != null);

// show the domains in a list...

The way to list the items in a domain is to execute a select. The syntax is similar to that of an SQL select statement, but of course there are no joins; it is only for fetching items from one domain at a time. The results are paginated, as in the case of domains, in a way that each response never exceeds 1 MB.

The following retrieves all the items of a given domain:

import java.util.ArrayList;

import java.util.List;

import com.amazonaws.services.simpledb.AmazonSimpleDB;

import com.amazonaws.services.simpledb.AmazonSimpleDBClient;

import com.amazonaws.services.simpledb.model.Item;

import com.amazonaws.services.simpledb.model.SelectRequest;

import com.amazonaws.services.simpledb.model.SelectResult;

// ...

// determine which domain we want to list

String domainName = ...

AmazonSimpleDB simpleDBService = ...;

// initialize list of items

List<Item> items = new ArrayList<Item>();

// nextToken == null is the first page

String nextToken = null;

// set the select expression which retrieves all the items from this domain

SelectRequest request = new SelectRequest("select * from " + domainName);

do {

if (nextToken != null) request = request.withNextToken(nextToken);

// make the request to the service

SelectResult result = simpleDBService.select(request);

nextToken = result.getNextToken();

items.addAll(result.getItems());

} while (nextToken != null);

// show the items...

Note

In these examples, we retrieve all the pages using NextToken and then we show them all to simplify the example. When the number of items is very big and you are showing them to a user, it’s probably best to get a page, show it, then get more pages (if necessary). Otherwise, the user might have to wait a long time to see something. Also, the amount of data might be too big to keep in memory.
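
The page-at-a-time approach can be sketched in Ruby with a lazy enumerator. Nothing here calls SimpleDB: fetch is a hypothetical stand-in for a select call that takes a token (nil for the first page) and returns a pair of items and the next token, and PAGES is made-up data.

```ruby
# Wrap a page fetcher in an Enumerator so that pages are requested only
# when the consumer actually needs more items.
def each_item(fetch_page)
  Enumerator.new do |yielder|
    token = nil
    loop do
      items, token = fetch_page.call(token)
      items.each { |item| yielder << item }
      break if token.nil?
    end
  end
end

# Fake backend with three pages, standing in for a paginated select.
PAGES = {
  nil  => [%w[a b], 'p2'],
  'p2' => [%w[c d], 'p3'],
  'p3' => [%w[e], nil]
}

calls = 0
fetch = lambda do |token|
  calls += 1
  PAGES.fetch(token)
end

# Taking three items requires only the first two pages.
first_three = each_item(fetch).first(3)
puts first_three.inspect
puts "pages fetched: #{calls}"
```

Because the third page is never requested here, the user sees the first results sooner and the full result set never has to be held in memory.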

Of course, with a select expression, we can do more complex filtering by adding a where clause, listing only certain attributes and sorting the items by one of the attributes. For example, if we have the users table and we are looking for a user whose name is “Juan” and whose surname starts with “P”, we would do something like this:

select name, surname from users

where name like 'Juan%' intersection surname like 'P%'

In addition to '*' and a list of attributes, the output of a select can also be count(*) or itemName() to return the name of the item.
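
When select expressions are assembled from user input, the values need quoting. The Ruby sketch below assumes the quoting rules of the SimpleDB select syntax as we understand them: string values go in single quotes with embedded single quotes doubled, and names may be wrapped in backticks with embedded backticks doubled. The helper names quote_value, quote_name, and select_by_prefix are hypothetical.

```ruby
# Quote a value for a select expression: wrap it in single quotes and
# double any single quote inside it.
def quote_value(value)
  "'" + value.to_s.gsub("'", "''") + "'"
end

# Quote a domain or attribute name with backticks, doubling any backtick.
def quote_name(name)
  '`' + name.to_s.gsub('`', '``') + '`'
end

# Build a prefix search on one attribute of one domain.
def select_by_prefix(domain, attribute, prefix)
  "select * from #{quote_name(domain)} " \
    "where #{quote_name(attribute)} like #{quote_value(prefix + '%')}"
end

puts select_by_prefix('users', 'surname', "O'B")
```

Quoting every name is more than is strictly needed for simple names like users, but it keeps the helper uniform for domains whose names contain hyphens or dots.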

If you’d like to know the total number of items in your domain, one way is to use a select:

select count(*) from users

Another option is to retrieve the metadata of the domain, like we do here:

import com.amazonaws.services.simpledb.AmazonSimpleDB;

import com.amazonaws.services.simpledb.AmazonSimpleDBClient;

import com.amazonaws.services.simpledb.model.DomainMetadataRequest;

import com.amazonaws.services.simpledb.model.DomainMetadataResult;

// determine which domain we want to list

String domainName = ...

AmazonSimpleDB simpleDBService = ...;

// prepare the DomainMetadata request for this domain

DomainMetadataRequest request = new DomainMetadataRequest(domainName);

DomainMetadataResult result = simpleDBService.domainMetadata(request);

// we are interested in the total amount of items

long totalItems = result.getItemCount();

// show results

System.out.println("Domain metadata: " + result);

System.out.println("The domain " + domainName + " has " +

totalItems + " items.");

The DomainMetadataResult can look similar to this:

{ItemCount: 210,

ItemNamesSizeBytes: 3458,

AttributeNameCount: 2,

AttributeNamesSizeBytes: 18,

AttributeValueCount: 245,

AttributeValuesSizeBytes: 7439,

Timestamp: 1287263703, }

Both SQS and SimpleDB are kind of passive, or static. You can add things, and if you need something from them, you have to pull. This is OK for many services, but sometimes you need something more disruptive: you need to push instead of pull. This is what Amazon SNS gives us. You can push information to any component that is listening, and the messages are delivered right away. Table 4-2 shows a comparison of SNS and SQS.

Table 4-2. Comparing SNS to SQS

| SQS | SNS |

|---|---|

| Doesn’t require receivers to subscribe | Receivers subscribe and confirm |

| Each message is normally handled by one receiver, then deleted | Notifications are “broadcasted” to all subscribers |

| Pull model: messages are read by receivers when they want | Push model: notifications are automatically pushed to all subscribers |
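
The difference in delivery semantics summarized in Table 4-2 can be illustrated with two toy classes. This is only an in-memory model under our own assumptions, not how either service is implemented; ToyQueue and ToyTopic are hypothetical names, and subscription confirmation is left out.

```ruby
# Toy queue: a message goes to whichever receiver pulls it first.
class ToyQueue
  def initialize
    @messages = []
  end

  def send_message(body)
    @messages << body
  end

  # Pull model: returns the next message, or nil if none is waiting.
  def receive
    @messages.shift
  end
end

# Toy topic: every subscriber gets its own copy when a message is published.
class ToyTopic
  def initialize
    @subscribers = []
  end

  def subscribe(&handler)
    @subscribers << handler
  end

  # Push model: the topic calls each subscriber's handler.
  def publish(body)
    @subscribers.each { |handler| handler.call(body) }
  end
end

queue = ToyQueue.new
queue.send_message('job 1')
worker_a = queue.receive   # gets the message
worker_b = queue.receive   # gets nil: the message was already taken

topic = ToyTopic.new
inbox_a = []
inbox_b = []
topic.subscribe { |msg| inbox_a << msg }
topic.subscribe { |msg| inbox_b << msg }
topic.publish('contest started')
```

After running this, only worker_a holds the queued job, while both inboxes contain the published notification.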

SNS is not an easy service, but it is incredibly versatile. Luckily,

we are all living in “the network society,” so the essence should be

familiar to most of us. It is basically the same concept as a mailing list

or LinkedIn group—there is something you are interested in (a

topic, in SNS-speak), and you show that interest by

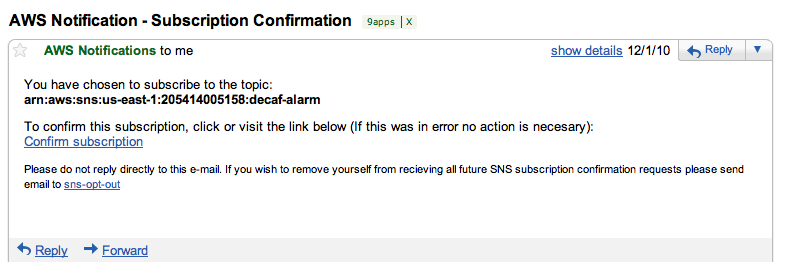

subscribing. Once the topic verifies that you exist by confirming the

subscription, you become part of the group receiving messages on that

topic.

Of course, notifications are not new—we have been using them for a while now. In software systems, one of the more successful models of publish/subscribe communication is the event model. Events are part of most high-level programming languages like Java and Objective-C. In a software system that spans multiple components, there is the notion of an event. In the field of web development, events are abundant, though mostly implicit. Every PHP script or Rails Action Controller method (public, of course) is an event handler; it reacts to a user requesting a page or another piece of software talking to an API.

There is one important difference between SNS and the events in programming languages as we know them: permissions are implemented differently. In Java, for example, you don’t need explicit permissions to subscribe to events from a certain component. This is left to the environment the application operates in. In the Android mobile platform, on the other hand, you have to request access to, say, the compass or the Internet in your application’s manifest file. If you don’t declare that you need those privileges, the corresponding calls fail at runtime, typically with a security exception. Because SNS is much more public, not just confined to one user on one machine, permissions are part of the events themselves. You need permission from the topic owner to receive information, and you need permission from the recipient to send information.

SNS can be seen as an event system, but how does it work? In SNS, you create topics. Topics are the conduits for sending (publishing) and receiving messages, or events. Anyone with an AWS account can subscribe to a topic, though that doesn’t mean they will be automatically permitted to receive messages. And the topic owner can subscribe non-AWS users on their behalf. Every subscriber has to explicitly opt in, though that term is usually related to mailing lists and spam. But it is the logical consequence in an open system like the Web (you can see this as the equivalent of border control in a country).

The most interesting thing about SNS has to do with the subscriber, the recipient of the messages. A subscriber can configure an endpoint, specifying how and where the message will be delivered. Currently SNS supports three types of endpoints: HTTP/HTTPS, email, and SQS. And this is exactly the reason we feel it is more than a notification system. You can integrate an API using SNS, enabling totally different ways of execution.

But, even as just a notification system, SNS rocks. (And Amazon promises to support SMS endpoints, as well.)

Tip

Happily, SNS is now included in the AWS Console, so you can take a look for yourself and experiment with topics, subscriptions, and messages.

A contest is the central component of Kulitzer. Suppose our company, 9Apps, needs a new identity. With our (still) limited budget, we can’t ask five high-profile marketing agencies to come to us and pitch for this project—we just don’t have that much money. What we can do, however, is ask a number of designers in our community to participate in a contest organized on Kulitzer. And because we use Kulitzer, we can easily invite our partners and customers to vote on the best design.

In our quest for flexibility, we realize that this problem would benefit from using SNS. The lifecycle of a contest is littered with events. We start a contest, we invite participants and a jury, and admission is opened and closed, as is the voting. And during the different periods of the contest, we want to notify different interested parties of progress. Later, we can add all sorts of components that “listen to a contest” and take action when certain events occur, for example, leaving messages on Facebook walls or sending tweets.

We could implement this with SNS by having a few central topics, like “registration” for indicating that a contest is open for participants, “admission” when the admission process starts, and “judging” when the voting starts. We could then have subscribers to each of these topics, but they would receive notifications related to all existing contests. It would move the burden of implementing security to the components themselves.

Instead, we choose to implement this differently. The idea is that each contest has its own topics for the kind of events we mentioned. The contest will handle granting/revoking permissions with SNS so that listeners are simpler to implement. It will give us much more flexibility to add features to Kulitzer later on.

Note

The current limits for SNS (which is in beta) state that you can have a maximum of 100 topics per account. This would clearly limit us when choosing to have several topics per contest. But we contacted Amazon about this issue, and its reply expressed interest in our use case, so it will not be a problem.

This is not the first time we needed to surpass the limits imposed by AWS. When this happens, Amazon requests that you explain your case. Amazon will then analyze it and decide if these limitations can be lifted for you.

The RightAWS library doesn’t officially support SNS yet, but we found a branch by Brendon Murphy that does. The branch is called add_sns, and you can download it from GitHub and create the gem yourself.

You will need to install some dependencies:

sudo gem install hoe

sudo gem install rcov

Then download the sources, extract them, and run the following to generate the gem in the pkg directory:

rake gem

To install it, run the following from the pkg directory:

sudo gem install right_aws-1.11.0.gem

Here, we show how to create the topics for a specific contest:

require "right_aws"

sns = RightAws::Sns.new("AKIAIGKECZXA7AEIJLMQ",

"w2Y3dx82vcY1YSKbJY51GmfFQn3705ftW4uSBrHn")

contest_id = 12

# create the topics

registration = sns.create_topic("#{contest_id}_registration")

# set a human readable name for the topic

registration.display_name = "Registration process begins"

admission = sns.create_topic("#{contest_id}_admission")

admission.display_name = "Admission process begins"

judging = sns.create_topic("#{contest_id}_judging")

judging.display_name = "Judging process begins"

There are two steps to subscribing to a topic:

1. Call the subscribe method, providing the type of endpoint (email, email-json, http, https, sqs).
2. Confirm the subscription by calling confirm_subscription.

For the second step, SNS will send the endpoint a request for confirmation in the form of an email message or an HTTP request, depending on the type of endpoint. In all cases, a string token is provided, which needs to be sent back in the confirm_subscription call. If the endpoint is email, the user just clicks on a link to confirm the subscription, and the token is sent as a query string parameter of the URL (shown in Figure 4-7).

If the endpoint is http, https, or email-json, the token is sent as part of a JSON object.
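
For the JSON case, an endpoint has to pull the token out of the request body before it can confirm. The following Ruby sketch uses only the standard json library; confirmation_details is a hypothetical helper, the sample payload is shortened and made up, and signature verification is deliberately left out.

```ruby
require 'json'

# Returns the token and topic ARN from an SNS confirmation payload, or
# nil if the payload is some other type of message.
def confirmation_details(raw_body)
  payload = JSON.parse(raw_body)
  return nil unless payload['Type'] == 'SubscriptionConfirmation'
  { token: payload['Token'], topic_arn: payload['TopicArn'] }
end

# Shortened, made-up request body in the shape SNS posts to an endpoint.
body = <<~PAYLOAD
  {
    "Type": "SubscriptionConfirmation",
    "Token": "example-token",
    "TopicArn": "arn:aws:sns:us-east-1:235698110812:12_judging"
  }
PAYLOAD

details = confirmation_details(body)
puts details[:token]
puts details[:topic_arn]
```

The returned token and topic ARN are the two values needed for the confirm_subscription call. A production endpoint should also check the Signature field before trusting the payload.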

Step one is subscribing some email addresses to a topic:

require "right_aws"

sns = RightAws::Sns.new("AKIAIGKECZXA7AEIJLMQ",

"w2Y3dx82vcY1YSKbJY51GmfFQn3705ftW4uSBrHn")

contest_id = 12

# get the judging topic

topic = sns.create_topic("#{contest_id}_judging")

# Subscribe a few email addresses to the topic.

# This will return "Pending Confirmation" and send a confirmation

# email to the target email address which contains a "Confirm Subscription"

# link back to Amazon Web Services. Other possible subscription protocols

# include http, https, email, email-json and sqs

%w[alice@example.com bob@example.com].each do |email|

topic.subscribe("email", email)

end

# Let's subscribe this address as email-json, imagining it

# is an email box feeding to a script parsing the content. Note

# that the confirmation email this address receives will contain

# content as JSON

topic.subscribe("email-json", "carol-email-bot@example.com")

# Another kind of endpoint is http

topic.subscribe("http",

"http://www.kulitzer.com/contests/update/#{contest_id}_judging")

In step two, the endpoint confirms the subscription by sending back the token:

require "right_aws"

# Now, imagine you subscribe an internal resource to the topic and instruct

# it to use the http protocol and post to a url you provide. It will receive

# a post including the following bit of JSON data:

=begin

{

"Type" : "SubscriptionConfirmation",

"MessageId" : "b00e1384-6a4f-4bc5-abd5-9b7f82e3cff4",

"Token" : "51b2ff3edb4487553c7dd2f29566c2aecada20b9...",

"TopicArn" : "arn:aws:sns:us-east-1:235698110812:12_judging",

"Message" : "You have chosen to subscribe to the topic

arn:aws:sns:us-east-1:235698110812:12_judging.\n

To confirm the subscription, visit the SubscribeURL included in this

message.",

"SubscribeURL" :

"https://sns.us-east-1.amazonaws.com/?Action=ConfirmSubscription&

TopicArn=arn:aws:sns:us-east-1:235698110812:12_judging&

Token=51b2ff3edb4487553c7dd2f29566c2aecada20b9…",

"Timestamp" : "2011-01-07T11:41:02.417Z",

"SignatureVersion" : "1",

"Signature" : "UHWoZfMkpH/FrhICs6An0cTtjjcj5nBEweVbWgrARD5B..."

}

=end

# You can confirm the subscription with a call to confirm_subscription.

# Note that in order to receive messages published to a topic, the subscriber

# must be confirmed.

sns = RightAws::Sns.new("AKIAIGKECZXA7AEIJLMQ",

"w2Y3dx82vcY1YSKbJY51GmfFQn3705ftW4uSBrHn")

arn = "arn:aws:sns:us-east-1:235698110812:12_judging"

topic = sns.topic(arn)

token = "51b2ff3edb4487553c7dd2f29566c2aecada20b9..."

topic.confirm_subscription(token)

Publish messages to a topic as follows:

# Sending a message allows for an optional subject to be included along with

# the required message itself.

# Find the sns topic using the arn. Note that you can call

# RightAws::Sns#create_topic if you only know the topic name

# and the current AWS api will fetch it for you. Otherwise,

# you need to store the arn for future lookups. It's probably

# advisable to store the arn's anyway as they serve as a reliable

# uid.

sns = RightAws::Sns.new("AKIAIGKECZXA7AEIJLMQ",

"w2Y3dx82vcY1YSKbJY51GmfFQn3705ftW4uSBrHn")

arn = "arn:aws:sns:us-east-1:235698110812:12_judging"

topic = sns.topic(arn)

message = "Dear Judges, admission is closed, you can now start evaluating

participants."

optional_subject = "Judging begins"

topic.send_message(message, optional_subject)

The use of SNS is not limited to sending messages to subscribed users, of course. In Kulitzer, when the status of a contest changes, we might want to update a particular Facebook account to tell the world about it. In Rails, we can implement this by having a separate FacebookController that is subscribed to the different topics and publishes messages to Facebook when notified by SNS of an event.

The core of Marvia is the PDF engine, for lack of a better term. The PDF engine is becoming a standalone service. We are slowly extracting features from the app, moving them to the PDF engine. We introduced priority queues to distinguish between important and less important jobs. We extracted accounts so we can share information and act on that information, for example keeping track of the number of PDFs generated.

One of the missing parts of the PDF engine is notification. We send jobs to the engine, and it helps us keep track of what is created. But we do not know the status of a job. The only way to learn the status is to check whether the PDF has been generated. It would be great if the process that creates the job could register an event handler to be called with status updates. It could be something simple like a message, or something more elaborate like a service that moves the PDF to a Dropbox account. We could even tell the PDF engine to add a message to a particular queue for printing, for example.

But we’ll start with what we need right now: notifying the customer with an email message when the PDF is ready. The emails SNS sends are not really customizable, and we don’t want to expose our end users to notification messages with opt-out pointing to Amazon AWS. We want to hide the implementation as much as possible. Therefore, we have notifications sent to our app, which sends the actual email message.

Creating a topic is not much different from creating a queue in SQS or a domain in SimpleDB. Topic names must be made up of only uppercase and lowercase ASCII letters, numbers, underscores, and hyphens, and must be between 1 and 256 characters long. We want a simple mechanism for creating unique topic names, but we still want to be flexible. An easy way is to hash the name we would have used if these rules had allowed it. We will create a topic for each Marvia customer account, so we will use the email address the customer provided. In the following, we create a topic for the customer 9apps:

<?php

require_once('/usr/share/php/AWSSDKforPHP/sdk.class.php');

define('AWS_KEY', 'AKIAIGKECZXA7AEIJLMQ');

define('AWS_SECRET_KEY', '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');

$sns = new AmazonSNS();

$sns->set_region($sns::REGION_EU_W1);

$topic = md5('jurg@9apps.net/status');

# if topic already exists, create_topic returns it

$response = $sns->create_topic($topic);

$response->isOK() or

die('could not create topic ' . $topic);

pr($response->body);

function pr($var) { print '<pre>'; print_r($var); print '</pre>'; }

?>

The endpoint for notification is http; we are not sending any sensitive information, so HTTPS is not necessary. But we do want to prepare a nicely formatted email message, so we won’t use the standard email endpoint. We can then generate the content of the email message ourselves.
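
The naming scheme used when creating the topic (hashing the customer's email address plus a suffix) can be checked without calling SNS at all. The sketch below is an illustration in Ruby, not part of Marvia; the names `topic_name_for` and `valid_topic_name?` are hypothetical. It shows that an MD5 hex digest always satisfies the topic name rules (letters, digits, underscores, and hyphens, 1 to 256 characters), while the raw string we would have liked to use does not.

```ruby
require 'digest/md5'

# Letters, digits, underscores, and hyphens; between 1 and 256 characters.
VALID_TOPIC_NAME = /\A[A-Za-z0-9_-]{1,256}\z/

# Hypothetical helper: derive a topic name from a customer's email address,
# in the same way as md5('jurg@9apps.net/status') in the PHP listing.
def topic_name_for(email, suffix = 'status')
  Digest::MD5.hexdigest("#{email}/#{suffix}")
end

# Hypothetical helper: true if the name satisfies the topic name rules.
def valid_topic_name?(name)
  !!(name =~ VALID_TOPIC_NAME)
end
```

Because the digest is deterministic, the same email address always maps to the same topic, so the name never has to be stored; the trade-off is that the name itself is no longer human-readable.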

Below, we call subscribe to add an http endpoint to the topic:

<?php

require_once( '/usr/share/php/AWSSDKforPHP/sdk.class.php');

define('AWS_KEY', 'AKIAIGKECZXA7AEIJLMQ');

define('AWS_SECRET_KEY', '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');

$sns = new AmazonSNS();

$sns->set_region($sns::REGION_EU_W1);

$topic = md5('jurg@9apps.net/status');

$response = $sns->create_topic( $topic);

$response->isOK() or

die( 'could not create topic ' . $topic);

# subscribe to the topic by

# passing topic arn (topic identifier),

# type of endpoint 'http',

# and the given url as endpoint

$response = $sns->subscribe(

(string)$response->body->TopicArn(0),

'http',

'http://ec2-184-72-67-235.compute-1.amazonaws.com/sns/receive.php'

);

pr($response->body);

function pr($var) { print '<pre>'; print_r($var); print '</pre>'; }

?>

Before messages are delivered to this endpoint, it has to be confirmed by calling confirm_subscription. In the code below, you can see that the endpoint is responsible for both confirming the subscription and handling messages. A JSON object is posted, and this indicates if the HTTP POST is a notification or a confirmation. The rest of the information, such as the message (if it is a notification), topic identifier (ARN), and token (if it is a subscription confirmation), is also part of the JSON object:

<?php

require_once( '/usr/share/php/AWSSDKforPHP/sdk.class.php');

define('AWS_KEY', 'AKIAIGKECZXA7AEIJLMQ');

define('AWS_SECRET_KEY', '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');

$sns = new AmazonSNS();

$sns->set_region($sns::REGION_EU_W1);

# we only accept a POST, before we get the raw input (as json)

if ( $_SERVER['REQUEST_METHOD'] === 'POST' ) {

    $json = trim(file_get_contents('php://input'));

    $notification = json_decode($json);

    # do we have a message? or do we need to confirm?

    if( $notification->{'Type'} === 'Notification') {

        # a message is text, in our case json so we decode and proceed...

        $message = json_decode($notification->{'Message'});

        # and now we can act on the message

        # ...

    } else if($notification->{'Type'} === 'SubscriptionConfirmation') {

        # we received a request to confirm subscription, let's confirm...

        $response = $sns->confirm_subscription(

            $notification->{'TopicArn'},

            $notification->{'Token'});

        $response->isOK() or

            die("could not confirm subscription for topic 'status'");

    }

}

?>

Publishing a message is not difficult. If you are the owner, you can send a text message to the topic. We need to pass something with a bit more structure, so we use JSON to deliver an object:

<?php

require_once( '/usr/share/php/AWSSDKforPHP/sdk.class.php');

define('AWS_KEY', 'AKIAIGKECZXA7AEIJLMQ');

define('AWS_SECRET_KEY', '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');

$sns = new AmazonSNS();

$sns->set_region($sns::REGION_EU_W1);

$topic = md5( 'jurg@9apps.net/status');

$job = "457964863276";

$response = $sns->create_topic( $topic);

$response->isOK() or

die( 'could not create topic ' . $topic);

$response = $sns->publish(

(string)$response->body->TopicArn(0),

json_encode( array( "job" => $job,

"result" => "200",

"message" => "Job $job finished"))

);

pr( $response->body);

function pr($var) { print '<pre>'; print_r($var); print '</pre>'; }

?>

An interesting idea is to use the phone as a beacon, sending notifications to the world if an unwanted situation occurs. We could do that easily with Decaf on Android. We already monitor our EC2 instances with Decaf, and we could use SNS to notify others. Though interesting, the only useful application of this is to check the health of an app in a much more distributed way. Central monitoring services can only check from a limited number of network origins. In the case of Layar, for example, we can add this behavior to the mobile clients.

For now, we’ll implement something else. Just as with SimpleDB and SQS, we are curious about the state of SNS in our application. For queues, we showed how to monitor the number of items in the queue, and for SimpleDB, we did something similar. With SNS, we want to know if the application is operational.

To determine if a particular topic is still working, we only have to listen in for a while by subscribing to the topic. If we see messages, the SNS part of the app works. What we need for this is simple browsing/searching for topics, subscribing to topics with an email address, and sending simple messages.
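
The "listen in for a while" idea boils down to remembering when a message was last seen on each topic and comparing that with the length of the listening window. The following Ruby sketch illustrates only that bookkeeping, under stated assumptions: the class `TopicListener` and its methods are hypothetical, and it does not subscribe to anything itself. Whatever receives the messages (an email inbox poller, an HTTP endpoint) would call `record` for each one.

```ruby
# Hypothetical monitor: remembers when a message was last seen on each topic
# and reports whether a topic was active within the listening window.
class TopicListener
  def initialize(window_seconds)
    @window = window_seconds
    @last_seen = {} # topic ARN => Time of the most recent message
  end

  # Call this whenever a message for the given topic arrives.
  def record(topic_arn, at = Time.now)
    @last_seen[topic_arn] = at
  end

  # True if a message was seen on the topic within the window ending at `now`.
  def alive?(topic_arn, now = Time.now)
    seen = @last_seen[topic_arn]
    !seen.nil? && (now - seen) <= @window
  end
end
```

A topic that has never been seen is reported as not alive, which is the conservative choice for a monitor: silence is treated as a reason to look closer, not as proof of health.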

Getting a list of available topics is very simple. The number of results is limited, and the results are paginated as in SimpleDB. The code below lists all topics in the given account for the region us-east-1:

import java.util.ArrayList;

import java.util.List;

import com.amazonaws.auth.BasicAWSCredentials;

import com.amazonaws.services.sns.AmazonSNS;

import com.amazonaws.services.sns.AmazonSNSClient;

import com.amazonaws.services.sns.model.ListTopicsRequest;

import com.amazonaws.services.sns.model.ListTopicsResult;

import com.amazonaws.services.sns.model.Topic;

// ...

// prepare the credentials

String accessKey = "AKIAIGKECZXA7AEIJLMQ";

String secretKey = "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";

// create the SNS service

AmazonSNS snsService = new AmazonSNSClient(

new BasicAWSCredentials(accessKey, secretKey));

// set the endpoint for us-east-1 region

snsService.setEndpoint("https://sns.us-east-1.amazonaws.com");

List<Topic> topics = new ArrayList<Topic>();

String nextToken = null;

do {

    // create the request, with nextToken if not empty

    ListTopicsRequest request = new ListTopicsRequest();

    if (nextToken != null) request = request.withNextToken(nextToken);

    // call the web service

    ListTopicsResult result = snsService.listTopics(request);

    nextToken = result.getNextToken();

    // get that list of topics

    topics.addAll(result.getTopics());

    // go on if there are more elements

} while (nextToken != null);

// show the list of topics...

Given the list of topics, we can let the user subscribe to some of them with an email address. For that, we just need the ARN of the topic and the email address of choice:

import com.amazonaws.services.sns.AmazonSNS;

import com.amazonaws.services.sns.model.SubscribeRequest;

import com.amazonaws.services.sns.model.Topic;

// ...

// get sns service

AmazonSNS snsService = ...

// obtain the email of the user who wants to subscribe

String address = ...

// obtain the topic chosen by the user (from the list obtained above)

Topic topic = ...

String topicARN = topic.getTopicArn();

// subscribe the user to the topic with protocol = "email"

snsService.subscribe(new SubscribeRequest(topicARN, "email", address));

The user will then receive an email message requesting confirmation of this subscription.
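
Looking back at the topic listing, the part most worth getting right is the token-based pagination: keep asking for pages until no next token comes back. The sketch below isolates that loop in Ruby so it can be run without AWS credentials. `FakeTopicLister` is a hypothetical stand-in for the real service, and `all_topics` is an illustrative name, not an SDK call.

```ruby
# Hypothetical stand-in for a paginated listing call: each call returns one
# page of topic ARNs plus the token for the next page (nil on the last page).
class FakeTopicLister
  def initialize(arns, page_size)
    @arns = arns
    @page_size = page_size
  end

  def list_topics(next_token = nil)
    start = next_token.to_i # nil.to_i is 0, so the first call starts at 0
    page = @arns[start, @page_size] || []
    following = start + @page_size
    [page, following < @arns.length ? following.to_s : nil]
  end
end

# Collect every topic by following the next token until it is nil.
def all_topics(lister)
  topics = []
  token = nil
  loop do
    page, token = lister.list_topics(token)
    topics.concat(page)
    break if token.nil?
  end
  topics
end
```

The loop always makes at least one request and stops as soon as the service stops returning a token, which mirrors the do/while structure of the Java listing.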

It’s also very easy to send notifications about a given topic. Just use the topic ARN and provide a String for a message and an optional subject:

import com.amazonaws.services.sns.AmazonSNS;

import com.amazonaws.services.sns.model.PublishRequest;

import com.amazonaws.services.sns.model.Topic;

// ...

AmazonSNS snsService = ...

// obtain the topic chosen by the user (from the list obtained above)

Topic topic = ...

String topicARN = topic.getTopicArn();

snsService.publish(new PublishRequest(topicARN,

"A server is in trouble!", "server alarm"));

In this chapter, we used several real-life examples of usage of three services: SQS, SimpleDB, and SNS. In most cases, these services help in decoupling the components of your system. They introduce a new way for the components to communicate, and allow for each component to scale independently.

In other cases, the benefits lie in the new functionality these services enable, like in the example of priority queues with SQS for processing files. Ease of use, high availability, and the absence of setup and maintenance overhead are usually good reasons to use the SimpleDB data store. SNS can be used to communicate events among different components. It provides different types of endpoints, such as email, HTTP, and SQS.

We used three of our sample applications, Kulitzer, Marvia, and Decaf, to show code examples in three different programming languages—Ruby, PHP, and Java.

In the next chapter, we’ll be making sure our infrastructure stays alive.
