Chapter 4. Using Custom Resources

In this chapter we introduce you to custom resources (CR), one of the central extension mechanisms used throughout the Kubernetes ecosystem.

Custom resources are used for small, in-house configuration objects without any corresponding controller logic—purely declaratively defined. But custom resources also play a central role for many serious development projects on top of Kubernetes that want to offer a Kubernetes-native API experience. Examples are service meshes such as Istio, Linkerd 2.0, and AWS App Mesh, all of which have custom resources at their heart.

Remember “A Motivational Example” from Chapter 1? At its core, it has a CR that looks like this:

apiVersion: cnat.programming-kubernetes.info/v1alpha1
kind: At
metadata:
  name: example-at
spec:
  schedule: "2019-07-03T02:00:00Z"
status:
  phase: "pending"

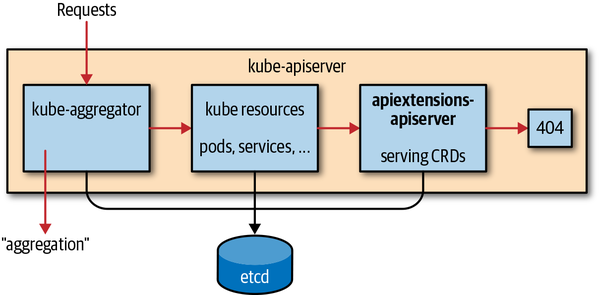

Custom resources are available in every Kubernetes cluster since version 1.7. They are stored in the same etcd instance as the main Kubernetes API resources and served by the same Kubernetes API server. As shown in Figure 4-1, requests fall back to the apiextensions-apiserver, which serves the resources defined via CRDs, if they are neither of the following:

- Handled by aggregated API servers (see Chapter 8).
- Native Kubernetes resources.

Figure 4-1. The API Extensions API server inside the Kubernetes API server

A CustomResourceDefinition (CRD) is a Kubernetes resource itself. It describes the available CRs in the cluster. For the preceding example CR, the corresponding CRD looks like this:

apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: ats.cnat.programming-kubernetes.info
spec:
  group: cnat.programming-kubernetes.info
  names:
    kind: At
    listKind: AtList
    plural: ats
    singular: at
  scope: Namespaced
  subresources:
    status: {}
  version: v1alpha1
  versions:
  - name: v1alpha1
    served: true
    storage: true

The name of the CRD—in this case, ats.cnat.programming-kubernetes.info—must match the plural name followed by the group name. It defines the kind At CR in the API group cnat.programming-kubernetes.info as a namespaced resource called ats.
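The naming rule above can be checked mechanically. The following is a minimal sketch, not the API server's own validation code; the helper names crdName and checkCRDName are hypothetical and exist only for illustration.

```go
package main

import (
	"fmt"
)

// crdName builds the name a CRD object is expected to carry: "<plural>.<group>".
func crdName(plural, group string) string {
	return plural + "." + group
}

// checkCRDName returns an error if metadata.name does not follow the rule.
// Hypothetical helper; the real check happens inside the API server.
func checkCRDName(name, plural, group string) error {
	if want := crdName(plural, group); name != want {
		return fmt.Errorf("metadata.name is %q, want %q", name, want)
	}
	return nil
}

func main() {
	err := checkCRDName(
		"ats.cnat.programming-kubernetes.info",
		"ats",
		"cnat.programming-kubernetes.info",
	)
	fmt.Println("name accepted:", err == nil)
}
```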

If this CRD is created in a cluster, kubectl will automatically detect the resource, and the user can access it via:

$ kubectl get ats
NAME                                   CREATED AT
ats.cnat.programming-kubernetes.info   2019-04-01T14:03:33Z

Discovery Information

Behind the scenes, kubectl uses discovery information from the API server to find out about the new resources. Let’s look a bit deeper into this discovery mechanism.

After increasing the verbosity level of kubectl, we can actually see how it learns about the new resource type:

$ kubectl get ats -v=7
... GET https://XXX.eks.amazonaws.com/apis/cnat.programming-kubernetes.info/v1alpha1/namespaces/cnat/ats?limit=500
... Request Headers:
...     Accept: application/json;as=Table;v=v1beta1;g=meta.k8s.io,application/json
...     User-Agent: kubectl/v1.14.0 (darwin/amd64) kubernetes/641856d
... Response Status: 200 OK in 607 milliseconds
NAME         AGE
example-at   43s

The discovery steps in detail are:

- Initially, kubectl does not know about ats.
- Hence, kubectl asks the API server about all existing API groups via the /apis discovery endpoint.
- Next, kubectl asks the API server about resources in all existing API groups via the /apis/group/version group discovery endpoints.
- Then, kubectl translates the given type, ats, to a triple of:
  - Group (here cnat.programming-kubernetes.info)
  - Version (here v1alpha1)
  - Resource (here ats).

The discovery endpoints provide all the necessary information to do the translation in the last step:

$ http localhost:8080/apis/
{
  "groups": [{
    "name": "cnat.programming-kubernetes.info",
    "preferredVersion": {
      "groupVersion": "cnat.programming-kubernetes.info/v1alpha1",
      "version": "v1alpha1"
    },
    "versions": [{
      "groupVersion": "cnat.programming-kubernetes.info/v1alpha1",
      "version": "v1alpha1"
    }]
  }, ...]
}

$ http localhost:8080/apis/cnat.programming-kubernetes.info/v1alpha1
{
  "apiVersion": "v1",
  "groupVersion": "cnat.programming-kubernetes.info/v1alpha1",
  "kind": "APIResourceList",
  "resources": [{
    "kind": "At",
    "name": "ats",
    "namespaced": true,
    "verbs": ["create", "delete", "deletecollection",
      "get", "list", "patch", "update", "watch"
    ]
  }, ...]
}

This is all implemented by the discovery RESTMapper. We also saw this very common type of RESTMapper in “REST Mapping”.
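To make the translation step more tangible, here is a minimal sketch that resolves a user-supplied name to a group, version, and resource from one APIResourceList document. It is not the discovery RESTMapper itself: the gvr type is a local stand-in for schema.GroupVersionResource, resolve is a hypothetical helper, and only the name and shortNames fields of the discovery response are consulted.

```go
package main

import (
	"encoding/json"
	"fmt"
	"strings"
)

// apiResourceList mirrors only the discovery fields this sketch needs.
type apiResourceList struct {
	GroupVersion string `json:"groupVersion"`
	Resources    []struct {
		Name       string   `json:"name"`
		ShortNames []string `json:"shortNames"`
	} `json:"resources"`
}

// gvr is a local stand-in for schema.GroupVersionResource.
type gvr struct{ Group, Version, Resource string }

// resolve maps a resource name or short name to a GVR using one
// APIResourceList document. The bool reports whether a match was found.
func resolve(name string, discovery []byte) (gvr, bool, error) {
	var list apiResourceList
	if err := json.Unmarshal(discovery, &list); err != nil {
		return gvr{}, false, err
	}
	// "group/version" for named groups, plain "version" for the core group.
	group, version := "", list.GroupVersion
	if i := strings.LastIndex(list.GroupVersion, "/"); i >= 0 {
		group, version = list.GroupVersion[:i], list.GroupVersion[i+1:]
	}
	for _, r := range list.Resources {
		match := r.Name == name
		for _, s := range r.ShortNames {
			match = match || s == name
		}
		if match {
			return gvr{group, version, r.Name}, true, nil
		}
	}
	return gvr{}, false, nil
}

func main() {
	doc := []byte(`{"groupVersion":"cnat.programming-kubernetes.info/v1alpha1",
		"resources":[{"name":"ats","kind":"At","shortNames":["at"]}]}`)
	for _, name := range []string{"ats", "at", "foos"} {
		g, ok, err := resolve(name, doc)
		fmt.Println(name, g, ok, err)
	}
}
```

A real client would run this lookup over every group version returned by /apis rather than over a single document.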

Warning

The kubectl CLI also maintains a cache of resource types under ~/.kube/cache so that it does not have to re-retrieve the discovery information on every access. This cache is invalidated every 10 minutes. Hence, a change in the CRD might show up in the CLI of the respective user up to 10 minutes later.

Type Definitions

Now let’s look at the CRD and the offered features in more detail: as in the cnat example, CRDs are Kubernetes resources in the apiextensions.k8s.io/v1beta1 API group provided by the apiextensions-apiserver inside the Kubernetes API server process.

The schema of CRDs looks like this:

apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: name
spec:
  group: group name
  version: version name
  names:
    kind: uppercase name
    plural: lowercase plural name
    singular: lowercase singular name # defaulted to be lowercase kind
    shortNames: list of strings as short names # optional
    listKind: uppercase list kind # defaulted to be kindList
    categories: list of category membership like "all" # optional
  validation: # optional
    openAPIV3Schema: OpenAPI schema # optional
  subresources: # optional
    status: {} # to enable the status subresource (optional)
    scale: # optional
      specReplicasPath: JSON path for the replica number in the spec of the
                        custom resource
      statusReplicasPath: JSON path for the replica number in the status of
                          the custom resource
      labelSelectorPath: JSON path of the Scale.Status.Selector field in the
                         scale resource
  versions: # defaulted to the Spec.Version field
  - name: version name
    served: boolean whether the version is served by the API server # defaults to false
    storage: boolean whether this version is the version used to store object
  - ...

Many of the fields are optional or are defaulted. We will explain the fields in more detail in the following sections.

After creating a CRD object, the apiextensions-apiserver inside of kube-apiserver will check the names and determine whether they conflict with other resources or whether they are consistent in themselves. After a few moments it will report the result in the status of the CRD, for example:

apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: ats.cnat.programming-kubernetes.info
spec:
  group: cnat.programming-kubernetes.info
  names:
    kind: At
    listKind: AtList
    plural: ats
    singular: at
  scope: Namespaced
  subresources:
    status: {}
  validation:
    openAPIV3Schema:
      type: object
      properties:
        apiVersion:
          type: string
        kind:
          type: string
        metadata:
          type: object
        spec:
          properties:
            schedule:
              type: string
          type: object
        status:
          type: object
  version: v1alpha1
  versions:
  - name: v1alpha1
    served: true
    storage: true
status:
  acceptedNames:
    kind: At
    listKind: AtList
    plural: ats
    singular: at
  conditions:
  - lastTransitionTime: "2019-03-17T09:44:21Z"
    message: no conflicts found
    reason: NoConflicts
    status: "True"
    type: NamesAccepted
  - lastTransitionTime: null
    message: the initial names have been accepted
    reason: InitialNamesAccepted
    status: "True"
    type: Established
  storedVersions:
  - v1alpha1

You can see that the missing name fields in the spec are defaulted and reflected in the status as accepted names. Moreover, the following conditions are set:

- NamesAccepted describes whether the given names in the spec are consistent and free of conflicts.
- Established describes that the API server serves the given resource under the names in status.acceptedNames.

Note that certain fields can be changed long after the CRD has been created. For example, you can add short names or columns. In this case, a CRD can be established—that is, served with the old names—although the spec names have conflicts. Hence the NamesAccepted condition would be false and the spec names and accepted names would differ.
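A client that wants to wait for a CRD to become usable typically inspects these conditions. The sketch below shows the idea on a simplified, hypothetical condition type (only Type and Status are modeled); it covers the case just described, where a CRD stays established although a later name change was not accepted.

```go
package main

import "fmt"

// condition is a simplified stand-in for a CRD status condition.
type condition struct {
	Type   string
	Status string
}

// conditionTrue reports whether the condition of the given type exists
// and has status "True". A missing condition counts as not true.
func conditionTrue(conds []condition, typ string) bool {
	for _, c := range conds {
		if c.Type == typ {
			return c.Status == "True"
		}
	}
	return false
}

func main() {
	// Established with the old names, while the changed spec names conflict.
	conds := []condition{
		{Type: "NamesAccepted", Status: "False"},
		{Type: "Established", Status: "True"},
	}
	fmt.Println("served:", conditionTrue(conds, "Established"))
	fmt.Println("spec names accepted:", conditionTrue(conds, "NamesAccepted"))
}
```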

Advanced Features of Custom Resources

In this section we discuss advanced features of custom resources, such as validation or subresources.

Validating Custom Resources

CRs can be validated by the API server during creation and updates. This is done based on the OpenAPI v3 schema specified in the validation fields in the CRD spec.

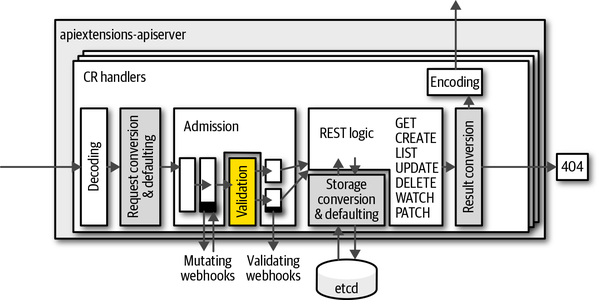

When a request creates or mutates a CR, the JSON object is validated against this schema, and in case of errors the offending field is returned to the user in an HTTP code 400 response. Figure 4-2 shows where validation takes place in the request handler inside the apiextensions-apiserver.

More complex validations can be implemented in validating admission webhooks—that is, in a Turing-complete programming language. Figure 4-2 shows that these webhooks are called directly after the OpenAPI-based validations described in this section. In “Admission Webhooks”, we will see how admission webhooks are implemented and deployed. There, we’ll look into validations that take other resources into account and therefore go far beyond OpenAPI v3 validation. Luckily, for many use cases OpenAPI v3 schemas are sufficient.

Figure 4-2. Validation step in the handler stack of the apiextensions-apiserver

The OpenAPI schema language is based on the JSON Schema standard, which uses JSON/YAML itself to express a schema. Here’s an example:

type: object
properties:
  apiVersion:
    type: string
  kind:
    type: string
  metadata:
    type: object
  spec:
    type: object
    properties:
      schedule:
        type: string
        pattern: "^\d{4}-([0]\d|1[0-2])-([0-2]\d|3[01])..."
      command:
        type: string
    required:
    - schedule
    - command
  status:
    type: object
    properties:
      phase:
        type: string
required:
- metadata
- apiVersion
- kind
- spec

This schema specifies that the value is actually a JSON object; that is, it is a string map and not a slice or a value like a number. Moreover, it has (aside from metadata, kind, and apiVersion, which are implicitly defined for custom resources) two additional properties: spec and status.

Each is a JSON object as well. spec has the required fields schedule and command, both of which are strings. schedule has to match a pattern for an ISO date (sketched here with some regular expressions). The optional status property has a string field called phase.
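The effect of the spec part of this schema can be imitated in a few lines of Go. This is a hand-written sketch under stated assumptions, not how the API server evaluates OpenAPI schemas: validateSpec is a hypothetical helper, and because the pattern in the listing is truncated, only its visible date prefix is checked.

```go
package main

import (
	"fmt"
	"regexp"
)

// datePrefix is the visible part of the schedule pattern from the schema.
// The original pattern is elided after the day, so nothing beyond the
// date is checked here.
var datePrefix = regexp.MustCompile(`^\d{4}-([0]\d|1[0-2])-([0-2]\d|3[01])`)

// validateSpec applies the "required", "type: string", and "pattern"
// rules of the example schema to a decoded spec and returns all problems.
func validateSpec(spec map[string]interface{}) []string {
	var errs []string
	for _, field := range []string{"schedule", "command"} {
		v, ok := spec[field]
		if !ok {
			errs = append(errs, "spec."+field+": required")
			continue
		}
		if _, isString := v.(string); !isString {
			errs = append(errs, "spec."+field+": must be a string")
		}
	}
	if s, ok := spec["schedule"].(string); ok && !datePrefix.MatchString(s) {
		errs = append(errs, "spec.schedule: does not match pattern")
	}
	return errs
}

func main() {
	good := map[string]interface{}{
		"schedule": "2019-07-03T02:00:00Z",
		"command":  "echo hello",
	}
	bad := map[string]interface{}{"schedule": "tomorrow"}
	fmt.Println(validateSpec(good))
	fmt.Println(validateSpec(bad))
}
```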

Creating OpenAPI schemata manually can be tedious. Luckily, work is underway to make this much easier via code generation: the Kubebuilder project (see “Kubebuilder”) has developed crd-gen in sigs.k8s.io/controller-tools, and this is being extended step by step so that it’s usable in other contexts. The generator crd-schema-gen is a fork of crd-gen in this direction.

Short Names and Categories

Like native resources, custom resources might have long resource names. They are great on the API level but tedious to type in the CLI. CRs can have short names as well, like the native resource daemonsets, which can be queried with kubectl get ds. These short names are also known as aliases, and each resource can have any number of them.

To view all of the available short names, use the kubectl api-resources command like so:

$ kubectl api-resources
NAME                     SHORTNAMES   APIGROUP   NAMESPACED   KIND
bindings                                         true         Binding
componentstatuses        cs                      false        ComponentStatus
configmaps               cm                      true         ConfigMap
endpoints                ep                      true         Endpoints
events                   ev                      true         Event
limitranges              limits                  true         LimitRange
namespaces               ns                      false        Namespace
nodes                    no                      false        Node
persistentvolumeclaims   pvc                     true         PersistentVolumeClaim
persistentvolumes        pv                      false        PersistentVolume
pods                     po                      true         Pod
statefulsets             sts          apps       true         StatefulSet
...

Again, kubectl learns about short names via discovery information (see “Discovery Information”). Here is an example:

apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: ats.cnat.programming-kubernetes.info
spec:
  ...
  names:
    ...
    shortNames:
    - at

After that, a kubectl get at will list all cnat CRs in the namespace.

Further, CRs—as with any other resource—can be part of categories. The most common use is the all category, as in kubectl get all. It lists all user-facing resources in a cluster, like pods and services.

The CRs defined in the cluster can join a category or create their own category via the categories field:

apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: ats.cnat.programming-kubernetes.info
spec:
  ...
  names:
    ...
    categories:
    - all

With this, kubectl get all will also list the cnat CR in the namespace.

Printer Columns

The kubectl CLI tool uses server-side printing to render the output of kubectl get. This means that it queries the API server for the columns to display and the values in each row.

Custom resources support server-side printer columns as well, via additionalPrinterColumns. They are called “additional” because the first column is always the name of the object. These columns are defined like this:

apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: ats.cnat.programming-kubernetes.info
spec:
  additionalPrinterColumns: # (optional)
  - name: kubectl column name
    type: OpenAPI type for the column
    format: OpenAPI format for the column # (optional)
    description: human-readable description of the column # (optional)
    priority: integer, always zero supported by kubectl
    JSONPath: JSON path inside the CR for the displayed value

The name field is the column name, the type is an OpenAPI type as defined in the data types section of the specification, and the format (as defined in the same document) is optional and might be interpreted by kubectl or other clients.

Further, description is an optional human-readable string, used for documentation purposes. The priority controls in which verbosity mode of kubectl the column is displayed. At the time of this writing (with Kubernetes 1.14), only zero is supported, and all columns with higher priority are hidden.

Finally, JSONPath defines which values are to be displayed. It is a simple JSON path inside of the CR. Here, “simple” means that it supports object field syntax like .spec.foo.bar, but not more complex JSON paths that loop over arrays or similar.

With this, the example CRD from the introduction could be extended with additionalPrinterColumns like this:

additionalPrinterColumns: # (optional)
- name: schedule
  type: string
  JSONPath: .spec.schedule
- name: command
  type: string
  JSONPath: .spec.command
- name: phase
  type: string
  JSONPath: .status.phase

Then kubectl would render a cnat resource as follows:

$ kubectl get ats
NAME   SCHEDULE               COMMAND              PHASE
foo    2019-07-03T02:00:00Z   echo "hello world"   Pending
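How a row is assembled from such simple JSON paths can be sketched as follows. This is an illustration only, not the server-side printing code: column, lookup, and row are hypothetical names, and the path syntax is limited to dot-separated object fields, in line with the "simple" paths described above.

```go
package main

import (
	"fmt"
	"strings"
)

// column is a reduced stand-in for one additionalPrinterColumns entry.
type column struct{ Name, JSONPath string }

// lookup resolves a simple path such as ".spec.schedule" in a decoded CR.
// Array indexing and wildcards are deliberately not supported.
func lookup(obj map[string]interface{}, path string) (interface{}, bool) {
	var cur interface{} = obj
	for _, key := range strings.Split(strings.TrimPrefix(path, "."), ".") {
		m, ok := cur.(map[string]interface{})
		if !ok {
			return nil, false
		}
		if cur, ok = m[key]; !ok {
			return nil, false
		}
	}
	return cur, true
}

// row returns the object name followed by one cell per column.
// A path that does not resolve yields an empty cell.
func row(obj map[string]interface{}, cols []column) []string {
	name, _ := lookup(obj, ".metadata.name")
	cells := []string{fmt.Sprint(name)}
	for _, c := range cols {
		if v, ok := lookup(obj, c.JSONPath); ok {
			cells = append(cells, fmt.Sprint(v))
		} else {
			cells = append(cells, "")
		}
	}
	return cells
}

func main() {
	at := map[string]interface{}{
		"metadata": map[string]interface{}{"name": "foo"},
		"spec": map[string]interface{}{
			"schedule": "2019-07-03T02:00:00Z",
			"command":  `echo "hello world"`,
		},
		"status": map[string]interface{}{"phase": "Pending"},
	}
	cols := []column{
		{"schedule", ".spec.schedule"},
		{"command", ".spec.command"},
		{"phase", ".status.phase"},
	}
	fmt.Println(strings.Join(row(at, cols), " | "))
}
```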

Next up, we have a look at subresources.

Subresources

We briefly mentioned subresources in “Status Subresources: UpdateStatus”. Subresources are special HTTP endpoints, using a suffix appended to the HTTP path of the normal resource. For example, the pod standard HTTP path is /api/v1/namespaces/namespace/pods/name. Pods have a number of subresources, such as /log, /portforward, /exec, and /status.

The corresponding subresource HTTP paths are:

- /api/v1/namespaces/namespace/pods/name/log
- /api/v1/namespaces/namespace/pods/name/portforward
- /api/v1/namespaces/namespace/pods/name/exec
- /api/v1/namespaces/namespace/pods/name/status

The subresource endpoints use a different protocol than the main resource endpoint.

At the time of this writing, custom resources support two subresources: /scale and /status. Both are opt-in—that is, they must be explicitly enabled in the CRD.

Status subresource

The /status subresource is used to split the user-provided specification of a CR instance from the controller-provided status. The main motivation for this is privilege separation:

- The user usually should not write status fields.
- The controller should not write specification fields.

The RBAC mechanism for access control does not allow rules at that level of detail. Those rules are always per resource. The /status subresource solves this by providing two endpoints that are resources on their own. Each can be controlled with RBAC rules independently. This is often called a spec-status split. Here’s an example of such a rule for the ats resource, which applies only to the /status subresource (while "ats" would match the main resource):

apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata: ...
rules:
- apiGroups: ["cnat.programming-kubernetes.info"]
  resources: ["ats/status"]
  verbs: ["update", "patch"]

Resources (including custom resources) that have a /status subresource have changed semantics, also for the main resource endpoint:

- They ignore changes to the status on the main HTTP endpoint during create (the status is just dropped during a create) and updates.
- Likewise, the /status subresource endpoint ignores changes outside of the status of the payload. A create operation on the /status endpoint is not possible.
- Whenever something outside of metadata and outside of status changes (this especially means changes in the spec), the main resource endpoint will increase the metadata.generation value. This can be used as a trigger for a controller indicating that the user's desire has changed.

Note that usually both spec and status are sent in update requests, but technically you could leave out the respective other part in a request payload.

Also note that the /status endpoint will ignore everything outside of the status, including metadata changes like labels or annotations.
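The changed semantics can be modeled with two small update functions. The following is a toy model under simplifying assumptions, not the API server's storage logic: the object type and both functions are hypothetical, and metadata other than the generation is left out entirely.

```go
package main

import (
	"fmt"
	"reflect"
)

// object is a reduced model of a CR with an enabled /status subresource.
type object struct {
	Generation int64
	Spec       map[string]interface{}
	Status     map[string]interface{}
}

// updateMain mimics an update on the main endpoint: the status in the
// request is ignored, and a spec change increases the generation.
func updateMain(stored, req object) object {
	out := stored
	if !reflect.DeepEqual(stored.Spec, req.Spec) {
		out.Spec = req.Spec
		out.Generation++
	}
	return out
}

// updateStatus mimics an update on the /status endpoint: only the status
// is taken from the request, everything else stays as stored.
func updateStatus(stored, req object) object {
	out := stored
	out.Status = req.Status
	return out
}

func main() {
	stored := object{
		Generation: 1,
		Spec:       map[string]interface{}{"schedule": "2019-07-03T02:00:00Z"},
		Status:     map[string]interface{}{"phase": "Pending"},
	}
	// A user changes the spec and, by mistake, also sends a status.
	req := object{
		Spec:   map[string]interface{}{"schedule": "2019-08-01T02:00:00Z"},
		Status: map[string]interface{}{"phase": "Done"},
	}
	stored = updateMain(stored, req)
	fmt.Println(stored.Generation, stored.Spec["schedule"], stored.Status["phase"])

	// The controller reports progress through /status.
	stored = updateStatus(stored, object{Status: map[string]interface{}{"phase": "Running"}})
	fmt.Println(stored.Generation, stored.Spec["schedule"], stored.Status["phase"])
}
```

A controller can compare the generation it last acted on with the current one to decide whether the spec has changed.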

The spec-status split of a custom resource is enabled as follows:

apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
spec:
  subresources:
    status: {}
  ...

Note here that the status field in that YAML fragment is assigned the empty object. This is the way to set a field that has no other properties. Just writing

subresources:
  status:

will result in a validation error because in YAML the result is a null value for status.

Warning

Enabling the spec-status split is a breaking change for an API. Old controllers will write to the main endpoint. They won’t notice that the status is always ignored from the point where the split is activated. Likewise, a new controller can’t write to the new /status endpoint until the split is activated.

In Kubernetes 1.13 and later, subresources can be configured per version. This allows us to introduce the /status subresource without a breaking change:

apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
spec:
  ...
  versions:
  - name: v1alpha1
    served: true
    storage: true
  - name: v1beta1
    served: true
    subresources:
      status: {}

This enables the /status subresource for v1beta1, but not for v1alpha1.

Note

The optimistic concurrency semantics (see “Optimistic Concurrency”) are the same as for the main resource endpoints; that is, status and spec share the same resource version counter and /status updates can conflict due to writes to the main resource, and vice versa. In other words, there is no split of spec and status on the storage layer.
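The consequence of the shared counter can be shown with a toy store. This is a simplified sketch, not the real storage layer: the store type is hypothetical, and the resource version is modeled as an integer, whereas clients of the real API should treat it as an opaque string.

```go
package main

import (
	"errors"
	"fmt"
)

var errConflict = errors.New("conflict: resource version is stale")

// store holds one object whose spec and status share a single version.
type store struct {
	resourceVersion int
	spec, status    string
}

// updateSpec models a write to the main endpoint.
func (s *store) updateSpec(seenVersion int, spec string) error {
	if seenVersion != s.resourceVersion {
		return errConflict
	}
	s.spec = spec
	s.resourceVersion++
	return nil
}

// updateStatus models a write to the /status endpoint. It checks and
// advances the same counter as updateSpec.
func (s *store) updateStatus(seenVersion int, status string) error {
	if seenVersion != s.resourceVersion {
		return errConflict
	}
	s.status = status
	s.resourceVersion++
	return nil
}

func main() {
	s := &store{resourceVersion: 1}
	seen := s.resourceVersion // user and controller both read version 1

	fmt.Println("spec write:", s.updateSpec(seen, "new schedule"))
	fmt.Println("status write with stale version:", s.updateStatus(seen, "Running"))
	fmt.Println("status write after re-read:", s.updateStatus(s.resourceVersion, "Running"))
}
```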

Scale subresource

The second subresource available for custom resources is /scale. The /scale subresource is a (projective) view on the resource, allowing us to view and to modify replica values only. This subresource is well known for resources like deployments and replica sets in Kubernetes, which obviously can be scaled up and down.

The kubectl scale command makes use of the /scale subresource; for example, the following will modify the specified replica value in the given instance:

$ kubectl scale --replicas=3 your-custom-resource -v=7
I0429 21:17:53.138353 66743 round_trippers.go:383] PUT https://host/apis/group/v1/your-custom-resource/scale

The /scale subresource is enabled in the CRD as follows:

apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
spec:
  subresources:
    scale:
      specReplicasPath: .spec.replicas
      statusReplicasPath: .status.replicas
      labelSelectorPath: .status.labelSelector
  ...

With this, an update of the replica value is written to spec.replicas and returned from there during a GET.

The label selector cannot be changed through the /scale subresource, only read. Its purpose is to give a controller the information to count the corresponding objects. For example, the ReplicaSet controller counts the corresponding pods that satisfy this selector.

The label selector is optional. If your custom resource semantics do not fit label selectors, just don’t specify the JSON path for one.

In the previous example of kubectl scale --replicas=3 ... the value 3 is written to spec.replicas. Any other simple JSON path can be used, of course; for example, spec.instances or spec.size would be a sensible field name, depending on the context.

The kind of the object read from or written to the endpoint is Scale from the autoscaling/v1 API group. Here is what it looks like:

type Scale struct {
    metav1.TypeMeta `json:",inline"`
    // Standard object metadata; More info: https://git.k8s.io/
    // community/contributors/devel/api-conventions.md#metadata.
    // +optional
    metav1.ObjectMeta `json:"metadata,omitempty"`

    // defines the behavior of the scale. More info: https://git.k8s.io/community/
    // contributors/devel/api-conventions.md#spec-and-status.
    // +optional
    Spec ScaleSpec `json:"spec,omitempty"`

    // current status of the scale. More info: https://git.k8s.io/community/
    // contributors/devel/api-conventions.md#spec-and-status. Read-only.
    // +optional
    Status ScaleStatus `json:"status,omitempty"`
}

// ScaleSpec describes the attributes of a scale subresource.
type ScaleSpec struct {
    // desired number of instances for the scaled object.
    // +optional
    Replicas int32 `json:"replicas,omitempty"`
}

// ScaleStatus represents the current status of a scale subresource.
type ScaleStatus struct {
    // actual number of observed instances of the scaled object.
    Replicas int32 `json:"replicas"`

    // label query over pods that should match the replicas count. This is the
    // same as the label selector but in the string format to avoid
    // introspection by clients. The string will be in the same
    // format as the query-param syntax. More info about label selectors:
    // http://kubernetes.io/docs/user-guide/labels#label-selectors.
    // +optional
    Selector string `json:"selector,omitempty"`
}

An instance will look like this:

metadata:
  name: cr-name
  namespace: cr-namespace
  uid: cr-uid
  resourceVersion: cr-resource-version
  creationTimestamp: cr-creation-timestamp
spec:
  replicas: 3
status:
  replicas: 2
  selector: "environment=production"

Note that the optimistic concurrency semantics are the same for the main resource and for the /scale subresource. That is, main resource writes can conflict with /scale writes, and vice versa.
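The projection between a custom resource and its scale view can be sketched with the configured JSON paths. This is an illustration under assumptions, not the apiextensions-apiserver code: parent, getScale, and setScale are hypothetical helpers, and replica counts are stored as int64 in the decoded object for simplicity.

```go
package main

import (
	"fmt"
	"strings"
)

// parent walks a simple path such as ".spec.replicas" and returns the map
// that holds the last element together with that element's key.
func parent(obj map[string]interface{}, path string) (map[string]interface{}, string, bool) {
	keys := strings.Split(strings.TrimPrefix(path, "."), ".")
	cur := obj
	for _, k := range keys[:len(keys)-1] {
		next, ok := cur[k].(map[string]interface{})
		if !ok {
			return nil, "", false
		}
		cur = next
	}
	return cur, keys[len(keys)-1], true
}

// getScale reads the replica values that a GET on /scale would project.
// Missing or non-int64 values are reported as zero.
func getScale(cr map[string]interface{}, specPath, statusPath string) (spec, status int64) {
	if m, k, ok := parent(cr, specPath); ok {
		spec, _ = m[k].(int64)
	}
	if m, k, ok := parent(cr, statusPath); ok {
		status, _ = m[k].(int64)
	}
	return spec, status
}

// setScale writes the desired replica count back to the spec path, which
// is what an update through /scale amounts to in this model.
func setScale(cr map[string]interface{}, specPath string, replicas int64) bool {
	m, k, ok := parent(cr, specPath)
	if ok {
		m[k] = replicas
	}
	return ok
}

func main() {
	cr := map[string]interface{}{
		"spec":   map[string]interface{}{"replicas": int64(2)},
		"status": map[string]interface{}{"replicas": int64(2)},
	}
	setScale(cr, ".spec.replicas", 3)
	spec, status := getScale(cr, ".spec.replicas", ".status.replicas")
	fmt.Println("desired:", spec, "observed:", status)
}
```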

A Developer’s View on Custom Resources

Custom resources can be accessed from Golang using a number of clients. We will concentrate on:

- Using the client-go dynamic client (see “Dynamic Client”)
- Using a typed client:
  - As provided by kubernetes-sigs/controller-runtime and used by the Operator SDK and Kubebuilder (see “controller-runtime Client of Operator SDK and Kubebuilder”)
  - As generated by client-gen, like that in k8s.io/client-go/kubernetes (see “Typed client created via client-gen”)

The choice of which client to use depends mainly on the context of the code to be written, especially the complexity of implemented logic and the requirements (e.g., to be dynamic and to support GVKs unknown at compile time).

The preceding list of clients:

- Decreases in the flexibility to handle unknown GVKs.
- Increases in type safety.
- Increases in the completeness of features of the Kubernetes API they provide.

Dynamic Client

The dynamic client in k8s.io/client-go/dynamic is totally agnostic to known GVKs. It does not even use any Go types other than unstructured.Unstructured, which wraps just json.Unmarshal and its output.

The dynamic client makes use of neither a scheme nor a RESTMapper. This means that the developer has to provide all the knowledge about types manually by providing a resource (see “Resources”) in the form of a GVR:

schema.GroupVersionResource{
    Group:    "apps",
    Version:  "v1",
    Resource: "deployments",
}

If a REST client config is available (see “Creating and Using a Client”), the dynamic client can be created in one line:

client, err := dynamic.NewForConfig(cfg)

The REST access to a given GVR is just as simple:

client.Resource(gvr).Namespace(namespace).Get("foo", metav1.GetOptions{})

This gives you the deployment foo in the given namespace.

Note

You must know the scope of the resource (i.e., whether it is namespaced or cluster-scoped). Cluster-scoped resources just leave out the Namespace(namespace) call.

The input and output of the dynamic client is an *unstructured.Unstructured—that is, an object that contains the same data structure that json.Unmarshal would output on unmarshaling:

- Objects are represented by map[string]interface{}.
- Arrays are represented by []interface{}.
- Primitive types are string, bool, float64, or int64.
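The shape of this data structure is easy to inspect with the standard library alone. The sketch below uses plain encoding/json and a hypothetical decode helper; note that plain json.Unmarshal yields float64 for every number, so the int64 case from the list above does not appear here and is presumably produced by Kubernetes' own decoding, which this sketch does not cover.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// decode unmarshals a JSON document into the generic representation.
func decode(data []byte) (map[string]interface{}, error) {
	var obj map[string]interface{}
	err := json.Unmarshal(data, &obj)
	return obj, err
}

func main() {
	obj, err := decode([]byte(`{
		"metadata": {"name": "foo"},
		"spec": {"replicas": 3, "args": ["a", "b"], "paused": false}
	}`))
	if err != nil {
		panic(err)
	}
	spec := obj["spec"].(map[string]interface{})
	// Print the dynamic Go type behind each JSON value.
	fmt.Printf("metadata: %T\n", obj["metadata"])
	fmt.Printf("args:     %T\n", spec["args"])
	fmt.Printf("replicas: %T\n", spec["replicas"])
	fmt.Printf("paused:   %T\n", spec["paused"])
}
```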

The method UnstructuredContent() provides access to this data structure inside of an unstructured object (we can also just access Unstructured.Object). There are helpers in the same package to make retrieval of fields easy and manipulation of the object possible—for example:

name, found, err := unstructured.NestedString(u.Object, "metadata", "name")

which returns the name of the deployment—"foo" in this case. found is true if the field was actually found (not only empty, but actually existing). err reports if the type of an existing field is unexpected (i.e., not a string in this case). Other helpers are the generic ones, once with a deep copy of the result and once without:

func NestedFieldCopy(obj map[string]interface{}, fields ...string) (interface{}, bool, error)
func NestedFieldNoCopy(obj map[string]interface{}, fields ...string) (interface{}, bool, error)

There are other typed variants that do a type-cast and return an error if that fails:

func NestedBool(obj map[string]interface{}, fields ...string) (bool, bool, error)
func NestedFloat64(obj map[string]interface{}, fields ...string) (float64, bool, error)
func NestedInt64(obj map[string]interface{}, fields ...string) (int64, bool, error)
func NestedStringSlice(obj map[string]interface{}, fields ...string) ([]string, bool, error)
func NestedSlice(obj map[string]interface{}, fields ...string) ([]interface{}, bool, error)
func NestedStringMap(obj map[string]interface{}, fields ...string) (map[string]string, bool, error)

And finally a generic setter:

func SetNestedField(obj map[string]interface{}, value interface{}, fields ...string) error

The dynamic client is used in Kubernetes itself for controllers that are generic, like the garbage collection controller, which deletes objects whose parents have disappeared. The garbage collection controller works with any resource in the system and hence makes extensive use of the dynamic client.

Typed Clients

Typed clients do not use map[string]interface{}-like generic data structures but instead use real Golang types, which are different and specific for each GVK. They are much easier to use, have considerably increased type safety, and make code much more concise and readable. On the downside, they are less flexible because the processed types have to be known at compile time, and those clients are generated, and this adds complexity.

Before going into two implementations of typed clients, let’s look into the representation of kinds in the Golang type system (see “API Machinery in Depth” for the theory behind the Kubernetes type system).

Anatomy of a type

Kinds are represented as Golang structs. Usually the struct is named as the kind (though technically it doesn’t have to be) and is placed in a package corresponding to the group and version of the GVK at hand. A common convention is to place the GVK group/version.Kind into a Go package:

pkg/apis/group/version

and define a Golang struct Kind in the file types.go.

Every Golang type corresponding to a GVK embeds the TypeMeta struct from the package k8s.io/apimachinery/pkg/apis/meta/v1. TypeMeta just consists of the Kind and APIVersion fields:

type TypeMeta struct {
    // +optional
    APIVersion string `json:"apiVersion,omitempty" yaml:"apiVersion,omitempty"`
    // +optional
    Kind string `json:"kind,omitempty" yaml:"kind,omitempty"`
}

In addition, every top-level kind (that is, one that has its own endpoint and therefore one or more corresponding GVRs; see “REST Mapping”) has to store a name, a namespace for namespaced resources, and a fairly long list of further metalevel fields. All these are stored in a struct called ObjectMeta in the package k8s.io/apimachinery/pkg/apis/meta/v1:

type ObjectMeta struct {
    Name              string            `json:"name,omitempty"`
    Namespace         string            `json:"namespace,omitempty"`
    UID               types.UID         `json:"uid,omitempty"`
    ResourceVersion   string            `json:"resourceVersion,omitempty"`
    CreationTimestamp Time              `json:"creationTimestamp,omitempty"`
    DeletionTimestamp *Time             `json:"deletionTimestamp,omitempty"`
    Labels            map[string]string `json:"labels,omitempty"`
    Annotations       map[string]string `json:"annotations,omitempty"`
    ...
}

There are a number of additional fields. We highly recommend you read through the extensive inline documentation, because it gives a good picture of the core functionality of Kubernetes objects.

Kubernetes top-level types (i.e., those that have an embedded TypeMeta and an embedded ObjectMeta and, in this case, are persisted into etcd) look very similar to each other in the sense that they usually have a spec and a status. See this example of a deployment from k8s.io/api/apps/v1/types.go:

type Deployment struct {
    metav1.TypeMeta   `json:",inline"`
    metav1.ObjectMeta `json:"metadata,omitempty"`

    Spec   DeploymentSpec   `json:"spec,omitempty"`
    Status DeploymentStatus `json:"status,omitempty"`
}

While the actual content of the types for spec and status differs significantly between different types, this split into spec and status is a common theme or even a convention in Kubernetes, though it’s not technically required. Hence, it is good practice to follow this structure of CRDs as well. Some CRD features even require this structure; for example, the /status subresource for custom resources (see “Status subresource”)—when enabled—always applies to the status substructure only of the custom resource instance. It cannot be renamed.
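Applied to the cnat example, a Go type following this anatomy might look like the sketch below. To keep it self-contained, TypeMeta and ObjectMeta are reduced local stand-ins for the metav1 types, and the field sets of AtSpec and AtStatus as well as the helpers newAt and toMap are assumptions made for illustration; a real project would embed the metav1 types instead.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// TypeMeta and ObjectMeta are reduced local stand-ins for the types in
// k8s.io/apimachinery/pkg/apis/meta/v1.
type TypeMeta struct {
	APIVersion string `json:"apiVersion,omitempty"`
	Kind       string `json:"kind,omitempty"`
}

type ObjectMeta struct {
	Name      string `json:"name,omitempty"`
	Namespace string `json:"namespace,omitempty"`
}

// AtSpec and AtStatus follow the spec/status convention.
type AtSpec struct {
	Schedule string `json:"schedule,omitempty"`
	Command  string `json:"command,omitempty"`
}

type AtStatus struct {
	Phase string `json:"phase,omitempty"`
}

// At is the top-level kind: embedded type and object metadata plus
// spec and status.
type At struct {
	TypeMeta   `json:",inline"`
	ObjectMeta `json:"metadata,omitempty"`

	Spec   AtSpec   `json:"spec,omitempty"`
	Status AtStatus `json:"status,omitempty"`
}

// newAt fills in the type metadata for the cnat group and version.
func newAt(name, schedule string) At {
	return At{
		TypeMeta:   TypeMeta{APIVersion: "cnat.programming-kubernetes.info/v1alpha1", Kind: "At"},
		ObjectMeta: ObjectMeta{Name: name},
		Spec:       AtSpec{Schedule: schedule},
	}
}

// toMap round-trips the typed object through JSON into the generic form.
func toMap(a At) (map[string]interface{}, error) {
	data, err := json.Marshal(a)
	if err != nil {
		return nil, err
	}
	var m map[string]interface{}
	err = json.Unmarshal(data, &m)
	return m, err
}

func main() {
	data, err := json.MarshalIndent(newAt("example-at", "2019-07-03T02:00:00Z"), "", "  ")
	if err != nil {
		panic(err)
	}
	fmt.Println(string(data))
}
```

The untagged embedding of TypeMeta places apiVersion and kind at the top level of the JSON document, while the metadata tag on ObjectMeta nests its fields under metadata.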

Golang package structure

As we have seen, the Golang types are traditionally placed in a file called types.go in the package pkg/apis/group/version. In addition to that file, there are a couple more files we want to go through now. Some of them are manually written by the developer, while some are generated with code generators. See Chapter 5 for details.

The doc.go file describes the API’s purpose and includes a number of package-global code generation tags:

// Package v1alpha1 contains the cnat v1alpha1 API group
//
// +k8s:deepcopy-gen=package
// +groupName=cnat.programming-kubernetes.info
package v1alpha1

Next, register.go includes helpers to register the custom resource Golang types into a scheme (see “Scheme”):

package version

import (
    metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
    "k8s.io/apimachinery/pkg/runtime"
    "k8s.io/apimachinery/pkg/runtime/schema"

    group "repo/pkg/apis/group"
)

// SchemeGroupVersion is group version used to register these objects
var SchemeGroupVersion = schema.GroupVersion{
    Group:   group.GroupName,
    Version: "version",
}

// Kind takes an unqualified kind and returns back a Group qualified GroupKind
func Kind(kind string) schema.GroupKind {
    return SchemeGroupVersion.WithKind(kind).GroupKind()
}

// Resource takes an unqualified resource and returns a Group
// qualified GroupResource
func Resource(resource string) schema.GroupResource {
    return SchemeGroupVersion.WithResource(resource).GroupResource()
}

var (
    SchemeBuilder = runtime.NewSchemeBuilder(addKnownTypes)
    AddToScheme   = SchemeBuilder.AddToScheme
)

// Adds the list of known types to Scheme.
func addKnownTypes(scheme *runtime.Scheme) error {
    scheme.AddKnownTypes(SchemeGroupVersion,
        &SomeKind{},
        &SomeKindList{},
    )
    metav1.AddToGroupVersion(scheme, SchemeGroupVersion)
    return nil
}

Then, zz_generated.deepcopy.go defines deep-copy methods on the custom resource Golang top-level types (i.e., SomeKind and SomeKindList in the preceding example code). In addition, all substructs (like those for the spec and status) become deep-copyable as well.

Because the example uses the tag +k8s:deepcopy-gen=package in doc.go, the deep-copy generation is on an opt-out basis; that is, DeepCopy methods are generated for every type in the package that does not opt out with +k8s:deepcopy-gen=false. See Chapter 5 and especially “deepcopy-gen Tags” for more details.
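To see why deep copies matter, consider the hand-written sketch below. It approximates the style of a generated DeepCopy method but is not generator output; the SomeKindSpec fields are hypothetical. A plain struct assignment would share the map between original and copy, so a change to one would silently affect the other.

```go
package main

import "fmt"

// SomeKindSpec is a hypothetical spec type with a reference-typed field.
type SomeKindSpec struct {
	Replicas int32
	Labels   map[string]string
}

// DeepCopy returns a copy that shares no memory with the receiver.
func (in *SomeKindSpec) DeepCopy() *SomeKindSpec {
	if in == nil {
		return nil
	}
	out := new(SomeKindSpec)
	*out = *in // copies value fields; the map header is still shared here
	if in.Labels != nil {
		out.Labels = make(map[string]string, len(in.Labels))
		for k, v := range in.Labels {
			out.Labels[k] = v
		}
	}
	return out
}

func main() {
	orig := &SomeKindSpec{Replicas: 1, Labels: map[string]string{"env": "prod"}}

	shallow := *orig // shares the Labels map with orig
	deep := orig.DeepCopy()

	deep.Labels["env"] = "test"
	fmt.Println("after changing the deep copy:", orig.Labels["env"])

	shallow.Labels["env"] = "dev"
	fmt.Println("after changing the shallow copy:", orig.Labels["env"])
}
```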

Typed client created via client-gen

With the API package pkg/apis/group/version in place, the client generator client-gen creates a typed client (see Chapter 5 for details, especially “client-gen Tags”), in pkg/generated/clientset/versioned by default (pkg/client/clientset/versioned in old versions of the generator). More precisely, the generated top-level object is a client set. It subsumes a number of API groups, versions, and resources.

The top-level file looks like the following:

    // Code generated by client-gen. DO NOT EDIT.

    package versioned

    import (
        discovery "k8s.io/client-go/discovery"
        rest "k8s.io/client-go/rest"
        flowcontrol "k8s.io/client-go/util/flowcontrol"

        cnatv1alpha1 ".../cnat/cnat-client-go/pkg/generated/clientset/versioned/
    )

    type Interface interface {
        Discovery() discovery.DiscoveryInterface
        CnatV1alpha1() cnatv1alpha1.CnatV1alpha1Interface
    }

    // Clientset contains the clients for groups. Each group has exactly one
    // version included in a Clientset.
    type Clientset struct {
        *discovery.DiscoveryClient
        cnatV1alpha1 *cnatv1alpha1.CnatV1alpha1Client
    }

    // CnatV1alpha1 retrieves the CnatV1alpha1Client
    func (c *Clientset) CnatV1alpha1() cnatv1alpha1.CnatV1alpha1Interface {
        return c.cnatV1alpha1
    }

    // Discovery retrieves the DiscoveryClient
    func (c *Clientset) Discovery() discovery.DiscoveryInterface {
        ...
    }

    // NewForConfig creates a new Clientset for the given config.
    func NewForConfig(c *rest.Config) (*Clientset, error) {
        ...
    }

The client set is represented by the interface Interface and gives access to the API group client interface for each version—for example, CnatV1alpha1Interface in this sample code:

    type CnatV1alpha1Interface interface {
        RESTClient() rest.Interface
        AtsGetter
    }

    // AtsGetter has a method to return a AtInterface.
    // A group's client should implement this interface.
    type AtsGetter interface {
        Ats(namespace string) AtInterface
    }

    // AtInterface has methods to work with At resources.
    type AtInterface interface {
        Create(*v1alpha1.At) (*v1alpha1.At, error)
        Update(*v1alpha1.At) (*v1alpha1.At, error)
        UpdateStatus(*v1alpha1.At) (*v1alpha1.At, error)
        Delete(name string, options *v1.DeleteOptions) error
        DeleteCollection(options *v1.DeleteOptions, listOptions v1.ListOptions) error
        Get(name string, options v1.GetOptions) (*v1alpha1.At, error)
        List(opts v1.ListOptions) (*v1alpha1.AtList, error)
        Watch(opts v1.ListOptions) (watch.Interface, error)
        Patch(name string, pt types.PatchType, data []byte, subresources ...string) (result *v1alpha1.At, err error)
        AtExpansion
    }

An instance of a client set can be created with the NewForConfig helper function. This is analogous to the clients for core Kubernetes resources discussed in “Creating and Using a Client”:

    import (
        "flag"

        metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
        "k8s.io/client-go/tools/clientcmd"

        client "github.com/.../cnat/cnat-client-go/pkg/generated/clientset/versioned"
    )

    kubeconfig := flag.String("kubeconfig", "~/.kube/config", "kubeconfig file")
    flag.Parse()
    config, err := clientcmd.BuildConfigFromFlags("", *kubeconfig)
    clientset, err := client.NewForConfig(config)

    // CnatV1alpha1() is the accessor defined on the client set's Interface.
    ats := clientset.CnatV1alpha1().Ats("default")
    book, err := ats.Get("kubernetes-programming", metav1.GetOptions{})

As you can see, the code generation machinery allows us to program logic for custom resources in the very same way as for core Kubernetes resources. Higher-level tools like informers are also available; see informer-gen in Chapter 5.
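One practical consequence of the generated interfaces is that controller logic can be written against AtsGetter and AtInterface rather than against a concrete client. The following sketch illustrates that idea with a deliberately trimmed-down interface (only Create and Get, without options arguments) and a hypothetical in-memory implementation called memoryClient. None of these simplified types are produced by client-gen; they only mirror the namespace-scoped getter pattern shown above.

```go
package main

import (
	"errors"
	"fmt"
)

// At is a simplified, hypothetical stand-in for v1alpha1.At.
type At struct {
	Namespace, Name string
	Phase           string
}

// AtInterface is a trimmed-down version of the generated interface.
type AtInterface interface {
	Create(at *At) (*At, error)
	Get(name string) (*At, error)
}

// AtsGetter mirrors the generated getter: it scopes access to a namespace.
type AtsGetter interface {
	Ats(namespace string) AtInterface
}

// memoryClient is a hypothetical in-memory store keyed by namespace/name.
type memoryClient struct{ store map[string]*At }

// memoryAts is the namespace-scoped view onto a memoryClient.
type memoryAts struct {
	c  *memoryClient
	ns string
}

func newMemoryClient() *memoryClient {
	return &memoryClient{store: map[string]*At{}}
}

func (c *memoryClient) Ats(namespace string) AtInterface {
	return &memoryAts{c: c, ns: namespace}
}

// Create stores a copy of the object in the scoped namespace.
func (a *memoryAts) Create(at *At) (*At, error) {
	key := a.ns + "/" + at.Name
	if _, exists := a.c.store[key]; exists {
		return nil, errors.New("already exists: " + key)
	}
	stored := *at
	stored.Namespace = a.ns
	a.c.store[key] = &stored
	out := stored
	return &out, nil
}

// Get returns a copy of the stored object, or an error if it is missing.
func (a *memoryAts) Get(name string) (*At, error) {
	at, ok := a.c.store[a.ns+"/"+name]
	if !ok {
		return nil, errors.New("not found: " + a.ns + "/" + name)
	}
	out := *at
	return &out, nil
}

// phaseOf depends only on the interface, not on a concrete client.
func phaseOf(getter AtsGetter, namespace, name string) (string, error) {
	at, err := getter.Ats(namespace).Get(name)
	if err != nil {
		return "", err
	}
	return at.Phase, nil
}

func main() {
	c := newMemoryClient()
	if _, err := c.Ats("default").Create(&At{Name: "example-at", Phase: "pending"}); err != nil {
		panic(err)
	}
	phase, err := phaseOf(c, "default", "example-at")
	fmt.Println(phase, err)
}
```

Because phaseOf accepts an AtsGetter, the same function would work with any implementation of that interface, which keeps such logic easy to exercise without a cluster.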

controller-runtime Client of Operator SDK and Kubebuilder

For the sake of completeness, we want to take a quick look at the third client, which is listed as the second option in “A Developer’s View on Custom Resources”. The controller-runtime project provides the basis for the operator solutions Operator SDK and Kubebuilder presented in Chapter 6.

It includes a client that uses the Go types presented in “Anatomy of a type”.

In contrast to the client generated by client-gen in the previous section, “Typed client created via client-gen”, and similarly to the client in “Dynamic Client”, this client is a single instance capable of handling any kind that is registered in a given scheme.

It uses discovery information from the API server to map the kinds to HTTP paths. Note that Chapter 6 will go into greater detail on how this client is used as part of those two operator solutions.

Here is a quick example of how to use controller-runtime:

    import (
        "context"
        "flag"

        corev1 "k8s.io/api/core/v1"
        "k8s.io/client-go/kubernetes/scheme"
        "k8s.io/client-go/tools/clientcmd"

        runtimeclient "sigs.k8s.io/controller-runtime/pkg/client"
    )

    kubeconfig := flag.String("kubeconfig", "~/.kube/config", "kubeconfig file path")
    flag.Parse()
    config, err := clientcmd.BuildConfigFromFlags("", *kubeconfig)

    // The package is imported under the alias runtimeclient.
    cl, _ := runtimeclient.New(config, runtimeclient.Options{
        Scheme: scheme.Scheme,
    })
    podList := &corev1.PodList{}
    err = cl.List(context.TODO(), runtimeclient.InNamespace("default"), podList)

The client object’s List() method accepts any runtime.Object registered in the given scheme, which in this case is the one borrowed from client-go with all standard Kubernetes kinds being registered. Internally, the client uses the given scheme to map the Golang type *corev1.PodList to a GVK. In a second step, the List() method uses discovery information to get the GVR for pods, which is schema.GroupVersionResource{"", "v1", "pods"}, and therefore accesses /api/v1/namespaces/default/pods to get the list of pods in the passed namespace.
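The second step, going from a GVK to a GVR and then to an HTTP path, can be sketched with the standard library alone. In the following illustration, discoveryTable is a hypothetical static map standing in for the discovery information the real client fetches from the API server, and the GVK and GVR structs are simplified stand-ins for the apimachinery types. Deriving the item kind by trimming the List suffix is an assumption of this sketch.

```go
package main

import (
	"fmt"
	"strings"
)

// GVK and GVR are simplified stand-ins for the apimachinery schema types.
type GVK struct{ Group, Version, Kind string }
type GVR struct{ Group, Version, Resource string }

// discoveryTable is a hypothetical static replacement for the discovery
// information that a real client retrieves from the API server.
var discoveryTable = map[GVK]GVR{
	{"", "v1", "Pod"}: {"", "v1", "pods"},
	{"cnat.programming-kubernetes.info", "v1alpha1", "At"}: {
		"cnat.programming-kubernetes.info", "v1alpha1", "ats",
	},
}

// listPath builds the REST path for listing a resource. The legacy core
// group lives under /api, all other groups under /apis/<group>.
func listPath(gvr GVR, namespace string) string {
	var b strings.Builder
	if gvr.Group == "" {
		b.WriteString("/api/" + gvr.Version)
	} else {
		b.WriteString("/apis/" + gvr.Group + "/" + gvr.Version)
	}
	if namespace != "" {
		b.WriteString("/namespaces/" + namespace)
	}
	b.WriteString("/" + gvr.Resource)
	return b.String()
}

// pathForList maps the GVK of a list type (for example, PodList) to the
// path of the corresponding collection in the given namespace.
func pathForList(listGVK GVK, namespace string) (string, error) {
	item := GVK{listGVK.Group, listGVK.Version, strings.TrimSuffix(listGVK.Kind, "List")}
	gvr, ok := discoveryTable[item]
	if !ok {
		return "", fmt.Errorf("no resource known for kind %q", item.Kind)
	}
	return listPath(gvr, namespace), nil
}

func main() {
	for _, gvk := range []GVK{
		{"", "v1", "PodList"},
		{"cnat.programming-kubernetes.info", "v1alpha1", "AtList"},
	} {
		path, err := pathForList(gvk, "default")
		if err != nil {
			panic(err)
		}
		fmt.Println(path)
	}
}
```

The sketch also shows why the same call works for custom resources: only the group, version, and resource in the path change.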

The same logic can be used with custom resources. The main difference is to use a custom scheme that contains the passed Go type:

    import (
        "context"
        "flag"

        "k8s.io/apimachinery/pkg/runtime"
        "k8s.io/client-go/tools/clientcmd"

        runtimeclient "sigs.k8s.io/controller-runtime/pkg/client"

        cnatv1alpha1 "github.com/.../cnat/cnat-kubebuilder/pkg/apis/cnat/v1alpha1"
    )

    kubeconfig := flag.String("kubeconfig", "~/.kube/config", "kubeconfig file")
    flag.Parse()
    config, err := clientcmd.BuildConfigFromFlags("", *kubeconfig)

    // Build a scheme that knows the custom resource types.
    crScheme := runtime.NewScheme()
    cnatv1alpha1.AddToScheme(crScheme)

    cl, _ := runtimeclient.New(config, runtimeclient.Options{
        Scheme: crScheme,
    })
    list := &cnatv1alpha1.AtList{}
    err = cl.List(context.TODO(), runtimeclient.InNamespace("default"), list)

Note how the invocation of the List() method does not change at all.

Imagine you write an operator that accesses many different kinds using this client. With the typed client of “Typed client created via client-gen”, you would have to pass many different clients into the operator, making the plumbing code pretty complex. In contrast, the controller-runtime client presented here is just one object for all kinds, assuming all of them are in one scheme.

All three types of clients have their uses, with advantages and disadvantages depending on the context in which they are used. In generic controllers that handle unknown objects, only the dynamic client can be used. In controllers where type safety helps a lot to enforce code correctness, the generated clients are a good fit. This matters for the Kubernetes project itself: it has so many contributors that stability of the code is very important, even as the code is extended and rewritten by many people. If convenience and high velocity with minimal plumbing are important, the controller-runtime client is a good fit.

Summary

In this chapter we introduced you to custom resources, one of the central extension mechanisms used in the Kubernetes ecosystem. By now you should have a good understanding of their features and limitations as well as the available clients.

Let’s now move on to code generation for managing said resources.

1 Do not confuse Kubernetes and JSON objects here. The latter is just another term for a string map, used in the context of JSON and in OpenAPI.

2 “Projective” here means that the scale object is a projection of the main resource in the sense that it shows only certain fields and hides everything else.
