Throttling

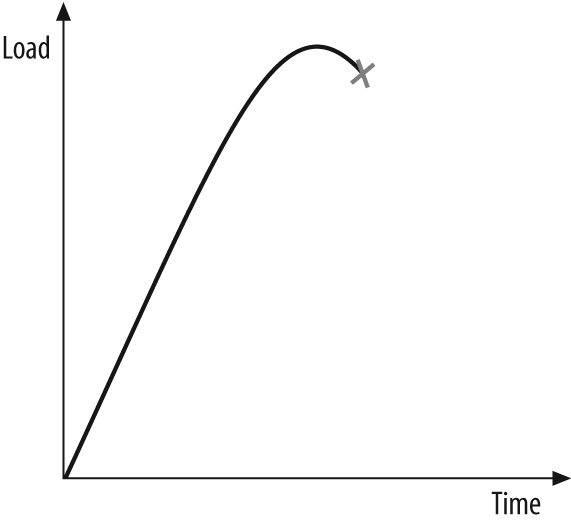

While it is not a direct instance management technique, throttling enables you to restrain client connections and the load they place on your service. You need throttling because software systems are not elastic, as shown in Figure 4-7.

Figure 4-7. The inelastic nature of all software systems

That is, you cannot keep increasing the load on the system and expect a gradual, indefinite decline in its performance, as if stretching chewing gum. Most systems initially handle the increase in load well, but then begin to yield, and finally snap and break abruptly. All software systems behave this way, for reasons that are beyond the scope of this book and relate to queuing theory and the overhead inherent in managing resources. This snapping, inelastic behavior is of particular concern when there are spikes in load, as shown in Figure 4-8.
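To get a feel for why the decline is not gradual, consider a rough sketch based on the simplest textbook queuing model, a single-server M/M/1 queue, where the mean time a request spends in the system is 1 / (service rate - arrival rate). The choice of model, the function name `mean_response_time`, and the capacity of 100 requests per second are all assumptions made for illustration; real services are more complex and tend to fail outright rather than merely slow down.

```python
def mean_response_time(arrival_rate: float, service_rate: float) -> float:
    """Mean time in system (seconds) for an M/M/1 queue: W = 1 / (mu - lambda).

    Illustrative model only; both rates are in requests per second.
    """
    if arrival_rate >= service_rate:
        # At or beyond capacity the queue grows without bound.
        return float("inf")
    return 1.0 / (service_rate - arrival_rate)


# Hypothetical capacity, chosen only for this example.
SERVICE_RATE = 100.0

if __name__ == "__main__":
    for load in (50, 80, 90, 95, 99, 100):
        seconds = mean_response_time(load, SERVICE_RATE)
        print(f"{load:>3} req/s -> {seconds * 1000:.0f} ms")
```

Under these assumptions, raising the load from 50 to 90 requests per second increases the mean response time from 20 ms to 100 ms, yet the next 9 requests per second increase it tenfold again, to a full second, and at 100 requests per second the model has no finite answer at all. That knee in the curve is the kind of behavior throttling is meant to keep the service away from.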

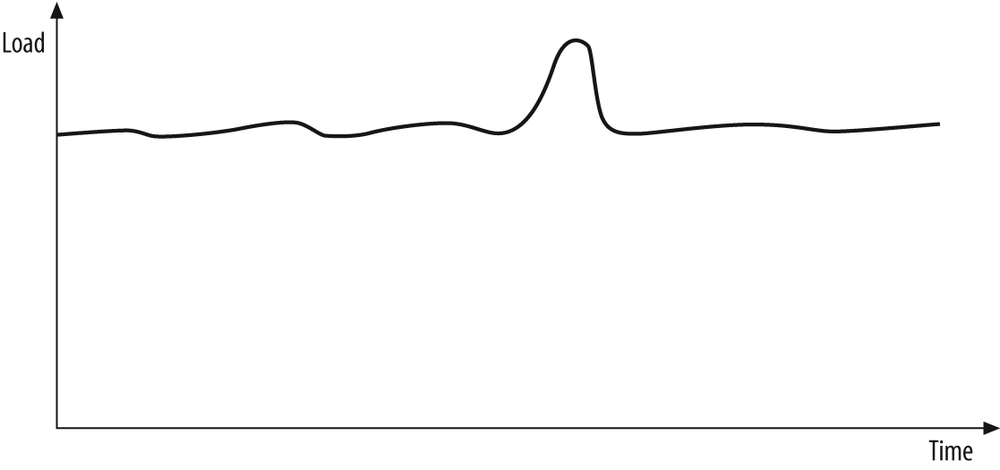

Figure 4-8. A spike in load may push the system beyond its design limit

Even if a system is handling a nominal load well (the horizontal line in Figure 4-8), a spike may push it beyond its design limit, causing it to snap and resulting in the clients experiencing a significant degradation in their level of service. Spikes can also pose a challenge in terms of the rate at which the load grows, even if the absolute level reached would not otherwise cause ...