Chapter 4. Independent Scalability

Monoliths often force you to make decisions early, when you know the least about the forces acting on your system. Your infrastructure engineers probably asked you how much capacity your application needs, forcing a “Take the worst case and double it” mentality, leading to poor resource utilization. Instead of wild guesses, a microservice approach allows you to more efficiently allocate compute. No longer are you forced into a one-size-fits-none approach; each service can scale independently.

The Monolith Forced Decisions Early—with Incomplete Information

Let’s take a quick spin in our hot tub time machine and head back to an era before cloud, microservices, and serverless computing. Servers were homegrown and bespoke, beloved pets if you will. When developers requested a server for a new project, it would take weeks or months for it to become available.

Years ago, one of my peers requested a development database for our architecture team. It was never going to hold a lot of data; it would mostly just act as a repository for some of our architectural artifacts. One would think a small, nonproduction database could be provisioned somewhat quickly. Amazingly, it took an entire year for us to get that simple request fulfilled. Who knows why this was the case; strange things happen in traditional enterprise IT.1 But I do know that these kinds of delays incent a raft of undesirable behavior.

Sadly, this experience is familiar to most IT professionals. Extended lead times force you to make capacity decisions far too early. In fact, the very start of a new initiative is when you know the least about what your systems would actually look like under stress. So you’d make an educated guess, based loosely on the worst-case scenario. Then, you’d double it, and add some buffer. (Plus a little more just in case.) In other words, you would just shrug your shoulders as in Figure 4-1.

Figure 4-1. How much capacity would you say you need?

And while this meant you often heavily overprovisioned infrastructure (and overspent), that was still a good outcome for organizations. After all, adding capacity after the fact was just as painful.

Any developer familiar with agile development practices understands the disadvantages inherent in big up-front planning and design. Agile ultimately leverages nested feedback loops to give you more information while allowing you to delay decisions to the last responsible moment. Distributed architectures and cloud environments bring many of the same advantages to your infrastructure.

Not All Traffic Is Predictable

It wasn’t just your initial server setup that suffered in this era, though. Static infrastructure, combined with your old friend the monolithic application, made it nearly impossible for you to deal with unexpected demand. Even if you had a good understanding of what your systems needed under normal circumstances, you couldn’t predict when a new marketing campaign or a shout-out from an influencer would send thousands (or millions) of hungry customers to your (now) overwhelmed servers.

Things were no better for Ops teams running your data centers. It could take months to add additional servers or racks. Traditional budgeting processes made it very difficult to add capacity in any kind of smooth manner. Instead, operators were forced to move in a stepwise fashion, relying on (at best) educated guesses about the shape of future business demand.

While this approach was logical at the time, it meant many organizations had massive amounts of unused compute resources sitting idle nearly all of the time. Single-digit utilization numbers for servers were common. Business units were paying for unused capacity every month just in case a surge or spike in demand occurred. While this may have been an acceptable tradeoff in the past, today it would charitably be considered an antipattern.

Elastic infrastructure combined with more modular architectures mitigates many of these antipatterns. Today, you can add, and just as importantly reduce, capacity on demand. You can start with a reasonable number of application instances, adapting as you learn more about your load characteristics. Working with your business team, you can spin up extra capacity for that big event and then ramp it down after. You can wait until the last responsible moment, freeing you to make better decisions based on real data, not hunches and guesses.
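To make "capacity on demand" a little more concrete, here is a minimal sketch of how you might turn an observed request rate into an instance count. The function name, the 25% headroom, the two-instance floor, and the per-instance capacity of 100 requests per second are all assumptions made up for illustration; your platform's autoscaler will have its own rules.

```python
import math


def instances_needed(requests_per_second: float,
                     capacity_per_instance: float,
                     headroom: float = 0.25,
                     minimum: int = 2) -> int:
    """Return how many instances cover the observed load plus some headroom.

    All parameters are hypothetical knobs for this sketch:
    - capacity_per_instance: requests per second one instance can serve
    - headroom: extra fraction of capacity kept in reserve
    - minimum: floor on the instance count, kept for redundancy
    """
    if capacity_per_instance <= 0:
        raise ValueError("capacity_per_instance must be positive")
    target_load = requests_per_second * (1 + headroom)
    return max(minimum, math.ceil(target_load / capacity_per_instance))


# A normal day versus the big event your business team warned you about.
normal_day = instances_needed(300, 100)   # 4 instances
big_event = instances_needed(2500, 100)   # 32 instances
print(normal_day, big_event)
```

The point is not the formula itself but the timing: you rerun the calculation whenever the measured load changes, rather than guessing once at the start of the project.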

Most recognize this as a benefit of the public cloud, but it’s true in the enterprise data center as well. And the savings are just as real. Resources not used by your apps can be leveraged by another group in your organization.

Scale Up Where It Is Needed

The monolith also suffered from a structural constraint: you had to scale the entire thing, not just the bits that actually needed the additional capacity. In reality, the load or throughput characteristics of most systems are not uniform. They have different scaling requirements.

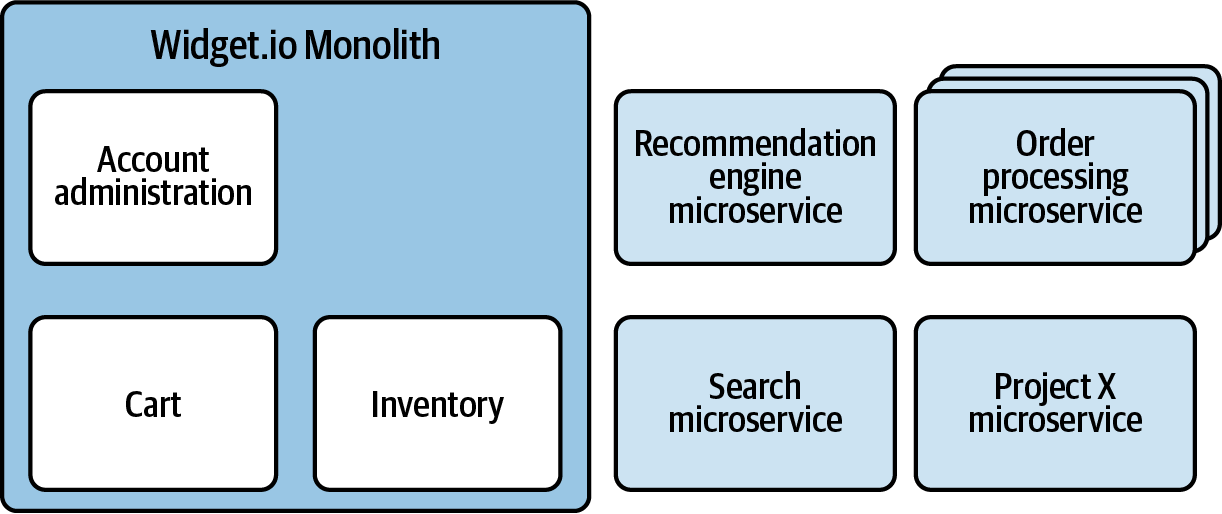

Microservices provide you with a solution: separate these components out into independent microservices. This way, the services can scale at different rates. For example, in Figure 4-2, you are free to spin up additional instances of the Order Processing Microservice to satisfy increased demand, while leaving everything else at a normal run rate.

Figure 4-2. Microservices allow you to scale up the parts of your application that need it

In this scenario, it is quite likely that the Order Processing system requires more capacity than the Account Administration functionality. In the past, you had to scale the entire monolith to support your most volatile component. The monolith approach results in higher infrastructure costs because you are forced to overprovision the whole application for the worst-case scenario of just a portion of it. If you refactor the Order Processing functionality into a microservice, you can scale it up and down as needed.
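A back-of-the-envelope comparison shows why this matters. The sketch below assumes each component has a memory footprint and a peak instance count; every number in it is invented for illustration, and memory is only a stand-in for whatever resource you actually pay for.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    name: str
    memory_gb: int        # hypothetical footprint of this component alone
    peak_instances: int   # hypothetical instances needed at peak demand


# Illustrative numbers only; measure your own system before trusting any of them.
COMPONENTS = [
    Component("Order Processing", memory_gb=2, peak_instances=10),
    Component("Account Administration", memory_gb=1, peak_instances=2),
    Component("Product Recommendations", memory_gb=1, peak_instances=2),
]


def monolith_memory_gb(components) -> int:
    """Every monolith instance carries every component, and the instance
    count is dictated by the most demanding component."""
    per_instance = sum(c.memory_gb for c in components)
    instances = max(c.peak_instances for c in components)
    return per_instance * instances


def microservice_memory_gb(components) -> int:
    """Each service scales on its own, so you pay only for what each needs."""
    return sum(c.memory_gb * c.peak_instances for c in components)


print(monolith_memory_gb(COMPONENTS))      # 40
print(microservice_memory_gb(COMPONENTS))  # 24
```

In this made-up case the monolith needs 40 GB at peak while the independently scaled services need 24 GB, because only Order Processing has to grow.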

But how do you know which parts of your application require more capacity? Monitoring to the rescue!

Monitoring for Fun and Profit

Good monitoring is vital for a healthy microservice biome. But knowing what to monitor isn’t always obvious. Luckily for us, many organizations are sharing their experiences! You owe it to your services to spend some time perusing Site Reliability Engineering by Beyer et al. (O’Reilly), which is filled with wisdom. For example, Rob Ewaschuk identifies the four golden signals: latency, traffic, errors, and saturation.2

The golden signals can indeed provide insights into parts of your system that could benefit from independent scalability. Over time, you will gather priceless intelligence about the actual usage patterns of your application, discovering what is normal (green), what values send a warning about future issues (yellow), and what thresholds are critical, requiring immediate intervention (red).3 Look for areas with significant traffic or where latency exceeds your requirements. Keeping a close eye on saturation and error rates will also help you find the bottlenecks in your system. Where is your system constrained? Memory, I/O, CPU, or network? Proper monitoring provides these insights.
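The green, yellow, and red idea can be sketched in a few lines. The thresholds below are placeholders, not recommendations; the signal names and units are assumptions chosen for this example, and real values should come from your own monitoring data and service level objectives.

```python
from enum import Enum


class Status(Enum):
    GREEN = 0    # normal
    YELLOW = 1   # warning about future issues
    RED = 2      # critical, requires immediate intervention


# Hypothetical (warning, critical) thresholds for the four golden signals.
THRESHOLDS = {
    "latency_ms": (250, 1000),
    "traffic_rps": (800, 1200),
    "error_rate": (0.01, 0.05),
    "saturation": (0.70, 0.90),
}


def classify(signal: str, value: float) -> Status:
    """Map one reading to green, yellow, or red."""
    warning, critical = THRESHOLDS[signal]
    if value >= critical:
        return Status.RED
    if value >= warning:
        return Status.YELLOW
    return Status.GREEN


def worst_status(readings: dict) -> Status:
    """A service is only as healthy as its worst signal."""
    return max((classify(s, v) for s, v in readings.items()),
               key=lambda status: status.value)


order_processing = {
    "latency_ms": 180,
    "traffic_rps": 950,
    "error_rate": 0.002,
    "saturation": 0.93,
}
print(worst_status(order_processing))  # Status.RED, driven by saturation
```

A service that keeps landing in yellow or red on traffic or saturation is a good candidate for independent scaling.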

Metrics shouldn’t be a hand-rolled solution; take advantage of tools like Wavefront, Dynatrace, New Relic, Honeycomb, and others. Spring Boot Actuator supports a number of monitoring systems out of the box. Actuator also includes a number of built-in endpoints that can be individually enabled or disabled, and of course you can always add your own.

Don’t expect to get your monitoring “right” the first time—you should actively review the metrics you collect to ensure you are getting the best intelligence about your application. Many companies today have teams of site reliability engineers (SREs). SREs help your product teams determine what they should monitor and, as importantly, the sampling frequency. Oversampling doesn’t always result in better information. In fact, it may generate too much noise to give you accurate trends. Of course, undersampling is also a possibility. Don’t be afraid to tweak your settings until you find the “Goldilocks” level!
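One way to see the effect of sampling frequency is to average fine-grained samples into coarser windows. This is only a toy sketch with invented latency numbers; the function name and the window size are assumptions, and your monitoring tool almost certainly offers its own aggregation.

```python
from statistics import fmean


def downsample(samples, window: int):
    """Average consecutive groups of `window` samples.

    A trailing partial group is averaged as well, so no data is dropped.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    return [fmean(samples[i:i + window])
            for i in range(0, len(samples), window)]


# Invented latency samples in milliseconds that bounce around a steady level.
noisy = [100, 300, 120, 280, 110, 290]
print(downsample(noisy, 2))  # [200.0, 200.0, 200.0]
```

Sampled at the finer rate, the series looks alarming; averaged over a slightly wider window, it turns out to be flat. Widen the window too far, though, and a genuine spike would disappear just as easily.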

Choosing what to monitor can also be tricky. The key service level objectives of your system are a good place to start. Resist the temptation to monitor something simply because it is easy to measure. We are all familiar with, shall we say, less than useful metrics like lines of code. Good metrics give you actionable information about your system.

Last, but not least, monitoring is not just for production. Monitoring staging, as well as lower environments, validates your monitors. Just as you test your code, you should also ensure your monitors are doing what you expect them to do. Early monitoring not only allows your team to gain much-needed familiarity with your toolset, it is also essential for performance and stress testing.4

All Services Are Equal (But Some Services Are More Equal than Others)

While you should never overlook the importance of monitoring, don’t neglect a less technical part of the equation: a conversation with your business partners. It is vital that you understand the growth rate of your services, and how that’s linked to the underlying business. What are the primary business drivers of your services? Do your services need to scale by the number of users or number of orders? What will drive spikes in demand? Take the time to translate this information to the specific services that will be affected.

Don’t be afraid to use a humble spreadsheet to help your business partners understand the cost/benefit scenarios inherent in various approaches. For example, scaling order processing might incur additional costs, but that translates to fewer abandoned carts and more happy customers. While you might have to swizzle together some numbers, it can also help you decide how to deploy limited resources.
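The spreadsheet math does not need to be fancy. Here is the same idea as a small sketch; every figure is invented, and the parameter names are assumptions you would replace with whatever your business partners actually track.

```python
def net_monthly_benefit(extra_instances: int,
                        cost_per_instance: int,
                        carts_recovered: int,
                        average_order_value: int) -> int:
    """Revenue from carts that are no longer abandoned, minus the cost
    of the extra capacity that made it possible."""
    added_cost = extra_instances * cost_per_instance
    added_revenue = carts_recovered * average_order_value
    return added_revenue - added_cost


# Hypothetical scenario: four extra Order Processing instances at 150 each
# per month recover 40 carts with an average order value of 50.
print(net_monthly_benefit(4, 150, 40, 50))  # 1400
```

If the result is negative, that is useful information too: the extra capacity may be better spent on a different service.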

Don’t neglect your data in these conversations. At what rate does your data grow? What type of database solution makes the most sense for your problem domain? Are you read-heavy? Write-heavy? Inquiring minds want to know.

Many businesses plan promotions around holidays or marketing events. Others have predictable spikes in business around certain parts of the year. If you are a retailer, odds are you are familiar with Black Friday and the associated sales, as well as the deluge of customers. Some industries have a year-end or quarterly cadence. Take the time to understand the important dates in your company’s calendar and plan accordingly. If you don’t know, ask. Again, you will have to determine what services are most affected by these business events. Internalizing the key dates for your organization can mean the difference between delighted customers and former patrons, lost revenue, and poor word of mouth.

It should be obvious that some services are more business critical than others. Using the information you’ve mined from your business team, you can establish a criticality for a given service. Just as you categorize an outage by severity and scope, you can identify which services are business critical. Returning to our mythical Widget.io Monolith, the ability to process orders is far more important than the product recommendation service. This fact further reinforces our decision to refactor the Order Processing Service. Apply some due diligence to your services. Which of them could be down for a few hours (or days) with minimal impact? Which ones would the CEO know about instantly in the event of an outage?

Modernize Your Architecture to Use Modern Infrastructure

Independent scalability is one of the most powerful benefits of a microservice approach. The monolith forces you to make decisions at a point in the process when you know the least about what your projects would actually need. This leads to overspending on capacity, and your apps are still vulnerable to spikes in demand. Monolithic architectures force you to scale your applications at a coarse-grained level, further exacerbating the issue. Thankfully, things are much better today.

Modern infrastructure allows you to react in real time—adjusting capacity to match demand at any given moment. Instead of paying every month for the extra capacity you only need once a quarter, you can pay as you go, freeing up valuable resources.

Odds are, your applications would benefit from these capabilities. Proper monitoring along with a solid understanding of your business drivers can help you identify modules you should refactor to microservices. Cloud infrastructure allows you to be proactive while also maximizing the utilization of your hardware resources.

As with the previous principles, you can see once again how microservices give you flexibility. Instead of over-allocating resources for the entire application just to cover the bits that need them, you can tailor your compute to the needs of your application. Doing so not only can save you money but also gives you the ability to react to the ebb and flow of demand. From here, it is time to turn our attention to when things don’t quite go as planned.

1 I theorize that a team wrote a database as part of the request, but I could be wrong.

2 For more on this topic, see chapter 6 of Site Reliability Engineering, “Monitoring Distributed Systems.”

3 If you don’t know the scalability characteristics of your app, monitoring is a data-driven way to determine those heuristics.

4 Which you are also running early and often, right?
