Chapter 1. Goals, Issues, and Processes in Capacity Planning

This chapter is designed to help you assemble and use the wealth of tools and techniques presented in the following chapters. If you do not grasp the concepts introduced in this chapter, reading the remainder of this book will be like setting out on the open ocean without knowing how to use a compass, sextant, or GPS device: you can go around in circles forever.

When you break them down, capacity planning and management—the steps taken to organize the resources your site needs to run properly—are, in fact, simple processes. You begin by asking the question: what performance do you need from your website?

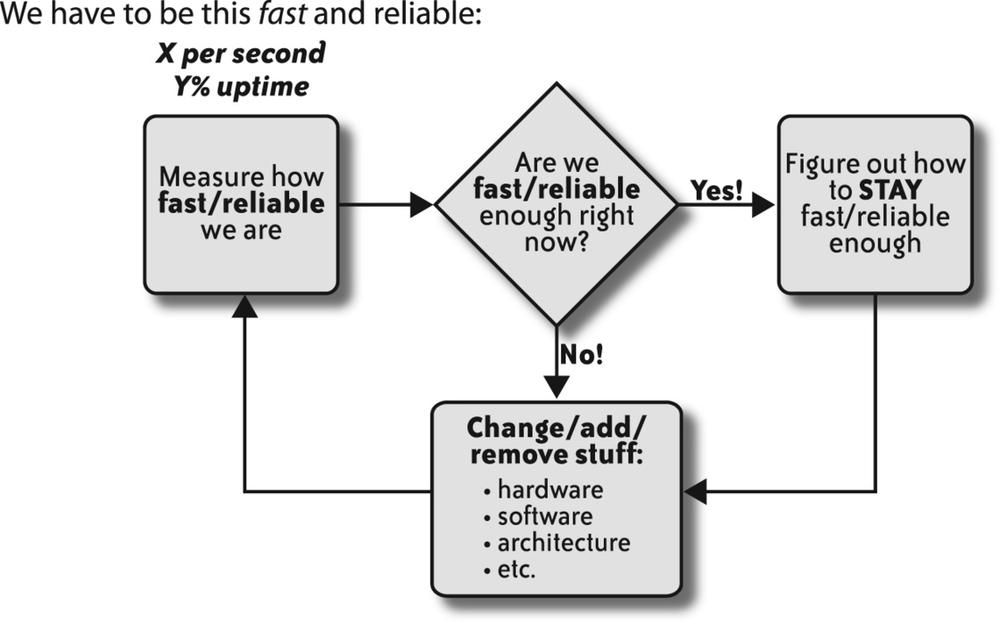

First, define the application’s overall load and capacity requirements using specific metrics, such as response times, consumable capacity, and peak-driven processing. Peak-driven processing is the workload experienced by your application’s resources (web servers, databases, etc.) during peak usage. The process, illustrated in Figure 1-1, involves answering these questions:

How well is the current infrastructure working?

Measure the characteristics of the workload for each piece of the architecture that makes up your application (web server, database server, network, and so on) and compare them to the performance requirements you defined above.

What do you need in the future to maintain acceptable performance?

Predict the future based on what you know about past system performance, then marry that prediction with what you can afford and a realistic timeline. Determine what you’ll need and when you’ll need it.

How can you install and manage resources after you gather what you need?

Deploy this new capacity with industry-proven tools and techniques.

Rinse, repeat.

Iterate and calibrate your capacity plan over time.
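As a rough illustration of the prediction step in the list above, the sketch below fits a straight line to past peak measurements and estimates how many measurement periods remain before the trend crosses a capacity ceiling. The function names, the sample numbers, and the choice of a linear trend are all assumptions made for this example; real growth is often not linear, so treat the result as a back-of-the-envelope estimate.

```python
from math import ceil


def fit_line(ys):
    """Least-squares line through (0, ys[0]), (1, ys[1]), ...

    Returns (slope, intercept). Assumes evenly spaced observations.
    """
    n = len(ys)
    if n < 2:
        raise ValueError("need at least two observations")
    mean_x = (n - 1) / 2
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def periods_until_ceiling(ys, ceiling):
    """Whole periods after the last observation until the trend reaches `ceiling`.

    Returns 0 if the last observation is already at or above the ceiling,
    and None if the fitted trend is flat or falling.
    """
    if ys[-1] >= ceiling:
        return 0
    slope, intercept = fit_line(ys)
    if slope <= 0:
        return None
    x_hit = (ceiling - intercept) / slope
    return max(0, ceil(x_hit - (len(ys) - 1)))


# Hypothetical weekly peak requests per second, and a hypothetical ceiling.
weekly_peaks = [100, 110, 120, 130]
print(periods_until_ceiling(weekly_peaks, ceiling=200))
```

Re-running a calculation like this as new measurements arrive is one simple way to iterate and calibrate the plan over time.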

Your ultimate goal lies between not buying enough hardware and wasting your money on too much hardware.

Let’s suppose you’re a supermarket manager. One of your tasks is to manage the schedule of cashiers. Your challenge is picking the right number of cashiers working at any moment. Assign too few, and the checkout lines will become long, and the customers irate. Schedule too many working at once, and you’re spending more money than necessary. The trick is finding the right balance.

Now, think of the cashiers as servers, and the customers as client browsers. Be aware that some cashiers might be better than others, and each day might bring a different number of customers. You also need to take into consideration that your supermarket is getting more and more popular. A seasoned supermarket manager intuitively knows these variables exist, and attempts to strike a good balance between not frustrating the customers and not paying too many cashiers.

Welcome to the supermarket of web operations.

Quick and Dirty Math

The ideas I’ve just presented are hardly new, innovative, or complex. Engineering disciplines have always employed back-of-the-envelope calculations; the field of web operations is no different.

Because we’re looking to make judgments and predictions on a quickly changing landscape, approximations will be necessary, and it’s important to realize what that means in terms of limitations in the process. Being aware of when detail is needed and when it’s not is crucial to forecasting budgets and cost models. Unnecessary detail means wasted time. Lacking the proper detail can be fatal.

Predicting When Your Systems Will Fail

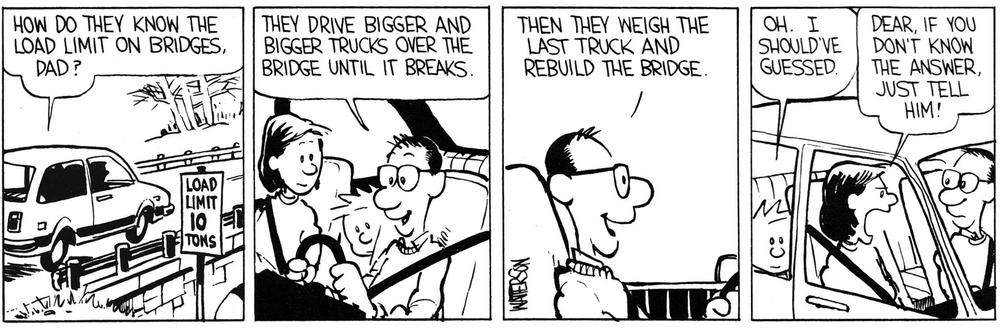

Knowing when each piece of your infrastructure will fail (gracefully or not) is crucial to capacity planning. Capacity planning for the web, more often than one would like to admit, looks like the approach shown in Figure 1-2.

Including this information as part of your calculations is mandatory, not optional. However, determining the limits of each portion of your site’s backend can be tricky. An easily segmented architecture helps you find the limits of your current hardware configurations. You can then use those capacity ceilings as a basis for predicting future growth.

For example, let’s assume you have a database server that responds to queries from your frontend web servers. Planning for capacity means knowing the answers to questions such as these:

Adjusting for periodic spikes and subtracting some comfortable percentage of headroom (or safety factor, which we’ll talk about later) will render a single number with which you can characterize that database configuration vis-à-vis the specific role. Once you find that “red line” metric, you’ll know:

We’ll talk more about these last points in the coming chapters. One thing to note is that the entire capacity planning process is going to be architecture-specific. This means the calculations you make to predict increasing capacity may have other constraints specific to your particular application.
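The “red line” idea described above (a measured ceiling with some headroom subtracted) can be sketched in a few lines. The function names and the numbers here are hypothetical, chosen only to show the arithmetic; the right headroom percentage depends on your environment.

```python
def red_line(measured_ceiling, headroom_percent):
    """Usable capacity after reserving `headroom_percent` of the measured ceiling."""
    if not 0 <= headroom_percent < 100:
        raise ValueError("headroom_percent must be in [0, 100)")
    return measured_ceiling * (100 - headroom_percent) / 100


def utilization(current_peak, measured_ceiling, headroom_percent):
    """Fraction of the red line consumed by the current peak load."""
    return current_peak / red_line(measured_ceiling, headroom_percent)


# Hypothetical database server: fails near 2,000 queries per second,
# and we choose to keep 20 percent in reserve.
limit = red_line(2000, 20)
print(limit)                        # usable queries per second
print(utilization(1200, 2000, 20))  # share of the red line in use at peak
```

A utilization value approaching 1.0 means the peak load is close to the red line for that server role.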

For example, to spread out the load, a LAMP application might utilize a MySQL server as a master database in which all live data is written and maintained, and use a second, replicated slave database for read-only database operations. Adding more slave databases to scale the read-only traffic is generally an appropriate technique, but many large websites (including Flickr) have been forthright about their experiences with this approach, and the limits they’ve encountered. There is a limit to how many read-only slave databases you can add before you begin to see diminishing returns, because the rate and volume of changes to data on the master database may be more than the replicated slaves can sustain, no matter how many you add. This is just one example where your architecture can have a large effect on your ability to add capacity.
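To make the diminishing-returns point concrete, here is a deliberately simplified model of the read-only slave databases described above. It assumes every replica must apply the full write stream from the master, and that a write costs a replica about as much as a read; both are assumptions for illustration, not measurements of MySQL. The function name and all numbers are hypothetical.

```python
from math import ceil


def replicas_needed(read_rate, write_rate, per_server_capacity):
    """Replicas required to serve `read_rate` under the simplified model.

    Each replica spends `write_rate` of its capacity replaying changes from
    the master, so only the remainder is available for reads. Returns None
    when the write stream alone saturates a replica, in which case no number
    of replicas is enough.
    """
    spare = per_server_capacity - write_rate
    if spare <= 0:
        return None
    return ceil(read_rate / spare)


# Hypothetical figures, all in operations per second.
for writes in (200, 600, 900, 1000):
    print(writes, replicas_needed(4000, writes, 1000))
```

In this model the replica count for the same read traffic climbs steeply as the write rate approaches what a single server can sustain, which is the kind of architecture-specific limit that a plan has to account for.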

Expanding database-driven web applications might take different paths in their evolution toward scalable maturity. Some may choose to federate data across many master databases. Others may split the database into separate clusters, or cache data in a variety of ways to reduce load on their database layer. Yet others may take a hybrid approach, using all of these methods of scaling. This book is not intended to be an advice column on database scaling; it’s meant to serve as a guide by which you can come up with your own planning and measurement process, one that is right for your environment.

Make Your System Stats Tell Stories

Server statistics paint only part of the picture of your system’s health. Unless they can be tied to actual site metrics, server statistics don’t mean very much in terms of characterizing your usage. And this is something you’ll need to know in order to track how capacity will change over time.

For example, knowing your web servers are processing X requests per second is handy, but it’s also good to know what those X requests per second actually mean in terms of your users. Maybe X requests per second represents Y number of users employing the site simultaneously.

It would be even better to know that of those Y simultaneous users, A percent are uploading photos, B percent are making comments on a heated forum topic, and C percent are poking randomly around the site while waiting for the pizza guy to arrive. Measuring those user metrics over time is a first step. Comparing and graphing the web server hits-per-second against those user interaction metrics will ultimately yield some of the cost of providing service to the users. In the examples above, the ability to generate a comment within the application might consume more resources than simply browsing the site, but it consumes less when compared to uploading a photo. Having some idea of which features tax your capacity more than others gives you context in which to decide where you’ll want to focus priority attention in your capacity planning process. These observations can also help drive any technology procurement justifications.
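One way to picture the feature comparison above is to weight each activity by a relative cost per user. In the sketch below, the activity names, user counts, and cost weights are invented for illustration; in practice the weights would come from comparing your own server statistics against your user interaction metrics.

```python
def load_share_by_activity(users_by_activity, cost_per_user):
    """Return each activity's share of total load, as a fraction of 1.0.

    users_by_activity: activity name -> number of simultaneous users
    cost_per_user:     activity name -> relative resource cost per user
    """
    load = {
        activity: users * cost_per_user[activity]
        for activity, users in users_by_activity.items()
    }
    total = sum(load.values())
    if total == 0:
        raise ValueError("total load is zero")
    return {activity: value / total for activity, value in load.items()}


# Hypothetical snapshot of 1,000 simultaneous users.
users = {"upload": 100, "comment": 300, "browse": 600}
# Hypothetical relative costs: an upload is assumed far costlier than a page view.
costs = {"upload": 10, "comment": 2, "browse": 1}

for activity, share in load_share_by_activity(users, costs).items():
    print(f"{activity}: {share:.0%} of load")
```

With these made-up weights, the 10 percent of users who are uploading account for the largest share of load, which is the kind of context that tells you where to focus planning attention.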

Quite often, the person approving expensive hardware and software requests is not the same person making the requests. Finance and business leaders must sometimes trust implicitly that their engineers are providing accurate information when they request capital for resources. Tying system statistics to business metrics helps bring the technology closer to the business units, and can help engineers understand what the growth means in terms of business success. Marrying these two sets of metrics can therefore raise awareness that technology shouldn’t automatically be considered a cost center, but rather a significant driver of revenue. It also means that future capital expenditures have some real context, so even non-technical folks will understand the value technology investment brings.

For example, when presenting a proposal for an order of new database hardware, you should have the systems and application metrics on hand to justify the investment. With the pertinent supporting data, you could say something along the lines of “…and if we get these new database servers, we’ll be able to serve our pages X percent faster, which means our pageviews, and corresponding ad revenues, have an opportunity to increase up to Y percent.” Backing up your justifications in this way can also help the business development people understand what success means in terms of capacity management.

Buying Stuff: Procurement Is a Process

After you’ve completed all your measurements, made snap judgments about usage, and sketched out future predictions, you’ll need to actually buy things: bandwidth, storage appliances, servers, maybe even instances of virtual servers. In each case, you’ll need to explain to the people with the checkbooks why you need what you think you need, and why you need it when you think you need it. (We’ll talk more about predicting the future and presenting those findings in Chapter 4.)

Procurement is a process, and should be treated as yet another part of capacity planning. Whether it’s a call to a hosting provider to bring new capacity online, a request for quotes from a vendor, or a trip to your local computer store, you need to take this important segment of time into account.

Smaller companies, while usually a lot less “liquid” than their larger brethren, can really shine in this arena. Being small often goes hand-in-hand with being nimble. So while you might not be offered the same price on equipment as the big companies who buy in massive bulk, you’ll likely be able to get it faster, owing to a less cumbersome approval process.

Quite often the person you might need to persuade is the CFO, who sits across the hall from you. In the early days of Flickr, we used to be able to get quotes from a vendor and simply walk over to the founder of the company (seated 20 feet away), who could cut and send a check. The servers would arrive in about a week, and we’d rack them in the data center the day they came out of the box. Easy!

Yahoo! has a more involved cycle of vetting hardware requests that includes obtaining many levels of approval and coordinating delivery to various data centers around the world. Purchases having been made, the local site operation teams in each data center then must assemble, rack, cable, and install operating systems on each of the boxes. This all takes more time than when we were a startup. Of course, the flip side is, with such a large company we can leverage buying power. By buying in bulk, we can afford a larger amount of hardware for a better price.

In either case, the concern is the same: the procurement process should be baked into your larger planning exercise. It takes time and effort, just like all the other steps. There is more about this in Chapter 4.
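Baking procurement into the plan can be as simple as working backward from the date you expect to hit a capacity limit. The sketch below does that subtraction; the function name, the dates, and the lead times are hypothetical, loosely modeled on the contrast above between a startup that gets servers in about a week and a large company with a longer approval and installation cycle.

```python
from datetime import date, timedelta


def order_by_date(ceiling_date, lead_time_days, buffer_days=0):
    """Latest date to place an order so new capacity is live before `ceiling_date`.

    lead_time_days covers approval, delivery, racking, and installation.
    buffer_days is optional extra slack for delays.
    """
    if lead_time_days < 0 or buffer_days < 0:
        raise ValueError("lead time and buffer must be non-negative")
    return ceiling_date - timedelta(days=lead_time_days + buffer_days)


# Hypothetical forecast: current capacity runs out on 30 June 2024.
forecast = date(2024, 6, 30)
print(order_by_date(forecast, lead_time_days=7))    # nimble startup
print(order_by_date(forecast, lead_time_days=60))   # longer approval chain
```

The longer the procurement cycle, the earlier the forecast has to be made, which is why the lead time belongs in the plan rather than being treated as an afterthought.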

Performance and Capacity: Two Different Animals

The relationship between performance tuning and capacity planning is often misunderstood. While they affect each other, they have different goals. Performance tuning optimizes your existing system for better performance. Capacity planning determines what your system needs and when it needs it, using your current performance as a baseline.

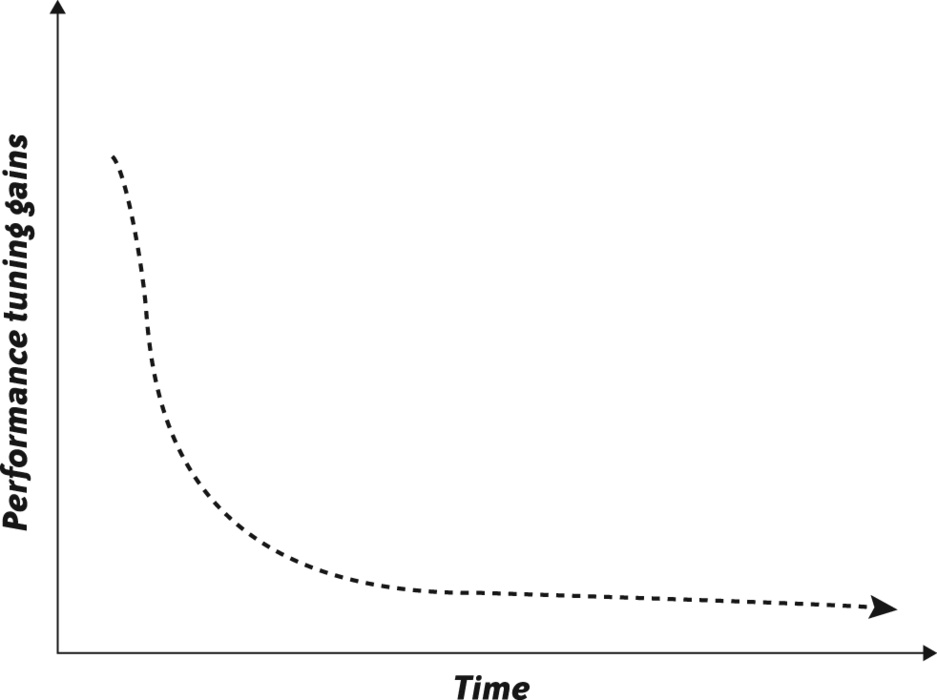

Let’s face it: tuning is fun, and it’s addictive. But after you spend some time tweaking values, testing, and tweaking some more, it can become an endless hole, sucking away time and energy for little or no gain. There are those rare and beautiful times when you stumble upon some obvious and simple parameter that can make everything faster: you find the one MySQL configuration parameter that doubles the cache size, or realize after some testing that those TCP window sizes set in the kernel can really make a difference. Great! But as illustrated in Figure 1-3, for each of those rare gems you discover, the number of obvious optimizations you find thereafter dwindles pretty rapidly.

Capacity planning must happen without regard to what you might optimize. The first real step in the process is to accept the system’s current performance, in order to estimate what you’ll need in the future. If at some point down the road you discover some tweak that brings about more resources, that’s a bonus.

Here’s a quick example of the difference between performance and capacity. Suppose there is a butcher in San Francisco who prepares the most delectable bacon in the state of California. Let’s assume the butcher shop has an arrangement with a store in San Jose to sell their great bacon there. Every day, the butcher needs to transport the bacon from San Francisco to San Jose using some number of trucks—and the bacon has to get there within an hour. The butcher needs to determine what type of trucks, and how many of them he’ll need to get the bacon to San Jose. The demand for the bacon in San Jose is increasing with time. It’s hard having the best bacon in the state, but it’s a good problem to have.

The butcher has three trucks that suffice for the moment. But he knows he might be doubling the amount of bacon he’ll need to transport over the next couple of months. At this point, he can either:

Make the trucks go faster

Get more trucks

You’re probably seeing the point here. While the butcher might squeeze some extra horsepower out of the trucks by having them tuned up—or by convincing the drivers to break the speed limit—he’s not going to achieve the same efficiency gain that would come from simply purchasing more trucks. He has no choice but to accept the performance of each truck, and work from there.
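The butcher’s arithmetic can be written down directly. In this sketch, a “load” is whatever one untuned truck delivers per day, and the 10 percent gain from a tune-up is an invented figure used only to show why tuning alone does not cover a doubling in demand.

```python
from math import ceil


def trucks_needed(daily_loads, loads_per_truck):
    """Smallest whole number of trucks that can carry `daily_loads`."""
    if loads_per_truck <= 0:
        raise ValueError("loads_per_truck must be positive")
    return ceil(daily_loads / loads_per_truck)


current_demand = 3                   # three trucks suffice today
future_demand = 2 * current_demand   # demand doubles

print(trucks_needed(current_demand, 1.0))  # today's fleet
print(trucks_needed(future_demand, 1.0))   # accept current truck performance
print(trucks_needed(future_demand, 1.1))   # hypothetical 10% tune-up per truck
```

Under these assumptions the tune-up does not reduce the number of trucks required once demand doubles, so the plan still comes down to buying more trucks.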

The moral of this little story? When faced with the question of capacity, try to ignore those urges to make existing gear faster, and focus instead on the topic at hand: finding out what you need, and when.

One other note about performance tuning and capacity: there is no silver bullet formula to tell you when tuning is appropriate and when it’s not. It may be that simply buying more hardware is the correct thing to do, when weighed against engineering time spent on tuning the existing system. Striking this balance between optimization and capacity deployment is a challenge and will differ from environment to environment.

The Effects of Social Websites and Open APIs

As more and more websites adopt Web 2.0 characteristics, web operations are becoming increasingly important, especially capacity management. If your site contains content generated by your users, utilization and growth aren’t completely under the control of the site’s creators; a large portion of that control is in the hands of the user community, as shown by my example in the Preface concerning the London subway bombing. This can be scary for people accustomed to building sites with very predictable growth patterns, because it means capacity is hard to predict and needs to be on the radar of all those invested, both the business and the technology staff. The challenge for development and operations staff of a social website is to stay ahead of the growing usage by collecting enough data from that upward spiral to drive informed planning for the future.

Providing web services via open APIs introduces another ball of wax altogether, as your application’s data will be accessed by yet more applications, each with its own usage and growth patterns. It also means users have a convenient way to abuse the system, which puts more uncertainty into the capacity equation. API usage needs to be monitored to watch for emerging patterns, usage edge cases, and rogue application developers bent on crawling the entire database tree. Controls need to be in place to enforce the guidelines or Terms of Service (TOS), which should accompany any open API web service (more about that in Chapter 3).

In my first year of working at Flickr, we grew from 60 photo uploads per minute to 660. We expanded from consuming 200 gigabytes of disk space per day to 880, and we ballooned from serving 3,000 images a second to 8,000. And that was just in the first year.

Capacity planning can become very important, very quickly. But it’s not all that hard; all you need to do is pay a little attention to the right factors. The rest of the chapters in this book will show you how to do this. I’ll split up this process into segments:
