Chapter 1. Brave New Internet

The coming Internet of Things (IoT) distributes computational devices massively along almost every axis imaginable and connects them intimately to previously noncyber aspects of human life.1 Analysts predict that by 2020 humanity will have over 25 billion networked devices, embedded throughout our homes, clothing, factories, cities, vehicles, buildings, and bodies. If we build this new internet the way we built the current Internet of Computers (IoC), we are heading for trouble: humans cannot effectively reason about security when devices become too long-lived, too cheap, too tightly tied to physical life, too invisible, and too many.

This book explores the deeper issues and principles behind the risks to security, privacy, and society. What we do in the next few years is critical.

Worst-Case Scenarios: Cyber Love Canal

Many visionaries, researchers, and commercial actors herald the coming of the Internet of Things. Computers will no longer look like computers but rather like thermostats, household appliances, lightbulbs, clothing, and automobiles; these embedded systems will permeate our living environments and converse with one another and all other networked computers.

What’s more, these systems will intimately interact with the physical world: with our homes, schools, businesses, and bodies—in fact, that’s the point. In the visions put forth, the myriad embedded devices magically enhance our living environments, adjusting lights, temperature, music, medication, fuel flow, traffic lighting, and elevators.

It’s tempting to insert a utopian sketch of a “typical day in 2025” here, but there are plenty of sketches out there already. It’s also tempting to insert a dystopian sketch of what might happen if a malicious adversary mounts a devious attack, but that’s been done nicely too—see Reeves Wiedeman’s article “The day cars drove themselves into walls.” It’s a scenario that could happen based on what already has [111].

However, I will offer an alternative dystopian vision of this future world. Every object in the home—and every part of the home—is inhabited by essentially invisible computational boxes that can act on the physical environment. But rather than being helpful, these devices are evil, acting in bizarre, dangerous, or unexpected ways, either chaotically or coordinated in exactly the wrong way. We can’t simply turn these devices off because no one knows where the off switches are. What’s worse is that we don’t even know where the devices are!

This vision of dark magic might inspire us to look to horror novels or science fiction for metaphors. However, real life has given us a better metaphor: environmental contamination. We’ve seen buildings contaminated by lead paint and asbestos and rendered unusable by chemical spills at nearby dry cleaners, a research lab rendered uninhabitable by toxic mold, and Superfund sites and brownfields that can’t be built on without (often expensive) remediation. In all these cases, technology (usually chemical) intended to make life better somehow backfired and turned suburban utopias into wastelands.

What happened at the Pearl Harbor naval base is widely known. However, Americans over 50 might also remember Love Canal, a neighborhood of Niagara Falls, New York, that became synonymous with chemical contamination catastrophe. Vast amounts of chemical waste buried under land that later supported homes and schools led to massive health problems and the eventual evacuation and abandonment of most of the neighborhood.

What some might term a “cyber Pearl Harbor”—a coordinated, large-scale attack on our computational infrastructure—would indeed be a bad thing.2 However, we should also be worried about a “cyber Love Canal”—buildings and neighborhoods, not to mention segments of our cyberinfrastructure, rendered uninhabitable by widespread “infection” and loss of control of the IoT embedded therein. The way we build and deploy devices today won’t work at the scale of the envisioned IoT and will backfire, like so many hidden chemical dumps. Continuing down this path will similarly lead to “cyber brownfields.”

“Things don’t work” is the security idealist’s standard rant. A more honest observation is that, although flawed, things work well enough to keep it all going. For the most part, we know where the machines are—workstations and laptops in offices, servers in data centers. The operating system (OS) and application software are new enough to still be updated, and machines are usually expensive enough to justify users’ attention to maintenance and patching before too many compromises happen.3

The current IT infrastructure is compromisable and compromised, with occasional lost productivity and higher fraud losses amortized over a large population—yet life goes on, mostly. The fact that I am writing these words on networked machines while the web continues to work proves that.

However, in the IoT, the numbers, distribution, embeddedness, and invisibility of devices will change the game. Suppose we build the IoT the same way we built the current internet. When the inevitable input validation bug is discovered, there will be orders of magnitude more vulnerable machines. Will embedded machines be patchable? Will anyone think to maintain inexpensive parts of the physical infrastructure? Will machines and software last longer than the IoT startups that create them? Will anyone even remember where the machines are? Imagine a world in which we needed to update each door, each electrical outlet, and perhaps even each lightbulb on Patch Tuesday.

When the inevitable happens, what will a compromised machine in the IoT be able to do? It’s no longer just containing data; it’s controlling boiler temperatures, elevator movement, automobile speed, fish tank filters, and insulin pumps. Consider the effects of denial of service on our physical infrastructure. In winter where I live, temperatures of –15°F are common. How many burst pipes and damaged buildings—and maybe even deaths—would we have had if a virus shut down all the heating systems? The recent nonfiction book Five Days at Memorial by Sheri Fink chronicled the horrors of being trapped in a New Orleans hospital when Hurricane Katrina shut down basic infrastructure, including electricity, transport, and communication [31]. Could an infection in the IoT cause similar infrastructure loss?

Fail-stop is bad enough, but compromised machines in the IoT can do more than simply stop; they can behave arbitrarily. What havoc might happen when elevators, automobiles, and door locks start behaving unpredictably? A decade ago, a compromise at my university led to a large server being coopted to distribute illegal content—annoying, but relatively harmless. What could happen when schools, homes, apartment buildings, and shopping malls are full of invisible, forgotten, and compromised smart devices?

What’s Different?

“We already have an internet,” some readers may wonder, “and it works pretty well. Why the fuss about a new one?” This chapter addresses that question. What’s different about the IoT?

Kevin Ashton, credited with inventing the term “IoT,” sees the connection to humanity as the distinguishing factor [5]:

The fact that I was probably the first person to say “Internet of Things” doesn’t give me any right to control how others use the phrase. But what I meant, and still mean, is this: Today computers—and, therefore, the Internet—are almost wholly dependent on human beings for information. Nearly all of the roughly 50 petabytes (a petabyte is 1,024 terabytes) of data available on the Internet were first captured and created by human beings—by typing, pressing a record button, taking a digital picture or scanning a bar code. Conventional diagrams of the Internet include servers and routers and so on, but they leave out the most numerous and important routers of all: people.

Ahmed Banafa goes further, seeing the IoT as the next stage in the evolution of computing systems, one that connects to everything [6]:

The Internet of Things (IoT) represents a remarkable transformation of the way in which our world will soon interact. Much like the World Wide Web connected computers to networks, and the next evolution connected people to the Internet and other people, the IoT looks poised to interconnect devices, people, environments, virtual objects and machines in ways that only science fiction writers could have imagined.

This latter definition is the sense in which I use the term in this book. In the IoT, we are layering computation on top of everything—and then interconnecting it.

Because it thus scales up from the IoC in so many dimensions, the IoT becomes something new. Even in the IoC, internet pioneer Vint Cerf saw the newness created by scale [50, p. 29]:

…It’s difficult to envision what happens when extremely large numbers of people gain access to a technology, such as the Internet. It’s a bit like being the inventor of the automobile and imagining a few dozen of them, not knowing how 50 million or 100 million of them would affect the attitudes, customs, behavior and actions of the entire country…and the world.

However, the IoT will be many orders of magnitude larger than even the current internet and will interconnect far smaller devices. What’s more, the amount of data these devices generate will also be orders of magnitude greater; thanks to its embedded machines, a Boeing 737 flying from Los Angeles to Boston generates more bytes of data than the Library of Congress collects in one month.

Lifetimes

Another dimension of scale is time. The devices in the IoT will persist far longer than the current thinking allows for. Unreachable or forgotten devices will disrupt the “penetrate and patch” model governing the current internet, and devices may have baked-in cryptography persisting for decades beyond its security lifetime.

The devices may even outlive the companies and enterprises responsible for maintaining them. Consider some data points:

-

The film 2001: A Space Odyssey, whose creators in 1968 put much thought into imagining the world of 2001, is noted for its “curse”: businesses whose logos are featured prominently in the film ended up disappearing in the real world. Will our predictions today be any better? Who will be around in 30 years to issue software updates to the smart appliances I buy today?

-

In April 2016, Business Insider reported how Google Nest “is dropping support for a line of products—and will make customers’ existing devices completely useless” [86]. In the IoT, how will the often short lifetimes of IT systems mesh with the typically long lifetimes of physical ones?

The IoT in the Physical World

The IoT will intimately tie the cyberworld to physical reality, to a degree never before achieved, and all of these new dimensions will disrupt and subvert the way technologists, policy makers, and the general public reason about the IT infrastructure. The remainder of this chapter explores these connections and implications:

-

Why software requires continual maintenance, and what this means when we change from the IoC to the IoT.

-

The impact that the IoT, so much larger in scale, may have on the physical world.

-

The impact of the physical world on the IoT (the connection works both ways).

-

Worst-case scenarios of an IoT-enabled cyber Pearl Harbor (or cyber Love Canal).

Inevitable and Unfortunate Decay

When it comes to the physical world, engineering generally works—with software, not so much. A friend in grad school once quipped, “Software engineering is the field of the future, and it will always be the field of the future.” Although this was several decades ago, it is unfortunately still true.

When it comes to building physical things out of materials such as wood, steel, and concrete, humanity has figured out how to select the right materials and then assemble them into things like houses and bridges, with a fairly good estimate of how long these houses and bridges will hold up. It does not happen that every few weeks, an announcement goes out that all houses built with a certain kind of door suddenly need to have the doors replaced; it is not the general custom for people to avoid going into houses more than 20 years old because they are worried they will suddenly collapse or be filled with dangerous criminals, simply because the owners neglected to repaint them every “paint Tuesday.”

However, when it comes to things humans build in software, these scenarios—ridiculous for physical infrastructure—are standard practice. It is extremely hard to build software systems that, with high confidence, will last a few years (let alone decades) without the discovery of bugs and critical security issues. Reasons effective software engineering is so hard include:

-

The sheer number of “moving parts” (e.g., it takes at least a half dozen Boeing 747 airplanes to have as many physical parts as lines of code in a basic laptop OS).

-

The way these parts compose and interact (it is unlikely for a part in one airplane to reach out and subvert the functioning of a different part in a different airplane—but this happens all the time in software modules).

-

The economic forces that can push software products to market with insufficient testing.

-

The fact that, thanks to the internet, the attack surface (perimeter) on a piece of software may be exposed to all the world’s adversaries, all the time.

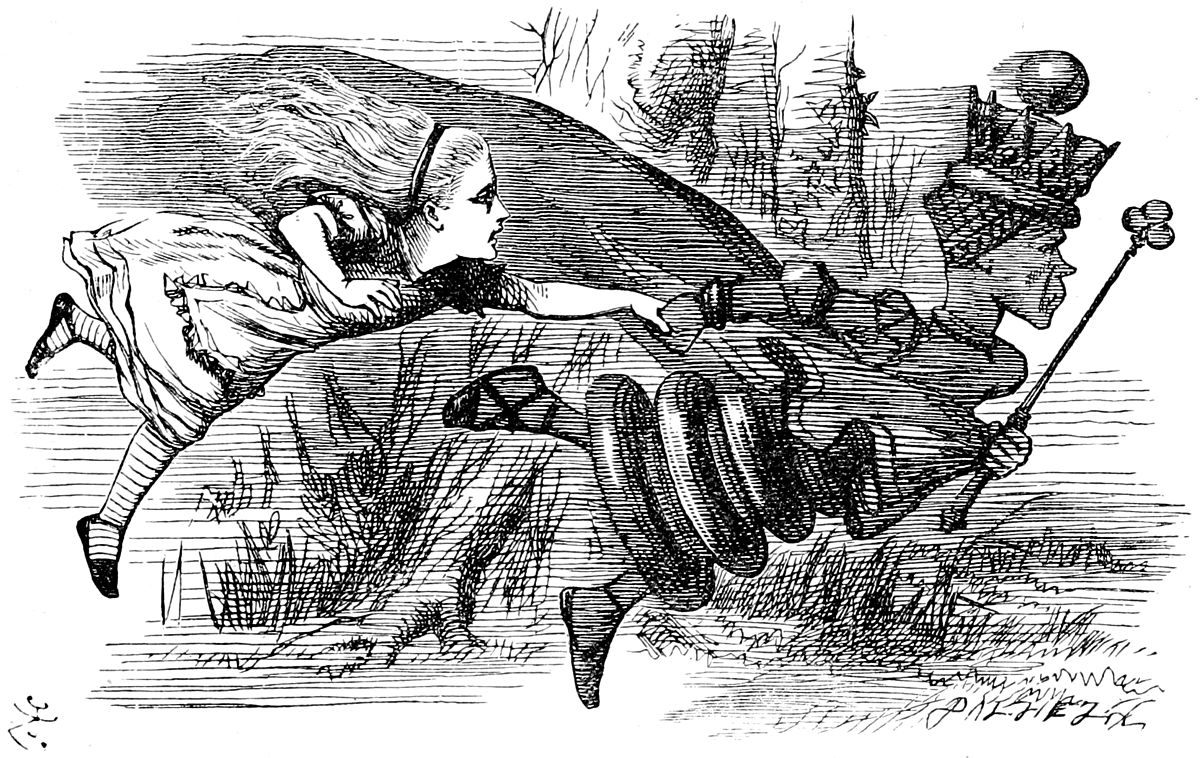

Nonetheless, this is the reality. Systems that do not receive regular patching are assumed to be compromised, leading to a Through the Looking-Glass world where users and administrators must keep running to stay in the same place (Figure 1-1). What’s even stranger about this, from the perspective of physical engineering, is that components usually do not suddenly break and need fixing. Rather, the components were usually broken all along; the defenders have only just learned that fact (and, one hopes, so have the adversaries, rather than long before).

Figure 1-1. The need for software updates means we must continually run just to stay in the same place. (John Tenniel illustration for Through the Looking-Glass, public domain.)

Indeed, on the day I was writing this, researchers from cyber security company Check Point announced that Facebook’s Chat service and Messenger application have flaws in how participants construct URLs for communication, and that an adversary can use these flaws to take over conversations [13]. Yesterday, the day before, and even back in 2008 when Chat was announced, this service was considered secure—but the zero-day vulnerabilities were always there.

Zero-Days and Forever-Days

The IoC has thus come to depend on the penetrate and patch paradigm. We keep running to stay in the same place, to reduce the risk of attack via zero-days—holes that adversaries know about but defenders do not. In just one random two-week period in 2016, the US Department of Homeland Security (DHS) Industrial Control Systems Cyber Emergency Response Team announced 11 critical zero-days:

-

In a wireless networking device used in “Commercial Facilities, Energy, Financial Services, and Transportation Systems” internationally

-

In an embedded computer used in “Chemical, Commercial Facilities, Critical Manufacturing, Emergency Services, Energy, Food and Agriculture, Government Facilities, Water and Wastewater Systems” and other sectors internationally

-

In building and automation systems from two vendors used in “Commercial Facilities” internationally

-

In power grid components from three vendors, used internationally

-

In other industrial control systems networking equipment from four vendors, used in “Chemical, Critical Manufacturing, Communications, Energy, Food and Agriculture, Healthcare and Public Health, Transportation Systems, Water and Wastewater Systems, and other sectors” internationally

Critical infrastructure components are in need of urgent update, just to remain as safe as the community thought they were when first deployed.

The widespread use of common, commodity software components in the IoT (and IoC) raises the specter of what my own lab has called zero-day blooms: like algae blooms, rapidly spreading exploitation of newly discovered holes might threaten a wide swath of infrastructure [80]. In just one month in 2016, Ubiquiti Networks announced that a hole in its energy-sector wireless equipment was being exploited in installations worldwide [46], and security firm Trustwave announced that an entity was selling a zero-day vulnerability allegedly “affecting all Windows OS versions”—yes, all of them [20].

Consider the implications if, in 10 years, a similar zero-day surfaces in the commodity OS used by a larger part of the IoT. What will be caught in the zero-day bloom? If the IoT requires “penetrate and patch” to remain secure, how will that mesh with the physical world to which the IoT is tied? Indeed, in the Ubiquiti incident just mentioned, the vulnerability had been discovered and a patch released nearly a year earlier—yet the hole persisted. For another example, content security software company Trend Micro wrote, in December 2015, of 6.1 million smart devices at risk due to vulnerabilities for which patches had existed since 2012 [119]. In January 2016, the Wall Street Journal reported on 10 million home routers at risk because they used unpatched components from 2002 [106]. Indeed, the difficulty—or impossibility—of patching in the IoT has caused some analysts to suggest a new term: forever-days [45], zero-days that are permanent.
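To make the forever-day worry concrete, here is a deliberately simple back-of-the-envelope model in Python. It assumes, hypothetically, that a fixed fraction u of N deployed devices can never be patched (forgotten, unreachable, or orphaned by their vendor), and that each remaining device independently receives the patch with probability p in any given week. Under those assumptions the expected exposed population after t weeks is the unpatchable floor plus a geometrically shrinking remainder, E[V(t)] = N·u + N·(1 − u)·(1 − p)^t. The function name and every parameter value are illustrative, not drawn from the incidents above.

```python
def expected_vulnerable(n_devices: int, unpatchable_frac: float,
                        weekly_patch_prob: float, weeks: int) -> float:
    """Expected number of devices still exposed `weeks` after a patch ships.

    Hypothetical model: a fraction `unpatchable_frac` of devices can never be
    patched; every other device independently installs the patch with
    probability `weekly_patch_prob` in each week.
    """
    if not 0.0 <= unpatchable_frac <= 1.0:
        raise ValueError("unpatchable_frac must lie in [0, 1]")
    if not 0.0 <= weekly_patch_prob <= 1.0:
        raise ValueError("weekly_patch_prob must lie in [0, 1]")
    if weeks < 0:
        raise ValueError("weeks must be non-negative")

    # The forever-day floor: these devices stay exposed no matter how long we wait.
    forever_exposed = n_devices * unpatchable_frac
    patchable = n_devices * (1.0 - unpatchable_frac)
    # A patchable device is still unpatched after t weeks with probability (1 - p)**t.
    still_unpatched = patchable * (1.0 - weekly_patch_prob) ** weeks
    return forever_exposed + still_unpatched


if __name__ == "__main__":
    # Hypothetical numbers, chosen only to illustrate the shape of the curve.
    for week in (0, 4, 12, 52, 104):
        exposed = expected_vulnerable(10_000_000, 0.3, 0.1, week)
        print(f"week {week:>3}: ~{exposed:,.0f} devices still exposed")
```

With these made-up inputs (10 million devices, 30 percent unpatchable, a 10 percent weekly patch rate for the rest), roughly 3 million devices remain exposed a year after the fix ships: the patchable remainder shrinks toward zero, but the unpatchable floor never does, which is exactly what distinguishes a forever-day from a zero-day.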

The Fix is In?

Because of this inevitable decay, software systems require continual maintenance.

In the IoC

Even in the IoC, where computers look like computers and usually have human attendants, maintaining software is an ongoing challenge. Consider the recent case of Microsoft trying to upgrade machines to Windows 10. The Guardian catalogued some of the complaints [42]:

Scores of users have posted…to complain about Windows 10 automatically installing, seemingly without asking, and often in the middle of doing something important.

[A] Reddit user…posted a warning…after it…“bricked” his father’s computer….

“In some cases the upgrade went OK and the user is just really confused. In others, Windows 10 is asking for a login password the user set years ago and hasn’t used since, that was fun. In still another it’s screwed up access to their shared folders.”

A complicating factor here is that although Microsoft tried to get the user’s permission before starting an upgrade, there was confusion about the interface. As the BBC put it [61]:

Clicking the cross in the top-right hand corner of the pop-up box now agrees to a scheduled upgrade rather than rejecting it. This has caused confusion as clicking the cross typically closes a pop-up notification.

Even here in the IoC, maintenance has not meshed well with how IT is tied to the real world. Engadget and others noted a Windows 10 upgrade dialog box popping up on the weather map during a television news broadcast [101]. The Register wrote that an anti-poaching organization in the Central African Republic had its (donated) laptops begin automatic upgrades, overwhelming its (expensive) satellite network link and rendering the machines inoperable [102].

In the IoC, effective maintenance of software at granularities other than the operating system has also been a problem. The BIOS and other low-level firmware that runs before the OS boots provide some examples. Softpedia writes that ASUS motherboards automatically check for and then install updates, but do so without checking the authenticity of the upgrade [18]. Researchers at Duo found problems with low-level software updating from many other vendors as well [57]. (Chapter 4 will consider this design pattern of “failure to authenticate” further.) Managing updates for components with long lifetimes and many generations can also be problematic, as Oracle learned when the Federal Trade Commission (FTC) sanctioned it for its Java update policy, which fixed recent versions but left older, vulnerable versions exposed [15].
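The step missing in the ASUS case is easy to state in code. The following Python sketch, using only the standard library's hmac and hashlib modules, has the device recompute a tag over the offered update image and refuse anything whose tag does not match. The function names are hypothetical, and the shared secret key is a simplifying assumption: real firmware-update schemes typically use public-key signatures, so that a device holds only a verification key rather than a secret that could also be used to forge updates. The version check at the end is an extra illustrative safeguard against downgrades, not something the sources above describe.

```python
import hashlib
import hmac


def make_update_tag(key: bytes, version: int, image: bytes) -> bytes:
    """Vendor side (hypothetical): HMAC-SHA256 tag binding the version to the image."""
    message = version.to_bytes(8, "big") + image
    return hmac.new(key, message, hashlib.sha256).digest()


def should_install(key: bytes, installed_version: int, offered_version: int,
                   image: bytes, tag: bytes) -> bool:
    """Device side (hypothetical): install only if the update is authentic and newer."""
    expected = make_update_tag(key, offered_version, image)
    # Constant-time comparison, so timing does not leak how many bytes matched.
    if not hmac.compare_digest(expected, tag):
        return False  # altered, corrupted, or unauthenticated image: refuse it
    # Illustrative extra check: refuse an authentic but older image (a downgrade).
    return offered_version > installed_version


if __name__ == "__main__":
    key = b"hypothetical-shared-key-32-bytes"
    image = b"example firmware image"
    tag = make_update_tag(key, 7, image)
    print(should_install(key, 6, 7, image, tag))         # True: authentic and newer
    print(should_install(key, 6, 7, image + b"x", tag))  # False: image was altered
```

Because the version number is covered by the tag, an attacker cannot relabel an old image as new; because the tag is checked before anything is written, a tampered download never reaches the flash.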

Into the IoT

The challenges that made these software maintenance issues in the IoC problematic will be even stronger in the IoT. Even now, in the early stages of the IoT, maintenance problems emerge:

-

In 2014, Toyota required Prius owners to bring cars to dealers to fix software bugs that could “cause vehicles to halt while being driven” [32].

-

In 2015, Jaguar Land Rover required customers to bring cars to dealers to fix software bugs pertaining to door locks [121].

-

In 2015, BMW patched a security flaw via automatic download [76], as did Tesla [108].

-

In 2015, a software maintenance problem on an Airbus A400M led to an even worse scenario: a crash killing four [38].

-

After the much-publicized Wired demonstration of remote takeover of a Jeep [49], Fiat Chrysler pushed out a software update by physically mailing a USB drive to owners [48] and asking them to install it—a mechanism with questionable security and effectiveness. (On the other hand, Fiat Chrysler also “applied network-level security measures on the Sprint cellular network that communicates with its vehicles” [58].)

-

In June 2016, patching via automatic download did not go so well for Lexus owners, who found the update rendered their navigation systems and radios inoperable [44].

-

In February 2016, a Merge Hemo (a computerized “documentation tool” for cardiac catheterization) crashed during a heart operation because automatic antivirus scanning had started [19].

So far, software maintenance in the IoT has had a mixed record.

As noted earlier, another example of the mismatch between IT and physical reality is relative lifetimes. Things in one’s house (or in bridges or cars) may last longer than the supported lifetime of a given IoT component. For one example, Trend Micro recently noted [39]:

Our researchers have found a hole in the defenses of the systems on chips (SoCs) produced by Qualcomm Snapdragon that, if exploited, allows root access…. So far, Trend Micro security experts have found this vulnerability on the Nexus 5, 6, 6P and the Samsung Galaxy Note Edge. Considering the fact that these devices no longer receive security updates, this is concerning news for anyone who owns one of these phones. However, smartphones aren’t the only problem here. Snapdragon also sells their SoCs to venders producing devices considered part of the IoT, meaning these gadgets are just as at risk.

Referring to this general problem of IoT patching, FTC Chairwoman Edith Ramirez lamented how “it may be difficult to update the software or apply a patch—or even to get news of a fix to consumers” [73]. In one extreme example, in November 2015, Orly Airport (near Paris) was brought “to a standstill” because of operations-critical software that was still running on Windows 3.1 [74]. This OS is so “prehistoric,” according to Longeray, that patches are no longer even available; Alice cannot run fast enough.

The IoT’s Impact on the Physical World

One principal way the IoT differs from the IoC is the intimate connection of the IoT to physical reality. This connection amplifies the consequences of IT—expected behavior, unexpected behavior, malicious action.

For an example of troubling consequences for teachers (like me), Amazon sells some interesting smart watches [55]. As the Independent reported in March 2016:

“This watch is specifically designed for cheating in exams with a special programmed software. It is perfect for covertly viewing exam notes directly on your wrist, by storing text and pictures. It has an emergency button, so when you press it the watch’s screen display changes from text to a regular clock, and blocks all other buttons,” the seller wrote.

However, there are already many examples of deeper consequence.

Houses

Perhaps the most immediately tangible parts of “physical reality” are the homes and apartments we live in. The marketplace is already filled with smart televisions, thermostats, light switches, door locks, garage door openers, refrigerators, washing machines, dryers (and probably many other things I’ve missed).

One way the IoT has been negatively impacting this intimate physical reality is simply by not working. Adam Clark Estes (in the aptly titled “Why is my smart home so fucking dumb?”) writes [30]:

I unlocked my phone. I found the right home screen. I opened the Wink app. I navigated to the Lights section. I toggled over to the sets of light bulbs that I’d painstakingly grouped and labeled. I tapped “Living Room”—this was it—and the icon went from bright to dark. (Okay, so that was like six taps.)

Nothing happened.

I tapped “Living Room.” The icon—not the lights—went from dark to bright. I tapped “Living Room,” and the icon went from bright to dark. The lights seemed brighter than ever.

“How many gadget bloggers does it take to turn off a light?” said the friend, smirking. “I thought this was supposed to be a smart home.”

I threw my phone at him, got up, walked ten feet to the switch. One tap, and the lights were off.

Terence Eden similarly wrote of “The absolute horror of WiFi light switches” [28].

Adding interconnected smartness to a living space also opens up the potential of malicious manipulation of that space via manipulation of the IT. For example, Matthew Garrett wrote of some penetration testing he did while staying at a hotel that had “decided that light switches are unfashionable and replaced them with a series of Android tablets” [40]. With straightforward Ethernet sniffing, he discovered he could use his own machine to turn on and off lights, turn on and off the television, open and shut curtains—in any room in the hotel.

Numerous competing vendors already offer hubs to serve as the central connectivity point for the smart home; others, such as Samsung and SmartThings, are trying to turn one of the smart appliances into that hub (e.g., [24]). These hubs can also provide a vector for external adversaries to enter the domicile. In 2015, researchers from Veracode found many vulnerabilities in such commercial offerings [82]. In 2016, researchers from Vectra Threat Lab found ways to use web-connected home cameras to penetrate the home network [122]. Many years ago, my own lab found such holes with set-top boxes our university deployed in dorm rooms.

In the IoC, security specialists used to have to explain that the reason it’s important to lock one’s computer even if one doesn’t lock one’s front door is that one’s front door only opens into the neighborhood, while the computer opens to the entire world. Thanks to the IoT, the front door now opens to the entire world as well.

Cars

For many people, after the home, one’s car is one’s castle: an environment that’s personal and part of everyday life. The penetration of IT and networks into the automobile thus provides another vector for the IoT to disrupt physical reality—unpleasantly.

Random failures have caused trouble already. In 2003, Thailand’s finance minister was trapped in his BMW when its computer failed [4]. In 2011, a young man died in France when he was unable to exit a locked car, because (by design) the car could not be opened from the inside if it had been locked from the outside [1]. In June 2015, a man and his dog died in Texas when he was unable to open the door or window in his Corvette, due to a battery failure [92]: “Police believe [he] made a valiant effort to escape, and possibly died while looking through the car’s manual.”

Malfeasance can also happen. In February 2015, surveillance video showed a thief opening a locked Audi and stealing a $15,000 bicycle from inside, apparently just by touching the car [43]. In May 2015, researchers in Germany analyzed BMW’s ConnectedDrive wireless transmissions and found vulnerabilities that would let an external adversary open a locked car [99]. In August 2015, Threatpost wrote of researcher Samy Kamkar’s tools enabling adversarial subversion of various remote car unlocking features [33]. One wonders: could there be a connection?

These incidents are probably overshadowed by the larger story of researchers breaking into the internal networking of cars. Here are some examples:

-

In 2010, researchers from the University of South Carolina and Rutgers identified security vulnerabilities in how tire pressure monitors communicate wirelessly with modern cars [90].

-

In 2010 and 2011, researchers from UC San Diego and the University of Washington identified—and demonstrated on real cars under controlled conditions on closed roads—many ways adversaries, both remote and local, can cause havoc [65, 14].

-

In February 2015, a teenager built a wireless device that could “remotely control…headlights, window wipers, and the horn” and “unlock the car and engage the vehicle’s remote start feature” [74]. Interestingly, these features were deemed “non-safety related.”

Nonetheless, it wasn’t until July 2015 [49], when two researchers remotely took control of Wired reporter Andy Greenberg’s Jeep—live, on the highway—that the potential of adversarial exploitation of the IoT’s penetration into automobiles caught the public’s attention. In the New York Times opinion pages the next month, Zeynep Tufekci quipped [105]:

In announcing the software fix, the company said that no defect had been found. If two guys sitting on their couch turning off a speeding car’s engine from miles away doesn’t qualify, I’m not sure what counts as a defect in Chrysler’s world.

Subsequent work has penetrated cars via digital radio [107], text messaging [41], and WiFi [123]. Technicians at the National Highway Traffic Safety Administration (NHTSA) demonstrated “ways of tampering remotely with door locks, seat-belt tensioners, instrument panels, brakes, steering mechanisms and engines—all while the test cars were being driven” [26]. Richard Doherty of The Envisioneering Group noted “there’s nothing secure and now we’re putting chips into things that go 100 miles per hour and in Germany faster” [11]. Craig Smith, author of The Car Hacker’s Handbook (No Starch Press) [95], pointed out that an infected car could in turn compromise the IoT in its owner’s smart home.

Traffic

Connecting IT to cars can also change how cars, in the aggregate, affect the physical world.

For one example, consider what happened to previously quiet neighborhoods in Los Angeles in late 2014 [89]. Waze, an app using crowdsourced traffic data to help its users find faster routes through town, started routing massive amounts of traffic through these neighborhoods. According to locals, the small residential streets paralleling the busy Interstate 405 freeway became “filled each weekday morning with a parade of exhaust-belching, driveway-blocking, bumper-to-bumper cars.” The app caused controversy again in March 2016, when Israeli soldiers using Waze inadvertently entered Palestinian territory, leading to a firefight with fatalities [120].

Other new car technologies may have unclear impacts. Will self-driving cars reduce traffic and accidents, as Peter Wayner [110] and Baidu [62] claim? Or will, as AP reporter Joan Lowy notes [75], the decreased cost and increased ease of driving cause traffic to skyrocket? (Summer 2016’s news of a fatality from a Tesla on autopilot adds more wrinkles to the discussion [71].) It might seem that switching from gasoline-powered cars to electric cars would decrease pollution, but researchers from Dartmouth and elsewhere have shown the picture is much more complicated and depends on the local power infrastructure [54]. Similarly, switching to more efficient self-driving cars might reduce the impact on the environment by reducing solo driving and the time spent searching for parking, but researchers from the University of Leeds, the University of Washington, and Oak Ridge National Laboratory have found that this picture, too, is more complicated [88].

Airplanes

Airplanes are another high-impact transportation medium affected by the IoT.

The planes themselves are distributed cyber-physical systems: computers and networks and sensors and actuators packaged and distributed in a thin metal frame flying through the air and carrying people. Back in the 1980s at Carnegie Mellon University’s Software Engineering Institute, I was told about a fighter jet that had a bug in its software—and it was easier to fix the jet to match the software than the other way around. (Unfortunately, I have never been able to find independent documentation of this problem.) In 2011, the Christian Science Monitor reported that Iranians had captured a US drone by remotely compromising its navigation system and convincing it to land in Iran [85], bringing to mind World War II stories of UK attacks on the more primitive navigation systems used by German bombers [56].

In 2007, Phil Koopman [64], an associate professor in the Department of Electrical and Computer Engineering at Carnegie Mellon University, lamented how, as airplanes’ computer systems reached the passenger seats, “Passenger laptops are 3 Firewalls away from flight controls!” In early 2015, security researcher Chris Roberts made headlines when the FBI detained him for allegedly breaking into and manipulating an airplane’s controls from a passenger seat [118]. (Some doubt exists as to how successful he really was.) According to reports, to do this, he first had to physically break into the electronic box under his seat to access the network ports. (Chapter 3 will consider some of the controversy surrounding the concept of fly-by-wire.) As Figure 1-2 shows, later in 2015, I took a flight on a commercial 747 that made this physical break-in step unnecessary. (No, I did not attempt an attack.)

Figure 1-2. On this commercial flight, I would not need to break into a physical box to connect to the Ethernet. (Photo by author.)

The penetration of IT into the infrastructure on which aviation depends can also have consequences. In April 2015, American Airlines flights were delayed when “pilots’ iPads—which the airline uses to distribute flight plans and other information to the crew—abruptly crashed” (apparently due to a faulty app) [81]. In June 2015, United Airlines grounded all of its US flights due to an unspecified problem with “dispatching information” [117]. In June 2015, LOT Polish Airlines needed to ground flights due to an unspecified cyberattack on its ground systems [22]. In August 2015, problems with the air traffic control system in the eastern US significantly disrupted flights to and from Washington and New York [98].

The IoT reaches far.

Infrastructure

The penetration of IT into other aspects of the surrounding infrastructure can also have a negative impact.

For example, consider commuting. In 2015, a commuter train in Boston somehow left its station with passengers but with no driver [91]. Also in 2015, Dutch newspapers reported a number of accidents (including fatalities) in which, due to some system bug, the lights at road and train crossings showed “green” even though a train was present [37]. In 2015, the New York Times reported on the fragile state of IoT-connected traffic lights [84]:

Mr. Cerrudo, an Argentine security researcher at IOActive Labs, an Internet security company, found he could turn red lights green and green lights red. He could have gridlocked the whole town with the touch of a few keys, or turned a busy thoroughfare into a fast-paced highway. He could have paralyzed emergency responders, or shut down all roads to the Capitol.

For other examples, consider industrial control systems. In 2000, a disgruntled technician in Australia hacked the control system of a sewage treatment facility to discharge raw sewage [97]. In 1999, a complex system failure (including an unexplained computer outage) caused a gasoline pipeline in Washington State to discharge gasoline, leading to a deadly fire [94]. In 2013, after finding many industrial control systems exposed on the internet, researchers at Trend Micro built honeypots (basically traps: systems controlled by the researchers but made to appear to be exposed, internet-facing industrial control systems) and recorded over 33,000 automated attacks [113, 112]. In January 2015, Rapid7 surveyed the internet and found over 5,000 exposed automated tank gauges at gas stations in the US [52]. In February 2015, Trend Micro found an online gasoline pump that had been renamed “WE_ARE_LEGION,” presumably as a nod to Anonymous [3]. Trend Micro also set up gasoline-specific honeypots and recorded more attacks [114].

In 2014, the New York Times reported on dozens of cases where factory robots had killed workers, cataloging many cases in gruesome detail [77]. (I would have reproduced them here, but the licensing fees were too expensive.) In 2015, robots killed again, at a Volkswagen factory in Germany [8].

Medicine

The IoT also penetrates medical infrastructure.

Security risks from software used in implantable medical devices—such as insulin pumps and pacemaker-style cardiac devices—have been in the public eye for a while, thanks in part to the work of researchers such as Kevin Fu (e.g., [10]). Indeed, fear of such an attack led Vice President Dick Cheney to have wireless access to his heart device disabled [63].

However, the devices outside bodies but inside hospitals are also at risk. In 2013, a team of penetration specialists including Billy Rios conducted an engagement at the Mayo Clinic [87]:

For a full week, the group spent their days looking for backdoors into magnetic resonance imaging scanners, ultrasound equipment, ventilators, electroconvulsive therapy machines, and dozens of other contraptions.

“Every day, it was like every device on the menu got crushed.”

Many similar engagements (e.g., [116, 36]) have had similar findings. In June 2015, researchers from TrapX found that in hospitals, the computers disguised as medical devices, “all but invisible to security monitoring systems,” were rife with malware, some generic but some apparently designed to exfiltrate sensitive medical data [83]. In 2016, researchers found numerous software holes in the Pyxis drug-dispensing cabinets used in hospitals [124]—interesting in part because malicious misuse of the Pyxis interface had already been used to obtain drugs used to murder patients [47].

(Chapter 8 will revisit challenges in using public policy to address these problems.)

The Physical World’s Impact on the IoT

Distributing IT throughout physical reality not only permits the IoT to impact the physical world—it also permits the physical world to impact the IoT.

Missing Things

One avenue to consider is whether or not physical environments provide the connectivity an IoT system expects (often implicitly). Here are a few examples:

-

Here at Dartmouth, we had an interesting incident where a seminar speaker drove—with his family—the 125 miles from Boston to Hanover in a rental car that required “phoning home” before the engine would start. When the speaker stopped for a picnic at a scenic location in rural New Hampshire, he was not able to start the car again, because our part of the country is riddled with “dead zones” with no cellular coverage. The only way to solve the problem was to have the car towed to someplace with cell coverage.

-

In the spring of 2015, the National Institute of Standards and Technology (NIST) released a report raising concerns about IoT applications that “frequently will depend on precision timing in computers and networks, which were designed to operate optimally without it.” The report additionally noted that “many IoT systems will require precision synchronization across networks” [9].

-

In May 2015, the Fairfax, Virginia, public schools had their standardized testing disrupted for 90 minutes due to a problem in internet connectivity [29]. “Some students had to wait in the test environment after they completed their tests until connectivity was restored and they were able to submit the tests.”

-

At a World Cup ski race in Italy in December 2015, a drone crashed and nearly hit a skier—apparently because interference obstructed the radio channel over which it was controlled [104].

-

In May 2016, Terminal 7 at Kennedy International Airport experienced “travel chaos” because of a network outage; check-in lines stretched to over 1,500 people, and counter personnel needed to issue boarding passes by hand [100].

Large Attack Surface

Another avenue to consider is the degree to which the physical exposure of the IoT provides avenues for adversaries to manipulate the IoT by manipulating remote computation nodes. One basic approach is injecting fake data at sensors:

-

The current electrical grid (and the emerging smart grid) uses distributed sensing of electrical state to balance generation, transmission, and consumption, and to keep things from melting down and blowing up. An area of ongoing concern is whether an adversary can cause the grid to do bad things simply by forging some of this data. At the moment, this issue is in churn: researchers seem to alternate between discovering successful attack strategies and then discovering countermeasures, in part using the fact that the set of all measurements must be consistent with the laws of physics as a “secret weapon” to recognize some kinds of forgery—if a sensor reports something that is not physically possible, then we might conclude that it is lying. (See NIST’s 2012 Cybersecurity in Cyber-Physical Systems Workshop for a snapshot of this work; Chapter 3 will discuss the smart grid further.)

-

When (as discussed earlier) residents of previously quiet Los Angeles neighborhoods were peeved by Waze routing commuter traffic there, they tried to sabotage the app by reporting fake accidents and such; unfortunately for them, massive crowdsourcing enabled Waze to filter out these reports as spurious. (Researchers are making progress on both attacks and defenses here [109].)

-

Some companies are starting to offer insurance discounts to employees who exercise regularly, as documented by wearable Fitbit devices. In response, some enterprising engineers at one of these companies have come up with techniques to forge this documentation [59].
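The physics-based “secret weapon” mentioned in the smart grid example above can be illustrated with a deliberately simplified sketch. It assumes, hypothetically, that each bus reports a signed current measurement for every line touching it. Kirchhoff’s current law says those currents should sum to (nearly) zero, so a large residual means at least one reading cannot be true. The names `BusReading`, `kcl_residual`, and `suspicious_buses`, and the 1-ampere tolerance, are illustrative assumptions rather than part of any real grid system; production state estimators reason over many measurements at once.

```python
# Simplified sketch: flag grid buses whose reported currents violate
# Kirchhoff's current law. All names and the tolerance are hypothetical.
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class BusReading:
    bus: str
    # Signed current per line touching the bus, in amperes:
    # positive = flowing into the bus, negative = flowing out.
    line_currents: dict[str, float]


def kcl_residual(reading: BusReading) -> float:
    """Net current into the bus; physics says this should be close to zero."""
    return sum(reading.line_currents.values())


def suspicious_buses(readings: list[BusReading], tolerance_amps: float = 1.0) -> list[str]:
    """Return the buses whose readings cannot all be true at the same time."""
    return [r.bus for r in readings if abs(kcl_residual(r)) > tolerance_amps]


if __name__ == "__main__":
    honest = BusReading("bus-A", {"line-1": 120.0, "line-2": -70.0, "line-3": -50.0})
    forged = BusReading("bus-B", {"line-4": 200.0, "line-5": -60.0, "line-6": -40.0})
    print(suspicious_buses([honest, forged]))  # ['bus-B']
```

Note what the check cannot do: an adversary who forges the readings at a bus consistently (say, inflating one inflow and one outflow by the same amount) leaves the residual at zero and passes. That gap is one reason attack and defense research in this area keeps leapfrogging.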

The basic surroundings of IT devices may provide surprising attack vectors. In 2015, researchers demonstrated Portable Instrument for Trace Acquisition (PITA) devices that could spy on a nearby computer via the electromagnetic radiation it emits and that can also fit inside a piece of pita bread [60]. More recently, researchers have demonstrated how adversaries over 100 meters away can eavesdrop on and alter communications between a computer and a wireless keyboard or mouse connected to it, thanks to MouseJack vulnerabilities.

Jumping Across Boundaries

In addition to the physical world influencing the IT infrastructure in surprising ways, one sometimes sees one segment of IT infrastructure influencing another segment—surprising because these segments were previously thought of as independent. The coming IoT is manifesting more of this:

-

For some kinds of electric vehicles, the existing power infrastructure in many local neighborhoods in the US cannot support more than one vehicle charging at a time [51]—introducing another argument for a smart grid that can coordinate between vehicles.

-

A regional electric utility considering deploying wireless smart meters analyzed the potential impact if a hacker penetrated these meters and decided the benefits outweighed the risks (this was relayed to me in personal communication). However, the analysis considered only the risk to the power grid IT and neglected to consider that a hacker inside a meter might redirect its software-defined radio (SDR) to subvert other infrastructures, such as the cellular network.

-

The 2013 compromise of Target credit card data stemmed from credential theft from an HVAC vendor [68]—leading one to wonder why an HVAC vendor needs to connect to the same networks that house the credit card crown jewels.

-

January 2014 brought reports of infected smart refrigerators sending over 750,000 malware-laden emails [2].

-

In January 2015, Krebs reported, “The online attack service launched late last year by the same criminals who knocked Sony and Microsoft’s gaming networks offline over the holidays is powered mostly by thousands of hacked home Internet routers” [66].

-

In May 2015, ITworld reported how employee web browsing and email use was providing a vector for malware to infect point-of-sale (POS) terminals [25].

-

In December 2015, the International Business Times hypothesized that a recent internet-wide distributed denial of service (DDoS) attack may have been caused by a “zombie army botnet unwittingly installed on hundreds of millions of smartphones through an as yet unidentified app” [21].

-

In June 2016, Softpedia reported that a botnet hiding in CCTV cameras was attacking web servers [17].

-

September 2016 brought news of an army of infected IoT devices launching DDoS attacks on Brian Krebs’s security blog [67], and an even larger army launching a DDoS attack on French servers [53].

In some instances, the physical distribution of IT enables new kinds of functionality. Google’s Street View already requires sending out cars instrumented for video; ongoing work seeks to extend that instrumentation to things such as air quality [115]. On a larger scale, Rhett Butler at the Hawaii Institute of Geophysics and Planetology suggests using the backbone of the internet itself—the “nearly 1 million kilometers of submarine fiber optic networks…responsible for over 97 percent of international data transfer”—to sense planetary events such as earthquakes [79].

Worst-Case Scenarios: Cyber Pearl Harbor

Pearl Harbor, as most US citizens were taught in school, was the site of a surprise Japanese attack on the US Navy, which catapulted the US into World War II. In the parlance of contemporary media, the term “Pearl Harbor” has come to denote an infrastructure left completely undefended, along with the idea that only a massive attack will make society take that exposure seriously.

Our society’s current information infrastructure is likely full of interfaces with exploitable holes. Pundits often discuss the potential of a “cyber Pearl Harbor”—sometimes in caution, referring to the devastation that could happen if an adversary systematically exploited the holes in exactly the wrong way, but sometimes in frustration that only such a large-scale disaster would create the social will to solve these security problems.

Targeted Malicious Attacks in the IoT

As Figure 1-3 shows, researchers at SRI are already worrying about what might transpire if terrorists combined traditional kinetic methods with IoT attacks. Speculation about what might happen has even more credibility when one considers what has already happened.

Figure 1-3. Researchers at SRI speculate on the potential of augmenting traditional kinetic terrorism with IoT attacks. (Illustration by Ulf Lindqvist of SRI International, used with permission.)

The IoT has already seen vandalism with consequences. Ransomware has already been discovered in hospital computers [35] and smart TVs [16]. Analysts predict ransomware will come to medical devices themselves [34]. In spring 2016, a penetration-testing colleague predicted it would come to all smart home appliances; in summer 2016, hackers at DefCon demonstrated a proof-of-concept for smart thermostats. On a larger scale, in early 2008, The Register reported [72]:

A Polish teenager allegedly turned the tram system in the city of Lodz into his own personal train set, triggering chaos and derailing four vehicles in the process. Twelve people were injured in one of the incidents. The 14-year-old modified a TV remote control so that it could be used to change track points.

In 2014, the German government reported [5]:

A blast furnace at a German steel mill suffered “massive damage” following a cyber attack on the plant’s network…. [A]ttackers used booby-trapped emails to steal logins that gave them access to the mill’s control systems.

On a related note, a penetration-testing colleague of mine has shown me screenshots of steel mill control GUIs exposed on the open internet via VNC, noting that the GUI indicated that something was at “1,215 degrees C” and speculating on what might happen in the facility if one randomly played with the control buttons.

The IoT has also seen apparent nation-state level attacks. Perhaps the best-known of these was 2010’s Stuxnet, malware that used a number of zero-day techniques (and even jumped across air gaps via USB thumb drives) to seek out systems connected to particular Siemens programmable logic controllers (PLCs) wired to centrifuges at Iran’s uranium enrichment facilities (e.g., [69, 70]). Widely believed to have been developed and deployed by the US National Security Agency (NSA), perhaps in collaboration with Israel, Stuxnet was designed to cause enough centrifuge failures to slow down Iran’s alleged weapons program—but not enough to alert Iran that sabotage might be occurring. In June 2016, a “Stuxnet copycat,” also appearing to target specific installations of particular Siemens industrial control systems, was discovered, although its full picture remains unknown [23].

December 2015 brought another high-profile incident: a nation-state level actor, widely believed to be Russia, used a variety of cyber attacks to bring down parts of the power grid in Ukraine (e.g., [27]). Although no one particular technique here was groundbreaking, the overall coordination and scope were impressive, as was the fact that initial steps of the attack had occurred at least nine months earlier.

Where to Go Next

The IoT will continue to grow exponentially and evolve in remarkable ways. As it does, we must acknowledge certain “fundamental truths,” prepare for them, and, where possible, act to avoid their worst consequences.

First, although some vendors will try to push top-down ecosystems, the IoT will probably grow organically, a global mashup of heterogeneous components with no top-down set of principles determining its emergent behavior. This lack of control might help segment security problems at a macro scale but will be a disturbing reality for any entity that would prefer to centrally control the IoT as a large, intelligent, interconnected network. In particular, we should expect abandoned or otherwise legacy segments of today’s IoT to have unanticipated interactions with and impact on the internet of tomorrow, like buried drums of highly toxic cyberwaste.

The creators of the IoT are only human and tend to replicate components at every opportunity. Industry segments are rooted in system designers’ tendency to apply their favorite tools across the problem space. Common hardware and firmware libraries will show up on the IoT in surprising places; we can expect to see smart snowboards, thermostats, lightbulbs, and scientific instruments using the same connected microcontrollers and firmware. This hidden homogeneity can be bad, because just about anything having a particular “genetic” vulnerability might be compromised. However, a systematic homogeneity might also be beneficial if well-designed and inherently safe subsystems—those having the right “IoT DNA”—are widely adopted.

Consumers care little about testing regimes, product recalls, and other measures enacted in their best interest. IoT watchdog groups might start testing for compliance against a set of IoT safety standards, and governments might impose IoT safety regulations and dictate recalls, but we can expect consumers to purchase and deploy substandard devices. The IoT industry could introduce safety-assuring protocols—for instance, applying blockchains for IoT messaging. However, providers and customers will surely look elsewhere if such measures raise costs without adding significant and obvious value.

Finally, consumers’ hunger for the latest and greatest might save their IoT, but enterprises’ conservatism could break theirs. We might be able to exploit consumers’ inherent desire for newer, better, faster to promote the ecosystem’s health, at least among the consumer-facing IoT segments. We can expect today’s IoT devices to get old fast, even without producers intentionally designing them to go obsolete quickly. Bad actors might get adopted quickly, but they might also fade away quickly.

What Do We Do?

It’s tempting to repeat the old joke about the patient telling the doctor, “It hurts when I do this,” to which the doctor replies, “Then don’t do that.” History shows that we keep building and deploying IT systems that contain serious vulnerabilities, which we later try to patch before too much damage is done. If the IoT’s scale and distribution make this “solution” impractical, then maybe we can just start building systems without the vulnerabilities!

Unfortunately, it’s not at all realistic to assume that, starting today, we’ll suddenly start doing things much better. We need some game-changers: maybe a new programming language, a new approach to highly reliable input validation (e.g., [93]), or a way to use massively parallel multicore cloud computing to thoroughly fuzz-test and formally verify.

Another approach might be a new way to structure systems to mitigate damage when they’re compromised. Instead of a smart grid, perhaps we need a “dumb grid”: well-tested commodity operating systems, compilers, languages, and such that are modular so that developers can break off unneeded pieces. Or maybe we can use the extra cores from Moore’s Law (discussed in Chapter 2) to make each IoT system multicultural: N distinct OSs and implementations, which aren’t likely to be vulnerable in the same way at the same time.
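The “multicultural” idea resembles what the fault-tolerance literature calls N-version programming: run several independently developed implementations of the same function and act only on an answer that a majority of them agree on. The sketch below is a minimal illustration under stated assumptions: the three setpoint parsers (`parse_by_split`, `parse_by_slice`, `parse_two_digits`) and the `SET <n>` message format are hypothetical stand-ins for distinct OSs or implementations, and one parser is deliberately flawed to show it being outvoted.

```python
# Minimal N-version voting sketch. The message format and the three parser
# variants are hypothetical stand-ins for independently built implementations.
from __future__ import annotations

from collections import Counter
from typing import Callable, Optional


def parse_by_split(msg: str) -> int:
    return int(msg.split()[1])


def parse_by_slice(msg: str) -> int:
    return int(msg[len("SET "):])


def parse_two_digits(msg: str) -> int:
    # Deliberately flawed variant: assumes the setpoint has exactly two digits.
    return int(msg[4:6])


VARIANTS: list[Callable[[str], int]] = [parse_by_split, parse_by_slice, parse_two_digits]


def vote(variants: list[Callable[[str], int]], msg: str) -> Optional[int]:
    """Return the answer a strict majority of all variants agree on, else None."""
    results = []
    for variant in variants:
        try:
            results.append(variant(msg))
        except (ValueError, IndexError):
            # A variant that rejects or chokes on the input casts no vote.
            continue
    if not results:
        return None
    answer, count = Counter(results).most_common(1)[0]
    return answer if count > len(variants) // 2 else None


if __name__ == "__main__":
    print(vote(VARIANTS, "SET 21"))   # 21: all three agree
    print(vote(VARIANTS, "SET 215"))  # 215: the flawed variant is outvoted
    print(vote(VARIANTS, "SET abc"))  # None: no variant produces an answer
```

The design choice worth noticing is that disagreement yields `None` rather than a best guess, so the caller can fall back to a safe default. The approach only helps to the extent that the variants fail independently, which independently written code does not guarantee.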

Biology tells us that one of the problems in cancer arises when the telomere mechanism, which limits the number of times a cell can divide, stops working, allowing cell growth to run rampant. Maybe we can mitigate the problem of unpatched and forgotten IoT systems by building in a similar aging mechanism: after enough time (or enough time without patching), they automatically stop working. Of course, this could be dangerous as well. Perhaps instead, for each kind of IoT node, we can define a safe, inert “dumb” state to which it reverts after a time. (One wonders what consumers would think of this feature.)
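A minimal sketch of the aging idea, assuming a device that records when it was last patched and reverts to an inert mode once a configurable limit passes without an update. The class `AgingDevice`, the 180-day default, and the command strings are hypothetical; the clock is injectable so the behavior can be exercised without waiting months.

```python
# Sketch of a "telomere" for an IoT node: after too long without a patch,
# the device stops obeying network commands and stays in a safe, inert mode.
# All names, messages, and the 180-day default are hypothetical.
from __future__ import annotations

import time
from dataclasses import dataclass, field
from typing import Callable

MAX_UNPATCHED_SECONDS = 180 * 24 * 60 * 60  # hypothetical limit: about six months


@dataclass
class AgingDevice:
    clock: Callable[[], float] = time.monotonic
    max_unpatched: float = MAX_UNPATCHED_SECONDS
    last_patched: float = field(init=False)

    def __post_init__(self) -> None:
        # The lifetime countdown starts when the device is first set up.
        self.last_patched = self.clock()

    def apply_patch(self) -> None:
        """An (assumed already verified) update resets the remaining lifetime."""
        self.last_patched = self.clock()

    def is_expired(self) -> bool:
        return self.clock() - self.last_patched > self.max_unpatched

    def handle_command(self, command: str) -> str:
        if self.is_expired():
            # Inert "dumb" state: refuse remote commands instead of acting on them.
            return "IGNORED: unpatched too long, running in inert mode"
        return f"EXECUTED: {command}"


if __name__ == "__main__":
    now = [0.0]  # fake clock so the demo does not have to wait six months
    device = AgingDevice(clock=lambda: now[0], max_unpatched=100.0)
    print(device.handle_command("heat on"))  # EXECUTED: heat on
    now[0] = 150.0
    print(device.handle_command("heat on"))  # IGNORED: unpatched too long, running in inert mode
    device.apply_patch()
    print(device.handle_command("heat on"))  # EXECUTED: heat on
```

A real deployment would need more than this: `time.monotonic` resets when the device reboots, so a genuine design would need a trustworthy, persistent notion of time, and an attacker able to fake a patch or roll back the clock could defeat the mechanism.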

These are just a few ideas. If we want the IoT to give us a safe and healthy future, we have our work cut out for us.

What Comes Next

The rest of this book focuses on how the emergence of the IoT will bring many changes to how we, as a society, think about computing and manage its risks. Chapter 2 will survey some example IoT architectures. Chapter 3 will consider several IoT application areas where earlier-generation computing added smartness already, with mixed results. Chapter 4 will consider how the standard “design patterns for insecurity” that plague the IoC may surface—and be mitigated—in the IoT. Chapter 5 will examine the challenges of identity and authentication when scaled up to the IoT. Chapter 6, Chapter 7, and Chapter 8 consider privacy, economic, and legal issues, respectively. Chapter 9 considers the impact of the IoT on the “digital divide.” Chapter 10 then concludes with some thoughts on the future of humans and machines, in this brave new internet.4

Works Cited

-

AFP, “Piégé dans sa voiture, il meurt déshydraté,” Libération, August 25, 2011.

-

AFP, “Hackers use ‘smart’ refrigerator to send 750,000 virus-laced emails,” Raw Story, January 17, 2014.

-

M. Anderson, “US gas pump hacked with ‘Anonymous’ tagline,” The Stack, February 11, 2015.

-

AP, “Crashed computer traps Thai politician,” Daily Aardvark, May 14, 2003.

-

K. Ashton, “That ‘Internet of Things’ thing,” RFID Journal, June 22, 2009.

-

A. Banafa, “Fog computing is vital for a successful Internet of Things (IoT),” LinkedIn Pulse, June 15, 2015.

-

BBC, “Hack attack causes ‘massive damage’ at steel works,” BBC News, December 22, 2014.

-

K. Bora, “Volkswagen German plant accident: Robot grabs, crushes man to death,” International Business Times, July 2, 2015.

-

C. Boutin, “Lack of effective timing signals could hamper ‘Internet of Things’ development,” NIST Tech Beat, March 19, 2015.

-

W. Burleson and others, “Design challenges for secure implantable medical devices,” in Proceedings of the 49th ACM/EDAC/IEEE Design Automation Conference, 2012.

-

R. E. Calem, “Connected car security,” CTA News, May 17, 2016.

-

D. Chechik, S. Kenin, and R. Kogan, “Angler takes malvertising to new heights,” SpiderLabs Blog, March 14, 2016.

-

Check Point, “Facebook MaliciousChat,” Check Point Blog, June 7, 2016.

-

S. Checkoway and others, “Comprehensive experimental analyses of automotive attack surfaces,” in Proceedings of the 20th USENIX Security Symposium, 2011.

-

C. Cimpanu, “Oracle settles charges regarding fake Java security update,” Softpedia, December 22, 2015.

-

C. Cimpanu, “Ransomware on your TV, get ready, it’s coming,” Softpedia, November 25, 2015.

-

C. Cimpanu, “A massive botnet of CCTV cameras involved in ferocious DDoS attacks,” Softpedia, June 27, 2016.

-

C. Cimpanu, “ASUS delivers BIOS and UEFI updates over HTTP with no verification,” Softpedia, June 5, 2016.

-

C. Cimpanu, “Medical equipment crashes during heart procedure because of antivirus scan,” Softpedia, May 3, 2016.

-

C. Cimpanu, “Windows zero-day affecting all OS versions on sale for $90,000,” Softpedia, May 31, 2016.

-

L. Constantin, “Attackers use email spam to infect point-of-sale terminals with new malware,” ITworld, May 25, 2015.

-

L. Constantin, “Cyberattack grounds planes in Poland,” ITworld, June 22, 2015.

-

J. Cox, “There’s a Stuxnet copycat, and we have no idea where it came from,” Motherboard, June 2, 2016.

-

J. Crook, “SmartThings and Samsung team up to make your TV a smart home hub,” TechCrunch, December 29, 2015.

-

A. Cuthbertson, “Massive DDoS attack on core internet servers was ‘zombie army’ botnet from popular smartphone app,” International Business Times, December 11, 2015.

-

Difference Engine, “Deus ex vehiculum,” The Economist, June 23, 2015.

-

E-ISAC, Analysis of the Cyber Attack on the Ukrainian Power Grid. SANS Industrial Control Systems, March 18, 2016.

-

T. Eden, “The absolute horror of WiFi light switches,” Terence Eden’s Blog: Mobiles, Shakespeare, Politics, Usability, Security, March 2, 2016.

-

J. Epstein, “Risks of online test taking,” The Risks Digest, May 21, 2015.

-

A. C. Estes, “Why is my smart home so fucking dumb?,” Gizmodo, February 12, 2015.

-

M. Finnegan, “Toyota recalls 1.9m Prius cars due to software fault,” Computerworld UK, February 2014.

-

D. Fisher, “Gone in less than a second,” ThreatPost, August 6, 2015.

-

D. Fisher, “What’s on TV tonight? Ransomware,” On the Wire, June 13, 2016.

-

T. Fox-Brewster, “As ransomware crisis explodes, Hollywood hospital coughs up $17,000 in Bitcoin,” Forbes, February 18, 2016.

-

T. Fox-Brewster, “White hat hackers hit 12 American hospitals to prove patient life ‘Extremely Vulnerable’,” Forbes, February 23, 2016.

-

R. Franck, “Beveiliging sneltram valt niet te vertrouwen,” Algemeen Dagblad, April 3, 2016.

-

S. Gallagher, “Report: Airbus transport crash caused by ‘wipe’ of critical engine control data,” Ars Technica, June 10, 2015.

-

N. Gamer, “Vulnerabilities on SoC-powered Android devices have implications for the IoT,” Trend Micro Industry News, March 14, 2016.

-

M. Garrett, “I stayed in a hotel with Android lightswitches and it was just as bad as you’d imagine,” mjg59’s journal, March 11, 2016.

-

S. Gibbs, “Security researchers hack a car and apply the brakes via text,” The Guardian, August 12, 2015.

-

S. Gibbs, “Windows 10 automatically installs without permission, complain users,” The Guardian, March 15, 2016.

-

A. Goard, “Thief steals $15,000 bike in Sausalito with tap of hand: Police,” NBC Bay Area, February 27, 2015.

-

J. Golson, “Many Lexus navigation systems bricked by over-the-air software update,” The Verge, June 7, 2016.

-

D. Goodin, “Rise of ‘forever day’ bugs in industrial systems threatens critical infrastructure,” Ars Technica, April 9, 2012.

-

D. Goodin, “Foul-mouthed worm takes control of wireless ISPs around the globe,” Ars Technica, May 19, 2016.

-

C. Graeber, “How a serial-killing night nurse hacked hospital drug protocol,” Wired, April 29, 2013.

-

A. Greenberg, “Chrysler catches flak for patching hack via mailed USB,” Wired, September 3, 2015.

-

A. Greenberg, “Hackers remotely kill a Jeep on the highway—with me in it,” Wired, July 21, 2015.

-

S. Harris, “Engineers work to prevent electric vehicle charging from overloading grids,” The Engineer, April 13, 2015.

-

hdmoore, “The Internet of gas station tank gauges,” Rapid7 Community, January 22, 2015.

-

B. Hill, “Latest IoT DDoS attack dwarfs Krebs takedown at nearly 1Tbps driven by 150K devices,” Hot Hardware, September 27, 2016.

-

S. P. Holland and others, “Are there environmental benefits from driving electric vehicles?,” American Economic Review, December 2016.

-

I. Johnston, “Smartwatches that allow pupils to cheat in exams for sale on Amazon,” The Independent, March 3, 2016.

-

D. Kemp, C. Czub, and M. Davidov, Out-of-Box Exploitation: A Security Analysis of OEM Updaters. Duo Security, May 31, 2016.

-

A. Kessler, “Fiat Chrysler issues recall over hacking,” The New York Times, July 24, 2015.

-

O. Khazan, “How to fake your workout,” The Atlantic, September 28, 2015.

-

J. Kirk, “How encryption keys could be stolen by your lunch,” Computerworld, June 22, 2015.

-

Z. Kleinman, “Microsoft accused of Windows 10 upgrade ‘nasty trick’,” BBC News, May 24, 2016.

-

W. Knight, “Baidu’s self-driving car takes on Beijing traffic,” MIT Technology Review, December 10, 2015.

-

G. Kolata, “Of fact, fiction and Cheney’s defibrillator,” The New York Times, October 27, 2013.

-

P. Koopman, J. Black, and T. Maxino, “Position paper: Deeply embedded survivability,” in Proceedings of the ARO Planning Workshop on Embedded Systems and Network Security, 2007.

-

K. Koscher and others, “Experimental security analysis of a modern automobile,” in Proceedings of the IEEE Symposium on Security and Privacy, 2010.

-

B. Krebs, “Lizard stresser runs on hacked home routers,” Krebs on Security, January 9, 2015.

-

B. Krebs, “KrebsOnSecurity hit with record DDoS,” Krebs on Security, September 21, 2016.

-

B. Krebs, “Target hackers broke in via HVAC company,” Krebs on Security, February 5, 2014.

-

D. Kushner, “The real story of Stuxnet,” IEEE Spectrum, February 26, 2013.

-

R. Langner, “Stuxnet’s secret twin,” Foreign Policy, November 19, 2013.

-

S. Levin and N. Woolf, “Tesla driver killed while using Autopilot was watching Harry Potter, witness says,” The Guardian, July 1, 2016.

-

J. Leyden, “Polish teen derails tram after hacking train network,” The Register, January 11, 2008.

-

N. Lomas, “The FTC warns Internet of Things businesses to bake in privacy and security,” TechCrunch, January 8, 2015.

-

P. Longeray, “Windows 3.1 is still alive, and it just killed a French airport,” Vice News, November 13, 2015.

-

J. Lowy, “Will robot cars drive traffic congestion off a cliff?,” AP: The Big Story, May 16, 2016.

-

A. MacGregor, “BMW patches security flaw affecting over 2 million vehicles,” The Stack, February 2, 2015.

-

J. Markoff and C. C. Miller, “As robotics advances, worries of killer robots rise,” The New York Times, June 16, 2014.

-

L. Mearian, “With $15 in Radio Shack parts, 14-year-old hacks a car,” Computerworld, February 20, 2015.

-

D. Oberhaus, “The backbone of the internet could detect earthquakes, but no one’s using it,” Motherboard, August 11, 2015.

-

K. Palani, E. Holt, and S. W. Smith, “Invisible and forgotten: Zero-day blooms in the IoT,” in Proceedings of the 1st IEEE PerCom Workshop on Security, Privacy, and Trust in the IoT, 2016.

-

A. Pasick, “An iPad app glitch grounded several dozen American Airlines planes,” Quartz, April 28, 2015.

-

Paul, “Research: IoT hubs expose connected homes to hackers,” The Security Ledger, April 7, 2015.

-

Paul, “X-rays behaving badly: Devices give malware foothold on hospital networks,” The Security Ledger, June 8, 2015.

-

N. Perlroth, “Traffic hacking: Caution light is on,” The New York Times, June 10, 2015.

-

S. Peterson and P. Faramarzi, “Exclusive: Iran hijacked US drone, says Iranian engineer,” The Christian Science Monitor, December 15, 2011.

-

R. Price, “Google’s parent company is deliberately disabling some of its customers’ old smart-home devices,” Business Insider, April 4, 2016.

-

M. Reel and J. Robertson, “It’s way too easy to hack the hospital,” Bloomberg Businessweek, November 2015.

-

D. Roberts, “New study: Fully automating self-driving cars could actually be worse for carbon emissions,” Vox, February 27, 2016.

-

J. Rogers, “LA traffic is getting worse and people are blaming the shortcut app Waze,” The Associated Press, December 14, 2014.

-

I. Rouf and others, “Security and privacy vulnerabilities of in-car wireless networks: A tire pressure monitoring system case study,” in Proceedings of the 19th USENIX Security Symposium, 2010.

-

B. Salsberg, “Mass. train leaves station without driver,” Burlington Free Press, December 10, 2015.

-

A. San Juan, “Texas man, dog die after being trapped in Corvette,” KHOU, June 10, 2015.

-

L. Sassaman and others, “Security applications of formal language theory,” IEEE Systems Journal, September 2013.

-

R. Singel, “Industrial control systems killed once and will kill again, experts warn,” Wired, April 9, 2008.

-

S. W. Smith and J. S. Erickson, “Never mind Pearl Harbor—What about a cyber Love Canal?,” IEEE Security and Privacy, March/April 2015.

-

T. Smith, “Hacker jailed for revenge sewage attacks,” The Register, October 31, 2001.

-

A. Southall, “Technical problem suspends flights along East Coast,” The New York Times, August 15, 2015.

-

D. Spaar, “Beemer, open thyself!—Security vulnerabilities in BMW’s ConnectedDrive,” c’t, May 2, 2015.

-

L. Stack, “J.F.K. computer glitch wreaks havoc on air passengers,” The New York Times, May 30, 2016.

-

B. Steele, “Windows 10 update message interrupts live weather report,” Engadget, April 28, 2016.

-

I. Thomson, “Even in remotest Africa, Windows 10 nagware ruins your day: Update burns satellite link cash,” The Register, June 3, 2016.

-

A. Toor, “Heinz ketchup bottle QR code leads to hardcore porn site,” The Verge, June 19, 2015.

-

Tribune wire reports, “Drones banned from World Cup skiing after one nearly falls on race,” Chicago Tribune, December 23, 2015.

-

Z. Tufekci, “Why ‘smart’ objects may be a dumb idea,” The New York Times, August 10, 2015.

-

J. Valentino-Devries, “Rarely patched software bugs in home routers cripple security,” The Wall Street Journal, January 6, 2016.

-

C. Vallance, “Car hack uses digital-radio broadcasts to seize control,” BBC News, July 22, 2015.

-

J. Voelcker, “Tesla Model S hacked in low-speed driving; Patch issued, details tomorrow,” Green Car Reports, August 6, 2015.

-

G. Wang and others, “Defending against Sybil devices in crowdsourced mapping services,” in MobiSys ’16, Proceedings of the 14th Annual International Conference on Mobile Systems, Applications, and Services, 2016.

-

R. Wiedeman, “The Big Hack: The day cars drove themselves into walls and the hospitals froze,” New York, June 19, 2016.

-

K. Wilhoit, The SCADA That Didn’t Cry Wolf: Who’s Really Attacking Your ICS Equipment? (Part 2). Trend Micro Research Paper, 2013.

-

K. Wilhoit, Who’s Really Attacking Your ICS Equipment? Trend Micro Research Paper, 2013.

-

K. Wilhoit and S. Hilt, The GasPot Experiment: Unexamined Perils in Using Gas-Tank-Monitoring Systems. TrendLabs, 2015.

-

C. Wood, “Google to measure air quality through Street View,” Gizmag, July 30, 2015.

-

K. Zetter, “It’s insanely easy to hack hospital equipment,” Wired, April 25, 2014.

-

K. Zetter, “All U.S. United flights grounded over mysterious problem,” Wired, June 2, 2015.

-

K. Zetter, “Feds say that banned researcher commandeered plane,” Wired, May 15, 2015.

-

V. Zhang, “High-profile mobile apps at risk due to three-year-old vulnerability,” TrendLabs Security Intelligence Blog, December 8, 2015.

-

Y. Zitun and E. B. Kimon, “IDF investigation finds soldiers in Qalandiya acted appropriately,” YNet News, March 1, 2016.

-

Z. Zorz, “65,000+ Land Rovers recalled due to software bug,” Help Net Security, July 14, 2015.

-

Z. Zorz, “Cheap web cams can open permanent, difficult-to-spot backdoors into networks,” Help Net Security, January 14, 2016.

-

Z. Zorz, “Researchers hack the Mitsubishi Outlander SUV, shut off alarm remotely,” Help Net Security, June 6, 2016.

-

Z. Zorz, “1,400+ vulnerabilities found in automated medical supply system,” Help Net Security, March 30, 2016.

1 Indeed, strictly speaking, it’s not correct to say the IoT is coming; as examples throughout this book show, it’s already here. What’s coming is much, much more of it—and as one colleague observes, “In making predictions, we always tend to overestimate short-term impact and underestimate long-term impact.”

2 Despite the impact of the Pearl Harbor attack, one should keep in mind that it struck just one base, and only some of the ships at that base; a modern cyber version would likely not have such a limited scope.

3 At least, one likes to think so; friends and colleagues point out examples of mission-critical computing running on obsolete operating systems and/or with source code no longer available.

4 Parts of “Worst-Case Scenarios: Cyber Love Canal,” “Worst-Case Scenarios: Cyber Pearl Harbor,” and “Where to Go Next” are adapted from portions of my paper [96] and used with permission.
