Chapter 1. Graph Thinking

Think about the first time you learned about graph technology.

The scene probably started at the whiteboard where your team of directors, architects, scientists, and engineers were discussing your data problems. Eventually, someone drew the connections from one piece of data to another. After stepping back, someone noted that the links across the data built up a graph.

That realization sparked the beginning of your team's graph journey. The group saw that you could use relationships across the data to provide new and powerful insights to the business. An individual or a small group was probably tasked with evaluating the techniques and tools available for storing, analyzing, and/or retrieving graph-shaped data.

The next major revelation for your team was likely that it's easy to explain your data as a graph. But it's hard to use your data as a graph.

Sound familiar?

Much like this whiteboard experience, earlier teams discovered connections within their data and turned them into valuable applications we use every day. Think about apps like Netflix, LinkedIn, and GitHub. These products translate connected data into an integral asset used by millions of people around the world.

We wrote this book to teach you how they did it.

As both tool builders and tool users, we have had the opportunity to sit on both sides of the whiteboard conversation hundreds of times. From our experiences, we collected a core set of choices and subsequent technology decisions to accelerate your journey with graph technology.

This book will be your guide in navigating the space between understanding your data as a graph and using your data as a graph.

Why Now? Putting Database Technologies in Context

Graphs have been around for centuries. So why are they relevant now?

And before you skip this section, we ask you to hear us out. We are about to go into history here; it isn't long, and it isn't involved. We need to do this because the successes and failures of our recent history explain why graph technology is relevant again.

Graphs are relevant now because the tech industry's focus has shifted over the last few decades. Previously, technologies and databases focused on how to most efficiently store data. Relational technologies evolved as the front-runner to achieve this efficiency. Now we want to know how we can get the most value out of data.

Today's realization is that data is inherently more valuable when it is connected.

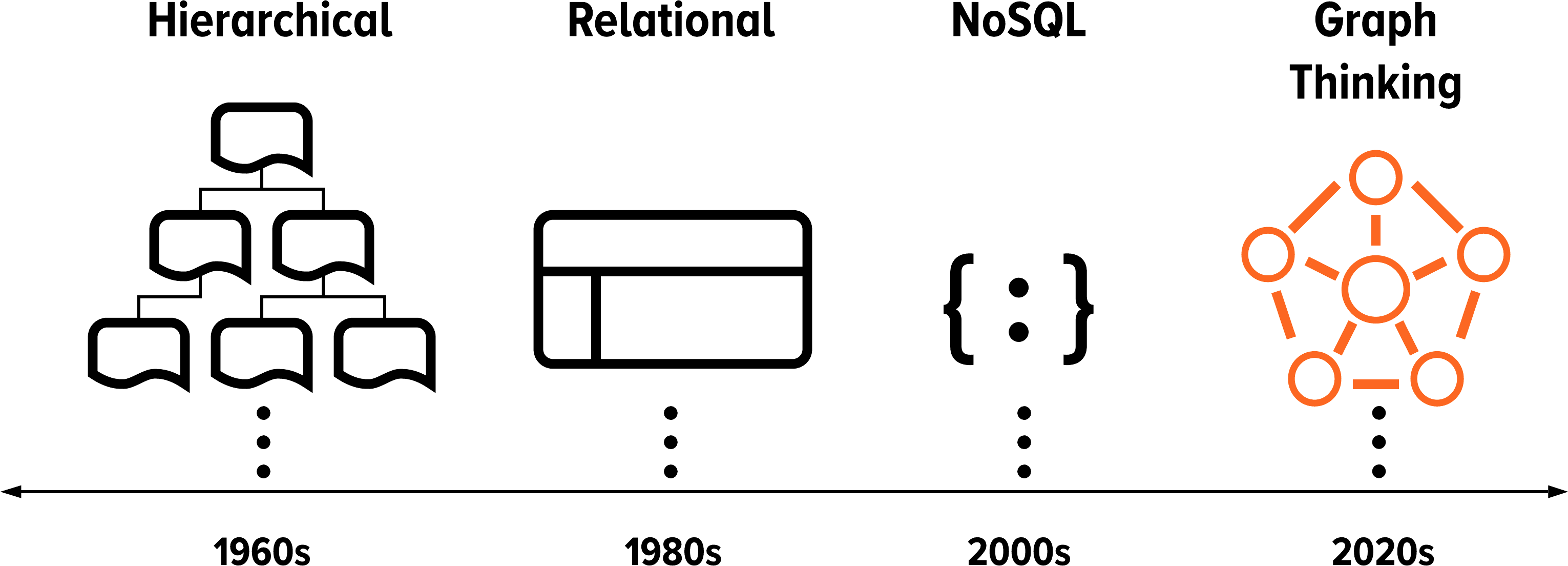

A little bit of historical context on the evolution of database technologies sheds a lot of light on how we got here, and maybe even on why you picked up this book. The history of database technology can loosely be divided into three eras: hierarchical, relational, and NoSQL. The following abbreviated tour explores each of these historical eras, with a focus on how each era is relevant to this book.

Note

The following sections provide you with an abridged version of the evolution of graph technology. We are highlighting only the most relevant parts of our industry's vast history. At the very least, we are saving you from losing your valuable time down the rabbit hole of a self-guided Wikipedia link-walking tour, though ironically, the self-guided version would be walking through today's most accessible knowledge graph.

This brief history will take us from the 1960s to today. Our tour will culminate with the fourth era of graph thinking that is on our doorstep, as shown in Figure 1-1. We are asking you to take this short journey with us because we believe that historical context is one of the keys to unlocking the wide adoption of graph technologies within our industry.

Figure 1-1. A high-level timeline showing the historical context on the evolution of database technology to illustrate the emergence of graph thinking

1960s–1980s: Hierarchical Data

Technical literature interchangeably labels the database technologies of the 1960s through the 1980s as "hierarchical" or "navigational." Irrespective of the label, the thinking during this era aimed to organize data in treelike structures.

During this era, database technologies stored data as records that were linked to one another. The architects of these systems envisioned walking through these treelike structures so that any record could be accessed by a key or system scan or through navigating the tree's links.

In the early 1960s, the Database Task Group within CODASYL, the Conference/Committee on Data Systems Languages, organized to create the industry's first set of standards. The Database Task Group created a standard for retrieving records from these tree structures. This early standard is known as "the CODASYL approach" and set the following three objectives for retrieving records from database management systems:1

- Using a primary key
- Scanning all the records in a sequential order
- Navigating links from one record to another
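To make the three objectives concrete, here is a minimal Python sketch that applies each one to a hypothetical in-memory set of linked records. The record keys, fields, and the helper names `get_by_key`, `scan`, and `navigate` are illustrative assumptions; this is not how CODASYL-era systems actually stored or retrieved data.

```python
# Hypothetical in-memory records; each record may link to other records by key.
# A toy illustration of the three retrieval objectives, not a CODASYL implementation.
records = {
    "dept-1": {"name": "Engineering", "links": ["emp-1", "emp-2"]},
    "emp-1": {"name": "Ada", "links": ["proj-1"]},
    "emp-2": {"name": "Grace", "links": ["proj-1"]},
    "proj-1": {"name": "Compiler", "links": []},
}


def get_by_key(key):
    """Objective 1: direct access to one record by its primary key."""
    return records[key]


def scan(predicate):
    """Objective 2: visit every record in sequential order, keeping matches."""
    return [key for key, rec in records.items() if predicate(rec)]


def navigate(start, hops):
    """Objective 3: follow links outward from a starting record, hop by hop."""
    frontier = [start]
    for _ in range(hops):
        # Collect every record linked from the current frontier, dropping duplicates.
        frontier = list(dict.fromkeys(
            linked for key in frontier for linked in records[key]["links"]
        ))
    return frontier


print(get_by_key("emp-1")["name"])
print(scan(lambda rec: rec["name"].startswith("G")))
print(navigate("dept-1", 2))
```

Keys and scans each touch records directly; only the third objective depends on the links between records, which is the part this book is about.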

Note

CODASYL was a consortium formed in 1959 and was the group responsible for the creation and standardization of COBOL.

Aside from the history lesson, there is an ironic point we are building up to. At the inception of this approach, the technologists of CODASYL envisioned retrieving data by keys, scans, and links. To date, we have seen significant innovation in and adoption of two of these three original standards: keys and scans.

But what happened with the third goal of CODASYL's retrieval standardization: to navigate links from one record to another? Storing, navigating, and retrieving records according to the links between them describes what we refer to today as graph technology. And as we mentioned before, graphs are not new; technologists have been using them for years.

The short version of this part of our history is that CODASYL's link-navigating technologies were too difficult and too slow. The most innovative solutions at the time introduced B-trees, or self-balancing tree data structures, as a structural optimization to address performance issues. In this context, B-trees helped speed up record retrieval by providing alternate access paths across the linked records.2

Ultimately, the imbalance among implementation expenditures, hardware maturity, and delivered value resulted in these systems being shelved for their speedier cousin: relational systems. As a result, CODASYL no longer exists today, though some of the CODASYL committees continue their work.

1980s–2000s: Entity-Relationship

Edgar F. Codd's idea to separate the organization of data from its retrieval system ignited the next wave of innovation in data management technologies.3 Codd's work founded what we still refer to as the entity-relationship era of databases.

The entity-relationship era encompasses the decades when our industry polished the approach for retrieving data by a key, which was one of the objectives set by the early working groups of the 1960s. During this era, our industry developed technology that was, and still is, extremely efficient at storing, managing, and retrieving data from tables. The techniques developed during these decades are still thriving today because they are tested, documented, and well understood.

The systems of this era introduced and popularized a specific way of thinking about data. First and foremost, relational systems are built on the sound mathematical theory of relational algebra. Specifically, relational systems organize your data into sets. These sets focus on the storage and retrieval of real-world entities, such as people, places, and things. Similar entities, such as people, are grouped together in a table. In these tables, each record is a row. An individual record is accessed from the table by its primary key.

In relational systems, entities can be linked together. To create links between entities, you create more tables. A linking table will combine the primary keys of each entity and store them as a new row in the linking table. This era, and the innovators within it, created the solution for tabular-shaped data that still thrives today.
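As a rough sketch of this pattern, the following Python example uses the standard library's `sqlite3` module with an in-memory database. The `person`, `company`, and `works_at` tables are hypothetical; `works_at` plays the role of the linking table, storing one row that combines the primary keys of each related pair.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
cur = conn.cursor()

# Two entity tables: each row is a record identified by its primary key.
cur.execute("CREATE TABLE person (person_id INTEGER PRIMARY KEY, name TEXT)")
cur.execute("CREATE TABLE company (company_id INTEGER PRIMARY KEY, name TEXT)")

# A linking table: one row per relationship, combining both primary keys.
cur.execute("""
    CREATE TABLE works_at (
        person_id INTEGER REFERENCES person(person_id),
        company_id INTEGER REFERENCES company(company_id),
        PRIMARY KEY (person_id, company_id)
    )
""")

cur.executemany("INSERT INTO person VALUES (?, ?)", [(1, "Ada"), (2, "Grace")])
cur.executemany("INSERT INTO company VALUES (?, ?)", [(10, "Acme")])
cur.executemany("INSERT INTO works_at VALUES (?, ?)", [(1, 10), (2, 10)])

# Retrieve a single record by its primary key.
print(cur.execute("SELECT name FROM person WHERE person_id = ?", (1,)).fetchone())

# Follow the relationship by joining through the linking table.
rows = cur.execute("""
    SELECT p.name, c.name
    FROM person p
    JOIN works_at w ON w.person_id = p.person_id
    JOIN company c ON c.company_id = w.company_id
    ORDER BY p.name
""").fetchall()
print(rows)
```

Following a relationship in this design means joining through the linking table, and each additional hop adds more joins.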

There are volumes of books and more resources than one can mention on the topic of relational systems. This book does not intend to be one of them. Instead, we want to focus on the thought processes and design principles that have become widely accepted today.

For better or for worse, this era introduced and ingrained the mentality that all data maps to a table.

If your data needs to be organized in and retrieved from a table, relational technologies remain the preferred solution. But however integral their role remains, relational technologies are not a one-size-fits-all solution.

The late '90s brought early signs of the information age through the popularization of the web. This stage during our short history hinted at volumes and shapes of data that were previously unplanned and unused. At this time in database innovation, incomprehensible volumes of data in diverse shapes began to fill the queues of applications. A key realization at this point was that the relational model was lacking: there was no mention of the intended use for the data. The industry had a detailed storage model, but nothing for analyzing or intelligently applying that data.

This brings us to the third and most recent wave of database innovation.

2000s–2020s: NoSQL

The development of database technologies from the 2000s to the 2020s, approximately, is characterized as the advent of the NoSQL (non-SQL or "not only SQL") movement. The objective of this era was to create scalable technologies that stored, managed, and queried all shapes of data.

One way to describe the NoSQL era relates database innovation to the burgeoning of the craft beer market in the United States. The process of fermenting the beer didn't change, but flavors were added and the quality and freshness of ingredients were elevated. A closer connection developed between the brewmaster and the consumer, yielding an immediate feedback loop on product direction. Now, instead of three brands of beer in your supermarket, you likely have more than 30.

Instead of finding new combinations for fermentation, the database industry experienced exponential growth in choices for data management technologies. Architects needed scalable technologies to address the different shapes, volumes, and requirements of their rapidly growing applications. Popular data shapes that emerged during this movement were key-value, wide-column, document, stream, and graph.

The message of the NoSQL era was quite clear: storing, managing, and querying data at scale in tables doesn't work for everything, just like not everyone wants to drink a light pilsner.

There were a few motivations that led to the NoSQL movement. These motivations are integral to understanding why and where we are within the hype cycle of the graph technology market. The three we like to call out are the need for data serialization standards, specialized tooling, and horizontal scalability.

First, the rise in popularity of web-based applications created natural channels for passing data between these applications. Through these channels, innovators developed new and different standards for data serialization such as XML, JSON, and YAML.

Naturally, these standardizations led to the second motivation: specialized tooling. The protocols for exchanging data across the web created structures that were inherently not tabular. This demand led to the innovation and rise in popularity of key-value, document, graph, and other specialized databases.

Last, this new class of applications came with an influx of data that put pressure on system scalability like never before. Derivatives and applications of Moore's law predicted the silver lining of this era as we saw the cost of hardware, and thus the cost of data storage, continue to decrease. The effects of Moore's law enabled data duplication, specialized systems, and overall computation power to become less expensive.4

Together, the innovations and new demands of the NoSQL era paved the way for the industry's migration from scale-up systems to scale-out systems. A scale-out system adds physical or virtual machines to increase the overall computational capacity of a system. A scale-out system, generally referred to as a "cluster," appears to the end user as a single platform; the user has no idea that their workload is actually being served by a collection of servers. On the other hand, a scale-up system procures more powerful machines. Out of room? Get a bigger box, which is more expensive, until there are no bigger boxes to get.

Note

Scaling out means adding more resources to spread out a load, typically in parallel. Scaling up means making a resource bigger or faster so that it can handle more load.

Given these three motivations, this versatile tool set for building scalable data architectures for nontabular data evolved to be the most important deliverable of the NoSQL era. Now development teams have choices to evaluate when designing their next application. They can select from a suite of technologies to accommodate different shapes, velocities, and scalability requirements of their data. There are tools that manage, store, search, and retrieve document, key-value, wide-column, and/or graph data at any scale. With these tools, we began working with multiple forms of data in ways previously unachievable.

What can we do with this unique collection of tools and data? We can solve more complex problems faster and at a larger scale.

2020s–?: Graph

We promised you that our history tour would be brief and purposeful. This section delivers on that promise by connecting the important moments from our condensed tour. Together, the connections we see across our industry's history set the stage for the fourth era of database innovation: the wave of graph thinking.

This era in innovation is shifting from efficiency of the storage systems to extracting value from the data the storage systems contain.

Why the 2020s?

Before we can outline our perspective on the graph era, you might be wondering why we are starting the era of graph thinking in 2020. We want to take a brief moment to explain our position on the timing of the graph market.

Our callout to the general timeline of 2020 comes from the intersection of two trains of thought. At this intersection, we are crossing Geoffrey Moore's popular adoption model5 with the timing observed during the past three eras of database innovation.

Note

Like CODASYL, the technology adoption life cycle commonly attributed to Moore originated in the 1950s. See Everett Rogers's 1962 book Diffusion of Innovations.6

Specifically, there is a proven and observable time lag between early adopters and the wide adoption of new technologies. We saw this time lag in "1980s–2000s: Entity-Relationship" with relational databases during the 1970s. There was a 10-year lag between the first paper and corresponding viable implementations of relational technology. You can find examples of the same time lag within each of the other eras.

History has shown us that every era prior to the graph era contained a niche period that saw wide adoption years later. By looking to the 2020s, we are making this same assumption about the state of the graph market. History has also shown us that this doesn't mean that the existing tools are going to go away.

However you would like to measure it, this is not a stock market prediction where we are nailing down a date. Our outlook ultimately describes a new era of technology adoption that is being driven by an evolution of value. That is, value is shifting from efficiency to being derived from highly connected data assets. These changes take time and do not run on schedules.

Connecting the dots

Recall the three patterns of retrieval envisioned by the CODASYL committee in the 1960s: accessing data by keys, scans, and links. Extracting a piece of data by its key, in any shape, remains the most efficient way to access it. This efficiency was achieved during the entity-relationship era and remains a popular solution.

As for the second goal of the CODASYL committee, accessing data through scans, the NoSQL era created technologies capable of handling large scans of data. Now we have software and hardware capable of processing and extracting value from massive datasets at immense scale. That is to say: we have the committee's first two goals nailed down.

Last on the list: accessing data by traversing links. Our industry has come full circle.

The industry's return to focusing on graph technologies goes hand in hand with our shift from efficiently managing data to needing to extract value from it. This shift doesn't mean we no longer need to efficiently manage data; it means we have solved one problem well and are moving on to address the harder problem. Our industry now emphasizes value alongside speed and cost.

Extracting value from data can be achieved when you are able to connect pieces of information and construct new insights. Extracting value from data comes from understanding the complex network of relationships within your data.

This is synonymous with recognizing the complex problems and complex systems that are observable across the inherent network in your data.

Our industry's and this book's focus looks toward developing and deploying technologies that deliver value from data. As in the relational era, a new way of thinking is required to understand, deploy, and apply these technologies.

A shift in mindset needs to occur in order to see the value we are talking about here. This mindset is a shift from thinking about your data in a table to prioritizing the relationships across it. This is what we call graph thinking.

What Is Graph Thinking?

Without explicitly stating it, we already walked through what we call graph thinking during the whiteboard scene at the beginning of this chapter.

When we illustrated the realization that your data could look like a graph, we were recreating the power of graph thinking. It is that simple: graph thinking encompasses your experience and realizations when you see the value of understanding relationships across your data.

Note

Graph thinking is understanding a problem domain as an interconnected graph and using graph techniques to describe domain dynamics in an effort to solve domain problems.

Being able to see graphs across your data is the same as recognizing the complex network within your domain. Within a complex network, you will find the most complex problems to solve. And most high-value business problems and opportunities are complex problems.

This is why the next stage of innovation in data technologies is shifting from a focus on efficiency to a focus on finding value by specifically applying graph technologies.

Complex Problems and Complex Systems

We have used the term complex problem a few times now without providing a specific description. When we talk about complex problems, we are referring to the networks within complex systems.

- Complex problems are the individual problems that are observable and measurable within complex systems.
- Complex systems are systems composed of many individual components that are interconnected in various ways such that the behavior of the overall system is not just a simple aggregate of the individual components' behavior (called "emergent behavior").

Complex systems describe the relationships, influences, dependencies, and interactions among the individual components of real-world constructs. Simply put, a complex system describes anything where multiple components interact with each other. Examples of complex systems are human knowledge, supply chains, transportation or communication systems, social organization, Earth's global climate, and the entire universe.

Most high-value business problems are complex problems and require graph thinking. This book will teach you the four main patterns (neighborhoods, hierarchies, paths, and recommendations) used to solve complex problems with graph technology for businesses around the world.

Complex Problems in Business

Data is no longer just a by-product of doing business. Data is increasingly becoming a strategic asset in our economy. Previously, data was something that needed to be managed with the greatest convenience and the least cost to enable business operation. Now it is treated as an investment that should yield a return. This requires us to rethink how we handle and work with data.

For example, the late stage of the NoSQL era saw the acquisitions of LinkedIn and GitHub by Microsoft. These acquisitions gave measurement to the value of data that solves complex problems. Specifically, Microsoft acquired LinkedIn for $26 billion on an estimated $1 billion in revenue. GitHub's acquisition set the price at $7.8 billion on an estimated $300 million in revenue.

Each of these companies, LinkedIn and GitHub, owns the graph of its respective network: the professional graph and the developer graph, respectively. This puts a 26× multiplier on the data that models a domain's complex system. These two acquisitions begin to illustrate the strategic value of data that models a domain's graph. Owning a domain's graph yields significant return on a company's valuation.
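Both deals land on the same ratio of acquisition price to estimated revenue, using the figures quoted above:

```latex
\frac{\$26\text{ billion}}{\$1\text{ billion}} = 26
\qquad\text{and}\qquad
\frac{\$7.8\text{ billion}}{\$0.3\text{ billion}} = 26
```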

We do not want to misrepresent our intentions with these statistics. Observing high revenue multiples for fast-growing startups isn't a novelty. We specifically mention these two examples because GitHub and LinkedIn found and monetized value from data. These revenue multiples are higher than the valuations of similarly sized and similarly growing startups because of the data asset.

By applying graph thinking, these companies are able to represent, access, and understand the most complex problem within their domain. In short, these companies built solutions for some of the largest and most difficult complex systems.

Companies that have a head start on rethinking data strategies are those that built technology to model their domains' most complex problems. Specifically, what do Google, Amazon, FedEx, Verizon, Netflix, and Facebook all have in common? Aside from being among today's most valued companies, each one owns the data that models its domain's largest and most complex problem. Each owns the data that constructs its domain's graph.

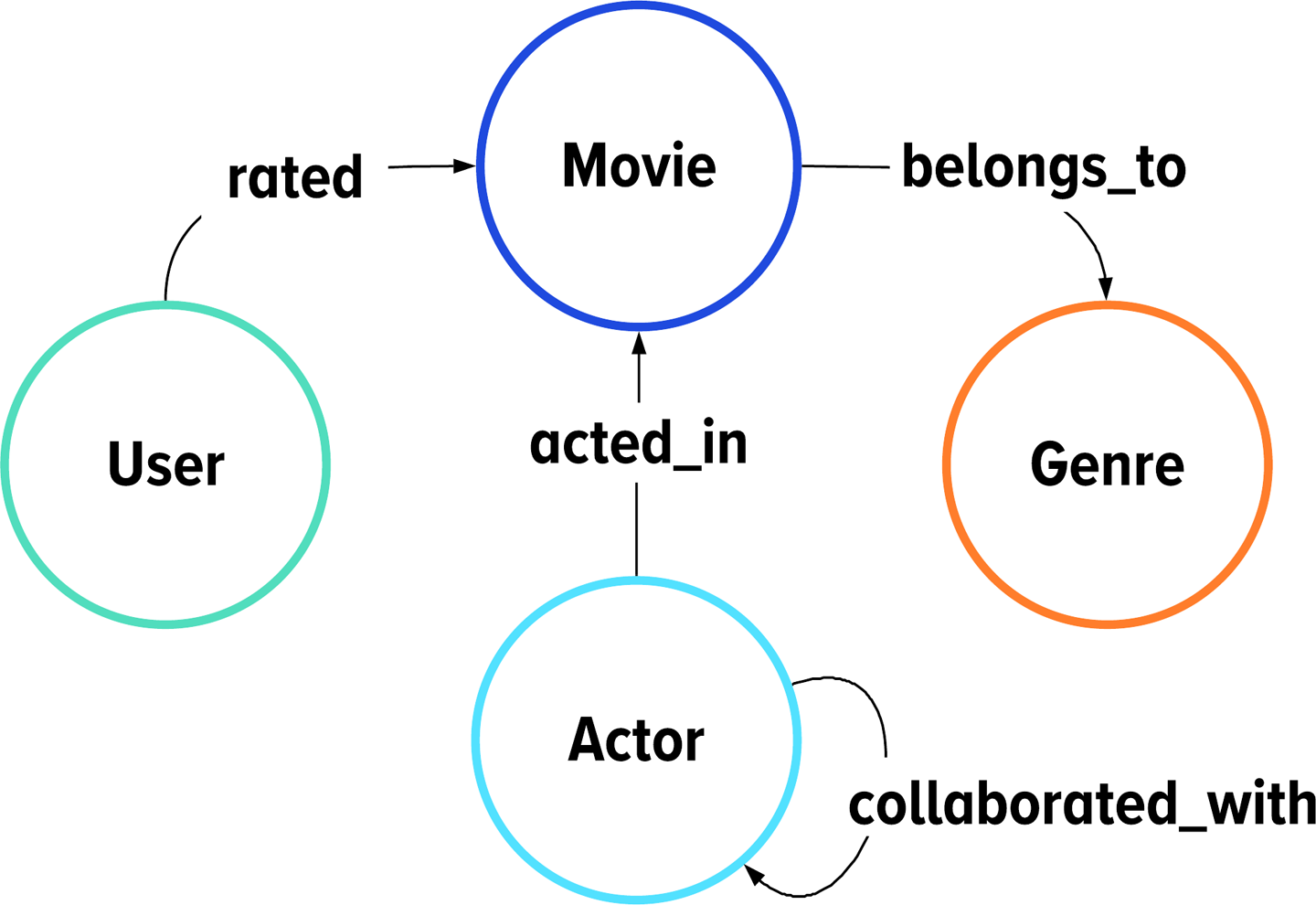

Just think about it. Google has the graph of all human knowledge. Amazon and FedEx contain the graphs of our global supply chain and transportation economies. Verizon's data builds up our world's largest telecommunications graph. Facebook has the graph of our global social network. Netflix has access to the entertainment graph, modeled in Figure 1-2 and implemented in the final chapters of this book.

Figure 1-2. One way to model Netflix's data as a graph and the final example you will implement in this book: collaborative filtering at scale

Going forward, those companies that invest in data architectures to model their domains' complex systems will join the ranks of these behemoths. The investment in technologies for modeling complex systems is the same as prioritizing the extraction of value from data.

If you want to get value out of your data, the first place to look is within its interconnectivity. What you are looking for is the complex system that your data describes. From there, your next decisions center around the right technologies for storing, managing, and extracting this interconnectivity.

Making Technology Decisions to Solve Complex Problems

Whether or not you work at one of the companies previously mentioned, you can learn to apply graph thinking to the data in your domain.

So where do you get started?

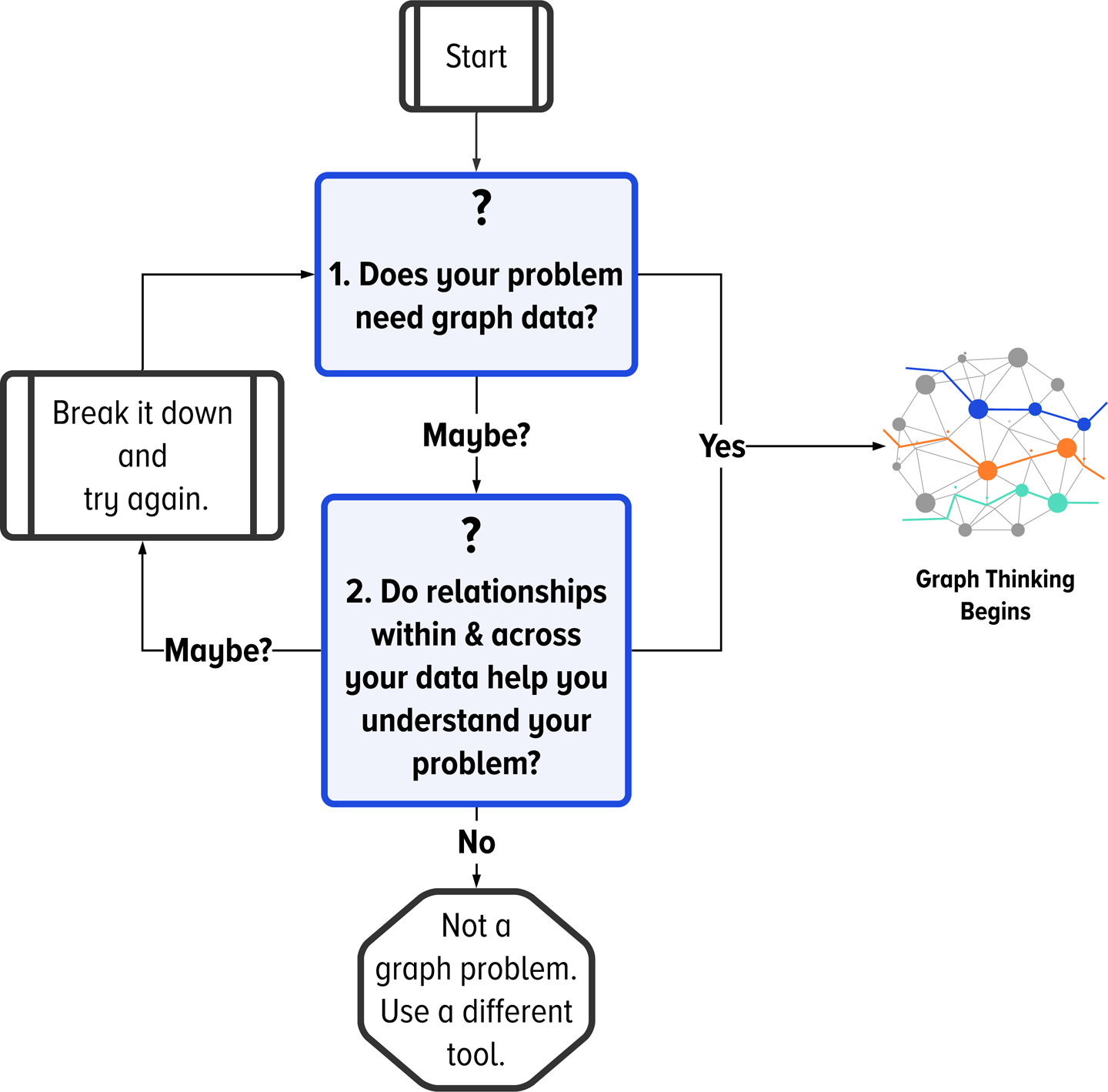

The difficulty with learning and applying graph thinking begins with recognizing where relationships do or do not add value within your data. We use the two images in this section to simplify the stops along the way and illustrate the challenges ahead.

Though simple, Figure 1-3 challenges you to evaluate pivotal questions about your data. This first decision requires your team to know the type of data your application requires. We specifically start with this question because it is often overlooked.

Other teams before yours have overlooked the choices shown in Figure 1-3 because the lure of the new distracted them from following established processes for building production applications. This strain between new and established caused early teams to move too quickly through a critical evaluation of their applicationâs goals. Because of this, we saw many graph projects fail and be shelved.

Let's step through what we mean in Figure 1-3 to keep you from repeating the common mistakes of early adopters of graph technologies.

Figure 1-3. Not every problem is a graph problem: the first decision you need to make

Question 1: Does your problem need graph data?

There are many ways of thinking about data. This first question in the decision tree challenges you to understand the shape of data that your application requires. The mutual connections section on LinkedIn, for instance, is a clear "yes" answer to question 1 in Figure 1-3. LinkedIn uses relationships between contacts so you can navigate your professional network and understand your shared connections. Twitter, Facebook, and other social networking applications also use graph-shaped data to present a section of mutual connections to their end users.
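A minimal Python sketch of the idea behind a mutual connections section, assuming a small hypothetical network stored as a dictionary of connection sets; LinkedIn's actual implementation is not described here. The mutual connections of two people are simply the intersection of their direct connections.

```python
# Hypothetical toy network: each person maps to the set of their direct connections.
connections = {
    "you": {"ana", "ben", "chen"},
    "dana": {"ben", "chen", "eli"},
    "ana": {"you"},
    "ben": {"you", "dana"},
    "chen": {"you", "dana"},
    "eli": {"dana"},
}


def mutual_connections(graph, a, b):
    """Return the people directly connected to both a and b, sorted by name."""
    return sorted(graph.get(a, set()) & graph.get(b, set()))


print(mutual_connections(connections, "you", "dana"))
```

The answer depends entirely on relationships rather than on any attribute of "you" or "dana," which is what makes it graph-shaped.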

When we say "shape of data," we are referring to the structure of the valuable information you want to get out of your data. Do you want to know the name and age of a person? We would describe that as a row of data that would fit into a table. Do you want to know the chapter, section, page, and example in this book that shows you how to add a vertex to a graph? We would describe that as nested data that would fit into a document or hierarchy. Do you want to know the series of friends of your friends that connect you to Elon Musk? Here you are asking for a series of relationships that best fit into a graph.
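The three questions map onto three shapes. The Python sketch below uses hypothetical data throughout: a flat row, a nested document navigated from its root, and a small friendship graph in which a breadth-first search returns the chain of friends linking `you` to a placeholder `target` (standing in for Elon Musk). The names and the `shortest_path` helper are illustrative assumptions.

```python
from collections import deque

# Tabular shape: a flat row with a fixed set of columns (name, age).
person_row = ("Ada", 36)

# Nested shape: a hypothetical document you descend into from its root.
book = {"chapters": [{"number": 1, "sections": [{"title": "Section A", "examples": ["Example A-1"]}]}]}
print(book["chapters"][0]["sections"][0]["examples"][0])

# Graph shape: relationships you traverse; the answer is a path.
friends = {
    "you": ["ana", "ben"],
    "ana": ["you", "cy"],
    "ben": ["you"],
    "cy": ["ana", "target"],
    "target": ["cy"],
}


def shortest_path(graph, start, goal):
    """Breadth-first search returning one shortest chain of friends, or None."""
    previous = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            # Walk back through predecessors to rebuild the path.
            path = []
            while node is not None:
                path.append(node)
                node = previous[node]
            return path[::-1]
        for neighbor in graph.get(node, []):
            if neighbor not in previous:
                previous[neighbor] = node
                queue.append(neighbor)
    return None


print(shortest_path(friends, "you", "target"))
```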

Thinking top-down, we advise that the shape of your data drive the decision about your database and technology options. The types of data commonly used in modern applications are shown in Table 1-1.

| Data Description | Data Shape | Usage | Database Recommendation |
|---|---|---|---|
| Spreadsheets or tables | Relational | Retrieved by a primary key | RDBMS databases |
| Collections of files or documents | Hierarchical or nested | Root identified by an ID | Document databases |
| Relationships or links | Graph | Queried by a pattern | Graph databases |

For the most interesting data problems today, you need to be able to apply all three ways of thinking about your data. You need to be fluent in applying each to your data problem and its subproblems. For each piece of your problem, you need to understand the shape of the data coming into, residing within, and leaving your application. Each of these points, and any time in which data is in flight, drives the requirements for technology choices in your application.

If you are unsure about the shape of data that your problem requires, the next question from Figure 1-3 challenges you to think about the importance of relationships within your data.

Question 2: Do relationships within your data help you understand your problem?

The more pivotal question from Figure 1-3 asks whether relationships within your data exist and bring value to your business problem. A successful use of graph technology hinges on applying this second question from the decision tree. To us, there are only three answers to this question: yes, no, or maybe.

If you can confidently answer yes or no, then the path is clear. For example, LinkedIn's mutual connection section exemplifies a clear "yes" for graph-shaped data, whereas LinkedIn's search box requires faceted search functionality and is a clear "no." We can make these clear distinctions by understanding the shape of data required to solve the business problem.

If relationships within your data help solve your business problem, then you need to use and apply graph technologies within your application. If they do not, then you need to find a different tool. Maybe a choice from Table 1-1 will be a solution for your problem at hand.

The tricky part comes into play when you aren't exactly sure whether relationships are important to your business problem. This is shown with the "Maybe?" choice at left in Figure 1-3. In our experience, if your line of thinking brings you to this decision point, then you are likely trying to solve too large a problem. We advise that you break down your problem and start back at the top of Figure 1-3. The most common problem we advise teams to break down is entity resolution, or knowing who-is-who in your data. Chapter 11 details an example of when to use graph structure within entity resolution.

Common missteps in understanding your data

Sometimes, seeing the shape of your data as a graph can overshadow the importance of the other two data shapes: nested and tabular. Teams commonly fall for this red herring.

While you may think about your problem as a complex problem and therefore employ graph thinking to make sense of it, that does not mean you have to apply graph technologies to all data components of your problem. In fact, it may be advantageous to project certain components or subproblems onto tables or nested documents.

It will always be useful to think in projections (to files or tables). So our thought exercise in Figure 1-3 is more than "Which is the best way to think about your data?" It is about adopting a more agile thought process that breaks down complex problems into smaller components. That is, we encourage you to consider the best way to think about your data for the current problem at hand.

The shortest version of what we are trying to say in Figure 1-3 is: use the right tool for the problem at hand. And when we say "tool" here, we are thinking very broadly. We aren't necessarily using that term to refer to the choice of databases; we are thinking more broadly about the scope of data representation choices.

So You Have Graph Data. What's Next?

The first question from Figure 1-3 challenges you to apply query-driven design to your data representation decisions. There may be parts of your complex problem that are best represented with tables or nested documents. That is expected.

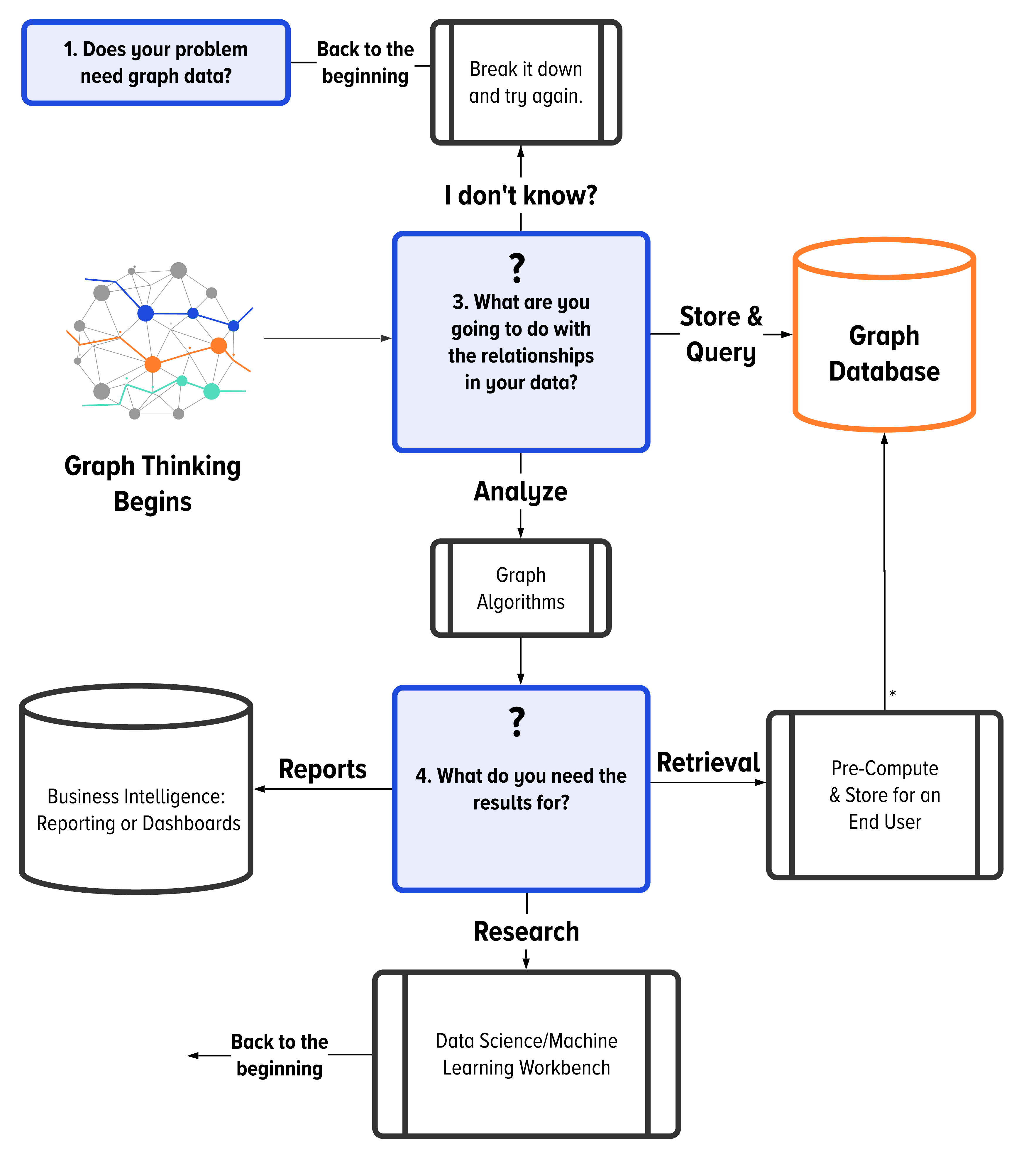

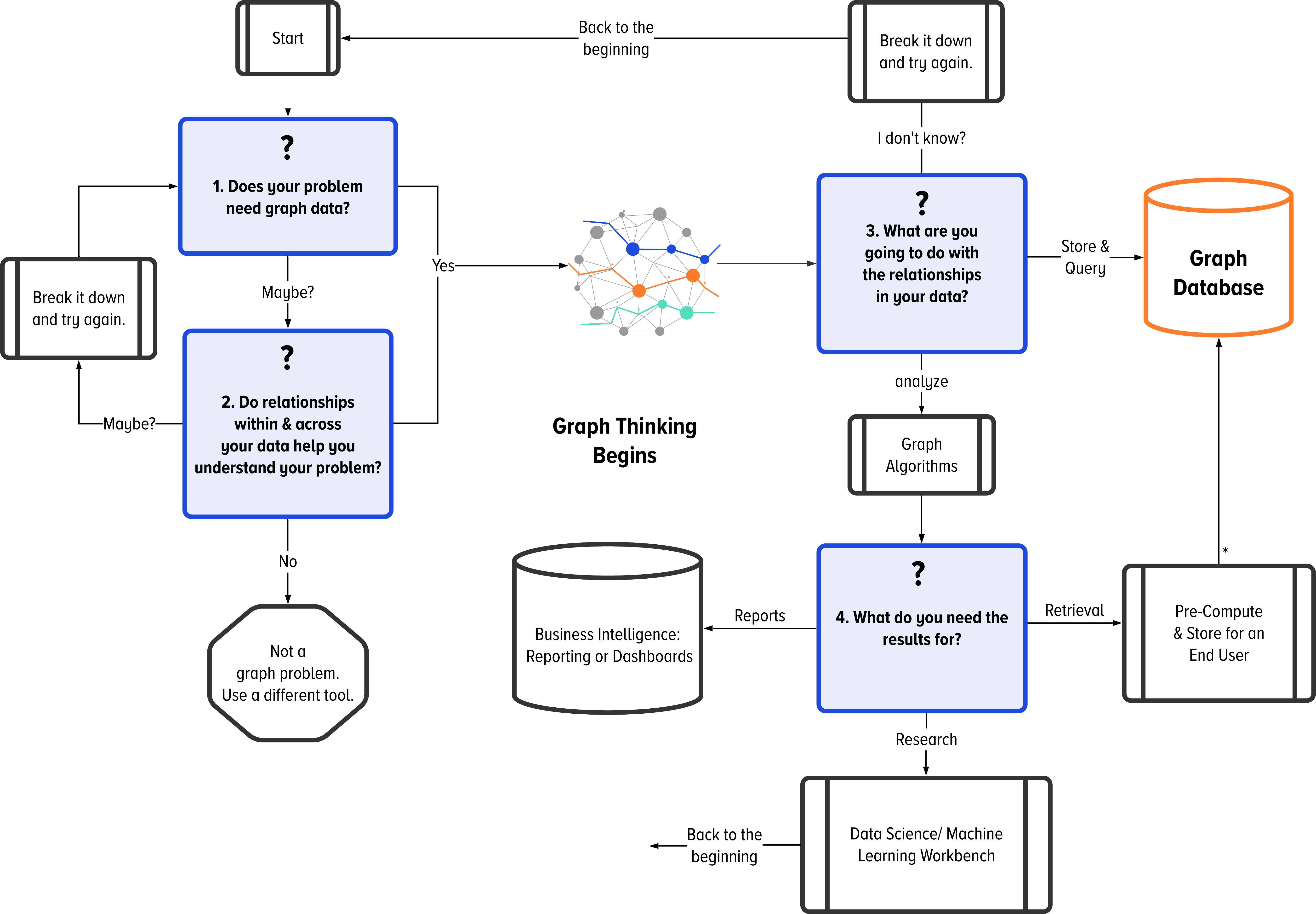

But what happens when you have graph data and need to use it? This brings us to the second part of our graph thinking thought process, shown in Figure 1-4.

Figure 1-4. How to navigate the applicability and usage of graph data within your application

Moving forward, we are assuming that your application benefits from understanding, modeling, and using the relationships within your data.

Question 3: What are you going to do with the relationships in your data?

Within the world of graph technologies, there are two main things you will need to do with your graph data: analyze it or query it. Continuing the LinkedIn example, the mutual connections section is an example of when graph data is queried and loaded into view. LinkedIn's research team probably tracks the average number of connections separating any two people, which is an example of analyzing graph data.
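To illustrate the difference, here is a hedged Python sketch on a small, hypothetical, connected graph. The query asks a targeted question (the common neighbors of two vertices), while the analysis computes a whole-graph statistic: the average number of hops separating every pair of vertices, found with breadth-first search. Neither is a description of LinkedIn's actual systems.

```python
from collections import deque
from itertools import combinations

# Hypothetical undirected graph; assumed connected so every pair has a distance.
graph = {
    "a": ["b", "c"],
    "b": ["a", "d"],
    "c": ["a", "d"],
    "d": ["b", "c", "e"],
    "e": ["d"],
}


def hops_from(graph, source):
    """BFS distances (in hops) from source to every reachable vertex."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for neighbor in graph[node]:
            if neighbor not in dist:
                dist[neighbor] = dist[node] + 1
                queue.append(neighbor)
    return dist


# Query: a targeted question whose relationships you already know.
print(sorted(set(graph["a"]) & set(graph["d"])))  # common neighbors of a and d

# Analysis: a statistic over every pair of vertices in the whole graph.
distances = {v: hops_from(graph, v) for v in graph}
pairs = list(combinations(graph, 2))
average = sum(distances[u][v] for u, v in pairs) / len(pairs)
print(average)
```

The query touches a handful of vertices, while the analysis visits the entire graph once per vertex, which is one reason the two workloads tend to call for different tools.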

The answer to this third question divides graph technology decisions into two camps: data analysis versus data management. The center of Figure 1-4 shows this question and the decision flow for each option.

Note

When we say analyze, we are referring to when you need to examine your data. Usually, teams spend time studying the relationships within their data with the goal of finding which relationships are important. This process is different from querying your graph data. Query refers to when you need to retrieve data from a system. In this case, you know the question you need to ask and the relationships required to answer the question.

Let's start with the option that moves to the right: cases in which you know your end application needs to store and query the relationships within your data. Admittedly, this is the least likely path today, given how young the graph industry still is. But in these cases, you are primed and ready to move directly to using a graph database within your application.

From our collaborations, we have found a common set of use cases in which databases are needed to manage graph data. Those use cases are the topics of the upcoming chapters, and we will save them for later discussion.

Most often, however, teams know that their problems require graph-shaped data, but they do not know exactly how to answer their questions or which relationships are important. This points toward a need to analyze your graph data.

From here, we challenge you and your team to take one more step in this journey. Our request is that you think about the deliverables from analyzing your graph data. Creating structure and purpose around graph analysis helps your team make more informed choices for your infrastructure and tools. This is the final question posed in Figure 1-4.

Question 4: What do you need the results for?

Topics in graph data analysis can range from understanding specific distributions across the relationships to running algorithms across the entire structure. This is the area for algorithms such as connected components, clique detection, triangle counting, calculating a graph's degree distribution, PageRank, reasoners, collaborative filtering, and many, many others. We will define many of these terms in upcoming chapters.
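The following Python sketch shows three of the simpler algorithms from that list on a small, hypothetical undirected graph: the degree distribution, connected components found with breadth-first search, and a triangle count. The graph and the function names are illustrative assumptions. These in-memory versions only show what each algorithm computes across the whole structure.

```python
from collections import Counter, deque

# Hypothetical undirected graph; "x" and "y" form a separate island.
GRAPH: dict[str, set[str]] = {
    "a": {"b", "c"},
    "b": {"a", "c"},
    "c": {"a", "b", "d"},
    "d": {"c"},
    "x": {"y"},
    "y": {"x"},
}


def degree_distribution(graph: dict[str, set[str]]) -> dict[int, int]:
    """Map each degree value to the number of vertices that have that degree."""
    return dict(Counter(len(neighbors) for neighbors in graph.values()))


def connected_components(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group vertices into components using breadth-first search."""
    seen: set[str] = set()
    components: list[list[str]] = []
    for start in sorted(graph):
        if start in seen:
            continue
        seen.add(start)
        queue = deque([start])
        component = []
        while queue:
            vertex = queue.popleft()
            component.append(vertex)
            for neighbor in graph[vertex]:
                if neighbor not in seen:
                    seen.add(neighbor)
                    queue.append(neighbor)
        components.append(sorted(component))
    return components


def triangle_count(graph: dict[str, set[str]]) -> int:
    """Count triangles; each one is found once per corner, hence the division by 3."""
    total = 0
    for neighbors in graph.values():
        for u in neighbors:
            for w in neighbors:
                # Count each neighbor pair once (u < w) and check that they are linked.
                if u < w and w in graph[u]:
                    total += 1
    return total // 3


print(degree_distribution(GRAPH))   # {2: 2, 3: 1, 1: 3}
print(connected_components(GRAPH))  # [['a', 'b', 'c', 'd'], ['x', 'y']]
print(triangle_count(GRAPH))        # 1
```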

We most often see three different end goals for the results of a graph algorithm: reports, research, or retrieval. Let's dig into what we mean by each of those options.

Note

We are going into detail for all three options (reports, research, and retrieval) because this is what most people are doing with graph data today. The remaining technical examples and discussion in this book are focused primarily on when you have decided you need a graph database.

First, let's talk about reporting. Our use of the word reports refers to the traditional need for intelligence and insights into your business's data. This is most commonly referred to as business intelligence (BI). While debatably misapplied, the deliverables of many early graph projects aimed to provide metrics or inputs into an executive's established BI pipeline. The tools and infrastructure you will need for augmenting or creating processes for business intelligence from graph data deserve their own book and deep dive. This book does not focus on the architecture or approaches for BI problems.

Within the realm of data science and machine learning, you find another common use of graph algorithms: general research and development. Businesses invest in research and development to find the value within their graph-shaped data. There are a few books that explore the tools and infrastructure you will need for researching graph-structured data; this book is not one of them.

This brings us to the last path, labeled "retrieval." In Figure 1-4, we are specifically referencing those applications that provide a service to an end user. We are talking about data-driven products that serve your customers. These products come with expectations around latency, availability, personalization, and so on. These applications have different architectural requirements than applications that aim to create metrics for an internal audience. This book will cover these topics and use cases in the coming technology chapters.

Think back to our mention of LinkedIn. If you use LinkedIn, you have likely interacted with one of the best examples we can think of to describe the "retrieval" path in Figure 1-4. There is a feature in LinkedIn that describes how you are connected to any other person in the network. When you look at someone else's professional profile, this feature describes whether that person is a 1st-degree, 2nd-degree, or 3rd-degree connection. The length of the connection between you and anyone else on LinkedIn tells you useful information about your professional network. This LinkedIn feature is an example of a data product that followed the retrieval path of Figure 1-4 to deliver a contextual graph metric to the end users.

The lines between these three paths can be blurry. The difference lies in whether you are building a data-driven product or deriving data insights. Data-driven products deliver unique value to your customers. The next wave of innovation for these products will be to use graph data to deliver more relevant and meaningful experiences. These are the interesting problems and architectures we want to explore throughout this book.

Break it down and try again

Occasionally you may answer the questions throughout Figure 1-3 and Figure 1-4 with "I don't know." That is OK.

Ultimately, you are likely reading this book because your business has data and a complex problem. Such problems are vast and interdependent. At your problem's highest level, navigating the thought process we are presenting throughout Figure 1-3 and Figure 1-4 can seem out of touch with your complex data.

However, drawing on our collective experience helping hundreds of teams around the world, our advice remains that you should break down your problem and cycle through the process again.

Balancing the demands of executive stakeholders, the skills of your developers, and the direction of the industry is extremely difficult. You need to start small. Build a foundation upon known and proven value to get you one step closer to solving your complex problem.

Note

What happens if you ignore making a decision? Too often, we have seen great ideas fail to make the transition from research and development to a production application: the age-old analysis paralysis. The objective of running graph algorithms is to determine how relationships bring value to your data-driven application. You will need to make some difficult decisions about the amount of time and resources you spend in this area.

Seeing the Bigger Picture

The path to understanding the strategic importance of your business's data is synonymous with finding where (and whether) graph technology fits into your application. To help you determine the strategic importance of graph data for your business, we have walked through four very important questions about your application development:

- Does your problem need graph data?
- Do relationships within your data help you understand your problem?
- What are you going to do with the relationships in your data?
- What do you need to do with the results of a graph algorithm?

Bringing these thought processes together, Figure 1-5 combines all four questions into one chart.

Figure 1-5. The decision process that sparked the creation of this book: how to navigate the applicability and usage of graph technology within your application

We spent time walking through the entire decision tree for two reasons. First, the decision tree depicts a complete picture of the thought process we use when we build, advise on, and apply graph technologies. Second, the decision tree illustrates where this book's purpose fits into the space of graph thinking.

That is, this book serves as your guide to navigating graph thinking in the paths throughout Figure 1-5 that end in needing a graph database.

Getting Started on Your Journey with Graph Thinking

When properly leveraged, your business's data can be a strategic asset and an investment that yields a return. Graphs are of particular importance here because network effects are a powerful force that can provide a significant competitive advantage. Additionally, today's design thinking encourages architects to view their business's data as something that needs to be managed with maximal convenience and minimal cost.

This mindset requires a rethinking of how we handle and work with data.

Changing a mindset is a long journey, and any journey begins with one step. Let's take that step together and learn the new set of terms we will be using along the way.

1 T. William Olle, The CODASYL Approach to Data Base Management (Chichester, England: Wiley-Interscience, 1978).

2 Rudolph Bayer and Edward McCreight, "Organization and Maintenance of Large Ordered Indexes," in Software Pioneers, ed. Manfred Broy and Ernst Denert (Berlin: Springer-Verlag, 2002), 245–262.

3 Edgar F. Codd, "A Relational Model of Data for Large Shared Data Banks," Communications of the ACM 13, no. 6 (1970): 377–387.

4 Clair Brown and Greg Linden, Chips and Change: How Crisis Reshapes the Semiconductor Industry (Cambridge: MIT Press, 2011).

5 Geoffrey A. Moore and Regis McKenna, Crossing the Chasm (New York: HarperBusiness, 1999).

6 Everett M. Rogers, Diffusion of Innovations (New York: Simon and Schuster, 2010).
