Chapter 4. Tomcat Performance Tuning

Once you have Tomcat up and running, you will likely want to do some performance tuning so that it serves requests more efficiently on your box. In this chapter, we give you some ideas for performance tuning the underlying Java runtime and the Tomcat server itself.

The art of tuning a server is complex. It consists of measuring, understanding, changing, and measuring again. The basic steps in tuning are:

1. Decide what needs to be measured.
2. Decide how to measure.
3. Measure.
4. Understand the implications of what you learned.
5. Tinker with the configuration in ways that are expected to improve the measurements.
6. Measure and compare with previous measurements.
7. Go back to step 4.

Note that, as shown, there is no “exit from loop” clause. This is perhaps representative of real life, but in practice you will need to set a threshold below which minor changes are insignificant enough that you can get on with the rest of your life. You can stop adjusting and measuring when you believe you’re close enough to the response times that satisfy your requirements.
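The stopping rule can be made concrete with a small check. This minimal Java sketch compares one summary number per test run, such as mean response time, and reports whether the latest change moved it by more than a chosen threshold. The class name TuningThreshold, the 5 percent threshold, and the sample timings are all hypothetical. Pick a threshold that reflects how much run-to-run noise your own measurements show.

```java
// Hypothetical helper: decides whether a tuning change moved a measurement
// (for example, mean response time in ms) by more than a chosen threshold.
public class TuningThreshold {

    /**
     * Returns true if the relative change from 'before' to 'after' exceeds
     * thresholdPercent (5.0 means 5 percent). Counts improvements and regressions alike.
     */
    static boolean isSignificant(double before, double after, double thresholdPercent) {
        if (before == 0.0) {
            return after != 0.0; // any change away from zero counts
        }
        double changePercent = Math.abs(after - before) / Math.abs(before) * 100.0;
        return changePercent > thresholdPercent;
    }

    public static void main(String[] args) {
        // Made-up mean response times (ms) from successive test runs.
        double[] runs = {620.0, 540.0, 531.0, 529.0};
        double thresholdPercent = 5.0;
        for (int i = 1; i < runs.length; i++) {
            boolean significant = isSignificant(runs[i - 1], runs[i], thresholdPercent);
            System.out.printf("run %d: %.1f ms -> %.1f ms, significant=%b%n",
                    i, runs[i - 1], runs[i], significant);
        }
    }
}
```

With these sample numbers, only the first change (620 ms to 540 ms, about 13 percent) counts as significant. The later ones fall under 5 percent, which would be a reasonable point to stop.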

To decide what to tune for better performance, you should do something like the following:

1. Set up your Tomcat as it will be in your production environment. Try to use the same hardware, OS, database, etc. The closer it is to the production environment, the closer you’ll be to finding the bottlenecks that you’ll experience in your production setup.

2. On a separate machine, install and configure the software that you will use for load testing. If you run it on the same machine that Tomcat runs on, you will skew your test results.

3. Isolate the communication between your load-tester machine and the machine you’re running Tomcat on. If you run high-traffic tests, you don’t want to skew those tests by involving network traffic that doesn’t belong in your tests. Also, you don’t want to bring down machines that are uninvolved with your tests due to the heavy traffic. Use a switching hub between your tester machine and your mock production server, or use a hub that has only those two machines connected.

4. Run some load tests that simulate the various types of high-traffic situations you expect your production server to have. Additionally, you should probably run some tests with higher traffic than you expect your production server to have, so that you’ll be better prepared for future expansion.

5. Look for any unusually slow response times, and try to determine which hardware or software components are causing the slowness. Usually it’s software, which is good news because you can alleviate some of the slowness by reconfiguring or rewriting the software. (I suppose you could always hack the Linux kernel of your OS, but that’s well beyond this book’s scope!) In extreme cases, however, you might need more hardware or newer, faster, and more expensive hardware. Watch the load average of your server machine, and watch the Tomcat log files for error messages.

In this chapter, we show you some of the most common things to tune in Tomcat, including web server performance, Tomcat request thread pools, JVM and operating system performance, DNS lookup configuration, and JSP compilation. We then end the chapter with a word on capacity planning.

Measuring Web Server Performance

Measuring web server performance is a daunting task, but we give it some attention here and also point you to more detailed resources. Unlike simpler tasks, such as measuring the speed of a CPU (or even a CPU and programming language combination), web server performance involves far too many variables to do it full justice here. Most measuring strategies involve a “client” program that pretends to be a browser but actually sends a huge number of requests, more or less concurrently, and measures the response times.[1] Are the client and server on the same machine? Is the server machine running anything else at the time of the tests? Are the client and server connected over gigabit Ethernet, 100baseT, 10baseT, or a 56K dialup line? Does the client ask for the same page over and over, or pick randomly from a large list of pages? (This can affect the server’s caching performance.) Does the client send requests regularly or in bursts? Are you running your server in its final configuration, or is there still some debugging enabled that might cause extraneous overhead? Does the “client” request images or just the HTML page? Are some of the URLs exercising servlets and JSPs? CGI programs? Server-Side Includes? We hope you see the point: the list of questions is long and almost impossible to get exactly right.

Load Testing Tools

The point of most web load measuring tools is to request a page a certain (large) number of times and tell you exactly how long it took (or how many times per second the page could be fetched). There are many web load measuring tools; see http://www.softwareqatest.com/qatweb1.html#LOAD for a list. A few measuring tools of note are the Apache Benchmark (ab, included with distributions of the standard Apache web server), Jeff Poskanzer’s http_load from Acme Software (see http://www.acme.com/software/http_load), and JMeter from Apache Jakarta (see http://jakarta.apache.org/jmeter). The first two tools and JMeter are at opposite ends of the spectrum in terms of complexity and functionality.
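The core loop these tools share is small enough to sketch. The following Java program is not how ab, http_load, or JMeter are implemented. It is a minimal illustration, assuming Java 8 or later, that issues a fixed number of GET requests with a bounded number in flight and reports throughput and mean latency. The class name MiniLoad, the default URL, and the 1,000-request/10-concurrent settings are hypothetical.

```java
import java.io.IOException;
import java.io.InputStream;
import java.net.HttpURLConnection;
import java.net.URI;
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;

public class MiniLoad {

    /** Summary of one run: successes, failures, wall-clock time, and mean latency. */
    static final class Result {
        final int completed;
        final int failed;
        final double elapsedSeconds;
        final double meanLatencyMs;

        Result(int completed, int failed, double elapsedSeconds, double meanLatencyMs) {
            this.completed = completed;
            this.failed = failed;
            this.elapsedSeconds = elapsedSeconds;
            this.meanLatencyMs = meanLatencyMs;
        }

        double requestsPerSecond() {
            return elapsedSeconds > 0 ? completed / elapsedSeconds : 0.0;
        }
    }

    /** Calls 'request' 'total' times, with at most 'concurrency' calls in flight at once. */
    static Result run(int total, int concurrency, Callable<Boolean> request)
            throws InterruptedException {
        ExecutorService pool = Executors.newFixedThreadPool(concurrency);
        List<Future<Long>> futures = new ArrayList<>();
        long start = System.nanoTime();
        for (int i = 0; i < total; i++) {
            futures.add(pool.submit(() -> {
                long t0 = System.nanoTime();
                boolean ok = request.call();
                long latency = System.nanoTime() - t0;
                return ok ? latency : -1L; // -1 marks an unsuccessful response
            }));
        }
        int completed = 0;
        int failed = 0;
        long latencySumNanos = 0;
        for (Future<Long> f : futures) {
            try {
                long latency = f.get();
                if (latency >= 0) {
                    completed++;
                    latencySumNanos += latency;
                } else {
                    failed++;
                }
            } catch (ExecutionException e) {
                failed++; // the request threw, e.g. connection refused
            }
        }
        double elapsedSeconds = (System.nanoTime() - start) / 1e9;
        pool.shutdown();
        pool.awaitTermination(5, TimeUnit.SECONDS);
        double meanMs = completed > 0 ? latencySumNanos / 1e6 / completed : 0.0;
        return new Result(completed, failed, elapsedSeconds, meanMs);
    }

    /** One HTTP GET; only a 2xx status counts as success. The body is read and discarded. */
    static boolean fetch(String url) throws IOException {
        HttpURLConnection conn = (HttpURLConnection) URI.create(url).toURL().openConnection();
        conn.setConnectTimeout(5000);
        conn.setReadTimeout(30000);
        int status = conn.getResponseCode();
        try (InputStream body = status < 400 ? conn.getInputStream() : conn.getErrorStream()) {
            byte[] buf = new byte[8192];
            while (body != null && body.read(buf) != -1) {
                // drain the body so the connection can be reused
            }
        }
        return status >= 200 && status < 300;
    }

    public static void main(String[] args) throws InterruptedException {
        String url = args.length > 0 ? args[0] : "http://localhost:8080/";
        Result r = run(1000, 10, () -> fetch(url));
        System.out.printf("%d ok, %d failed in %.2f s: %.1f requests/sec, mean %.1f ms%n",
                r.completed, r.failed, r.elapsedSeconds, r.requestsPerSecond(), r.meanLatencyMs);
    }
}
```

Run it from a separate machine, for example `java MiniLoad http://tomcathost:8080/examples/date/date.jsp`. Keeping the request logic (a Callable) separate from the timing loop lets you test the measurement code without a live server.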

There seems to be only light support for web application authentication in these web performance testing tools. They support sending cookies, but some may not support receiving them. And, whereas Tomcat supports several different authentication methods (basic, digest, form, and client-cert), these tools tend to support only HTTP basic authentication. Closely simulating the production user authentication is an important part of performance testing, because the authentication itself often changes the performance characteristics of a web site. Depending on which authentication method you are using in production, you might need to find different tools from the previously mentioned list (or elsewhere) that support your method.

The ab and http_load programs are command-line utilities that are very simple to use. The ab tool takes a single URL and loads it repeatedly, using a variety of command-line arguments to control the number of times to fetch it, the maximum concurrency, and so on. One of its nice features is the option to print progress reports periodically; another is the comprehensive report it issues. Example 4-1 shows a sample ab run. The times are high because it was run on a slow machine (a Pentium 233). We instructed it to fetch the URL 1,000 times with a maximum concurrency of 127.

ian$ ab -k -n 1000 -c 127 http://tomcathost:8080/examples/date/date.jsp
This is ApacheBench, Version 2.0.36 <$Revision: 1.1 $> apache-2.0
Copyright (c) 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/
Copyright (c) 1998-2002 The Apache Software Foundation, http://www.apache.org/

Benchmarking tomcathost (be patient)
Completed 100 requests
Completed 200 requests
Completed 300 requests
Completed 400 requests
Completed 500 requests
Completed 600 requests
Completed 700 requests
Completed 800 requests
Completed 900 requests
Finished 1000 requests

Server Software:        Apache
Server Hostname:        tomcathost
Server Port:            8080

Document Path:          /examples/date/date.jsp
Document Length:        701 bytes

Concurrency Level:      127
Time taken for tests:   53.162315 seconds
Complete requests:      1000
Failed requests:        0
Write errors:           0
Non-2xx responses:      1000
Keep-Alive requests:    0
Total transferred:      861000 bytes
HTML transferred:       701000 bytes
Requests per second:    18.81 [#/sec] (mean)
Time per request:       6.752 [ms] (mean)
Time per request:       0.053 [ms] (mean, across all concurrent requests)
Transfer rate:          15.80 [Kbytes/sec] received

Connection Times (ms)
              min  mean[+/-sd] median   max
Connect:        0    51  387.5      0    2999
Processing:    63  6228 2058.4   6208   12072
Waiting:       17  4236 1855.2   3283    9193
Total:         64  6280 2065.0   6285   12072

Percentage of the requests served within a certain time (ms)
  50%   6285
  66%   6397
  75%   6580
  80%   9076
  90%   9080
  95%   9089
  98%   9265
  99%  12071
 100%  12072 (longest request)
ian$

To use http_load, give it a file containing one or more URLs to be selected randomly, and tell it how many times per second to try fetching and the total number of fetches you want (or the total number of seconds to run for). For example:

http_load -rate 50 -seconds 20 urls

This command tells http_load to fetch a maximum of 50 pages per second for a span of 20 seconds, and to pick the URLs from the list contained in the file named urls in the current directory.

While it’s running, http_load reports errors as soon as they occur, such as a file having a different size than it had previously or, optionally, a different checksum than it had before (checksumming requires more processing, so it’s not the default). Keep in mind that any URI serving dynamic content may legitimately vary in size and checksum from one fetch to the next. At the end, it prints a short report like this:

996 fetches, 32 max parallel, 704172 bytes, in 20.0027 seconds
707 mean bytes/connection
49.7934 fetches/sec, 35203.9 bytes/sec
msecs/connect: 7.41594 mean, 5992.07 max, 0.134 min
msecs/first-response: 34.3104 mean, 665.955 max, 4.106 min

From this information, the number of fetches per second (almost exactly what was requested) indicates the server is adequate for that many hits per second. The variance in connection time is more interesting: on that particular machine, at this load level, the server occasionally took nearly six seconds (5,992 ms) to accept a connection, even though the mean was under 8 ms. This is not an exhaustive test, just a quick series of requests to show the usage of this program.

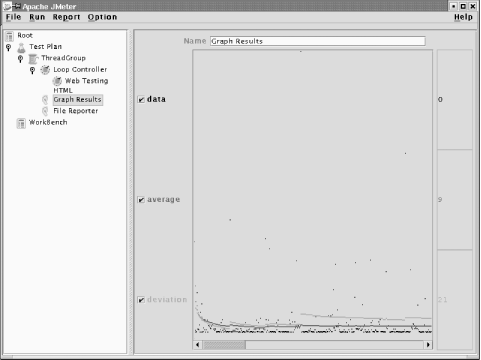

The JMeter program, on the other hand, is quite a bit more complex to run. At a minimum, you must go through a GUI to create a “test plan” that tells it what you want to test. However, it more than makes up for this in its graphical output. With a bit of work, you can make it draw very clear response-time graphs, such as the one shown in Figure 4-1.

External Tuning

Once you have an idea of how your application and Tomcat instance respond to load, you can begin some performance tuning. There are two basic categories of tuning detailed here:

- External tuning

Tuning that involves non-Tomcat components, such as the operating system that Tomcat runs on and the Java virtual machine running Tomcat.

- Internal tuning

Tuning that deals with Tomcat itself, ranging from changing settings in configuration files to modifying the Tomcat source code itself. Modifications to your application would also fall into this category.

In this section, we detail the most common areas of external tuning, and then move on to internal tuning in the next section.

JVM Performance

Tomcat doesn’t run directly on a computer; there is a JVM and an operating system between it and the underlying hardware. There are relatively few full-blown Java virtual machines to choose from for any given operating system, so most people will probably stick with Sun’s or their own system vendor’s implementation. One thing you can do is ensure that you have the latest release, since Sun and most vendors do work on improving performance between releases. Some reports have shown a 10 to 20 percent increase in performance with Tomcat running on JDK 1.4 over JDK 1.3. See Appendix A for information about which JDKs may be available for your operating system.
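Before comparing releases, it helps to confirm which JVM Tomcat is really using. This is a minimal sketch, assuming you run it with the same java executable your Tomcat startup scripts pick up (typically the one under JAVA_HOME). The class name JvmInfo is hypothetical. It prints standard system properties plus the maximum heap size, which becomes relevant when you raise thread counts later on.

```java
public class JvmInfo {

    /** Returns one "key = value" line per JVM/OS property, plus heap and CPU figures. */
    static String describe() {
        String[] keys = {"java.version", "java.vendor", "java.vm.name", "java.vm.version",
                "os.name", "os.arch"};
        StringBuilder sb = new StringBuilder();
        for (String key : keys) {
            sb.append(key).append(" = ").append(System.getProperty(key)).append('\n');
        }
        Runtime rt = Runtime.getRuntime();
        // maxMemory() reflects -Xmx (or the JVM's default) for this process
        sb.append("max heap (MB) = ").append(rt.maxMemory() / (1024L * 1024L)).append('\n');
        sb.append("available processors = ").append(rt.availableProcessors()).append('\n');
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.print(describe());
    }
}
```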

Operating System Performance

And what about the OS? Is your server operating system optimal for running a large, high-volume web server? Of course, different operating systems have very different design goals. OpenBSD, for example, is aimed at security, so many of the limits in the kernel are set low to prevent various forms of denial-of-service attacks (one of OpenBSD’s mottoes is “Secure by default”). These limits will most likely need to be increased to run a busy web server.

Linux, on the other hand, aims to be easy to use, so it comes with the limits set higher. The BSD kernels come out of the box with a “Generic” kernel; that is, most of the drivers are statically linked in. This makes it easier to get started, but if you’re building a custom kernel to raise some of those limits, you might as well rip out unneeded device drivers. Linux kernels, by contrast, load most of their drivers dynamically. Memory itself keeps getting cheaper, though, so the reasoning that led to loadable device drivers matters less than it used to. What is important is to have lots and lots of memory, and to make lots of it available to the server.

Tip

Memory is cheap these days, but don’t buy cheap memory—brand name memory costs only a little more and will repay the cost in reliability.

If you run any variant of Microsoft Windows, be sure you have the Server version (e.g., Windows 2000 Server instead of just Windows 2000). In non-Server versions, the end-user license agreement or the operating system’s code itself might restrict the number of users, limit the number of network connections that you can use, or place other restrictions on what you can run. Additionally, be sure to obtain the latest Microsoft service packs frequently, for the obvious security reasons (this is true for any system but is particularly important for Windows).

Internal Tuning

This section details a specific set of techniques that will help your Tomcat instance run faster, regardless of the operating system or JVM you are using. In many cases, you may not have control of the OS or JVM on the machine to which you are deploying. In those situations, you should still make recommendations in line with what was detailed in the last section; however, you should be able to effect changes in Tomcat itself. We think that the following areas are the best places to start when internally tuning Tomcat.

Disabling DNS Lookups

When a web application wants to log information about the client, it can either log the client’s numeric IP address or look up the actual host name in the Domain Name System (DNS) data. DNS lookups require network traffic, involving a round-trip response from multiple servers that are possibly far away and possibly inoperative, which results in delays. To avoid these delays, you can turn off DNS lookups. Then, whenever a web application calls the getRemoteHost() method in the HTTP request object, it will get only the numeric IP address. This is set in the Connector element for your application, in Tomcat’s server.xml file. For the common “Coyote” (HTTP 1.1) connector, use the enableLookups attribute. Just find this part of the server.xml file:

<!--
  Define a non-SSL Coyote HTTP/1.1 Connector on port 8080
-->
<Connector
    className="org.apache.coyote.tomcat4.CoyoteConnector"
    port="8080" minProcessors="5" maxProcessors="75"
    enableLookups="true" redirectPort="8443"
    acceptCount="10" debug="0" connectionTimeout="20000"
    useURIValidationHack="false"
/>

If you are using Tomcat 4.0 instead of 4.1, the Connector’s className attribute will have the value org.apache.catalina.connector.http.HttpConnector. In either case, change the enableLookups value from "true" to "false", and restart Tomcat. No more DNS lookups and their resulting delays!

Unless you need the fully qualified hostname of every HTTP client that connects to your site, we recommend turning off DNS lookups on production sites. Remember that you can always look up the names later, outside of Tomcat. Not only does turning them off save network bandwidth, lookup time, and memory but, in sites where quite a bit of traffic generates quite a bit of log data, it can save a noticeable amount of disk space as well. For low-traffic sites, turning off DNS lookups might not have as dramatic an effect, but it is still not a bad practice. How often have low-traffic sites become high-traffic sites overnight?
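If you later want host names for reporting, you can resolve them offline. This Java sketch reads access-log lines on standard input and assumes the client address is the first field, as in the common log format. It reverse-resolves each distinct address once through InetAddress and prints the line with the name substituted. The class name OfflineResolver is hypothetical, and failed lookups simply leave the numeric address in place.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.net.InetAddress;
import java.net.UnknownHostException;
import java.nio.charset.StandardCharsets;
import java.util.HashMap;
import java.util.Map;

public class OfflineResolver {

    // Each distinct address is looked up only once per run.
    private final Map<String, String> cache = new HashMap<>();

    /** First whitespace-separated field of a log line: the client address in common log format. */
    static String firstField(String line) {
        String trimmed = line.trim();
        int space = trimmed.indexOf(' ');
        return space < 0 ? trimmed : trimmed.substring(0, space);
    }

    /** Reverse-resolves an address, falling back to the address itself when lookup fails. */
    String resolve(String address) {
        return cache.computeIfAbsent(address, a -> {
            try {
                // For a literal IP, getByName only checks the format; getHostName does the reverse lookup.
                return InetAddress.getByName(a).getHostName();
            } catch (UnknownHostException e) {
                return a;
            }
        });
    }

    public static void main(String[] args) throws IOException {
        OfflineResolver resolver = new OfflineResolver();
        BufferedReader in = new BufferedReader(new InputStreamReader(System.in, StandardCharsets.UTF_8));
        String line;
        while ((line = in.readLine()) != null) {
            String trimmed = line.trim();
            if (trimmed.isEmpty()) {
                continue;
            }
            String address = firstField(trimmed);
            // Replace only the leading address; keep the rest of the log line unchanged.
            System.out.println(resolver.resolve(address) + trimmed.substring(address.length()));
        }
    }
}
```

For example, `java OfflineResolver < access_log.txt > access_log_named.txt` (file names hypothetical). Caching matters because busy logs repeat the same addresses many times. Each uncached reverse lookup is exactly the kind of network round trip you just removed from Tomcat.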

Adjusting the Number of Threads

Another performance control on your application’s Connector is the number of processors it uses. Tomcat uses a thread pool to provide rapid response to incoming requests. A thread in Java (as in other programming languages) is a separate flow of control, with its own interactions with the operating system and its own local memory, but with some memory shared among all threads in the process. This allows developers to provide fine-grained organization of code that will respond well to many incoming requests.

You can control the number of threads that are allocated by changing a Connector’s minProcessors and maxProcessors values. The values shown earlier (5 and 75) are adequate for typical installations but may need to be increased as your site gets larger. The minProcessors value should be high enough to handle a minimal load. That is, if at a slow time of day you get five hits per second and each request takes under a second to process, the five preallocated threads are all you will need. Later in the day, as your site gets busier, more threads will need to be allocated, up to the number specified in the maxProcessors attribute. There must be an upper limit to prevent spikes in traffic (or a denial-of-service attack from a malicious user) from crashing your server by making it exceed the maximum memory limit of the JVM.
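The minimum/maximum idea can be illustrated with the standard java.util.concurrent.ThreadPoolExecutor (Java 5 or later). This is an analogy, not Tomcat 4’s own thread pool code, and its growth policy differs: ThreadPoolExecutor fills its queue before adding threads beyond the core size. Here 5 and 75 mirror minProcessors and maxProcessors, and a bounded queue of 10 loosely stands in for acceptCount. The class and method names are hypothetical. The simulation keeps every thread busy, as a burst of slow requests would. It shows that the ceiling causes excess work to be rejected rather than letting threads (and memory use) grow without bound.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

public class BoundedPoolDemo {

    /** Keeps up to minThreads idle threads, never exceeds maxThreads, queues at most queueCapacity tasks. */
    static ThreadPoolExecutor newPool(int minThreads, int maxThreads, int queueCapacity) {
        return new ThreadPoolExecutor(minThreads, maxThreads, 60L, TimeUnit.SECONDS,
                new ArrayBlockingQueue<Runnable>(queueCapacity),
                new ThreadPoolExecutor.AbortPolicy()); // reject once threads and queue are full
    }

    /** Submits 'attempts' tasks that block until released; returns {accepted, rejected, threads}. */
    static int[] simulateSpike(int minThreads, int maxThreads, int queueCapacity, int attempts)
            throws InterruptedException {
        ThreadPoolExecutor pool = newPool(minThreads, maxThreads, queueCapacity);
        CountDownLatch release = new CountDownLatch(1);
        int accepted = 0;
        int rejected = 0;
        for (int i = 0; i < attempts; i++) {
            try {
                pool.execute(() -> {
                    try {
                        release.await(); // hold the thread, as a slow request would
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
                accepted++;
            } catch (RejectedExecutionException e) {
                rejected++; // the spike went past the configured ceiling
            }
        }
        int threads = pool.getPoolSize();
        release.countDown();
        pool.shutdown();
        pool.awaitTermination(10, TimeUnit.SECONDS);
        return new int[] {accepted, rejected, threads};
    }

    public static void main(String[] args) throws InterruptedException {
        int[] r = simulateSpike(5, 75, 10, 100);
        System.out.println("accepted=" + r[0] + " rejected=" + r[1] + " threads=" + r[2]);
    }
}
```

With these values, 85 tasks are accepted (75 running threads plus 10 queued) and the remaining 15 are rejected.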

The best way to set these to optimal values is to try many different settings for each, and then test them with simulated traffic loads while watching response times and memory utilization. Every machine, operating system, and JVM combination may act differently, and not everyone’s web site traffic volume is the same, so there is no cut-and-dried rule for how to determine minimum and maximum threads.
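Testing remains the real answer, but Little’s law can give you a rough starting point. This is a general queueing result, offered here as our suggestion rather than anything Tomcat-specific: the average number of requests in progress equals the arrival rate multiplied by the average time each request takes. The five-hits-per-second example above works out to five threads. The class name, the 40 requests/second peak, and the 1.5 headroom factor are hypothetical.

```java
// Little's law: average requests in progress = arrival rate x average time per request.
public class ThreadEstimate {

    /** Average number of busy threads, rounded up. */
    static int busyThreads(double requestsPerSecond, double secondsPerRequest) {
        return (int) Math.ceil(requestsPerSecond * secondsPerRequest);
    }

    /** Adds room for bursts; a headroomFactor of 1.5 means 50 percent extra. */
    static int withHeadroom(int busyThreads, double headroomFactor) {
        return (int) Math.ceil(busyThreads * headroomFactor);
    }

    public static void main(String[] args) {
        int quiet = busyThreads(5, 1.0);  // slow time of day: 5 req/s, up to 1 s each
        int peak = busyThreads(40, 0.25); // hypothetical peak: 40 req/s at 250 ms each
        System.out.println("starting point for the minimum: " + quiet);
        System.out.println("starting point for the maximum: " + withHeadroom(peak, 1.5));
    }
}
```

Treat both numbers only as initial values for the load tests described above, since real requests vary in duration and arrive in bursts.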

Speeding Up JSP Compilation

When a JSP is first accessed, it is converted into Java servlet source code, which must then be compiled into Java byte code. You have control over which compiler is used; by default, Tomcat uses the same compiler that the command-line javac process uses. There are faster compilers available that can be used, however, and this section tells you how to take advantage of them.

Tip

Another option is to not use JSPs altogether and instead take advantage of some of the various Java templating engines available today. While this is obviously a larger scale decision, many have found that it is at least worth investigating. For detailed information about other templating languages that you can use with Tomcat, see Jason Hunter and William Crawford’s Java Servlet Programming (O’Reilly).

Changing the JSP compiler under Tomcat 4.0

In Tomcat 4.0, you can use the popular free-software Jikes compiler for the compilation of JSPs into servlets. Jikes is faster than Sun’s Java compiler. You must first install Jikes (see http://oss.software.ibm.com/pub/jikes for information). You might need to set the JIKESPATH environment variable to include your system’s runtime JAR; see the documentation accompanying Jikes for detailed instructions.

Once Jikes is set up, you need only tell the JSP compiler servlet to use Jikes, which is done by setting its jspCompilerPlugin initialization parameter. The following entry in your application’s web.xml should suffice:

<servlet>
    <servlet-name>jsp</servlet-name>
    <servlet-class>org.apache.jasper.servlet.JspServlet</servlet-class>
    <init-param>
        <param-name>logVerbosityLevel</param-name>
        <param-value>WARNING</param-value>
    </init-param>
    <init-param>
        <param-name>jspCompilerPlugin</param-name>
        <param-value>org.apache.jasper.compiler.JikesJavaCompiler</param-value>
    </init-param>
    <init-param>
        <!--
        <param-name>
            org.apache.catalina.jsp_classpath
        </param-name>
        -->
        <param-name>classpath</param-name>
        <!-- keep the whole classpath on one line so no stray whitespace ends up in it -->
        <param-value>/usr/local/jdk1.3.1-linux/jre/lib/rt.jar:/usr/local/lib/java/servletapi/servlet.jar</param-value>
    </init-param>
    <load-on-startup>3</load-on-startup>
</servlet>

Changing the JSP compiler under Tomcat 4.1

In 4.1, compilation of JSPs is performed by driving the Ant build tool directly from within Tomcat. This sounds a bit strange, but it’s part of what Ant was intended for: there is a documented API that lets developers use Ant without starting up a new JVM. This is one advantage of having Ant written in Java. Plus, it means you can now use any compiler supported by the javac task within Ant; these are listed in Table 4-1. It is easier to use than the 4.0 method described previously because you need only an init-param with a name of “compiler” and a value of one of the supported compiler names:

<servlet>
    <servlet-name>jsp</servlet-name>
    <servlet-class>org.apache.jasper.servlet.JspServlet</servlet-class>
    <init-param>
        <param-name>logVerbosityLevel</param-name>
        <param-value>WARNING</param-value>
    </init-param>
    <init-param>
        <param-name>compiler</param-name>
        <param-value>jikes</param-value>
    </init-param>
    <load-on-startup>3</load-on-startup>
</servlet>

Of course, the given compiler must be installed on your system, and the CLASSPATH might need to be set.

Warning

If you are using Jikes, be sure you have Version 1.16 or higher and that it was compiled with support for the optional -encoding command-line argument. Earlier versions of Jikes did not support this argument,[2] and Jasper (Tomcat’s JSP compiler) assumes it can pass it to any command-line-based compiler.

Table 4-1. Compilers supported by Ant’s javac task

| Name | Additional aliases | Compiler invoked |
|---|---|---|
| classic | javac1.1, javac1.2 | Standard JDK 1.1/1.2 compiler |
| modern | javac1.3, javac1.4 | Standard JDK 1.3/1.4 compiler |
| jikes | | The Jikes compiler |
| jvc | Microsoft | Microsoft command-line compiler from the Microsoft SDK for Java/Visual J++ |
| kjc | | The kopi compiler |
| gcj | | The gcj compiler (included as part of gcc) |
| sj | Symantec | Symantec’s Java compiler |
| extJavac | | Runs either the modern or classic compiler in a JVM of its own |

Precompiling JSPs

Since a JSP is compiled the first time it’s accessed, you may wish to perform precompilation after installing an updated JSP instead of waiting for the first user to visit it. In fact, this should be an automatic step in deployment because it ensures that the new JSP works as well on your production server as it did on your test machine.

There is a script file called jspc in the Tomcat bin/ directory that looks as though it might be used to precompile JSPs, but it does not. It does run the translation phase, but not the compilation phase, and it generates the resulting Java source file in the current directory, not in the work directory for the web application. It is primarily for the benefit of people debugging JSPs.

The best way to ensure precompilation, then, is to simply access the JSP through a web browser. This will ensure the file is translated to a servlet, compiled, and then run. It also has the advantage of exactly simulating how a user would access the JSP, allowing you to see what they would. You can catch any last-minute errors, correct them, and then repeat the process.

Tomcat’s JSP implementation also supports the precompilation protocol defined in the JSP specification, which lets any HTTP client make a compile-only request to any JSP. For example, if you make a request to http://localhost:8080/examples/jsp/dates/date.jsp?jsp_precompile=true, Tomcat precompiles the date.jsp page but does not actually serve it. This is handy for automating the precompilation of your JSP files after they’re deployed but before they’re accessed.
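A deployment script can issue these requests for every JSP it installs. The Java sketch below assumes that a successful precompile request returns HTTP 200, while a JSP that fails to compile typically produces an error status such as 500. The class name, base URL, and JSP list are hypothetical. In practice you would generate the list from your web application’s directory.

```java
import java.io.IOException;
import java.io.InputStream;
import java.net.HttpURLConnection;
import java.net.URI;
import java.util.Arrays;
import java.util.List;

public class PrecompileJsps {

    /** Builds a compile-only URL by appending jsp_precompile=true, keeping any existing query. */
    static String precompileUrl(String baseUrl, String jspPath) {
        String url = baseUrl + jspPath;
        return url + (url.contains("?") ? "&" : "?") + "jsp_precompile=true";
    }

    /** Sends one precompile request and returns the HTTP status code. */
    static int precompile(String url) throws IOException {
        HttpURLConnection conn = (HttpURLConnection) URI.create(url).toURL().openConnection();
        conn.setConnectTimeout(5000);
        conn.setReadTimeout(120000); // a first compilation can take a while
        int status = conn.getResponseCode();
        try (InputStream body = status < 400 ? conn.getInputStream() : conn.getErrorStream()) {
            byte[] buf = new byte[4096];
            while (body != null && body.read(buf) != -1) {
                // discard any response body
            }
        }
        return status;
    }

    public static void main(String[] args) throws IOException {
        String base = "http://localhost:8080";                             // hypothetical server
        List<String> jsps = Arrays.asList("/examples/jsp/dates/date.jsp"); // hypothetical list
        boolean allOk = true;
        for (String jsp : jsps) {
            String url = precompileUrl(base, jsp);
            int status = precompile(url);
            System.out.println(status + " " + url);
            if (status != 200) {
                allOk = false; // e.g. a 500 when the JSP fails to compile
            }
        }
        System.exit(allOk ? 0 : 1); // a non-zero exit lets a deploy script stop on failure
    }
}
```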

Capacity Planning

Capacity planning is another important part of tuning the performance of your Tomcat server in production. Regardless of how much configuration file tuning and testing you do, it won’t really help if you don’t have the hardware and bandwidth your site needs to serve the volume of traffic that you are expecting.

Here’s a loose definition as it fits into the context of this section: capacity planning is the activity of estimating the computer hardware, operating system, and bandwidth necessary for a web site by studying and/or estimating the total network traffic a site will have to handle; deciding on acceptable service characteristics; and finding the appropriate hardware and operating system that meet or exceed the server software’s requirements in order to meet the service requirements. In this case, the server software includes Tomcat, as well as any third-party web servers you are using in front of Tomcat.

If you don’t do any capacity planning before you buy and deploy your production servers, you won’t know whether the server hardware can handle your web site’s traffic load. Or, even worse, you won’t realize the error until you’ve already ordered, paid for, and deployed applications on the hardware—usually too late to change direction very much. You can usually add a larger hard drive, or even order more server computers, but sometimes it’s less expensive overall to buy and maintain fewer server computers in the first place.

The larger the amount of traffic to your web site or the larger the load that is generated per client request, the more important capacity planning becomes. Some sites get so much traffic that only a cluster of server computers can handle it all within reasonable response-time limits. Conversely, sites with less traffic have less of a problem finding hardware that meets all their requirements. It’s true that throwing more or bigger hardware at the problem usually fixes things but, especially in the high-traffic cases, that may be prohibitively costly. For most companies, the lower the hardware costs are (including ongoing maintenance costs after the initial purchase), the higher profits can be. Another factor to consider is employee productivity. If having faster hardware would make the developers 20% more effective in getting their work done quickly, for example, it may be worth the hardware cost difference to order bigger/faster hardware up front, depending on the size of the team.

Capacity planning is usually done at upgrade points as well. Before ordering replacement hardware for existing mission-critical server computers, it’s probably a good idea to gather information about what your company needs, based on updated requirements, software footprints, etc.

There are at least a couple of common methods for arriving at decisions when doing capacity planning. In practice, we’ve seen two main types: anecdotal approaches and academic approaches, such as enterprise capacity planning.

Anecdotal Capacity Planning

Anecdotal capacity planning is a sort of light capacity planning that isn’t meant to be exact, but close enough to keep a company out of situations that might result from doing no capacity planning at all. This method of capacity planning follows capacity and performance trends obtained from previous industry experience. For example, you could make your best educated guess at how much outgoing network traffic your site will have at its peak usage (hopefully from some other real-world site) and double that figure. That figure becomes the site’s new outgoing bandwidth requirement. Then you would buy and deploy hardware that can handle that bandwidth requirement. Most people will do capacity planning this way because it’s quick and requires little effort or time.
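The anecdotal estimate is simple arithmetic. As a worked example with hypothetical inputs, this Java sketch takes a guessed peak request rate and an average response size, converts to bits, and doubles the result. The response size used is 707 bytes, the mean bytes per connection from the earlier http_load report. The sketch adds no allowance for protocol overhead or embedded images, so treat the output as a floor rather than a precise figure.

```java
public class BandwidthEstimate {

    /** Bits per second needed for peakRequestsPerSecond responses of avgResponseBytes, times a safety factor. */
    static double requiredBitsPerSecond(double peakRequestsPerSecond, double avgResponseBytes,
                                        double safetyFactor) {
        return peakRequestsPerSecond * avgResponseBytes * 8.0 * safetyFactor;
    }

    public static void main(String[] args) {
        double peakRequestsPerSecond = 50; // educated guess at peak usage (hypothetical)
        double avgResponseBytes = 707;     // mean bytes/connection from an http_load run
        double bps = requiredBitsPerSecond(peakRequestsPerSecond, avgResponseBytes, 2.0); // "double that figure"
        System.out.printf("outgoing bandwidth requirement: %.1f kbit/s%n", bps / 1000.0);
    }
}
```

With these inputs the requirement comes out to 565.6 kbit/s (50 × 707 bytes × 8 bits × 2).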

Enterprise Capacity Planning

Enterprise capacity planning is meant to be more exact, and it takes much longer. This method of capacity planning is necessary for sites with a very high volume of traffic, often combined with a high load per request. Detailed capacity planning like this is necessary to keep hardware and bandwidth costs as low as possible, while still providing the quality of service that the company guarantees or is contractually obligated to live up to. Usually, this involves the use of commercial capacity planning analysis software in addition to iterative testing and modeling. Few companies do this kind of capacity planning; those that do tend to be very large enterprises with budgets large enough to afford it (mainly because this sort of thorough planning ends up paying for itself).

The biggest difference between anecdotal and enterprise capacity planning is depth. Anecdotal capacity planning is governed by rules of thumb and is more of an educated guess, whereas enterprise capacity planning is an in-depth study of requirements and performance whose goal is to arrive at numbers that are as exact as possible.

Capacity Planning on Tomcat

To capacity plan for server machines that run Tomcat, you could study and plan for any of the following items (this isn’t meant to be a comprehensive list, but instead some common items):

- Server computer hardware

Which computer architecture(s)? How many computers will your site need? One big one? Many smaller ones? How many CPUs per computer? How much RAM? How much hard drive space and what speed I/O? What will the ongoing maintenance be like? How does switching to different JVM implementations affect the hardware requirements?

- Network bandwidth

How much incoming and outgoing bandwidth will be needed at peak times? How might the web application be modified to lower these requirements?

- Server operating system

Which operating system works best for the job of serving the site? Which JVM implementations are available for each operating system, and how well does each one take advantage of the operating system? For example, does the JVM support native multithreading? Symmetric multiprocessing? If SMP is supported by the JVM, should you consider multiprocessor server computer hardware? Which serves your web application faster, more reliably, and less expensively: multiple single-processor server computers or a single four-CPU server computer?

Here’s a general procedure for all types of capacity planning that is particularly applicable to Tomcat:

1. Characterize the workload. If your site is already up and running, you could measure the requests per second, summarize the different kinds of possible requests, and measure the resource utilization per request type. If your site isn’t running yet, you can make some educated guesses at the request volume, and you can run staging tests to determine the resource requirements.

2. Analyze performance trends. You need to know which requests generate the most load and how other requests compare. By knowing which requests generate the most load or use the most resources, you’ll know what to optimize in order to have the best overall positive impact on your server computers. For example, if a servlet that queries a database takes too long to send its response, maybe caching some of the data in RAM would safely improve response time.

3. Decide on minimum acceptable service requirements. For example, you may want the end user to never wait longer than 20 seconds for a web page response. That means that, even during peak load, no request’s total time (from the initial request to the completion of the response) can be longer than 20 seconds. That may include any and all database queries and filesystem access needed to complete the heaviest resource-intensive request in your application. Each company sets its own minimum acceptable service requirements. Other kinds of service minimums include the number of requests per second the site must be able to serve, and the minimum number of concurrent sessions and users.

4. Decide what infrastructure resources you will use, and test them in a staging environment. Infrastructure resources include computer hardware, bandwidth circuits, operating system software, etc. Order, deploy, and test at least one server machine that mirrors what you’ll have for production, and see if it meets your requirements. While testing Tomcat, make sure you try more than one JVM implementation, and try different memory size settings and request thread pool sizes (discussed earlier in this chapter).

5. If step 4 meets your service requirements, you can order and deploy more of the same thing to use as your production server computers. Otherwise, repeat step 4 until the service requirements are met.
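Checking test results against a service requirement is easy to automate. This minimal Java sketch uses made-up response times for one request type. It reports a nearest-rank percentile and whether any response exceeded a 20-second maximum like the one in the example above. The class and method names are hypothetical. Nearest-rank is only one of several common percentile definitions.

```java
import java.util.Arrays;

public class ServiceCheck {

    /** Nearest-rank percentile (1 <= p <= 100) of response times in milliseconds. */
    static long percentile(long[] millis, int p) {
        if (millis.length == 0 || p < 1 || p > 100) {
            throw new IllegalArgumentException("need data and 1 <= p <= 100");
        }
        long[] sorted = millis.clone();
        Arrays.sort(sorted);
        int rank = (int) Math.ceil(p / 100.0 * sorted.length); // 1-based rank
        return sorted[rank - 1];
    }

    /** True if no response took longer than limitMillis (a "never wait longer than" rule). */
    static boolean meetsMaximum(long[] millis, long limitMillis) {
        for (long m : millis) {
            if (m > limitMillis) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        // Made-up total response times (ms) for one request type during a staging test.
        long[] samples = {820, 950, 1100, 1240, 1900, 2300, 4100, 6200, 9800, 21000};
        long limit = 20_000; // a 20-second maximum
        System.out.println("median: " + percentile(samples, 50) + " ms");
        System.out.println("90th percentile: " + percentile(samples, 90) + " ms");
        System.out.println("meets 20 s maximum: " + meetsMaximum(samples, limit));
    }
}
```

Here a single 21-second response fails the requirement even though most requests finish in a couple of seconds. That is why a hard maximum is usually checked alongside percentiles.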

Be sure to document your work, since this tends to be a time-consuming process that must be repeated if someone needs to know how your company arrived at the answers. Also, since the testing is an iterative process, it’s important to document all of the test results on each iteration, as well as the configuration settings that produced them, so you know when your tuning is no longer yielding noticeable positive results.

Once you’ve finished with your capacity planning, your site will be much better tuned for performance, mainly due to the rigorous testing of a variety of options. You should gain a noticeable amount of performance just by having the right hardware, operating system, and JVM combination for your particular use of Tomcat.

Additional Resources

Clearly, one chapter is hardly enough when it comes to detailing performance tuning. You would do well to perform some additional research, investigating tuning of Java applications, tuning operating systems, how capacity planning works across multiple servers and applications, and anything else that is relevant to your particular application. To get you started, we wanted to provide some resources that have helped us.

Java Performance Tuning, by Jack Shirazi (O’Reilly), covers all aspects of tuning Java applications, including good material on JVM performance. This is a great book, and it covers developer-level performance issues in great depth. Of course Tomcat is a Java application, so much of what Jack says applies to your instances of Tomcat. As you learned earlier in this chapter, several performance enhancements can be achieved by simply editing Tomcat’s configuration files.

Tip

Keep in mind that, although Tomcat is open source, it’s also a very complex application, and you might want to be cautious before you start making changes to the source code. Use the Tomcat mailing lists to bounce your ideas around, and get involved with the community if you decide to delve into the Tomcat source code.

If you’re running a web site with so much traffic that one server might not be enough to handle all of the load, you should probably read Chapter 10, which discusses running a web site on more than one Tomcat instance at a time, and potentially on more than one server computer.

There are also web sites that focus on performance tuning. For Linux versus OpenBSD, for example, you should check out http://misc.bsws.de/obsdtuning/webserver.txt. You can find a collection of informative web pages on capacity planning at http://www.capacityplanning.com. Both of these sites, and others like them, will help supplement the material in this chapter.

[1] There is also the server-side approach, such as running Tomcat under a Java profiler to optimize its code, but this is likely to be more interesting to developers than to administrators.

[2] Jikes Version 1.15 would ignore the option with a warning, so you could use 1.15 in a pinch, but then every time a JSP is compiled, the compiler would generate a warning message. Not good.

Get Tomcat: The Definitive Guide now with the O’Reilly learning platform.
