Chapter 4. SAN Backup and Recovery

Overview

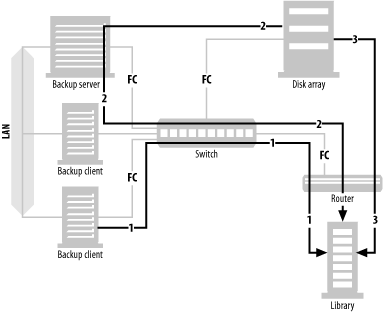

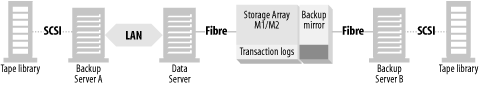

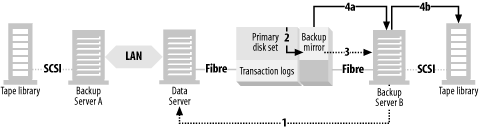

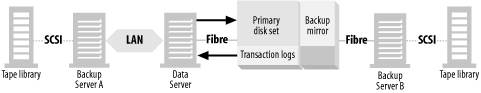

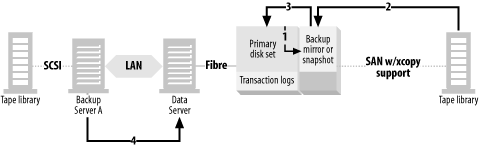

A SAN typically consists of multiple servers, online storage (disk), and offline storage (tape or optical), all of which are connected to a Fibre Channel switch or hub—usually a switch. You can see all these SAN elements in Figure 4-1. Once the three servers in Figure 4-1 are connected to the SAN, each server in the SAN can be granted full read/write access to any disk or tape drive within the SAN. This allows for LAN-free, client-free, and server-free backups, each represented by a different-numbered arrow in Figure 4-1.

Tip

Pretty much anything said in this chapter about Fibre Channel-based SANs will soon be true about iSCSI-based SANs. To visualize how an iSCSI SAN fits into the pictures and explanations in this chapter, simply replace the Fibre Channel HBAs with iSCSI-capable NICs, and the Fibre Channel switches and hubs with Ethernet switches and hubs, and you’ve got yourself an iSCSI-based SAN that works essentially like the Fibre Channel-based SANs discussed in this chapter. The difficulty will be in getting the storage array, library, and software vendors to support it.

- LAN-free backups

LAN-free backups occur when several servers share a single tape library. Each server connected to the SAN can back up to tape drives it believes are locally attached. The data is transferred via the SAN using the SCSI-3 protocol, and thus doesn’t use the LAN.[1] All that is needed is software that will act as a “traffic cop.” LAN-free backups are represented in Figure 4-1 by arrow number 1, which shows a data path starting at the backup client, traveling through the SAN switch and router, finally arriving at the shared tape library.

- Client-free backups

Although an individual computer is often called a server, it’s referred to by the backup system as a client. If a client has its disk storage on the SAN, and that storage can create a mirror that can be split off and made visible to the backup server, that client’s data can be backed up via the backup server; the data never travels via the backup client. Since the data path doesn’t include the client that is using the data, this is called client-free backup. Client-free backups are represented in Figure 4-1 by arrow number 2, which shows a data path starting at the disk array, traveling through the backup server, followed by the SAN switch and router, finally arriving at the shared tape library. The backup path is similar to that of LAN-free backups, except that the backup server isn’t backing up its own data. It’s backing up data from another client whose disk drives happen to reside on the SAN.

Tip

To my knowledge, I am the first to use the term client-free backups. Some backup software vendors refer to this as server-free backups, and others simply refer to it by their product name for it. I felt that a generic term was needed, and I believe that this helps distinguish this type of backup from server-free backups, which are defined next.

- Server-free backups

If the SAN to which the disk storage is connected supports a SCSI feature called extended copy, the data can be sent directly from disk to tape, without going through a server. There are also other, more proprietary, methods for doing this that don’t involve the extended copy command. This is the newest area of backup and recovery functionality being added to SANs. Server-free backups are represented in Figure 4-1 by arrow number 3, which shows a data path starting at the disk array, traveling through the SAN switch and router, and arriving at the shared tape library. You will notice that the data path doesn’t include a server of any kind. This is why it’s called server-free backup.

Tip

No backup is completely LAN-free, client-free, or server-free. The backup server is always communicating with the backup client in some way, even if it’s just to get the metadata about the backup. What these terms are meant to illustrate is that the bulk of the data is transferred without using the LAN (LAN-free), the backup client (client-free), or the backup server (server-free).

LAN-Free Backups

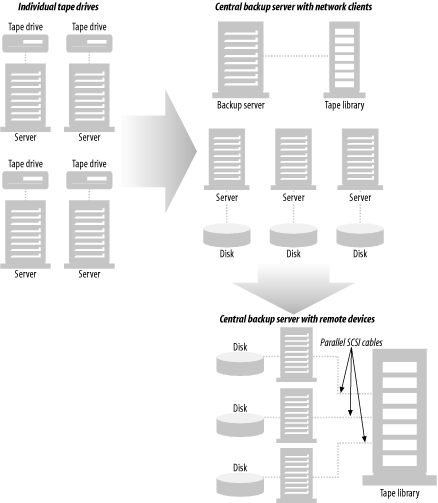

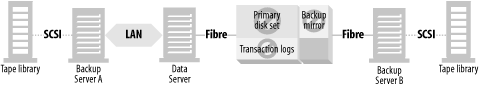

As discussed in Chapter 1, LAN-free backups allow you to use a SAN to share one of the most expensive components of your backup and recovery system—your tape or optical library and the drives within it. Figure 4-2 shows how this is simply the latest evolution of centralized backups. There was a time when most backups were done to locally attached tape drives. This method worked fine when data centers were small, and each server could fit on a single tape. Once the management of dozens (or even hundreds) of individual tapes became too much or when servers would no longer fit on a tape, data centers started using backup software that allowed them to use a central backup server and back up their servers across the LAN. (The servers are now referred to by the backup system as clients.)

This methodology works great as long as you have a LAN that can support the amount of network traffic such backups generate. Even if you have a state-of-the-art LAN, you may find individual backup clients that are too big to back up across the LAN. Also, increasingly large amounts of system resources are required on the backup server and clients to back up large amounts of data across the LAN. Luckily, backup software companies saw this coming and added support for remote devices. This meant that you could again decentralize your backups by placing tape drives on each backup client. Each client would then be told when and what to back up by the central backup server, but the data would be transferred to a locally attached tape drive. Most major software vendors also allowed this to be done within a tape library. As depicted in Figure 4-2, you can connect one or more tape drives from a tape library to each backup client that needs them. The physical movement of the media within the library is then managed centrally—usually by the backup server.

Although the backup data at the bottom of Figure 4-2 isn’t going across the LAN, this isn’t typically referred to as LAN-free backups. The configuration depicted at the bottom of Figure 4-2 is normally referred to as library sharing, since the library is being shared, but the drives aren’t. When people talk about LAN-free backups, they are typically referring to drive sharing, where multiple hosts have shared access to an individual tape drive. The problem with library sharing is that each tape drive is dedicated to the backup client to which it’s connected.[2] The result is that the tape drives in a shared library go unused most of the time.

As an example, assume we have three large servers, each with 1.5 TB of data. Five percent of this data changes daily, resulting in 75 GB of incremental backups per day per host.[3] All backups must be completed within an eight-hour window, and the entire host must be backed up within that window, requiring an aggregate transfer rate of 54 MB/s[4] for full backups. If you assume each tape drive is capable of 15 MB/s,[5] each host needs four tape drives to complete its full backup in one night. Therefore, we need to connect four tape drives to each server, resulting in a configuration that looks like the one at the bottom of Figure 4-2. While this configuration allows the servers to complete their full backups within the backup window, that many tape drives will allow them to complete the incremental backup (75 GB) in just 20 minutes!
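The sizing arithmetic in this example can be checked with a few lines of Python. This is only a sketch: the function names are made up for illustration, and it assumes binary units (1 TB = 1,024 × 1,024 MB), which is what makes the 54 MB/s figure come out. Under those assumptions the required rate is about 54.6 MB/s, four drives are needed, and the incremental takes about 21 minutes, which the text rounds to 20.

```python
import math

# Assumption: binary units, chosen because they reproduce the 54 MB/s figure.
MB_PER_GB = 1024
MB_PER_TB = 1024 * MB_PER_GB


def required_rate_mb_s(data_mb, window_hours):
    """Aggregate throughput needed to move data_mb within the window."""
    return data_mb / (window_hours * 3600)


def drives_needed(rate_mb_s, drive_mb_s):
    """Smallest whole number of drives whose combined speed meets the rate."""
    return math.ceil(rate_mb_s / drive_mb_s)


def backup_minutes(data_mb, drives, drive_mb_s):
    """Time to write data_mb when it is spread evenly across the drives."""
    return data_mb / (drives * drive_mb_s) / 60


full_mb = 1.5 * MB_PER_TB        # one host's full backup
incremental_mb = 75 * MB_PER_GB  # nightly change per host, as given in the text
drive_mb_s = 15                  # per-drive speed assumed in the text

rate = required_rate_mb_s(full_mb, window_hours=8)
drives = drives_needed(rate, drive_mb_s)
minutes = backup_minutes(incremental_mb, drives, drive_mb_s)

print(f"required rate: {rate:.1f} MB/s")
print(f"drives per host: {drives}")
print(f"incremental on {drives} drives: {minutes:.0f} minutes")
```

Changing `drive_mb_s` or the window length shows how quickly the number of dedicated drives per host grows when each host must meet the window on its own.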

An eight-hour backup window each night results in 240 possible hours during a month when backups can be performed. However, with a monthly full backup that takes eight hours and nightly incremental backups that take 20 minutes each (about 10 hours a month in total), each host’s tape drives go unused for roughly 222 of their 240 available hours.

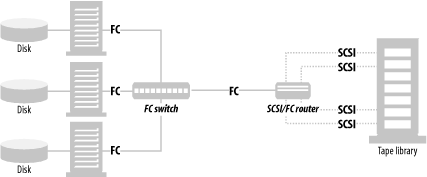

However, suppose we take these same three servers and connect them and the tape library to a SAN as illustrated in Figure 4-3. When we do so, the numbers change drastically. As you can see in Figure 4-3, we’ll connect each host to a switched fabric SAN via a single 100-MB/s Fibre Channel connection. Next, we must connect the tape drives to the SAN. (For reasons explained later, however, we will need only five tape drives to meet the requirements discussed earlier.) If the tape drives support Fibre Channel natively, we can just connect them to the SAN via the switch. If the tape drives can connect only via standard parallel SCSI cables, we can connect the five tape drives to the SAN via a Fibre Channel router, as can be seen in Figure 4-3. The routers that are shipping as of this writing can connect one to six SCSI buses to one or two Fibre Channel connections. For this example, we will use a four-to-one model, allowing us to connect our five tape drives to the SAN via a single Fibre Channel connection. (As is common in such configurations, two of the drives will be sharing one of the SCSI buses.)

With this configuration, we can easily back up the three hosts discussed previously. The reason we need only five tape drives is that, in the configuration shown in Figure 4-3, we can use dynamic drive sharing software provided by any of the major backup software companies. This software performs the job of dynamically assigning tape drives to the hosts that need them. When it’s time for a given host’s full backup, it is assigned four of the five drives. The hosts that aren’t performing a full backup share the fifth tape drive. By sharing the tape drives in this manner, we could actually back up 25 hosts this size. Let’s take a look at some math to see how this is possible.

Assume we have 25 hosts, each with 1.5 TB of data. (Of course, all 25 hosts need to be connected to the SAN.) With four of the five tape drives, we can perform an eight-hour full backup of a different host each night, resulting in a full backup for each server about once a month. With the fifth drive, we can perform a 20-minute incremental backup of the 24 other hosts within the same eight-hour window.[6] If this were done with directly connected SCSI, it would require connecting four drives to each host, for a total of 100 tape drives! This, of course, would require a really huge library. In fact, as of this writing, there are few libraries available that can hold that many tape drives. Table 4-1 illustrates the difference in the price of these two solutions.

| | Parallel SCSI solution: quantity | Parallel SCSI solution: total | SAN solution: quantity | SAN solution: total |
|---|---|---|---|---|
| Tape drives | 100 | $900K | 5 | $45K |
| HBAs | 25 | $25K | 25 | $50K |
| Switch | None | | 2 | $70K |
| Router | None | | 1 | $7K |
| Device server software | Same | | Same | |
| Tape library | Same | | Same | |
| Total | | $925K | | $172K |

Tip

Whether or not a monthly full backup makes sense for your environment is up to you. Unless circumstances require otherwise, I personally prefer a monthly full backup, nightly incremental backups, followed by weekly cumulative incremental backups.

Each solution requires some sort of HBA on each client. Since Fibre Channel HBAs are more expensive, we will use a price of $2,000 for the Fibre Channel HBAs, and $1,000 for the SCSI HBAs.[7] We will even assume you can obtain SCSI HBAs that have two SCSI buses per card. The SCSI solution requires 100 tape drives, and the SAN solution requires five tape drives. (We will use Ultrium LTO drives for this example, which have a native transfer speed of 15 MB/s and a discounted price of $9,000.) Since the library needs to store at least one copy of each full backup and 30 copies of each incremental backup from 25 1.5-TB hosts, this is probably going to be a really large library. To keep things as simple as we can, let’s assume the tape library for both solutions is the same size and the same cost. (In reality, the SCSI library would have to be much larger and more expensive.) For the SAN solution, we need to buy two 16-port switches and one four-to-one SAN router. Each solution requires purchasing device server licenses from your backup software vendor for each of the 25 hosts, so we will treat this cost as the same for each solution. (The SAN solution licenses might cost slightly more than the SCSI solution licenses.) As you can see in Table 4-1, there is a difference of more than $750,000 in the cost of these two solutions.

What about the original requirements above, you ask? What if you only need to back up three large hosts? A three-host SAN solution requires the purchase of a much smaller switch and only one router. The SCSI solution requires 12 tape drives, but the SAN solution requires only five. As you can see in Table 4-2, even with these numbers, the SAN solution is over $40,000 cheaper than the SCSI solution.

| | Parallel SCSI solution: quantity | Parallel SCSI solution: total | SAN solution: quantity | SAN solution: total |
|---|---|---|---|---|
| Tape drives | 12 | $108K | 5 | $45K |
| HBAs | 3 | $3K | 3 | $6K |
| Switch | None | | 1 | $8K |
| Router | None | | 1 | $7K |
| Device server software | Same | | Same | |
| Tape library | Same | | Same | |
| Total | | $111K | | $66K |
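The totals in Tables 4-1 and 4-2 can be reproduced from the unit prices given in the text. The sketch below is illustrative only: the function names are invented, and the $35,000 per-switch price for the 25-host case is simply the table’s $70K total divided by two switches. Software and library costs are left out because the tables treat them as the same for both solutions.

```python
TAPE_DRIVE = 9_000  # discounted LTO drive price from the text
SCSI_HBA = 1_000
FC_HBA = 2_000
ROUTER = 7_000      # one four-to-one router, per the tables


def scsi_cost(hosts, drives_per_host=4):
    """Dedicated drives: every host gets its own set of drives and one HBA."""
    return hosts * drives_per_host * TAPE_DRIVE + hosts * SCSI_HBA


def san_cost(hosts, switches, switch_price, shared_drives=5):
    """Shared drives: five drives in total, plus HBAs, switches, and a router."""
    return (shared_drives * TAPE_DRIVE
            + hosts * FC_HBA
            + switches * switch_price
            + ROUTER)


# 25-host case (Table 4-1); the switch price is derived from the table total.
scsi_25 = scsi_cost(25)
san_25 = san_cost(25, switches=2, switch_price=35_000)

# 3-host case (Table 4-2)
scsi_3 = scsi_cost(3)
san_3 = san_cost(3, switches=1, switch_price=8_000)

print(f"25 hosts: SCSI ${scsi_25:,} vs SAN ${san_25:,} (saves ${scsi_25 - san_25:,})")
print(f" 3 hosts: SCSI ${scsi_3:,} vs SAN ${san_3:,} (saves ${scsi_3 - san_3:,})")
```

The SCSI cost scales with the number of hosts because every host carries its own four drives, while the SAN cost grows only by one HBA per host until more switch ports are needed.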

How Does This Work?

The data in the previous section shows that sharing tape drives between servers can be a Good Thing. But how can multiple servers share the same physical tape drive? This is accomplished in one of two ways. Either the vendor uses the SCSI reserve and release commands, or they implement their own queuing system.

SCSI reserve/release

Tape drives aren’t the first peripherals that needed to be shared between two or more computers. In fact, many pre-SAN high availability systems were based on disk drives that were connected to multiple systems via standard, parallel SCSI. (This is referred to as a multiple-initiator system.) In order to make this possible, the commands reserve and release were added to the SCSI-2 specification. The following is a description of how they were intended to work.

Each system that wants to access a particular device issues the SCSI reserve command. If no other system has previously reserved the device, the system is granted access to the device. If another system has already reserved the device, the application requesting the device is given a reservation conflict message. Once a reservation is granted, it remains valid until one of the following things happens:

- The same initiator (SCSI HBA) requests another reservation of the same device.

- The same initiator issues a SCSI release command for the reserved device.

- A SCSI bus reset, a hard reset, or a power cycle occurs.

If you combine the idea of the SCSI reserve command with a SCSI bus that is connected to more than one initiator (host HBA), you get a configuration where multiple hosts can share the same device by simply reserving it prior to using it. This device can be a disk drive, tape drive, or SCSI-controlled robotic arm of a tape library. The following is an example of how the configuration works.

Each host that needs to put a tape into a drive attempts to reserve the use of the robotic arm with the SCSI reserve command. If it’s given a reservation conflict message, it waits a predetermined period of time and tries again until it successfully reserves the arm. Once it’s granted a reservation to the robotic arm, it attempts to reserve a tape drive using the SCSI reserve command. If it’s given a reservation conflict message while attempting to reserve the first drive in the library, it continues trying to reserve each drive until it either successfully reserves one or is given a reservation conflict message for every drive. In the latter case, it issues a SCSI release command for the robotic arm, waits a predetermined period of time, and tries again. It continues to do this until it successfully puts a tape into a reserved drive. Once done, it can then back up to the drive via the SAN.
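The procedure just described can be modeled in a few lines of Python. This is a toy simulation, not real SCSI: the `ScsiDevice` class and `try_load_tape` function are hypothetical names, a `False` return stands in for the conflict message, and releasing the arm once the tape is loaded is an assumption the text doesn’t spell out.

```python
class ScsiDevice:
    """Toy model of a device (arm or drive) that honors reserve and release."""

    def __init__(self, name):
        self.name = name
        self.holder = None  # initiator currently holding the reservation

    def reserve(self, initiator):
        """Grant the reservation if the device is free or already ours."""
        if self.holder in (None, initiator):
            self.holder = initiator
            return True
        return False  # stands in for the conflict message

    def release(self, initiator):
        """Only the initiator holding the reservation can release it."""
        if self.holder == initiator:
            self.holder = None

    def bus_reset(self):
        """A reset clears the reservation no matter who held it."""
        self.holder = None


def try_load_tape(host, arm, drives):
    """One pass of the procedure: reserve the arm, then the first free drive.

    Returns the reserved drive, or None if the host must wait and retry.
    """
    if not arm.reserve(host):
        return None
    for drive in drives:
        if drive.reserve(host):
            # The robot would move the tape here. Assumption: the arm is
            # released as soon as the tape is in the drive.
            arm.release(host)
            return drive
    arm.release(host)  # every drive was busy: give up the arm and retry later
    return None


arm = ScsiDevice("arm")
drives = [ScsiDevice("drive0"), ScsiDevice("drive1")]

first = try_load_tape("hostA", arm, drives)   # gets drive0
second = try_load_tape("hostB", arm, drives)  # gets drive1
third = try_load_tape("hostC", arm, drives)   # all drives busy, returns None

drives[0].bus_reset()                         # hostA's reservation is lost
after_reset = try_load_tape("hostC", arm, drives)
```

Note that after the simulated bus reset, `hostC` is granted `drive0` even though `hostA` never released it, because nothing in the model remembers the lost reservation.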

This description is how the designers intended it to work. However, shared drive systems that use the SCSI reserve/release method run into a number of issues, so many people consider the SCSI reserve/release command set to be fundamentally flawed. For example, one such flaw is that a SCSI bus reset releases the reservation. Since SCSI bus resets happen under a number of conditions, including system reboots, it’s highly possible to completely confuse your reservation system with the reboot of only one server connected to the shared device. Another issue with the SCSI reserve/release method is that not all platforms support it. Therefore, a shared drive system that uses the SCSI reserve/release method is limited to the platforms on which these commands are supported.

Third-party queuing system

Most backup software vendors use the third-party queuing method for shared devices. When I think of how this works, I think of my two small daughters. Their method of toy sharing is a lot like the SCSI reserve/release method. Whoever gets the toy first gets it as long as she wants it. However, as soon as one of them has a toy, the other one wants it. The one who wants the toy continually asks the other one for it. However, the one who already has the toy issues a reservation conflict message. (It sounds like, “Mine!”) The one who wants the toy is usually persistent enough, however, that Mom or Dad has to intervene. Not very elegant, is it?

A third-party queuing system is like having Daddy sit between the children and the toys, where each child has full knowledge of what toys are available, but the only way they can get a toy is to ask Daddy for it. If the toy is in use, Daddy simply says, “You can’t have that toy right now. Choose another.” Then once the child asks for a toy that’s not being used, Daddy hands it over. When the child is done with the toy, Daddy returns it to the toy bin.

There are two main differences between the SCSI reserve/release method and the third-party queuing method:

- Reservation attempts are made to a third-party application

In the SCSI reserve/release method, the application that wants a drive has no one to ask whether the drive is already busy, other than the drive itself. If the drive is busy, the application simply gets a reservation conflict message, and there is no queuing of requests. Suppose, for example, that Hosts 1, 2, and 3 are sharing a drive. Host 1 requests and is issued a reservation for the drive and begins using it. Host 2 then attempts to reserve the drive and is given a reservation conflict message. Host 3 then attempts to reserve the drive and is also given a reservation conflict message. Host 2 then waits the predetermined period of time and requests the drive again, but it’s still being used. However, just after Host 2 is given this second reservation conflict message, the drive becomes available. Assuming that Host 2 and Host 3’s waiting time is the same, Host 3 will ask for the drive next and be granted a reservation. This happens despite the fact that it’s actually Host 2’s “turn” to use the drive, since it asked for the drive first.

However, consider a third-party queuing system. In the example, Host 2 would have asked a third-party application if the drive was available. It would have been told to wait and would have been placed in a queue for the drive. Instead of having to continually poll the drive for availability, it’s simply notified of the drive’s availability by the third-party application. The third-party queuing system can also place multiple requests into a queue, keeping track of which host asked for a drive first. The hosts are then given permission to use the drive in the order the requests were received.

- Tape movement is accomplished by the third party

Another major difference between third-party queuing systems and the SCSI reserve/release method is that, while the hosts do share the tape library, they don’t typically share the robotic arm. When a host requests a tape and a tape drive, the third-party application grants the request (if a tape and drive are available) and issues the robotic request to one host dedicated as the robotic control host.[8]
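The first-come, first-served behavior of a third-party queuing system can be sketched as follows. This is a minimal illustration for a single shared drive; the `DriveBroker` class and its methods are hypothetical names, not any vendor’s API.

```python
from collections import deque


class DriveBroker:
    """Toy third-party arbiter for a single shared tape drive."""

    def __init__(self):
        self.holder = None      # host currently using the drive
        self.waiting = deque()  # hosts waiting, in arrival order

    def request(self, host):
        """Grant the drive if it is idle; otherwise queue the host."""
        if self.holder is None:
            self.holder = host
            return True
        if host not in self.waiting:
            self.waiting.append(host)
        return False

    def release(self, host):
        """Free the drive and hand it to the longest-waiting host, if any.

        Returns the host that now holds the drive, or None if it is idle.
        """
        if self.holder != host:
            raise ValueError(f"{host} does not hold the drive")
        self.holder = self.waiting.popleft() if self.waiting else None
        return self.holder


broker = DriveBroker()
granted_1 = broker.request("Host 1")    # drive is idle, so Host 1 gets it
granted_2 = broker.request("Host 2")    # queued first
granted_3 = broker.request("Host 3")    # queued second

next_holder = broker.release("Host 1")  # Host 2, because it asked first
last_holder = broker.release("Host 2")  # then Host 3
```

Because the broker remembers the order of requests, Host 2 gets the drive before Host 3 regardless of when either host would have polled next.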

Levels of Drive Sharing

In addition to accomplishing drive sharing via different methods, backup software companies also differ in how they allow libraries and their drives to be shared. In order to understand what I mean, I must explain a few terms. A main server is the central backup server in any backup configuration. It contains the schedules and indexes for all backups and acts as a central point of control for a group of backup clients. A device server has only tape drives that receive backup data from clients. The main server controls its actions. This is known as a three-tiered backup system, where the main server can tell a backup client to back up to the main server’s tape drives or to the tape drives on one of its device servers. Some backup software products don’t support device servers and require all backups to be transmitted across the LAN to the main server. This type of product is known as a two-tiered backup system. (A single-tiered backup product would support only backups to a server’s own tape drives. An example of such a product would be the software that comes with an inexpensive tape drive.)

- Sharing drives between device servers

Most LAN-free backup products allow you to share a tape library and its drives only between a single main server and the device servers under that main server’s control. If you have more than one main server in your environment, such a product doesn’t allow you to share a tape library and its drives between them.

- Sharing drives between main servers

Some LAN-free backup products allow you to share a library and its drives between multiple main servers. Many products that support this functionality do so because they are two-tiered products that don’t support device servers, but this isn’t always the case.

Restores

One of the truly beautiful things about sharing tape drives in a library via a SAN is what happens when it’s time to restore. First, consider the parallel SCSI solution illustrated in Table 4-2. Since only four drives are available to each host, only four drives will be available during restores as well. Even if your backup software had the ability to read more than four tapes at a time, you wouldn’t be able to do so.

However, if you are sharing the drives dynamically, a restore could be given access to all available drives. If your backups occur at night, and most of your restores occur during the day, you would probably be given access to all drives in the library during most restores. Depending on the capabilities of your backup software package, this can drastically increase the speed of your restore.

Other Ways to Share Tape Drives

As of this writing, there are at least three other ways to share devices without using a SAN. Although this is a chapter about SAN technology, I thought it appropriate to mention them here.

NDMP libraries

The Network Data Management Protocol (NDMP), originally designed by Network Appliance and PDC/Intelliguard (acquired by Legato) to back up filers, offers another way to share a tape library. There are a few vendors that have tape libraries that can be shared via NDMP.

Here’s how it works. First, the vendors designed a small computer system to support the “tape server” functionality of NDMP:

- It can connect to the LAN and use NDMP to receive backups or transmit restores.

- It can connect to the SCSI bus of a tape library and transmit data to (or receive data from) its tape drives.

They then connect the SCSI bus of this computer to a tape drive in the library, and the Ethernet port of this computer to your LAN. (Current implementations have one of these computers for each tape drive, giving each tape drive its own connection to the LAN.) The function of the computer system is to convert data coming across the LAN in raw TCP/IP format and to write it as SCSI data via a local device driver which understands the drive type. Early implementations are using DLT 8000s and 100-Mb Ethernet, because the speed of a 100-Mb network is well matched to a 10-MB/s DLT. By the time you read this, there will probably be newer implementations that use Gigabit Ethernet and faster tape drives.
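The speed match mentioned above is a simple unit conversion, sketched here with hypothetical names. It uses the raw line rate only and ignores TCP/IP and Ethernet overhead, so the real headroom is smaller than this suggests.

```python
def mbit_to_mbyte_per_s(megabits_per_s):
    """Convert a raw line rate in megabits/s to megabytes/s (8 bits per byte)."""
    return megabits_per_s / 8


fast_ethernet_mb_s = mbit_to_mbyte_per_s(100)  # raw rate of 100-Mb Ethernet
dlt_mb_s = 10                                  # drive speed quoted in the text
headroom_mb_s = fast_ethernet_mb_s - dlt_mb_s  # what is left for overhead

print(f"Ethernet: {fast_ethernet_mb_s} MB/s, drive: {dlt_mb_s} MB/s, "
      f"headroom: {headroom_mb_s} MB/s")
```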

Not only does this give you a way to back up your filers; it also offers another option for backing up your distributed machines. As will be discussed in Chapter 7, NDMP v4 supports backing up a standard (non-NDMP) backup client to an NDMP-capable device. Since this NDMP library looks just like another filer to the backup server, you can use it to perform backups of non-NDMP clients with this feature. Since the tape library does the job of tracking which drives are in use and won’t allow more than one backup server to use it at a time, you can effectively share this tape library with any backup server on the LAN.

SCSI over IP

SCSI over IP, also known as iSCSI, is a rather new concept when compared to Fibre Channel, but it’s gaining a lot of ground. A SCSI device with an iSCSI adapter is accessible to any host on the LAN that also has an iSCSI interface card. Proponents of iSCSI say that Fibre Channel-based SANs are complex, expensive, and built on standards that are still being finalized. They believe that SANs based on iSCSI will solve these problems. This is because Ethernet is an old standard that has evolved slowly in such a way that almost all equipment remains interoperable. SCSI is also another standard that’s been around a long time and is a very established protocol.

The one limitation to iSCSI today is that it’s easy for a host to saturate a 100-MB/s Fibre Channel pipe, but it’s difficult for the same host to saturate a 100-MB/s (1000 Mb or 1 Gb) Ethernet pipe. Therefore, although the architectures offer roughly the same speed, they aren’t necessarily equivalent. iSCSI vendors realize this and have therefore developed network interface cards (NICs) that can communicate “at line speed.” One way to do this is to offload the TCP/IP processing from the host and perform it on the NIC itself. Now that vendors have started shipping gigabit NICs that can communicate at line speed, iSCSI stands to make significant inroads into the SAN marketplace. More information about iSCSI can be found in Appendix A.

A Variation on the Theme

LAN-free backups solve a lot of problems by removing backup traffic from the LAN. When combined with commercial interfaces to database backup APIs, they can provide a fast way to back up your databases. However, what happens if you have an application that is constantly changing data, and for which there is no API? Similarly, what if you can’t afford to purchase the interface to that API from your backup software company? Usually, the only answer is to shut down the application during the entire time of the backup.

One solution to this problem is to use a client-free backup solution, which is covered in the next section. However, client-free backups are expensive, and they are meant to solve more problems (and provide more features) than this. If you don’t need all the functionality provided by client-free backups, but you still have the problem described earlier, perhaps what you need is a snapshot. In my book Unix Backup and Recovery, I introduced the concept of snapshots as a feature I’d like to see integrated into commercial backup and recovery products, and a number of them now offer it.

What is a snapshot?

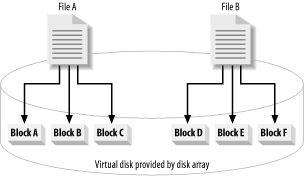

A snapshot is a virtual copy of a device or filesystem. Think of it as a Windows shortcut or Unix symbolic link to a device that has been frozen in time. Just as a symbolic link or shortcut isn’t really a copy of a file or device that it points to, a snapshot is a symbolic (or virtual) representation of a file or device. The only difference between a snapshot and a shortcut or symbolic link is that the snapshot always mimics the way that file or device looked at the time the snapshot was taken.

In order to take snapshots, you must have snapshot software. This software can be found in any of the following places:

- Advanced filesystems

There are filesystems that let you create snapshots as part of their list of advanced features. These filesystems are usually not the filesystem provided by the operating-system vendor and are usually only available at an extra cost.

- Standard host-based volume managers

A host-based volume manager is the standard type of volume manager you are used to seeing. It manages disks that are visible to the host and creates virtual devices using various levels of RAID, including RAID 0 (striping), RAID 1 (mirroring), RAID 5, RAID 0+1 (striping and mirroring), and RAID 1+0 (mirroring and striping). (See Appendix B for descriptions of the various levels of RAID.) Snapshots created by a standard volume manager can be seen only on the host where the volume manager is running.

- Enterprise storage arrays

A few enterprise storage arrays can create snapshots. These snapshots work virtually the same as any other snapshot, with the additional feature that a snapshot made within the storage array can be made visible to another host that also happens to be connected to the storage array. There is usually software that runs on Unix or NT that communicates with the storage array and tells it when and where to create snapshots.

- Enterprise volume managers

Enterprise volume managers are a relatively new type of product that attempts to provide enterprise storage array type features for JBOD disks. Instead of buying one large enterprise storage array, you can buy SAN-capable disks and create RAID volumes on the SAN. Some of these products also offer the ability to create snapshots that are visible to any host on the SAN.

- Backup software add-on products

Some backup products have recognized the value provided by snapshot software and can create snapshots within their software. These products emulate many other types of snapshots that are available. For example, some can create snapshots only of certain filesystem types, just like the advanced filesystem snapshots discussed earlier. Others can create snapshots of any device that is available to the host, but the data must be backed up via that host, just like the snapshots provided by host-based volume managers. Some, however, can create snapshots that are visible to other hosts, emulating the functionality of an enterprise storage array or enterprise volume manager. This type of snapshot functionality is discussed in more detail later in this chapter.

Let’s review why we are looking at snapshots. Suppose you perform LAN-free backups but have an application for which there is no API, or for which you can’t afford the API. You are therefore required to shut down the application during backups. You want something better but aren’t yet ready for the cost of client-free backups, nor do you need all the functionality they provide. Therefore, you need the type of snapshots that are available from an advanced filesystem, a host-based volume manager, or a backup software add-on product that emulates this functionality. An enterprise storage array that can create snapshots (that are visible from the host on which the snapshot was taken) also works fine in this situation, but it isn’t necessary. Choose whichever solution works best in your environment. Regardless of how you take the snapshot, most snapshot software works essentially the same way.

When you create a snapshot, the snapshot software records the time at which the snapshot was taken. Once the snapshot is taken, it gives you and your backup utility another name through which you may view the snapshot of the device or filesystem. It looks like any other device or filesystem, but it’s really a symbolic representation of the device. Creating the snapshot doesn’t actually copy data from diska to diska.snapshot, but it appears as if that’s exactly what happened. If you look at diska.snapshot, you’ll see diska exactly as it looked at the moment diska.snapshot was created.

Creating the snapshot takes only a few seconds. Sometimes people have a hard time grasping how the software can create a separate view of the device without copying it. This is why it’s called a snapshot: it doesn’t actually copy the data, it merely takes a “picture” of it.

Once the snapshot has been created, most snapshot software (or firmware in the array) monitors the device for activity. When it sees that a block of data is going to change, it records the “before” image of that block in a special logging area (often called the snapshot device). Even if a particular block changes several times, it only needs to record the way it looked before the first change occurred.[9]

For details on how this works, please consult your vendor’s manual. When you view the device or filesystem via the snapshot virtual device or mount point, the snapshot software watches which blocks you request. If you request a block of data that has not changed since the snapshot was taken, it retrieves that block from the original device or filesystem. However, if you request a block of data that has changed since the snapshot was taken, it retrieves the “before” image of that block from the snapshot device. This, of course, is completely invisible to the user or application accessing the data. The user or application simply views the device via the snapshot virtual device or mount point, and where the blocks come from is managed by the snapshot software or firmware.
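The copy-on-write behavior described above can be modeled in a few lines. This is only a toy sketch, assuming a device is a simple list of blocks; the class and method names are my own and don't correspond to any vendor's product.

```python
class CopyOnWriteSnapshot:
    """Toy model of a copy-on-write snapshot over a list of blocks."""

    def __init__(self, device):
        self.device = device      # live blocks, still used by the application
        self.before_images = {}   # the "snapshot device": block number -> old contents

    def write(self, block_no, data):
        # All writes must pass through here so changes can be monitored.
        # Save the "before" image only the first time a block changes.
        if block_no not in self.before_images:
            self.before_images[block_no] = self.device[block_no]
        self.device[block_no] = data

    def read_snapshot(self, block_no):
        # Changed blocks come from the snapshot device,
        # unchanged blocks from the original device.
        if block_no in self.before_images:
            return self.before_images[block_no]
        return self.device[block_no]


diska = ["a0", "b0", "c0"]
snap = CopyOnWriteSnapshot(diska)   # "creating" the snapshot copies nothing
snap.write(1, "b1")
snap.write(1, "b2")                 # second change to the same block is not logged again
snapshot_view = [snap.read_snapshot(i) for i in range(len(diska))]
```

Here the snapshot view still shows the device as it looked when the snapshot was created, even though block 1 changed twice, and only one before image was ever stored.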

Problem solved

Now that you can create a “copy” of your system in just a few seconds, you have a completely different way to back up an unsupported application. Simply stop the application, create the snapshot (which only takes a few seconds) and restart the application. As far as the application is concerned, the backup takes only a few seconds. However, there is a performance hit while the data is being backed up to the locally attached tape library (that is being shared with other hosts via a LAN-free backup setup). The degree to which this affects the performance of your application will, of course, vary. The only way to back up this data without affecting the application during the actual transfer of data from disk to tape is to use client-free backups.

Tip

One vendor uses the term “snapshot” to refer to snapshots as described here, and to describe additional mirrors created for the purpose of backup, which is discussed in Section 4.3. I’m not sure why they do this, and I find it confusing. In this book, the term snapshot will always refer to a virtual, copy-on-write copy as discussed here.

Problems with LAN-Free Backups

LAN-free backups solve a lot of problems. They allow you to share one or more tape libraries between your critical servers, allowing each to back up much faster than it could across the LAN. They remove the bulk of the data transfer from the LAN, freeing your LAN for other uses. They also reduce the CPU and memory overhead of backups on the clients, because the clients no longer have to transmit their backup data via TCP/IP. However, there are still downsides to LAN-free backups. Let’s take a look at a LAN-free backup system to see what these downsides are.

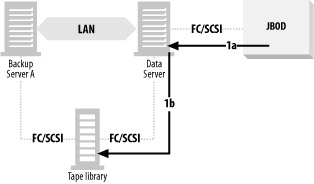

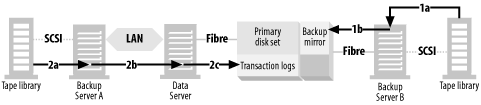

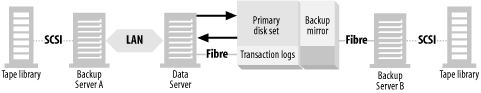

A typical LAN-free backup system is shown in Figure 4-4, where the database resides on disks that are visible to the data server. These disks may be a set of discrete disks inside the data server, a disk array with or without mirroring, or an enterprise storage array. In order to back up the data, the data must be read from the disks by the data server (1a) and transferred across the data server’s backplane, CPU, and memory via some kind of backup software. It’s then sent to tape drives on the data server (1b). This is true even if you use the snapshot technology discussed earlier. Even though you’ve created a static view of the data to back up, you still must back up the data locally.

This, of course, requires using the CPU, memory, and backplane of the data server quite a bit. The application is negatively affected, or even shut down, during the entire time it takes to transfer the database from disk to tape. The larger the data, the greater the impact on the data server and the users. Also, traditional database recovery (including snapshot recovery systems like those described at the end of the previous section) requires transferring the data back across the same path, slowing down a recovery that should go as fast as possible.

With this type of setup, a recovery takes as long as or longer than the backup and is limited by the I/O bandwidth of the data server. This includes only the recovery of the database files themselves. If it’s a database you are recovering, the replaying of the transaction logs adds a significant amount of time on top of that.

Let’s take a look at each of these limitations in more detail.

Application impact

You’d think that backing up data to locally attached tape drives would present a minimal impact to the application. It certainly creates much less of a load than typical LAN-based backups. In reality, however, the amount of throughput required to complete the backup within an acceptable window can sometimes create quite a load on the server, robbing precious resources needed by the application the server is running. The degree to which the application is affected depends on certain factors:

Are the size and computing power of the server based only on the needs of the “primary” application, or are the needs of the backup and recovery application also taken into account? It’s often possible to build a server that is powerful enough that the primary application isn’t affected by the demands of the backup and recovery application, but only if both applications are taken into account when building the server. This is, however, often not done.

How much data needs to be transferred from online storage (i.e., disk) to offline storage (i.e., tape) each night? This affects the length of the impact.

What are the I/O capabilities of the server’s backplane? Some server designs do a good job of computing but a poor job of transferring large amounts of data.

How much memory does the backup application require?

Can the primary application be backed up online, or does it need to be completely shut down during backups?

How busy is the application during the backup window? Is this application being accessed 24 x 7, or is it not needed while backups are running?

Please notice that the last question asked about when the application is needed, not when it’s being used. The reason the question is worded this way is that too many businesses have gotten used to systems that aren’t available during the backup window. They have grown to expect this, so they simply don’t try to use it at that time. They would use it if they could, but they can’t, so they don’t. The question is, “Would you like to be able to access your system 24 x 7?” If the answer is yes, you need to design a backup and recovery system that creates minimal impact on the application.

Almost all applications are impacted in some way during LAN-based or LAN-free backups. File servers take longer to process file requests. If you have a database and can perform backups with the database running, your database may take longer to process queries and commits. If your database application requires you to shut down the database to perform a backup, the impact on users is much greater.

Whether you are slowing down file or database services, or you are completely halting all database activity, it will be for some period of time. The duration of this period is determined by four factors:

How much data do you have to back up?

How many offline storage devices do you have available?

How much can your backup software take advantage of these devices?

How well can your server handle the load of moving data from point A to point B?

Recovery speed

This is the only reason you are backing up, right? Many people fail to take recovery speed into consideration when designing a backup and recovery system when they should be doing almost the opposite. They should design a backup and recovery system in such a way that it can recover the system within an acceptable window. In almost every case, this also results in a system that can back up the system within an acceptable window.

If your backup system is based on moving data from disk to tape, and your recovery system is based on moving data from tape to disk, the recovery time is always a function of the answers to the questions in the previous section. They boil down to two basic questions: how much data do you have to move, and what resources are available to move it?
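Those two questions lend themselves to a back-of-the-envelope estimate. The sketch below is a rough model under my own assumptions: the function name, its parameters, and the sample numbers are illustrative, and it treats the slower of the tape drives and the server’s I/O path as the bottleneck.

```python
def transfer_hours(data_gb, drives, drive_mb_per_s, server_mb_per_s,
                   software_efficiency=1.0):
    """Estimate the hours needed to move data_gb between disk and tape."""
    # Aggregate tape throughput, scaled by how well the backup software
    # keeps the available drives busy (1.0 means perfectly).
    tape_rate = drives * drive_mb_per_s * software_efficiency
    # The slower of the tape devices and the server's I/O path sets the pace.
    effective_rate = min(tape_rate, server_mb_per_s)
    seconds = (data_gb * 1024) / effective_rate
    return seconds / 3600


# Illustrative numbers only: 1 TB of data, four 10 MB/s drives,
# and a server that can sustain 30 MB/s.
hours = transfer_hours(data_gb=1024, drives=4, drive_mb_per_s=10,
                       server_mb_per_s=30)
```

With these sample figures the server, not the tape drives, is the limit, and the transfer takes roughly 9.7 hours in either direction. Adding more drives would not help until the server’s I/O capability improves.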

No other way?

Of course applications are affected during backups. Recovery takes as long as, if not longer than, the backup. There’s simply no way to get around this, right? That was the correct answer up until just recently; however, client-free backups have changed the rules.

What if there were a way you could back up a given server’s data with almost no impact to the application? If there were any impact, it would last for only a few seconds. What if you could recover a multi-terabyte database instantaneously? Wouldn’t that be wonderful? That’s what client-free backups can do for you.

Client-Free Backups

Performing client-free backup and recovery requires the coordination of several steps across at least two hosts. At one point in time, none of the popular commercial backup and recovery applications had software that could automate all the steps without requiring custom scripting by the administrator. All early implementations of client-free backups involved a significant amount of scripting on the part of the administrator, and almost all early implementations of client-free backups were on Unix servers. This was for several reasons, the first of which was demand. Many people had really large, multi-terabyte Unix servers that qualified as candidates for client-free backups. This led to a lot of cooperation between the storage array vendors and the Unix vendors, which led to commands that could run on a Unix system and accomplish the tasks required to make client-free backups possible. Since many of these tasks required steps to be coordinated on multiple computers, the rsh and ssh capabilities of Unix came in handy.

Since NT systems lacked integrated, advanced scripting support, and communications between NT machines weren’t easy for administrators to script, it wasn’t simple to design a scripted solution for NT client-free backups. (As you will see later in this section, another key component that was missing was the ability to mount brand new drive letters from the command line.) This, combined with the fact that NT machines tended to use less storage than their monolithic Unix counterparts, meant that there were not a lot of early implementations of client-free backup on NT. However, things have changed in recent years. It isn’t uncommon to find very large Windows machines. (I personally have seen one approaching a terabyte.) Scripting and intermachine communication have improved in recent years, but the limitation of not being able to mount drives via the command line existed until just recently.[10] Therefore, it’s good that a few commercial backup software companies are beginning to release client-free backup software that includes the Windows platform. Those of us with very large Windows machines can finally take advantage of this technology.

Windows isn’t the only platform for which commercial client-free applications are being written. As of this writing, I am aware of several products (that are either in beta or have just been released) that will provide integrated client-free backup and recovery functionality for at least four versions of Unix (AIX, HP-UX, Solaris, and Tru64).

The next section attempts to explain all the steps a client-free backup system must complete. These steps can be scripted, or they can be managed by an integrated commercial client-free backup software package. Hopefully, by reading the steps in detail, you will have a greater appreciation of the functionality client-free backups provide, as well as the complexity of the applications that provide them.

How Client-Free Backups Work

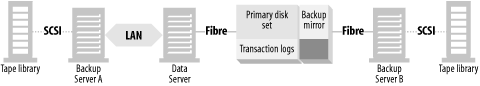

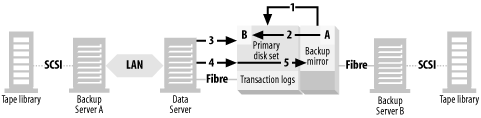

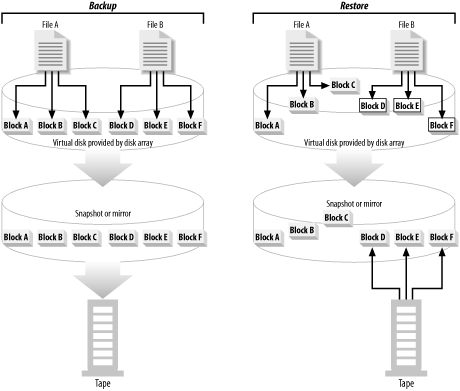

As you can see in Figure 4-5, there is SAN-connected storage that is available to at least two systems: the data server and a backup server. The storage consists of a primary disk set and a backup mirror, which is simply an additional copy of the primary disk set. (In the figure, the primary disk set is mirrored, as represented by the M1/M2.) Note that the SAN-connected storage is depicted as a single, large multihosted storage array with an internal, prewired SAN. The reason for this is that all early implementations of client-free backups have been with such storage arrays. As discussed earlier, there are now enterprise volume managers that will make it possible to do this with JBOD on a SAN, but I use the concept of a large storage array in this example because it’s the solution that’s available from the most vendors, even if it’s the most expensive.

The normal status quo of the backup mirror is that it’s left split from the primary disk set. Why it’s left split will become clear later in this section.

At the appropriate time, the backup application performs a series of tasks:

Backup Server A, the main backup server, tells Backup Server B to begin the backup.

Unmounts the backup mirror (if it’s a mounted filesystem) from Backup Server B.

Exports the volumes from the OS’ volume manager on Backup Server B.

Establishes the backup mirror (i.e., “reconnects” it to the primary disk set) by running commands on Backup Server B that communicate with the storage array.

Backup Server B monitors the backup mirror, waiting for the establish to complete.

Backup Server B waits for the appropriate time to split off the backup mirror.

Backup Server B tells Data Server to put the application into backup mode.

Backup Server B splits the backup mirror.

Backup Server B tells Data Server to take the application out of backup mode.

Backup Server B imports the volumes found on the backup mirror.

Backup Server B mounts any filesystems found on the backup mirror.

Backup Server B now performs the backup of the backup mirror via its I/O backplane, instead of the data server’s backplane.

After the backup, the filesystems are left mounted and imported on Backup Server B.

These tasks mean that the backup data is sent via the SAN to the backup server and doesn’t travel through the client at all, which is why it’s referred to as client-free backups. Again, some vendors refer to this as server-free backups, but I reserve that term for a specific type of backup that will be covered in Section 4.4.
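The sequence of tasks above can be sketched as a small orchestration routine. This is a minimal illustration, not a working backup product: the three objects and every method name on them are hypothetical stand-ins for whatever array, application, and volume manager commands your environment provides. The one design point worth noting is that the application is taken out of backup mode even if the split fails.

```python
def client_free_backup(array, data_server, backup_server):
    """Run the split-mirror sequence; all three arguments are hypothetical objects."""
    backup_server.unmount_mirror()
    backup_server.export_volumes()
    array.establish()
    array.wait_until_synchronized()
    data_server.begin_backup_mode()
    try:
        array.split()
    finally:
        # Always release the application, even if the split fails.
        data_server.end_backup_mode()
    backup_server.import_volumes()
    backup_server.mount_mirror()
    backup_server.backup_to_tape()


class Recorder:
    """Stand-in that records each call instead of touching real hardware."""

    def __init__(self, name, log):
        self.name, self.log = name, log

    def __getattr__(self, step):
        # Any unknown method name becomes a step that logs itself.
        return lambda: self.log.append(f"{self.name}.{step}")


log = []
client_free_backup(Recorder("array", log),
                   Recorder("data", log),
                   Recorder("backup", log))
```

Running this against the recording stand-ins produces the steps in the order listed above, which makes it easy to check the sequencing before wiring in real commands.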

You may find yourself asking a few questions:

How do you put the application into backup mode?

How do you import another machine’s volumes and filesystems?

Why is the backup mirror left split and mounted most of the time?

This sounds really complicated. Is it?

Before explaining this process in detail, let’s examine a few things that make client-free backups possible:

- You must be able to put the application into backup mode

You are going to split off a mirror of the application’s disk, and it has no idea you’re going to do that. The best way to do this is to stop all I/O operations to the disk during the split. This is usually done by shutting down the application that is using the disk. However, some database products, such as Oracle and Informix, allow you to put their databases into a backup-friendly state without shutting them down. This works fine as well. Informix actually freezes all commits, and Oracle does some extra logging. If you’re backing up a file server, you need to stop writes to the files during the time of the split. Otherwise, the filesystem can be corrupted during the process. If you perform an online, split-mirror backup of a SQL Server database, SQL Server’s recovery mechanism is supposed to recover the database back to the last checkpoint. However, any transactions that aren’t in the online transaction log are lost. (In other words, you can’t issue a load transaction command after a split mirror recovery). Microsoft Exchange must normally be shut down during the split.

This isn’t to say that a backup and recovery software company can’t write an API that communicates with the SQL Server and Exchange APIs. This software product could tell SQL Server and Exchange that it performed a traditional backup, when in reality it’s performing a split-mirror backup. In fact, this is already being done for Exchange in the NAS world with snapshots, which act like split mirrors as far as the application is concerned.

- You have to be able to back up the application’s data via a third-party application

If the application is a file server, this is usually not a problem. However, most modern databases are backed up by passing a stream of data to the backup application via a special API. Perhaps two examples will illustrate what I mean.

The standard way to back up Oracle is to use the RMAN (Recovery Manager) utility. Once configured, your backup software automatically talks to Oracle’s RMAN API, which then passes streams of data back to the backup application. However, Oracle also allows you to start sqlplus (a command-line utility you can script), issue the command alter tablespace tablespace begin backup, and then back up that tablespace’s datafiles in any way you want.[11] When it’s time to recover, you shut down the database, restore those datafiles from backup, then use Oracle’s archived redo logs to redo any transactions that have occurred since the backup.

Informix now offers similar functionality, but it didn’t always do so. Prior to the creation of the onbar utility, you were forced to use the ontape utility to back up Informix. This backed up a database’s datafiles or logical logs (transaction logs) to tape. If you didn’t back up the datafiles with ontape, you couldn’t restore the database to a point in time. For example, suppose you shut down the database and used a third-party backup tool to back up the database’s datafiles. You then started up the database and ran it a while, making and recording transactions. If you then shut down the database and used your third-party tool to restore the datafiles, there would be no way within Informix to redo the transactions that occurred since the backup was taken. Therefore, third-party backups with these versions of Informix were useless, preventing you from doing client-free backups of these databases. Now, with the onbar utility, Informix supports what it calls an external backup. As you will see later, this is the tool that you now use to perform client-free backups of Informix.

Although Microsoft Exchange does have transaction logs, the only way to back them up is via the Microsoft-provided tools and APIs. Unless a backup product writes an application that speaks to Microsoft’s API and tricks it into thinking that the split mirror backup is a “normal” backup, there is no way to perform an online third-party backup of it. However, you can perform an offline third-party backup of Exchange by shutting down the Exchange services prior to splitting the mirror. You can then restore the Exchange server back to the point the mirror was split, but you lose any messages that have been sent since then. (This is why there are few people performing client-free backups of Exchange.)

Microsoft’s SQL Server has a sophisticated recovery mechanism that allows you to perform both online and offline backups, with advantages and disadvantages of both. If you split the mirror while SQL Server is online, you shouldn’t suffer a service interruption because of backup, but the recovery process that SQL Server must perform when a restored database is restarted will take much longer. If you shut down SQL Server before splitting the mirror, SQL Server doesn’t need to recover the database back to the last checkpoint before it can start replaying transaction logs. However, you will obviously suffer a service interruption whenever you perform a backup. Whether you shut down the database or not, you can’t use the dump transaction and load transaction commands in conjunction with a split mirror backup of SQL Server.

- You have to be able to establish to (and split the mirror from) the primary disk set

This functionality is provided by software on the backup server or data server that communicates with the volume manager of the storage array. With large, multihosted arrays (e.g., Compaq’s Enterprise Storage Array, EMC Symmetrix, and Hitachi 9000 series), the client-side software is communicating with the built-in, hardware RAID controller. (An example of this is provided later in this chapter.) Other solutions use software RAID; in this situation, the client-side software is talking to the host that is managing the volumes.

- You must be able to see the backup mirror from the backup server

Once you split the backup mirror from the primary disk set, you have to be able to see its disk on the backup server. If you can’t, the entire exercise is pointless. This is where Windows NT has typically had a problem. Once the mirror has been split, the associated drives just “appear” on the SCSI bus—roughly the equivalent to plugging in new disk drives without rebooting or running the Disk Manager or Computer Management GUIs. However, Windows 2000 now uses a light version of the Veritas Volume Manager. You can purchase a full-priced version for both NT and Windows 2000, which comes with command line utilities. By the time you read this, the full version of Volume Manager should support the necessary functionality. In fact, Veritas will reportedly have a script included with the product that is specifically designed to help automate client-free backups.

- You normally have to have the same operating system on the data server and the backup server

The reason for this is that you are going to be reading one host’s disks on another host. If Backup Server B in Figure 4-5 is to back up the data server’s disks, it needs to understand the disk labels, any volume manager disk groups and volumes, and any filesystems that may reside on the disks. With few exceptions, this normally means that both servers must be running the same operating system. At least one client-free backup software package has gotten around this limitation by writing custom software that can understand volume managers and filesystems from other operating systems. But, for the most part, the data server and backup server need to be the same operating system. This can be true even if you aren’t using a volume manager or filesystem and are backing up raw disks. For example, a Solaris system can’t perform I/O operations on a disk that has an NT label on it.

Before continuing this explanation of client-free backups, I must define some terms that will be used in the following example. Please understand that there are multiple vendors that offer this functionality, and they all use different terminology and all work slightly differently.

Tip

The lists that follow in the rest of this chapter give examples for Compaq, EMC, and Hitachi using Exchange, Informix, Oracle, and SQL Server databases, and the Veritas Volume Manager and File System. These examples are for clarification only and aren’t meant to imply that this functionality is available only on these platforms or to indicate an endorsement of these products. These vendors are listed in alphabetical order throughout, and so no inferences should be made as to the author’s preference for one vendor over another. There are several multihosted storage array vendors and several database vendors. Consult your vendor for specifics on how this works on your platform.

- Primary disk set

The term primary disk set refers to the set of disks that hold the primary copy of the data server’s data. What I refer to as a disk set may be several independent disks or one large RAID volume. Whether the primary disk set uses RAID 0, 1, 0+1, 1+0, or 5 is irrelevant. I’m simply referring to the entire set of disks that contain the primary copy of the data server’s data. Compaq calls this the primary mirror, EMC calls it the standard, and Hitachi, the primary volume or P-VOL.

- Backup mirror

The backup mirror device is another set of disks specifically allocated for backup mirror use. When people say “the backup mirror,” they are typically referring to a set of backup mirror disks that are associated with a primary disk set. It’s often referred to as a “third mirror,” because the primary disk set is often mirrored. When synchronized with the primary disk set, this set of disks then represents a third copy of the data—thus the term third mirror. However, not all vendors use mirroring for primary disk sets, so I’ve chosen to use the term backup mirror instead. Compaq and EMC both call this a BCV, or business continuity volume; Hitachi calls it the secondary volume or S-VOL.

- Backup mirror application

This is a piece of software that synchronizes and splits backup mirror devices based on commands that are issued by the client-side version of the software running on the data or backup server. That is, you run the backup mirror application on your Unix or NT system, and it talks to the disk array and tells it what to do. Compaq has a few ways to do this. The more established way is to use the Enterprise Volume Manager GUI and the Compaq Batch Scheduler. The Batch Scheduler is a web-based GUI that is accessible via any web browser and can automate the creation of BCVs. For scripting, however, you should use the SANworks Command Scripter, which allows direct command-line access to the StorageWorks controllers. EMC’s application for this is TimeFinder; Hitachi’s is ShadowImage.

- Establish

To establish a backup mirror is to tell the primary disk set to copy its data over to the backup mirror, thus synchronizing it with the primary disk set. Many backup mirror applications offer an incremental establish, which copies only the sectors that have changed since the last establish. Some refer to this as silvering the mirror, a reference to the silver that is put on the back of a “real” mirror.

- Split

When you split a backup mirror, you tell the disk array to break the link between the primary disk set and the backup mirror. This is typically done to back up the backup mirror. Once it’s finished, you have a complete copy of the primary disk set on another set of devices, and those devices become visible on the backup server. This is also called breaking the mirror.

- Restore

To restore the backup mirror is to copy the backup mirror to the primary disk set. Once the command to do this is issued, the restore usually appears to have been completed instantaneously. As will be explained in more detail later, requests for data that has not yet been restored are redirected to the mirror.

- Main server

As discussed earlier in this chapter, a main server is the server in a backup environment that schedules all backups and stores in the database information about what backups went to what tape. It may or may not have tape drives connected to it.

- Device server

A device server, as discussed earlier, is a server that has tape drives connected to it. The backups that go to these tape drives are scheduled by the main server. The main server also keeps track of what files went to what tapes on this device server.

Backing Up the Backup Mirror

This section explains what’s involved in backing up a backup mirror to another server.

Setup

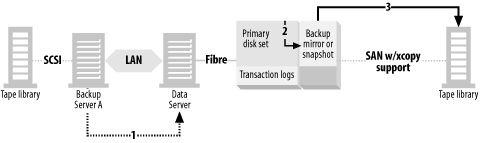

Figure 4-6 again illustrates a typical backup mirror backup configuration. There is a main backup server (Backup Server A) that is connected to a tape library (via SCSI or Fibre Channel) and connected to the data server via a LAN. The data server is connected via Fibre Channel to the storage array, and its datafiles and transaction logs reside on its primary disk sets. The datafiles have a backup mirror associated with them, and it’s connected via Fibre Channel to a device server (Backup Server B), and that device server is connected via SCSI to another tape library.

Tip

Multihosted storage arrays have a SAN built into them. You can put extra switches or hubs between the storage array and the servers connecting to it, but it isn’t necessary unless the number of servers that you wish to connect to the storage array exceeds the number of Fibre Channel ports the array has.

To back up a database, you must back up its datafiles (and other files) and its transaction logs. The datafiles contain the actual database, and the transaction logs contain the changes to the database since the last time you backed up the datafiles. Once you restore the datafiles, the transaction logs can then replay the transactions that occurred between the time of the datafile backup and the time of the failure. Therefore, you must back up the transaction logs continually, because they are essential to a good recovery.
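The relationship between a datafile backup and its transaction logs can be illustrated with a toy model. The sketch below assumes a database is just a dictionary and a transaction log is a list of timestamped changes; real databases are far more involved, and every name here is my own invention.

```python
def recover(datafile_backup, transaction_log, backup_time, failure_time):
    """Restore the datafile backup, then replay logged changes up to the failure."""
    database = dict(datafile_backup)   # start from the restored datafiles
    for timestamp, key, value in sorted(transaction_log):
        # Only changes made after the backup and up to the failure are replayed.
        if backup_time < timestamp <= failure_time:
            database[key] = value
    return database


backup = {"balance": 100}                      # datafiles as of time 2
log = [(1, "balance", 100), (5, "balance", 120),
       (9, "balance", 90), (12, "balance", 0)]
recovered = recover(backup, log, backup_time=2, failure_time=10)
```

Without the log entries from times 5 and 9, the restored database would be stuck at its state as of the datafile backup, which is why the logs have to be backed up continually.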

Back up the transaction logs

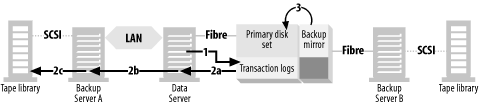

As shown in Figure 4-7, transaction logs are often backed up to disk (1), and then to tape (2). This is my personal preference, but it isn’t required. The reason I prefer to back up to disk and then to tape is that replaying the transaction logs is the slowest part of the restore, and having the transaction logs available on disk significantly speeds up the process. The log disk may or may not be on the storage array and doesn’t need a backup mirror.

You can also see in Figure 4-5 and Figure 4-6 that the transaction logs don’t need to be backed up via the split mirror. The reason for this is that there are no supported methods for backing up transaction logs this way. Therefore, there is no reason to put them on the backup mirror.

As discussed previously, the backup mirror is left split from the primary disk set (3). The following list details how transaction logs are backed up with Exchange, Informix, Oracle, and SQL Server:

- Exchange

As discussed previously, the only way to back up Exchange’s transaction logs is to write an application that communicates directly with Microsoft’s API.

- Informix

Informix’s transaction logs are called logical logs and can be backed up directly to tape or disk. Again, I recommend backing up to disk first, followed by a backup to tape, since recovering logical logs from disk is much faster than recovering them from tape. If you plan to perform client-free backups, you need to use Informix’s onbar command, which provides the log_full.sh script to kick off the backups of logical logs whenever one becomes full. Although the setup in the next section may look involved, once it’s complete, logical log backup and recovery is actually easy with Informix.

- Oracle

Oracle’s transaction logs are called redo logs, and the backup to disk is accomplished with Oracle’s standard archiving procedure. This is done by placing the database in archive log mode and specifying automatic archiving. As soon as one online redo log is filled, Oracle switches to the next one and begins copying the full log to the archived redo log location. To back up these archived redo logs to tape, you can use an incremental backup that runs once an hour or more often if you prefer.

- SQL Server

As discussed previously, you will not be able to use SQL Server’s dump transaction command in conjunction with a split backup. If you need point-in-time recovery, you need to choose another backup method.

Back up the datafiles

It’s now time to back up the datafiles. In order to do that, you must:

Establish the backup mirror to the primary disk set.

Put the database in backup mode, or sync the filesystem.

Split the backup mirror.

Take the database out of backup mode.

Import and mount the backup mirror volumes to the backup server.

Back up the backup mirror volumes to tape.

The details of how these steps are accomplished are discussed next.
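The ordering of the six steps above matters, and so does what happens when one of them fails. The following sketch is one way to express that order in a script. The step names are hypothetical, and each step is a callable supplied by you, so nothing here talks to a real array or database. The one design choice it illustrates is that the database is released from backup mode even if the split fails.

```python
from typing import Callable, Dict, List


def run_split_mirror_backup(steps: Dict[str, Callable[[], None]]) -> List[str]:
    """Run the six steps in order and return the names of those that ran.

    `steps` maps each (hypothetical) step name to a callable.
    """
    done: List[str] = []

    def run(name: str) -> None:
        steps[name]()
        done.append(name)

    run("establish_mirror")
    run("begin_backup_mode")
    try:
        run("split_mirror")
    finally:
        # Release the database even if the split fails, so a failed run
        # never leaves it in backup mode (or with commits blocked).
        run("end_backup_mode")
    run("import_volumes")
    run("backup_to_tape")
    return done
```

If the split raises an exception, the database is still taken out of backup mode, the exception propagates, and the import and tape steps are skipped.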

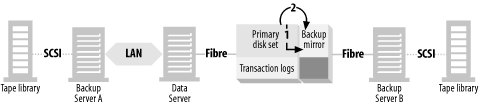

Establish the backup mirror

As shown in Figure 4-8, you must establish (reattach) the backup mirror to the primary disk set (1), causing the mirror to copy to the backup mirror any regions that have been modified since the backup mirror was last established (2).

The following list shows how this works for the various products:

- Compaq

This functionality is available on the RAID Array 8000 (RA8000) and the Enterprise Storage Array 12000 (ESA12000) using HSG80 controllers in switch or hub configurations. The SANworks Enterprise Volume Manager and Command Scripter products support Windows NT/2000, Solaris, and Tru64.

Although Compaq uses the term BCV to refer to a set of disks that comprise a backup mirror, their arrays don’t have any commands that interact with the entire BCV as one entity. All establishing and splitting of mirrors takes place on the individual disk level. Therefore, if you have a striped set of mirrored disks to which you want to assign a third mirror, you need to assign a third disk to each set of mirrored disks that make up that stripe. In order to do this, you issue the following commands:

set mirrorset-name nopolicy

set mirrorset-name members=3

set mirrorset-name replace=disk-name

First, the nopolicy flag tells the mirror not to add members to the disk group until you do so manually. Then you add a member to the mirrorset by specifying that it has one more member than it already has. (The number 3 in this example assumes that there was already a mirrored pair to which you are adding a third member.) Then, you specify the name of the disk that is to be that third mirror.

Once this relationship is established for each disk in the stripe, it will take some time to copy the data from the existing mirror to the backup mirror. To check the status of this copy, issue the command show mirrorset mirrorset-name.

These commands can be issued via a terminal connected directly to the array, or via the Compaq Command Line Scripter tool discussed earlier.

- EMC

On EMC, establishing the BCV (i.e., backup mirror) to the standard (i.e., primary disk set) is done with the symbcv establish command that is part of the EMC Timefinder package. (Timefinder is available on both Unix and Windows.) When issuing this command, you need to tell it which BCV to establish. Since a BCV is actually a set of devices that are collectively referred to as “the BCV,” EMC uses the concept of device groups to tell Timefinder which BCV devices to synchronize with which standard devices. Therefore, prior to issuing the symbcv establish command, you need to create a device group that contains all the standards and the BCV devices to which they are associated. In order to establish the BCV to the standard, you issue the following command:

# symbcv establish -g group_name [-i]

The -g option specifies the name of the device group that you created above. If the BCV has been previously established and split, you can also specify the -i flag that tells Timefinder to perform an incremental establish. This tells Timefinder to look at both the BCV and the standard devices and copy over only those regions that have changed since the BCV was last established. It even allows you to modify the BCV while it’s split. If you modify any regions on the BCV devices (such as when you overwrite the private regions of each device with Veritas Volume Manager so that you can import them to another system), those regions will also be refreshed from the standard, even if they have not been changed on the primary disk set.

Once the BCV is established, you can check the progress of the synchronization with the symbcv verify -g device_group command. This shows the number of “BCV invalids” and “standard invalids” that still have to be copied. It also sometimes lists a percentage complete column, but I have not found that column to be particularly reliable or useful.

- Hitachi

On HDS, establishing the shadow volume (i.e., backup mirror) to the primary mirror is done with the paircreate command that is part of the HDS Shadowimage package. (Shadowimage is available on both Unix and NT.) When issuing this command, you need to tell it which secondary volume (S-VOL) to establish. Since an S-VOL is actually a pool of devices that are collectively referred to as the “reserve pool,” HDS uses the concept of groups to tell Shadowimage which S-VOL devices to synchronize with which primary volumes (P-VOLs). Therefore, prior to issuing the paircreate -g device_group command, you need to create a device group that contains all the primary mirrors and the S-VOL devices with which they are associated.

In order to establish (i.e., synchronize) the S-VOL to the P-VOL, issue the following command:

# paircreate -g device_group

If the S-VOL has been previously established and split, you can instead use the pairresync command, which tells Shadowimage to resynchronize the pairs. Shadowimage then applies to the S-VOL the writes that were made to the P-VOL, and logged in cache, while the pair was split. It even allows you to modify the S-VOL while it’s split. If you modify any regions on the S-VOL devices (such as when you overwrite the private regions of each device with Veritas Volume Manager so that you can import them to another system), those regions are also refreshed from the primary, even if they have not been changed on the primary mirror.

Once the S-VOL is established, you can check the progress of the synchronization with the pairdisplay -g device_group or -m all command. This shows you the number of “transition volumes” that still have to be copied and the percentage of copying already done.
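Because the establish step differs so much between vendors, a backup script usually hides it behind one small function per array. The sketch below only builds the command strings described above and does not run them. The function names and the example mirrorset, disk, and device group names are hypothetical, and a real installation may need additional options that aren’t covered here.

```python
from typing import Dict, List


def compaq_add_third_member(mirrorsets: Dict[str, str]) -> List[str]:
    """Build the three `set` commands for each mirrorset in a stripe.

    `mirrorsets` maps a mirrorset name to the disk that becomes its
    third member (both names are placeholders chosen by the caller).
    """
    cmds: List[str] = []
    for mirrorset, disk in mirrorsets.items():
        cmds.append(f"set {mirrorset} nopolicy")
        cmds.append(f"set {mirrorset} members=3")
        cmds.append(f"set {mirrorset} replace={disk}")
    return cmds


def emc_establish(device_group: str, incremental: bool = False) -> str:
    """Build the symbcv establish command; -i requests an incremental."""
    cmd = f"symbcv establish -g {device_group}"
    return cmd + " -i" if incremental else cmd


def hitachi_establish(device_group: str, previously_split: bool = False) -> str:
    """Use pairresync for a pair that was split, paircreate otherwise."""
    verb = "pairresync" if previously_split else "paircreate"
    return f"{verb} -g {device_group}"
```

For example, compaq_add_third_member({"MIRR1": "DISK30000", "MIRR2": "DISK30100"}) returns six commands, three for each mirrored pair in a two-way stripe.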

Put the database in backup mode

As shown in Figure 4-9, once the backup mirror is fully synchronized with the primary disk set, you have to tell the data server to put the database in backup mode (1). In most client-server backup environments, this is normally accomplished by telling the backup software to run a script prior to a backup. The problem here is the backup client, where the script is normally run, isn’t the host where the script needs to run. The client in Figure 4-9 is actually the Backup Server B, not Data Server. This means that you need to use something like rsh or ssh to pass the command from Backup Server B to Data Server. There are now ssh servers available for both Unix and Windows. The commands you need to run on Data Server will obviously depend on the application.
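As a rough illustration of passing the command from Backup Server B to Data Server, the sketch below builds an ssh argument list. The hostname, the user name, and the function name are assumptions. The quoting uses Python’s shlex.quote, which targets POSIX shells, so a Windows ssh server would need different quoting.

```python
import shlex
from typing import List


def remote_command(host: str, command: List[str], user: str = "backup") -> List[str]:
    """Build an ssh argument list that runs `command` on `host`.

    ssh joins its trailing arguments into a single string for the remote
    shell, so each word is quoted here before joining.
    """
    remote = " ".join(shlex.quote(word) for word in command)
    return ["ssh", f"{user}@{host}", remote]
```

The resulting list can be handed to subprocess.run(args, check=True), so that a failure on Data Server stops the backup before the mirror is split.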

Here are the steps for Exchange, Informix, Oracle, and SQL Server:

- Exchange

Exchange must be shut down prior to splitting the mirror. This is done by issuing a series of net stop commands in a batch file:

net stop "Microsoft Exchange Directory" /y

net stop "Microsoft Exchange Event Service" /y

net stop "Microsoft Exchange Information Store" /y

net stop "Microsoft Exchange Internet Mail Service" /y

net stop "Microsoft Exchange Message Transfer Agent" /y

net stop "Microsoft Exchange System Attendant" /y

- Informix

Informix is relatively easy. All you have to do is specify the appropriate environment variables and issue the command onmode -c block. Unlike Oracle, however, once this command is issued, all commits will hang until the onmode -c unblock command is issued.

- Oracle

Putting Oracle databases in backup mode is no easy task. You need to know the names of every tablespace, and place each tablespace into backup mode using the command alter tablespace tablespace_name begin backup. Many people create a script to automatically discover all the tablespaces and place each in backup mode. Putting the tablespaces into backup mode causes a minor performance hit, but the database will continue to function normally.

- SQL Server

As discussed previously, it isn’t necessary to shut down SQL Server prior to splitting the mirror. However, doing so will speed up recovery time. To stop SQL Server, issue the following command:

net stop MSSQLSERVER
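The Oracle script mentioned above can be quite small once you have the tablespace names. The following sketch generates one alter tablespace statement per tablespace. It takes the names as input rather than discovering them, since how you query them (many sites select tablespace_name from the dba_tablespaces view) depends on your environment. The function name and the example tablespace names are hypothetical.

```python
from typing import Iterable, List


def oracle_backup_mode_sql(tablespaces: Iterable[str], begin: bool = True) -> List[str]:
    """Return one `alter tablespace ... backup` statement per tablespace.

    Pass begin=False to produce the matching `end backup` statements.
    """
    action = "begin" if begin else "end"
    return [f"alter tablespace {name} {action} backup;" for name in tablespaces]
```

The generated statements would then be fed to your usual SQL client on the data server. Passing begin=False produces the statements needed to release the same tablespaces once the mirror has been split.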

Split the backup mirror

As shown in Figure 4-9, once the database is put into backup mode, the backup mirror is split from the primary disk set (2).

This split requires a number of commands on the Compaq array. First, you set the nopolicy flag with the command set mirrorset-name nopolicy. Then you split the mirror by issuing the command reduce disk-name for each disk in the BCV. Then you create a unit name for each disk with the command add unit unit-name disk-name. Finally, you make that unit visible to the backup server by issuing the commands set unit unit-name disable_access_path=all and set unit unit-name enable_access_path=(backupserver-name).

To do this on EMC, run the command symbcv split -g device_group on the backup server. To do this on Hitachi, run the command pairsplit -g device_group.

(On both EMC and Hitachi, the backup mirror devices are made visible to the backup server as part of the storage array’s configuration.)
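The Compaq split involves five commands per disk, which makes it a good candidate for a small generator. The sketch below builds the command sequences exactly as they are described in the text, without running them. The function names and all example names (mirrorset, disk, unit, and backup server) are hypothetical.

```python
from typing import List


def compaq_split(mirrorset: str, disk: str, unit: str, backup_server: str) -> List[str]:
    """Build the split sequence for one disk of the backup mirror."""
    return [
        f"set {mirrorset} nopolicy",      # stop the array re-adding members
        f"reduce {disk}",                 # split this disk off the mirror
        f"add unit {unit} {disk}",        # give the split disk a unit name
        f"set unit {unit} disable_access_path=all",
        f"set unit {unit} enable_access_path=({backup_server})",
    ]


def emc_split(device_group: str) -> str:
    """Build the EMC split command for a device group."""
    return f"symbcv split -g {device_group}"


def hitachi_split(device_group: str) -> str:
    """Build the Hitachi split command for a device group."""
    return f"pairsplit -g {device_group}"
```

For a striped set, you would call compaq_split once per disk in the BCV and issue the resulting commands in order.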

Take the database out of backup mode

Now that the backup mirror is split, you can take the database out of backup mode. Here are the details of taking the databases out of backup mode:

- Exchange

To start Exchange automatically after splitting the mirror, place the following series of commands in a batch file and run it:

net start "Microsoft Exchange Directory" /y

net start "Microsoft Exchange Event Service" /y

net start "Microsoft Exchange Information Store" /y

net start "Microsoft Exchange Internet Mail Service" /y

net start "Microsoft Exchange Message Transfer Agent" /y

net start "Microsoft Exchange System Attendant" /y

- Informix

Again, Informix is relatively easy. All you have to do is specify the appropriate environment variables and issue the command onmode -c unblock. Any commits that were issued while the database was blocked will now complete. If you perform the block, split, and unblock fast enough, the users of the application will never realize that commits were frozen for a few seconds.

- Oracle

Again, you must determine the name of each tablespace in a particular ORACLE_SID, and use the command alter tablespace tablespace_name end backup to take each tablespace out of backup mode.

- SQL Server

To start SQL Server automatically after splitting the mirror, run the following command:

net start MSSQLSERVER

Import the backup mirror’s volumes to the backup server

This step is the most OS-specific and complicated step, since it involves using OS-level volume manager and filesystem commands. However, the prominence of the Veritas Volume Manager makes this a little simpler. Veritas Volume Manager is now available for HP-UX, Solaris, NT, and Windows 2000. Also, the native volume manager for Tru64 is an OEM version of Volume Manager.

On the data server, the logical volumes are mounted as filesystems (or drives) or used as raw devices on Unix for a database. As shown in Figure 4-9, you need to figure out what backup mirror devices belong to which disk group, import those disk groups to the backup server (3), activate the disk groups (which turns on the logical volumes), and mount the filesystems if there are any. This is probably the most difficult part of the procedure, but it’s possible to automate it.

It isn’t possible to assign drive letters to devices via the command line in NT and Windows 2000 without the full-priced version of the Veritas Volume Manager. Therefore, the steps in the list that follows assume you are using this product. Except where noted, the commands discussed next should work roughly the same on both Unix and NT systems. If you don’t want to pay for the full-priced volume manager product, there is an alternate, although much less automated, method discussed at the end of the Veritas-based steps.

You can write a script that discovers the names of the disk groups on the backup mirror and uses the command vxdg -n newname -t import volume-group to import the disk/volume groups from the backup server. (The -t option specifies that the new name is only temporary.) The following list is a brief summary of how this works. It’s not meant to be an exact step-by-step guide; it gives you an overall picture of what’s involved in discovering and mounting the backup mirror.

First, you need a list of disks that are on the primary disk set. Compaq’s show disks command, EMC’s inq command, and Hitachi’s raidscan command provide this information.

To get a list of which disk groups each disk was in, run the command vxdisk -s list devicename on Unix or vxdisk diskinfo disk_name on Windows.

You now have a list of disk groups that can be imported from the backup mirror.

Import each disk group with the vxdg -n newname -t import disk-group command.

A vxrecover is necessary on Unix to activate the volumes on the disk.

On Unix, you may also need to mount the filesystems found on the disk. On NT/2000, the Volume Manager takes care of assigning drive letters to (i.e., mounting) the drives.
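The discovery-and-import steps above can be sketched as follows for the Unix case. The function takes a mapping of backup mirror devices to the disk group each one belongs to (which you would collect from the vxdisk output) and returns the import and recovery commands for each distinct group. The function name, the "_bkup" suffix, and the device names are hypothetical. The -g and -s options to vxrecover are my assumption about how you would start the volumes of one group, so check them against your Volume Manager documentation.

```python
from typing import Dict, List


def import_commands(disk_to_group: Dict[str, str], suffix: str = "_bkup") -> List[str]:
    """Build vxdg import and vxrecover commands for each disk group.

    `disk_to_group` maps a backup mirror device to the disk group name
    recorded on it. Each group is imported once, under a temporary name.
    """
    cmds: List[str] = []
    for group in sorted(set(disk_to_group.values())):
        temp_name = group + suffix
        cmds.append(f"vxdg -n {temp_name} -t import {group}")
        # Assumed options: -g selects the group, -s starts its volumes.
        cmds.append(f"vxrecover -g {temp_name} -s")
    return cmds
```

Mounting the filesystems is left out because mount points and filesystem types are site-specific.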

As mentioned before, if you are running Windows and don’t wish to pay for the full-priced version of Volume Manager, you can’t assign the drive letters via the command line. Since it isn’t reasonable to expect you to go to the GUI every night during backups, an alternative method is to perform the following steps manually each time there is a configuration change to the backup mirror:

Split the backup mirror as described in the previous section, Section 4.3.2.3.3. This makes the drives accessible to the backup server.

Start the Computer Management GUI in Windows 2000 and later (or the Disk Administrator GUI in NT), and tell it to find and assign drive letters to new disk drives.

A reboot may be necessary to effect the changes.

After the drive letters have been assigned, you may establish and split the backup mirror at will. However, bad things are liable to happen if you reboot the server (or try to access the backup mirror’s disks) while the backup mirror is established.

Back up the backup mirror volumes to tape