Chapter 4. Ray for Applications

The previous chapter ended with the point that Ray Serve is not limited to serving ML models. It can serve any kind of function, as is. We saw in Chapter 1 that the Ray API is very general and flexible, not at all focused exclusively on ML use cases.

So what if you use Ray for other applications? How does Ray change the way we design and run microservices? What about serverless computing?

Why Microservices?

These days, applications are often decomposed into microservices, meaning lots of small services, each of which has limited scope and purpose. There are many reasons for using this approach, but let’s focus on the operational aspects.

Figure 4-1 shows a schematic application architecture using microservices. Interactions come through an API gateway and are routed to an appropriate microservice instance. While each microservice is a logical component of the application, there may be many instances of it running in the cluster. It's rare to run just one instance of a microservice, because that instance is a potential single point of failure, should it crash or the server on which it is running fail. Running multiple instances across several servers improves resiliency.

Figure 4-1. Typical microservices
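To make the routing and resiliency argument concrete, here is a minimal plain-Python sketch of a gateway. It spreads requests round-robin over several instances of one microservice and fails over when an instance is down. The `Instance` and `Gateway` classes, the instance names, and the round-robin policy are hypothetical illustrations. Real API gateways have their own routing and health-check mechanisms.

```python
import itertools


class Instance:
    """A hypothetical microservice instance; `healthy` simulates a crash."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.healthy = True

    def handle(self, request: str) -> str:
        if not self.healthy:
            raise ConnectionError(f"{self.name} is down")
        return f"{self.name} handled {request}"


class Gateway:
    """Routes requests round-robin across instances, skipping failed ones."""

    def __init__(self, instances: list[Instance]) -> None:
        self.instances = instances
        self._rotation = itertools.cycle(range(len(instances)))

    def route(self, request: str) -> str:
        # Try each instance at most once, starting from the next one in rotation.
        for _ in range(len(self.instances)):
            instance = self.instances[next(self._rotation)]
            try:
                return instance.handle(request)
            except ConnectionError:
                continue  # fail over to the next instance
        raise RuntimeError("no healthy instances")


orders = [Instance("orders-1"), Instance("orders-2"), Instance("orders-3")]
gateway = Gateway(orders)
orders[1].healthy = False  # simulate a crash of orders-2
print([gateway.route(f"req{i}") for i in range(4)])
# ['orders-1 handled req0', 'orders-3 handled req1', 'orders-1 handled req2', 'orders-3 handled req3']
```

With only one instance, the crash would have failed every request. With three, the other two absorb the traffic.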

Microservices with heavy service loads may need far more instances than the minimum necessary for resiliency. Machines have finite resources, so many microservice instances may be necessary to spread the load over many machines.
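Two pressures set the instance count: a resiliency floor and the load itself. Both can be sketched as simple arithmetic. The function names, the per-instance capacity figures, and the default floor of two instances are assumptions for illustration. The sketch also ignores placement constraints, such as keeping replicas of the same service on different machines.

```python
import math


def instances_needed(requests_per_sec: float, capacity_per_instance: float,
                     min_for_resiliency: int = 2) -> int:
    """Enough instances to carry the load, but never fewer than the resiliency floor."""
    for_load = math.ceil(requests_per_sec / capacity_per_instance)
    return max(min_for_resiliency, for_load)


def machines_needed(instances: int, instances_per_machine: int) -> int:
    """Machines have finite resources, so instances must be spread over several of them."""
    return math.ceil(instances / instances_per_machine)


# Light load: the resiliency floor dominates.
print(instances_needed(50, 100))  # 2
# Heavy load: the load dominates, and 10 instances need 3 machines at 4 per machine.
n = instances_needed(950, 100)
print(n, machines_needed(n, 4))  # 10 3
```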

Finally, instance lifetimes vary. Instances of a rapidly evolving microservice are replaced more frequently, as each new version is deployed.

All of these instances have to be managed explicitly. Conventions, tools, and techniques have to be adopted for how their life cycles are managed, how they communicate, etc. Automation helps, and so do container orchestration systems like Kubernetes, but can we do better? It's annoying that we have to think about machines and microservice instances at all.

Production Services Built with Ray

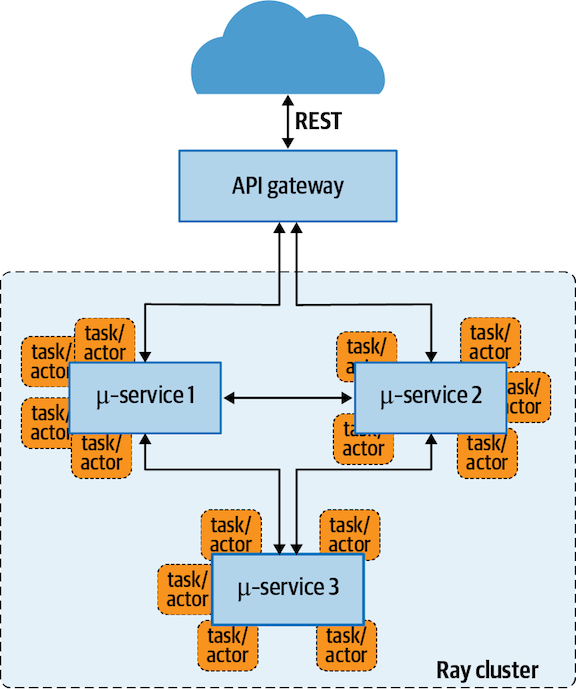

Now consider the application rebuilt on Ray, as shown in Figure 4-2.

Figure 4-2. Microservices on Ray

Ray allows you to work with one logical instance of each microservice. Scaling to cluster-wide resources is handled transparently and uniformly by Ray. The instance life-cycle management challenges are greatly reduced. In effect, Ray is a de facto standard for the automated, fine-grained scaling and distribution of workloads. Without Ray, scaling and distribution have to be explicitly designed and implemented.
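In Ray itself, this style is expressed by decorating a function or class with `@ray.remote`, invoking it with `.remote(...)`, and collecting results with `ray.get`. The sketch here does not use Ray. It is a rough single-machine analogy built on Python's standard `concurrent.futures`. The caller talks to one logical service object, and a thread pool, standing in for Ray's scheduler, decides where each call runs. The `LogicalService` class and `get` helper are hypothetical; their names only mimic Ray's for readability.

```python
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Any, Callable


class LogicalService:
    """One logical service; a worker pool (standing in for Ray's scheduler) decides where calls run."""

    def __init__(self, fn: Callable[..., Any], max_workers: int = 4) -> None:
        self._fn = fn
        self._pool = ThreadPoolExecutor(max_workers=max_workers)

    def remote(self, *args: Any) -> Future:
        # Returns immediately with a future, loosely like the reference Ray returns.
        return self._pool.submit(self._fn, *args)

    def shutdown(self) -> None:
        self._pool.shutdown(wait=True)


def get(futures: list[Future]) -> list[Any]:
    """Block until every result is ready, loosely like ray.get on a list of references."""
    return [f.result() for f in futures]


def score(order_id: int) -> int:
    return order_id * 2  # placeholder business logic


service = LogicalService(score)
# The caller never chooses a worker; it just submits calls and collects results.
print(get([service.remote(i) for i in range(5)]))  # [0, 2, 4, 6, 8]
service.shutdown()
```

The analogy stops at one machine. What Ray adds is running the same pattern across a whole cluster without the caller changing its code.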

Ray operates at a much finer granularity than containers and pods, which are essentially minimachines. Ray actors and tasks can be tiny by comparison.

Hence, Kubernetes and Ray are complementary: together they provide uniform standards and conventions for designing and operating applications at different levels of abstraction.

Ray for Serverless

Serverless is a hot trend in cloud computing. Function as a service (FaaS) is a more accurate term. Instead of configuring virtual machines and the software stack you need, you write a block of code as a function, then submit it to a runtime that executes it for you.

The advantages of serverless include the following:

- Operations are dramatically simpler. You don't manage machines, just your function and its execution.
- You only pay while your function is running, not while your virtual machine is sitting idle.
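A toy model can show both advantages at once. The developer supplies only a function, and billing accrues only while that function runs. `ToyFaaSRuntime` and its `deploy` and `invoke` methods are hypothetical and do not correspond to any provider's API. Real platforms have their own metering and pricing rules.

```python
import time
from typing import Any, Callable


class ToyFaaSRuntime:
    """Hypothetical runtime: you hand it functions; it runs them per event and bills only run time."""

    def __init__(self) -> None:
        self._functions: dict[str, Callable[[Any], Any]] = {}
        self.billed_seconds = 0.0

    def deploy(self, name: str, fn: Callable[[Any], Any]) -> None:
        # No servers to configure: deployment is just registering the function.
        self._functions[name] = fn

    def invoke(self, name: str, event: Any) -> Any:
        start = time.perf_counter()
        try:
            return self._functions[name](event)
        finally:
            # Charge only for the time the function actually ran, even if it raised.
            self.billed_seconds += time.perf_counter() - start


runtime = ToyFaaSRuntime()
runtime.deploy("greet", lambda event: f"hello, {event['name']}")
print(runtime.invoke("greet", {"name": "Ray"}))  # hello, Ray
time.sleep(0.2)  # idle time between requests: nothing is billed
print(runtime.billed_seconds < 0.2)  # True
```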

However, there are significant drawbacks:

- The programming abstraction is very limited. In particular, existing frameworks provide no support for stateful services. Instead, you have to read state from storage and write it back on each function execution. This per-execution overhead makes short functions inefficient, so you are forced to write more coarse-grained functions.
- Available resources are quite limited. GPU support is nonexistent, and functions can only run for a few minutes.[1]
- You have no ability to tune performance and overhead.

Hence, building complex applications requires workarounds for the limitations of serverless. On balance, it will sometimes be better to run your own virtual machines, giving you more control and flexibility.
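The first drawback, reloading state from storage on every execution, can be illustrated by counting storage round trips. `ExternalStore` stands in for a remote database, and `stateless_count` is written in the serverless style. `StatefulCounter` keeps its state in memory between calls, the role a Ray actor (a class decorated with `@ray.remote`) would play. All three are hypothetical plain-Python stand-ins. A production stateful service might still persist state for durability, which this sketch ignores.

```python
class ExternalStore:
    """Stands in for remote storage such as a database; counts round trips."""

    def __init__(self) -> None:
        self._data: dict[str, int] = {}
        self.round_trips = 0

    def read(self, key: str, default: int = 0) -> int:
        self.round_trips += 1
        return self._data.get(key, default)

    def write(self, key: str, value: int) -> None:
        self.round_trips += 1
        self._data[key] = value


def stateless_count(store: ExternalStore, user: str) -> int:
    """Serverless style: state is loaded and saved on every invocation."""
    count = store.read(user) + 1
    store.write(user, count)
    return count


class StatefulCounter:
    """Actor style: state stays in memory between calls."""

    def __init__(self) -> None:
        self._counts: dict[str, int] = {}

    def count(self, user: str) -> int:
        self._counts[user] = self._counts.get(user, 0) + 1
        return self._counts[user]


store = ExternalStore()
print([stateless_count(store, "alice") for _ in range(3)])  # [1, 2, 3]
print(store.round_trips)  # 6: one read and one write per call

counter = StatefulCounter()
print([counter.count("alice") for _ in range(3)])  # [1, 2, 3], with no storage round trips
```

Both versions return the same counts. The difference is the two storage round trips per call, which dominate when the function body itself is tiny.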

What serverless gets right, however, is treating physical and virtual machines as an implementation detail that we would like to avoid if possible.

Ray offers a glimmer of hope for a model “beyond serverless.” In a team with a shared Ray cluster, programming with Ray is serverless in the sense that you stop thinking about servers and the explicit distribution of processes over a cluster. Programming with Ray feels much more like single-machine application development, yet your application “magically” has near-infinite scalability, handled by Ray.

At Anyscale, we’re thinking hard about how Ray can make the promise of serverless available to a wider class of applications and developers.

What’s Next?

In our final chapter, we’ll finish with a summary of what we’ve learned, references for more information, and possible next steps for you to consider.

[1] These limitations will likely go away as serverless implementations improve.
