Prototyping the Local and Cloud Flows

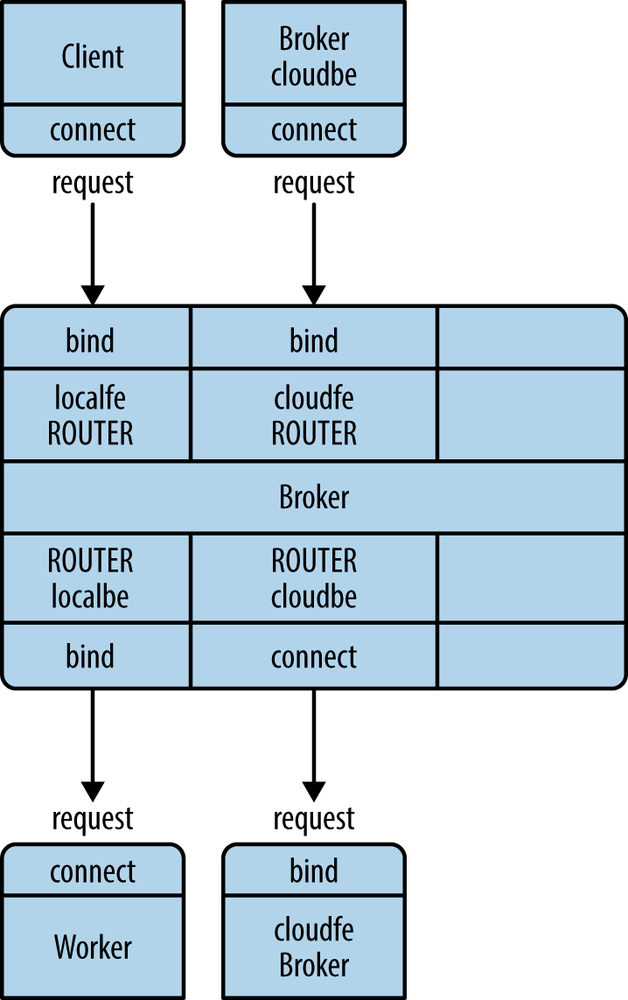

Let’s now prototype the flow of tasks via the local and cloud sockets (Figure 3-21). This code pulls requests from clients and then distributes them to local workers and cloud peers on a random basis.

Figure 3-21. The flow of tasks

Before we jump into the code, which is getting a little complex, let’s sketch the core routing logic and break it down into a simple but robust design.

We need two queues, one for requests from local clients and one for requests from cloud clients. One option would be to pull messages off the local and cloud frontends and pump these onto their respective queues. But this is kind of pointless, because ØMQ sockets are queues already. So let’s use the ØMQ socket buffers as queues.

This was the technique we used in the load-balancing broker earlier in this chapter, and it worked nicely. We only read from the two frontends when there is somewhere to send the requests. We can always read from the backends, as they give us replies to route back. As long as the backends aren’t talking to us, there’s no point in even looking at the frontends.
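To make the "read from the frontends only when there is somewhere to send the requests" rule concrete, here is a minimal sketch in plain Python. It does not use ØMQ at all: `deque` objects stand in for the socket buffers, and every name in it (`route_pending`, `peer_probability`, the worker and peer labels) is hypothetical. The choice to serve the cloud frontend first, and to keep cloud requests on local workers, is an assumption of this sketch rather than something stated above.

```python
import random
from collections import deque


def route_pending(local_frontend, cloud_frontend, workers, peers,
                  peer_probability=0.2, rng=random):
    """Drain the frontends only while a local worker is available.

    local_frontend, cloud_frontend: deques of pending requests
        (stand-ins for the socket buffers).
    workers: deque of idle local worker identities.
    peers: list of cloud peer identities.
    Returns a list of (destination, request) pairs.
    """
    routed = []
    while workers:  # no capacity means we never touch the frontends
        if cloud_frontend:
            request, from_cloud = cloud_frontend.popleft(), True
        elif local_frontend:
            request, from_cloud = local_frontend.popleft(), False
        else:
            break  # nothing is waiting on either frontend

        # Assumption: only local requests may be handed to a random peer;
        # requests that arrived from the cloud stay on local workers.
        if not from_cloud and peers and rng.random() < peer_probability:
            routed.append((rng.choice(peers), request))
        else:
            routed.append((workers.popleft(), request))
    return routed
```

With two idle workers and four pending requests, only two requests are taken off the frontends; the others stay queued until a worker reports back. In a real broker the same gating would be applied by choosing which sockets to poll, rather than by inspecting deques.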

So, our main loop becomes:

Poll the backends for activity. When we get a message, it may be “ready” from a worker or it may be a reply. If it’s a reply, we route it back via the local or cloud frontend.

If a worker has replied, it has become available, so we queue it and count ...
