Our second approach extends the Lazy Pirate pattern with a queue proxy that lets us talk, transparently, to multiple servers, which we can more accurately call âworkers.â Weâll develop this in stages, starting with a minimal working model, the Simple Pirate pattern.

In all these Pirate patterns, workers are stateless. If the application requires some shared state, such as a shared database, we donât know about it as we design our messaging framework. Having a queue proxy means workers can come and go without clients knowing anything about it. If one worker dies, another takes over. This is a nice, simple topology with only one real weakness: the central queue itself, which can become a problem to manage and is a single point of failure.

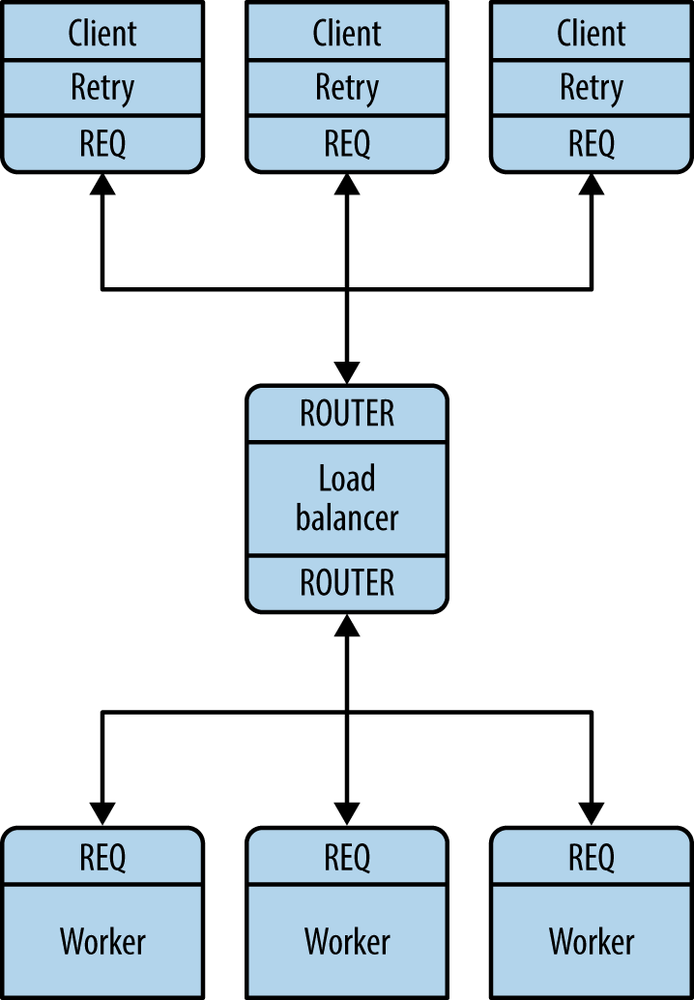

The basis for the queue proxy is the load-balancing broker from Chapter 3. What is the very minimum we need to do to handle dead or blocked workers? Turns out, itâs surprisingly little. We already have a retry mechanism in the client, so using the load-balancing pattern will work pretty well. This fits with ÃMQâs philosophy that we can extend a peer-to-peer pattern like request-reply by plugging naive proxies in the middle (Figure 4-2).

We donât need a special client; weâre still using the Lazy Pirate client. Example 4-4 presents is the queue, which is identical to the main task of the load-balancing broker.

Example 4-4. Simple Pirate queue (spqueue.c)

//// Simple Pirate broker// This is identical to load-balancing pattern, with no reliability// mechanisms. It depends on the client for recovery. Runs forever.//#include "czmq.h"#define WORKER_READY "\001"// Signals worker is readyintmain(void){zctx_t*ctx=zctx_new();void*frontend=zsocket_new(ctx,ZMQ_ROUTER);void*backend=zsocket_new(ctx,ZMQ_ROUTER);zsocket_bind(frontend,"tcp://*:5555");// For clientszsocket_bind(backend,"tcp://*:5556");// For workers// Queue of available workerszlist_t*workers=zlist_new();// The body of this example is exactly the same as lbbroker2...}

Example 4-5 shows the worker, which takes the Lazy Pirate server and adapts it for the load-balancing pattern (using the REQ âreadyâ signaling).

Example 4-5. Simple Pirate worker (spworker.c)

//// Simple Pirate worker// Connects REQ socket to tcp://*:5556// Implements worker part of load balancing//#include "czmq.h"#define WORKER_READY "\001"// Signals worker is readyintmain(void){zctx_t*ctx=zctx_new();void*worker=zsocket_new(ctx,ZMQ_REQ);// Set random identity to make tracing easiersrandom((unsigned)time(NULL));charidentity[10];sprintf(identity,"%04X-%04X",randof(0x10000),randof(0x10000));zmq_setsockopt(worker,ZMQ_IDENTITY,identity,strlen(identity));zsocket_connect(worker,"tcp://localhost:5556");// Tell broker we're ready for workprintf("I: (%s) worker ready\n",identity);zframe_t*frame=zframe_new(WORKER_READY,1);zframe_send(&frame,worker,0);intcycles=0;while(true){zmsg_t*msg=zmsg_recv(worker);if(!msg)break;// Interrupted// Simulate various problems, after a few cyclescycles++;if(cycles>3&&randof(5)==0){printf("I: (%s) simulating a crash\n",identity);zmsg_destroy(&msg);break;}elseif(cycles>3&&randof(5)==0){printf("I: (%s) simulating CPU overload\n",identity);sleep(3);if(zctx_interrupted)break;}printf("I: (%s) normal reply\n",identity);sleep(1);// Do some heavy workzmsg_send(&msg,worker);}zctx_destroy(&ctx);return0;}

To test this, start a handful of workers, a Lazy Pirate client, and the queue, in any order. Youâll see that the workers eventually all crash and burn, and the client retries and then gives up. The queue never stops, and you can restart workers and clients ad nauseum. This model works with any number of clients and workers.

Get ZeroMQ now with the O’Reilly learning platform.

O’Reilly members experience books, live events, courses curated by job role, and more from O’Reilly and nearly 200 top publishers.