Jupyter is where humans and data science intersect

Discover how data-driven organizations are using Jupyter to analyze data, share insights, and foster practices for dynamic, reproducible data science.

Crisscross (source: Callum Wale on Unsplash)

I’m grateful to join Fernando Pérez and Brian Granger as a program co-chair for JupyterCon 2018. Project Jupyter, NumFOCUS, and O’Reilly Media will present the second annual JupyterCon in New York City August 21–25, 2018.

Timing for this event couldn’t be better. The human side of data science, machine learning/AI, and scientific computing is more important than ever. We see this in the broad adoption of data-driven decision-making in human organizations of all kinds, the increasing importance of human-centered design in tools for working with data, the urgency for better data insights in the face of complex socioeconomic conditions worldwide, and the dialogue about the social issues these technologies bring to the fore: collaboration, security, ethics, data privacy, transparency, propaganda, etc.

To paraphrase our co-chairs, Brian Granger:

Jupyter is where humans and data science intersect.

And Fernando Pérez:

The better the technology, the more important that human judgement becomes.

Consequently, we’ll explore three main themes at JupyterCon 2018:

- Interactive computing with data at scale: the technical best practices and organizational challenges of supporting interactive computing in companies, universities, research collaborations, etc. (JupyterHub)

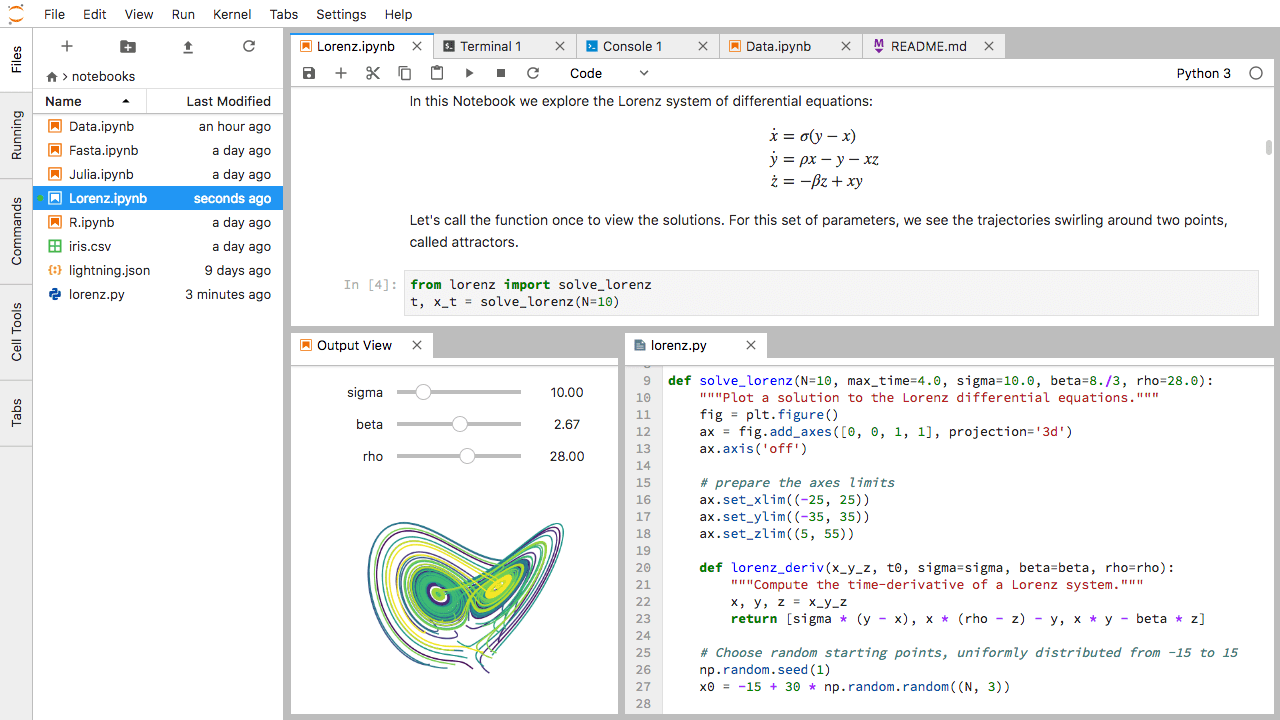

- Extensible user interfaces for data science, machine learning/AI, and scientific computing (JupyterLab)

- Computational communication: taking the artifacts of interactive computing and communicating them to different audiences

A meta-theme that ties these together is extensible software architecture for interactive computing with data. Jupyter is built on a set of flexible, extensible, and reusable building blocks that can be combined and assembled to address a wide range of use cases. These building blocks are expressed through the various open protocols, APIs, and standards of Jupyter.

The Jupyter community has much to discuss and share this year. For example, success stories such as the data science program at UC Berkeley illustrate the power of JupyterHub deployments at scale in education, research, and industry. As universities and enterprise firms learn to handle the technical challenges of rolling out hands-on, interactive computing at scale, a host of organizational challenges comes to the fore: practices regarding collaboration, security, compliance, data privacy, ethics, etc. These points are especially pressing in verticals such as health care, finance, and education, where the handling of sensitive data is rightly constrained by ethical and legal requirements (HIPAA, FERPA, etc.). Overall, this dialogue is extremely relevant. It is happening at the intersection of contemporary political and social issues, industry concerns, new laws (GDPR), the evolution of computation, and good storytelling and communication in general, as we’ll explore with practitioners throughout the conference.

The recent beta release of JupyterLab embodies the meta-theme of extensible software architecture for interactive computing with data. While many people think of Jupyter as a “notebook,” that’s merely one building block needed for interactive computing with data. Other building blocks include terminals, file browsers, LaTeX, Markdown, rich outputs, text editors, and renderers/viewers for different data formats. JupyterLab is the next-generation user interface for Project Jupyter, and it provides these building blocks in a flexible, configurable, customizable environment. This opens the door for Jupyter users to build custom workflows, and for organizations to extend JupyterLab with their own custom functionality.

Thousands of organizations require data infrastructure for reporting, sharing data insights, reproducing results of analytics, etc. Recent business studies estimate that more than half of all companies globally are precluded from adopting AI technologies due to a lack of digital infrastructure, often because their efforts toward data and reporting infrastructure are buried in technical debt. Much of that infrastructure was built from scratch, even when organizations needed essentially the same building blocks. JupyterLab’s primary goal is to make it routine to build highly customized, interactive computing platforms, while supporting more than 90 different popular programming environments.

A third major theme builds on top of the other two: computational communication. For data and code to be useful for humans who need to make decisions, they have to be embedded into a narrative (a story) that can be communicated to others. Examples of this pattern include data journalism, reproducible research and open science, computational narratives, open data in society and government, citizen science, and really any area of scientific research (physics, zoology, chemistry, astronomy, etc.), plus the range of economics, finance, and econometric forecasting.

Another growing segment of use cases involves Jupyter as a “last-mile” layer for leveraging AI resources in the cloud. This becomes especially important as new hardware emerges for AI workloads, while AI competes for that hardware with demand from online gaming, virtual reality, cryptocurrency mining, etc.

Please take the following as personal opinion and observation: we’ve reached a point where hardware appears to be evolving more rapidly than software, while software appears to be evolving more rapidly than effective process. At O’Reilly Media, we work to map the emerging themes in industry, in a process nicknamed “radar.” This perspective about hardware is a theme I’ve been mapping, while comparing notes with industry experts. A few data points to consider: Jeff Dean’s talk at NIPS 2017, “Machine Learning for Systems and Systems for Machine Learning,” which compares CPUs/GPUs/TPUs and describes how AI is transforming the design of computer hardware; “The Case for Learned Index Structures,” also from Google, about the impact of “branch vs. multiply” costs on decades of database theory; the podcast interview “Scaling machine learning” with Reza Zadeh about the critical importance of hardware/software interfaces in AI apps; and the video interview that Wes McKinney and I recorded at JupyterCon 2017 about how Apache Arrow presents a much different take on how to leverage hardware and distributed resources.

The notion that “hardware > software > process” contradicts the past 15–20 years of software engineering practice. It’s an inversion of the general assumptions we make. In response, industry will need to rework approaches for building software within the context of AI, as Lenny Pruss from Amplify Partners articulated succinctly in “Infrastructure 3.0: Building blocks for the AI revolution.” In this light, Jupyter provides an abstraction layer, a kind of buffer to help “future-proof” complex use cases in NLP, machine learning, and related fields. We’re seeing this from most of the public cloud vendors who are also leaders in AI (Google, Amazon, Microsoft, IBM, etc.), and they will be represented at the conference in August.

Our program at JupyterCon will feature expert speakers across all of these themes. However, to me, that’s merely the tip of the iceberg. So much of the real value that I get from conferences happens in the proverbial “Hallway Track,” where you run into people who are riffing off news they’ve just learned in a session, perhaps in line with your thinking, perhaps in a completely different direction. Those conversations have space to flourish when people get immersed in the community, the issues, the possibilities.

It’ll be a busy week. We’ll have two days of training courses: intensive, hands-on coding, a lot of interaction with expert instructors. Training will overlap with one day of tutorials: led by experts, generally larger than training courses (though more detailed than session talks), featuring a lot of Q&A.

Then we’ll have two days of keynotes and session talks, expo hall, lunches and sponsored breaks, plus Project Jupyter sponsored events. Events include Jupyter User Testing, author signings, “Meet the Experts” office hours, and demos in the vendor expo hall, plus related meetups in the evenings. Last year, the Poster Session was one of the biggest surprises to me: it was such a hit that it was difficult to move through the room; walkways were packed with people asking presenters questions about their projects.

This year, we’ll introduce a Business Summit, similar to the popular summits at the Strata Data Conferences and The AI Conference. This will include high-level presentations on the most promising and important developments in Jupyter for executives and decision-makers. Brian Granger and I will be hosting the Business Summit, along with Joel Horwitz of IBM. One interesting data point: among the regional events, we’ve seen much more engagement this year from enterprise and government than we’d expected, along with more emphasis on business use cases and new product launches. The ecosystem is growing, and will be represented well at JupyterCon!

We will also feature an Education Track in the main conference, expanding on the well-attended Education Birds-of-a-Feather and related talks during JupyterCon 2017. Use of Jupyter in education has grown rapidly across many contexts: middle/high-school, universities, corporate training, and online courses. Lorena Barba and Robert Talbert will be organizing this track.

Following our schedule of conference talks, the week wraps up with a community sprint day on Saturday. You can work side-by-side with leaders and contributors in the Jupyter ecosystem to implement that feature you’ve always wanted, fix bugs, work on design, write documentation, test software, or dive deep into the internals of something in the Jupyter ecosystem. Be sure to bring your laptop.

Note that we believe true innovation depends on hearing from, and listening to, people with a variety of perspectives. Please read our Diversity Statement for more details. Also, we’re committed to creating a safe and productive environment for everyone at all of our events. Please read our Code of Conduct. Last year, we were able to work with the community plus matching donations to provide several “Diversity & Inclusion” scholarships, as well as more than a dozen student scholarships. We’re looking forward to building on that this year!

That’s a sample of what’s coming up for JupyterCon in NYC this August. In preparation for the event, we’ll also help present and sponsor a regional community event: check out Jupyter Pop-up DC, May 15, 2018. We look forward to many opportunities to showcase new work and ideas, to meet each other, to learn about the architecture of the project itself, and to contribute to the future of Jupyter.