Chapter 1. Myths and Realities of AI

Pamela McCorduck, in her book Machines Who Think (W. H. Freeman), describes AI as an “audacious effort to duplicate in an artifact” what all of us see as our most defining attribute: human intelligence. Her 1979 book provides a fascinating glimpse into early thinking about AI—not using theorems or science, but instead describing how people came to imagine its possibilities. With something so magical and awe-inspiring as AI, it’s not hard to imagine the surrounding hyperbole. This chapter hopes to maintain the awe but ground it in reality.

Stuart Russell, a computer scientist and one of the most important AI thinkers of the 21st century, discusses the past, present, and future of AI in his book Human Compatible (Viking). Russell writes that AI is rapidly becoming a pervasive aspect of today and will be the dominant technology of the future. Perhaps in no industry but healthcare is this so true, and we hope to address the implications of that in this book.

For most people, the term artificial intelligence evokes a number of attributed properties and capabilities—some real, many futuristic, and others imagined. AI does have several superpowers, but it is not a “silver bullet” that will solve skyrocketing healthcare costs and the growing burden of illness. That said, thoughtful AI use in healthcare creates an enormous opportunity to help people live healthier lives and, in doing so, control some healthcare costs and drive better outcomes. This chapter describes healthcare and technology myths surrounding AI as a prelude to discussing how AI-enhanced apps, systems, processes, and platforms provide enormous advantages in quality, speed, effectiveness, cost, and capacity, allowing clinicians to focus on people and their healthcare.

A lot of the hype accompanying AI stems from machine learning models’ performance compared to that of people, often clinicians. Papers and algorithms abound describing machine learning models outperforming humans in various tasks ranging from image and voice recognition to language processing and predictions. This raises the question of whether machine learning (ML) diagnosticians will become the norm. However, the performance of these models in clinical practice often differs from their performance in the lab; machine learning models built on training and test data sometimes fail to achieve the same success in areas such as object detection (e.g., identifying a tumor) or disease prediction. Real-world data is different—that is, the training data does not match the real-world data—and this causes a data shift. For example, something as simple as variation in skin types could cause a model trained in the lab to lose accuracy in a clinical setting. ML diagnosticians may be our future, but additional innovation must occur for algorithm diagnosticians to become a reality.
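The effect of a data shift can be made concrete with a toy example. The sketch below is only an illustration under stated assumptions: the single "brightness" feature, the values, and the 0.30 offset are all invented, and the "model" is just a threshold placed midway between the two class averages.

```python
def fit_threshold(values, labels):
    """Place a decision threshold midway between the two class means."""
    pos = [v for v, y in zip(values, labels) if y == 1]
    neg = [v for v, y in zip(values, labels) if y == 0]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2


def accuracy(threshold, values, labels):
    """Fraction of samples where 'value > threshold' matches the label."""
    preds = [1 if v > threshold else 0 for v in values]
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)


# Invented "lab" data: one feature per image, label 1 = lesion present.
lab_values = [0.10, 0.15, 0.20, 0.25, 0.60, 0.65, 0.70, 0.75]
lab_labels = [0, 0, 0, 0, 1, 1, 1, 1]
threshold = fit_threshold(lab_values, lab_labels)

# Invented "clinic" data: same cases, but every value is offset,
# standing in for a population the training data did not cover.
clinic_values = [v + 0.30 for v in lab_values]

lab_accuracy = accuracy(threshold, lab_values, lab_labels)
clinic_accuracy = accuracy(threshold, clinic_values, lab_labels)
print(lab_accuracy, clinic_accuracy)
```

The threshold separates the lab data perfectly, yet misclassifies several of the shifted cases even though nothing about the model changed.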

The hyperbole and myths that have emerged around AI blur the art of what’s possible with AI. Before discussing those myths, let’s understand what we mean by AI. Descriptions of AI are abundant, but the utility of AI will be more important than a definition. Much of this book will explore the service of AI. Here we aim to clarify the context and meaning of the term AI. A brief look at its origin provides a useful framework for understanding how AI is understood and used today.

AI Origins and Definition

Humans imagining the art of what’s possible with artificial life and machines has been centuries in the making. In her 2018 book Gods and Robots (Princeton University Press), Adrienne Mayor, a research scholar, paints a picture of humans envisioning artificial life in the early years of recorded history. She writes about ancient dreams and myths of technology enhancing humans. A few thousand years later, in 1943, two Chicago-area researchers introduced the notion of neural networks in a paper describing a mathematical model. The two researchers—a neuroscientist, Warren S. McCulloch, and a logician, Walter Pitts—attempted to explain how the complex decision processes of the human brain work using math. This was the birth of neural networks, and the dawn of artificial intelligence as we now know it.

Decades later, in a small town along the Connecticut River in New Hampshire, a plaque hangs in Dartmouth Hall, commemorating a 1956 summer research project, a brainstorming session conducted by mathematicians and scientists. The names of the founding fathers of AI are engraved on the plaque, recognizing them for their contributions during that summer session, which was the first time that the words “artificial intelligence” were used; John McCarthy, widely known as the father of AI, gets credit for coining the term.

The attendees at the Dartmouth summer session imagined artificial intelligence as computers doing things that we perceive as displays of human intelligence. They discussed ideas ranging from computers that understand human speech to machines that operate like the human brain, using neurons. What better display of intelligence than devices that are able to speak and understand human language, now known as natural language processing? During this summer session, AI founders drew inspiration from how the human brain works as it relays information between input receptors, neurons, and deep brain matter. Consequently, thinking emerged on using artificial neurons as a technique for mimicking the human brain.

Enthusiasm and promises for the transformation of healthcare abound, but this goal remains elusive. In the 1960s, the AI community introduced expert systems, which attempt to transfer expertise from an expert (e.g., a doctor) to computers using rules and then to apply them to a knowledge base to deduce new information, an inference. In the 1970s, rules-based systems like MYCIN, an AI system engineered to treat blood infections, held much promise. MYCIN attempted to diagnose patients using their symptoms and clinical test results. Although its results were better than or comparable to those of specialists in blood infections, its use in clinical practice did not materialize. Another medical expert system, CADUCEUS, tried to improve on MYCIN. MYCIN, CADUCEUS, and other expert systems (such as INTERNIST-I) illustrate the efforts of the AI community to create clinical diagnostic tools, but none of these systems found its way into clinical practice.
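The idea of applying rules to a knowledge base to deduce new information can be sketched in a few lines. This is a minimal illustration using simple forward chaining; it is not a reconstruction of how MYCIN or CADUCEUS worked, and the rules and fact names are invented for the example and carry no clinical meaning.

```python
def forward_chain(facts, rules):
    """Apply if/then rules repeatedly until no new fact can be deduced."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conclusion not in known and all(c in known for c in conditions):
                known.add(conclusion)  # an inference: a newly deduced fact
                changed = True
    return known


# Hypothetical rules: (conditions that must all hold, conclusion to add).
RULES = [
    (("fever", "positive_blood_culture"), "suspected_bloodstream_infection"),
    (("suspected_bloodstream_infection", "gram_negative_rods"),
     "consider_gram_negative_coverage"),
]

deduced = forward_chain(
    {"fever", "positive_blood_culture", "gram_negative_rods"}, RULES
)
print(sorted(deduced))
```

The second rule fires only because the first rule has already added its conclusion to the knowledge base, which is the chaining that gives such systems their name.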

This situation persists today; AI in healthcare is not readily found at the clinical bedside. Several research papers demonstrate that AI performs better than humans at tasks such as diagnosing disease. For example, deep learning algorithms outperform radiologists at spotting malignant tumors. Yet these “superior” disease-detecting algorithms largely remain in the labs. Will these machine learning diagnostic tools meet the same fate as the expert systems of the 20th century? Will it be many years before AI substantially augments humans in clinical settings?

This is not the 1970s; AI now permeates healthcare in a variety of ways—in production, for example. Artificial intelligence helps researchers in drug creation for various diseases, such as cancer. Beth Israel Deaconess Medical Center, a teaching hospital of Harvard Medical School, uses AI to diagnose possible terminal blood diseases. It uses AI-enhanced microscopes to scan for injurious bacteria like E. coli in blood samples, working at a faster rate than manual scanning. Natural language processing is widely used for automating the extraction and encoding of clinical data from physician notes. Multiple tools at work in production settings today use natural language processing for clinical coding. Machine learning helps with steering patients to the optimal providers. For decades, machine learning has been used to identify fraud and reduce waste. The widespread adoption of AI in specific use cases in healthcare companies, coupled with recent innovations in AI, holds tremendous promise for expanding the use of AI in clinical settings.

This book on AI-First healthcare hopes to show a different future for the widespread adoption of AI in healthcare, including in clinical settings and in people’s homes. There continues to be an active conversation among clinicians and technologists about implementing AI in healthcare. A 2019 symposium attended by clinicians, policy makers, healthcare professionals, and computer scientists profiled real-world examples of AI moving from the lab to the clinic.1 The symposium highlighted three themes for success: life cycle planning, stakeholder involvement, and contextualizing AI products and tools in existing workflows.

A lot has changed in the years since the conception of neural networks in 1943. AI continues to evolve every decade, explaining why consensus on an AI definition remains elusive. Since we are not all operating with the same definition, there is a lot of confusion around what AI is and what it is not. How artificial intelligence is defined can depend on who provides the explanation, the context, and the reason for offering a definition. AI is a broad term representing our intent to build humanlike intelligent entities for selected tasks. The goal is to use fields of science, mathematics, and technology to mimic or replicate human intelligence with machines, and we call this “AI.” In this book, we will explore several intelligent entities, such as augmented doctors, prediction machines, virtual care spaces, and more, that improve healthcare outcomes, patient care, experiences, and cost.

We continue to build and exhibit systems, machines, and computers that can do what we previously had understood only humans could do: win at checkers, beat a reigning world chess master, best the winningest Jeopardy! contestants. Famously, the computer program AlphaGo has defeated world champions in the 4,000-year-old abstract strategy game Go and excels in emulating (and surpassing) human performance of this game. Articles abound on machine learning models in labs outperforming doctors on selected tasks, such as identification of possible cancerous tumors in imaging studies; this suggests that AI may eventually replace some physician specialties, such as radiology.

AI and Machine Learning

A tenet of this book’s central message is that artificial intelligence comprises more than machine learning. If we think of AI solely in the context of ML, it’s doubtful that we will ever build intelligent systems that mirror human intelligence in the performance of clinical or healthcare activities, or that we will create AI that materially enhances patient experiences, reduces costs, and improves the health of people and the quality of life for healthcare workers.

Most publicized implementations of AI showcase ML model successes, which explains why many see an equivalence between machine learning and AI. Furthermore, the most common AI applications utilizing deep learning, computer vision, or natural language processing all employ machine learning.

The supposition that machine learning is the same as AI ignores or dismisses those aspects of a software stack used to build intelligent systems that are not machine learning. Or worse, our imagination or knowledge of what’s possible with AI is limited to only those functions implementable by machine learning.

AI comprises many of the components of the AI Stack in Figure 1-1, which illustrates the many capabilities of AI beyond machine learning.

Figure 1-1. AI Stack

AI’s aspiration to create machines exhibiting humanlike intelligence requires more than a learning capability. We expect and need the many AI capabilities for engineering healthcare solutions illustrated in Figure 1-1. Transforming healthcare with AI involves computer vision, language, audio, reasoning, and planning functions. For some problems, we may choose to give AI autonomy; in our discussion on ethics later in the book, we address the implications and risks of doing so.

The AI features in an implementation will vary based on the problem at hand. For example, in an ICU, we may want AI to have audio capability to enable question-and-answer exchanges between AI and clinicians. A clinician in conversation with AI requires language skills in AI. AI must be able to understand the natural language of people; that is, it must have natural language processing (NLP). Speech to text and text to speech must be capabilities of AI to enable a rich conversation. We need sensors to detect movement, such as a patient falling out of bed, with AI triggering alerts. At the heart of enabling this intelligent space in the ICU, we need continuous learning. All of these features require machine learning. Thinking of AI as a single thing or a single machine would be incorrect. For example, in the ICU, AI would be part of the environment. Embedding AI in voice speakers, sensors, and other smart things working together provides humanlike intelligence augmenting clinicians. We know that patients recall less than 50% of their physician communications during the patient-doctor interaction.2 Therein lies one of many opportunities to use AI to improve patient-doctor interactions.

The AI Stack may comprise both a hardware and a software stack, a set of underlying components needed to create a complete solution. Using the AI Stack in Figure 1-1 as a guide, the next sections provide a brief primer on AI capabilities, starting with machine learning and neural networks.

Machine learning and neural networks

There are different types or subcategories of machine learning, such as supervised learning, unsupervised learning, and deep learning.

In supervised learning, we train the computer using data that is labeled. If we want an ML model to detect a child’s mother, we provide a large number of pictures of the mother, labeling each photo as “the mother.” Or if we want an ML model to detect pneumonia in an X-ray, we take many X-rays of pneumonia and label each as such. In essence, we tag the data with the correct answer. It’s like having a supervisor who gives you all the right solutions for your test. A supervised machine learning algorithm learns from labeled training data. In effect, we supervise the training of the algorithm. With supervised machine learning, we effectively use a computer to identify, sort, and categorize things. If we need to pore through thousands of X-rays to identify pneumonia, we will likely perform this task more quickly with a computer using machine learning than with a doctor. However, just because the computer outperforms the doctor on this task doesn’t mean the computer can do better than a doctor in clinical care.
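As a rough illustration of learning from labeled data, the sketch below trains a nearest-centroid classifier, one of the simplest supervised techniques. Everything here is an assumption made for the example: the two numeric features stand in for measurements that would have to be extracted from an X-ray, and the values are invented.

```python
def train_centroids(samples, labels):
    """Average the feature vectors of each labeled class."""
    sums, counts = {}, {}
    for x, y in zip(samples, labels):
        acc = sums.setdefault(y, [0.0] * len(x))
        for i, value in enumerate(x):
            acc[i] += value
        counts[y] = counts.get(y, 0) + 1
    return {y: [v / counts[y] for v in acc] for y, acc in sums.items()}


def predict(centroids, x):
    """Return the label whose class average is closest to x."""
    def sq_dist(a, b):
        return sum((p - q) ** 2 for p, q in zip(a, b))
    return min(centroids, key=lambda y: sq_dist(centroids[y], x))


# Invented, labeled training data: the label is the "correct answer".
samples = [(0.8, 0.7), (0.9, 0.6), (0.7, 0.8),
           (0.2, 0.1), (0.1, 0.3), (0.3, 0.2)]
labels = ["pneumonia"] * 3 + ["normal"] * 3

centroids = train_centroids(samples, labels)
print(predict(centroids, (0.75, 0.65)))
print(predict(centroids, (0.15, 0.25)))
```

The labels do all the supervising: the algorithm never decides what "pneumonia" means, it only learns which region of feature space the labeled examples occupy.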

Much of the learning we do as humans is unsupervised, without the benefit of a teacher. We don’t give the answers to unsupervised learning models, and they don’t use labeled data. Instead, we ask the algorithm, the model, to discover the answer. Applying this to the example of the child’s mother, we provide the face recognition algorithm with features, like skin tone, eye color, facial shape, dimples, hair color, or distance between the eyes. Unsupervised machine learning recognizes the unique features of the child’s mother; learning from the data, it identifies “the mother” in images with high accuracy.
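One common unsupervised technique is clustering, where the algorithm groups similar items without being told what the groups are. The sketch below is a bare-bones k-means, offered as an illustration rather than as the method the text has in mind; the points and the starting centers are invented, and passing the starting centers in explicitly is a simplification to keep the run repeatable.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two points."""
    return sum((p - q) ** 2 for p, q in zip(a, b))


def kmeans(points, centers, iterations=10):
    """Group unlabeled points around the given starting centers."""
    centers = [tuple(c) for c in centers]
    labels = []
    for _ in range(iterations):
        # Assign each point to its nearest center.
        labels = [min(range(len(centers)), key=lambda k: sq_dist(p, centers[k]))
                  for p in points]
        # Move each center to the mean of its assigned points.
        new_centers = []
        for k in range(len(centers)):
            members = [p for p, lab in zip(points, labels) if lab == k]
            if members:
                new_centers.append(
                    tuple(sum(c) / len(members) for c in zip(*members)))
            else:
                new_centers.append(centers[k])  # keep an empty cluster's center
        if new_centers == centers:
            break
        centers = new_centers
    return labels, centers


# Invented feature vectors with no labels attached.
points = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1),
          (5.0, 5.1), (5.2, 4.9), (4.8, 5.0)]
cluster_ids, final_centers = kmeans(points, centers=[(0.0, 0.0), (6.0, 6.0)])
print(cluster_ids)
```

Note that the output is only a cluster number per point. The algorithm finds the grouping, but a person still has to decide what each group means.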

Deep learning uses a series of algorithms to derive answers. Data feeds one side, the input layer, and moves through the hidden layer(s), pulling specific information that feeds the output layer, producing an insight. We describe these layers, or series of algorithms, as a neural network. Figure 1-2 illustrates a neural network with three layers. Each layer’s output is the next layer’s input. The depth of the neural network reflects the number of layers traversed in order to reach the output layer. When a neural network comprises more than three layers or more than one hidden layer, it is considered deep, and we refer to this machine learning technique as deep learning. It’s often said that the neural network mimics the way the human brain operates, but the reality is that the neural network is an attempt to simulate the network of neurons that make up a human brain so that machines will be able to learn like humans.

Figure 1-2. Illustration of a neural network
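The flow of data from the input layer through a hidden layer to the output layer can be sketched as a simple forward pass. This is a minimal illustration only: the layer sizes, weights, and biases are invented, the sigmoid activation is an assumed choice, and a real network would learn its weights rather than have them written in by hand.

```python
import math


def sigmoid(z):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def dense(inputs, weights, biases):
    """One layer: each neuron takes a weighted sum of inputs plus a bias."""
    return [sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


def forward(inputs, layers):
    """Each layer's output becomes the next layer's input."""
    activations = inputs
    for weights, biases in layers:
        activations = dense(activations, weights, biases)
    return activations


# Invented parameters: 2 inputs -> 3 hidden neurons -> 1 output.
LAYERS = [
    ([[0.5, -0.2], [0.3, 0.8], [-0.6, 0.1]], [0.0, 0.1, -0.1]),  # hidden layer
    ([[1.0, -1.0, 0.5]], [0.2]),                                 # output layer
]

output = forward([1.0, 2.0], LAYERS)
print(output)
```

Adding more entries to `LAYERS` adds hidden layers, which is all that "deeper" means in this picture.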

The neural network allows the tweaking of parameters until the model provides the desired output. Since the output can be tuned to our liking, it’s a challenge to “show our work,” resulting in the “black box” categorization. We’re less concerned about how the model got the result and are instead focused on consistently getting the same result. The more data advances through the neural network, the less likely it is that we can pinpoint exactly why the model works, and we focus on the model accuracy.
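The "tweaking of parameters" is usually automated: the model's error is measured and each parameter is nudged in the direction that reduces it. The sketch below shows that idea with gradient descent on a single weight. The data, learning rate, and step count are invented, and a real neural network adjusts many weights at once through backpropagation rather than just one.

```python
def fit_weight(xs, ys, learning_rate=0.05, steps=200):
    """Fit y = w * x by repeatedly nudging w to reduce mean squared error."""
    w = 0.0
    n = len(xs)
    for _ in range(steps):
        # Gradient of the mean squared error with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad
    return w


# Invented data that follows y = 2 * x exactly.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]

learned_w = fit_weight(xs, ys)
print(round(learned_w, 4))
```

With one weight it is easy to see why the answer comes out near 2. With millions of weights the same procedure still works, but explaining any individual weight becomes impractical, which is where the "black box" label comes from.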

People and machines learn differently. We teach machines to identify certain images as a tumor with predefined algorithms using labeled data (e.g., tumor or non-tumor). A child can recognize their mother with minimal training and without lots of pictures of their mother and of people who are not their mother. But a machine needs enough labeled data (e.g., tumor/non-tumor) to build the “skill” to learn whether an image shows a tumor.

A machine learning model, often synonymously but inaccurately described as an algorithm, learns from data. Machine learning algorithms are implemented in code. An algorithm is just the “rules,” like an algebraic expression, whereas a model is a solution: the result of fitting an algorithm to data. Machine learning algorithms are designed mechanisms that take the engineered feature inputs and produce the probability of the targets. Option selection and hyperparameter tuning increase the model’s accuracy and control modeling errors. Data is split into training and test data, using training data to build a model and test data to validate the model. Cross-validation is necessary before the model’s deployment into production, to make sure new data would get a similar outcome. Figure 1-3 illustrates a simple regression model. This figure shows a linear relationship between the target variable Y and the features x1, x2, ... used in the model. Early steps are to define the target to predict, find the data source, perform the feature engineering that identifies the related variables, and test different algorithms to find the best fit for the data and the target. A scoring metric such as the F1 score, which applies to classification models, is used to validate the model performance.

Figure 1-3. ML model
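Two of the validation ideas above, holding data out for testing and scoring with F1, can be sketched without any library. The function names and the example labels below are invented for illustration. The F1 score is the harmonic mean of precision and recall, and the fold helper shows one simple way to rotate which samples are held out, as is done in k-fold cross-validation.

```python
def f1_score(y_true, y_pred, positive=1):
    """Harmonic mean of precision and recall for the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) so each sample is tested once."""
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0)
                  for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = list(range(start, start + size))
        train_idx = [i for i in range(n_samples)
                     if i < start or i >= start + size]
        yield train_idx, test_idx
        start += size


# Invented true labels and model predictions for eight cases.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 1, 0, 0, 0, 1, 1, 0]
score = f1_score(y_true, y_pred)
folds = list(k_fold_indices(5, 2))
print(score, folds)
```

In this example the model has three true positives, one false positive, and one false negative, so precision and recall are both 0.75 and so is the F1 score.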

An easy example to understand is when our email is sorted into categories like “spam” and “inbox.” We could write a computer program with If/Then/Else statements to perform the sorting, but this handcrafted approach would take time; it would also be fragile, because what constitutes spam changes constantly, so we would have to continuously update the computer program with more If/Then/Else computer statements as we learned more about new spam emails. Machine learning algorithms can learn how to classify email messages as spam or inbox by training on large datasets of messages that have been labeled as spam or not spam.

Once we are satisfied that the model works with different data, we incorporate the model or file and plug it into a workflow or product like email or a disease prediction tool. The model can handle new data without any hand coding, and the model gets used by different users using the email product. In this email example, we labeled data, which is supervised learning.
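The email example can be sketched with a small naive Bayes classifier, a technique often used for this kind of text sorting. This is an illustration under assumptions, not a description of how any particular email product works: the four training messages are invented, and a real system would train on a far larger labeled dataset.

```python
import math
from collections import Counter


def train_naive_bayes(messages, labels):
    """Count how often each word appears in each labeled class."""
    word_counts = {label: Counter() for label in set(labels)}
    class_counts = Counter(labels)
    for text, label in zip(messages, labels):
        word_counts[label].update(text.lower().split())
    vocab = set()
    for counts in word_counts.values():
        vocab.update(counts)
    return word_counts, class_counts, vocab


def classify(model, text):
    """Pick the class with the highest log-probability for the message."""
    word_counts, class_counts, vocab = model
    total = sum(class_counts.values())
    scores = {}
    for label in class_counts:
        score = math.log(class_counts[label] / total)
        n_words = sum(word_counts[label].values())
        for word in text.lower().split():
            if word in vocab:  # ignore words never seen in training
                # Add-one smoothing avoids zero probabilities.
                score += math.log(
                    (word_counts[label][word] + 1) / (n_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)


# Invented labeled training data.
messages = [
    "win a free prize now",
    "free money claim now",
    "lab results for your appointment",
    "meeting notes from the clinic",
]
labels = ["spam", "spam", "inbox", "inbox"]

model = train_naive_bayes(messages, labels)
print(classify(model, "claim your free prize"))
print(classify(model, "notes from your appointment"))
```

Nothing in the code mentions which words are suspicious. When spam changes, the fix is to retrain on newer labeled messages rather than to rewrite If/Then/Else rules.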

Computer vision and natural language processing (NLP)

Computer vision (CV), a subfield of AI, seeks to help computers understand the visual world. The visual world in healthcare encompasses many things; a short (and incomplete) list includes:

- Still images, such as X-ray images for detecting pneumonia
- Photographs showing skin lesions
- Sensors that detect movements such as falling or activities within a home
- Faxes of handwritten clinician notes
- Videos showing potential health issues

Computer vision enables machines to see and understand these visual items and then react. Often machine learning facilitates the identification and classification of objects.

Natural language processing, or NLP, provides machines with the ability to read and understand human languages. Understanding and generating language (i.e., reading, writing, and speaking) is essential for human intelligence. NLP allows computers to read clinical notes, analyze keywords and phrases, and extract meaning from them so that people can create and use actionable insights. NLP can be useful in extracting vital elements from patient-physician interactions and automating content population in electronic medical records.

Planning and reasoning

Humans plan as a natural part of their lives. Solving many healthcare problems requires AI to realize a planning component. Planning and machine learning operate complementarily to solve challenging problems. When Google’s DeepMind created the computer program AlphaGo to beat one of the world’s best players in the strategy board game Go, the program used planning and learning. AlphaGo combined a simulation technique, Monte Carlo tree search, with deep learning to predict the probability of specific outcomes. In this example, the computer must act autonomously and flexibly to construct a sequence of actions to reach a goal. It uses machine learning together with search techniques such as Monte Carlo simulation to determine its next move. Planning may take the form of If/Then/Else logic or algorithms, whatever it takes to engineer an intelligent system necessary to solve a challenge.
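The Monte Carlo idea, estimating how good a move is by simulating many random continuations, can be shown on a game far smaller than Go. The sketch below is a deliberately simplified stand-in and not AlphaGo's method: it uses flat random playouts with no tree search and no learning, and the game (players alternately take one to three stones, and whoever takes the last stone wins) is chosen only because it fits in a few lines.

```python
import random


def legal_moves(stones):
    """A player may take 1, 2, or 3 stones, but not more than remain."""
    return [n for n in (1, 2, 3) if n <= stones]


def random_playout(stones, my_turn, rng):
    """Play random moves to the end; True if we take the last stone."""
    while True:
        stones -= rng.choice(legal_moves(stones))
        if stones == 0:
            return my_turn  # whoever just moved took the last stone
        my_turn = not my_turn


def choose_move(stones, rollouts=2000, seed=0):
    """Estimate each move's win rate by simulation and pick the best."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    win_rates = {}
    for move in legal_moves(stones):
        remaining = stones - move
        if remaining == 0:
            win_rates[move] = 1.0  # taking the last stone wins outright
            continue
        wins = sum(random_playout(remaining, False, rng)
                   for _ in range(rollouts))
        win_rates[move] = wins / rollouts
    return max(win_rates, key=win_rates.get), win_rates


best_move, rates = choose_move(5)
print(best_move, rates)
```

With five stones the simulations favor taking one stone, which leaves the opponent with four and no way to win immediately. The program reaches that plan by sampling outcomes rather than by being told the rule.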

Another aspect of planning addresses the black-box challenge of deep learning. The fact that we cannot explain how a model consistently gets to, say, 95% accuracy doesn’t translate to not being able to explain AI outcomes. Model performance may not achieve clinical adoption, but model transparency gets us closer to that end goal, and this is part of AI planning. Like all fields of AI, interpretable AI is an area of research in which start-ups and researchers work to take the guesswork out of AI.

In addition to planning, many AI solutions must have a reasoning element. Machines make inferences using data, which is a form of reasoning. Early researchers in the field of AI developed algorithms and used If/Then/Else rules to emulate the simple step-by-step reasoning people use to solve problems and make logical inferences. The early inference engines and decision support systems in the 1960s used these kinds of techniques.

Machine learning performs many human tasks better than humans, but not reasoning. Figure 1-4 shows an image of two cylinders of different sizes and a box. Any five-year-old could answer the non-relational and relational questions shown in the image. But a computer using deep learning would not be able to understand implicit relationships between different things, something humans do quite well.

Figure 1-4. Image of various objects

In June 2017, DeepMind published a paper showing a new technique, relation networks, that can reason about relations among objects.3 DeepMind reasoning used a variety of AI techniques in combination to get answers to relational questions. Developing reasoning in AI continues to be an evolving field of research. Healthcare solutions that need reasoning will consider both old and new AI techniques as well as emerging research.

Autonomy

There are instances in which we want AI to operate on its own, to make decisions and take action. An obvious example is when AI in an autonomous vehicle can save the life of a pedestrian. In healthcare, people filing claims would like instantaneous affirmative responses. Autonomous AI systems accomplish tasks like approving claims or authorizing readmissions, and AI accomplishes these goals without interacting with humans. Autonomous systems may operate in an augmented fashion, answering clinicians’ questions or guiding a clinician on a task. Levels of autonomy in healthcare will vary, taking cues from levels of autonomy defined for vehicles.4 Autonomy in AI is not an all-or-nothing proposition. AI in healthcare must do no harm and in many cases should be held to a higher standard of care than AI in vehicles. Engineering autonomy in healthcare solutions must be a human-centered process. Engineers should not conflate automation, where AI can consistently repeat a task, with AI having autonomy. Humans must remain in the loop.
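One way to picture autonomy that is not all-or-nothing is a routing rule: the system acts on its own only when it is highly confident, and hands everything else to a person. The sketch below is purely hypothetical. The `ClaimDecision` type, the `approve_probability` field, and the 0.95 threshold are invented for illustration and do not describe any real claims system.

```python
from dataclasses import dataclass


@dataclass
class ClaimDecision:
    claim_id: str
    approve_probability: float  # hypothetical model output between 0 and 1


def route(decision, auto_threshold=0.95):
    """Approve automatically only when confidence is high; otherwise escalate."""
    if decision.approve_probability >= auto_threshold:
        return "auto_approve"
    # Anything uncertain, including every potential denial, goes to a person.
    return "human_review"


print(route(ClaimDecision("claim-001", 0.99)))
print(route(ClaimDecision("claim-002", 0.60)))
```

Raising or lowering the threshold is one simple lever for moving between levels of autonomy while keeping a human in the loop for the cases that matter most.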

Human-AI interactions

Another key tenet of an AI-First approach is to make sure humans are always in the loop. AI cannot operate in a vacuum; people are needed to curate and provide data, check for biases in data, maintain machine learning models, and manage the overall efficacy of AI systems in healthcare. AI won’t always be right—things can even go terribly wrong—and humans’ experience and ability to react will be critical to evolving AI systems and their effectiveness in clinical settings and in healthcare at large.

It’s amazing to think that AI and ML are a natural part of our lexicon and are ubiquitous terms around the world. Clearly there has been a resurgence of AI, and it’s still spreading. The next section explores the diffusion of AI.

AI Transitions

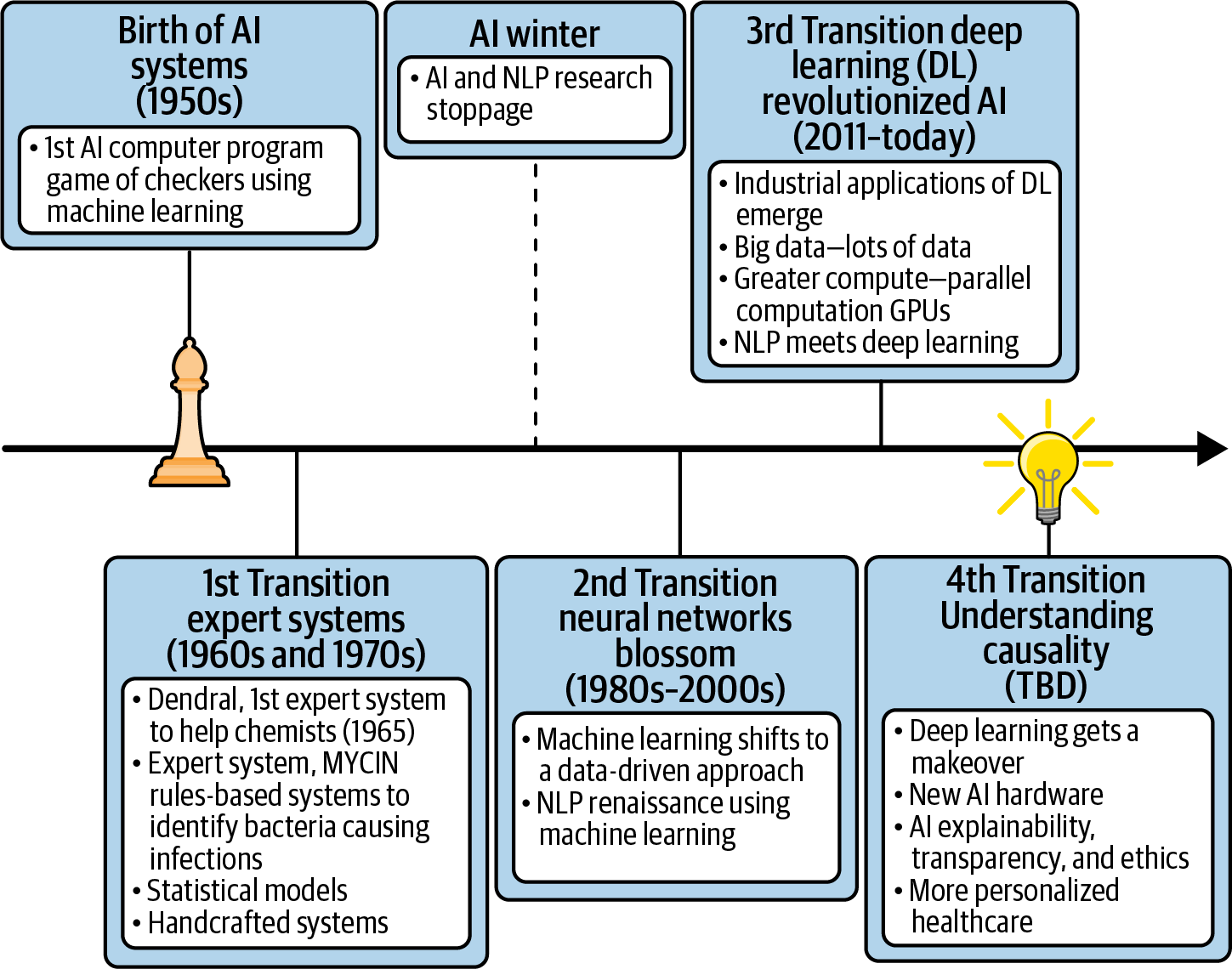

Innovations in AI allow it to make giant leaps forward, toward the goal of mimicking human intelligence. Figure 1-5 highlights key innovations that became transitions for powering new AI applications and solutions in healthcare and other industries.

Figure 1-5. AI transitions

Arthur Samuel, a pioneer in AI, developed a checkers-playing computer program in 1952, and it is widely recognized to be the first computer program to learn on its own—the very first machine learning program. Later years saw the emergence of expert systems and decision-support systems such as MYCIN, CADUCEUS, and INTERNIST-I, which we discussed previously.

Many of the 20th-century activities in AI used procedural programming logic as the primary programming technique and heavy lifting to provide the supporting infrastructure. The iconic display of this wave may have been when IBM’s Deep Blue computer beat one of humanity’s great intellectual champions in chess, Garry Kasparov. At the time, we thought this was a sign of artificial intelligence catching up to human intelligence. IBM’s Deep Blue required a lot of heavy lifting; it was a specialized, purpose-built computer that many described as a brute force in both the hardware and the software. It’s worth mentioning that one of Deep Blue’s programmers, when asked how much effort was devoted to AI, responded “no effort.” He described Deep Blue not as an AI project but as a project that played chess through the brute force of computers to do fast calculations and sift through possibilities.

AI Winter is a metaphorical description of the period of AI research and development that experienced a substantially reduced level of interest and funding. The hype and exaggeration of AI in the 20th century backfired, as the technology at hand failed to meet expectations. Algorithmic AI did not transcend humans. The AI depicted in sci-fi movies was not real.

In 1966, government funding of research on NLP was halted, as machine translations were more expensive than using people. Governments canceled AI research, and AI researchers found it hard to find work. This was a dark period for AI, a winter. The delivery failures, runaway projects, and sunk costs of governments and industry led to the first AI Winter. AI was a dream, and it became an accepted premise that AI doesn’t work. This thinking continued until analytics became the moniker. Machine learning techniques and predictive analytics were born, and the second AI transition was underway.

In 2011, IBM again made an iconic display of AI, when the IBM Watson computer system competed on Jeopardy! against legendary champions Brad Rutter and Ken Jennings and won! This 2011 IBM Watson system took several years to build, and again brute force was used in the hardware implementation, while a lot of math, machine learning, and NLP were used on the engineering side. Neither graphics processing units (GPUs) nor deep learning, both characteristic of 21st-century AI, played a role. To the casual viewer, it may have seemed that IBM Watson on Jeopardy! was doing conversational AI, but that was not the case, as IBM Watson was not listening and processing audio. Rather, ASCII files were transmitted.

In fairness to IBM Watson and its Jeopardy!-winning system, its innovative work on natural language understanding advanced the field.

What we can do with AI today is of course different from what we could do in the 1950s, 1960s, 1970s, 1980s, 1990s, and early 2000s. Although we are using many of the same algorithms and much of the same computer science, a great deal of innovation has occurred in AI. Deep learning, new algorithms, GPUs, and massive amounts of data are key differences. There has been an explosion of new thinking in industry and academia, and there is a renaissance going on today, largely because of deep learning.

The demonstration of neural nets, deep learning algorithms, and the reaching of human perception in sight, sound, and language understanding set in motion a tsunami of AI research and development in academia and technology companies. The internet provided the mother lode of data, and GPUs the compute power for computation, and thus the third AI transition arrived.

In October 2015, the original AlphaGo became the first computer system to beat a human professional Go player without a handicap. In 2017, a successor, AlphaGo Master, beat the world’s top-ranked player in a three-game match. AlphaGo uses deep learning and is another iconic display of AI. In 2017, DeepMind introduced AlphaZero, an AI system that taught itself from scratch how to play and master the games of chess and Go and subsequently beat world-champion programs. What’s remarkable is that the system was built without providing it domain knowledge, only the rules of the game. It’s also fascinating to see a computer play a game with its own unique, dynamic, and creative style.5 AlphaZero shows the power of AI.

It’s quite possible (and even likely) that many companies will see failures with their AI plans and implementations. The limitations of deep learning and AI are well known to researchers, bloggers, and a number of experts.6 For example, deep learning cannot itself explain how it derived its answers. Deep learning does not have causality and, unlike humans, doesn’t enable human reasoning, or what many describe as common sense. Deep learning needs thousands of images to learn and identify specific objects, like determining a type of cat or identifying your mother from a photo. Humans need only a handful of examples and can do it in seconds. Humans know that an image of a face in which the nose is below the mouth is not correct. Anything that approximates human reasoning is currently not viable for AI; that will be the fourth transition.

Amazing progress has been made in AI, but we have a ways to go before it achieves human-level intelligence. Research continues to advance AI. AI is aspirational, endeavoring to move as far to the right on the Artificial Intelligence Continuum as possible, and as quickly as possible, as illustrated in Figure 1-6.

In contrast to movies like Ex Machina or 2001: A Space Odyssey, where AI surpasses human intelligence (reflecting strong AI), we are not yet there; we have weak AI today, or what some describe as narrow AI. It’s narrow because, for example, we train machine learning models to detect pneumonia, but the same model cannot detect cancerous tumors in an X-ray. That is, a system trained to do one thing will quickly break on a related but slightly different task. Today, when we largely target well-defined problems for AI, people are needed to ensure that AI is correct. Humans must train the AI models and manage the life cycle of AI development from cradle to grave.

Figure 1-6. Weak to strong AI

New innovations in AI will move us further to the right along the continuum, where perhaps causality will be within our sight. We will begin to understand, for example, whether an aspirin taken before a long flight actually does operate as a blood thinner, reducing the subject’s risk of blood clots related to immobility during travel. This is contrasted with the current practice of recommending that airline passengers take an aspirin as a precautionary measure.

Deep learning most likely will get a makeover with new research and innovations. Hardware will improve. There will be another AI transition like those depicted in Figure 1-5, and it will accelerate the movement of AI further to the right along the continuum depicted in Figure 1-6. Today AI and humans must work hand in hand to solve problems. AI can go wrong, and we need clinicians with intuition and experience doing healthcare augmented with AI.

AI—A General Purpose Technology

In addition to AI being a field of study in computer science, it is also proving to be a general purpose technology (GPT).7 GPTs are described as engines of growth: they fuel technological progress and economic growth. That is, a handful of technologies have a dramatic effect on industries over extended periods of time. GPTs, such as the steam engine, the electric motor, semiconductors, computers, and now AI, have the following characteristics:

- They are ubiquitous: the technology is pervasive, sometimes invisible, and can be used in a large number of industries and complementary innovations.

- They spawn innovation: as a GPT improves and becomes more ubiquitous, spreading throughout the economy, more productivity gains are realized.

- They are disruptive: they can change how work is done by most if not all industries.

- They have general purposefulness: they perform generic functions, allowing their usage in a variety of products and services.

The difficulty in arriving at a single definition of artificial intelligence lies in the same disagreements we have about what constitutes human intelligence. The development of computer systems able to perform activities that normally require human intelligence is also a good definition of AI, but this is a very low bar, as for centuries machines have been doing tasks associated with human intelligence. Computers today can do tasks that PhDs cannot and at the same time cannot do tasks that one-year-old humans can do.

Contrary to the oft-seen nefarious machines or robots in movies like Blade Runner, The Terminator, and I, Robot, seemingly sentient, highly intelligent computers don’t exist today. That would be strong and general AI. The computers seeking technological singularity (where AI surpasses human intelligence) in dystopian movies like The Matrix and Transcendence do not exist.

World-renowned scientists and “AI experts” often agree in public forums that it is possible for machines to develop superintelligence. However, the question being asked is whether it’s theoretically possible. The trajectory of AI suggests the possibility exists. But this leads us to Moravec’s paradox, which is an observation made by AI researchers that it’s easy to get computers to exhibit advanced intelligence in playing chess or passing intelligence tests, yet it is difficult for machines to show the skills of a small child when it comes to perception and mobility. Intelligence and learning are complicated. Although we like to put things in buckets, such as “stupid” or “intelligent,” AI is moving along a continuum, as illustrated in Figure 1-6. AI today is not “superintelligent,” nor does anyone have a timeline that tells us when the day of superintelligent computers will arrive; it could be in five years or in a hundred years. The enormous amount of computational resources needed to reproduce the low-level skills humans possess reflects the challenges ahead in creating superintelligent machines.

AI is used as shorthand for describing a varied set of concepts, architectures, technologies, and aspirations, but there is no single AI technology. AI is not itself a system or product; rather, it is embedded in or used by systems and products. We use the term AI loosely, as no computer or machine or AI with humanlike goals and intent has been developed. Nor do we know how to build such a computer or machine, as this again would be strong or general AI. When we talk about human intelligence, superintelligence, or sentience in machines, it is a future state being discussed. There is no miraculous AI fix; we cannot wave a magic AI wand that will fix healthcare or make people healthier.

Andrej Karpathy, a computer science researcher and the current director of AI at Tesla, makes a powerful case that AI changes how we build software and how we engineer applications. He argues that the way we build software, by writing instructions that tell the computer what to do, is slow and prone to errors. We spend a lot of time debugging, and worse, the code gets more brittle over time as more people maintain it, often resulting in a big spaghetti of code that’s difficult to change. The application locks the business into a way of doing work.

Healthcare is riddled with legacy software and complex applications that are so big that they themselves become impediments to innovation. Using machine learning, we can program by example; that is, we can collect many examples of what we want the application or software to do or not do, label them, and train a model, effectively learning to automate application or software development itself. Karpathy describes this vision as Software 2.0. Although tools to automate aspects of software engineering are starting to appear, more innovation and more tools are needed, and few if any companies have the talent or tools to implement this vision. But we can still use machine learning rather than handcrafting for many of today’s problems, allowing us to future-proof systems as they learn from new data and to avoid programmers writing more code.

Understanding and exploring AI healthcare and technology myths illuminates our understanding and definition of AI. This will be key to understanding concepts presented in later chapters. Next, we’ll turn our attention to discussing some key myths in AI healthcare.

AI Healthcare Myths

There is so much excitement involving AI in healthcare, but what exactly is AI in healthcare supposed to fix? People look to AI to predict future disease, prevent disease, enhance disease treatment, overcome obstacles to healthcare access, solve the burden of overworked and burnt-out clinicians, and improve the health of people overall while decreasing the cost of healthcare. While some of this is achievable, AI is not a miracle panacea for all health and healthcare-related problems. If AI is not applied judiciously, with human oversight and vision, then mistakes or incorrect recommendations based on faulty or inadequate data inputs and assumptions can destroy the trust that uses of AI in healthcare are designed to build. AI in healthcare must be applied for the right purpose, with review of potential risks in the forefront of developers’ minds.

Roy Amara, a previous head of the Institute for the Future, coined Amara’s Law, which states: “We tend to overestimate the effect of a technology in the short run and underestimate the effect in the long run.” One of the major myths regarding AI is that AI will replace physicians and other healthcare providers. AI relies on the knowledge base provided by trained and experienced clinicians. AI cannot replace the “care” aspect of human interaction and its documented therapeutic effect. AI does not have the capability to determine the best solution when a holistic review of a patient would recommend an approach that relies on human creativity, judgment, and insight. For example, consider an otherwise healthy 90-year-old patient who develops an eminently treatable cancer. Logic and current medicine would support aggressive treatment to destroy the cancer. The human aspect comes into play when this same patient lets their clinician know that they are widowed and alone, and although they are not depressed, they feel they have lived a full life and thus decline treatment. AI and most physicians would argue for treatment. Holistic review of the patient’s wishes and their autonomy in their healthcare decisions take priority here and would have been missed by an autonomous AI agency operating without human oversight. As this patient would be choosing a course of action that involves inaction, and the characteristics of what is included in the patient’s determination of a full life are not brought into existing models, it is unlikely that AI would be able to predict this unusual but valid patient decision.

AI can apply counterintuitive strategies to health management, but the steps from raw data to decision are complex and need human perceptions and insights. The process is a progression, starting with clinical data obtained from innumerable sources that is built and developed to become relevant information, which is then used and applied to populations and/or individuals. The transformation from raw data to insights to intelligence is a process that is guided by clinicians working with data scientists using AI. The clinical interpretation of data depends on humans and their understanding of disease processes and of how those processes affect the timeline of disease progression; that understanding molds this early knowledge. Algorithms for disease management, and for identifying risk factors that predict the probability of developing disease, are based on human understanding and interpretation of the disease process and the human state. The use of AI and clinicians’ activities are intertwined, and together their potential for improving health is remarkable. Because there are so many potential uses for AI in healthcare, we can break them down into some of the numerous gaps identified earlier:

- Inequitable access to healthcare

AI can be used to assess for social determinants of health that can predict which populations are either “at risk” or identified for underutilization of care, and tactical plans can then be developed to best address these gaps in medical usage.

- Insufficient on-demand healthcare services

AI is already addressing some of these needs with applications like Lark Health, which uses smart devices and AI with deep learning to manage well, at-risk, and stable chronic disease patients outside of the healthcare system.

- High costs and lack of price transparency

AI can be used to predict which patients or populations are at risk of becoming “high cost,” and further analysis within these populations can identify factors that can be intervened upon to prevent this outcome.

- Significant waste

AI incorporation into healthcare payer systems is prevalent today. Administrative tasks and unnecessary paperwork are being removed from the patient and the provider, making their user experience that much better.

- Fragmented, siloed payer and provider systems

AI has potential use here, such as in the automatic coding of office visits and the ability to automatically deduct the cost of tests/exams/visits from one’s health savings account.

- High business frictions and poor consumer experiences

AI uses already include the facilitation of claims payments in a timely manner and the communication of health benefits for an individual, with fewer errors and decreased processing time as compared with human-resource-dominant systems.

- Record keeping frozen since the 1960s

Electronic health records and the amount of patient data available to AI applications, which can process this data and draw insights from population analysis, continue to grow and develop constantly.

- Slow adoption of technological advances

AI cannot help us here. Again, the judicious application of AI to healthcare gaps is needed. AI use in healthcare should be to extend provider capabilities, ease patient access to and use of healthcare, and so on. What AI cannot answer is how we humans will accept AI-enabled solutions. AI is used in an inconspicuous manner in healthcare for the most part, due to patient and provider skepticism and resistance to change. As time progresses, and with national and international emergencies such as the COVID-19 pandemic, people are being forced to accept and even embrace technological enhancement of healthcare. To continue this trend, we need to build on success and user satisfaction with the product and process.

- Burnout of healthcare providers, with an inability of clinicians to remain educated on the newest advances in medicine based on the volume of data to be absorbed

AI can process the hundreds of new journal articles on scientific and pharmaceutical advancements that are released daily. It can also compile relevant findings on various subjects as requested by the providers so that patients can receive the most advanced medical treatments and diagnostics relevant to their condition. AI can be used in real time to determine whether a prior authorization for a test/procedure or medication can be given to a patient. All of these advances lead to users’ increased satisfaction with their healthcare experience, decreases in delays in care, the removal of time and resource waste, improvement in patient health outcomes, and increased time for doctors to be with their patients and not on their tablets.

These are just a handful of examples of AI use in healthcare today.

Further capabilities with ongoing development and future use cases, as well as additional applications of AI to help remedy the above healthcare problems from different angles, will be addressed throughout this book.

Now, let’s take a hard example. The healthcare industry seeks to address high-cost healthcare situations that impact patients’ health. An example is maternal and fetal mortality and morbidity and the associated astronomical costs. An initial step is to gather as much data as possible for machine learning to occur. Training data would need to be labeled, models developed, the models tested for accuracy, and data scored where the outcome is unknown to identify which mothers and babies in a population pool would be at risk for development of complications during or after pregnancy and would be at risk for a neonatal intensive care unit (NICU) stay. This involves AI analysis of all the patient variables to isolate the target population. It’s also important to understand whether other conditions are at play, including gestational hypertension (and perhaps its more severe manifestations), which is highly prevalent and associated with NICU infants and complications from pregnancies.
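
The steps just described (label training data, develop a model, test it for accuracy, then score cases where the outcome is unknown) can be sketched in a few lines of Python. This is a minimal illustration under loud assumptions, not a clinical model: the records are synthetic, the two features (`systolic_bp` and `prior_complications`) and every function name are hypothetical, and the nearest-centroid classifier is a stand-in chosen only because it fits in a short example.

```python
import random


def make_synthetic_records(n, seed):
    """Generate made-up labeled records; no real patient data is involved."""
    rng = random.Random(seed)
    records = []
    for _ in range(n):
        at_risk = rng.random() < 0.3  # hypothetical 30% prevalence
        systolic_bp = rng.gauss(150 if at_risk else 115, 7)
        prior_complications = rng.gauss(2.0 if at_risk else 0.5, 0.5)
        records.append(((systolic_bp, prior_complications), at_risk))
    return records


def train_centroids(labeled):
    """'Develop the model': average the feature vectors of each label."""
    sums = {True: [0.0, 0.0], False: [0.0, 0.0]}
    counts = {True: 0, False: 0}
    for features, label in labeled:
        counts[label] += 1
        for i, value in enumerate(features):
            sums[label][i] += value
    return {label: tuple(s / counts[label] for s in sums[label]) for label in sums}


def predict(centroids, features):
    """Assign the label whose centroid is closest (squared Euclidean distance)."""
    def squared_distance(centroid):
        return sum((f - c) ** 2 for f, c in zip(features, centroid))
    return min(centroids, key=lambda label: squared_distance(centroids[label]))


def accuracy(centroids, labeled):
    """'Test for accuracy': fraction of held-out records predicted correctly."""
    correct = sum(predict(centroids, f) == y for f, y in labeled)
    return correct / len(labeled)


# Label, split, train, and test.
records = make_synthetic_records(200, seed=0)
train, test = records[:140], records[140:]
centroids = train_centroids(train)
test_accuracy = accuracy(centroids, test)

# 'Score data where the outcome is unknown': two hypothetical new patients.
unlabeled = [(152.0, 1.8), (112.0, 0.4)]
flags = [predict(centroids, features) for features in unlabeled]

print(f"held-out accuracy: {test_accuracy:.2f}")
print("flagged as at risk:", flags)
```

A real pipeline would use many more variables, scale the features, and validate against clinical outcomes; the point here is only the shape of the workflow, with clinicians supplying the labels and reviewing the flags.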

The routine monitoring of at-risk moms from an early stage allows clinicians to intervene with early diagnosis and management of gestational hypertension and its manifestations to prevent maternal and fetal complications and death, with their associated maternal and NICU costs. AI can be utilized to identify the highest-risk moms with the greatest potential impacts from intervention. AI can assist clinicians with identification of gestational hypertension and can then be used in disease management, with goal therapies identified by human providers and used to educate AI tools and products. We can use AI for the analysis of these moms and infants to determine if there is a better way to identify, diagnose, and manage this specific population. AI has inherent benefits and broad applications, but it is AI’s collaboration with the human interface that allows AI tools to be so impactful. AI will not replace healthcare providers, but it is a powerful tool for augmenting physicians’ work in identifying and managing disease. Let’s explore some of the ways that AI and healthcare providers have been able to work together, while also dispelling the myth that AI can do it all on its own.

Myth: AI Will Cure Disease

AI is not a replacement for a medicinal cure that may one day end diseases (e.g., coronary artery disease and cancer); however, advances in AI, the massive accumulation of data (i.e., big data), and data sharing in healthcare could lead to what does end diseases. Some people believe that if AI can be used to predict who is at risk for a particular disease, then we can intervene and change behaviors or start treatment that would prevent the disease from ever developing. Of course, helping people avoid getting a disease is not the same as curing the disease. Defining what we mean by a cure can be confusing, and this is never more evident than with diseases such as human immunodeficiency virus (HIV). Magic Johnson, the NBA Hall of Famer, proclaimed he was cured of HIV because doctors were unable to detect the virus in his body after ongoing treatment for HIV. Without the antiretroviral medications, the virus would have multiplied and would once again have been found in Johnson’s body. Was he ever truly cured? For certain diseases, a prospective cure is not well defined. However, preventing a disease for an individual is better than trying to cure that disease.

The norm today is that we work to prevent disease, often with the use of machine learning. Routinely, healthcare companies take in data from electronic health records (EHRs), healthcare claims, prescriptions, biometrics, and numerous other data sources to create models for the identification of “at-risk” patients. For prevention to be effective, healthcare constituents need to use artificial intelligence to support decisions and make recommendations based on the assessment and findings of clinicians providing healthcare to all patients.

The healthcare ecosystem comprises consumers in need of healthcare services; clinicians and providers who deliver healthcare services; the government, which regulates healthcare; insurance companies and other payers that pay for services; and the various agencies that administer and coordinate services. In the ideal state, these ecosystem constituents are in sync, optimizing patient care. A simple example is medical coding, where the medical jargon does not always sync with the coding terminology, resulting in gaps in care in identifying the true disease process of an individual patient. The current system is heavily dependent on coding of diagnoses by hospitals, providers, medical coders, and billing agents. This medical coding process has improved as we see greater AI adoption, increasing the opportunity to prevent diseases.

AI can provide clinicians with more and better tools, augmenting a clinician’s diagnostic capabilities by analyzing a holistic picture of the individual patient with broader data streams and technological understanding of the disease process and of who is at risk and will be most impacted. AI has become a more accurate tool for identifying disease in images, and with the rise of intelligent spaces (e.g., hospitals, homes, clinicians’ work spaces), AI triggers a more viable source of diagnostic information. We will examine this in greater detail in Chapter 3, in which the world of intelligent machines and ambient computing and its impact on healthcare is discussed. The volume of data streams makes this unmanageable for a human but highly possible for intelligent machines supported by AI. By identifying and stratifying individuals most at risk, AI can alert physicians and healthcare companies to intervene and address modifiable risk factors to prevent disease.

AI algorithms, personalized medicine, and predictive patient outcomes can be used to study different diseases and identify the best practice treatments and outcomes, leading to an increased likelihood of curing a specific disease. AI can further analyze whether and why a specific population may not respond to certain treatments versus another treatment. In 2014, Hawaii’s attorney general sued the makers of a drug called Plavix8 (used to thin the blood to prevent stroke and heart attack). The suit alleged that Plavix did not work for a large proportion of Pacific Islanders due to a metabolic deficiency in that population and that the makers of the medication were aware of this anomaly. Although the drug maker would not have known of this genetic difference originally, as it did not include Pacific Islanders in its initial clinical trials, it was aware of the anomaly in efficacy later on, during widespread patient use. AI algorithms were not used to identify the increase in poor outcomes related to Plavix’s use as a preventive medication within this population. But AI’s application to such a use case—that is, following a population after a medication’s release to look for aberrancy points in data and then drawing conclusions from these findings—is a perfect use of AI, which would have led to alternative therapy recommendations for blood thinners to prevent cardiovascular complications and recurrence of disease in a much more timely manner. The alternative was a drawn-out process occurring over several years in which a finding was noted by clinicians and then reported, and eventually the finding was made regarding the lack of efficacy of Plavix among Pacific Islanders.

The application of AI to cancer is garnering a huge amount of interest, prompting thinking that we may be able to substantially reduce annual deaths from cancer. A large proportion of our world population develops cancer—in 2018 alone, about 10 million people died of cancer worldwide. The data from these individuals prior to their development of disease, after diagnosis, and during treatment, along with the use of AI, has the potential to improve oncologic care and effect more cures from treatment. Embedding AI technology in cancer treatment accelerates the speed of diagnostics, leading to improved clinical decision making, which in turn improves cancer patient outcomes. AI can also be useful in managing advanced stages of cancer, through the ability to predict end-of-life care needs to facilitate a patient’s quality of life.

One area in which AI has the potential for significant impact is the identification of cancer in radiologic studies. The University of Southern California’s Keck School of Medicine published a study in 2019 showing improved cancer detection using AI.9 Specifically, “a blinded retrospective study was performed with a panel of seven radiologists using a cancer-enriched dataset from 122 patients that included 90 false-negative mammograms.” Findings showed that all radiologists experienced a significant improvement in their cancer detection rate. The mean rate of cancer detection by radiologists increased from 51% to 62% with the use of AI, while false positives (a determination of cancer when cancer is not actually present) remained essentially the same.
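
To make the two quantities in this result concrete, the short Python sketch below computes a detection rate and a false-positive rate from confusion counts. The counts are hypothetical, chosen only so that the detection rates come out to the 51% and 62% reported above; they are not the study’s data, and holding false positives exactly constant is a simplification of “essentially the same.”

```python
def detection_rate(true_positives, false_negatives):
    """Share of actual cancers that were detected (also called sensitivity)."""
    return true_positives / (true_positives + false_negatives)


def false_positive_rate(false_positives, true_negatives):
    """Share of cancer-free cases that were wrongly called cancer."""
    return false_positives / (false_positives + true_negatives)


# Hypothetical counts per 100 cancers and 100 cancer-free cases (not study data).
without_ai = {"tp": 51, "fn": 49, "fp": 10, "tn": 90}
with_ai = {"tp": 62, "fn": 38, "fp": 10, "tn": 90}

rate_before = detection_rate(without_ai["tp"], without_ai["fn"])
rate_after = detection_rate(with_ai["tp"], with_ai["fn"])
absolute_gain = rate_after - rate_before      # gain in percentage points
relative_gain = absolute_gain / rate_before   # gain relative to the baseline

fpr_before = false_positive_rate(without_ai["fp"], without_ai["tn"])
fpr_after = false_positive_rate(with_ai["fp"], with_ai["tn"])

print(f"detection rate: {rate_before:.0%} -> {rate_after:.0%}")
print(f"absolute gain: {absolute_gain:.0%}, relative gain: {relative_gain:.1%}")
print(f"false-positive rate: {fpr_before:.0%} -> {fpr_after:.0%}")
```

Reading the two rates together matters: a higher detection rate is only an improvement if it does not come at the cost of many more false alarms.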

Early detection of disease states has an enormous positive impact on cancer treatment and cure, and AI can support early detection not just for radiologists but also for dermatologists. Computer scientists at Stanford created an AI skin cancer detection algorithm using deep learning for detection of malignant melanoma (a form of skin cancer) and found that AI identified cancers as accurately as dermatologists. China has used AI in the analysis of brain tumors. Previously, neurosurgeons performed tumor segmentation (used in diagnosis of brain cancer) manually. With AI, the results were accurate and reliable and created greater efficiency. Wherever early and accurate detection can lead to a cure, AI has a proven place in the diagnosis of cancers.

AI continues to evolve in its use and role in cancer detection. Currently, genetic mutations are determined through DNA analysis. AI is widely used in companies like Foundation Medicine (Roche) and Tempus to recommend treatments for particular tumor genomes. Also, AI has been used in radiogenomics, where radiographic image analysis is used to predict underlying genetic traits. That is, AI has been used to analyze and interpret magnetic resonance imaging (MRI) to determine whether a mutation representing cancer is present. In one Chinese study, AI analysis of MRIs predicted the presence of low-grade glioma (brain tumor) in patients with 83–95% accuracy. In the past decade, AI has been applied to cancer treatment drug development, with one study showing AI’s ability to predict the likelihood of failure in a clinical trial testing more than 200 sample drugs. Another study applied AI to the prediction of cancer cell response to treatment.

Identifying and diagnosing cancer in its early stages, coupled with proper care treatment, increases the opportunity to cure patients of cancer. AI can significantly contribute to the diagnosis of patients based on signals or symptoms missed by human detection. Gathering data from large population pools allows an understanding and awareness of the most effective plans for treatment. This leads to better treatment for individual patients based on what have been proven to be the most effective treatment plans for other patients who have had similar types of cancers. This is where AI—specifically, deep learning algorithms using large datasets containing the data of millions of patients diagnosed with cancer all over the world—could play a major role. Unfortunately, access to and accumulation of such massive datasets does not yet exist. Nations would have to agree to share data, while within countries patients would have to grant general access to their private data; technological systems to aggregate all this disparate data with different measures and norms would have to be built. All of which leads to worldwide health data aggregates being a thing of the future. The technological ability is there, but the ethicality of such datasets and their use may prohibit their creation. That said, if given large enough data sources for analysis, AI can provide clinicians with evidence-based recommendations for the treatment plans with the greatest opportunity for positive outcomes.

Human error that results in a delay in diagnosis, or a misdiagnosis, can be a matter of life or death for cancer patients; detecting cancer early makes all the difference. AI helps with early detection and diagnosis by augmenting current diagnostic tools for clinicians. An important part of detecting lung cancer is finding if there are small lesions on the lungs through computed tomography (CT) scans. There is some chance for human error, and this is where artificial intelligence plays a role. Clinicians using AI could potentially increase the likelihood of early detection and diagnosis. With the data available in relation to cancer and its treatment, AI has the potential to assist in creating structure out of these databases and pulling relevant information to guide patient decision making and treatment in the near future.

Giving patients tools that help them also helps their doctors; we will discuss this in more detail in Chapter 3. Tools that rapidly analyze large amounts of patient data to provide signals that may otherwise go undetected should increasingly play a role in healthcare, as should machine learning algorithms that can learn about a patient continuously. In Chapter 3 we explore the synergy between increasingly instrumented patients, doctors, and clinical care. The shift from largely using computers to using devices that patients wear for early detection of disease states is well underway. Despite the plethora of seemingly almost daily news stories about new machine learning algorithms or new devices or AI products improving healthcare, AI alone will not fix the healthcare problems confronting societies. There are many challenges that need to be solved to make our healthcare system work better, and AI will help, but let’s dispel the next myth about AI alone fixing the problems facing healthcare.

Myth: AI Will Replace Doctors

AI will not replace doctors, either now or in the near future, despite much discussion suggesting that it will happen. In 2012, entrepreneur and Sun Microsystems co-founder Vinod Khosla wrote an article with the provocative title, “Do We Need Doctors or Algorithms?”, in which he asserted that computers will replace 80% of what doctors do. Khosla saw a healthcare future driven by entrepreneurs, not by medical professionals.

We can look at AI as a doctor replacement or as a doctor enhancement. AI can perform a double check and see patterns among millions of patients that one doctor cannot possibly see. A single doctor would not be able to see a million patients across their lifetime, but AI can. The diagnostic work done by a doctor arguably focuses heavily on pattern recognition. So augmenting diagnoses with AI makes sense.

Key arguments for AI replacing doctors include the following:

- AI can get more accurate every day, every year, every decade, at a rate and scale not possible for human doctors.

- AI will be able to explain possibilities and results with confidence scores.

- AI can improve the knowledge set, raising the insight of a doctor (who is perhaps not trained in a specific specialty).

- AI may be the only way to provide access to best-in-class healthcare to millions of people who don’t have access to healthcare services or can’t afford healthcare services.

Arguments against AI replacing doctors include the following:

- Human doctors are better at making decisions in collaboration with other humans.

- Doctors have empathy, which is critical to clinical care.

- Doctors can make a human connection, which may directly influence how a patient feels and can facilitate a patient adhering to a treatment plan.

- The AMA Journal of Ethics states that “patients’ desire for emotional connection, reassurance, and a healing touch from their caregivers is well documented.”10

- Doctors may observe or detect critical signals because of their human senses.

- AI cannot yet converse with patients in the manner that a human doctor can.

- Subconscious factors that may influence a doctor’s ability to treat, if not explicitly identified for AI, will be missed.

The role of radiologist has come under a lot of scrutiny as one that AI could potentially replace. It’s worth looking at some tasks that a radiologist performs and recognizing that AI won’t be able to take over these tasks, although it might augment the radiologist in performing some of them:

- Supervising residents and medical students

- Participating in research projects

- Performing treatment

- Helping with quality improvements

- Administering substances to render internal structures visible in imaging studies

- Developing or monitoring procedures for quality

- Coordinating radiological services with other medical activities

- Providing counseling to radiologic patients for things such as risky or alternative treatments

- Conversing with referring doctors on examination results or diagnostic information

- Performing interventional procedures

All this said, radiologists are the group most likely to be considered “replaceable” by AI, as they have zero to minimal direct patient contact, and not all of them perform all of the tasks listed here. There are many tasks that AI can do better than a doctor, but rarely if ever will AI replace entire business processes or operations or an entire occupation or profession. The most likely scenario is that doctors in the foreseeable future will transition to wielding AI with an understanding of how to use AI tools to deliver more efficient and better clinical care. AI today provides a lot of point solutions, and its opportunity to improve diagnostics is significant. Treatment pathways and even many diagnostics today require decision making, something AI is not good at.

AI and physicians can work together as a team because the stakes are high. AI can facilitate active learning (as a mentor to the clinician) by paying attention to aspects that are highly uncertain and can potentially contribute to a positive or negative outcome. The AI can receive feedback and insights (as a mentee of the clinician) to improve in particular ways.
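
One common way to realize this kind of mentor and mentee loop is uncertainty sampling, in which the cases the model is least sure about are routed to a clinician first. The Python sketch below is a minimal illustration under stated assumptions: the case identifiers and predicted probabilities are made up, and `uncertainty` and `select_for_review` are hypothetical helper names, not part of any particular product or library.

```python
def uncertainty(probability):
    """Return 0.0 when the model is certain and 1.0 when it is at 50/50."""
    return 1.0 - abs(probability - 0.5) * 2.0


def select_for_review(predictions, k):
    """Pick the k cases whose predicted probability is closest to 0.5."""
    ranked = sorted(
        predictions.items(),
        key=lambda item: uncertainty(item[1]),
        reverse=True,
    )
    return [case_id for case_id, _ in ranked[:k]]


# Hypothetical model outputs: probability that each case is abnormal.
predictions = {
    "case-a": 0.97,
    "case-b": 0.52,
    "case-c": 0.08,
    "case-d": 0.41,
    "case-e": 0.70,
}

to_review = select_for_review(predictions, k=2)
print("send to clinician first:", to_review)

# The clinician's answers become new labeled examples for the next training round.
clinician_labels = {case_id: None for case_id in to_review}  # filled in by a human
```

The design choice is that clinician time goes where it changes the model most: confident predictions are left alone, while borderline ones are reviewed and fed back as labels.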

A practical problem exists in that AI must live in our current brownfield world, where several barriers to AI replacing doctors must be overcome. Today we have a proliferation of systems that do not integrate well with each other. For example, a patient who is cared for at a hospital, an urgent care center, or a provider office may have their data spread across several different systems with varying degrees of integration. A doctor’s ability to navigate the healthcare system and pull together this disparate data is critical to patient care.

The reality is that there is not going to be a computer, a machine, or an AI that solves healthcare, just like there isn’t one solution to all banking, retail, or manufacturing problems. The path to digitization differs based on clinical specialty and most likely will occur one process at a time within each domain or specialty. AI systems are here and on the horizon for assessing mental health, diagnosing disease states, identifying abnormalities, and more.

Myth: AI Will Fix the “Healthcare Problem”

The idea that AI will fix the myriad problems in healthcare is most assuredly a myth. In the United States, the current healthcare system has a large ecosystem of providers, insurer systems, payers, and government agencies that must work well together to increase access to healthcare, reduce costs, reduce waste, and improve care. Generally, when governments discuss the “healthcare problem,” they are referring mainly to fast-growing national expenditures on healthcare. Most nations continue to address an aging population that is consuming more resources on an ever-growing basis. AI’s potential to overcome obstacles to healthcare access, ease the burden on overworked and burned-out clinicians, and improve people’s health while decreasing the cost of healthcare is real but often exaggerated.

The Committee for a Responsible Federal Budget reports that the US spent more than $3 trillion on healthcare in 2017, equating to approximately $9,500 per person. The consulting firm Deloitte states that America spends more per capita on healthcare than any other country in the world, while also holding the unpleasant distinction of ranking near the bottom in objective measures such as access, efficiency, and efficacy. Administrative costs add a further burden to the healthcare system, as does the rise in healthcare costs that comes as medical advances prolong life and the population ages.

Neither technology nor AI can fix all of these problems, so realistically, the question is: how can AI help address some of the healthcare issues? First is the issue of access, which has three parts:

- Gaining entry into the care system (usually through insurers)
- Having access to a location where healthcare is provided (i.e., geographic availability)
- Having access to a healthcare provider (limited number of providers)

The Patient Protection and Affordable Care Act (ACA) of 2010 led to an additional 20 million adults gaining health insurance coverage. However, millions of people in the US remain uninsured. This gap is a major healthcare problem because the evidence shows that people without insurance are sicker and die younger, burdening the healthcare system and contributing to higher government costs. The American Medical Association (AMA) has endorsed the ACA and is committed to expanding healthcare coverage and protecting people from potential insurance industry abuse. Broader coverage increases access to healthcare and thus the likelihood of people living healthier lives, thereby reducing the costs of healthcare.

Although AI alone cannot fix all healthcare problems, it can play an enormous role in helping to fix some issues in healthcare, a major one being access to healthcare services. While governments work on addressing insurance coverage reform, AI is gaining a foothold in increasing healthcare accessibility. Apps such as Lark now use AI and chatbots, along with personal data from patients and smart devices, to provide health recommendations for preventive care, reduction of healthcare risks (such as obesity), and management of stable chronic medical conditions, such as diabetes, without direct clinician interaction. Thus AI is being used to identify at-risk patients, prevent disease, and focus on best practices for current disease conditions while addressing provider access issues. AI has been shown to be more effective than providers in certain areas, such as disease identification (as we saw earlier regarding cancer identification). As AI is applied to more functions associated with nurses, doctors, and hospitals, it will relieve some of the burden on healthcare access, freeing providers to perform more directly patient-related activities.

China provides an illuminating case study of how AI can help reform healthcare access. China faces arguably the largest healthcare challenges in the world. With a population of 1.4 billion, it has enormous difficulty meeting the needs of its people with limited resources. A Chinese doctor will frequently see more than 50 patients daily in an outpatient setting.11 General practitioners are in high demand in China, and qualified ones are in short supply. Poor working conditions, threats of violence, low salaries, and low social status all contribute to the shortage, and they are compounded by patient concerns about the quality of care that general practitioners provide. Patients recognize differences in the academic and professional qualities of specialists versus those of general practitioners.

This is where new technologies like AI can create breakthroughs, by augmenting general practitioners with better diagnostic tools and clinical treatment guidance. China has therefore relied heavily on AI to address its healthcare issues. From medical speech recognition and documentation to diagnostic identification and best practice treatment algorithms, China is using AI to decrease the work burden on its providers. Further integrating these technologies into the healthcare system opens up access to providers in both direct and remote care, offering a potential solution to resource limitations in both quantity and location. AI’s advantages in China’s system compared with that of the US are certainly linked to the fact that China’s system is government-run; in a centralized, controlled healthcare system in which all data is accessible by a single entity, AI can have more impact.

However, even with our disparate systems in the US, direct and remote access to clinicians will be improved as AI is further adopted and integrated into our healthcare system. There are several prime applications for AI, including clinical decision support, patient monitoring and guidance (remote or direct patient management), surgical assistance with automated devices, and healthcare systems management. Each of these and more will be discussed in later chapters.

Myth: AI Will Decrease Healthcare Costs

US health expenditure projections for 2018–2027 from the Centers for Medicare & Medicaid Services show a projected average annual growth rate in healthcare spending of 5.5%, with spending expected to reach $6 trillion by 2027. Looking at these numbers, it’s clear that healthcare spending will outstrip economic growth. All components of healthcare are projected to increase at exceedingly high annual rates over the next decade. For example, inpatient hospital care, which is the largest component of national health expenditures, is expected to grow at an average annual rate of 5.6%, which is above its recent five-year average growth rate of 5%. AI alone won’t fix these problems, but it can help with cost containment and cost reduction.
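To see what a 5.5% growth rate means over a decade, the compounding can be worked through directly. The sketch below assumes the rate applies uniformly for ten years (2018 through 2027), which is a simplification because real projections vary from year to year. The function names `project_spend` and `implied_base` are illustrative only.

```python
def project_spend(base, annual_growth, years):
    """Compound a starting spend forward at a constant annual growth rate."""
    return base * (1 + annual_growth) ** years


def implied_base(target, annual_growth, years):
    """Work backward from a target spend to the starting spend it implies."""
    return target / (1 + annual_growth) ** years


GROWTH = 0.055   # average growth rate quoted in the text
YEARS = 10       # assumption: 2018 through 2027 counted as ten growth years
TARGET = 6.0     # trillions of dollars by 2027, as quoted in the text

growth_factor = project_spend(1.0, GROWTH, YEARS)
starting_spend = implied_base(TARGET, GROWTH, YEARS)

print(f"Spend multiplies by about {growth_factor:.2f}x over {YEARS} years")
print(f"Implied starting spend: about ${starting_spend:.1f} trillion")
```

Under these assumptions, spending grows by roughly 70% over the decade, and a $6 trillion endpoint implies a starting point of about $3.5 trillion, consistent with the “more than $3 trillion” figure cited earlier for 2017.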

The myth regarding AI and healthcare costs is the belief that AI will completely reinvent or overturn the healthcare or medical models in practice today, or that AI alone will be able to contain healthcare costs. AI is not a magic wand, but it can make a big difference by streamlining inefficient processes, finding innovative ways to look at data and member health, and enabling new use cases that improve patient health. Better overall health may well lead to a decline in medical spend (though that will be offset by the cost of the technology and its supporting infrastructure). Evidence abounds that the big technology companies and start-ups will transform how healthcare is done, and AI will be the tool of the trade that makes many of these transformations possible. You might see this as splitting hairs, but the point is that the problems facing healthcare have lain in resistance to change, historical inefficiencies and inertia, lack of cooperation for the greater good by companies designed to compete with each other, and the lack of a game-changing technology. Now we have the game-changing technology: AI.

Given this background, how and where will AI have an impact? One major area of spend is management of chronic disease. When people with a chronic condition do not adhere to treatment plans, complications related to the underlying disease can occur, resulting in expensive hospitalizations and/or the need for high-cost specialty pharmacy therapies. For example, diabetic retinopathy (DR), an eye disease caused by diabetes, is responsible for 24,000 Americans going blind annually, as reported by the Centers for Disease Control and Prevention (CDC). This is a preventable problem; routine exams with early diagnosis and treatment can prevent blindness in up to 95% of diabetics. And yet more than 50% of diabetics don’t get their eyes examined, or they do so too late to receive effective treatment. The cost of treating diabetes-related disease and blindness is estimated to total more than $500 million per year.

AI can reduce spending on chronic disease by making eye exams for diabetics more effective and easier to obtain. Various groups have used machine learning and deep learning to develop automated DR-detection algorithms, some of which are commercially available. Although binocular slit-lamp ophthalmoscopy remains the standard against which other DR-screening approaches are compared, AI applications with fundus photography are more cost effective and do not require an ophthalmologist consultation. Fundus photography involves photographing the rear of the eye (the fundus) using specialized flash cameras that capture microscopic detail. The scarcity of ophthalmologists who can perform this exam necessitates the use of nonphysicians, who can carry out the DR screening using AI algorithms (deep learning) embedded in various tools, such as a mobile device. Such solutions are already used in developing nations with a shortage of ophthalmologists, demonstrating that a highly trained doctor is not required for disease detection when AI can be trained to do the same.

Cost savings from using AI and fundus photography have been reported at 16–17% (due to fewer unnecessary referrals). A cost effectiveness study from China showed that though the screening cost per patient increased by 35%, the cost per quality-adjusted life year was reduced by 45%. Taking this a step further, to help diabetics with existing eye-related disease, AT&T partnered with Aira in a research study combining smart glasses with AI algorithms to improve patients’ quality of life, which resulted in increased medication adherence through medication recognition technology. Technologies for medication adherence vary. Some track when patients open their pill bottle using sensors in the bottle cap, and others use sensors in the bottle that detect declining weight. Mobile apps or smart speakers that alert patients to take their medications can also be armed with machine learning to learn patients’ habits; instead of sending repeated and annoying alerts, they learn the optimal time of day to send a reminder.
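The idea of a reminder app learning the best time of day can be illustrated with a very small example. The sketch below is hypothetical and far simpler than anything a real product would use: it counts how often a reminder sent at a given hour was followed by the patient taking the dose, then picks the hour with the best smoothed response rate. The class name `ReminderTimeLearner` and the sample history are invented for illustration.

```python
from collections import defaultdict


class ReminderTimeLearner:
    """Hypothetical helper that learns which reminder hour works best."""

    def __init__(self, candidate_hours):
        self.candidate_hours = list(candidate_hours)
        self.sent = defaultdict(int)   # reminders sent at each hour
        self.taken = defaultdict(int)  # reminders followed by a dose taken

    def record(self, hour, dose_taken):
        """Log one reminder and whether the patient took the dose afterward."""
        self.sent[hour] += 1
        if dose_taken:
            self.taken[hour] += 1

    def response_rate(self, hour):
        """Smoothed success rate, so untried hours start at a neutral 0.5."""
        return (self.taken[hour] + 1) / (self.sent[hour] + 2)

    def best_hour(self):
        """Hour of day with the highest smoothed response rate so far."""
        return max(self.candidate_hours, key=self.response_rate)


# Made-up history: morning reminders are mostly ignored, evening ones work.
learner = ReminderTimeLearner(candidate_hours=[8, 13, 20])
for taken in (True, False, False, False):
    learner.record(8, taken)
for taken in (True, True, True, False):
    learner.record(20, taken)

print(learner.best_hour())
```

With this made-up history the learner settles on the 8 p.m. slot. A production system would also need to keep occasionally trying other hours, since a patient’s routine can change.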

All of these strategies using AI in chronic condition management lead to a waterfall of positive health effects and cost savings. In this case, the diabetic is screened for diabetic eye-related disease through the ease and efficacy of AI. If they are found to have significant disease, the AT&T/Aira “pill bottle reader project” can facilitate medication adherence. Issues such as falls related to poor vision, fractures or other musculoskeletal trauma, and hospital admissions or infections related to poor blood sugar control can then be avoided, with significant cost savings as well as quality-of-life improvement for patients.

AI can assist in the standardization and identification of best practice management of chronic disease. The medical community has long known that practices vary widely, and economists have pointed out that treatment variability results in wasteful healthcare spending. As an example of how treatment management can impact healthcare spend, let’s examine low back pain. More than 80% of Americans experience low back pain at some point in their lives. Of those people with low back pain, 1.2% account for about 30% of expenditures. When treatment guidelines were adhered to, costs were lower. The pattern of increasing costs to both the overall healthcare system and patients is reflected in Figure 1-7, which illustrates the impact on costs when consumers (that is, patients) fail to adhere to treatment guidelines.12

Figure 1-7. Lack of adherence to treatment recommendations increases costs
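The low back pain figures quoted above (1.2% of patients accounting for about 30% of expenditures) imply a striking concentration of cost. The short calculation below makes that explicit by comparing average spend per person in the high-cost group with average spend per person among everyone else. It uses only the two percentages from the text; the function name `per_person_spend_ratio` is illustrative.

```python
def per_person_spend_ratio(share_of_people, share_of_spend):
    """How many times more the high-cost group spends per person than the rest.

    Both arguments are fractions between 0 and 1.
    """
    high_cost_per_person = share_of_spend / share_of_people
    everyone_else_per_person = (1 - share_of_spend) / (1 - share_of_people)
    return high_cost_per_person / everyone_else_per_person


# Figures from the text: 1.2% of patients, about 30% of expenditures.
ratio = per_person_spend_ratio(share_of_people=0.012, share_of_spend=0.30)
print(f"High-cost patients spend about {ratio:.0f}x as much per person")
```

On these figures, a patient in the high-cost group accounts for roughly 35 times the spending of a patient outside it, which is why even modest improvements in guideline adherence for that small group could matter.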

AI can reduce treatment variability by analyzing the myriad siloed data sources to identify optimal care pathways, leading to updated guidelines and lower expenditures. As a caveat, health savings are associated with the application of evidence-based medicine treatment guidelines; the issue is that they are not strictly adhered to in medical practice. AI would support the application of treatment guidelines by informing doctors’ decisions on the plan of care. (This will be explored further in Chapter 4.) Some have asked: what if AI makes the wrong recommendation? As AI would be augmenting and facilitating the physician’s decision, the ultimate treatment decision and responsibility for verifying AI recommendations would likely lie with the provider.