Back in 1996, the Web was incredibly exciting, but not a whole lot was actually happening on web pages. Programming a web page in 1996 often meant working with a static page, and maybe a bit of scripting helped manage a form on that page. That scripting usually came in the form of a Perl or C Common Gateway Interface (CGI) script, and it handled basic things such as authorization, page counters, search queries, and advertising. The most dynamic features on the pages were the updating of a counter or time of day, or the changing of an advertising banner when a page reloaded. Applets were briefly the rage for supplying a little chrome to your site, or maybe some animated GIF images to break the monotony of text on the page. Thinking back now, the Web at that time was really a boring place to surf.

But look at what we had to use back then. HTML 2.0 was the standard, with HTML 3.2 right around the corner. You pretty much had to develop for Internet Explorer 3.0 or Netscape Navigator 2.1. You were lucky if someone was browsing with a resolution of 800 × 600, as 640 × 480 was still the norm. It was a challenging time to make anything that felt truly cool or creative.

Since then, tools, standards, hardware technology, and browsers have changed so much that it is difficult to draw a comparison between what the Web was then and what it is today. Ajax’s emergence signals the reinvention of the Web, and we should take a look at just how much has changed.

Tip

If you want to jump into implementation, skip ahead to Chapter 4. You can always come back to reflect on how we got here.

When a carpenter goes to work every day, he takes all of his work tools: hammer, saw, screwdrivers, tape measure, and more. Those tools, though, are not what makes a house. What makes a house are the materials that go into it: concrete for a foundation; wood and nails for framing; brick, stone, vinyl, or wood for the exterior—you get the idea. When we talk about web tools, we are interested in the materials that make up the web pages, web sites, and web applications, not necessarily the tools that are used to build them. Those discussions are best left for other books that can focus more tightly on individual tools. Here, we want to take a closer look at these web tools (the components or materials, if you will), and see how these components have changed over the history of the Web—especially since the introduction of Ajax.

The tools of the classic web page are really more like the wood-framed solid or wattle walls of the Neolithic period. They were crude and simple, serving their purpose but leaving much to be desired. They were a renaissance, though. Man no longer lived the lifestyle of a nomad following a herd, and instead built permanent settlements to support hunting and farming. In much the same way, the birth of the Web and these classic web pages was a renaissance, giving people communication tools they never had before.

The tools of the classic Web were few and simple:

HTML

HTTP

Eventually, other things went into the building of a web page, such as CGI scripting and possibly even a database.

Tip

The World Wide Web Consortium (W3C) introduced the Cascading Style Sheets Level 1 (CSS1) Recommendation in December 1996, but it was not widely adopted for some time after. Most of the available web browsers were slow to adopt the technology. It wasn’t until browser makers began to support CSS that it even made sense to start using the technology.

HTML provided everything in a web page in the classic environment. There was no separation of presentation from structure; JavaScript was in its infancy at best, and could not be used to create “dynamic HTML” through Document Object Model (DOM) manipulation, because there was no DOM. If the client and the server were to communicate, they did so using very basic HTTP GET and, sometimes, POST calls.
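To make the classic request style concrete, the sketch below assembles the kind of URL a GET request carried in that environment. The function name `buildGetUrl`, the base URL, and the parameter names (`city`, `zoom`) are hypothetical, chosen only for illustration.

```javascript
// Build the URL for a classic HTTP GET request: all request data
// travels in the query string, and the server answers with a whole page.
// The base URL and parameter names used here are hypothetical.
function buildGetUrl(baseUrl, params) {
  var pairs = [];
  for (var name in params) {
    if (Object.prototype.hasOwnProperty.call(params, name)) {
      // Escape both sides so spaces and punctuation survive the trip.
      pairs.push(encodeURIComponent(name) + "=" + encodeURIComponent(params[name]));
    }
  }
  return pairs.length ? baseUrl + "?" + pairs.join("&") : baseUrl;
}

var url = buildGetUrl("http://www.example.com/map", { city: "St. Louis", zoom: 3 });
// url is "http://www.example.com/map?city=St.%20Louis&zoom=3"
```

Changing even one parameter produces a new URL, and in the classic model requesting that URL meant loading a new page.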

Many more parts go into web sites and web applications today. Ajax is like the materials that go into making a high-rise building. High rises are made of steel instead of wood, and their exteriors are modern and flashy with metals and special glass. The basic structure is still there, though (unless the building was designed by Frank Lloyd Wright); walls run parallel and perpendicular to one another at 90-degree angles, and all of the structure’s basic elements, including plumbing, electricity, and lighting, are the same—they are just enhanced.

In this way, the structure of an Ajax application is built on an underlying structure of XHTML, which was merely an extension of HTML, and so forth. Here are what I consider to be the tools used to build Ajax web applications:

XHTML

The DOM

JavaScript

CSS

XML

Now, obviously, other things can go into building an Ajax application, such as Extensible Stylesheet Language Transformation (XSLT), syndication feeds with RSS and Atom (of course), some sort of server-side scripting (which is often overlooked when discussing Ajax in general), and possibly a database.

XHTML is the structure of any Ajax application, and yes, HTML is too, but we aren’t going to discuss older technology here. XHTML holds everything that is going to be displayed on the client browser, and everything else works off of it. The DOM is used to navigate all of the XHTML on the page. JavaScript has the most important role in an Ajax application. It is used to manipulate the DOM for the page, but more important, JavaScript creates all of the communication between client and server that makes Ajax what it is. CSS is used to affect the look of the page, and is manipulated dynamically through the DOM. Finally, XML is the format that is used to transfer data back and forth between clients and servers.

You may not think that changing and adding tools would have that much of an impact on how a site functions, but it certainly does. For a case study, I want to turn your attention to a site that actually existed in the classic web environment, and exists now as a changed Ajax web application. Then there will be no doubt as to just how far the Web has come.

The following is a closer look at MapQuest, Inc. (http://www.mapquest.com/), how it functioned and existed in 2000, and how it functions today.

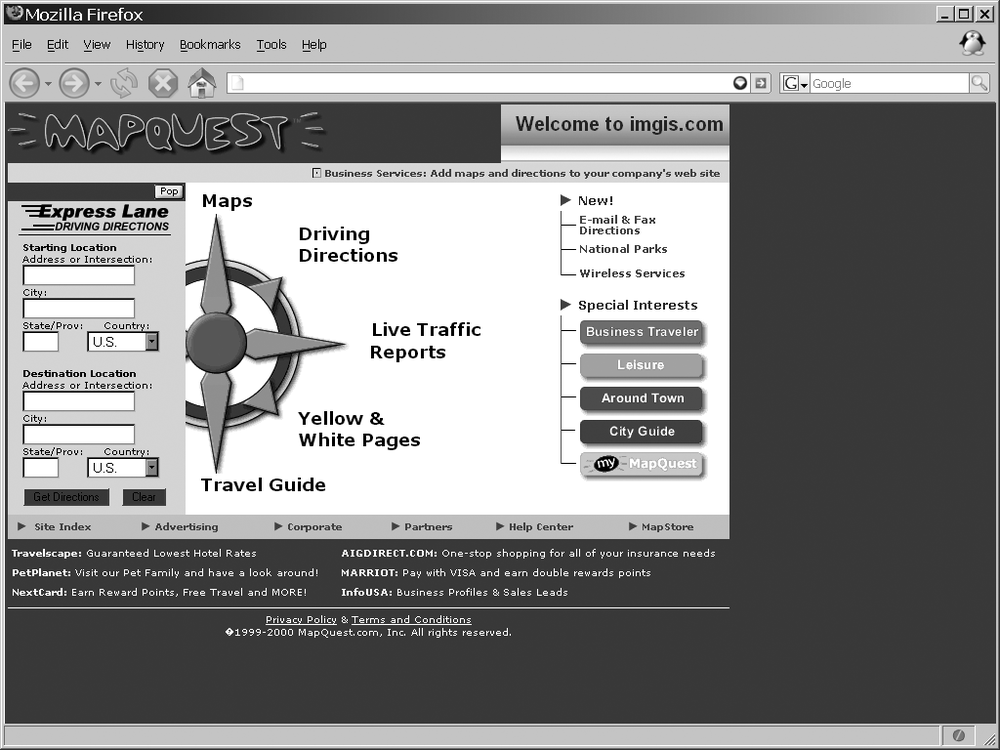

Most people are familiar with MapQuest, seen in Figure 1-1, and how it pretty much single-handedly put Internet mapping on the map (no pun intended). For those who are not familiar with it, I’ll give the briefest of introductions. MapQuest was launched on February 5, 1996, delivering maps and directions based on user-defined search queries. It has been the primary source for directions and maps on the Web for millions of people ever since (well, until Google, at least).

Figure 1-1. MapQuest’s home page in 2000, according to The Wayback Machine (http://www.archive.org/)

As MapQuest evolved, it began to offer more services than just maps and driving directions. By 2000, it offered traffic reports, travel guides, and Yellow and White Pages as well. How did it deliver all of these services? The same way all other Internet sites did at the time: click on a link or search button, and you were taken to a new page that had to be completely redrawn. The same held true for all of the map navigation. A change in the zoom factor or a move in any direction yielded a round trip to the server that, upon return, caused the whole page to refresh. You will learn more about this client/server architecture in the section "Basic Web and Ajax Design Patterns" in Chapter 2.

What you really need to note about MapQuest—and all web sites in general at the time—is that for every user request for data, the client would need to make a round trip to the server to get information. The client would query the server, and when the server returned with an answer, it was in the form of a completely new page that needed to be loaded into the browser. Now, this can be an extremely frustrating process, especially when navigating a map or slightly changing query parameters for a driving directions search. And no knock at MapQuest is intended here. After all, this was how everything was done on the Internet back then; it was the only way to do things.

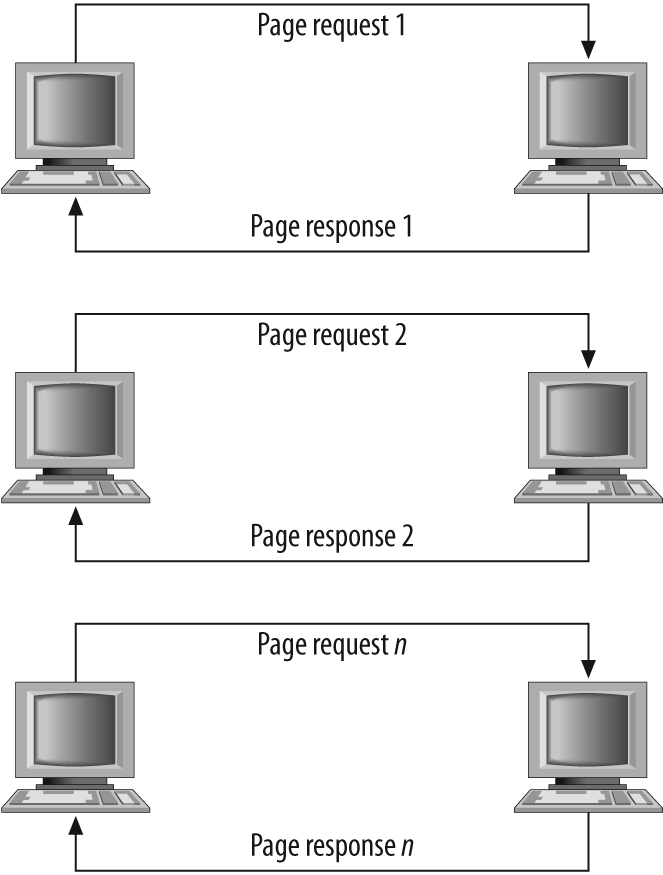

The Web was still in its click-wait-click-wait stage, and nothing about a web page was in any way dynamic. Every user interaction required a complete page reload, accompanied by the momentary “flash” as the page began the reloading process. It could take a long time for these pages to reload in the browser—everything on the page had to be loaded again. This includes all of the background loading of CSS and JavaScript, as well as images and objects. Figure 1-2 illustrates the flow of interaction on the Web as it was in 2000.

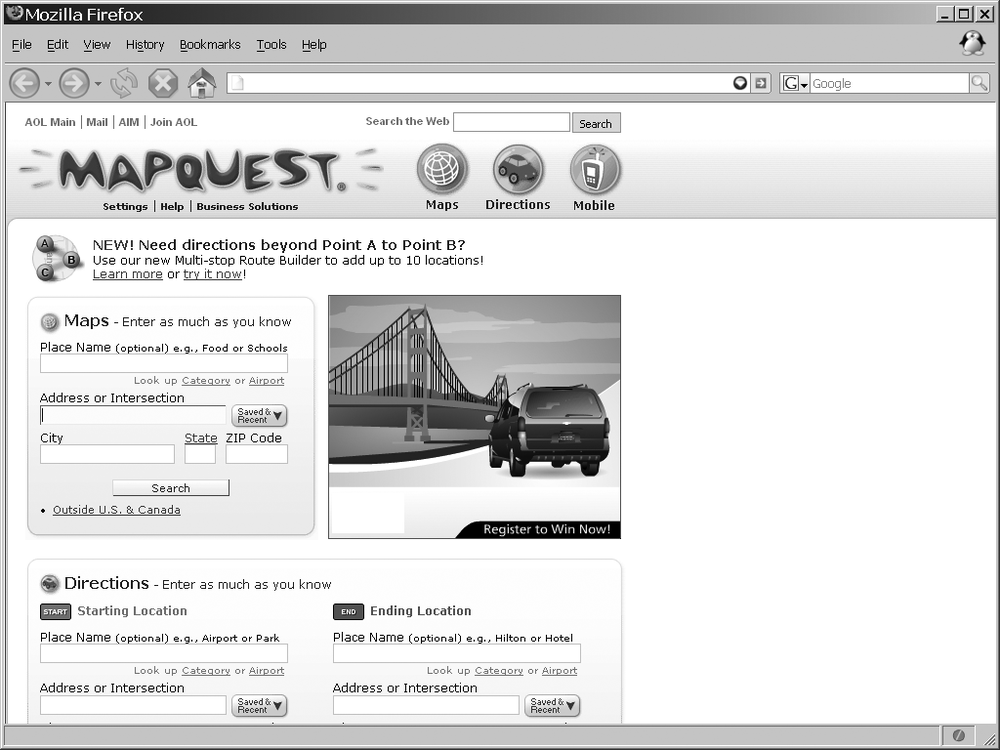

In 2005, when Google announced its version of Internet mapping, Google Maps, everything changed both for the mapping industry and for the web development industry in general. The funny thing was that Google was not using any fancy new technology to create its application. Instead, it was drawing on tools that had been around for some time: (X)HTML, JavaScript, and XML. Soon after, all of the major Internet mapping sites had to upgrade, and had to implement all the cool features that Google Maps had, or they would not be able to compete in the long term. MapQuest, shown in Figure 1-3, did just that.

Tip

Jesse James Garrett coined the term Ajax in February 2005 in his essay, “Ajax: A New Approach to Web Applications” (http://www.adaptivepath.com/publications/essays/archives/000385.php). Although he used Ajax, others began using the acronym AJAX (which stands for Asynchronous JavaScript and XML). I prefer the former simply because the X for XML is not absolutely necessary for Ajax to work; JavaScript Object Notation (JSON) or plain text could be used instead.
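As a rough illustration of that point, the following sketch decodes a response body according to its content type. The function name `decodeResponse` and the sample payloads are hypothetical. `JSON.parse` is part of ECMAScript 5, so a browser limited to JavaScript 1.5 would need a JSON library instead.

```javascript
// Decode a response body as JSON or plain text, based on its content type.
// decodeResponse and the sample payloads below are hypothetical.
function decodeResponse(contentType, body) {
  // Drop parameters such as "; charset=utf-8" before comparing.
  var mediaType = String(contentType).split(";")[0].replace(/^\s+|\s+$/g, "").toLowerCase();
  if (mediaType === "application/json") {
    return JSON.parse(body);
  }
  // Anything else is handed back untouched as plain text in this sketch.
  return body;
}

var stop = decodeResponse("application/json; charset=utf-8", '{"city": "Denver", "zoom": 4}');
// stop.city is "Denver" and stop.zoom is 4
var note = decodeResponse("text/plain", "Route updated");
// note is "Route updated"
```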

Now, when you’re browsing a map, the only thing on the page that refreshes when new data is requested is the map itself. It is dynamic. This is also the case when you get driving directions and wish to add another stop to your route. The whole page does not refresh, only the map does, and the new directions are added to the list. The result is a more interactive user experience.

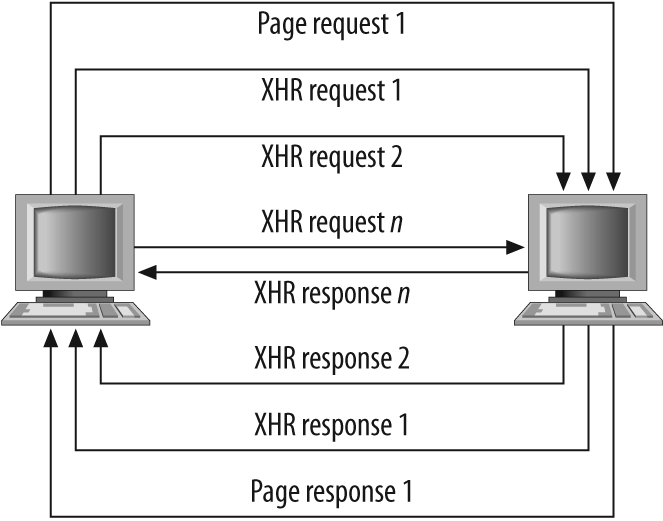

Ajax web applications remove the click-wait-click-wait scenario that has plagued the Web for so long. Now, when you request information, you may still perform other tasks on the page while your request (not the whole page) loads. All of this is done by using the Ajax tools discussed earlier, in the “Ajax” section of this chapter, and the standards that apply to them. After reading the section “Standards Compliance,” later in this chapter, you will have a better idea of why coding to standards is important, and what it means when a site does not validate correctly (MapQuest, incidentally, does not). Figure 1-4 shows how Ajax has changed the flow of interaction on a web page.
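The flow described above can be sketched with the XMLHttpRequest object. This is a minimal sketch, not a description of how MapQuest or Google Maps is implemented: the function name `requestFragment` and the `createXhr` factory parameter are assumptions made for illustration. Passing the factory in lets the same code run against a browser's XMLHttpRequest or a stand-in object.

```javascript
// Ask the server for one piece of data without reloading the page.
// createXhr is a hypothetical factory that returns an XMLHttpRequest-like object.
function requestFragment(createXhr, url, onSuccess, onError) {
  var xhr = createXhr();
  xhr.open("GET", url, true); // third argument true = asynchronous
  xhr.onreadystatechange = function () {
    if (xhr.readyState !== 4) {
      return; // 4 means the response has fully arrived
    }
    if (xhr.status === 200) {
      onSuccess(xhr.responseText);
    } else if (onError) {
      onError(xhr.status);
    }
  };
  xhr.send(null); // returns immediately; the page stays usable meanwhile
}
```

In a browser, a call might look like `requestFragment(function () { return new XMLHttpRequest(); }, "/map/tile?zoom=4", showTile)`, where the URL and the `showTile` callback are hypothetical and the callback would update only the map's element rather than the whole page.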

The addition of Ajax as a tool to use in web applications allows a developer to make user interaction more similar to that of a desktop application. The flicker that accompanies a page reload after each user interaction goes away. The user will perceive everything about the web application as being self-contained. With this technology a savvy developer can make an application function in virtually the same way, whether on the Web or on the desktop.

Web standards: these two words evoke different feelings in different people. Some will scoff and roll their eyes, some will get angry and declare the need for them, and some will get on a soapbox and preach to anyone who will listen. Whatever your view is, it is time to reach a common ground on which everyone can agree. The simple fact is that web standards enable the content of an application to be made available to a much wider range of people and technologies at lower costs and faster development speeds.

Tip

Using the standards that have been published on the Web (and making sure they validate) satisfies the following Web Accessibility Initiative-Web Content Accessibility Guidelines (WAI-WCAG) 1.0 guideline:

Priority 2 checkpoint 3.2: Create documents that validate to published formal grammars.

In the earlier years of the Web, the browser makers were to blame for difficulties in adopting web standards. Anyone who remembers the days of the 4.0 browsers, more commonly referred to as the “Browser Wars,” will attest to the fact that nothing you did in one environment would work the same in another. No one can really blame Netscape and Microsoft for what they did at the time. Competition was stiff, so why would either of them want to agree on common formats for markup, for example?

This is no longer the case. Now developers are to blame for not adopting standards. Some developers are stuck with the mentality of the 1990s, when browser quirks mode, coding hacks, and other tricks were the only things that allowed code to work in all environments. Also at fault is “helpful” What You See Is What You Get (WYSIWYG) software that still generates code geared for 4.0 browsers without any real thought to document structure, web standards, separating structure from presentation, and so forth.

Now several standards bodies provide the formal standards and technical specifications we all love and hold dear to our hearts. For our discussion on standards, we will be concerning ourselves with the W3C (http://www.w3.org/), Ecma International (formerly known as ECMA; http://www.ecma-international.org/), and the Internet Engineering Task Force (IETF; http://www.ietf.org/). These organizations have provided some of the standards we web developers use day in and day out, such as XHTML, CSS, JavaScript, the DOM, XML, XSLT, RSS, and Atom.

Not only does Ajax use each standard, but also these standards are either the fundamental building blocks of Ajax or may be used in exciting ways with Ajax web applications.

On January 26, 2000, the W3C published “XHTML 1.0: The Extensible HyperText MarkUp Language,” a reformulation of HTML 4.01 as XML. Unfortunately, even today XHTML 1.0 is still not incorporated in a vast majority of web sites. It may be that people are taking the “if it ain’t broke, don’t fix it” mentality when it comes to changing their markup from HTML 4.01 to XHTML 1.0, it may be that people just do not see the benefits of XML, or it may be, as is especially true in corporate environments, that there is simply no budget to change sites that already exist and function adequately. Even after a second version of the standard was released on August 1, 2002, incorporating the errata changes made to that point, it still was not widely adopted.

On May 31, 2001, even before the second version of XHTML 1.0 was released, the W3C introduced the “XHTML 1.1—Module-based XHTML Recommendation.” This version of XHTML introduced the idea of a modular design, with the intention that you could add other modules or components to create a new document type without breaking standards compliance (though it would break XHTML compliance); see Example 1-1. All deprecated features of HTML (presentation elements, framesets, etc.) were also completely removed in XHTML 1.1. This, more than anything, slowed the adoption of XHTML 1.1 in the majority of web sites, as few people were willing to make the needed changes—redesigning site layout without frames and adding additional attributes to elements, not to mention removing presentation and placing that into CSS. Contributing to XHTML 1.1’s lack of deployment is the fact that it is not backward-compatible with XHTML 1.0 and HTML.

Example 1-1. The simplest XHTML 1.1 document

```xml
<!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.1//EN"
    "http://www.w3.org/TR/xhtml11/DTD/xhtml11.dtd">
<html xmlns="http://www.w3.org/1999/xhtml" xml:lang="en">
    <head>
        <title>Example 1-1. The simplest XHTML 1.1 document</title>
        <meta http-equiv="content-type" content="text/xml; charset=utf-8" />
    </head>
    <body>
        <div>Hello World!</div>
    </body>
</html>
```

Although the vast majority of web sites out there are not following the XHTML 1.1 Recommendation, it has tremendous potential for certain key areas. The development of new applications on the Web, and the use of those applications on different platforms such as mobile and wireless devices, is leading to a greater rate of adoption than when XHTML 1.1 was first published. For this reason, I believe it is important to recognize the power and potential of XHTML 1.1. Therefore, we will follow this standard in nearly every example in this book (see Chapter 20 and Chapter 21 for different standards usage).

With that said, we must be mindful that the future of web application development is being proposed right now. Already the W3C has a working draft for an XHTML 2.0 specification. In XHTML 2.0, HTML forms are replaced with XForms, HTML frames are replaced with XFrames, and DOM Events are replaced with XML Events. It builds on past recommendations, but when the XHTML 2.0 Recommendation is published, it will define the beginning of a new era in web development. You should note that XHTML 2.0 is not designed to be backward-compatible. Development taking advantage of this recommendation will most likely be geared toward more specialized audiences that have the ability to view such applications, and not the general public. It will be some time before this recommendation gets off the ground, but I felt that it was worth mentioning. You can find more information on the XHTML family of recommendations at http://www.w3.org/MarkUp/.

JavaScript, Netscape Communications Corporation’s implementation of ECMAScript, is now a registered trademark of Sun Microsystems, Inc. It was first introduced in December 1995. In response, Microsoft developed its own version of the ECMA standard, calling it JScript. This confused a lot of developers, and at the time it was thought to contribute to the incompatibilities among web browsers. These incompatibilities, however, are more likely due to differences in DOM implementation rather than JavaScript or its subset, ECMAScript.

Ecma International, formerly the European Computer Manufacturers Association (ECMA), controls the recommendations for ECMAScript. JavaScript 1.5 corresponds to the ECMA-262 Edition 3 standard that you can find at http://www.ecma-international.org/publications/standards/Ecma-262.htm. As of 2009, the latest version of JavaScript is 1.9, which builds upon its predecessors (1.5 through 1.8.1), all of which correspond to ECMA-262 Edition 3. This latest version adds ECMAScript 5 compliance, and is projected to first be seen in Mozilla Firefox 4.

JavaScript technically does not comply with ECMA International standards. Mozilla has JavaScript, Internet Explorer has JScript, and Opera and Safari have other ECMAScript implementations, though it should be noted that Mozilla is closer to standards than Internet Explorer is. Most of these browsers now implement at least JavaScript 1.7; the exceptions are Internet Explorer and, surprisingly, Opera, which still implement only JavaScript 1.5. For this reason, all code examples, unless otherwise noted, are based on JavaScript 1.5.
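Because the engines implement different JavaScript versions, code written to the JavaScript 1.5 baseline typically tests for a newer feature before relying on it. The sketch below does this for `Array.prototype.indexOf`, which arrived in JavaScript 1.6. The helper name `indexOfValue` is hypothetical.

```javascript
// Find a value in an array, using the native method only when it exists.
// indexOfValue is a hypothetical helper name.
function indexOfValue(list, value) {
  // Array.prototype.indexOf is available in JavaScript 1.6 and later.
  if (typeof Array.prototype.indexOf === "function") {
    return Array.prototype.indexOf.call(list, value);
  }
  // Fallback written with JavaScript 1.5 features only.
  for (var i = 0; i < list.length; i++) {
    if (list[i] === value) {
      return i;
    }
  }
  return -1;
}

var engines = ["Gecko", "Trident", "WebKit", "Presto"];
var position = indexOfValue(engines, "WebKit"); // position is 2
```

Testing for the feature itself, rather than for a browser name, keeps the code working when an engine later adds the method.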

The Document Object Model (DOM) Level 2 specification, built onto the existing DOM Level 1 specification, introduced modules to the specification. The Core, View, Events, Style, and Traversal and Range modules were introduced on November 13, 2000. The HTML module was introduced on January 9, 2003.

The DOM Level 3 specification built onto its predecessor as well. The modules changed around somewhat, but what this version added to DOM Level 2 was greater functionality to work with XML. This was an important specification, as it adds to the functionality of Ajax applications as well. The Validation module was published on December 15, 2003. The modules Core and Load and Save were published on April 7, 2004.

Not all of the modules for DOM Level 3 have become recommendations yet, and because of that they bear watching. The Abstract Schemas module has been a note since July 25, 2002; Events has been a working group note since November 7, 2003 (though it was updated April 13, 2006); XPath has been a working group note since February 24, 2004; and Requirements and Views and Formatting have been working group notes since February 26, 2004. These modules will further shape the ways in which developers can interact with the DOM, subsequently shaping how Ajax applications perform as well.

The W3C’s DOM Technical Reports page is located at http://www.w3.org/DOM/DOMTR.

The W3C published the “Cascading Style Sheets Level 2 (CSS2) Recommendation” on May 12, 1998. Most modern browsers support most of the CSS2 specifications, though there are some issues with full browser support, as you will see in the “Browsers” section, later in this chapter. The CSS2 specification was built onto the “Cascading Style Sheets Level 1 (CSS1) Recommendation,” which all modern browsers should fully support.

Because of poor adoption by browsers of the CSS2 Recommendation, the W3C revised CSS2 with CSS2.1 on August 2, 2002. This version was more of a working snapshot of the current CSS support in web browsers than an actual recommendation. CSS2.1 became a Candidate Recommendation on February 24, 2004, but it went back to a Working Draft on June 13, 2005 to fix some bugs and to match the current browser implementations at the time.

Browsers are working toward full implementation of the CSS2.1 standard (some more than others), even though it is still a working draft, mainly so that when the newer Cascading Style Sheets Level 3 (CSS3) finally becomes a recommendation they will not be as far behind the times. CSS3 has been under development since 2000, and is important in that it also has taken into account the idea of modularity with its design. Beyond that, it defines the styles needed for better control of paged media, positioning, and generated content, plus support for Scalable Vector Graphics (SVG) and Ruby. These recommendations will take Ajax web development to a whole new level, but as of this writing CSS3 is very sparsely implemented. So, this book will primarily be using the CSS2.1 Recommendation for all examples, unless otherwise noted.

You can find more information on the W3C’s progress on CSS at http://www.w3.org/Style/CSS/.

XML is the general language for describing different kinds of data, and it is one of the main data transportation agents used on the Web. The W3C’s XML 1.0 Recommendation is now in its fifth edition: the first was published on February 10, 1998 while the latest edition was published on November 26, 2008. At the same time as edition three was being released (February 4, 2004), the W3C also published the XML 1.1 Recommendation, which gave consistency in character representations and relaxed names, allowable characters, and end-of-line representations. The second edition of XML 1.1 was published on September 29, 2006. Though both XML 1.0 and XML 1.1 are considered current versions, this book will not need anything more than XML 1.0.

People like XML for use on the Web for a number of reasons. It is self-documenting, meaning that the structure itself defines and describes the data within it. Because it is plain text, there are no restrictions on its use, an important point for the free and open Web. And both humans and machines can read it without altering the original structure and data. You can find more on XML at http://www.w3.org/XML/.

Even though Ajax is no longer an acronym and the X in AJAX is now just an x, XML is still an important structure to mention when discussing Ajax applications. It may not be the transportation mode of choice for many applications, but it may still be the foundation for the data that is being used in those applications by way of syndication feeds.

The type of syndication that we will discuss here is, of course, that in which sections of a web site are made available for other sites to use, most often using XML as the transport agent. News, weather, and blog web sites have always been the most common sources for syndication, but there is no limitation as to where a feed can come from.

The idea of syndication is not new. It first appeared on the Web around 1995 when R. V. Guha created a system called Meta Content Framework (MCF) while working for Apple. Two years later, Microsoft released its own format, called Channel Definition Format (CDF). It wasn’t until the introduction of the RDF-SPF 0.9 Recommendation in 1999, later renamed to RSS 0.9, that syndication feeds began to take off.

Tip

For much more on syndication and feeds see Developing Feeds with RSS and Atom, by Ben Hammersley (O’Reilly).

RSS is not a single standard, but a family of standards, all using XML for their base structure. Note that I use the term standard loosely here, as RSS is not actually a standard. (RDF, the basis of RSS 1.0, is a W3C standard.) This family of standards for syndication feeds has a sordid history, with the different versions having been created through code forks and disagreements among developers. For the sake of simplicity, the only version of RSS that we will use in this book is RSS 2.0, a simple example of which you can see in Example 1-2.

Example 1-2. A modified RSS 2.0 feed from O’Reilly’s News & Articles Feeds

```xml
<?xml version="1.0"?>
<!-- The dc prefix must be declared before dc:date can be used -->
<rss version="2.0" xmlns:dc="http://purl.org/dc/elements/1.1/">
    <channel>
        <title>O'Reilly News/Articles</title>
        <link>http://www.oreilly.com/</link>
        <description>O'Reilly's News/Articles</description>
        <copyright>Copyright O'Reilly Media, Inc.</copyright>
        <language>en-US</language>
        <docs>http://blogs.law.harvard.edu/tech/rss</docs>
        <item>
            <title>Buy Two Books, Get the Third Free!</title>
            <link>http://www.oreilly.com/store</link>
            <guid>http://www.oreilly.com/store</guid>
            <description><![CDATA[ (description edited for display purposes...)
            ]]></description>
            <author>webmaster@oreillynet.com (O'Reilly Media, Inc.)</author>
            <dc:date></dc:date>
        </item>
        <item>
            <title>New! O'Reilly Photography Learning Center</title>
            <link>http://digitalmedia.oreilly.com/learningcenter/</link>
            <guid>http://digitalmedia.oreilly.com/learningcenter/</guid>
            <description><![CDATA[ (description edited for display purposes...)
            ]]></description>
            <author>webmaster@oreillynet.com (O'Reilly Media, Inc.)</author>
            <dc:date></dc:date>
        </item>
    </channel>
</rss>
```

Warning

Make sure you know which RSS standard you are using:

Each syndication format is different from the next, especially RSS 1.0. (This version is more modular than the others, but also more complex.) Most RSS processors can handle all of them, but mixing pieces from different formats may confuse even the most flexible processors.

Because of all the different versions of RSS and resulting issues and confusion, another group began working on a new syndication specification, called Atom. In July 2005, the IETF accepted Atom 1.0 as a proposed standard. In December of that year, it published the Atom Syndication Format as RFC 4287 (http://tools.ietf.org/html/rfc4287). An example of this format appears in Example 1-3.

There are several major differences between Atom 1.0 and RSS 2.0. Atom 1.0 is within an XML namespace, has a registered MIME type, includes an XML schema, and undergoes a standardization process. By contrast, RSS 2.0 is not within a namespace, is often sent as application/rss+xml but has no registered MIME type, does not have an XML schema, and is not standardized, nor can it be modified, as per its copyright.
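The namespace difference gives code a simple way to tell the formats apart before processing a feed. The sketch below is a heuristic, not a real XML parser: it inspects only the root start tag with regular expressions, and it would be fooled by an attribute value containing a `>` character. The function name `sniffFeedFormat` is hypothetical.

```javascript
// Guess the syndication format of a feed from its root element.
// A heuristic sketch only; sniffFeedFormat is a hypothetical name.
function sniffFeedFormat(xmlText) {
  // Skip the XML declaration, comments, and any DOCTYPE, then capture
  // the root element's name and its attribute text.
  var rootPattern = /^\s*(?:<\?[\s\S]*?\?>\s*|<!--[\s\S]*?-->\s*|<!DOCTYPE[^>]*>\s*)*<([A-Za-z_][\w.:-]*)([^>]*)>/;
  var match = rootPattern.exec(xmlText);
  if (!match) {
    return "unknown";
  }
  var rootName = match[1];
  var attributes = match[2];
  if (rootName === "rss") {
    // RSS 0.9x and 2.0 state their version in an attribute, not a namespace.
    var version = /\bversion\s*=\s*["']([^"']+)["']/.exec(attributes);
    return version ? "RSS " + version[1] : "RSS (unspecified version)";
  }
  if (/(^|:)RDF$/.test(rootName) && attributes.indexOf("http://purl.org/rss/1.0/") !== -1) {
    return "RSS 1.0"; // RDF-based, identified by the RSS 1.0 namespace
  }
  if (/(^|:)feed$/.test(rootName) && attributes.indexOf("http://www.w3.org/2005/Atom") !== -1) {
    return "Atom 1.0"; // identified by the Atom namespace
  }
  return "unknown";
}

var kind = sniffFeedFormat('<?xml version="1.0"?>\n<rss version="2.0"><channel></channel></rss>');
// kind is "RSS 2.0"
```

A production feed reader would hand the text to an XML parser and check the root element's namespace there; the regular expressions only keep this sketch free of browser-specific APIs.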

Example 1-3. A modified Atom feed from O’Reilly’s News & Articles Feeds

```xml
<?xml version="1.0" encoding="utf-8"?>
<feed xmlns="http://www.w3.org/2005/Atom" xml:lang="en-US">
    <title>O'Reilly News/Articles</title>
    <link rel="alternate" type="text/html" href="http://www.oreilly.com/" />
    <subtitle type="text">O'Reilly's News/Articles</subtitle>
    <rights>Copyright O'Reilly Media, Inc.</rights>
    <id>http://www.oreilly.com/</id>
    <updated></updated>
    <entry>
        <title>Buy Two Books, Get the Third Free!</title>
        <id>http://www.oreilly.com/store</id>
        <link rel="alternate" href="http://www.oreilly.com/store"/>
        <summary type="html"> </summary>
        <author>
            <name>O'Reilly Media, Inc.</name>
        </author>
        <updated></updated>
    </entry>
    <entry>
        <title>New! O'Reilly Photography Learning Center</title>
        <id>http://digitalmedia.oreilly.com/learningcenter/</id>
        <link rel="alternate"
            href="http://digitalmedia.oreilly.com/learningcenter/"/>
        <summary type="html"> </summary>
        <author>
            <name>O'Reilly Media, Inc.</name>
        </author>
        <updated></updated>
    </entry>
</feed>
```

XSLT is an XML-based language used to transform, or format, XML documents. On November 16, 1999, XSLT version 1.0 became a W3C Recommendation. As of January 23, 2007, XSLT version 2.0 is a Recommendation that works in conjunction with XPath 2.0. (Most browsers currently support only XSLT 1.0 and XPath 1.0.) XSLT uses XPath to identify subsets of the XML document tree and to perform calculations on queries. We will discuss XPath and XSLT in more detail in Chapter 5. For more information on the XSL family of W3C Recommendations, visit http://www.w3.org/Style/XSL/.

XSLT takes an XML document and creates a new document with all of the transformations, leaving the original XML document intact. In Ajax contexts, the transformation usually produces XHTML with CSS linked to it so that the user can view the data in his browser.

Like standards, browsers can be a touchy subject for some people. Everyone has a particular browser that she is comfortable with, whether because of features, simplicity of use, or familiarity. Developers need to know, however, the differences among the browsers—for example, what standards they support. Also, it should be noted that it’s not the browser, but rather the engine driving it that really matters. To generalize our discussion of browsers, therefore, it’s easiest to focus on the following engines:

Gecko

Trident

KHTML/WebKit

Presto

Table 1-1 shows just how well each major browser layout engine supports the standards we have discussed in this chapter, as well as some that we will cover later in the book.

Table 1-1. Standards supported by browser engines

| | Gecko | Trident | KHTML/WebKit | Presto |
|---|---|---|---|---|
| HTML | Yes | Yes | Yes | Yes |
| XHTML/XML | Yes | Partial | Yes | Yes |
| CSS1 | Yes | Yes | Yes | Yes |
| CSS2 (CSS2.1) | Yes | Partial | Yes | Yes |
| CSS3 | Partial | Partial | Partial | Partial |
| DOM Level 1 | Yes | Partial | Yes | Yes |
| DOM Level 2 | Yes | No | Yes | Yes |
| DOM Level 3 | Partial | No | Partial | Partial |
| RSS | Yes | Yes | Yes | Yes |
| Atom | Yes | Yes | Yes | Yes |
| JavaScript | 1.8.1 | 1.5 | 1.7 | 1.5 |
| PNG alpha-transparency | Yes | Yes | Yes | Yes |
| XSLT | Yes | Yes | Yes | Yes |
| SVG | Partial | No | Partial | Partial |
| XPath | Yes | Yes | Yes | Yes |
| Ajax | Yes | Yes | Yes | Yes |
| Progressive JPEG | Yes | No | Yes | Yes |

Gecko is the layout engine built by the Mozilla project and used in all Mozilla-branded browsers and software. Some of these products are Mozilla Firefox, Netscape, and K-Meleon. One of the nice features of Gecko is that it is cross-platform by design, so it runs on several different operating systems, including Windows, Linux, and Mac OS X.

Trident is the layout engine that Internet Explorer (Windows versions only) has used since version 4.0, and it is sometimes referred to as MSHTML. AOL Explorer and Netscape use it as well (Netscape can use either Gecko or Trident).

KHTML is the layout engine developed by the KDE project. The most notable browsers that use KHTML are KDE Konqueror and Apple’s Safari, though Safari uses a variant called WebKit, which Google’s Chrome and OmniWeb also use. A version of KHTML is now also being used on Nokia series 60 mobile phones.

Presto is the layout engine developed by Opera Software for the Opera web browser. The engine is also used in the Mac OS X versions of Macromedia Dreamweaver MX and later. Presto is probably the most standards-compliant layout engine out there today.

Other layout engines exist, but the browsers built on them account for less than two percent of all browsers in use today. These engines support a wide range of standards, but none of them implements a standard that at least one of the four engines described above does not already implement.

So far, I have pointed out the current standards and when they were introduced, as well as which browsers support them, but I still need to answer a burning question: “Why program to standards, anyway?” Let’s discuss that now.

What is one of the worst things developers have to account for when programming a site for the Internet? That answer is easy, right? Backward compatibility. Developers are always struggling to make their sites work with all browsers that could potentially view their work. But why bend over backward for the 0.01 percent of people clinging to their beloved 4.0 browsers? Is it really that important to make sure that 100 percent of the people can view your site? Some purists will probably answer “yes,” but in this new age of technology, developers should be concerned with a more important objective: forward compatibility.

Forward compatibility is, in all actuality, harder to achieve than backward compatibility. Why? Just think about it for a minute. With backward compatibility, you as a developer already know what format all your data needs to be in to work with older browsers. This is not the case with forward compatibility, because you are being asked to program to an unknown. Here is where standards compliance really comes into play. By adhering to the standards that have been put forth and by keeping faith that the standards bodies will keep backward compatibility in mind when producing newer recommendations, the unknown of forward compatibility is not so unknown. Even if future recommendations do not have built-in backward compatibility, by following the latest standards that have been put forth, you will still, in all likelihood, be set up to make a smoother transition if need be. After all, instead of worrying whether my site works for a browser that is nine years old and obsolete, I would rather worry that my site will work, with only very minor changes, nine years from now. Wouldn’t you?
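
In code, programming to the standard first often takes the form of checking for the standard interface before falling back to an engine-specific one. The sketch below illustrates this for creating an Ajax request object. It is one possible shape for such a check, not a prescribed implementation. `XMLHttpRequest` and `ActiveXObject("Microsoft.XMLHTTP")` are real browser interfaces, while the function name `createRequest` and its `env` parameter (a stand-in for the browser's global object, so the function can also be exercised outside a browser) are hypothetical.

```javascript
// Hypothetical helper: returns a request object, preferring the standard API.
// `env` is assumed to be the browser's global object (for example, window).
function createRequest(env) {
  // Prefer the standards-based constructor whenever the environment offers it.
  if (env.XMLHttpRequest) {
    return new env.XMLHttpRequest();
  }
  // Otherwise fall back to the ActiveX control that older versions of
  // Internet Explorer provide.
  if (env.ActiveXObject) {
    return new env.ActiveXObject("Microsoft.XMLHTTP");
  }
  // Neither interface exists, so the caller must cope without Ajax.
  return null;
}
```

In a page, this would be called as `createRequest(window)`. Because the standard branch comes first, a browser that gains standard support later takes that path with no change to the code, and the fallback branch can be deleted once it is no longer needed.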

Keep in mind, too, that by complying with the latest standards, you are ensuring that site accessibility can still be achieved. For examples of maintaining accessibility, see “Accessibility” in Chapter 6. After all, shouldn’t we be more concerned with making our sites accessible to users with disabilities than to users whose only limitation is that they have not upgraded their browsers?

And why not have standards-compliant sites now? I mean, come on. Most of the recommendations that I laid out earlier are not exactly new. XHTML 1.1 is from 2001. DOM Level 3 is from 2003 and 2004. The recommendations for CSS2 started in 1998. The latest XML is from 2004, and XSLT has not had a new recommendation since 1999.

It is time to give the users of the older browsers reasons to upgrade to something new, because let’s face it, if they haven’t upgraded by now (we are talking about almost a decade here!), they are never going to unless they are pushed to do so. It is time to give old browser users that push, and to give users of the current browsers the sites they deserve to have.

So, what exactly do users deserve? They deserve interaction, accessibility, and functionality; but most of all, they deserve for the Web to be a platform, and Ajax is the means to that end. With Ajax, you can make the interface in the browser behave much like a desktop application: it can react faster and offer functionality that web users have not traditionally had (such as inline editing and hints as you type). Sites can be built that allow unprecedented levels of collaboration. But what, you may ask, is in it for the developers and the clients paying for this platform? The answer: lower costs, better accessibility, more visibility, and better perception.

A great plus to building a standards-based Ajax web application is that it is much easier to maintain. By separating presentation from content, you make your application easier to modify and update. The separation also reduces file sizes, so the application consumes less bandwidth. Together, these mean less money spent on making changes and lower hosting costs for your application. Another plus is that your web application becomes more accessible to your viewers. A well-built web application behaves the way users have come to expect desktop applications to behave, so they can adapt to your site more easily. Accessibility for users with disabilities is also easier to provide (we will discuss the coding for such sites in later chapters). Search engines can more easily interpret the relevance of text on your site when the application is coded correctly. This leads to better visibility on these search engines and more viewing of your application, as you are better represented in the results for user queries. Finally, users will have a better perception of your application when it provides easy-to-use navigation, reacts quickly, and functions correctly in their browsers. After all, who can think badly of a site that loads quickly and delivers a better user experience?

Ajax web development gives you everything you need. And what makes Ajax special is that it is not a new technology—it is the combination of many technologies that have been around for a while and that are production-tested. User interaction, fast response time, desktop-like features: web applications are no longer something that you can only dream of for the future. Web applications are in the here and now. Welcome to Web 2.0 with Ajax.
