Chapter 4. Network Operating System Choices

All models are wrong, but some are useful.

George E.P. Box

All operating systems suck, but Linux just sucks less.

Linus Torvalds

The breakup at the heart of cloud native data center networking was engineered by the network operators dissatisfied with the degree of control the vendors were willing to cede. Whenever a breakup occurs, the possibilities seem limitless, and there’s a natural tendency to explore new ideas and ways of being that didn’t seem possible before. But after a while, we realize that some of the old ways of being were actually fine. Just because we did not like watching yet another episode of The X Files with pizza every Friday evening did not mean that pizza was the problem. We don’t need to throw out the things that work with the things that do not.

Thus it was that the evolution of modern big data and cloud native applications gave rise to a whole new set of ideas about how to do networking. Some were born out of the necessity of the time, some were truly fundamental requirements, and some are still testing their mettle in the new world. In this chapter, we follow the possibilities and models explored by the software half (the better half?) of network disaggregation.

The chapter aims to help you answer questions such as the following:

- What are the primary requirements of a cloud native NOS?

- What are OpenFlow and software-defined networking? Where do they make sense and where do they not?

- What are the possible choices for a NOS in a disaggregated box?

- How do the models compare with the requirements of cloud native NOS?

Requirements of a Network Device

In the spirit of Hobbes, the state of NOS around 2010 could be summarized as: proprietary, manual, and embedded. The NOS was designed around the idea that network devices were appliances and so the NOS had to function much like an embedded OS, including manual operation. What this meant was that the NOS stood in the way of everything that the cloud native data center operators wanted. James Hamilton, a key architect of Amazon Web Services (AWS), wrote a well-read blog post titled “Datacenter Networks Are in my Way” discussing these issues.

Operators buying equipment on a vast scale were extremely conscious of costs: both the cost of the equipment and the cost of operating it at scale. To control the cost of running the network, the operators wanted to change the operations model. Thus, a primary factor distinguishing a cloud native networking infrastructure from earlier models is the focus on efficient operations. In other words, the goal is to reduce the burden of managing network devices. The other distinguishing characteristic is the focus on agility: the speed of innovation, the speed of maintenance and upgrades, and the speed with which new services and devices can be rolled out. From these two needs arose the following requirements of any network device in the cloud native era:

- Programmability of the device

  For efficient operations that can scale, automation is a key requirement. Automation is accomplished by making both device configuration and device monitoring programmable.

- Ability to run third-party applications

  This includes running configuration and monitoring agents that don’t rely on antiquated models provided by switch vendors. It also includes writing and running operator-defined scripts in modern programming languages such as Python. Some prefer to run these third-party apps as containerized apps, primarily to isolate and contain a badly behaving third-party app.

- Ability to replace vendor-supplied components with their equivalents

  An example of this requirement is to replace the vendor-supplied routing suite with an open source version. This is a less common requirement for most network operators, but it is essential for many larger-scale operators to try out new ideas that might solve problems specific to their networks. This is also something that researchers in academia would like. For a very long time, academia has had very little to contribute to the three lowest layers of the networking stack, including routing protocol innovations. The network was something you innovated around rather than with. The goal of this requirement was to crack open the lower layers to let parties other than the vendors make relevant contributions in networking. As an example of the innovation that can result, imagine the design, testing, and deployment of new path optimization algorithms or routing protocols.

- Ability for the operator to fix bugs in the code

  This allows the operators to adapt to their environment and needs as quickly as they want, not just as quickly as the vendor can.

In other words, the network device must behave more like a server platform than the embedded box or specialized appliance that it had been for a long time.

The Rise of Software-Defined Networking and OpenFlow

The first salvo in the search for a better operating model came from academia, which was battling a different problem. The networking research community faced a seemingly insurmountable problem: how to perform useful research with a vertically integrated switch made up of proprietary switching silicon and a NOS that was not designed to be a platform? Neither the network vendors nor the network operators would allow any arbitrary code to be run on the switch. So the academics came up with an answer that they hoped would overcome these problems. Their answer, expressed in the influential OpenFlow paper, was based on the following ideas:

- Use the flow tables available on most packet-switching silicon to determine packet processing. This would allow researchers to dictate novel packet-forwarding behavior.

- Allow the slicing of the flow table to allow operators to run production and research data on the same box. This would allow researchers to test out novel ideas on real networks without messing up the production traffic.

- Define a new protocol to allow software running remotely to program these flow tables and exchange other pieces of information. This would allow researchers to remotely program the flow tables, eliminating the need to run software on the switch itself, thereby sidestepping the nonplatform model of the then-current switch network operating systems.

Flow tables (usually called Access Control List tables in most switch literature) are lookup tables that use at least the following fields in their lookup key: source and destination IP addresses, the Layer 4 (L4) protocol type (Transmission Control Protocol [TCP], User Datagram Protocol [UDP], etc.), and the L4 source and destination ports (TCP/UDP ports). The result of the lookup could be one of the following actions:

- Forward the packet to a different destination than provided by IP routing or bridging

- Drop the packet

- Perform additional actions such as counting, Network Address Translation (NAT), and so on
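To make the lookup concrete, here is a minimal sketch of a flow table keyed on the five fields just listed. The names `FlowKey`, `FlowEntry`, and `FlowTable` are invented for illustration, and a Python dictionary doing exact matches stands in for hardware that typically also supports wildcard matches.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class FlowKey:
    """Lookup key: the five fields named in the text (hypothetical layout)."""
    src_ip: str
    dst_ip: str
    proto: str          # "tcp", "udp", ...
    src_port: int
    dst_port: int


@dataclass
class FlowEntry:
    """Lookup result: one action plus a packet counter."""
    action: str                     # "forward" or "drop"
    out_port: Optional[int] = None  # only meaningful for "forward"
    packets: int = 0


class FlowTable:
    def __init__(self):
        self._entries = {}

    def add(self, key, entry):
        self._entries[key] = entry

    def lookup(self, key):
        """Return the matching entry (counting the hit) or None on a miss."""
        entry = self._entries.get(key)
        if entry is not None:
            entry.packets += 1
        return entry


table = FlowTable()
web = FlowKey("10.0.0.1", "10.0.0.2", "tcp", 40000, 80)
table.add(web, FlowEntry(action="forward", out_port=7))

hit = table.lookup(web)
miss = table.lookup(FlowKey("10.0.0.9", "10.0.0.2", "udp", 5000, 53))
```

Here `hit` carries the forward action with its counter incremented, and `miss` is `None`. What a switch does on a miss is a policy decision in its own right.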

The node remotely programming the flow tables is called the controller. The controller runs software to determine how to program the flow tables, and it programs OpenFlow nodes. For example, the controller could run a traditional routing protocol and set up the flow tables to use only the destination IP address from the packet, causing the individual OpenFlow node to function like a traditional router. But because the routing protocol is not a distributed application—an application running on multiple nodes with each node independently deciding the contents of the local routing table—the researchers could create new path forwarding algorithms without any support from the switch vendor. Moreover, the researchers could also try out completely new packet-forwarding behavior very different from traditional IP routing.

This separation of data plane (the packet-forwarding behavior) and control plane (the software determining the population of the tables governing packet forwarding) would allow researchers to make changes to the control plane from the comfort of a server OS. The NOS would merely manage resources local to the device. Based on the protocol commands sent by the control-plane program, the NOS on the local device would update the flow tables.

This network model, having a central control plane and a distributed data plane, became known as software-defined networking (SDN). The idea was to make the flow tables into a universal, software abstraction of the forwarding behavior built into switching silicon hardware. This was a new approach to breaking open the vendor-specific world of networking.

More Details About SDN and OpenFlow

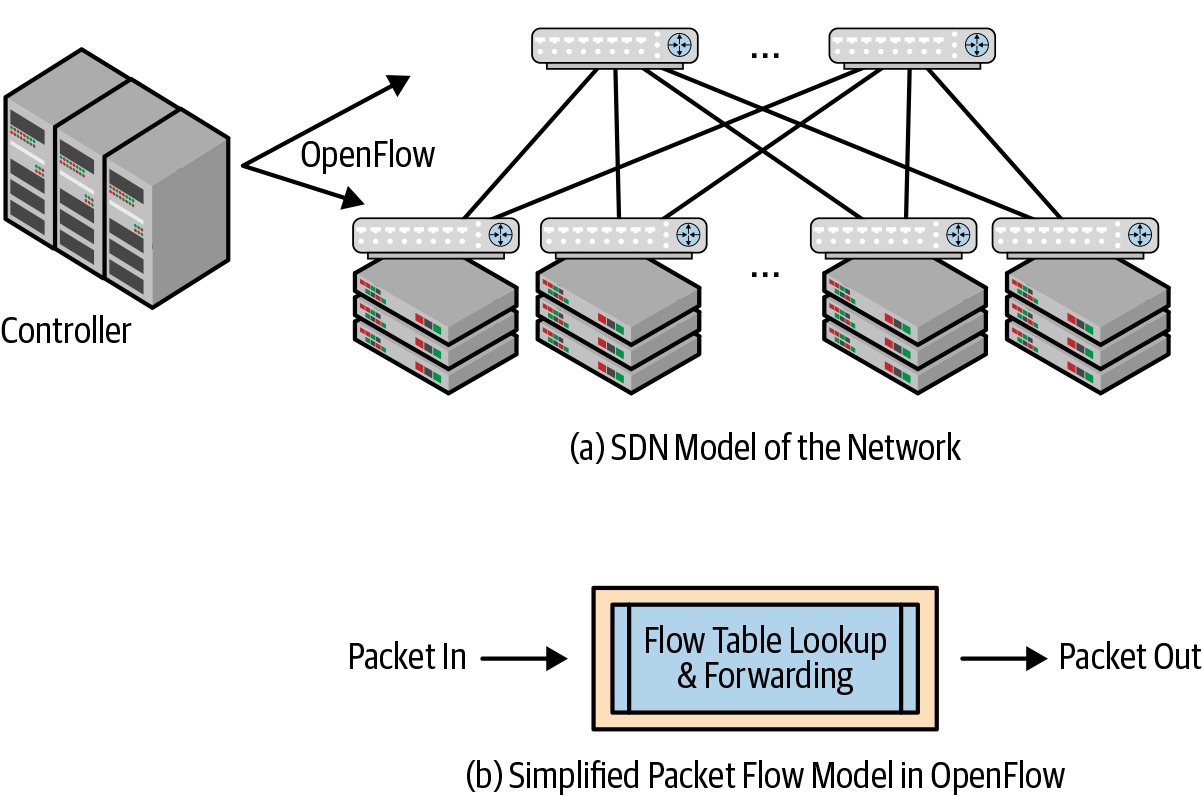

Figure 4-1 shows the two central components of a switch with SDN. Figure 4-1(a) shows how OpenFlow manages a network run by devices with flow tables governing packet forwarding, as shown in Figure 4-1(b).

Figure 4-1. OpenFlow and SDN

Figure 4-1(a) shows that configuration and monitoring can be done at a central place. The network operator has a god’s view of the entire network. The hope was that this model would also relieve network operators of trying to translate their network-wide desired behavior into individual box-specific configuration.

Figure 4-1(b) shows that the switching silicon can be quite simple with OpenFlow. The original flow tables consisted of only a handful of fields. To allow for interesting ideas, OpenFlow advocates wanted to make the flow table lookup key very generic: just include all of the fields of the packet header up to a specific size—say the first 40 or 80 bytes. An example of this approach that was often cited was the automatic support for new packet headers, like new tunnel headers. We discuss tunnels in more detail in Chapter 6, but for the purpose of this discussion, assume that the tunnel header is a new packet header inserted before an existing packet header such as the IP header. Because the lookup involved all of the bytes in the packet up to a certain length, the table lookup could obviously handle the presence of these tunnel headers, or other new packet headers, if programmed to recognize them.

The flow tables on traditional switching silicon were quite small compared to the routing or bridging tables. It was quite expensive to build silicon with very large flow tables. So the OpenFlow paper also suggested not prepopulating the flow tables, but using them like a cache. If there was no match for a packet in the flow table, the switch would send the packet to the controller. The central controller would consult its software tables to determine the appropriate behavior for the packet and also program the flow table so that subsequent packets for this flow would not be sent to the central controller.
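The cache behavior can be sketched in a few lines. This is a toy model, not the OpenFlow protocol: `Controller`, `Switch`, `packet_in`, and the evict-the-oldest policy are all assumptions made for illustration.

```python
class Controller:
    """Hypothetical central controller holding the full policy in software."""

    def __init__(self, policy):
        self.policy = policy      # flow key -> action
        self.packet_ins = 0       # how many packets were punted to us

    def packet_in(self, switch, key):
        self.packet_ins += 1
        action = self.policy.get(key, "drop")
        switch.install(key, action)   # program the switch for later packets
        return action


class Switch:
    """Switch whose small flow table is used as a cache."""

    def __init__(self, controller, capacity):
        self.controller = controller
        self.capacity = capacity
        self.flows = {}           # dicts keep insertion order: oldest first

    def install(self, key, action):
        if len(self.flows) >= self.capacity:
            del self.flows[next(iter(self.flows))]   # evict the oldest flow
        self.flows[key] = action

    def forward(self, key):
        if key in self.flows:
            return self.flows[key]                    # hit: stays in hardware
        return self.controller.packet_in(self, key)  # miss: ask the controller


policy = {"A": "port 1", "B": "port 2", "C": "port 3"}
ctrl = Controller(policy)
sw = Switch(ctrl, capacity=2)

for flow in ["A", "A", "B", "C", "A"]:
    sw.forward(flow)
```

With room for only two flows, five packets cause four trips to the controller: the second packet of flow A is a hit, but by the time A shows up again it has been evicted to make room for C.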

The Trouble with OpenFlow

OpenFlow ran into trouble almost immediately out of the gate. The simple flow table lookup model didn’t really work even with simple routing because it didn’t handle ECMP (introduced in “Routing as the Fundamental Interconnect Model”). On the control-plane side, sending the first packet of every new flow to a central control plane didn’t scale. These were both fundamental problems. In the networking world, the idea of sending the first packet of a flow to the control plane had already been explored in several commercial products and deemed a failure. This was because they didn’t scale, and the caches thrashed far more frequently than the developers originally anticipated.
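The text doesn't spell out why ECMP was a poor fit, so take the following as one illustrative reading rather than the definitive explanation. With ECMP, the result of a route lookup is a group of equal-cost next hops, and the switch picks one per flow, typically by hashing header fields. A lookup whose result is a single fixed action has nowhere to express that choice. The function name and the use of CRC32 as the hash are assumptions for the sketch.

```python
import zlib


def ecmp_next_hop(flow, next_hops):
    """Pick one of several equal-cost next hops by hashing the flow's fields.

    All packets of a flow hash to the same next hop, so a flow is never
    reordered, while different flows spread across the group.
    """
    key = "|".join(str(field) for field in flow).encode()
    return next_hops[zlib.crc32(key) % len(next_hops)]


spines = ["spine1", "spine2", "spine3", "spine4"]
flow = ("10.0.0.1", "10.0.1.1", "tcp", 40000, 443)

first = ecmp_next_hop(flow, spines)
second = ecmp_next_hop(flow, spines)
```

Both calls return the same spine for this flow; other flows may land on other spines.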

The problems didn’t end there. Packet switching involves a lot more complexity than a simple flow table lookup. Multiple tables are looked up, and sometimes the same table is looked up multiple times, to determine packet-switching behavior. How would you control whether to decrement the TTL, perform checksums on the IP header, handle network virtualization, and so on? Flow table invalidation was another problem. When should the controller decide to remove a flow? The answer was not always straightforward.

To handle all of this and the fact that a simple flow table isn’t sufficient, OpenFlow 1.0 was updated to version 1.1, which was so complex that no packet-switching silicon vendor knew how to support it. From that arose version 1.2, which was a little more pragmatic. The designers also revised the control plane to prepopulate the lookup tables and didn’t require sending the first packet to a controller. But most network operators had begun to lose interest at this point. As a data point, around 2013, when I started talking to customers as an employee of Cumulus Networks, OpenFlow and SDN were all I was asked about. A year and a half or two years later, it rarely came up. I also didn’t see it come up with operators I spoke to who were not Cumulus customers. It is rarely deployed to program data center routers, except for one very large operator: Google.

OpenFlow didn’t succeed as much as its hype had indicated for at least the following reasons:

- OpenFlow conflated multiple problems into one. The programmability that users desired was for constructs such as configuration and statistics. OpenFlow presented an entirely different abstraction and required reinventing too much to solve this problem.

- The silicon implementation of flow tables couldn’t scale inexpensively compared to routing and bridging tables.

- Data center operators didn’t care about research questions such as whether to forward all packets with a TTL of 62 or 12 differently from other packets, or how to perform a lookup based on 32 bits of an IPv4 address combined with the lower 12 bits of a MAC address.

- OpenFlow’s abstraction models were either too limiting or too loose. And like other attempts to model behavior, such as Simple Network Management Protocol (SNMP), these attempts ended up with more vendor lock-in instead of less. Two different SDN controllers would program the OpenFlow tables very differently. Consequently, after you chose a vendor for one controller, you really couldn’t easily switch vendors.

- Finally, people realized that OpenFlow presented a very different mindset from the classic networking model of routing and bridging, and therefore a lower maturity level. The internet, the largest network in the world, already ran fairly well (though not without its share of problems). People did not see the benefit of throwing out what worked well (distributed routing protocols) along with the thing that did not (the inability to programmatically access the switch).

OVS

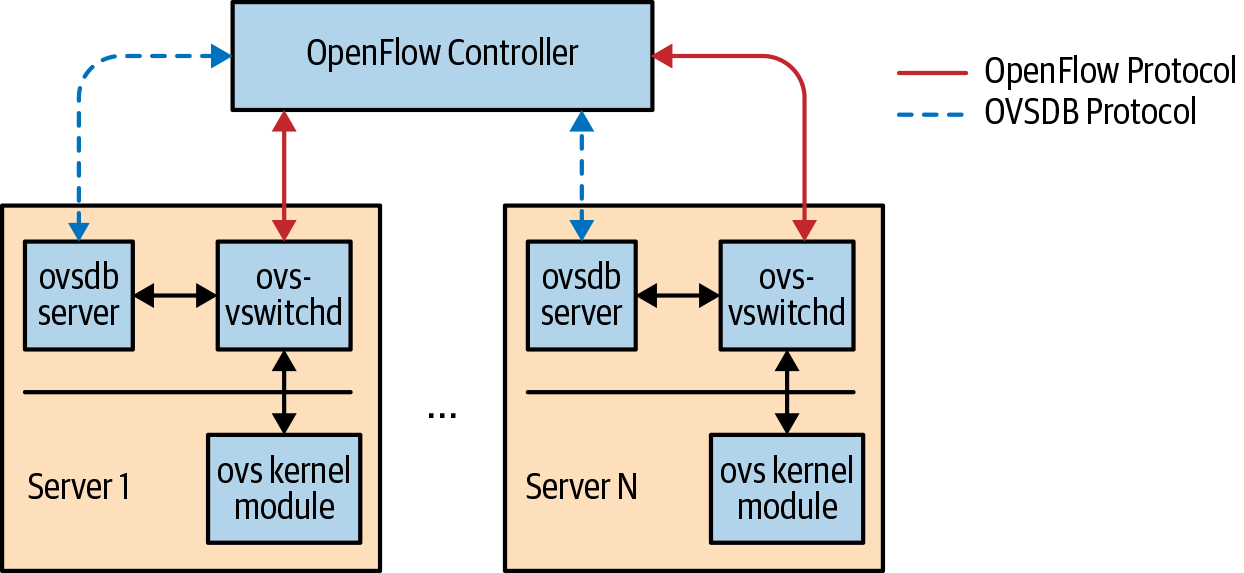

Open vSwitch (OVS) is a well-regarded open source implementation of the OpenFlow switch on Linux. ovs-vswitchd is the user-space application that implements the OpenFlow switch, which includes the OpenFlow flow tables and actions. It does not use the lookup tables or packet forwarding logic of the Linux kernel. So ovs-vswitchd reimplements a lot of standard code such as Address Resolution Protocol (ARP), IP, and Multiprotocol Label Switching (MPLS). The flow tables in ovs-vswitchd are typically programmed by an OpenFlow controller. As of this writing, OVS is at version 2.11.90 and supports OpenFlow protocol version 1.5. The website provides more details on all this. Use of OVS is the most widespread use of OpenFlow I’ve encountered.

OVS comes with a component called OVSDB server or just OVSDB, which is used to configure and query OVS itself. The OpenFlow controller can query the OVS state via OVSDB using the schema exported by OVSDB server for such queries. The OVSDB specification has been published as RFC 7047 by the IETF. Figure 4-2 shows the relation between the OpenFlow controller, OVSDB, and OVS.

Figure 4-2. OVS components and OpenFlow controller
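OVSDB queries are JSON-RPC messages as specified in RFC 7047. As a rough sketch of what a client sends, the following builds two requests without opening a connection. The helper `rpc` is invented for illustration; the `list_dbs` and `transact` methods, the `select` operation, and the `Open_vSwitch` database with its `Bridge` table come from the RFC and the OVS schema as I understand them, so check both before relying on the details.

```python
import itertools
import json

_ids = itertools.count()


def rpc(method, *params):
    """Serialize one JSON-RPC request with a fresh request id."""
    return json.dumps({"method": method, "params": list(params), "id": next(_ids)})


# Ask the server which databases it hosts.
list_dbs = rpc("list_dbs")

# Ask for the name of every bridge in the Open_vSwitch database.
select_bridges = rpc(
    "transact",
    "Open_vSwitch",
    {"op": "select", "table": "Bridge", "where": [], "columns": ["name"]},
)
```

A controller would write these strings to its connection to the OVSDB server and read back JSON replies carrying the same `id`.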

OVSDB is also used by hypervisor and OpenStack vendors to configure OVS with information about the VMs or endpoints they control on hardware switches. For example, this latter approach is used to integrate SDN-like solutions that configure OVS on hypervisors with bare-metal servers that communicate with the VMs hosted by the hypervisors. In such a model, the switch NOS vendor supplies an OVSDB implementation that translates the configuration specified by OVSDB into its NOS equivalent and into the switching silicon state. VMware’s NSX is a prominent user of OVSDB. Switches from various prominent vendors, old and new, support such a version of OVSDB to get their switch certified for use with NSX.

You can configure OVS to use Intel’s Data Plane Development Kit (DPDK) to perform high-speed packet processing in user space.

The Effect of SDN and OpenFlow on Network Disaggregation

OpenFlow and the drums of SDN continue to beat. They are by no means moribund. In some domains such as wide area networks (WANs), the introduction of software-defined WAN (SD-WAN) has been quite successful because it did present a model that was simpler than the ones it replaced. Also, as a global traffic optimization model on the WAN, it was simpler and easier to use and deploy than the complicated RSVP-TE model. Others have come up with a BGP-based SDN. Here, BGP is used instead of OpenFlow as the mechanism to communicate path information for changing the path of certain flows, not every flow. Another use case is as the network virtualization overlay controller such as VMware’s NSX product.

But within the data center, as a method to replace distributed routing protocols with a centralized control plane and a switch with OpenFlow-defined lookup tables, my observations indicate that it has not been successful. Outside of a handful of operators, I have rarely encountered this model for building data center network infrastructure.

The authors of OpenFlow weren’t trying to solve the problems of the cloud native data center or of disaggregated networking. Their promise to provide something like a programmable network device intrigued many people and drew them down the path of OpenFlow.

But SDN muddied the waters of network disaggregation quite a bit. First, it made a lot of network operators think that all their skills acquired over many years via certifications and practice—skills in distributed routing protocols and bridging—were going to be obsolete. Next, traditional switch vendors used this idea of programming to say that network operators had to learn coding and become developers to operate SDN-based networks. This made many of them averse to even considering network disaggregation. And I know of other operators who spent a lot of time trying to run proof-of-concept (PoC) experiments to verify the viability of OpenFlow and SDN in building a data center network, before giving up and running back into the warm embrace of traditional network vendors. Furthermore, many of the vendors who sprang up around the use of SDN to control data center network infrastructure supported only bridging, which, as we discussed, doesn’t scale.

NOS Design Models

If OpenFlow and SDN didn’t really take off as predicted, what is the answer to the NOS in a disaggregated world? Strangely, the OpenFlow paper alluded to a different approach, but rejected it as impractical. From the paper:

One approach—that we do not take—is to persuade commercial “name-brand” equipment vendors to provide an open, programmable, virtualized platform on their switches and routers so that researchers can deploy new protocols.

Alas, the cliché of the road not taken—again. Let us examine the various NOS models in vogue. We begin by looking at the parts that are common before examining the parts that are different.

Here are the two common elements in all modern network operating systems:

- Linux

  This is the base OS for just about every NOS. By base OS, I mean the part that does process and memory management and nonnetworking device I/O, primarily storage.

- Intel x86/ARM processors

  A NOS needs a CPU to run on. Intel x86 and ARM are the most common CPU architectures for switches. ARM is the most common processor family on lower-end switches: those that have ports of 1GbE or lower. Switches with 10GbE and higher-speed ports typically use dual-core Intel processors with two to eight gigabytes of memory.

These two elements can be found in both the new disaggregated network operating systems and the traditional data center switch vendors. The most well-known holdout from this combination is Juniper, whose Junos OS uses FreeBSD rather than Linux as its base OS. However, the next generation of Junos, called Junos OS Evolved, runs on Linux instead of FreeBSD.

An OS acts as the moderator between resources and the applications that want to use those resources. For example, CPU is a resource, and access to it by different applications is controlled by process scheduling within the OS.

Every OS has a user space and a kernel space, distinguished primarily by protected memory access. Software in kernel space, except for device drivers, has complete access to all hardware and software resources. In the user space, each process has access only to its own private memory space; anything else requires a request to the kernel. This separation is enforced by the CPU itself via protection rings or domains provided by the CPU. An OS also provides a standard Application Programming Interface (API) to allow applications to request, use, and release resources.

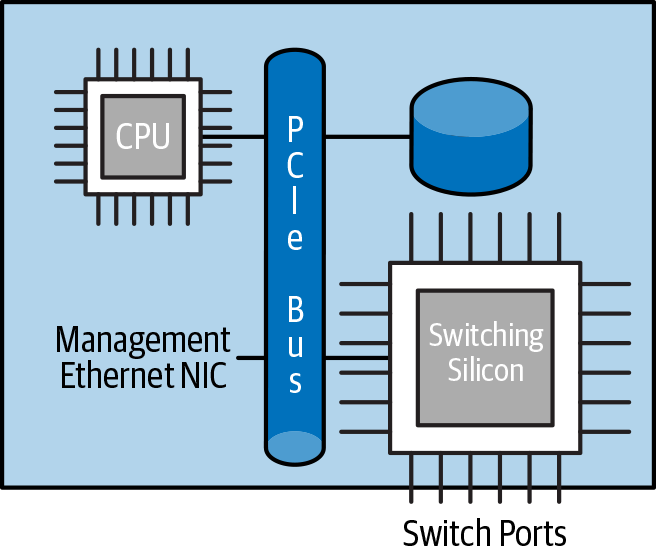

In any switch, disaggregated or otherwise, the CPU complex is connected to the packet-switching silicon, the management Ethernet port, storage, and so on, as shown in Figure 4-3.

Figure 4-3. CPU and packet-switching silicon in a switch complex

The packet-switching silicon is connected to the CPU via a peripheral input/output bus, usually Peripheral Component Interconnect Express (PCIe). The interconnect bandwidth between the packet-switching silicon and the CPU complex is only a fraction of the switching silicon capacity. For example, a common switching silicon available circa 2018 has 32 ports of 100GbE, which adds up to 3.2 terabits per second (Tbps). However, the interconnect bandwidth varies between 400 Mbps and at most 100 Gbps, though it rarely reaches the upper bound.
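To put numbers on "only a fraction," here is the arithmetic using just the figures quoted above. The variable names are mine.

```python
ports = 32
port_speed_gbps = 100
switching_capacity_gbps = ports * port_speed_gbps   # 3200 Gbps, i.e. 3.2 Tbps

# Low and high ends of the CPU interconnect range quoted above, in Gbps.
cpu_link_gbps = {"low": 0.4, "high": 100}
cpu_share = {
    name: gbps / switching_capacity_gbps for name, gbps in cpu_link_gbps.items()
}

for name, share in cpu_share.items():
    print(f"{name}: {share:.4%} of switching capacity")
```

Even at the rarely reached 100 Gbps upper bound, the CPU can see only about 3% of what the silicon switches. At 400 Mbps the share is closer to one hundredth of a percent.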

The main tasks of a NOS are to run control and management protocols, maintain additional state such as counters, and set up the packet-switching silicon to forward packets. Except when OpenFlow is used, the NOS on a switch is not in the packet-forwarding path. A small amount of traffic is destined to the switch itself, and those packets are handled by the NOS. Packets destined to the switch are usually control-plane packets, such as those sent by a routing protocol or bridging protocol. The other kind of packet that the NOS sees consists of erroneous packets, for which it must generate error message packets.

Thus, the NOS on the switch sees a minuscule percentage of the total bandwidth supported by the packet-switching silicon. For example, the NOS might process at most 150,000 packets per second, whereas the switching silicon will be processing more than 5 billion packets per second. The NOS uses policers to rate-limit traffic hitting it and prioritizes control protocol traffic over the others. This ensures that the NOS is never overwhelmed by packets sent to it.
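With the numbers above, the NOS handles 150,000 out of 5 billion packets per second, or about 0.003% of what the silicon forwards. The text doesn't say how the policers are built. A token bucket is one common way to build a policer, so the sketch below assumes that design. The class name and parameters are illustrative.

```python
class TokenBucketPolicer:
    """Admit at most `rate_pps` packets per second, with bursts up to `burst`."""

    def __init__(self, rate_pps, burst):
        self.rate_pps = rate_pps
        self.burst = burst
        self.tokens = float(burst)   # start with a full bucket
        self.last = 0.0              # time of the previous packet, in seconds

    def allow(self, now):
        """Return True if a packet arriving at time `now` may reach the CPU."""
        elapsed = now - self.last
        self.last = now
        # Refill for the time that has passed, never beyond the burst size.
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate_pps)
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False                 # over the limit: drop


policer = TokenBucketPolicer(rate_pps=1000, burst=10)

# 15 packets arrive back to back at t=0, then 15 more at t=0.5 seconds.
first_wave = sum(policer.allow(0.0) for _ in range(15))
second_wave = sum(policer.allow(0.5) for _ in range(15))
```

Each wave gets 10 packets through and loses 5. Prioritizing control protocol traffic could then be a matter of giving each traffic class its own policer, with more generous settings for the classes that matter most.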

All of the parts discussed so far are the same across all the network operating systems designed from the turn of the century. The reliance on embedded or real-time operating systems such as QNX and VxWorks is so past century, though a couple of vendors (see this NOS list maintained by the Open Compute Project [OCP]) still seem to rely on them.

Modern network operating systems, however, differ in the following areas, each of which is examined in upcoming sections:

- Location of the switch network state

- Implementation of the switching silicon driver

- Programming model

Each of these pieces affects the extent of control a network engineer or architect is able to exercise. The first two considerations are interconnected, but I have broken them up to highlight the effect of each on the network operator. Furthermore, a solution chosen for one of these considerations doesn’t necessarily dictate the answer to the others. We’ll conclude the comparison by seeing how each of the choices addresses the requirements presented in “Requirements of a Network Device”.

Location of Switch Network State

Switch network state represents everything associated with packet forwarding on the switch, from the lookup tables to Access Control Lists (ACLs) to counters. This piece is important to understand because it defines to a large extent how third-party applications function in the NOS.

There are three primary models for this.

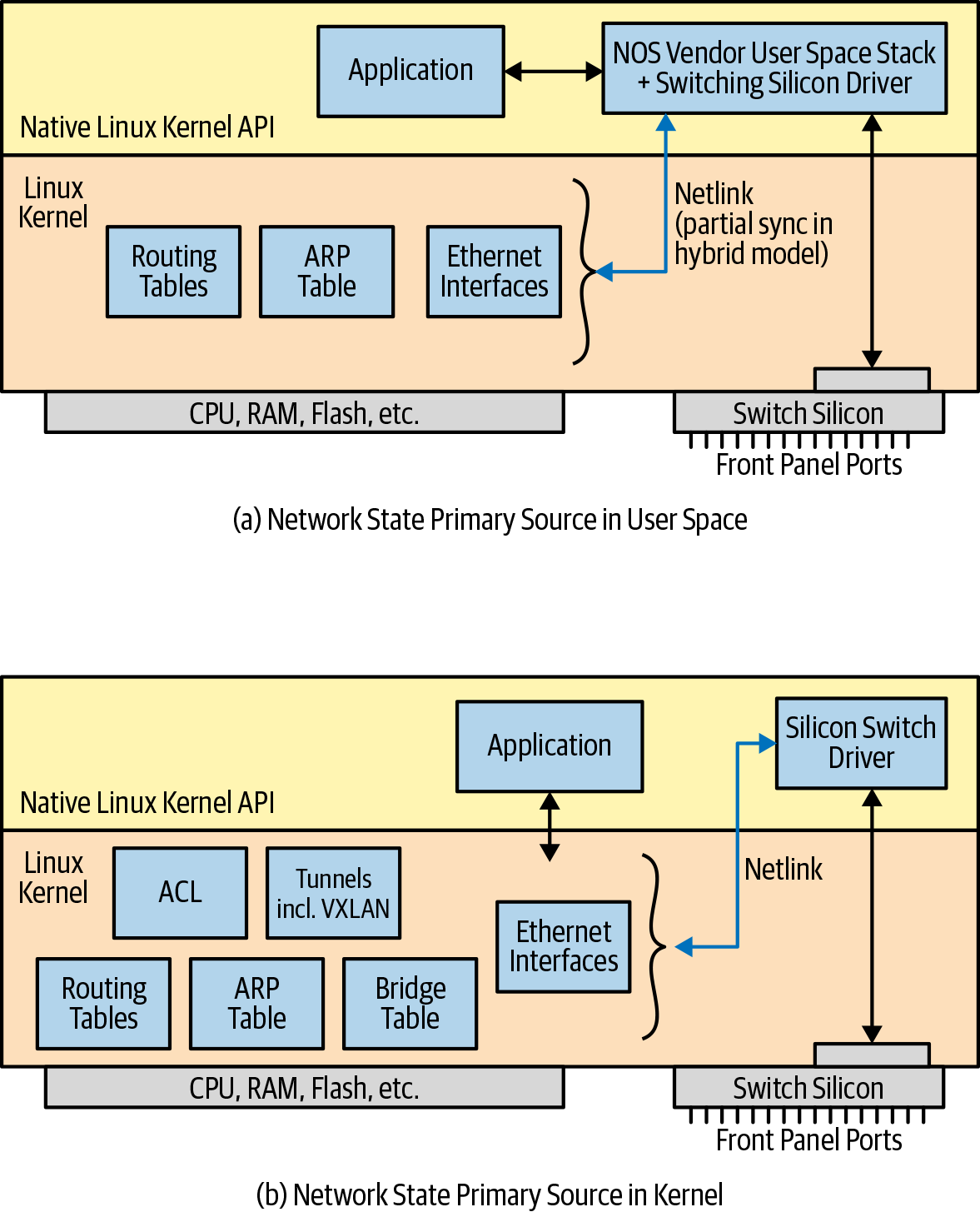

Vendor-specific user space model

The most common model at the time of this writing stores the network state only in NOS vendor-specific software in the user space. In other words, the ultimate source of truth in the NOS in such a model is the vendor-specific software. In this model, the vendor-supplied control protocol stack writes directly to this vendor-specific data store. Cisco’s NX-OS and DPDK-based network operating systems implement this model.

Hybrid model

The second model, which is the hybrid model, is a variation of the first model. The ultimate source of truth in these cases is the same as in the first model: the vendor-specific user-space stack. However, the NOS also synchronizes parts of this state with the equivalent parts in the kernel. For example, in this model, the NOS synchronizes the routing table with the kernel routing table. Only a subset of the total state is synchronized. For example, I know of a NOS that synchronizes everything except bridging state. Another NOS synchronizes only the interfaces and the IP routing state, including the ARP/ND state. The rest is not synchronized with the kernel. In most cases, vendors also synchronize changes in the other direction: any changes made to the kernel in those structures are also reflected in the vendor’s proprietary stack.

So is there a rationale behind which states are synchronized, why those and not the others? I have heard different reasons from different NOS vendors. Some NOS vendors treat the switching silicon as the only packet forwarder in the system. When the CPU sends a packet to the switching silicon, it expects that chip to behave very much as if the packet came in on a switching silicon port, and to forward the packet in hardware; the same is assumed in the reverse direction. So the only state that is synchronized is whatever is necessary to preserve this illusion. In this way, the only packet-forwarding model used is the switching silicon’s model. In other cases, the vendor’s user-space blob is synchronized with the kernel state only to the extent that it allows certain large network operators that use only routing functions to write their own applications on top of such a NOS. This is done primarily to retain (or acquire) the business of such large-scale network operators.

In either case, the vendor-provided applications, such as control protocol suites, update state directly in the vendor-specific user space part rather than in the kernel.

Arista’s Extensible Operating System (EOS), Microsoft’s SONiC, IP Infusion’s OcNOS, Dell’s OpenSwitch, and many others follow this hybrid model, albeit with each differing in what parts are synchronized with the kernel.

Complete kernel model

The third model, one that is relatively new, is to use the Linux kernel’s data structures as the network state’s ultimate source of truth. The kernel today supports just about every critical construct relevant to the data center network infrastructure, such as IPv4, IPv6, network virtualization, and logical switches. Because packet forwarding is happening in the switching silicon, this model requires synchronizing counters and other state from the silicon with the equivalent state in the kernel. I’m assuming that in this model, the NOS vendor does not use any nonstandard Linux API calls to perform any of the required functions. Cumulus Linux is the primary NOS vendor behind this model.

At some level, you can argue that most vendors follow the hybrid model, even one like Cumulus, because not everything from the switching silicon is always available in the Linux kernel. However, the discussion here is about the general philosophy of the model rather than the state of an implementation at a particular point of time. The Linux kernel is sophisticated, mature, and evolving continuously through the work of a vibrant community.

Let’s now consider what models permit when you want to run a third-party application on the switch itself. The vendor-specific user space-only model requires modifications to the application to use the NOS vendor’s API. Even simple, everyday applications such as ping and Secure Shell (SSH) need to be modified to work on such a NOS. The kernel model will work with any application written to work with the standard Linux API. For example, researchers wanting to test out a new routing protocol can exploit this model. Furthermore, because the Linux API almost guarantees that changes will not break existing user-space applications (though they might need to be recompiled), the code will be practically guaranteed to work on future versions of the kernel, too. In the hybrid model, if the third-party application relies only on whatever is synchronized with the kernel (such as IP routing), it can use the standard Linux API. Otherwise, it must use the vendor-specific API.
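As a small illustration of what "the standard Linux API" buys you, the sketch below reads the kernel's IPv4 routing table from `/proc/net/route`, one of the simplest kernel interfaces. Nothing in it is NOS-specific. The function name is mine, and the byte-order handling assumes a little-endian CPU such as x86.

```python
import socket
import struct


def parse_proc_net_route(text):
    """Parse /proc/net/route content into (prefix, gateway, interface) tuples."""

    def to_ip(hex_word):
        # The kernel prints each address as a hex word in host byte order;
        # on a little-endian CPU that means the bytes appear reversed.
        return socket.inet_ntoa(struct.pack("<I", int(hex_word, 16)))

    routes = []
    for line in text.strip().splitlines()[1:]:       # skip the header line
        fields = line.split()
        iface, dest, gateway, mask = fields[0], fields[1], fields[2], fields[7]
        prefix_len = bin(int(mask, 16)).count("1")   # contiguous masks only
        routes.append((f"{to_ip(dest)}/{prefix_len}", to_ip(gateway), iface))
    return routes


sample = """\
Iface\tDestination\tGateway\tFlags\tRefCnt\tUse\tMetric\tMask\tMTU\tWindow\tIRTT
eth0\t00000000\t0101A8C0\t0003\t0\t0\t100\t00000000\t0\t0\t0
eth0\t0001A8C0\t00000000\t0001\t0\t0\t100\t00FFFFFF\t0\t0\t0
"""
routes = parse_proc_net_route(sample)
```

On a Linux host you would pass in `open("/proc/net/route").read()` instead of the sample. Under the complete kernel model, the same few lines should see the routes the switch is using. Under the hybrid model, they see only whatever the vendor has chosen to synchronize.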

Programming the Switching Silicon

After the local network state is in the NOS, how is this state pushed into the switching silicon? Because the switching silicon is a device like an Ethernet NIC or disk, a device driver is obviously involved. The driver can be implemented as a traditional device driver—that is, in the Linux kernel—or it can be implemented in user space.

As of this writing, the most common model puts the driver in user space. One of the primary reasons for this is that most switching silicon vendors provide the driver in user space only. The other reason is that until recently, the Linux kernel did not have an abstraction or model for packet-switching silicon. The kernel provides many device abstractions such as block device, character device, net device, and so on. There was no such equivalent for packet-switching silicon. Another reason for the prevalence of the user-space model is that many consider it simpler to write a driver in user space than in the kernel.

How does the user-space driver get the information to program the switching silicon? When the network state is in the user space, the user-space blob communicates with the driver to program the relevant information into the silicon. When the ultimate source of truth is in the kernel, the user-space driver opens a Netlink socket and gets notifications about successful changes made to the networking state in the kernel. It then uses this information to program the switching silicon. Figure 4-4 shows these two models.

The small, light block just above the silicon switch represents a very low-level driver that’s responsible for interrupt management of the switching silicon, setting up DMA, and so on.

Figure 4-4. How a user-space switching-silicon driver gets information
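When the kernel is the source of truth, the listening side of a user-space driver can be sketched as follows. The Netlink constants are the standard rtnetlink values as I know them. `program_silicon` is a hypothetical callback standing in for the silicon vendor's driver, and the route payload is passed through undecoded to keep the example short.

```python
import socket
import struct

RTMGRP_IPV4_ROUTE = 0x40               # multicast group: IPv4 route changes
RTM_NEWROUTE, RTM_DELROUTE = 24, 25    # rtnetlink message types
NLMSG_HDR = struct.Struct("=IHHII")    # length, type, flags, sequence, pid


def parse_nlmsgs(data):
    """Yield (msg_type, payload) for each Netlink message in a receive buffer."""
    offset = 0
    while offset + NLMSG_HDR.size <= len(data):
        length, msg_type, _flags, _seq, _pid = NLMSG_HDR.unpack_from(data, offset)
        if length < NLMSG_HDR.size:
            break                              # malformed header: stop parsing
        yield msg_type, data[offset + NLMSG_HDR.size:offset + length]
        offset += (length + 3) & ~3            # messages are 4-byte aligned


def watch_routes(program_silicon):
    """Linux only: invoke program_silicon(event, payload) on every route change."""
    sock = socket.socket(socket.AF_NETLINK, socket.SOCK_RAW, socket.NETLINK_ROUTE)
    sock.bind((0, RTMGRP_IPV4_ROUTE))          # subscribe to route notifications
    while True:
        for msg_type, payload in parse_nlmsgs(sock.recv(65535)):
            if msg_type == RTM_NEWROUTE:
                program_silicon("add", payload)
            elif msg_type == RTM_DELROUTE:
                program_silicon("delete", payload)


# Two hand-built messages, to exercise the parser without a live socket.
buffer = NLMSG_HDR.pack(20, RTM_NEWROUTE, 0, 1, 0) + b"abcd"
buffer += NLMSG_HDR.pack(16, RTM_DELROUTE, 0, 2, 0)
events = list(parse_nlmsgs(buffer))
```

A real driver would go on to decode the route message and its attributes from the payload, and would subscribe to more groups than just IPv4 routes: links, neighbors, IPv6, and so on.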

Switch Abstraction Interface

Because the silicon switch driver is in the user space, most NOS vendors have defined their own Hardware Abstraction Layer (HAL) and a silicon-specific part that uses the silicon vendor’s driver. This has enabled them to support multiple types of switching silicon by mapping logical operations into silicon-specific code. The HAL definition is specific to each NOS vendor.
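The split between a HAL and its silicon-specific part looks roughly like the following. Every class and method name here is made up, and the two "chips" are caricatures meant only to show one logical operation mapping onto two different driver styles.

```python
from abc import ABC, abstractmethod


class SiliconBackend(ABC):
    """Silicon-specific part: wraps one (hypothetical) silicon vendor driver."""

    @abstractmethod
    def program_route(self, prefix, egress_port):
        ...


class TableChip(SiliconBackend):
    """Hypothetical chip whose driver exposes a prefix table directly."""

    def __init__(self):
        self.lpm = {}

    def program_route(self, prefix, egress_port):
        self.lpm[prefix] = egress_port


class CommandChip(SiliconBackend):
    """Hypothetical chip whose driver accepts a stream of commands."""

    def __init__(self):
        self.commands = []

    def program_route(self, prefix, egress_port):
        self.commands.append(f"route add {prefix} port {egress_port}")


class Hal:
    """NOS-facing part: the same logical operation whatever the silicon."""

    def __init__(self, backend):
        self.backend = backend

    def add_route(self, prefix, egress_port):
        self.backend.program_route(prefix, egress_port)


chip_a, chip_b = TableChip(), CommandChip()
for chip in (chip_a, chip_b):
    Hal(chip).add_route("10.1.0.0/16", 7)
```

The rest of the NOS calls only `Hal.add_route`. Supporting a new switching silicon means writing one more backend.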

Microsoft and Dell defined a generic, NOS-agnostic HAL for packet-switching silicon and called it Switch Abstraction Interface (SAI). They defined as much as they needed from a switching silicon to power Microsoft’s Azure data centers and donated the work to the OCP. OCP adopted this as a subproject under the networking umbrella. Microsoft requires that any switching silicon support SAI for it to be considered in Microsoft Azure. Therefore, many merchant silicon vendors announced support for SAI; that is, they provided a mapping specific to their switching silicon for the abstractions supported by SAI. As originally defined, SAI supported basic routing and bridging and little else. SAI has continued to evolve since then and is an open source project available on GitHub.

SAI makes no assumptions about the rest of the issues we defined at the beginning of this section. In other words, SAI does not address the issues associated with supporting third-party applications on a NOS. SAI assumes neither that the packet forwarding behavior is the switching silicon’s nor that the Linux kernel is the ultimate source of truth.

Switchdev

To counter the lack of a kernel abstraction for packet-switching silicon, Cumulus Networks and Mellanox, along with some other kernel developers, started a new device abstraction model in the Linux kernel. This is called switchdev.

Switchdev works as follows:

-

The switchdev driver for a switching silicon communicates with the switching silicon and determines the number of switching ports for which the silicon is configured. The switchdev driver then instantiates as many netdev devices as there are configured silicon ports. These netdev devices then take care of offloading the actual packet I/O to the switching silicon. netdev is the Linux model for Ethernet interfaces and is used by NICs today. By using the same kernel abstraction, and adding hooks to allow offloading of forwarding table state, ACL state, interface state, and so on, all the existing Linux network commands such as ethtool, iproute2 commands, and others automatically work with ports from packet-switching silicon.

-

Every packet forwarding data structure in the kernel provides well-defined back-end hooks that are used to invoke the switching silicon driver to offload the state from the kernel to the switching silicon. Switching silicon drivers can register functions to be called via these hooks. For example, when a route is added, this hook function calls a Mellanox switchdev driver which then determines whether this route needs to be offloaded to the switching silicon. Routes that do not involve the switching silicon are typically not offloaded.

So when the kernel stores all the local network state, programming the switching silicon is more efficient using switchdev.
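The hook mechanism described above can be modeled in a few lines. This is only a toy simulation of the idea, not kernel code: real switchdev drivers are written in C against the kernel's notifier interfaces, and the class and method names here are invented for illustration.

```python
class KernelFib:
    """Stand-in for the kernel routing table with back-end hooks."""

    def __init__(self):
        self.routes = {}
        self._notifiers = []

    def register_notifier(self, callback):
        """A driver registers a function to be called on every change."""
        self._notifiers.append(callback)

    def add_route(self, prefix, out_dev):
        self.routes[prefix] = out_dev
        for callback in self._notifiers:
            callback("add", prefix, out_dev)


class ToySwitchDriver:
    """Stand-in for a switchdev driver that owns ports swp1..swpN."""

    def __init__(self, port_count):
        # One netdev per configured silicon port.
        self.ports = {f"swp{i}" for i in range(1, port_count + 1)}
        self.hw_routes = {}

    def fib_event(self, event, prefix, out_dev):
        # Offload only routes that egress through a silicon port.
        if event == "add" and out_dev in self.ports:
            self.hw_routes[prefix] = out_dev


fib = KernelFib()
driver = ToySwitchDriver(port_count=4)
fib.register_notifier(driver.fib_event)

fib.add_route("10.1.1.0/24", "swp2")     # offloaded to the silicon
fib.add_route("192.168.0.0/24", "eth0")  # management port, kernel only
```

The kernel keeps every route; the driver decides which subset is mirrored into the hardware tables.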

Switchdev first made an appearance in kernel release 3.19. Mellanox has provided full switchdev support for its Spectrum chipset.

API

When the kernel holds the local network state in standard structures, the standard Linux kernel API is the programming API. It is not vendor specific and is governed by the Linux kernel community. When the user space holds the local network state, the NOS vendor’s API is the only way to reliably access all network state. This is why operators demand vendor-agnostic APIs. Despite the Linux kernel API already being vendor agnostic, operators consider the kernel model just another vendor model. Thus, some operators demand that the vendor-agnostic API be provided even on native Linux.

These so-called vendor-agnostic APIs are a continued throwback to the position of network devices largely as appliances rather than platforms. So the operators continue the pursuit of a uniform data model for the network devices via the vendor-agnostic APIs. No sooner have the operators defined an API than a new feature added by the vendor causes a vendor-specific extension to the API. This happened a lot; for example, with the Management Information Base (MIB) data structures consulted by SNMP. It happened with OpenFlow. It’s happening with NETCONF. I am reminded of the Peanuts cartoon’s football gag in which Lucy keeps egging Charlie Brown on to kick the ball, only to pull it out from under him at the last minute. I fear operators are treated similarly when their vendor-agnostic models are promptly polluted by vendor-specific extensions.

The Reasons Behind the Different Answers

The different models of where network state is stored and the differences in switch silicon programming have two primary causes, in my opinion:

-

The time period when they were first conceived and developed, and the network features of the Linux kernel during that period

-

The business model and its relation to licensing

Timing is relevant because of the evolution of the Linux kernel. As of this writing, Linux kernel version 5.0 has been released. Vendors framed their design on the state of the Linux kernel at the time the vendor decided to adopt the Linux kernel. As such, they miss out as evolving versions of Linux provide more features, better stability, and higher performance. The timing of the model and its relation to the kernel is as follows:

-

The first model was developed around 2005. The Linux kernel version was roughly 2.4.29. Cisco’s NX-OS had its roots in the OS that was built for its MDS Fibre Channel Switch family. NX-OS first started using the Linux kernel around 2002, when Linux 2.2 was the most stable version. Furthermore, NX-OS froze its version of the Linux kernel in the 2.6 series because it did not really need much from the kernel.

-

Arista, which was the primary network vendor pushing for the hybrid model, started operations around 2004. Although I do not know the origins of its Linux kernel model, it largely stayed with Linux kernel version 2.6 (Fedora Release 12, from what I gather) for a long time. The latest versions of its OS as of this writing run Linux kernel version 4.9.

-

Cumulus Networks, which heralded the beginning of the third NOS model, uses the Debian distribution as its base distribution model. This means that whatever kernel version is running in the Debian distribution it picked is largely the kernel version it has. As of this writing, it is based on version 4.1, with significant backports of the useful features from the newer Linux kernels. In other words, although the kernel version is supposed to be 4.1, it contains fixes and advanced features picked from the newer Linux kernels. By staying with the base 4.1 kernel, software from the base Debian distribution works as is.

Licensing considerations were another critical reason why the different NOS models developed and persisted. The Linux kernel is under the GNU General Public License (GPL) version 2. So if a NOS vendor patches the kernel, such as by improving the kernel’s TCP/IP stack, it must release it back to the community if the vendor intends to make a publicly usable version of its kernel. In 2002, when the Linux kernel was still relatively nascent, NOS vendor engineers felt that they did not want to help competitors with the improvements of the kernel networking stack. So they switched to designing and developing a user-space networking stack. Kernel modules can be proprietary blobs, although the Linux community heavily discourages their use. This idea continued with later NOS vendors, which developed new features (consider VRF, VXLAN, and MPLS) in the user space in their own private blob.

Finally, working in an open source community involves a different dynamic from the ones for-profit enterprises are used to. First, finding good, talented, and knowledgeable kernel developers is difficult. Next, unless organizations are sold on the benefits of developing open source software, they are loath to develop something in the open that they fear potentially enables competitors and reduces their own value proposition. NOS vendors such as Cumulus Networks built their businesses on developing all network-layer features exclusively in the Linux kernel, and so working closely with the kernel community was part of their DNA.

User Interface

Network operators are used to a command line that is specific to a networking device. The command-line interface (CLI) that networking devices provide is not a programmable shell, unlike Linux. It is often a modal CLI; that is, most commands are valid only within a specific context of the CLI. For example, neighbor 1.1.1.1 remote-as 65000 is only valid within the context of configuring BGP. You enter this context by typing several prior commands such as “configure” and “router bgp 64001.” Linux commands are nonmodal and programmable. They’re meant to be scripted. Network operators are used to typing “?” rather than pressing Tab to get the command completions, not typing “--” or “-” to specify options to a command, and so on. What this means is that when dropped into a Bash shell, most network operators are too paralyzed to do anything. They type “?” and nothing happens. They press Tab and most likely nothing happens. A network operator not used to the Bash shell or, more important, used to the traditional network device CLI will be at a loss on how to proceed.
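To illustrate what "modal" means in practice, here is a deliberately tiny sketch of a modal CLI. It is a hypothetical toy that recognizes only the three commands from the example above; it is not how any vendor's CLI is actually implemented.

```python
class ModalCli:
    """Toy modal CLI: a command is valid only in the mode that defines it."""

    def __init__(self):
        self.mode = "exec"
        self.local_as = None
        self.neighbors = {}

    def run(self, line):
        words = line.split()
        if self.mode == "exec" and words == ["configure"]:
            self.mode = "config"
        elif (self.mode == "config" and len(words) == 3
              and words[:2] == ["router", "bgp"]):
            self.mode = "bgp"
            self.local_as = int(words[2])
        elif (self.mode == "bgp" and len(words) == 4
              and words[0] == "neighbor" and words[2] == "remote-as"):
            self.neighbors[words[1]] = int(words[3])
        else:
            raise ValueError(f"invalid command in {self.mode} mode: {line}")


cli = ModalCli()
try:
    # Rejected: the neighbor command does not exist outside the BGP context.
    cli.run("neighbor 1.1.1.1 remote-as 65000")
except ValueError as err:
    rejected = str(err)

for command in ("configure", "router bgp 64001",
                "neighbor 1.1.1.1 remote-as 65000"):
    cli.run(command)
```

A Linux command, by contrast, carries all of its context in its own arguments, which is what makes it easy to script.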

There’s a popular saying: “Unix is user-friendly. It just is very selective about who its friends are.” Just because the Linux kernel makes for a powerful and sophisticated network OS doesn’t automatically mean that Bash can be the only CLI to interact with networking on a Linux platform. Linux grew up as a host OS and as such does not automatically make things easy from a user interface perspective for those who want to use it as a router or a bridge. Furthermore, each routing suite has its own CLI. FRR, the routing suite we use in this book, follows a CLI model more familiar to Cisco. BIRD, another popular open source routing suite, uses Juniper’s Junos OS-like syntax. GoBGP uses a CLI more familiar to people used to Linux and other modern Go-based applications.

Therefore, there is no single, unified, and open source way to interface with networking commands under Linux. There are open source packages put out by Cumulus such as ifupdown2 that work with Debian-based systems such as Ubuntu, but they don’t work with Red Hat–based systems. FRR seems like the closest thing to a unified, homogeneous CLI. It has an active, vibrant community, and people are adding new features to it all the time. If you’re configuring a pure routing network, FRR can be your single unified networking CLI. If you’re doing network virtualization, you have to use native Linux commands or ifupdown2 to configure VXLAN and bond (port-channel) interfaces, though there are efforts underway to add this support to FRR. Pure bridging support such as MSTP will most likely remain outside the purview of FRR. The FRR community is also working on a management layer that can support REST API, NETCONF, and so on.

To summarize, using the Linux kernel as the native network OS doesn’t mean that Bash shell with the existing command set defined by iproute2 is the only choice. FRR seems the best alternative, though interface configuration is limited to routed interface configuration as of this writing.

Comparing the NOS Models with Cloud Native NOS Requirements

Let’s compare the three models of NOS with regard to the requirements posed by the cloud native NOS. Table 4-1 specifies the requirement as it applies to running on the switch itself.

| Requirement | User-space model | Hybrid model | Kernel model | Kernel model with switchdev |
|---|---|---|---|---|
| Can be programmable | Proprietary API | Proprietary API | Mostly open source | Open source |
| Can add new monitoring agent | NOS-supported only | Mostly NOS-supported only | Mostly yes | Mostly yes |
| Can run off-the-shelf distributed app | NOS-supported only | Mostly NOS-supported only | Yes | Yes |
| Can replace routing protocol suite | No | No | Yes | Yes |
| Can patch software by operator | No | No | On open source components | On open source components |
| Support new switching silicon | NOS vendor support | NOS vendor support | NOS vendor support | Switching silicon support |

Illustrating the Models with an Example

Let us dive a bit deeper into exploring how the hybrid and kernel space models work when a third-party application is involved. We can ignore the pure user-space model here, because every networking application must be provided by the vendor and runs in user space without kernel involvement. In the following sections, I describe applications on Arista’s EOS even though EOS is not a disaggregated NOS; disaggregated network operating systems that follow the hybrid model behave in a somewhat similar fashion. I use EOS primarily because it is one I’m familiar with, though not as much as I am with Cumulus.

Ping

Let’s see what happens when the ping application is used to see whether an IP address is reachable. Let’s assume that we’re trying to ping 10.1.1.2, which is reachable via switch port 2. In both models, we assume the kernel’s routing table contains this information.

First, in both the kernel and the hybrid models, the switching silicon ports are represented in the Linux kernel as regular Ethernet ports, except they’re named differently. Cumulus names these ports swp1, swp2, and so on, whereas Arista names them Ethernet1, Ethernet2, and so on.

In either model, some implementations might choose to implement a proprietary kernel driver that hooks behind the netdevs that represent the switching silicon ports. Other implementations might choose to use the kernel’s Tun/Tap driver (described later in “Tun/Tap Devices”) to boomerang the packets back to the NOS vendor’s user-space component, which handles the forwarding. In the case of Cumulus, the proprietary driver is provided by the switching silicon vendor whose silicon is in use on the switch. In the case of Arista, the proprietary driver is provided by Arista itself.

The switching silicon vendor defines an internal packet header for packet communication between the CPU complex and the switching silicon. This internal header specifies such things as which port the packet is received from or needs to be sent out of, any additional packet processing that is required of the switching silicon, and so on. This internal header is used only between the switching silicon and the CPU complex and never makes it out of the switch.
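The exact layout of this internal header is specific to each silicon vendor and generally not public, so the following is purely a hypothetical sketch of the idea: a small fixed-size header carrying a port number and some flags, prepended to the Ethernet frame on the CPU-to-silicon link. The field names, sizes, and flag value are all invented.

```python
import struct

# Hypothetical layout for illustration only; real formats are vendor specific.
CPU_HEADER = struct.Struct("!HBB")   # port, direction, flags
TO_CPU, FROM_CPU = 0, 1
FLAG_FORWARD_AS_RECEIVED = 0x01      # ask the silicon to redo the lookup


def encapsulate(frame, port, direction, flags=0):
    """Prepend the internal header before handing the frame across."""
    return CPU_HEADER.pack(port, direction, flags) + frame


def decapsulate(data):
    """Split a received buffer into header fields and the original frame."""
    port, direction, flags = CPU_HEADER.unpack_from(data)
    return port, direction, flags, data[CPU_HEADER.size:]


# The CPU sends a frame out front-panel port 2; the header is stripped by
# the silicon and never appears on the wire.
wire_frame = b"\x00" * 14 + b"payload"
to_silicon = encapsulate(wire_frame, port=2, direction=FROM_CPU)
```

The receive direction works the same way in reverse: the silicon prepends the header so that the CPU knows which port the packet arrived on.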

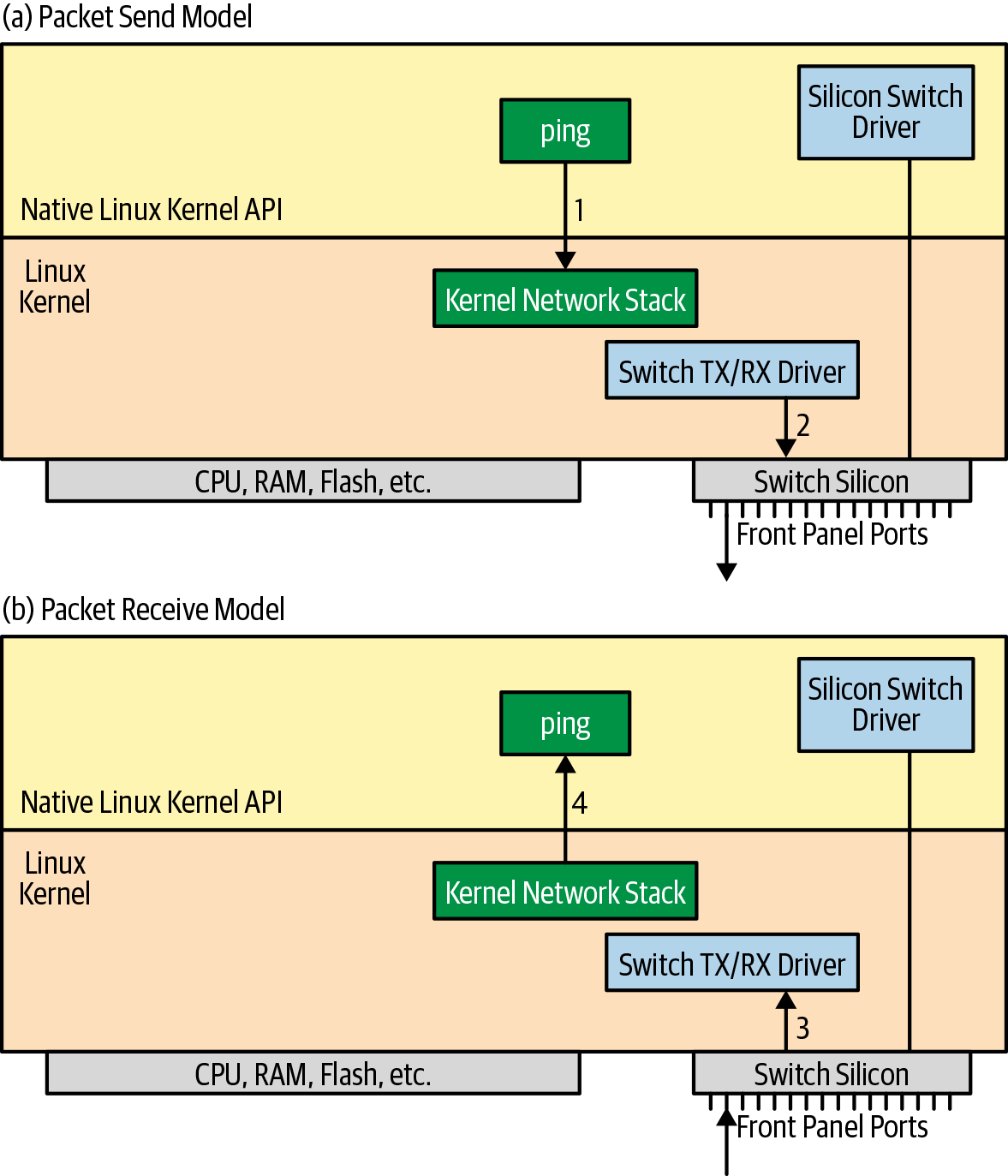

In regard to the kernel driver responsible for sending the packet to the switching silicon, when a ping command is issued, the following sequence of actions occurs:

-

The application, ping, opens a socket to send a packet to 10.1.1.2. This is just as it would on a server running Linux.

-

The kernel’s routing tables point to swp2 as the next hop. The switching silicon’s tx/rx driver delivers the packet to the switching silicon, for delivery to the next-hop router.

-

When the ping reply arrives, the switching silicon delivers the packet to the kernel. The switching silicon’s tx/rx driver receives the packet, and the kernel processes the packet as if it came in on a NIC.

-

The kernel determines the socket to deliver the packet to, and the ping application receives it.

Figure 4-5 presents this packet flow. As shown, the user-space switching-silicon driver is not involved in this processing.

Figure 4-5. Native kernel packet tx/rx flow

Even though the same sequence of commands occurs on an Arista switch, too, the Arista switch requests the switching silicon to treat this packet as if it came in on one of its ports and to forward it appropriately. This request is indicated via the internal packet header mentioned earlier. In other words, the switching silicon redoes the packet forwarding behavior even though the kernel had already done the right thing. This allows Arista to claim that all packets, locally originated or otherwise, are forwarded the same way, via the switching silicon. In other words, the packet forwarding behavior is that of the silicon, not the Linux kernel.

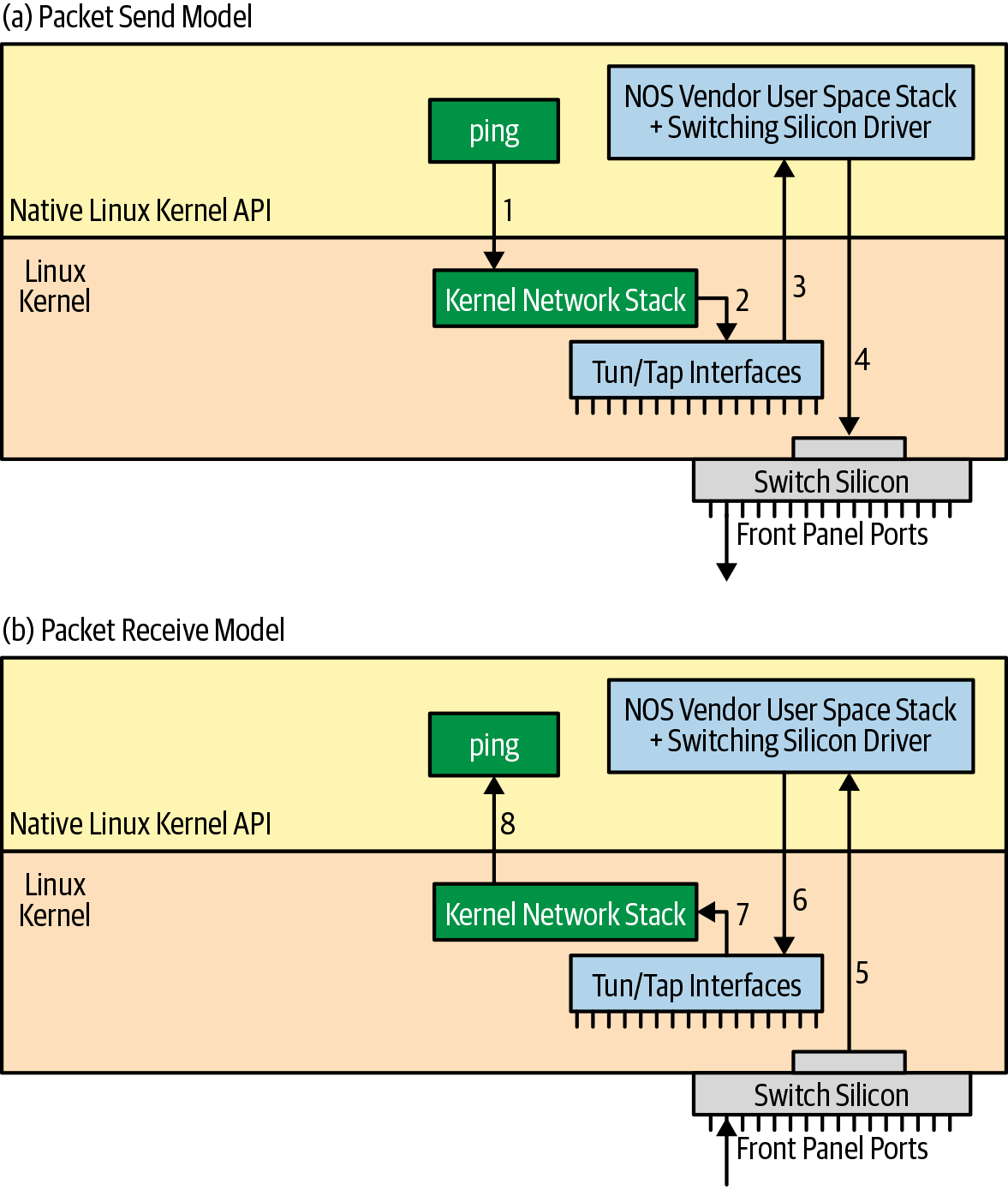

There are other commercial network operating systems that use virtual network devices called Tun/Tap to create switch ports inside the kernel that map to the switching silicon ports. The primary behavior of a Tun/Tap device is to boomerang the packet back from the kernel into the user space. In such a case, the NOS vendor implements packet forwarding in its user-space stack when running as a VM. The sequence of steps for the ping now works as shown in Figure 4-6:

-

The application ping opens a socket to send a packet to 10.1.1.2.

-

The kernel does the packet forwarding for this packet and sends it out port et2, as specified by the kernel routing table.

-

et2 is a Tun/Tap device, so the packet is sent to the NOS vendor’s user-space stack.

-

The user-space stack performs any vendor-specific processing necessary and then uses the actual driver for the packet-switching silicon to transmit the packet out the appropriate front-panel port.

-

When the ping reply is received, the switching silicon delivers the packet to the user-space stack.

-

After processing the packet, the user-space stack writes the packet to the appropriate Tun/Tap device. For example, if the reply is received via the switching-silicon port 3, the packet is written to et3, the Tun/Tap port corresponding to that port.

-

The kernel receives this packet and processes it as if it came in on a regular network device such as a NIC.

-

Ultimately, the kernel hands the packet to the ping process.

This completes the loop of packet send and receive by a third-party application such as ping. Some NOS vendors who implement the hybrid model also use the Tun/Tap model when running on a real switch.

Figure 4-6. Tun/Tap packet tx/rx flow
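The Tun/Tap steps above can be sketched from the user-space side as follows. The ioctl number and flag values are the ones Linux uses on x86 and ARM; the port name et2 follows the example, and the `transmit` callback is a hypothetical stand-in for the vendor's silicon driver. Creating a Tap device requires root privileges, so treat this as an outline rather than something to paste onto a production switch.

```python
import os
import struct

TUNSETIFF = 0x400454CA   # ioctl to attach to a Tun/Tap device (x86, ARM)
IFF_TAP = 0x0002         # Ethernet-level device (Tun would be IP-level)
IFF_NO_PI = 0x1000       # do not prepend the packet-information header


def build_ifreq(name, flags=IFF_TAP | IFF_NO_PI):
    """Pack the interface name and flags the way TUNSETIFF expects."""
    return struct.pack("16sH", name.encode(), flags)


def open_tap(name):
    """Linux only, needs root: create or attach to the named Tap port."""
    import fcntl
    fd = os.open("/dev/net/tun", os.O_RDWR)
    fcntl.ioctl(fd, TUNSETIFF, build_ifreq(name))
    return fd


def relay_to_silicon(tap_fd, transmit):
    """Frames the kernel routes out the Tap port arrive here in user space."""
    while True:
        frame = os.read(tap_fd, 2048)
        transmit(frame)   # hypothetical hook into the silicon driver


def deliver_to_kernel(tap_fd, frame):
    """Writing a frame makes the kernel treat it as received on the port."""
    os.write(tap_fd, frame)
```

In this arrangement the kernel still makes the routing decision, but every packet crosses the kernel and user-space boundary an extra time in each direction.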

Running a different routing protocol

If you want to run a different routing protocol suite from the one supplied by the vendor, it’s certainly possible in the kernel-only model. This suite needs to run on Linux and be able to program the state in the Linux kernel using Netlink.

In the hybrid model, if the routing protocol suite needs to program state that is not synchronized with the kernel, it needs to be modified to use the NOS vendor’s API. Even if this third-party routing suite wants to program the kernel, it must adopt the peculiarities of the NOS vendor’s implementation of switching silicon interfaces in the kernel.
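For the kernel-only case, "programming the state using Netlink" boils down to sending messages such as the one built below. This is a minimal sketch that constructs an IPv4 route-add request by hand; the constants come from the Linux Netlink headers, while the prefix, gateway, and interface index are made-up example values. Real routing suites use a Netlink library rather than packing bytes themselves.

```python
import socket
import struct

# Constants from <linux/netlink.h> and <linux/rtnetlink.h>.
RTM_NEWROUTE = 24
NLM_F_REQUEST, NLM_F_ACK, NLM_F_EXCL, NLM_F_CREATE = 0x1, 0x4, 0x200, 0x400
RT_TABLE_MAIN, RT_SCOPE_UNIVERSE, RTN_UNICAST = 254, 0, 1
RTPROT_BGP = 186          # marks the route as installed by a BGP daemon
RTA_DST, RTA_OIF, RTA_GATEWAY = 1, 4, 5


def rtattr(attr_type, payload):
    """Encode one route attribute, padded to a 4-byte boundary."""
    length = 4 + len(payload)
    padding = b"\x00" * ((-length) % 4)
    return struct.pack("=HH", length, attr_type) + payload + padding


def build_route_add(prefix, prefix_len, gateway, oif_index, seq=1):
    """Build an RTM_NEWROUTE request for an IPv4 unicast route."""
    attrs = (rtattr(RTA_DST, socket.inet_aton(prefix))
             + rtattr(RTA_GATEWAY, socket.inet_aton(gateway))
             + rtattr(RTA_OIF, struct.pack("=i", oif_index)))
    rtmsg = struct.pack("=BBBBBBBBI", socket.AF_INET, prefix_len, 0, 0,
                        RT_TABLE_MAIN, RTPROT_BGP, RT_SCOPE_UNIVERSE,
                        RTN_UNICAST, 0)
    flags = NLM_F_REQUEST | NLM_F_ACK | NLM_F_CREATE | NLM_F_EXCL
    length = 16 + len(rtmsg) + len(attrs)
    header = struct.pack("=IHHII", length, RTM_NEWROUTE, flags, seq, 0)
    return header + rtmsg + attrs


# Example values only: 10.1.1.0/24 via 10.0.0.1 out interface index 4.
request = build_route_add("10.1.1.0", 24, "10.0.0.1", 4)
```

On a Linux box, sending `request` on a NETLINK_ROUTE socket as root installs the route in the kernel; in the kernel model, that is all a third-party routing suite needs to do for the route to reach the switching silicon.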

What Else Is Left for a NOS to Do?

Besides programming the switching silicon, the NOS also needs to read and control the various sensors on the box, such as power, fan, and the LEDs on the interfaces. The NOS also must discover the box model, the switching silicon version, and so on. Besides all this, the NOS needs to know how to read and program the optics of the interfaces. None of this stuff is standard across white boxes and brite boxes. So a NOS vendor must write their own drivers and other patches to handle all of this. The NOS of course has lots of other things to provide such as a user interface, possibly a programmatic API, and so on.
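As one small illustration of the sensor side, the sketch below reads temperature sensors through the Linux hwmon sysfs interface, which reports values in millidegrees Celsius. It assumes the platform's sensor chips already have kernel drivers that register with hwmon; on many switches that is exactly the platform-specific work the NOS vendor has to do first.

```python
from pathlib import Path


def read_temperatures(hwmon_root="/sys/class/hwmon"):
    """Return {"hwmonN/tempM": degrees_celsius} for every readable sensor."""
    readings = {}
    for sensor in sorted(Path(hwmon_root).glob("hwmon*/temp*_input")):
        chip = sensor.parent.name
        label = sensor.name[:-len("_input")]
        try:
            millidegrees = int(sensor.read_text().strip())
        except (OSError, ValueError):
            continue   # sensor absent or returning garbage; skip it
        readings[f"{chip}/{label}"] = millidegrees / 1000.0
    return readings
```

Fans, power supplies, LEDs, and optics each need similar handling, usually through different and less uniform interfaces.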

Summary

The primary purpose of this chapter was to educate you on cloud native NOS choices. The reason this education is important is that these choices impose some fundamental consequences on the future of networking and the benefits they yield. The model chosen has effects on what operators can do: what applications they can run on the box, what information they can retrieve, and perhaps what network protocol features are supported.

The philosopher and second president of India, Sarvepalli Radhakrishnan, is quoted as having said, “All men are desirous of peace, but not all men are desirous of the things that lead to peace.” In a similar fashion, not every choice leads to the flourishing of a NOS that is true to the cloud native spirit. So being thoughtful about the choices is very important, especially for network designers and architects. Network engineers, too, can cultivate their skillsets for each of the choices. The information in this chapter should help you determine which choices are easy today but possibly gone tomorrow, and which choices truly empower your organization. In the next chapter, we move up the software stack to examine routing protocols and how to select one that’s appropriate for your needs.

References

-

Hamilton, James. “Datacenter Networks are in my Way”.

-

McKeown, Nick, et al. “Linux kernel documentation of switchdev”.
