Now that you know how to create a new application with various layout options and how to request application permissions, it is time to explore the ways in which your application can interact with the Android operating system. The AIR 2.6 release includes access to many Android features. These include the accelerometer, the GPS unit, the camera, the camera roll, the file system and the multitouch screen.

Note

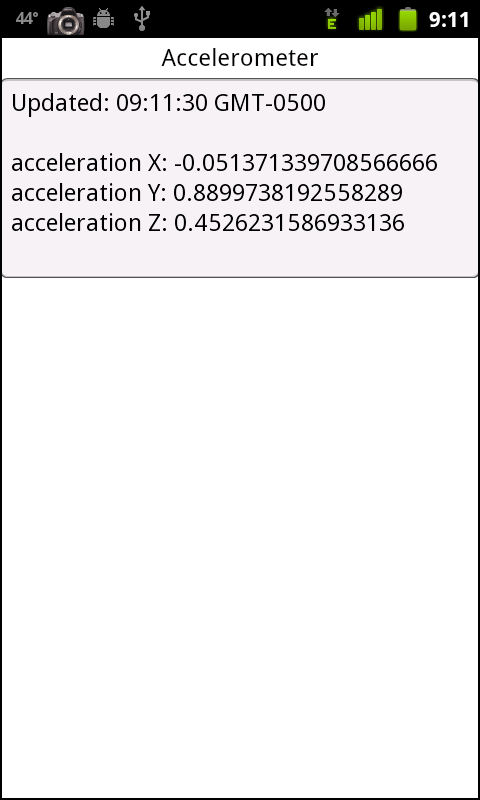

Up until this point, I have run the sample applications in the ADL simulator. However, to demonstrate the API integrations, it is necessary to run the applications on an Android device. The screenshots in this section are from an HTC Nexus One phone. Instructions on how to run an application on an Android device are included in Chapter 1.

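The accelerometer is a sensor that measures the acceleration, expressed in g-forces, that a device experiences along three axes: x, y, and z. The more sharply the device is moved, the higher the readings on those axes will be. Even at rest the accelerometer registers gravity, so a stationary phone reports roughly 1 g spread across whichever axes point toward the ground.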
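Because the three readings are components of a single acceleration vector, they are often combined into one overall magnitude, √(x² + y² + z²). The sketch below assumes readings in g, as `AccelerometerEvent` reports them, and uses a hypothetical helper class, `AccelerationMath`, with an arbitrary threshold of 1.8 g to decide whether the phone is being shaken. Neither the class nor the threshold comes from the AIR SDK; since a device at rest already reads about 1 g from gravity, any threshold you choose has to sit comfortably above that.

```actionscript
package
{
    // Hypothetical helper class (not part of the AIR SDK) for interpreting
    // the accelerationX/Y/Z values that AIR reports in g.
    public class AccelerationMath
    {
        // Assumed threshold: a device at rest reads about 1 g (gravity alone),
        // so a combined reading well above that suggests a shake.
        public static const SHAKE_THRESHOLD_G:Number = 1.8;

        // Length of the acceleration vector: sqrt(x^2 + y^2 + z^2).
        public static function magnitude(x:Number, y:Number, z:Number):Number
        {
            return Math.sqrt(x * x + y * y + z * z);
        }

        // True when the combined reading exceeds the shake threshold.
        public static function isShake(x:Number, y:Number, z:Number):Boolean
        {
            return magnitude(x, y, z) > SHAKE_THRESHOLD_G;
        }
    }
}
```

Inside an update handler such as `handleUpdate` in the listing below, you could call `AccelerationMath.isShake(event.accelerationX, event.accelerationY, event.accelerationZ)` and react only when it returns `true`.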

Let's review the code below. First, notice that a private variable named `accelerometer`, of type `flash.sensors.Accelerometer`, is declared. When the application dispatches its `applicationComplete` event, an event handler function is called. It first checks whether the device has an accelerometer by reading the static `isSupported` property of the `Accelerometer` class. If this property is `true`, a new instance of `Accelerometer` is created and an event listener of type `AccelerometerEvent.UPDATE` is added to handle updates. On each update, the `handleUpdate` function reads the acceleration values from the event and writes them to a `TextArea`. The results can be seen in Figure 4-1:

```xml
<?xml version="1.0" encoding="utf-8"?>
<s:Application xmlns:fx="http://ns.adobe.com/mxml/2009"
               xmlns:s="library://ns.adobe.com/flex/spark"
               applicationComplete="application1_applicationCompleteHandler(event)">
    <fx:Script>
        <![CDATA[
            import flash.events.AccelerometerEvent;
            import flash.sensors.Accelerometer;

            import mx.events.FlexEvent;

            private var accelerometer:Accelerometer;

            protected function application1_applicationCompleteHandler(event:FlexEvent):void {
                // Only create the sensor object if the device actually has one.
                if (Accelerometer.isSupported) {
                    accelerometer = new Accelerometer();
                    accelerometer.addEventListener(AccelerometerEvent.UPDATE, handleUpdate);
                } else {
                    status.text = "Accelerometer not supported";
                }
            }

            // Writes the latest x, y, and z readings (in g) to the TextArea.
            private function handleUpdate(event:AccelerometerEvent):void {
                info.text = "Updated: " + new Date().toTimeString() + "\n\n"
                    + "acceleration X: " + event.accelerationX + "\n"
                    + "acceleration Y: " + event.accelerationY + "\n"
                    + "acceleration Z: " + event.accelerationZ;
            }
        ]]>
    </fx:Script>
    <fx:Declarations>
        <!-- Place non-visual elements (e.g., services, value objects) here -->
    </fx:Declarations>
    <s:Label id="status" text="Shake your phone a bit" top="10" width="100%"
             textAlign="center"/>
    <s:TextArea id="info" width="100%" height="200" top="40" editable="false"/>
</s:Application>
```
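If you are building a pure ActionScript mobile project rather than a Flex one, the same pattern works without MXML. The following is a sketch, not a listing from this book: it assumes a main class with the hypothetical name `AccelerometerDemo`, displays readings in a plain `TextField`, and additionally calls `Accelerometer.setRequestedUpdateInterval()` with an arbitrary value of 100 ms. That call is only a request; the runtime may deliver updates more or less often.

```actionscript
package
{
    import flash.display.Sprite;
    import flash.events.AccelerometerEvent;
    import flash.sensors.Accelerometer;
    import flash.text.TextField;

    // Hypothetical main class for a pure ActionScript AIR project.
    public class AccelerometerDemo extends Sprite
    {
        private var accelerometer:Accelerometer;
        private var info:TextField = new TextField();

        public function AccelerometerDemo()
        {
            info.width = 400;
            info.height = 200;
            addChild(info);

            // Same guard as the Flex listing: only use the sensor if it exists.
            if (Accelerometer.isSupported) {
                accelerometer = new Accelerometer();
                // Request an update roughly every 100 ms (an arbitrary value);
                // the runtime treats this as a hint, not a guarantee.
                accelerometer.setRequestedUpdateInterval(100);
                accelerometer.addEventListener(AccelerometerEvent.UPDATE, handleUpdate);
            } else {
                info.text = "Accelerometer not supported";
            }
        }

        // Shows the latest readings (in g), rounded to two decimal places.
        private function handleUpdate(event:AccelerometerEvent):void
        {
            info.text = "acceleration X: " + event.accelerationX.toFixed(2) + "\n"
                + "acceleration Y: " + event.accelerationY.toFixed(2) + "\n"
                + "acceleration Z: " + event.accelerationZ.toFixed(2);
        }
    }
}
```

Rounding with `toFixed(2)` keeps the display readable, since raw readings change in their last digits on almost every update.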
