Chapter 1. Demystifying AI

Most analytics exist to take operational data (e.g., past and present stock prices) and provide focused insights (e.g., predicted stock prices) that inform decision making. This essential objective is the same for conventional business analytics and AI analytics, and it covers a range of functions (e.g., automation, augmentation, and conversational AI for consumers).

The key difference is how developers create the code that transforms operational data into insights. For conventional business analytics, this is a static process in which the developer manually defines each logical operation the computer must perform.

AI analytics, via machine learning (ML), attempts to derive the necessary operations directly from the data, reducing the onus on the developer to create and update the model over time (but not eliminating the developer) and making it possible to address otherwise prohibitively sophisticated use cases (e.g., computer vision).1
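The contrast between the two approaches can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the purchase amounts, the labels, the fixed 1000.0 cut-off, and the function names are all hypothetical, and the "learning" step is a deliberately simple threshold search rather than a production ML algorithm.

```python
from typing import Sequence


# Conventional analytic: the developer hard-codes the decision logic.
def flag_purchase_by_rule(amount: float) -> bool:
    # Hypothetical hand-picked threshold; a developer must revise it manually.
    return amount > 1000.0


# ML-style analytic: the decision threshold is derived from labeled examples.
def learn_threshold(amounts: Sequence[float], labels: Sequence[bool]) -> float:
    """Return the cut-off that misclassifies the fewest training examples."""
    candidates = sorted(set(amounts))
    best_threshold, best_errors = candidates[0], len(amounts) + 1
    for threshold in candidates:
        # Count examples where "amount >= threshold" disagrees with the label.
        errors = sum((a >= threshold) != y for a, y in zip(amounts, labels))
        if errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold


# Toy, made-up history: purchase amounts and whether each one was flagged.
history_amounts = [20.0, 35.0, 50.0, 400.0, 450.0, 900.0]
history_labels = [False, False, False, True, True, True]

learned = learn_threshold(history_amounts, history_labels)


def flag_purchase_learned(amount: float) -> bool:
    return amount >= learned
```

In this toy setup the hand-written rule stays fixed until someone edits it, while rerunning `learn_threshold` on fresh history updates the learned rule. The developer is still needed to choose the data, the labels, and the learning procedure.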

Beyond the initial challenge of the ML algorithm teaching an AI analytic to complete a basic task, we must ensure that it does not learn additional, undesirable behaviors that may impact its long-term sustainability (reliability, security, etc.). The ability to holistically understand the learned behavior of an AI analytic is called explainability and will be explored in detail in the following chapters.

With machine learning, computer models use experience, in the form of historical data, to make decisions by recognizing patterns in that data. This experience takes several forms: it could be collected by reviewing historical process data or observing current processes, or it could be generated as synthetic data. In many cases, however, practitioners must manually extract these patterns before they can be used. The sophistication of the patterns and the resulting operations can vary widely depending on the algorithm selected, the learning parameters used, and the way the training data is processed and fed into the algorithm.

Similarly, AI (more specifically, the subfield of deep learning) uses models such as neural networks to learn highly complex patterns across various data types. To summarize at a high level, AI enables computers to perceive, learn from, abstract, and act on data while automatically recognizing patterns in provided datasets.2

AI supports a variety of use cases, some of which may be familiar to you. A few common examples where AI can be deployed to recognize patterns include:

- Detecting anomalous entries in a dataset (e.g., identifying fraudulent versus legitimate credit card purchases)
- Classifying a set of pixels in an image as familiar or unfamiliar (e.g., suggesting which of your friends might be included in a photo you took on your phone)
- Offering new suggestions for entertainment choices based on your history (e.g., Netflix, Spotify, Amazon)
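The first use case in the list above, flagging anomalous entries, can be sketched with a simple statistical baseline. This is an illustrative assumption, not the method any particular fraud system uses: the purchase amounts are made up, the function names are hypothetical, and the z-score threshold of 3.0 is an arbitrary choice. The baseline is "learned" from past legitimate purchases and then applied to new ones.

```python
from statistics import mean, stdev
from typing import List, Sequence, Tuple


def fit_baseline(legitimate_amounts: Sequence[float]) -> Tuple[float, float]:
    """Summarize past legitimate purchases by their mean and sample std dev."""
    return mean(legitimate_amounts), stdev(legitimate_amounts)


def find_anomalies(
    new_amounts: Sequence[float],
    center: float,
    spread: float,
    z_threshold: float = 3.0,  # assumed cut-off, chosen only for illustration
) -> List[float]:
    """Return the amounts that lie more than z_threshold std devs from center.

    Assumes spread > 0, i.e. the history is not a single repeated value.
    """
    return [a for a in new_amounts if abs(a - center) / spread > z_threshold]


# Toy, made-up data: historical legitimate purchases and a new batch to score.
history = [12.0, 15.0, 14.0, 13.0, 16.0, 15.0, 14.0, 13.0]
incoming = [14.5, 500.0, 11.0]

center, spread = fit_baseline(history)
suspicious = find_anomalies(incoming, center, spread)
```

Real fraud detection typically relies on many features and far richer models. The point of the sketch is only that the notion of "normal" comes from the data rather than from a rule the developer writes by hand.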

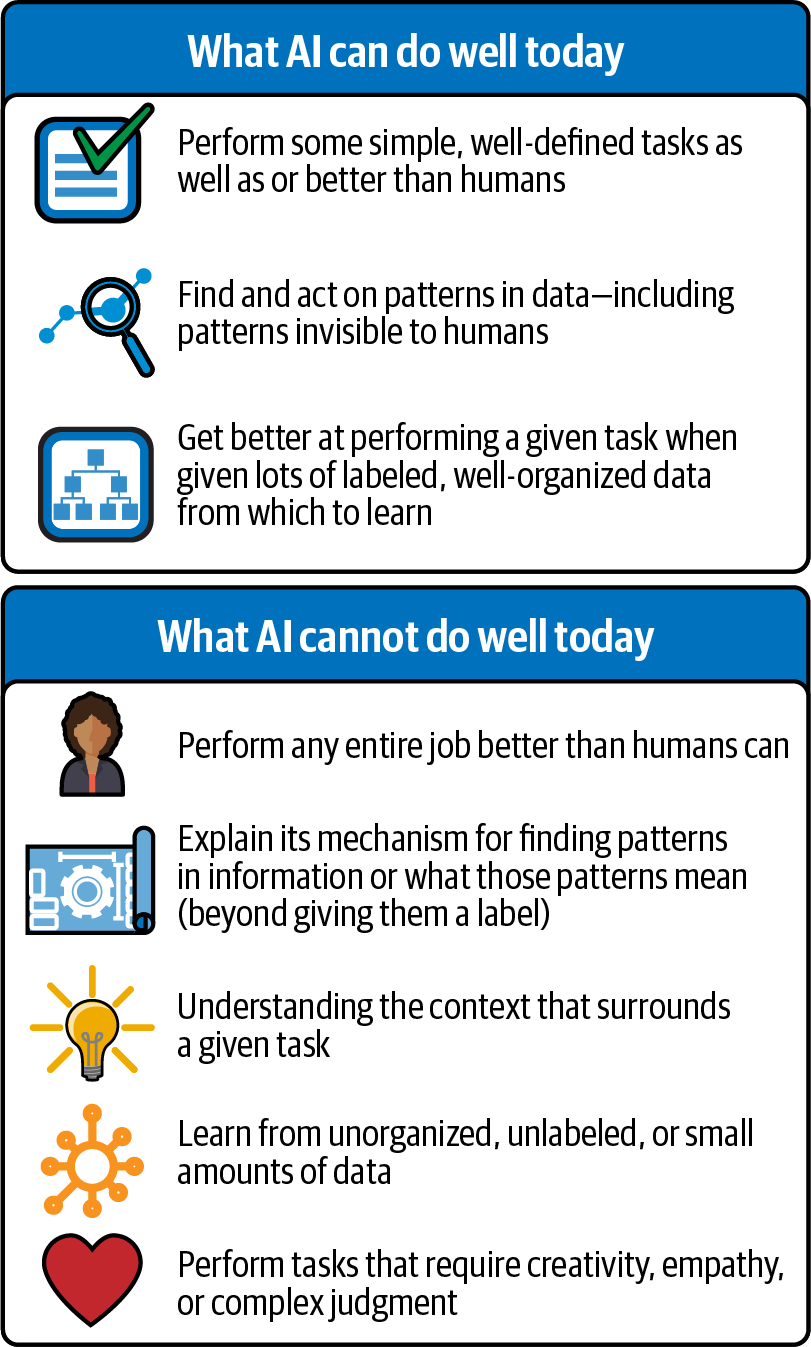

We can also describe what AI is not—at least not today. For example, some older sci-fi movies depict robots with the ability to have sophisticated, improvised, and fluent conversations with humans, or carry out complex actions and decisions in unexpected circumstances as people can. In fact, we’re not at that level of AI sophistication; to get there will take significant, persistent investment to advance current AI capabilities.

Currently, operational instances of AI represent what is known as narrow intelligence, or the ability to supplement human judgment for a single decision under controlled circumstances. Artificial general intelligence, in which machines can match a human’s capacity to perform multiple decisions in uncontrolled circumstances, does not exist today. While there have been recent advances in the direction of general intelligence,3 we are still quite far from seeing this type of AI at any meaningful scale. Figure 1-1 provides a high-level overview of what AI can and cannot do well today.

Figure 1-1. AI limitations

AI Pilot-to-Production Challenges

Mature AI capabilities do not appear overnight. Rather, they require months to years of sustained, cooperative, organization-spanning effort. Creating and maintaining buy-in across stakeholders (e.g., strategic leadership, end users, and risk managers) is a critical challenge for change agents within your organization.

AI analytic pilots performed in laboratory conditions (handpicked use cases, curated data, controlled environments) are one of the best ways to create initial buy-in at modest cost. However, most analytical use cases succeed fully only when organizations graduate these pilots from the laboratory setting to a production environment.

A common mistake is to underestimate the challenge of transitioning between these environments and, as a result, to fail to mature the organization's development capability in response. The main challenges are scalability, sustainability, and coordination.

Scalability

During a pilot, AI development teams can be small and simple in terms of roles and processes because they are addressing only a single use case. As AI capabilities mature and migrate from pilot to production, project volume will generally rise much more rapidly than available personnel. In particular, analytics already in production will begin to compete for resources with new deployments (a problem amplified by the challenges described under Sustainability). This calls for the evolution of the development team, process, and tooling to allow individual and collective distribution of labor across multiple teams and projects. Additionally, the volume and velocity of data involved in development will increase, demanding increasingly powerful, efficient, and sophisticated training pipelines (discussed extensively in Chapter 5).

Sustainability

By design, the laboratory environment limits threats to analytic sustainment to help the pilot team focus on functionality. Once in production, analytics are subject to a diverse range of issues, including operational (e.g., load variability, data drift, user error), security, legal, and ethical concerns. Ensuring that sustainability does not compromise scalability requires development practices to evolve so that these issues are anticipated and resolved prior to release. Sustainability also benefits from coordination (see Coordination), which allows key stakeholders to participate in the effort (see Chapter 3).
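Data drift, one of the operational issues mentioned above, can be monitored with even a very simple check. The sketch below is an assumption-laden illustration rather than a recommended production monitor: it compares only the mean of a recent production window against the training sample, the function names and the threshold of 2.0 are hypothetical, and the numbers are made up.

```python
from statistics import mean, stdev
from typing import Sequence


def drift_score(reference: Sequence[float], current: Sequence[float]) -> float:
    """Shift of the current mean, in units of the reference sample std dev.

    Assumes the reference sample has nonzero spread.
    """
    return abs(mean(current) - mean(reference)) / stdev(reference)


def has_drifted(
    reference: Sequence[float],
    current: Sequence[float],
    threshold: float = 2.0,  # assumed alert level, chosen only for illustration
) -> bool:
    return drift_score(reference, current) > threshold


# Toy, made-up values of one input feature.
training_sample = [10.0, 11.0, 9.0, 10.0, 12.0, 8.0, 10.0, 10.0]
stable_window = [10.0, 9.0, 11.0, 10.0]    # production data resembling training
shifted_window = [15.0, 16.0, 14.0, 15.0]  # production data after a shift

stable_alert = has_drifted(training_sample, stable_window)
shifted_alert = has_drifted(training_sample, shifted_window)
```

A check like this would raise an alert for the shifted window but not for the stable one. A mean comparison misses changes in spread or shape, so real monitoring usually tracks several statistics per feature.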

Coordination

In a laboratory environment, the pilot team interacts with a limited number of stakeholders by design. The number of stakeholders climbs drastically as these pilots enter production, and your teams must be prepared to motivate and facilitate coordination across data owners, end users, operations staff, risk managers, and others. Coordination also helps ensure equitable and efficient distribution of labor across the organization.

In addition to the three we just discussed, Table 1-1 provides a more complete list of challenges you might face when moving your AI solutions from pilots to production.

Table 1-1. Challenges to operationalizing AI

| AI Pilots | AI in Production |
|---|---|
| Simplified, static use case | Multistakeholder, dynamic use case |
| High-performance laboratory environment | Distributed legacy systems with dynamic fallback options |
| Openly accessible, low latency, data remains consistent | Access controlled; latency restricted; high-velocity data |
| Complete responsibility and control over data | Data mostly controlled by upstream stakeholders |
| No change or widely anticipated changes to data | Rapid, unexpected data drift |
| One-time, manual explanation for algorithm’s results | Real-time, automated explanation |
| AI developer does not reexamine model after pilot | AI developer continues to monitor model |
| No-cost shut-down, refactor | Costly shut-down, refactoring |
| One-off development against a single use case | Reproducible development against multiple use cases |
| Small team with well-defined focus and requisite skill sets | Continual training for a wide mix of experience, skill levels, and specialties |
| Informal, research-oriented project management | Hybrid research/development project management |

Failing to mature AI capabilities to meet these challenges threatens the long-term viability of AI adoption, since organizations will struggle to implement artificial intelligence in a production capacity. AI development will slow, existing analytics will remain difficult to sustain, leadership will become disillusioned with the lack of lasting mission impact, end users will lose faith, and hard conversations will ensue. Organizations that proactively address these challenges during the design phase can dramatically increase the speed of adoption and the impact of their AI initiatives.4

In coming chapters, we’ll introduce a framework (AIOps) to address these challenges and allow your organization to maximize the impact of AI across the enterprise.

1 Aurélien Géron, Hands-On Machine Learning with Scikit-Learn and TensorFlow (O’Reilly Media, 2017).

2 While there are many, often competing, technical definitions of AI, we wanted to provide a broad, high-level definition for this report. Our definition of AI is extracted from the National Security Commission on Artificial Intelligence. You can view their 2021 report at their website, where they define AI on page 20.

3 An example of current research and thinking in the area of artificial general intelligence that continues to evolve rapidly is David Silver, Satinder Singh, Doina Precup, and Richard S. Sutton, “Reward Is Enough”, Artificial Intelligence 299 (October 2021).

4 Mike Loukides, AI Adoption in the Enterprise (O’Reilly Media, 2021).
