Chapter 12. Multiple-image Network Graphics

The Multiple-image Network Graphics format, or MNG, is not merely a multi-image, animated extension to PNG; it can also be used to store certain types of single images more compactly than PNG, and in mid-1998 it was extended to include JPEG-compressed streams. Conceivably, it may one day incorporate audio or even video channels, too, although this is a more remote possibility. Yet despite all of this promise--or, rather, because of it--MNG was still a slowly evolving draft proposal nearly four years after it was first suggested.

As noted in Chapter 7, “History of the Portable Network Graphics Format”, MNG's early development was delayed due to weariness on the part of the PNG group and disagreement over whether it should be a heavyweight multimedia format or a very basic “glue” format. What it has evolved into, primarily due to the willingness of Glenn Randers-Pehrson to continue working on it, is a moderately complex format for composing images or parts of images, either spatially or temporally, or both. I will not attempt to describe it in full detail here--a complete description of MNG could fill a book all by itself and probably will, one of these days--but I will give a solid overview of its basic features and most useful applications. Further information on the format can be found at the MNG web site, http://www.libpng.org/pub/mng/.

12.1. Common Applications of MNG

Perhaps the most basic, nontrivial MNG application is the slide show: a sequence of static images displayed in order, possibly looping indefinitely (e.g., for a kiosk). Because MNG incorporates not only the concepts of frames, clipping, and user input but also all of PNG's features, a MNG slide show could include scrolling, sideways transitions, fades, and palette animations--in other words, most of the standard effects of a dedicated presentation package and maybe a few nonstandard ones. Such an approach would not necessarily produce smaller presentations than the alternative methods (although the most popular alternatives tend to be rather large), and, as currently specified, it would be limited to a particular resolution defined by the component raster images. But MNG offers the potential of a more open, cross-platform approach to slide shows.

MNG also supports partial-frame updates, which not only could be used for further slide show transitions (for example, dropping bulleted items into place, one at a time) but also are able to support animated movies. Unlike animated GIFs, where moving a tiny, static bitmap (or “sprite”) around a frame requires many copies of the sprite, MNG can simply indicate that a previously defined sprite should move somewhere else. It also supports nested loops, so a sprite could move in a zigzag path to the right, then up, then left, and finally back down to the starting position--all with no more than one copy of the background image (if any) and one copy of the moving bitmap. In this sense, MNG defines a true animation format, whereas GIF merely supports slightly fancy slide shows.

Images that change with time are likely to be some of the most common types of MNG streams, but MNG is useful in completely static contexts as well. For example, one could easily put together a MNG-based contact sheet of thumbnail images without actually merging the images into a single, composite bitmap. This would allow the same file to act both as an archive (or container) for the thumbnails, from which they could easily be extracted later without loss, and as a convenient display format.

[91] If the number of thumbnails grew too large to fit on a single “page,” MNG's slide show capabilities could be invoked to enable multipage display.

Other types of static MNGs might include algorithmic images or three-dimensional “voxel” (volume-pixel) data such as medical scans. Images that can be generated by simple algorithms are fairly rare if one ignores fractals. But 16million.png, which I discussed in Chapter 9, “Compression and Filtering”, is such an image. Containing all 16.8 million colors possible in a 24-bit image, it consists of nothing but smooth gradients, both horizontally and vertically. While this allowed PNG's filtering and compression engine to squeeze a 48 MB image into just over 100 KB, as a MNG containing a pair of loops, move commands, and a few odds and ends it amounts to a mere 476 bytes. Of course, compression factors in excess of 100,000 times are highly atypical. But background gradient fills are not, and MNG effectively allows one to compress the foreground and background parts independently, in turn allowing the compression engine and the file format itself to work more efficiently.

Ironically, one of the most popular nonanimated forms of MNG is likely to have no PNG image data inside at all. I've emphasized in earlier chapters that PNG's lossless compression method is not well suited to all tasks; in particular, for web-based display of continuous-tone images like photographs, a lossy format such as JPEG is much more appropriate, since the files can be so much smaller. For a multi-image format such as MNG, support for a lossy subformat--JPEG in particular--is a natural extension. Not only does it provide for the efficient storage of photographic backgrounds for composite frames (or even photographic sprites in the foreground), it also allows JPEG to be enhanced with PNG-like features such as gamma and color correction and (ta da!) transparency. Transparency has always been a problem for JPEG precisely because of its lossy approach to compression. What MNG provides is a means for a lossy JPEG image to inherit a lossless alpha channel. In other words, all of the size benefits of a JPEG image and all of the fine-tuned anti-aliasing and fade effects of a PNG alpha channel are now possible in one neat package.

12.2. MNG Structure

So that's some of what MNG can do; now let's take a closer look at what the format looks like and how it works. To begin with, MNG is chunk-based, just like PNG. It has an 8-byte signature similar to PNG's, but it differs in the first two bytes, as shown in Table 12-1.

Table 12-1. MNG Signature Bytes

| Decimal Value | ASCII Interpretation |
| --- | --- |
| 138 | A byte with its most significant bit set (“8-bit character”) |
| 77 | M |
| 78 | N |
| 71 | G |
| 13 | Carriage-return (CR) character, a.k.a. CTRL-M or ^M |
| 10 | Line-feed (LF) character, a.k.a. CTRL-J or ^J |
| 26 | CTRL-Z or ^Z |
| 10 | Line-feed (LF) character, a.k.a. CTRL-J or ^J |

So while a PNG-supporting application could be trivially modified to identify and parse a MNG stream,[92] there is no danger that an older PNG application might mistake a MNG stream for a PNG image. Since the file extensions differ as well (.mng instead of .png), ordinary users are unlikely to confuse images with animations. The only cases in which they might do so are when an allowed component type (e.g., a PNG or a JNG) is renamed with a .mng extension; such files are considered legal MNGs.
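As a minimal sketch of how a reader might tell the stream types apart, the following compares the first eight bytes against the MNG and JNG signatures given in this chapter's tables and against the standard PNG signature. The function name `identify_stream` is an illustrative choice, not part of any library.

```python
# Signature bytes: MNG and JNG as listed in this chapter, PNG from the PNG spec.
MNG_SIGNATURE = bytes([138, 77, 78, 71, 13, 10, 26, 10])
JNG_SIGNATURE = bytes([139, 74, 78, 71, 13, 10, 26, 10])
PNG_SIGNATURE = bytes([137, 80, 78, 71, 13, 10, 26, 10])


def identify_stream(first_bytes: bytes) -> str:
    """Classify a stream by its first eight bytes (hypothetical helper)."""
    head = first_bytes[:8]
    if head == MNG_SIGNATURE:
        return "MNG"
    if head == PNG_SIGNATURE:
        return "PNG"
    if head == JNG_SIGNATURE:
        return "JNG"
    return "unknown"
```

Because a renamed PNG or JNG is a legal MNG, a decoder that opens a .mng file would want to check all three signatures rather than trusting the extension.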

With the exception of such renamed image formats, all MNG streams begin with the MNG signature and MHDR chunk, and they all end with the MEND chunk. The latter, like PNG's IEND, is an empty chunk that simply indicates the end of the stream. MHDR, however, contains seven fields, all unsigned 32-bit integers: frame width, frame height, ticks per second, number of layers, number of frames, total play time, and the complexity (or simplicity) profile.
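The seven MHDR fields can be read with a few lines of code. The sketch below assumes that MNG chunks use the same wrapper as PNG chunks (a 4-byte big-endian length, a 4-byte type, the data, and a CRC-32 over type and data), and that the fields appear in the order listed above. The names `MHDR`, `make_chunk`, and `parse_mhdr` are illustrative only.

```python
import struct
import zlib
from typing import NamedTuple


class MHDR(NamedTuple):
    frame_width: int
    frame_height: int
    ticks_per_second: int
    layer_count: int
    frame_count: int
    play_time: int
    profile: int


def make_chunk(chunk_type: bytes, data: bytes) -> bytes:
    """Wrap data in a PNG-style chunk: length, type, data, CRC."""
    crc = zlib.crc32(chunk_type + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + chunk_type + data + struct.pack(">I", crc)


def parse_mhdr(stream: bytes) -> MHDR:
    """Parse the MHDR chunk that follows the 8-byte signature."""
    length, chunk_type = struct.unpack(">I4s", stream[:8])
    if chunk_type != b"MHDR" or length != 28:
        raise ValueError("expected a 28-byte MHDR chunk")
    data = stream[8:8 + length]
    (crc,) = struct.unpack(">I", stream[8 + length:12 + length])
    if zlib.crc32(chunk_type + data) & 0xFFFFFFFF != crc:
        raise ValueError("MHDR CRC mismatch")
    # Seven unsigned 32-bit big-endian integers.
    return MHDR(*struct.unpack(">7I", data))
```

A caller would skip the signature first, for example `parse_mhdr(file_bytes[8:])`.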

Frame width and height are just what they sound like: they give the overall size of the displayable region in pixels. A MNG stream that contains no visible images--say, a collection of palettes--should have its frame dimensions set to zero.

The ticks-per-second value is essentially a scale factor for time-related fields in other chunks, including the frame rate. In the absence of any other timing information, animations are recommended to be displayed at a rate of one frame per tick. For single-frame MNGs, the ticks-per-second value is recommended to be 0, providing decoders with an easy way to detect non-animations. Conversely, if the value is 0 for a multiframe MNG, decoders are required to display only the first frame unless the user specifically intervenes in some way.

“Number of layers” refers to the total number of displayable images in the MNG stream, including the background. This may be many more than the number of frames, since a single frame often consists of multiple images composited (or layered) on top of one another. Some of the layers may be empty if they lie completely outside the clipping boundaries. The layer count is purely advisory; if it is 0, the count is considered unspecified. At the other end of the spectrum, a value of 2^31-1 (2,147,483,647) is considered infinite.

The frame-count and play-time values are also basically what they sound like: on an ideal computer (i.e., one with infinite processing speed), they respectively indicate the number of frames that correspond to distinct instants of time[93] and the overall duration of the complete animation. As with the layer count, these values are advisory; 0 and 2^31-1 correspond to “unspecified” and “infinite,” respectively.
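The two special values shared by the layer-count, frame-count, and play-time fields are easy to handle in one place. The helper below is a small sketch; its name and the strings it returns are illustrative choices.

```python
INFINITE = 2**31 - 1  # 2,147,483,647


def describe_advisory(value: int) -> str:
    """Interpret an advisory MHDR count (layers, frames, or play time)."""
    if value == 0:
        return "unspecified"
    if value == INFINITE:
        return "infinite"
    return str(value)
```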

Finally, MHDR's complexity profile provides some indication of the level of complexity in the stream, in order to allow simple decoders to give up immediately if the MNG file contains features they are unable to render. The profile field is also advisory; a value of zero is allowed and indicates that the complexity level is unspecified. But a nonzero value indicates that the encoder has provided information about the presence or absence of JPEG (JNG) chunks, transparency, or complex MNG features. The latter category includes most of the animation features mentioned earlier, including looping and object manipulation (i.e., sprites). All possible combinations of the three categories are encoded in the lower 4 bits of the field as odd values only--all even values other than zero are invalid, which means the lowest bit is always set if the profile contains any useful information. The remaining bits of the 2 lower bytes are reserved for public expansion, and all but the most significant bit of the 2 upper bytes are available for private or experimental use. The topmost bit must be zero.

Note that any unset (0) bit guarantees that the corresponding feature is not present or the MNG stream is invalid. A set bit, on the other hand, does not automatically guarantee that the feature is included, but encoders should be as accurate as possible to avoid causing simple decoders to reject MNGs unnecessarily.
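The validity rules for the profile field can be checked without knowing which bit stands for which feature. The sketch below encodes only the rules stated above (zero means unspecified, nonzero even values are invalid, and the topmost bit must be zero); the individual bit assignments are not given in this chapter, so the code does not try to decode them. The function name is hypothetical.

```python
def classify_profile(profile: int) -> str:
    """Classify an MHDR profile value as unspecified, specified, or invalid."""
    if not 0 <= profile <= 0xFFFFFFFF:
        raise ValueError("profile must fit in an unsigned 32-bit integer")
    if profile & 0x80000000:
        return "invalid"      # the topmost bit must be zero
    if profile == 0:
        return "unspecified"  # the encoder gave no complexity information
    if profile & 1 == 0:
        return "invalid"      # nonzero even values are not allowed
    return "specified"        # lowest bit set: the remaining bits are meaningful
```

A simple decoder would reject the stream on "invalid", and on "specified" would go on to test the bits for the features it cannot render.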

The stuff that goes between the MHDR and MEND chunks can be divided into a few basic categories:

- Image-defining chunks

- Image-displaying chunks

- Control chunks

- Ancillary (optional) chunks

Note the distinction between defining an image and displaying it. This will make sense in the context of a composite frame made up of many subimages. Alternatively, consider a sprite-based animation composed of several sprite “poses” that should be read into memory (i.e., defined) as part of the animation's initialization procedure. The sprite frames may not actually be used until much later, perhaps only in response to user input.

12.2.1. Image-Defining Chunks

The most direct way to define an image in MNG is simply to incorporate one. There are two possibilities for this in the current draft specification: a PNG image without the PNG signature, or the corresponding PNG-like JPEG format, JNG (JPEG Network Graphics). [94] Just as with standalone PNGs, an embedded PNG must contain at least IHDR, IDAT, and IEND chunks. It may also include PLTE, tRNS, bKGD, gAMA, cHRM, sRGB, tEXt, iTXt, and any of the other PNG chunks we've described. The PLTE chunk is allowed to be empty in an embedded PNG, which indicates that the global MNG PLTE data is to be used instead.

An embedded JNG stream is exactly analogous to the PNG stream: it begins with a JHDR chunk, includes one or more JDAT chunks containing the actual JPEG image data, and ends with an IEND chunk. Standalone JNGs are also allowed; they must include an 8-byte JNG signature before JHDR, with the format that's shown in Table 12-2.

Table 12-2. JNG Signature Bytes

| Decimal Value | ASCII Interpretation |
| --- | --- |
| 139 | A byte with its most significant bit set (“8-bit character”) |
| 74 | J |
| 78 | N |
| 71 | G |
| 13 | Carriage-return (CR) character, a.k.a. CTRL-M or ^M |
| 10 | Line-feed (LF) character, a.k.a. CTRL-J or ^J |
| 26 | CTRL-Z or ^Z |
| 10 | Line-feed (LF) character, a.k.a. CTRL-J or ^J |

JDATs simply contain JFIF-compatible JPEG data, which can be either baseline, extended sequential, or progressive--i.e., the same format used in practically every web site and commonly (but imprecisely) referred to as JPEG files. The requirements on the allowed JPEG types eliminate the less-common arithmetic and lossless JPEG variants, though the 12-bit grayscale and 36-bit color flavors are still allowed. [95] To decode the JPEG image, simply concatenate all of the JDAT data together and treat the whole as a normal JFIF-format file stream--typically, this involves feeding the data to the Independent JPEG Group's free libjpeg library.
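The concatenation step might look like the following sketch. It assumes the PNG-style chunk wrapper (length, type, data, CRC) and skips CRC verification for brevity; `iter_chunks`, `extract_jpeg`, and `make_chunk` are illustrative helpers, and the resulting bytes would then be handed to whatever JPEG decoder the application uses.

```python
import struct
import zlib


def make_chunk(chunk_type: bytes, data: bytes) -> bytes:
    """Build a PNG-style chunk (used here only to create sample input)."""
    crc = zlib.crc32(chunk_type + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + chunk_type + data + struct.pack(">I", crc)


def iter_chunks(stream: bytes):
    """Yield (type, data) pairs from a run of chunks; CRCs are not checked."""
    pos = 0
    while pos + 12 <= len(stream):
        length, chunk_type = struct.unpack(">I4s", stream[pos:pos + 8])
        yield chunk_type, stream[pos + 8:pos + 8 + length]
        pos += 12 + length  # 4 length + 4 type + data + 4 CRC


def extract_jpeg(jng_chunks: bytes) -> bytes:
    """Concatenate the data of every JDAT chunk, in stream order."""
    return b"".join(data for chunk_type, data in iter_chunks(jng_chunks)
                    if chunk_type == b"JDAT")
```

Any IDAT chunks interleaved with the JDATs are simply skipped here; a real decoder would collect them separately as the alpha channel.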

In order to accommodate an alpha channel, a JNG stream may also include one or more grayscale IDAT chunks. The JHDR chunk defines whether the image has an alpha channel or not, and if so, what its bit depth is. Unlike PNG, which restricts alpha channels to either 8 bits or 16 bits, a JNG alpha channel may be any legal PNG grayscale depth: 1, 2, 4, 8, or 16 bits. The IDATs composing the alpha channel may come before or after or be interleaved with the JDATs to allow progressive display of an alpha-JPEG image, but no other chunk types are allowed within the block of IDATs and JDATs.

Although incorporating complete JNGs or PNGs is conceptually the simplest approach to defining images in a MNG stream, it is generally not the most efficient way. MNG provides two basic alternatives that can be much better in many cases; the first of these is delta images. [96] A delta image is simply a difference image; combining it with its parent re-creates the original image, in much the same way that combining an “up”-filtered row of pixels with the previous row results in the original, unfiltered row. (Recall the discussion of compression filters in Chapter 9, “Compression and Filtering”.) The difference of two arbitrary images is likely to be at least as large as either parent image, but certain types of images may respond quite well to differencing. For example, consider a pair of prototype images for a web page, both containing the same background graphics and much of the same text, but differing in small, scattered regions. Since 90% of the image area is identical, the difference of the two will be 90% zeros, and therefore will compress much better than either of the original images will.
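The effect of differencing on compression can be demonstrated with a toy experiment. The sketch below uses flat byte strings in place of real images and fills them with seeded pseudorandom noise, which is an assumed worst case for the compressor; the child differs from the parent in one contiguous 10% block. The delta uses byte-wise addition modulo 256, and the helper names are hypothetical.

```python
import random
import zlib


def make_delta(parent: bytes, child: bytes) -> bytes:
    """Byte-wise difference, modulo 256."""
    return bytes((c - p) & 0xFF for p, c in zip(parent, child))


def apply_delta(parent: bytes, delta: bytes) -> bytes:
    """Re-create the child by adding the delta back to the parent."""
    return bytes((p + d) & 0xFF for p, d in zip(parent, delta))


rng = random.Random(12)  # fixed seed so the experiment is repeatable
parent = bytes(rng.randrange(256) for _ in range(10_000))
changed = bytearray(parent)
for i in range(4_000, 5_000):  # 10% of the "pixels" differ
    changed[i] = rng.randrange(256)
child = bytes(changed)

delta = make_delta(parent, child)
child_size = len(zlib.compress(child, 9))
delta_size = len(zlib.compress(delta, 9))
```

Since roughly 90% of `delta` is zeros, `delta_size` comes out far smaller than `child_size`, while `apply_delta(parent, delta)` still reproduces the child exactly.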

Currently, MNG allows delta images to be encoded only in PNG format, and it delimits them with the DHDR and IEND chunks. In addition to the delta options for pixels given in DHDR--whether the delta applies to the main image pixels or to the alpha channel, and whether applying the delta involves pixel addition or merely replacement of an entire block--MNG defines several chunks for modifying the parent image at a higher level. Among these are the PROM chunk, for promoting the bit depth or color type of an image, including adding an alpha channel to it; the DROP and DBYK chunks, for dropping certain chunks, either by name alone or by both name and keyword; and the PPLT chunk, for modifying the parent's palette (either PLTE or tRNS, or both). The latter could be used to animate the palette of an image, for example; cycling the colors is a popular option in some fractal programs. PPLT could also be used to fade out an image by adding an opaque tRNS chunk and then progressively changing the values of all entries until the image is fully transparent.

The second and more powerful alternative to defining an image by including its complete pixel data is object manipulation. In this mode, MNG basically treats images as little pieces of paper that can be copied and pasted at will. For example, a polka-dot image could be created from a single bitmap of a circle with a transparent background, which could be copied and pasted multiple times to create the complete, composite image. Alternatively, tileable images of a few basic pipe fittings and elbow joints could be pasted together in various orientations to create an image of a maze. The three chunks used for creating or destroying images in the object sense are CLON (“clone”), PAST (“paste”), and DISC (“discard”).

The CLON chunk is the only one necessary for the first example; it not only copies an image object in the abstract sense, but also gives it a position in the current frame--either as an absolute location or as an offset from the object that was copied. In order to change the orientation of objects, as in the maze example, the PAST chunk is required; as currently defined, it only supports 180° rotations and mirror operations around the x and y axes. (90° rotations were ruled out since they are rarely supported in hardware, and abstract images are intended to map to hardware and platform-specific APIs as closely as possible.) PAST also includes options to tile an object, and not only to replace the underlying pixel data but also to composite either over or under it, assuming either the object or underlying image includes transparency information. Once component objects are no longer needed--for example, in the maze image when the maze is completely drawn--the decoder can be instructed to discard them via the DISC chunk.

12.2.2. Chunks for Image Display, Manipulation, and Control

MNG includes nine chunks for manipulating and displaying image objects and for providing a kind of programmability of the decoder's operations. The most complex of these is the framing chunk, FRAM. It is used not only to delimit the chunks that form a single frame, but also to provide rendering information (including frame boundaries, where clipping occurs) and timing and synchronization information for subsequent frames. Included in FRAM's timing and synchronization information is a flag that allows the user to advance frames, which would be necessary in a slide show or business presentation that accompanies a live speaker.

The CLIP chunk provides an alternate and more precise method for specifying clipping boundaries. It can affect single objects or groups of objects, not just complete frames, and it can be given both as absolute pixel coordinates and in terms of a relative offset from a previous CLIP chunk. Images that are affected by a CLIP chunk will not be visible outside the clipping boundary, which allows for windowing effects.

The LOOP and ENDL chunks are possibly the most powerful of all MNG chunks. They provide one of the most fundamental programming functions, the ability to repeat one or more image-affecting actions many times. I mentioned earlier that 16million.mng, the MNG image with all possible 24-bit colors in it, makes use of a pair of loops; those loops are the principal reason the complete image can be stored in less than 500 bytes. Without the ability to repeat the same copy-and-paste commands by looping several thousand times, the MNG version would be at least three times the size of the original PNG (close to 1,000 times its actual size)--unless the PNG version were simply renamed with a .mng extension.

In addition to a simple iteration count, which can go as high as two billion, the LOOP chunk can provide either the decoder or the user discretionary control over terminating the loop early. It also allows for control via signals (not necessarily Unix-style signals) from an external program; for example, this capability might be invoked by a program that monitors an infrared port, thus enabling the user to control the MNG decoder via a standard television remote control.

Often used in conjunction with loops and clipping is the MOVE chunk, one of MNG's big advantages over animated GIFs. As one might expect, MOVE allows one or more already defined image objects to be moved, either to an absolute position or relative to the previous position of each object. Together with LOOP and ENDL, MOVE provides the basis for animating sprites. Thus, one might imagine a small Christmas MNG, where perhaps half a dozen poses of a single reindeer are cloned, positioned appropriately (with transparency for overlaps, of course!), and looped at slightly different rates in order to create the illusion of eight tiny reindeer galloping independently across the winter sky.[97]

Up until now, we've glossed over the issue of how or whether any given image is actually seen; the implication has been that any image that gets defined is visible, unless it lies outside the image frame or local clipping region. But an object-based format should have a way of effectively turning on and off objects, and that is precisely where the SHOW chunk comes in. It contains a list of images that are affected and a 1-byte flag indicating the “show mode.” The show-mode flag has two purposes: it can direct the decoder to modify the potential visibility of each object, and it can direct the decoder to display each object that is potentially visible. Note that I say potential visibility; any object outside the clipping region or frame or completely covered by another object will clearly not be visible regardless of whether it is “on.” Among the show modes SHOW supports is one that cycles through the images in the specified range, making one potentially visible and the rest not visible. This is the means by which a single sprite frame in a multipose animation--such as the reindeer example--is displayed and advanced.

In order to provide a suitably snowy background for our reindeer example, MNG provides the background chunk, BACK. As with PNG's bKGD chunk, BACK can specify a single color to be used as the background in the absence of any better candidates. But it also can point at an image object to be used as the background, either tiled or not. And either the background color or the background image (or both) may be flagged as mandatory, so that even if the decoder has its own default background, for example, in a web browser, it must use the contents of the BACK chunk. When both the background color and the background image are required, the image takes precedence; the color is used around the edges if the image is smaller than the frame and not tiled, or if it is tiled but clipped to a smaller region, and it is the “true” background with which the image is blended if it has transparency.

Finally, MNG provides a pair of housekeeping chunks, SAVE and SEEK. Together, they implement a one-entry stack similar to PostScript's gsave and grestore commands; they can be used to store the state of the MNG stream at a single point. Typically, this point would represent the end of a prologue section containing such basic information as gamma and chromaticity, the default background, any non-changeable images (the poses of our reindeer, for example), and so forth. Once the SAVE chunk appears--and only one is allowed--the prologue information is effectively frozen. Some of its chunks, such as gAMA, may be overridden by later chunks, but they will be restored as soon as a SEEK chunk is encountered. Any images in the prologue are fixed for the duration of the MNG stream, although one can always make a clone of any such image and move that instead.

The SEEK chunk is allowed to appear multiple times, and it is where the real power lies. As soon as a decoder encounters SEEK, it is allowed to throw out everything that appeared after the SAVE chunk, flush memory buffers, and so forth. If a MNG were structured as a long-form story, for example, the SEEK chunks might be used to delimit chapters or scenes--any props used for only one scene could be thrown away, thus reducing the memory burden on the decoder.
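The save-and-restore behavior can be modeled with a toy state object. This is only a sketch of the idea described above, not of any real decoder: the class, its methods, and the dictionary representation of settings and objects are all assumptions made for illustration.

```python
class ToyDecoderState:
    """Toy model of the one-entry SAVE/SEEK stack (illustrative only)."""

    def __init__(self):
        self.settings = {}   # e.g. {"gAMA": 0.45455}
        self.objects = {}    # object ID -> description of the image
        self._saved = None   # snapshot taken at the SAVE chunk

    def set(self, name, value):
        self.settings[name] = value

    def define(self, object_id, description):
        self.objects[object_id] = description

    def save(self):
        if self._saved is not None:
            raise ValueError("only one SAVE chunk is allowed")
        # Freeze the prologue: copy so later changes cannot alter the snapshot.
        self._saved = (dict(self.settings), dict(self.objects))

    def seek(self):
        if self._saved is None:
            raise ValueError("this toy model requires SAVE before SEEK")
        settings, objects = self._saved
        # Discard everything defined after SAVE and restore the prologue state.
        self.settings = dict(settings)
        self.objects = dict(objects)
```

In this model, a gamma value overridden after `save()` reverts at the next `seek()`, and any object defined in between (a prop used in only one scene, say) disappears.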

That summarizes the essential structure and capabilities of MNG. I've skipped over a few chunks, mostly ancillary ones, but the basic ideas have been covered. So let us now take a look at a few examples.

12.3. The Simplest MNG

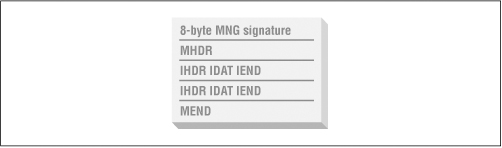

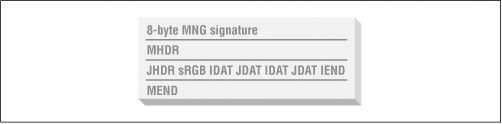

Arguably the absolute simplest MNG is just the simplest PNG (recall Chapter 8, “PNG Basics”), renamed with a .mng extension. Another truly simple one would be the empty MNG, composed only of MHDR, FRAM, and MEND chunks, which could be used as a spacer on web pages--it would generate a transparent frame with the dimensions specified in MHDR. But if we consider only nontrivial MNGs, the most basic one probably looks like Figure 12-1.

Figure 12-1: Layout of the simplest MNG.

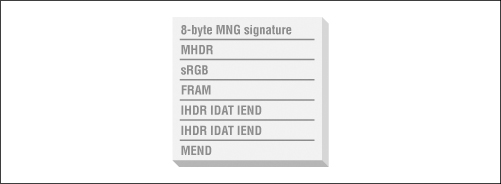

This is a very basic, two-image slide show, consisting of a pair of grayscale or truecolor PNG images (note the absence of PLTE chunks, so they cannot be colormapped images) and nothing else. In fact, the MNG stream is a little too basic; it contains no color space information, so the images will not display the same way on different platforms. It includes no explicit timing information, so the decoder will display the images at a rate of one frame per tick. At the minimum value of MHDR's ticks-per-second field, that translates to a duration of just one second for the first image and one or more seconds for the second image (in practice, probably indefinitely). There is no way to use this abbreviated method to define a duration longer than one second. To avoid those problems, sRGB and FRAM chunks could be added after MHDR; the latter would specify an interframe delay--say, five seconds' worth. Thus the simplest reasonable MNG looks like Figure 12-2.

Figure 12-2: Layout of the second simplest MNG.

Of course, sRGB should only be used if the images are actually in the standard RGB color space (see Chapter 10, “Gamma Correction and Precision Color”); if not, explicit gamma and chromaticity chunks can be used. Note that sRGB is only 13 bytes long, so its overhead is negligible.

12.4. An Animated MNG

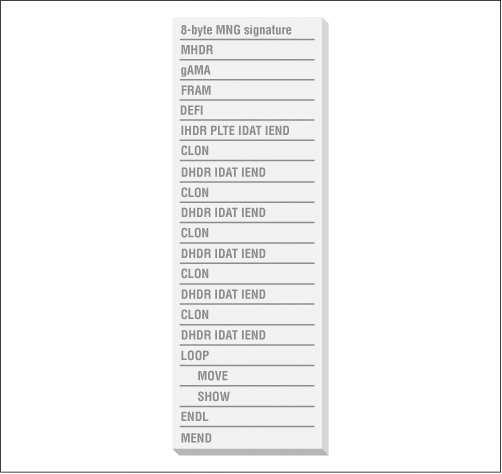

As a more complex example, let us take a closer look at how we might create the animated reindeer example I described earlier. I will assume that a single cycle of a reindeer's gallop can be represented by six poses (sprite frames), and I'll further assume that all but the first pose can be efficiently coded as delta-PNGs. The complete MNG of a single reindeer galloping across the screen might be structured as shown in Figure 12-3.

Figure 12-3: Layout of an animated MNG.

As always, we begin with MHDR, which defines the overall size of the image area. I've also included a gamma chunk so that the (nighttime) animation won't look too dark or too bright on other computer systems. The animation timing is set by the FRAM chunk, and then we begin loading sprite data for the six poses. The DEFI chunk (“define image”) is one I haven't discussed so far; it is included here to set the potential visibility of the first pose explicitly--in this case, we want the first pose to be visible. After the IHDR, PLTE, IDAT, and IEND chunks defining the first pose is a clone chunk, indicating that the second object (the second pose in the six-pose sequence) is to be created by copying the first object. The CLON chunk also indicates that the second object is not potentially visible yet. It is followed by the delta-PNG chunks that define the second image; we can imagine either that the IDAT represents a complete replacement for the pixels in the first image, with the delta part referring to the inheritance of the first image's palette chunk, or perhaps the second image is truly a pixel-level delta from the first image. Either way, the third through sixth images are defined similarly.

The heart of the animation is the loop at the end. In this case, I've included a MOVE chunk, which moves the animation objects to the left by a few pixels every iteration, and a SHOW chunk to advance the poses in sequence. If there are 600 iterations in the loop, the animation will progress through 100 six-pose cycles.
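The arithmetic of the loop is easy to verify with a toy simulation. The sketch below models only the two effects described above, a relative move and a pose advance per iteration; the step size, starting position, and function name are made-up values for illustration.

```python
NUM_POSES = 6  # six sprite frames per gallop cycle, as assumed in the text


def run_loop(iterations: int, dx: int, start_x: int):
    """Simulate a loop body of one relative MOVE and one cycling SHOW."""
    x = start_x
    pose = 0                 # the first pose is the one initially visible
    completed_cycles = 0
    for _ in range(iterations):
        x += dx                        # MOVE: shift relative to the old position
        pose = (pose + 1) % NUM_POSES  # SHOW: make the next pose visible
        if pose == 0:
            completed_cycles += 1
    return x, pose, completed_cycles


final_x, final_pose, cycles = run_loop(iterations=600, dx=-4, start_x=2400)
```

With 600 iterations, `cycles` is 100 and the sprite ends on its first pose again, matching the count given above.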

The complete eight-reindeer version would be very similar, but instead of defining full clones of the sprite frames, each remaining reindeer would be represented by partial clones of the six original poses. In effect, a partial clone is an empty object: it has its own object ID, visibility, and location, but it points at another object for its image data--in this case, at one of the six existing poses. So the seven remaining reindeer would be represented by 42 CLON chunks, of which seven would have the potential-visibility flag turned on. The loop would now include a total of eight SHOW chunks, each advancing one of the reindeer sprite's poses; a single MOVE chunk would still suffice to move all eight forward. Of course, this is still not quite the original design; this version has all eight reindeer galloping synchronously. To have them gallop at different rates would require separate FRAM chunks for each one. [98]

12.5. An Algorithmic MNG

Another good delta-PNG example, but one that creates only a single image algorithmically, is 16million.mng, which I mentioned once or twice already. Figure 12-4 shows its complete contents.

Figure 12-4: Layout of an algorithmic MNG.

The initial FRAM chunk defines the structure of the stream as a composite frame, and it is followed by a DEFI chunk that indicates the image is potentially visible. The IHDR...IEND sequence defines the first row of the image (512 pixels wide), with red changing every pixel and blue incrementing by one at the halfway point. Then the outer loop begins--we'll return to that in a moment--followed immediately by the inner loop of 255 iterations. The inner loop simply increments the green value of every pixel in the row and moves the modified line down one. The DHDR, IDAT, and IEND chunks represent this green increment; the delta pixels are simply a sequence of 512 “0 1 0” triples. As one might guess, they compress extremely well; the 1,536 data bytes are packed into a total of 20 zlib-compressed bytes, including six zlib header and trailer bytes.

The outer loop has the task of resetting the green values to 0 again (easily accomplished by incrementing them by one more, so they roll over from 255 to 0) and of incrementing the blue values by two--recall that the first block of rows had blue = 0 on the left side and blue = 1 on the right. Thus the delta-PNG data at the bottom of the outer loop consists of 512 “0 1 2” triples, which compress to 23 bytes. Because the blue increments by two, this loop only needs to iterate 128 times. It actually produces one extra row at the very end, but because this appears outside the frame boundary (as defined by the MHDR chunk), it is not visible.
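The loop structure can be checked with a short simulation that tracks only the per-row state rather than all 16.8 million pixels. The sketch below assumes a frame 32,768 rows tall (2^24 colors divided by 512 pixels per row) and represents each row by its green value and the blue value of its left half; all names are illustrative. It also compresses the two delta rows with zlib to show how small they become, without claiming to reproduce the exact byte counts quoted above.

```python
import zlib

WIDTH = 512           # pixels per row, as stated in the text
VISIBLE_ROWS = 32768  # assumed frame height: 2**24 colors / 512 per row


def simulate_rows():
    """Return the (green, left-half blue) state of every row the loops emit."""
    green, blue_left = 0, 0
    rows = [(green, blue_left)]             # first row, from IHDR...IEND
    for _ in range(128):                    # outer loop
        for _ in range(255):                # inner loop: delta "0 1 0"
            green = (green + 1) & 0xFF
            rows.append((green, blue_left))
        green = (green + 1) & 0xFF          # delta "0 1 2": green rolls over to 0
        blue_left = (blue_left + 2) & 0xFF  # and blue advances by two
        rows.append((green, blue_left))
    return rows


rows = simulate_rows()
visible = rows[:VISIBLE_ROWS]
# Each row holds red 0..255 twice: once with blue_left, once with blue_left + 1,
# so every distinct row state contributes 512 distinct colors.
distinct_colors = len(set(visible)) * WIDTH

inner_delta = bytes([0, 1, 0]) * WIDTH
outer_delta = bytes([0, 1, 2]) * WIDTH
inner_size = len(zlib.compress(inner_delta, 9))
outer_size = len(zlib.compress(outer_delta, 9))
```

The simulation emits 32,769 rows, one more than the frame holds, and the extra row repeats the state of the very first one.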

12.6. A JPEG Image with Transparency

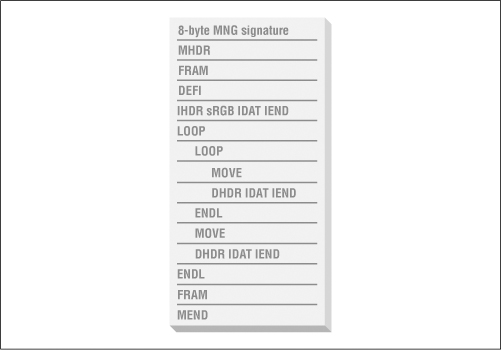

Finally, let's look at an example of a JPEG image with an interleaved alpha channel. The particular example shown in Figure 12-5 is still wrapped inside a MNG stream, but it could as easily exist standalone if the MHDR and MEND chunks were removed and the signature changed to the JNG signature.

Figure 12-5: Layout of an alpha-JNG MNG.

The JHDR chunk introduces the embedded JNG, defines its dimensions, and declares it to have an alpha channel. It is followed by an sRGB PNG chunk that indicates the image is in the International Color Consortium's standard RGB color space; decoders without access to a color management system should instead use the predetermined gamma and chromaticity values that approximate the sRGB color space (see Table 10-3).

The color-space chunk is followed by the IDAT chunks that define the image's alpha channel and the JDAT chunks that define its main (foreground) image. We've included a two-way interleave here in order to allow some possibility of progressive display, but in general one would want to interleave the IDATs and JDATs after perhaps every 16 or 32 rows--16 is a special number for JPEG decoders, and 16 or 32 rows is usually a reasonable amount to display at a time unless the image is quite skinny. On the other hand, keep in mind that each interleave (interleaf) adds an extra 24 bytes of IDAT/JDAT wrapper code; this overhead should be balanced against the desired smoothness of the progressive output.
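The trade-off can be put in numbers. The sketch below assumes the PNG-style wrapper of 12 bytes per chunk (4-byte length, 4-byte type, 4-byte CRC) and one IDAT plus one JDAT per interleaved segment, which gives the 24 bytes mentioned above; the function name and the sample image height are illustrative.

```python
import math

CHUNK_OVERHEAD = 12  # bytes: 4 (length) + 4 (type) + 4 (CRC)


def interleave_overhead(height: int, rows_per_segment: int) -> int:
    """Total wrapper bytes when each segment carries one IDAT and one JDAT."""
    segments = math.ceil(height / rows_per_segment)
    return segments * 2 * CHUNK_OVERHEAD


every_16_rows = interleave_overhead(480, 16)    # 30 segments
every_32_rows = interleave_overhead(480, 32)    # 15 segments
no_interleave = interleave_overhead(480, 480)   # a single IDAT/JDAT pair
```

For a 480-row image, interleaving every 16 rows costs 720 bytes of wrappers against 24 bytes with no interleaving, which is small next to a typical JPEG but not free for tiny images.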

Note that we've included an IDAT first. This may be a good idea since the decoder often will be able to start displaying the image before all of the JDAT arrives, and we've assumed that the alpha channel is simple enough that the PNG data compressed extremely well (i.e., the IDAT is smaller than the JDAT of the same region). If the reverse is true, the JDAT should come first so that the image can be displayed as each line of alpha channel arrives and is decoded.

Also note that, although I've referred to “progressive” display here, I am not necessarily referring either to progressive JPEG or to interlaced PNG. In fact, MNG prohibits interlaced PNG alpha channels in JNG streams, and progressive JPEG may not mix well even with noninterlaced alpha channels, depending on how the application is written. The reason is that the final value of any given pixel will not be known until the JPEG is almost completely transmitted, and “approximate rendering” of partially transparent pixels (that is, rendering before the final values are known) requires that the unmodified background image remain available until the end, so that the approximated pixels can be recomputed during the final pass. Of course, a sophisticated decoder could display such an image progressively anyway, but it would incur a substantially greater memory and computational overhead than would be necessary when displaying a nonprogressive JPEG interleaved with an alpha channel. Instead, most decoders are likely to wait for sections of the image (e.g., the first 32 rows) to be completely transmitted before displaying anything. If progressive JPEG data is interleaved with the alpha channel, then such decoders will end up waiting for practically the entire image to be transmitted before even starting to render, which defeats the purpose of both interleaved JNG and progressive JPEG.
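To see why the background must be kept, consider a single 8-bit sample that is about half transparent. The Python sketch below uses the ordinary alpha-blending formula; the function name and the sample values are invented purely for illustration.

```python
def composite(foreground, alpha, background):
    """Blend one 8-bit foreground sample over a background sample (alpha 0..255)."""
    return (foreground * alpha + background * (255 - alpha) + 127) // 255

background = 200
alpha = 128
approximate_fg = 90   # estimate from an early progressive-JPEG scan
final_fg = 100        # value once the JPEG has been fully decoded

# First pass: render the approximation over the background.
shown = composite(approximate_fg, alpha, background)

# Final pass done properly, using the saved, unmodified background.
correct = composite(final_fg, alpha, background)

# Final pass if the background was discarded and only the screen remains.
wrong = composite(final_fg, alpha, shown)
```

Here `correct` and `wrong` differ noticeably, because the second blend mixes the foreground into a pixel that already contains some of it. Avoiding that error means holding a copy of the background for the whole transfer, which is the memory cost described above.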

12.7. MNG Applications

As of April 1999, there were a total of six applications available that supported MNG in some form or another, with at least one or two more under development. The six available applications are listed below; four of them were new in 1998.

Viewpng

The original MNG application, Viewpng was Glenn Randers-Pehrson's test bed for PNG- and MNG-related features and modifications. It has not been actively developed since May 1997, and it runs only under IRIX on Silicon Graphics (SGI) workstations.

ftp://swrinde.nde.swri.edu/pub/mng/applications/sgi/

ImageMagick

This is a viewing and conversion toolkit for the X Window System; it runs under both Unix and VMS and has supported a minimal subset of MNG (MHDR, concatenated PNG images, MEND) since November 1997. In particular, it is capable of converting GIF animations to MNG and then back to GIF.

MNGeye

Probably the most complete MNG decoder yet written, MNGeye was written by Gerard Juyn starting in May 1998 and runs under 32-bit Windows. Its author has indicated a willingness to base a MNG reference library on the code in MNGeye.

pngcheck

A simple command-line program that can be compiled for almost any operating system, pngcheck simply prints the PNG chunk information in human-readable form and checks that it conforms to the specification. Partial MNG support was added by Greg Roelofs beginning in June 1998. Currently, the program does minimal checking of MNG streams, but it is still useful for listing MNG chunks and interpreting their contents.

PaintShopPro

PSP 5.0 uses MNG as the native format in its Animation Shop component, but it is not clear whether any MNG support is actually visible to the user. Paint Shop Pro runs under both 16-bit and 32-bit Windows.

XVidCap

This is a free X-based video-capture application for Unix; it captures a rectangular area of the screen at intervals and saves the images in various formats. Originally XVidCap supported the writing of individual PNG images, but as of its 1.0 release, it also supports writing MNG streams.

While support for MNG is undeniably still quite sparse, it is nevertheless encouraging that a handful of applications already provide support for what has been, in effect, a moving target. Once MNG settles down (plans were to freeze the spec by May 1999) and is approved as a specification, and once some form of free MNG programming library is available to ease the burden on application developers, broader support can be expected.

New programs will be listed on the MNG applications page, http://www.libpng.org/pub/mng/mngapps.html.

12.8. The Future?

MNG's development has not been the same success story that PNG's was, primarily due to a lesser interest in and need for a new animation format. Especially with the advent of the World Wide Web, people from many different walks of life have direct experience with ordinary images, and, in particular, they are increasingly aware of various limitations in formats such as GIF and JPEG. All of this worked (and continues to work) in PNG's favor. But when it comes to multi-image formats and animation, not only do these same people have much less experience, what need they do have for animation is largely met by the animated GIF format that Netscape made so popular. Animated GIFs may not be the answer to all of the world's web problems, but they're good enough 99% of the time. All of this, of course, works against MNG.

In addition, MNG is decidedly complex; objects may be modified by other objects, loops may be nested arbitrarily deeply, and so on. While it is debatable whether MNG is too complex--certainly there are some who feel it is--even its principal author freely admits that fully implementing the current draft specification is a considerable amount of work.

On the positive side, animated GIFs often can be rewritten as MNG animations in a tiny fraction of the file size, and there are no patent-fee barriers to implementing MNG in applications. Moreover, the Multiple-image Network Graphics format is making progress, both as a mature specification and as a supported format in real applications, and versions released since March 1999 now include implementor-friendly subsets known as MNG-LC and MNG-VLC (for Low Complexity and Very Low Complexity, respectively). Its future looks good.

[91] A file format encapsulating both data and a display method? Egad, it's object-oriented!

[92] Actually making sense of the MNG stream would require considerably more work, of course.

[93] MNG's concept of frames and subframes allows one to speak of two or more distinct frames with precisely zero delay between them, but these are considered just one frame for the purpose of counting the total number of frames in the stream.

[94] OK, that's a stretch, acronym-wise. But it's pronounceable, rhymes with PNG and MNG, and has a file extension, .jng, that differs by only one letter from .jpg, .png, and .mng.

[95] MNG optionally allows 12-bit-per-sample JPEG image data to follow the far more common 8-bit flavor, giving decoders the freedom to choose whichever is most appropriate. If both are included, it is signalled in JHDR by a bit-depth value of 20 instead of 8 or 12, and the 8-bit and 12-bit JDATs are separated by a special JSEP chunk. The 8-bit data must come first. Note that current versions of libjpeg can only be compiled to handle either 8-bit or 12-bit JPEG data, not both simultaneously.

[96] Named for the Greek letter delta (Δ or δ), which is often used in science and engineering to denote differences.

[97] Add a few more poses of a waving fat guy in a sleigh, and you'll swear you hear sleigh bells ringing and chestnuts roasting on an open fire.

[98] Note that Rudolph could be encoded as a set of six tiny delta-PNGs relative to the six original poses. Of course, to get that realistic Rudolph glow would require a semitransparent reddish region around his olfactory appendage, which necessarily involves either an alpha channel or a full tRNS chunk. But now we're talking True Art, and no sacrifice is too great.
