Chapter 1. ECMAScript and the Future of JavaScript

JavaScript has gone from being a 1995 marketing ploy to gain a tactical advantage to becoming the core programming experience in the world’s most widely used application runtime platform in 2017. The language doesn’t merely run in browsers anymore, but is also used to create desktop and mobile applications, in hardware devices, and even in space suit design at NASA.

How did JavaScript get here, and where is it going next?

1.1 A Brief History of JavaScript Standards

Back in 1995, Netscape envisioned a dynamic web beyond what HTML could offer. Brendan Eich was initially brought into Netscape to develop a language that was functionally akin to Scheme, but for the browser. Once he joined, he learned that upper management wanted it to look like Java, and a deal to that effect was already underway.

Brendan created the first JavaScript prototype in 10 days, taking Scheme’s first-class functions and Self’s prototypes as its main ingredients. The initial version of JavaScript was code-named Mocha. It didn’t have array or object literals, and every error resulted in an alert. The lack of exception handling is why, to this day, many operations result in NaN or undefined. Brendan’s work on DOM level 0 and the first edition of JavaScript set the stage for standards work.

This revision of JavaScript was marketed as LiveScript when it started shipping with a beta release of Netscape Navigator 2.0, in September 1995. It was rebranded as JavaScript (trademarked by Sun, now owned by Oracle) when Navigator 2.0 beta 3 was released in December 1995. Soon after this release, Netscape introduced a server-side JavaScript implementation for scripting in Netscape Enterprise Server, and named it LiveWire.1 JScript, Microsoft’s reverse-engineered implementation of JavaScript, was bundled with IE3 in 1996. JScript was available for Internet Information Server (IIS) in the server side.

The language started being standardized under the ECMAScript name (ES) into the ECMA-262 specification in 1996, under a technical committee at ECMA known as TC39. Sun wouldn’t transfer ownership of the JavaScript trademark to ECMA, and while Microsoft offered JScript, other member companies didn’t want to use that name, so ECMAScript stuck.

Disputes by competing implementations, JavaScript by Netscape and JScript by Microsoft, dominated most of the TC39 standards committee meetings at the time. Even so, the committee was already bearing fruit: backward compatibility was established as a golden rule, bringing about strict equality operators (=== and !==) instead of breaking existing programs that relied on the loose Equality Comparison Algorithm.

The first edition of ECMA-262 was released June 1997. A year later, in June 1998, the specification was refined under the ISO/IEC 16262 international standard, after much scrutiny from national ISO bodies, and formalized as the second edition.

By December 1999 the third edition was published, standardizing regular expressions, the switch statement, do/while, try/catch, and Object#hasOwnProperty, among a few other changes. Most of these features were already available in the wild through Netscape’s JavaScript runtime, SpiderMonkey.

Drafts for an ES4 specification were soon afterwards published by TC39. This early work on ES4 led to JScript.NET in mid-20002 and, eventually, to ActionScript 3 for Flash in 2006.3

Conflicting opinions on how JavaScript was to move forward brought work on the specification to a standstill. This was a delicate time for web standards: Microsoft had all but monopolized the web and they had little interest in standards development.

As AOL laid off 50 Netscape employees in 2003,4 the Mozilla Foundation was formed. With over 95% of web-browsing market share now in the hands of Microsoft, TC39 was disbanded.

It took two years until Brendan, now at Mozilla, had ECMA resurrect work on TC39 by using Firefox’s growing market share as leverage to get Microsoft back in the fold. By mid-2005, TC39 started meeting regularly once again. As for ES4, there were plans for introducing a module system, classes, iterators, generators, destructuring, type annotations, proper tail calls, algebraic typing, and an assortment of other features. Due to how ambitious the project was, work on ES4 was repeatedly delayed.

By 2007 the committee was split in two: ES3.1, which hailed a more incremental approach to ES3; and ES4, which was overdesigned and underspecified. It wouldn’t be until August 20085 when ES3.1 was agreed upon as the way forward, but later rebranded as ES5. Although ES4 would be abandoned, many of its features eventually made its way into ES6 (which was dubbed Harmony at the time of this resolution), while some of them still remain under consideration and a few others have been abandoned, rejected, or withdrawn. The ES3.1 update served as the foundation on top of which the ES4 specification could be laid in bits and pieces.

In December 2009, on the 10-year anniversary since the publication of ES3, the fifth edition of ECMAScript was published. This edition codified de facto extensions to the language specification that had become common among browser implementations, adding get and set accessors, functional improvements to the Array prototype, reflection and introspection, as well as native support for JSON parsing and strict mode.

A couple of years later, in June 2011, the specification was once again reviewed and edited to become the third edition of the international standard ISO/IEC 16262:2011, and formalized under ECMAScript 5.1.

It took TC39 another four years to formalize ECMAScript 6, in June 2015. The sixth edition is the largest update to the language that made its way into publication, implementing many of the ES4 proposals that were deferred as part of the Harmony resolution. Throughout this book, we’ll be exploring ES6 in depth.

In parallel with the ES6 effort, in 2012 the WHATWG (a standards body interested in pushing the web forward) set out to document the differences between ES5.1 and browser implementations, in terms of compatibility and interoperability requirements. The task force standardized String#substr, which was previously unspecified; unified several methods for wrapping strings in HTML tags, which were inconsistent across browsers; and documented Object.prototype properties like __proto__ and __defineGetter__, among other improvements.6 This effort was condensed into a separate Web ECMAScript specification, which eventually made its way into Annex B in 2015. Annex B was an informative section of the core ECMAScript specification, meaning implementations weren’t required to follow its suggestions. Jointly with this update, Annex B was also made normative and required for web browsers.

The sixth edition is a significant milestone in the history of JavaScript. Besides the dozens of new features, ES6 marks a key inflection point where ECMAScript would become a rolling standard.

1.2 ECMAScript as a Rolling Standard

Having spent 10 years without observing significant change to the language specification after ES3, and 4 years for ES6 to materialize, it was clear the TC39 process needed to improve. The revision process used to be deadline-driven. Any delay in arriving at consensus would cause long wait periods between revisions, which led to feature creep, causing more delays. Minor revisions were delayed by large additions to the specification, and large additions faced pressure to finalize so that the revision would be pushed through, avoiding further delays.

Since ES6 came out, TC39 has streamlined7 its proposal revisioning process and adjusted it to meet modern expectations: the need to iterate more often and consistently, and to democratize specification development. At this point, TC39 moved from an ancient Word-based flow to using Ecmarkup (an HTML superset used to format ECMAScript specifications) and GitHub pull requests, greatly increasing the number of proposals8 being created as well as external participation by nonmembers. The new flow is continuous and thus, more transparent: while previously you’d have to download a Word doc or its PDF version from a web page, the latest draft of the specification is now always available.

Firefox, Chrome, Edge, Safari, and Node.js all offer over 95% compliance of the ES6 specification,9 but we’ve been able to use the features as they came out in each of these browsers rather than having to wait until the flip of a switch when their implementation of ES6 was 100% finalized.

The new process involves four different maturity stages.10 The more mature a proposal is, the more likely it is to eventually make it into the specification.

Any discussion, idea, or proposal for a change or addition that has not yet been submitted as a formal proposal is considered to be an aspirational “strawman” proposal (stage 0), but only TC39 members can create strawman proposals. At the time of this writing, there are over a dozen active strawman proposals.11

At stage 1 a proposal is formalized and expected to address cross-cutting concerns, interactions with other proposals, and implementation concerns. Proposals at this stage should identify a discrete problem and offer a concrete solution to the problem. A stage 1 proposal often includes a high-level API description, illustrative usage examples, and a discussion of internal semantics and algorithms. Stage 1 proposals are likely to change significantly as they make their way through the process.

Proposals in stage 2 offer an initial draft of the specification. At this point, it’s reasonable to begin experimenting with actual implementations in runtimes. The implementation could come in the form of a polyfill, user code that mangles the runtime into adhering to the proposal; an engine implementation, natively providing support for the proposal; or compiled into something existing engines can execute, using build-time tools to transform source code.

Proposals in stage 3 are candidate recommendations. In order for a proposal to advance to this stage, the specification editor and designated reviewers must have signed off on the final specification. Implementors should’ve expressed interest in the proposal as well. In practice, proposals move to this level with at least one browser implementation, a high-fidelity polyfill, or when supported by a build-time compiler like Babel. A stage 3 proposal is unlikely to change beyond fixes to issues identified in the wild.

In order for a proposal to attain stage 4 status, two independent implementations need to pass acceptance tests. Proposals that make their way through to stage 4 will be included in the next revision of ECMAScript.

New releases of the specification are expected to be published every year from now on. To accommodate the yearly release schedule, versions will now be referred to by their publication year. Thus ES6 becomes ES2015, then we have ES2016 instead of ES7, ES2017, and so on. Colloquially, ES2015 hasn’t taken and is still largely regarded as ES6. ES2016 had been announced before the naming convention changed, thus it is sometimes still referred to as ES7. When we leave out ES6 due to its pervasiveness in the community, we end up with: ES6, ES2016, ES2017, ES2018, and so on.

The streamlined proposal process combined with the yearly cut into standardization translates into a more consistent publication process, and it also means specification revision numbers are becoming less important. The focus is now on proposal stages, and we can expect references to specific revisions of the ECMAScript standard to become more uncommon.

1.3 Browser Support and Complementary Tooling

A stage 3 candidate recommendation proposal is most likely to make it into the specification in the next cut, provided two independent implementations land in JavaScript engines. Effectively, stage 3 proposals are considered safe to use in real-world applications, be it through an experimental engine implementation, a polyfill, or using a compiler. Stage 2 and earlier proposals are also used in the wild by JavaScript developers, tightening the feedback loop between implementors and consumers.

Babel and similar compilers that take code as input and produce output native to the web platform (HTML, CSS, or JavaScript) are often referred to as transpilers, which are considered to be a subset of compilers. When we want to leverage a proposal that’s not widely implemented in JavaScript engines in our code, compilers like Babel can transform the portions of code using that new proposal into something that’s more widely supported by existing JavaScript implementations.

This transformation can be done at build time, so that consumers receive code that’s well supported by their JavaScript runtime of choice. This mechanism improves the runtime support baseline, giving JavaScript developers the ability to take advantage of new language features and syntax sooner. It is also significantly beneficial to specification writers and implementors, as it allows them to collect feedback regarding viability, desirability, and possible bugs or corner cases.

A transpiler can take the ES6 source code we write and produce ES5 code that browsers can interpret more consistently. This is the most reliable way of running ES6 code in production today: using a build step to produce ES5 code that most old browsers, as well as modern browsers, can execute.

The same applies to ES7 and beyond. As new versions of the language specification are released every year, we can expect compilers to support ES2017 input, ES2018 input, and so on. Similarly, as browser support becomes better, we can also expect compilers to reduce complexity in favor of ES6 output, then ES7 output, and so on. In this sense, we can think of JavaScript-to-JavaScript transpilers as a moving window that takes code written using the latest available language semantics and produces the most modern code they can output without compromising browser support.

Let’s talk about how you can use Babel as part of your workflow.

1.3.1 Introduction to the Babel Transpiler

Babel can compile modern JavaScript code that relies on ES6 features into ES5. It produces human-readable code, making it more welcoming when we don’t have a firm grasp on all of the new features we’re using.

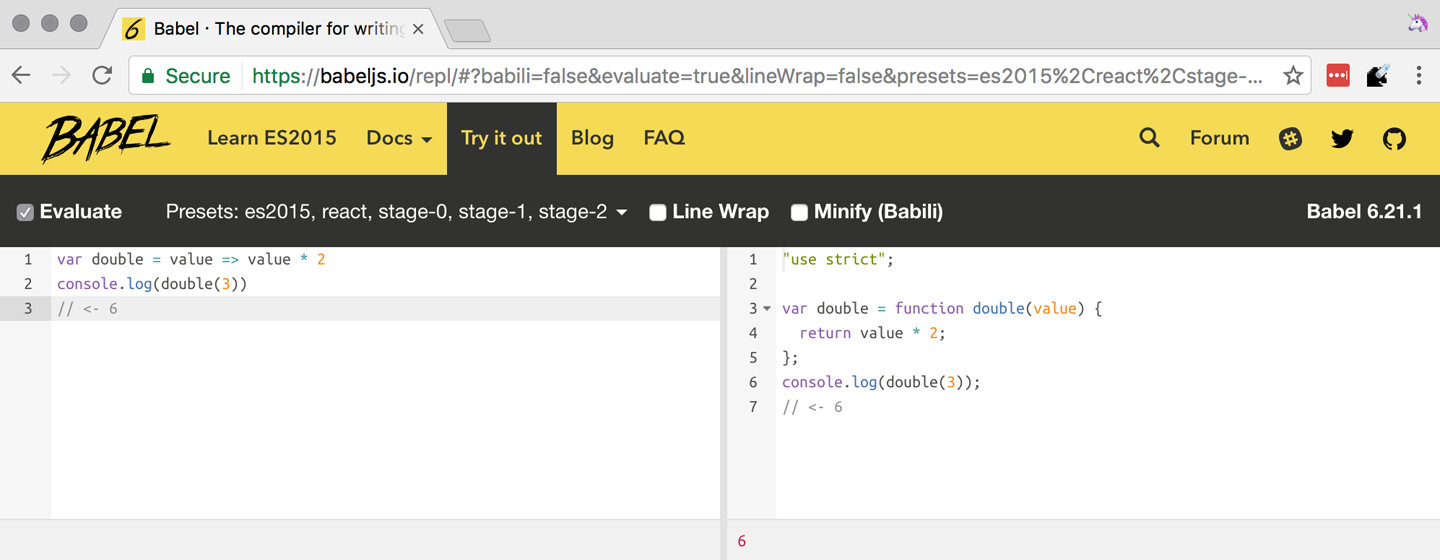

The online Babel REPL (Read-Evaluate-Print Loop) is an excellent way of jumping right into learning ES6, without any of the hassle of installing Node.js and the babel CLI, and manually compiling source code.

The REPL provides us with a source code input area that gets automatically compiled in real time. We can see the compiled code to the right of our source code.

Let’s write some code into the REPL. You can use the following code snippet to get started:

vardouble=value=>value*2console.log(double(3))// <- 6

To the right of the source code we’ve entered, you’ll see the transpiled ES5 equivalent, as shown in Figure 1-1. As you update your source code, the transpiled result is also updated in real time.

Figure 1-1. The online Babel REPL in action—a great way to dive right into an interactive ES6 session

The Babel REPL is an effective companion as a way of trying out some of the features introduced in this book. However, note that Babel doesn’t transpile new built-ins, such as Symbol, Proxy, and WeakMap. Those references are instead left untouched, and it’s up to the runtime executing the Babel output to provide those built-ins. If we want to support runtimes that haven’t yet implemented these built-ins, we could import the babel-polyfill package in our code.

In older versions of JavaScript, semantically correct implementations of these features are hard to accomplish or downright impossible. Polyfills may mitigate the problem, but they often can’t cover all use cases and thus some compromises need to be made. We need to be careful and test our assumptions before we release transpiled code that relies on built-ins or polyfills into the wild.

Given the situation, it might be best to wait until browsers support new built-ins holistically before we start using them. It is suggested that you consider alternative solutions that don’t rely on built-ins. At the same time, it’s important to learn about these features, as to not fall behind in our understanding of the JavaScript language.

Modern browsers like Chrome, Firefox, and Edge now support a large portion of ES2015 and beyond, making their developer tools useful when we want to take the semantics of a particular feature for a spin, provided it’s supported by the browser. When it comes to production-grade applications that rely on modern JavaScript features, a transpilation build-step is advisable so that your application supports a wider array of JavaScript runtimes.

Besides the REPL, Babel offers a command-line tool written as a Node.js package. You can install it through npm, the package manager for Node.

Note

Download Node.js. After installing node, you’ll also be able to use the npm command-line tool in your terminal.

Before getting started we’ll make a project directory and a package.json file, which is a manifest used to describe Node.js applications. We can create the package.json file through the npm CLI:

mkdir babel-setup

cd babel-setup

npm init --yes

Note

Passing the --yes flag to the init command configures package.json using the default values provided by npm, instead of asking us any questions.

Let’s also create a file named example.js, containing the following bits of ES6 code. Save it to the babel-setup directory you’ve just created, under a src subdirectory:

vardouble=value=>value*2console.log(double(3))// <- 6

To install Babel, enter the following couple of commands into your favorite terminal:

npm install babel-cli@6 --save-dev npm install babel-preset-env@6 --save-dev

Note

Packages installed by npm will be placed in a node_modules directory at the project root. We can then access these packages by creating npm scripts or by using require statements in our application.

Using the --save-dev flag will add these packages to our package.json manifest as development dependencies, so that when copying our project to new environments we can reinstall every dependency just by running npm install.

The @ notation indicates we want to install a specific version of a package. Using @6 we’re telling npm to install the latest version of babel-cli in the 6.x range. This preference is handy to future-proof our applications, as it would never install 7.0.0 or later versions, which might contain breaking changes that could not have been foreseen at the time of this writing.

For the next step, we’ll replace the value of the scripts property in package.json with the following. The babel command-line utility provided by babel-cli can take the entire contents of our src directory, compile them into the desired output format, and save the results to a dist directory, while preserving the original directory structure under a different root:

{"scripts":{"build":"babel src --out-dir dist"}}

Together with the packages we’ve installed in the previous step, a minimal package.json file could look like the code in the following snippet:

{"scripts":{"build":"babel src --out-dir dist"},"devDependencies":{"babel-cli":"^6.24.0","babel-preset-env":"^1.2.1"}}

Note

Any commands enumerated in the scripts object can be executed through npm run <name>, which temporarily modifies the $PATH environment variable so that we can run the command-line executables found in babel-cli without installing babel-cli globally on our system.

If you execute npm run build in your terminal now, you’ll note that a dist/example.js file is created. The output file will be identical to our original file, because Babel doesn’t make assumptions, and we have to configure it first. Create a .babelrc file next to package.json, and write the following JSON in it:

{"presets":["env"]}

The env preset, which we installed earlier via npm, adds a series of plugins to Babel that transform different bits of ES6 code into ES5. Among other things, this preset transforms arrow functions like the one in our example.js file into ES5 code. The env Babel preset works by convention, enabling Babel transformation plugins according to feature support in the latest browsers. This preset is configurable, meaning we can decide how far back we want to cover browser support. The more browsers we support, the larger our transpiled bundle. The fewer browsers we support, the fewer customers we can satisfy. As always, research is of the essence to identify what the correct configuration for the Babel env preset is. By default, every transform is enabled, providing broad runtime support.

Once we run our build script again, we’ll observe that the output is now valid ES5 code:

» npm run build » cat dist/example.js"use strict"vardouble=functiondouble(value){returnvalue * 2}console.log(double(3))// <- 6

Let’s jump into a different kind of tool, the eslint code linter, which can help us establish a code quality baseline for our applications.

1.3.2 Code Quality and Consistency with ESLint

As we develop a codebase we factor out snippets that are redundant or no longer useful, write new pieces of code, delete features that are no longer relevant or necessary, and shift chunks of code around while accommodating a new architecture. As the codebase grows, the team working on it changes as well: at first it may be a handful of people or even one person, but as the project grows in size so might the team.

A lint tool can be used to identify syntax errors. Modern linters are often customizable, helping establish a coding style convention that works for everyone on the team. By adhering to a consistent set of style rules and a quality baseline, we bring the team closer together in terms of coding style. Every team member has different opinions about coding styles, but those opinions can be condensed into style rules once we put a linter in place and agree upon a configuration.

Beyond ensuring a program can be parsed, we might want to prevent throw statements throwing string literals as exceptions, or disallow console.log and debugger statements in production code. However, a rule demanding that every function call must have exactly one argument is probably too harsh.

While linters are effective at defining and enforcing a coding style, we should be careful when devising a set of rules. If the lint step is too stringent, developers may become frustrated to the point where productivity is affected. If the lint step is too lenient, it may not yield a consistent coding style across our codebase.

In order to strike the right balance, we may consider avoiding style rules that don’t improve our programs in the majority of cases when they’re applied. Whenever we’re considering a new rule, we should ask ourselves whether it would noticeably improve our existing codebase, as well as new code going forward.

ESLint is a modern linter that packs several plugins, sporting different rules, allowing us to pick and choose which ones we want to enforce. We decide whether failing to stick by these rules should result in a warning being printed as part of the output, or a halting error. To install eslint, we’ll use npm just like we did with babel in the previous section:

npm install eslint@3 --save-dev

Next, we need to configure ESLint. Since we installed eslint as a local dependency, we’ll find its command-line tool in node_modules/.bin. Executing the following command will guide us through configuring ESLint for our project for the first time. To get started, indicate you want to use a popular style guide and choose Standard,12 then pick JSON format for the configuration file:

./node_modules/.bin/eslint --init ? How would you like to configure ESLint? Use a popular style guide ? Which style guidedoyou want to follow? Standard ? What formatdoyou want your config file to be in? JSON

Besides individual rules, eslint allows us to extend predefined sets of rules, which are packaged up as Node.js modules. This is useful when sharing configuration across multiple projects, and even across a community. After picking Standard, we’ll notice that ESLint adds a few dependencies to package.json, namely the packages that define the predefined Standard ruleset; and then creates a configuration file, named .eslintrc.json, with the following contents:

{"extends":"standard","plugins":["standard","promise"]}

Referencing the node_modules/.bin directory, an implementation detail of how npm works, is far from ideal. While we used it when initializing our ESLint configuration, we shouldn’t keep this reference around nor type it out whenever we lint our codebase. To solve this problem, we’ll add the lint script in the next code snippet to our package.json:

{"scripts":{"lint":"eslint ."}}

As you might recall from the Babel example, npm run adds node_modules to the PATH when executing scripts. To lint our codebase, we can execute npm run lint and npm will find the ESLint CLI embedded deep in the node_modules directory.

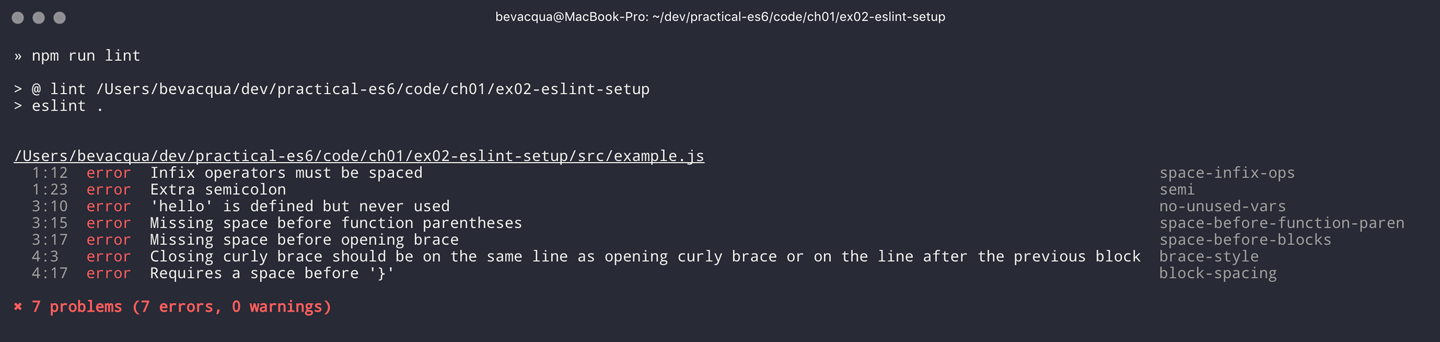

Let’s consider the following example.js file, which is purposely riddled with style issues, to demonstrate what ESLint does:

vargoodbye='Goodbye!'functionhello(){returngoodbye}if(false){}

When we run the lint script, ESLint describes everything that’s wrong with the file, as shown in Figure 1-2.

Figure 1-2. The ESLint tool is a great way to keep your code free of syntax errors and, optionally, inconsistent coding style

ESLint is able to fix most style problems automatically if we pass in a --fix flag. Add the following script to your package.json:

{"scripts":{"lint-fix":"eslint . --fix"}}

When we run lint-fix we’ll only get a pair of errors: hello is never used and false is a constant condition. Every other error has been fixed in place, resulting in the following bit of source code. The remaining errors weren’t fixed because ESLint avoids making assumptions about our code, and prefers not to incur semantic changes. In doing so, --fix becomes a useful tool to resolve code style wrinkles without risking a broken program as a result.

vargoodbye='Goodbye!'functionhello(){returngoodbye}if(false){}

Note

A similar kind of tool can be found in prettier, which can be used to automatically format your code. Prettier can be configured to automatically overwrite our code ensuring it follows preferences such as a given amount of spaces for indentation, single or double quotes, trailing commas, or a maximum line length.

Now that you know how to compile modern JavaScript into something every browser understands, and how to properly lint and format your code, let’s jump into ES6 feature themes and the future of JavaScript.

1.4 Feature Themes in ES6

ES6 is big: the language specification went from 258 pages in ES5.1 to over double that amount in ES6, at 566 pages. Each change to the specification falls in some of a few different categories:

-

Syntactic sugar

-

New mechanics

-

Better semantics

-

More built-ins and methods

-

Nonbreaking solutions to existing limitations

Syntactic sugar is one of the most significant drivers in ES6. The new version offers a shorter way of expressing object inheritance, using the new class syntax; functions, using a shorthand syntax known as arrow functions; and properties, using property value shorthands. Several other features we’ll explore, such as destructuring, rest, and spread, also offer semantically sound ways of writing programs. Chapters 2 and 3 attack these aspects of ES6.

We get several new mechanics to describe asynchronous code flows in ES6: promises, which represent the eventual result of an operation; iterators, which represent a sequence of values; and generators, a special kind of iterator that can produce a sequence of values. In ES2017, async/await builds on top of these new concepts and constructs, letting us write asynchronous routines that appear synchronous. We’ll evaluate all of these iteration and flow control mechanisms in Chapter 4.

There’s a common practice in JavaScript where developers use plain objects to create hash maps with arbitrary string keys. This can lead to vulnerabilities if we’re not careful and let user input end up defining those keys. ES6 introduces a few different native built-ins to manage sets and maps, which don’t have the limitation of using string keys exclusively. These collections are explored in Chapter 9.

Proxy objects redefine what can be done through JavaScript reflection. Proxy objects are similar to proxies in other contexts, such as web traffic routing. They can intercept any interaction with a JavaScript object such as defining, deleting, or accessing a property. Given the mechanics of how proxies work, they are impossible to polyfill holistically: polyfills exist, but they have limitations making them incompatible with the specification in some use cases. We’ll devote Chapter 6 to understanding proxies.

Besides new built-ins, ES6 comes with several updates to Number, Math, Array, and strings. In Chapter 7 we’ll go over a plethora of new instance and static methods added to these built-ins.

We are getting a new module system that’s native to JavaScript. After going over the CommonJS module format that’s used in Node.js, Chapter 8 explains the semantics we can expect from native JavaScript modules.

Due to the sheer amount of changes introduced by ES6, it’s hard to reconcile its new features with our pre-existing knowledge of JavaScript. We’ll spend all of Chapter 9 analyzing the merits and importance of different individual features in ES6, so that you have a practical grounding upon which you can start experimenting with ES6 right away.

1.5 Future of JavaScript

The JavaScript language has evolved from its humble beginnings in 1995 to the formidable language it is today. While ES6 is a great step forward, it’s not the finish line. Given we can expect new specification updates every year, it’s important to learn how to stay up-to-date with the specification.

Having gone over the rolling standard specification development process in Section 1.2: ECMAScript as a Rolling Standard, one of the best ways to keep up with the standard is by periodically visiting the TC39 proposals repository.13 Keep an eye on candidate recommendations (stage 3), which are likely to make their way into the specification.

Describing an ever-evolving language in a book can be challenging, given the rolling nature of the standards process. An effective way of keeping up-to-date with the latest JavaScript updates is by watching the TC39 proposals repository, subscribing to weekly email newsletters14, and reading JavaScript blogs.15

At the time of this writing, the long awaited Async Functions proposal has made it into the specification and is slated for publication in ES2017. There are several candidates at the moment, such as dynamic import(), which enables asynchronous loading of native JavaScript modules, and a proposal to describe object property enumerations using the new rest and spread syntax that was first introduced for parameter lists and arrays in ES6.

While the primary focus in this book is on ES6, we’ll also learn about important candidate recommendations such as the aforementioned async functions, dynamic import() calls, or object rest/spread, among others.

1 A booklet from 1998 explains the intricacies of server-side JavaScript with LiveWire.

2 You can read the original announcement at the Microsoft website (July, 2000).

3 Listen to Brendan Eich in the JavaScript Jabber podcast, talking about the origin of JavaScript.

4 You can read a news report from The Mac Observer, July 2003.

5 Brendan Eich sent an email to the es-discuss mailing list in 2008 where he summarized the situation, almost 10 years after ES3 had been released.

6 For the full set of changes made when merging the Web ECMAScript specification upstream, see the WHATWG blog.

7 Check out the presentation “Post-ES6 Spec Process” from September 2013 that led to the streamlined proposal revisioning process here.

8 Check out all of the proposals being considered by TC39.

9 Check out this detailed table reporting ES6 compatibility across browsers.

10 Take a look at the TC39 proposal process documentation.

11 You can track strawman proposals.

12 Note that Standard is just a self-proclamation, and not actually standardized in any official capacity. It doesn’t really matter which style guide you follow as long as you follow it consistently. Consistency helps reduce confusion while reading a project’s codebase. The Airbnb style guide is also fairly popular and it doesn’t omit semicolons by default, unlike Standard.

13 Check out all of the proposals being considered by TC39.

14 There are many newsletters, including Pony Foo Weekly and JavaScript Weekly.

15 Many of the articles on Pony Foo and by Axel Rauschmayer focus on ECMAScript development.

Get Practical Modern JavaScript now with the O’Reilly learning platform.

O’Reilly members experience books, live events, courses curated by job role, and more from O’Reilly and nearly 200 top publishers.