Chapter 1. Introduction to Voice Interfaces and the IoT

Bell Labs engineer Homer Dudley invented the first speech synthesis machine, called the “Voder,” in 1937, in the early days of the scientific-technical revolution. Building on his 1928 work on the vocoder (voice encoder), Dudley put together gas tubes, a foot pedal, 10 piano-like keys, and various other components designed to work in harmony to produce human speech from synthesized sound. Then in 1962, at the Seattle World’s Fair, IBM introduced the first speech recognition machine, the “IBM Shoebox,” which understood a whopping 16 spoken English words.

Despite the vast experience of these great institutions, the evolution of synthesized speech and recognition would be slow and steady. In addition to advancements in other technological areas, it would take another 49 years for voice to be widely adopted and accepted. Before Apple’s Siri came into the market in 2011, the general population was fed up with voice interfaces, having to deal with spotty voice “options” where we would end up dialing “0” for an operator on calls made to a bank or insurance company.

Both voice interfaces (VI), historically referred to as voice user interfaces (VUI), and the Internet of Things (IoT) have been around for many years now in various shapes and sizes. Advances in machine learning (ML), artificial intelligence (AI), and other areas of cognitive computing, together with the rapid decline in hardware costs and the extreme miniaturization of components, have all led to this point. Nowadays, developers can easily integrate voice into their own devices with very little understanding of natural language processing (NLP), natural language understanding (NLU), speech-to-text (STT), text-to-speech (TTS), and all of the other acronyms that make up today’s voice-enabled world.

In this book, we bring to light some of the modern implementations of both technological marvels, VI and IoT, as we explore the multitude of integration options out in the wild today. Lastly, we’ll attempt to explain how we as makers and developers can bring them together in a single functional product that you can continue to build long after you’ve finished reading these pages.

We will attempt to limit general theories, design patterns, and high-level overviews of voice interfaces and IoT to Chapters 1 and 2, while focusing on actual hands-on development and implementation in the subsequent chapters. With this in mind, feel free to jump to Chapter 3 to start coding or continue reading here for a primer on voice interfaces and the Internet of Things—we’ll look at how they complement each other and most importantly, some design principles to help you create pleasant, non-annoying conversational voice experiences.

Welcome to a NUI World

You’ve heard the news and read the articles: technology is evolving and expanding at such an exponential rate that it’s just a matter of time before machines, humans, and the world around us are completely in sync. Soon, ubiquitous conversational interfaces will be all around us, from appliances and office buildings to vehicles and public spaces. The physical structures themselves will be able to recognize who you are, hold conversations and carry out commands, understand basic human needs, and react autonomously on our behalf—all while we use our natural human senses such as sight, sound, touch, and voice as well as the many additional human traits such as walking, waving, and even thinking to connect with this new, natural user interface (NUI)-enabled smart world.

NUI essentially refers to interfaces that are natural in human-to-human communications versus the artificial means of human-to-machine interfacing such as using a keyboard or mouse. An early example of a NUI is the touchscreen, which allowed users to control graphical user interfaces (GUIs) with the touch of their finger. With the introduction of devices such as the Amazon Echo or Google Home, we’ve essentially added a natural interface, such as voice, to our everyday lives. Apply Emotiv’s Insight EEG headset and you can control your PC with your thoughts. And with a MYO armband, you can control the world around you with a mere wave of your hand. As Arthur C. Clarke declared, “Any sufficiently advanced technology is indistinguishable from magic”—and we are surely headed in that direction. Hopefully one day we will have figured out how the wand chooses the wizard.

As futurists, we dream of all the wonderful technologies that will be available in the years and decades to come. As engineers and makers, we wonder what it will take to get there. While NUIs consist of many interfaces wrapped in a multitude of technologies and sensors, this book will focus primarily on our natural ability to speak, listen, and verbally communicate with one another and machines.

In most cases, our voice is probably one of the most efficient methods of communication; it requires very little effort and energy on our part as compared to hand gestures or typing on a touchscreen. I say in most cases, because as with any technology, you want to make sure you are using the right tools for the right job. For instance, it may be great to walk into your bathroom and say “turn on the lights” but in reality, the lights should just turn on automatically as you walk in the bathroom by way of motion sensors.

Always keep the user experience of your product in mind as you’re designing it. You might want to “voice all the things” for the fun of it or because it seems really cool, but if you want to make a viable product, the voice integration will need to make sense. While VI has a long road toward ubiquity, we are getting closer each day to a point where communicating with machines will be as efficient and commonplace as interacting with our friends and family.

Voice All the Things!

Back in 2010 when Allie Brosh published “This Is Why I’ll Never Be an Adult” on her blog “Hyperbole and a Half,” she had no idea that her meme “Clean all the things” (Figure 1-1) would be a viral sensation whose popularity has continued even seven years later in 2017. For coders, sayings like “JavaScript all the things” and “Hack all the things” are now more relevant than ever in the growing universe of the Internet of Things.

Figure 1-1. Viral meme from Allie Brosh’s blog post “This Is Why I’ll Never Be an Adult”

While we of course aren’t going to actually “Drink all the beers” or “JavaScript all the things,” this meme phenomenon does suggest that we should think about how X affects all the Y. In the case of voice interfaces, let’s think about how voice can tie into the everyday objects in our lives. Let’s fail fast and rule out scenarios that are too impractical to move forward with: for example, adding voice to a toilet. Wait, is that really all that strange? Maybe for a viable product it is, but if you find it intriguing then by all means, add a voice interface to your toilet, if for nothing else, the entertainment value.

When adding voice capability to inanimate objects, it is important to consider how many devices within a small proximity will have voice enablement, how many of them share the same wake words, and so on. Think of it this way: imagine being in a room with a dozen voice-enabled devices, then you ask for the time and you get a response from ten different voices. How annoying would that be on a daily basis? In that scenario, ideally there would be one main voice interface that interacts with many different devices.

In thinking about today’s consumer voice landscape, the everyday voice experiences include Siri, OK Google, Cortana, and Alexa. Luckily, these all have their respective wake words; therefore, having all these in the same room at the same time shouldn’t be an issue unless you say something like “Hey Siri, search OK Google for Alexa.” This is about as far out as edge cases go, but it will become increasingly annoying as more voice interfaces are introduced into the market and the potential grows for devices to clash with one another instead of communicating. Amazon and Microsoft are trying to thwart this calamity with a newly minted deal in which each company will make its AI persona available on the other’s platform.

Additionally, users will sometimes mix up the wake words for their various devices—for example, imagine someone pressing the button on their iPhone and saying “Hey Alexa.” One of the biggest issues with voice is discoverability. Alexa has over 15,000 skills and it’s very difficult to find out what is available. Even when you do find a relevant skill, it can be difficult to remember the commands. This is why most people tend to go with a single voice interface versus having to deal with remembering multiple wake words and commands.

If you are creating a brand-new device with voice capabilities, there are a ton of things you need to think about to ensure that it offers a pleasant experience. For starters, the wake word. Do you include a wake word, or will you choose not to? If you include it, what should it be? Do you build it from scratch or do you leverage an existing voice framework? These design considerations require significant planning, whether you’re building a product for market or even just a new voice interface that you’d like to integrate into your own home or office.

If you want to learn more about the user experience design side of voice interfaces, check out Laura Klein’s “Design for Voice Interfaces: Building Products that Talk”. In her report she dives into the various dos and don’ts, nuances, and differences between working with VUI versus GUI and multimodal interfaces. Cathy Pearl’s book, Designing Voice User Interfaces, is another great guide with tons of examples and expert advice to help with all of your design considerations.

This book will cover some existing voice frameworks and APIs that can be leveraged in your own internet-enabled device. We will explore Amazon’s Alexa Skills Kit (ASK) and Alexa Voice Service (AVS) as well as Microsoft’s native Speech APIs and its cloud-based solution called Cognitive Services (formerly Project Oxford). Additionally, we will quickly touch on a few other voice frameworks, including Web Speech API, Watson, and Google Speech API.

To gain a high-level understanding of how these APIs operate, we must first grasp the underlying concepts and technologies that power them. With this in mind let’s quickly dive into some areas specifically around NLP, STT, TTS, and other acronyms that make it possible to program voice interfaces into our IoT devices today.

What Is NLP?

Natural language processing (NLP) uses computational linguistics and machine learning to parse text-based human language (i.e., English, Spanish, etc.) and process the phrases for specific events. In other words, it can be used to initiate commands, teach machines to find specific patterns, and so much more. NLP is primarily used in chatbots (both text-based and voice-enabled versions) as well as other applications that aid in machine learning, artificial intelligence, and human–computer interfaces (HCIs) designed for a wide array of use cases.

The biggest challenge facing NLP and AI engineers today is natural language understanding (NLU): for example, getting the algorithms to distinguish a metaphor from a literal statement. Part of the problem is understanding context, discourse, relationships, pronoun resolution, word-sense disambiguation (WSD), and a whole slew of extremely complex computational challenges that are being tackled each and every day. Speech recognition itself has advanced considerably: in 2016, Microsoft Research’s automatic speech recognition (ASR) algorithms matched and in some cases outperformed their human counterparts with an impressive error rate of just 5.9%, according to Microsoft’s Chief Speech Scientist Xuedong Huang. Compare that to everyday humans attempting to understand one another, with an error rate of 4% to a whopping 12%, depending on who you ask.

While the concepts behind NLP and NLU are important to comprehend, we will not delve into the details here; however, you will want to make sure that NLP is a key component or feature offered by at least one of the service APIs we will be plugging into our IoT devices. The two top components that complement NLP and allow us as humans to speak and listen to machines are speech-to-text (STT) and text-to-speech (TTS). STT and TTS are crucial components for providing your users with speech recognition and synthesis. We’ll take a look at each of these in the following sections.

Speech-to-Text (STT)

While NLP can be used with or without actual voice input, when used with voice you would essentially be bringing in STT to parse audible words to text. While this is a required feature for converting human spoken language to text, some services might not have this available. For example, if you want to plug into one of the many chatbots available today, you might need to leverage an STT API on another service or framework to first translate the spoken words into text-based strings that you can then post to the chatbot API to get your desired end result.

STT is also known as speech recognition (SR) or automatic speech recognition (ASR).1 Nuance offers a great example of an STT/ASR service; it is used by Siri to convert speech to plain text, which is then processed further by the Siri NLP/NLU layers to understand the user’s intents.

Google’s Cloud Speech API, which converts audio to text and recognizes over 80 languages using advanced neural network models, is another excellent example of a straightforward STT service. The API sends back plain text that you can then route to one or many chatbots or NLP-based services. While it may be easier to integrate with a single full-service voice API, keep this separation of concerns (SoC) in mind in order to maintain flexibility in your devices’ service integration architecture.

Here’s a partial example of what a nonstreaming audio HTTP POST request to the Google Cloud Speech API might look like:

{
    'config': {
        'encoding': 'LINEAR16',
        'sampleRate': 16000,
        'languageCode': 'en-US'
    },
    'audio': {
        'content': speech_content.decode('UTF-8')  # base64-encoded audio
    }
}
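As a minimal sketch of how such a body could be assembled, the following Python uses only the standard library to base64-encode raw audio bytes and serialize the payload. The function name build_recognize_body and the placeholder bytes are illustrative assumptions; the field names are copied from the example above and may differ between API versions, and the endpoint and authentication are deliberately left out.

```python
import base64
import json


def build_recognize_body(audio_bytes, sample_rate=16000, language_code="en-US"):
    """Return a JSON string shaped like the example request above.

    Hypothetical helper: the field names mirror the example in the text
    and are not guaranteed to match every version of the API.
    """
    # The audio travels as base64 text rather than raw bytes.
    speech_content = base64.b64encode(audio_bytes)
    body = {
        "config": {
            "encoding": "LINEAR16",
            "sampleRate": sample_rate,
            "languageCode": language_code,
        },
        "audio": {
            "content": speech_content.decode("UTF-8"),
        },
    }
    return json.dumps(body)


if __name__ == "__main__":
    # Placeholder bytes stand in for real 16-bit PCM audio.
    print(build_recognize_body(b"\x00\x01\x02\x03"))
```

Keeping the payload construction in its own function makes it easier to swap in a different STT service later, in keeping with the separation of concerns mentioned earlier.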

Text-to-Speech (TTS)

TTS is the reverse of STT: with text-to-speech, text is synthesized into artificial human speech. Similar to STT, TTS might not come bundled with an NLP service or even an STT/ASR service. If you will be using voice for input only (e.g., to speak to a PC and have it display the results rather than verbally respond), then TTS is not necessarily required.

If you simply need TTS there are some standalone services out there today that you can leverage in your projects. For instance, with IVONA (now Amazon Polly), you can post simple HTTP requests with the text you want synthesized and you will receive an HTTP response with an audio stream that you can play back to end users. This is the same TTS service that enables the amazingly smooth voice of Amazon Alexa.

Here’s a partial example of what an unsigned HTTP POST request might look like:2

POST /CreateSpeech HTTP/1.1
Host: tts.eu-west-1.ivonacloud.com
Content-type: application/json
X-Amz-Date: 20130913T092054Z
Content-Length: 32

{"Input":{"Data":"Hello world"}}

As you can see in this example, the phrase “Hello world” has been requested to be synthesized. The response would include an HTTP stream of the audible “Hello world” phrase. Since no additional parameters were set, the defaults for encoding, language, voice, and other variables will be used to synthesize the speech—for example, MP3 encoding in US English and so on. With this in mind, you can get up and running quickly with the default settings, then evolve the voice output by adjusting the settings as you continue to develop your voice interface.
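To make the shape of that request a little more tangible, here is a rough Python sketch that assembles the same unsigned request as a string. The function name build_create_speech_request is our own invention, and the sketch skips the X-Amz-Date header and any request signing, so treat it as an illustration of the wire format rather than a working client.

```python
import json


def build_create_speech_request(text, host="tts.eu-west-1.ivonacloud.com"):
    """Build an unsigned HTTP request string like the example above.

    Hypothetical helper for illustration only; a real request would
    also need to be dated and signed.
    """
    # Compact separators reproduce the body exactly as shown in the text.
    body = json.dumps({"Input": {"Data": text}}, separators=(",", ":"))
    headers = [
        "POST /CreateSpeech HTTP/1.1",
        "Host: " + host,
        "Content-type: application/json",
        # Content-Length counts bytes, so encode before measuring.
        "Content-Length: " + str(len(body.encode("utf-8"))),
    ]
    # A blank line separates the headers from the body.
    return "\r\n".join(headers) + "\r\n\r\n" + body


if __name__ == "__main__":
    print(build_create_speech_request("Hello world"))
```

For the phrase “Hello world” the computed length is 32 bytes, which matches the Content-Length header in the example request.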

PLS, SSML, and Other Acronyms

Aside from NLP, NLU, STT, TTS, ML, and AI, you will hear or read about Pronunciation Lexicon Specification (PLS), Speech Synthesis Markup Language (SSML), Speech Recognition Grammar Specification (SRGS), and a hodgepodge of alphabet soup in this acronym-filled field. Volumes of literature have been written for each of these individually, outlining their various technical approaches and high-level methodologies. Here we will focus on some examples of SSML, SRGS, and other meta and/or markup type of specifications as these are also applicable to developers looking to incorporate existing and flexible voice interfaces into their own IoT devices.

In addition to all these wonderful acronyms, we will also learn about related terminology and paradigms such as utterances, slots, domains, context, agents, entities, intents, and conversational interfaces.

To get us started we’ll briefly define some of the voice-related terminology you will encounter throughout the following chapters.

Utterances

An utterance is a spoken word or phrase. In some services you will see many references to the word “utterances”—for instance, in Amazon’s ASK, you are required to include some “sample utterances” in plain-text form, prefixed with their related intent, as in Example 1-1.

Example 1-1. Sample utterances in the Amazon Alexa Skills Kit

ToggleLightIntent turn {OnOff} lights

ToggleLightIntent turn lights {OnOff}

OrderPizzaIntent order a {Product}

OrderPizzaIntent order a {Size} {Product}

...

Slots and entities

Slots are the variables and arguments of the NLP world. In Example 1-1, the tokens shown in curly braces (i.e., {OnOff}, {Product}, and {Size}) are all examples of custom slots. In this book, you will read specifically about Amazon Alexa’s custom slot types, custom slot values, and built-in slot types. In some cases, we will reference other services such as Wit.ai or API.AI, which refer to slot types as “entities.” Entities are essentially the type of variable; for instance, the Size slot can be a custom slot type that supports any number of string values such as large, medium, or small. When choosing a service, make sure it supports both a robust built-in set of slots or entities and custom slots that you design for your own use cases.
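One way to get a feel for slots is to expand a sample utterance by hand. The Python sketch below is a toy of our own (it is not part of the Alexa Skills Kit): it substitutes every combination of slot values into a template such as “order a {Size} {Product}.” The Size values come from the text above, while the Product values are made up for illustration.

```python
import itertools
import re

# Matches slot tokens written in curly braces, such as {Size}.
SLOT_PATTERN = re.compile(r"\{(\w+)\}")


def expand_utterance(template, slot_values):
    """Yield every concrete phrase that a slot template can produce."""
    slot_names = SLOT_PATTERN.findall(template)
    choices = [slot_values[name] for name in slot_names]
    for combo in itertools.product(*choices):
        values = iter(combo)
        # Replace each slot token, left to right, with the next value.
        yield SLOT_PATTERN.sub(lambda match: next(values), template)


if __name__ == "__main__":
    # Hypothetical slot values; Product values are assumptions.
    slots = {
        "Size": ["large", "medium", "small"],
        "Product": ["pizza", "calzone"],
    }
    for phrase in expand_utterance("order a {Size} {Product}", slots):
        print(phrase)
```

Three sizes and two products yield six phrases from a single template, which is the economy that slots are meant to provide.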

Skills

Skills are somewhat similar to apps—in other words, with Amazon Alexa, you don’t necessarily create apps in the traditional graphical sense; instead you create skills that add functionality to the Alexa ecosystem. There are a number of built-in skills like weather, music, and so on. Currently, the Alexa Skills Kit (ASK), the SDK of sorts for Amazon Alexa, includes various types of skills, including Custom Interaction Model, Smart Home Skill, Flash Briefing Skill, and List Skill.

Wake word

The wake word is used to alert the device to begin processing commands. This is an alternative to using a button or other means of activating a voice interface. For example, with the Amazon Tap, you tap a button and Alexa begins to listen. With the Amazon Echo, you say the word “Alexa” and Alexa will begin to listen. A device that uses a wake word is constantly listening, but will ignore any commands it hears before the wake word is spoken. Once the device hears the wake word, it will take all subsequent utterances and process them accordingly. For example, in the case of “Alexa, what’s the weather?” the word “Alexa” is the wake word. If a user walks up to an Echo and says “What’s the weather?” nothing will happen because the wake word was not invoked.

Wake words are processed on the device itself so there isn’t a constant stream from millions of devices being sent to the cloud. From a privacy perspective, understanding how the technology works is important in trusting that your device isn’t spying on you.

Wake words are a great way to prevent events from firing off randomly. In some cases, however, you might want something that’s constantly listening for specific phrases like “we should meet Monday to discuss this opportunity further,” at which point a list of available times would appear on a screen, or a digital assistant would simply respond with “you are both available at 2 p.m., would you like me to schedule the meeting for you?” and a user could simply respond yes or no.

With this in mind, it’s important to strongly consider the use of wake words, especially if you have decided on an API that requires it.
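The gating behavior described in this section can be illustrated with a few lines of Python. Keep in mind that this is a deliberately simplified sketch: real wake word detection runs on audio on the device, whereas this hypothetical extract_command function works on text that has already been transcribed.

```python
def extract_command(transcript, wake_word="alexa"):
    """Return the command that follows the wake word, or None to ignore it.

    Toy illustration only: it assumes the speech has already been
    transcribed to text, which is not how on-device detection works.
    """
    words = transcript.strip().split()
    if not words:
        return None
    # Compare the first word without punctuation or capitalization.
    first = words[0].strip(",.!?").lower()
    if first != wake_word:
        return None
    command = " ".join(words[1:])
    return command or None


if __name__ == "__main__":
    print(extract_command("Alexa, what's the weather?"))
    print(extract_command("What's the weather?"))
```

The first call returns the command text, while the second returns None because the wake word was never spoken.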

Intents

An intent is essentially what the user is requesting with a command. For example, when a user asks for the weather, the intent is to obtain weather information; sample utterances such as “What’s the weather?” can be mapped to an intent named GetWeatherIntent. Then in your code, instead of trying to decipher the myriad ways a person can ask for the weather, you simply take the intent name the NLP service sends back to you and process it accordingly. In other words, it’s a lot easier to work with GetWeatherIntent than it is to work with “What’s the weather?” “What’s the temperature?” or “What’s the weather in Chicago?”; similarly, phrases such as “Will it rain today?” or “Will it rain tomorrow?” could map to GetRainIntent so that you can provide a different response.
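In code, working with intent names often boils down to a lookup table of handlers. The following Python sketch shows one possible arrangement; the handler functions, the Day slot, and the response strings are all hypothetical, and only the intent names GetWeatherIntent and GetRainIntent come from the discussion above.

```python
def get_weather(slots):
    """Hypothetical handler for GetWeatherIntent."""
    location = slots.get("Location", "your area")
    return "Here is the weather for " + location + "."


def get_rain(slots):
    """Hypothetical handler for GetRainIntent."""
    day = slots.get("Day", "today")
    return "Here is the rain forecast for " + day + "."


# Maps the intent name sent back by the NLP service to a handler.
INTENT_HANDLERS = {
    "GetWeatherIntent": get_weather,
    "GetRainIntent": get_rain,
}


def handle_request(intent_name, slots=None):
    """Route an intent name to its handler and return the response text."""
    handler = INTENT_HANDLERS.get(intent_name)
    if handler is None:
        return "Sorry, I didn't understand that."
    return handler(slots or {})


if __name__ == "__main__":
    print(handle_request("GetWeatherIntent", {"Location": "Chicago"}))
    print(handle_request("GetRainIntent", {"Day": "tomorrow"}))
```

Notice that the code never sees the raw phrase; it only reacts to the intent name and slot values, which is exactly why intents are easier to work with than free-form utterances.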

Conversational

“Alexa, what’s the weather?” Alexa responds to this verbal command with the weather report, then the conversation is over. This is great for a single command but if you want a greater conversational experience, then you will need to map out your conversational model carefully. We will dive deeper into conversational models throughout this book, but for now keep in mind that conversational models (sometimes referred to as conversational UI) help elevate the user experience by providing a deeper level of conversation versus single voice commands. For instance, something as simple as following up the weather response with a “thank you,” then having your conversational interface respond with “you’re welcome,” goes a long way in elevating the user experience.

Experience Design

We touched a bit on user experience earlier in the chapter. Let’s dive a bit deeper now into how to ensure your product offers your users a pleasant experience, even if you’re the only user. Surely the reason you are interested in building a voice-enabled device is to make life easier, right? Or do you think it’s just cool or will make you a million bucks? In any case, nobody will use the device if it’s not user-friendly, so let’s make sure we ask the right questions and think about the right solutions.

With this in mind, and for the purpose of this book, we’ll follow a design-thinking approach to the experience design of our device. Design thinking is a human-centered design process used not only for designs but in all areas of innovation. As we go through this exercise, consider the five stages of design thinking: empathize, define, ideate, prototype, and test. We begin with the empathize stage, where we try to put ourselves in the shoes of the users and really try to get a feel for their lifestyle, environment, and other aspects of their lives where a voice-enabled device can be beneficial.

We then define the core problem discovered during the empathize stage, ideate on possible solutions using a variety of brainstorming techniques, prototype it, and finally test it. This is an extremely high-level explanation, so if you would like to learn more about design thinking, there are a number of great courses available on Lynda.com and Stanford University’s d.school as well as numerous online reference materials that will help you get a greater understanding of design thinking.

In addition to the design-thinking process, there are some aspects of voice design that we will need to consider, starting with purpose. If your product has no purpose, then what’s the point, right? Second, we will need to consider when to use one-off commands versus conversational dialogues. Designing conversational flow diagrams can help you ideate and think through how the conversations will flow with your voice-enabled device. We’ll also dive a bit deeper into design concepts for utterances and inflections, when to use SSML, and visual cues and other design considerations.

Purpose

What’s the purpose of life? No, don’t worry, we’re not going down that philosophical rabbit hole! However, we do need to expand on that question and think about the purpose of your device’s life. What problem does it solve? This is the empathize stage, during which you get a feel for the user’s needs and concerns; even if that user is just you, think about how you would feel if a voice-enabled device was introduced into your environment. Think about that device being in all aspects of your life: sitting in your kitchen, laundry room, car, office, garage, pool side—wherever you are right now!

Instead of simply asking “What can I voice-ify?” another way to approach this is to think about problems people you know are having or suffering from. For example, people with dementia may have memory issues that cause them to forget their grandkids’ names. Rather than feeling embarrassed by asking a spouse, they can ask a voice system, with no judgment. Here’s another example to consider: because many traffic incidents today are caused by distracted driving, you could perhaps research what drivers want to be able to do the most in a car and see if voice can help.

Write down all the different ways you can think of for using voice no matter how crazy it sounds. Once you have a comprehensive list, score your ideas using whatever rating system you prefer. If you want to score based on frequency of use, for example, go through each idea and give it a score from 1 to 10 with 1 being the lowest use, potentially as low as once a month, and 10 being the highest use, meaning you would use it more, potentially every day. Go ahead and synthesize all those possibilities into one core problem with the highest score, then run with it!

One-Off Versus Conversational

When designing for voice, you can say things like, “Turn off the lights” and the lights turn off, and a voice responds with “OK” or “Done.” This is considered a one-off or transactional command. One-offs are great for these types of interactions. Another good example is asking for the weather. In some cases, you would say “What’s the weather?” and if contextual awareness or user preferences are available, it will simply respond with the weather in that area. If a location cannot be determined, here’s where conversational comes into play. Your voice service can respond with “For which city?” and the conversation can continue from there.

One-off commands are much easier to implement since in a nutshell, you simply receive a request and provide a response. Conversational is a bit more complex in nature, as your skill or bot needs to remember what it just asked the user. We do this by storing the state of the conversation, specifically the previous intent data. For instance, using our weather example, if a user asks for the weather, we would essentially receive a GetWeatherIntent payload from the NLP service where we would look for the Location slot or entity value. If no location is found, we store the GetWeatherIntent payload in a state store.

The conversational state can be stored in any database, in memory or on disk. We recommend a NoSQL solution where you can simply save the data model as JSON as is. Then you would simply provide the response “For which city?” back to the user. Once the user responds and the subsequent request comes in for that specific user, we would get a payload that could be called LocationIntent, at which point we would look up the previous request to determine why the user gave us a location to work with.

This is just one example of how conversational works. Alexa Skills Kit and some other services have a session object that contains additional information about the session or conversational state that you can also use to store custom parameters such as previous intent name. This helps tremendously when dealing with conversational session state. If all this seems complicated right now, don’t worry; we will walk through all the code for this and more in the subsequent chapters.
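Before we get to the real code in later chapters, the weather conversation described above can be sketched in a few lines of Python. Everything here is a simplifying assumption: the payload shape (a dictionary with name and slots keys), the in-memory STATE_STORE dictionary standing in for a NoSQL database, and the response strings.

```python
import json

# Hypothetical in-memory state store keyed by user ID; a NoSQL database
# could hold the same JSON documents.
STATE_STORE = {}


def handle_intent(user_id, intent):
    """Process one intent payload and return the spoken response."""
    name = intent["name"]
    slots = intent.get("slots", {})

    if name == "GetWeatherIntent":
        location = slots.get("Location")
        if location is None:
            # Remember what was asked so the follow-up makes sense.
            STATE_STORE[user_id] = json.dumps(intent)
            return "For which city?"
        return "Here is the weather for " + location + "."

    if name == "LocationIntent":
        pending = STATE_STORE.pop(user_id, None)
        location = slots.get("Location")
        if pending is None or location is None:
            return "Sorry, I'm not sure what you need a location for."
        # Look at the previous request to see why a location was given.
        previous = json.loads(pending)
        if previous["name"] == "GetWeatherIntent":
            return "Here is the weather for " + location + "."

    return "Sorry, I didn't understand that."


if __name__ == "__main__":
    print(handle_intent("user-1", {"name": "GetWeatherIntent", "slots": {}}))
    print(handle_intent("user-1", {"name": "LocationIntent",
                                   "slots": {"Location": "Miami"}}))
```

The stored state is removed once the follow-up has been answered, so a stray LocationIntent later on does not get mistaken for part of an old conversation.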

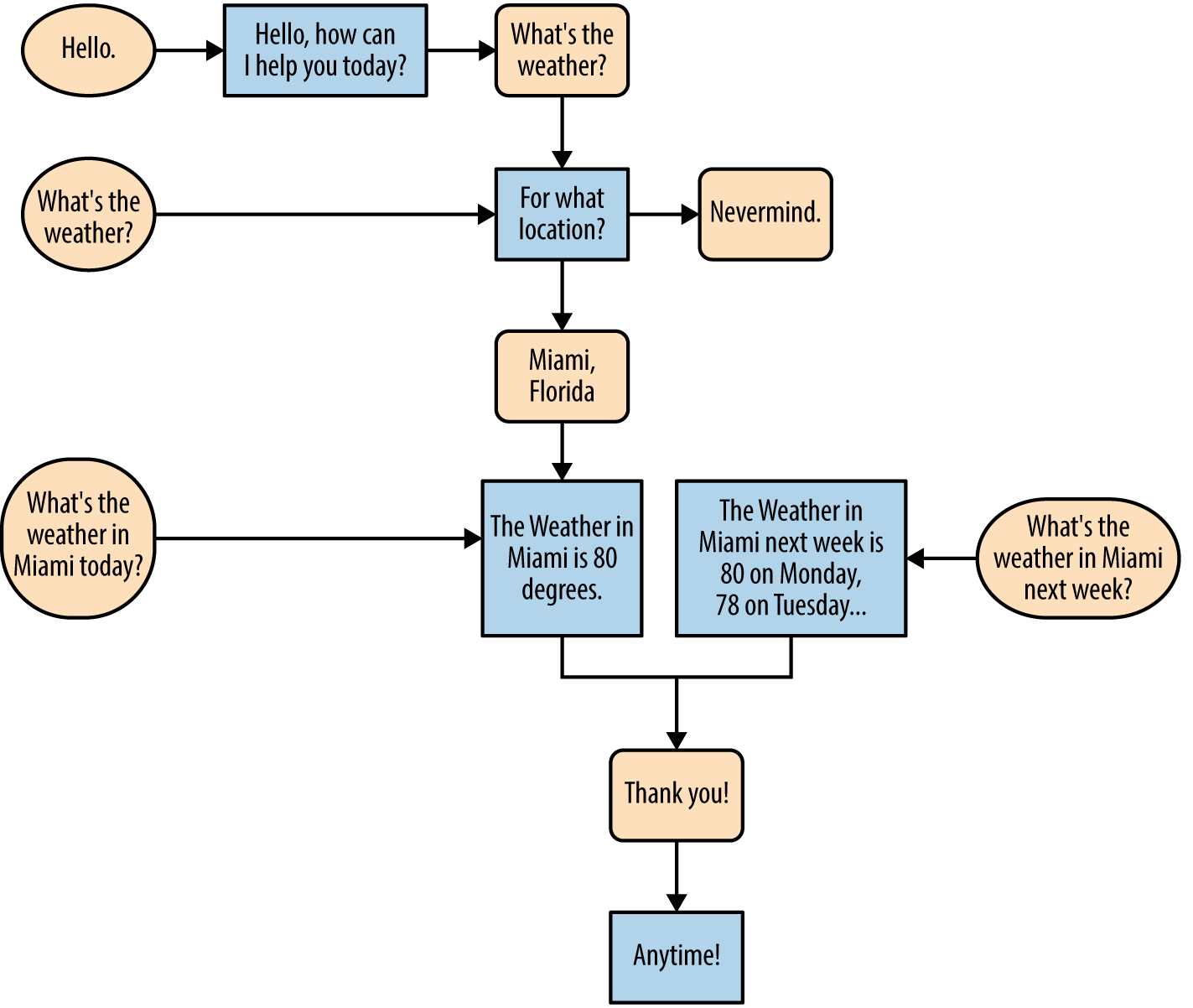

Conversation Flows

Designing a great voice experience first and foremost involves creating a well-thought-out conversational flow diagram (see Figure 1-2 for an example). The idea is to map out the conversation as best you can starting with all the different ways a person can essentially start a conversation with your skill or bot. For example, a user can say, “Hello,” “What’s the weather?” “What’s the weather in Miami?” “What’s the weather in Miami next week?” and so on. Now you don’t have to go crazy and list out all the different locations or time periods, just one or two examples per intent and each entity or slot.

Figure 1-2. Conceptual conversational flow

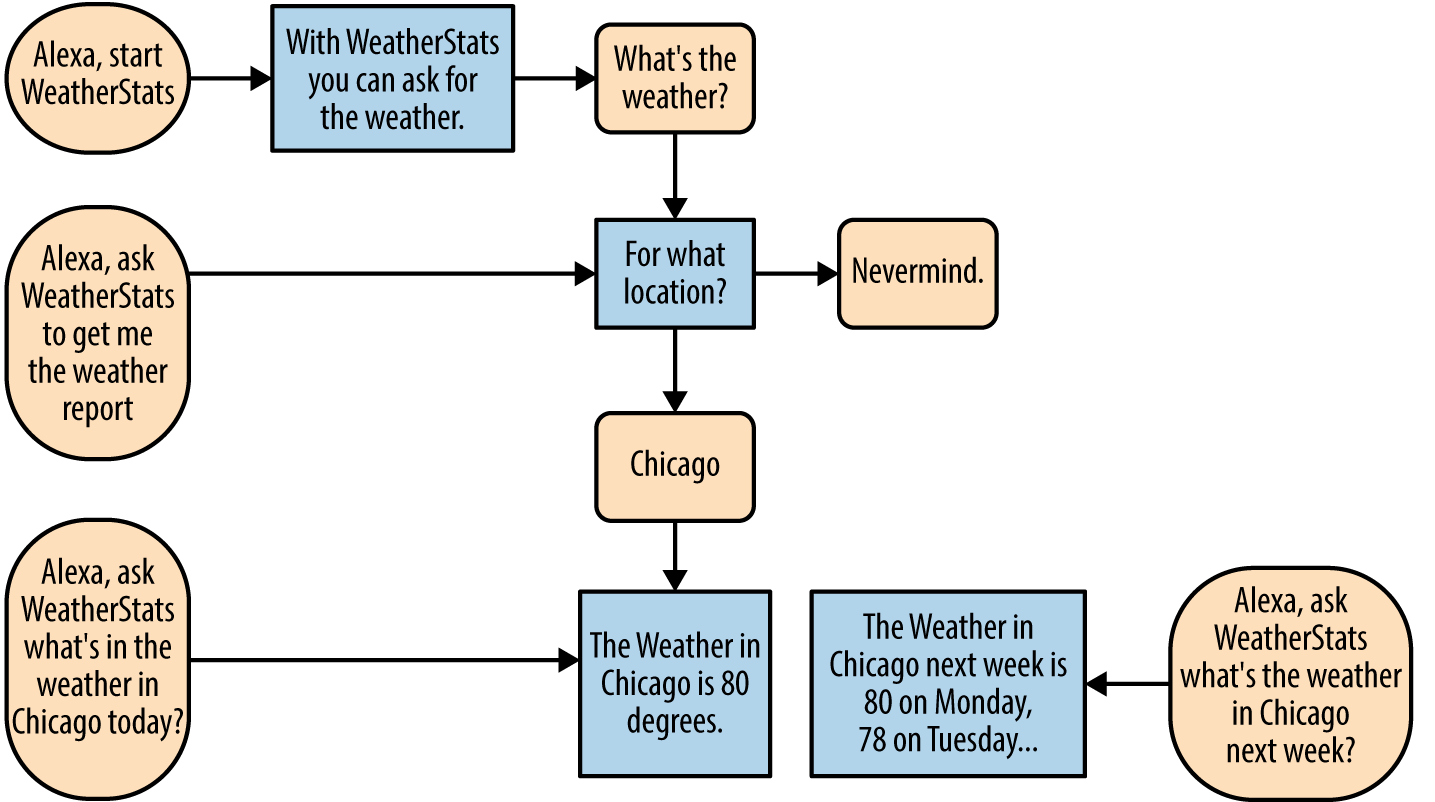

Figure 1-2 shows conceptually how conversations should flow on a general voice-enabled device or service, but it does not represent specific services such as Amazon Alexa. All services are different—for example, with Alexa, in addition to the wake word, invoking the skill name is required. Note the differences between the general conceptual conversational flow diagram (Figure 1-2) and the Alexa-specific conversational flow diagram (Figure 1-3). In the Alexa example, we will call our Alexa skill WeatherStats and include that as well as the wake word “Alexa” in our entry points. Additionally, we will modify the response after “Alexa, start WeatherStats” to reflect the standards and best practices of Amazon Alexa for the LaunchRequest type.

Figure 1-3. Alexa-specific conversational flow

You will want to create a conversational flow diagram for each skill and intent you create. Before writing any code or intent models, ask coworkers, friends, and family for feedback on each of the flows. This will provide you with great insight and ideas for responses and interactions you might have missed on your own.

Sample Utterances

Creating a list of good sample utterances is key to helping the machine learning in your service infer various ways a person can ask for something. In addition to your code, the sample utterances will also need to be iterated on and tweaked as you evolve your product. As you use your skill or bot, you might discover that certain phrases don’t work as well, or that users are finding new and interesting ways to invoke your skill that will need to be added to your sample utterances list.

Let’s take a look at another example: imagine we have a ride hailing skill called MyRide. Here are some sample utterances for the HailRideIntent:

- I need a ride.

- I need a ride to the supermarket.

- I need a car to take me to the airport.

- Get me a car to the store.

- Get me a ride to the store.

As you can see, there are a multitude of ways one can ask for a ride (there are many possibilities beyond those listed here). In addition, you can add shortened single word commands where appropriate—for instance, we can simply add the word “car” to the preceding list, which would help in the case of “Alexa, ask MyRide for a car.” Surely someone is bound to invoke your skill with this phrase. Additionally, you can take the words “ride” and “car,” as well as “truck,” “van,” and “bus,” and other “Ride Types” and make those slot type values. Consider the following (nonexhaustive) list, for example:

- I need a car.

- I need a ride.

- I need a bus.

- I need a truck.

- I need a van.

- I need an SUV.

We can simply replace all of this with “I need a {RideType}”; this would take care of all of those sample utterances using one sample utterance and a predefined entity or slot type. Also note how the acronym SUV is in caps. This helps the speech recognition engine match acronyms better. Alternatively, separating the letters also helps, such as with “S-U-V,” but using “suv” in your sample utterance could potentially cause problems (and be pronounced as “soov”). Every time you add new sample utterances, you will need to test them thoroughly to ensure they work as expected. It’s important to test as you go rather than waiting until the last minute to test your utterances.
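To see how a single slot-based utterance can stand in for the whole list, consider this toy Python matcher. It expresses “I need a {RideType}” as a regular expression and pulls out the slot value. This is only an illustration under our own assumptions: real services rely on trained language models rather than regular expressions, and the match_ride_type function is hypothetical.

```python
import re

# Slot values taken from the list of ride types above.
RIDE_TYPES = ["car", "ride", "bus", "truck", "van", "SUV"]

# "I need a {RideType}" written as a pattern; "an?" accepts "a" or "an".
PATTERN = re.compile(
    r"^I need an? (?P<RideType>" + "|".join(RIDE_TYPES) + r")\.?$",
    re.IGNORECASE,
)


def match_ride_type(utterance):
    """Return the RideType slot value, or None if nothing matches."""
    match = PATTERN.match(utterance.strip())
    return match.group("RideType") if match else None


if __name__ == "__main__":
    for phrase in ["I need a car.", "I need an SUV.", "I need a ride to the store."]:
        print(phrase, "->", match_ride_type(phrase))
```

The last phrase does not match because it carries a destination, which is a reminder that each distinct phrasing pattern still needs its own sample utterance.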

Speech Synthesis Markup Language (SSML)

Like HTML and XML, SSML is a markup language that provides information between machines and services. Where HTML provides information for web browsers to display content, SSML provides TTS engines with information on not only what to say, but how to say it. For example, consider the text “54321 go!”; with plain-old text fed to a TTS engine, you would hear something like “Fifty four thousand three hundred twenty one go!” which is most likely not what you were expecting to hear. One thing you can do to get it to actually sound like a countdown is to use the <say-as> SSML element:

<speak><say-as interpret-as="digits">54321</say-as> go!</speak>

Now there are some obvious workarounds here; for instance, you can put spaces between the numbers to force a countdown (i.e., “5 4 3 2 1 go!”), but for advanced features such as modifying the pronunciation, emphasis, prosody, or sound effects, SSML is your best option. As mentioned before, every service is different and may not support all of SSML (which is a W3C specification), so make sure to read the latest documentation on supported SSML tags for each service. At the time of this writing, Amazon Alexa supports the following:

- audio: Allows the playing of small audio clips for sound effects.

- break: Applies short pauses in the speech.

- emphasis: Changes the rate and volume of a tagged phrase.

- p: Represents a paragraph, which applies an extra strong break in speech.

- phoneme: Modifies the pronunciation of words.

- prosody: Allows the modification of volume, pitch, and rate of speech.

- s: Represents a sentence, which applies a strong break in speech.

- say-as: Changes the interpretation of speech.

- speak: The required root element.

- sub: Substitutes the tagged word or phrase.

- w: Allows you to specify words as a noun, verb, past participle, or sense.

Alexa also supports a custom Amazon-specific tag called <amazon:effect> that currently only allows you to add a whispering effect. This tag has a lot of potential so stay on top of the latest updates from Amazon to see if there are any new effects available. Here’s an example of whispering:

<speak> Here's a secret. <amazon:effect name="whispered">I'm not human.</amazon:effect> Mind blown? </speak>

The <audio> element is pretty straightforward: if you want to play a sound clip, you provide the URL of the MP3 file in the src attribute. However, there are some restrictions: the clip must be an MP3 file, it cannot be longer than 90 seconds, it must be hosted and served over SSL (HTTPS), its sample rate must be 16000 Hz, and its bitrate must be a low 48 kbps. If you’re not an audiophile and have no idea how to get your sound effects to match these specifications, not to worry: ffmpeg to the rescue! Here’s the command you’ll need to use:

ffmpeg -i input-file.mp3 -ac 2 -codec:a libmp3lame -b:a 48k -ar 16000 output-file.mp3

All you need to do is make sure to replace input-file.mp3 with the filename that you are converting from (this can also be other formats so make sure to change the extension if necessary). Also, don’t forget to change output-file.mp3 to your desired output filename. Once your output file is ready, simply upload those audio files to a server that can serve those files publicly over HTTPS.
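Before uploading a clip, it can help to check it against the restrictions listed earlier. The following Python sketch is one way to do that, assuming you already know the clip's duration, sample rate, and bitrate (for example, from your audio tool). The check_clip function and its parameters are hypothetical, and the file-extension test is only a rough stand-in for inspecting the actual file format.

```python
from urllib.parse import urlparse

# Limits quoted in the text (valid at the time of writing).
MAX_SECONDS = 90
REQUIRED_SAMPLE_RATE_HZ = 16000
REQUIRED_BITRATE_KBPS = 48


def check_clip(url, duration_seconds, sample_rate_hz, bitrate_kbps):
    """Return a list of problems; an empty list means the clip looks OK."""
    problems = []
    parsed = urlparse(url)
    if parsed.scheme != "https":
        problems.append("clip must be served over HTTPS")
    if not parsed.path.lower().endswith(".mp3"):
        problems.append("clip must be an MP3 file")
    if duration_seconds > MAX_SECONDS:
        problems.append("clip is longer than 90 seconds")
    if sample_rate_hz != REQUIRED_SAMPLE_RATE_HZ:
        problems.append("sample rate must be 16000 Hz")
    if bitrate_kbps != REQUIRED_BITRATE_KBPS:
        problems.append("bitrate must be 48 kbps")
    return problems


print(check_clip("https://example.com/ding.mp3", 2.5, 16000, 48))
```

The URL here is a placeholder; substitute the public HTTPS location of your own file.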

With the <break> tag you can add pauses of up to 10 seconds in length. This is great for dramatic effect and can be implemented using the time attribute (for example, time="3s"). With <emphasis>, you can accentuate words or phrases (which will result in them being spoken more slowly) as well as deemphasize them (which speeds them up a bit). This is done using the level attribute, which supports strong, moderate, and reduced values. For example, based on our previous SSML example, if you want to really accentuate (i.e., stretch out and make slightly louder) the phrase “Mind blown?” you can simply implement the following:

<speak> <emphasis level="strong">Mind blown?</emphasis> </speak>
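If you assemble SSML in code, a small helper can keep pauses within the 10-second limit. The sketch below is a hypothetical Python example (the pause function is not part of any SDK) that writes the duration into the time attribute of <break>, combines it with the emphasis example, and then parses the result to confirm it is well-formed XML.

```python
import xml.etree.ElementTree as ET

MAX_PAUSE_SECONDS = 10  # upper limit mentioned in the text


def pause(seconds):
    """Return a <break> tag, clamped to the 10-second maximum."""
    clamped = max(0, min(seconds, MAX_PAUSE_SECONDS))
    return '<break time="{}s"/>'.format(clamped)


ssml = (
    "<speak>Here's a secret. "
    + pause(2)
    + ' <emphasis level="strong">Mind blown?</emphasis></speak>'
)

# Parsing only proves the markup is well-formed; it does not validate
# the tags against any particular service's SSML support.
root = ET.fromstring(ssml)
print(ssml)
```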

The <p> and <s> tags are straight to the point. They have no attributes and really do just represent a paragraph and sentence, respectively. As with normal reading, writing, and speech, there’s a strong pause on sentences and an extra strong pause on paragraphs. In sentences, this can be accomplished using periods. In paragraphs, you can add the break tag with "x-strong" set on the strength attribute, but in that case simply using the <p> is cleaner.

<phoneme> is extremely helpful for words that might be spelled the same but have different meanings and pronunciations depending on the context. For instance, in the phrase “I have lead,” the word “lead” is pronounced by default as [leed], as in “the leader of the country” or “she will lead the group on the expedition,” but should actually be pronounced [led], as in “I have lead for my pencil.” Here’s how you would implement this fix in SSML:

<speak> I have <phoneme alphabet="ipa" ph="lɛd">lead</phoneme>. </speak>

Another one of my favorite examples solves for how folks in different cultures or regions pronounce the same word in different ways, as with the word “tomato” in the famous phrase, “You say tomayto, I say tomahto!” Here’s how to get the correct pronunciations using SSML while maintaining the correct spelling of tomato:

<speak> You say <phoneme alphabet="ipa" ph="təˈmeɪtəʊ">tomato</phoneme>, I say <phoneme alphabet="ipa" ph="təˈmɑːtəʊ">tomato</phoneme>! </speak>

Unless you have either the International Phonetic Alphabet (IPA) or the Extended Speech Assessment Methods Phonetic Alphabet (X-SAMPA) committed to your own memory, the hard part here is finding the correct phonetic transcription (i.e., /təˈmeɪtəʊ/ in this example). Luckily you can simply search the web for a phonetic translator and you’ll be able to use it to translate just about any word to its phonetic form.

Next we have <prosody>, which allows you to control the pitch, rate, and volume of a phrase. For instance, set the pitch attribute to x-low and you’ll hear a deep voice, but set it to x-high and you have what sounds like one of the Chipmunks. With the rate attribute set to x-slow or x-fast, you have extremely accentuated and stretched-out words or a voice at an extra high rate of speed that resembles an auctioneer. The volume attribute accepts the values silent, x-soft, soft, medium, loud, and x-loud, where silent completely blanks out the phrase (which is great for censoring) and x-loud raises the volume of the voice (which is great for expressing excitement or anger).
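Because a mistyped value is easy to miss, you might guard your <prosody> markup with a small check. This Python sketch uses a hypothetical prosody helper that only accepts the named values defined in the W3C SSML specification; it deliberately ignores the numeric forms (such as percentages) that some services also accept.

```python
from xml.sax.saxutils import escape, quoteattr

# Named values from the W3C SSML specification.
PROSODY_VALUES = {
    "pitch": {"x-low", "low", "medium", "high", "x-high"},
    "rate": {"x-slow", "slow", "medium", "fast", "x-fast"},
    "volume": {"silent", "x-soft", "soft", "medium", "loud", "x-loud"},
}


def prosody(text, **attributes):
    """Wrap text in <prosody>, rejecting unknown attributes or values."""
    if not attributes:
        raise ValueError("at least one of pitch, rate, or volume is required")
    parts = []
    for name, value in sorted(attributes.items()):
        if value not in PROSODY_VALUES.get(name, set()):
            raise ValueError("unsupported {}={!r}".format(name, value))
        parts.append("{}={}".format(name, quoteattr(value)))
    return "<prosody {}>{}</prosody>".format(" ".join(parts), escape(text))


print(prosody("Going once, going twice, sold!", rate="x-fast", volume="loud"))
```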

With <say-as>, as with our countdown example earlier where we specified digits in the interpret-as attribute, we can also specify cardinal, ordinal, spell-out, fraction, unit, date, time, telephone, address, interjection, and expletive. When working with dates, there’s one additional attribute you can specify—the “format” attribute:

<speak> <say-as interpret-as="date" format="md">3/26/2020</say-as> </speak>

In this last example you will hear “March 26th” without the year verbalized. To also hear the year, you can specify mdy as the format and you’re all set.

Now, remember to use <speak> as the root element of all your SSML requests. If you want to substitute a spoken word while keeping your text intact, use the <sub> tag with the alias attribute set to the word you want to hear. This is particularly helpful when using symbols or acronyms: for instance, pronouncing the word “magnesium” when using the symbol “Mg,” or saying “pound” instead of “hash” in the phrase “Press #”.
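As an illustration of the two substitutions just mentioned, here is a short Python sketch that builds the markup with a hypothetical sub helper. It escapes the written text and the alias so that characters such as & or quotes cannot break the XML.

```python
import xml.etree.ElementTree as ET
from xml.sax.saxutils import escape, quoteattr


def sub(written, spoken):
    """Keep `written` in the text but have the TTS engine say `spoken`."""
    return "<sub alias={}>{}</sub>".format(quoteattr(spoken), escape(written))


ssml = "<speak>Take your {} and press {}.</speak>".format(
    sub("Mg", "magnesium"), sub("#", "pound")
)

# Confirm the result is well-formed XML before sending it anywhere.
root = ET.fromstring(ssml)
print(ssml)
```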

Lastly, if you need to pronounce a word in its past tense or accentuated as a verb versus a noun, then you can use the <w> tag, which has one attribute called role. With role, you can specify "amazon:VB" for verbs, "amazon:VBD" for past participle, "amazon:NN" for nouns, and "amazon:SENSE_1" for the nondefault sense of the word. For example, the word “bass” defaults to the low musical range pronunciation and not the fish. If you want to pronounce it as the fish, then you can either use the <phoneme> tag or use the <w> tag as follows:

<speak> I like <w role="amazon:SENSE_1">bass</w>. </speak>

Other services may support other SSML elements, including token, voice, mark, desc, lexicon, and lookup, as well as custom elements of their own (as Amazon does with <amazon:effect>), so make sure to follow up with those services individually to stay on top of what is and isn’t available.

When to Use Visual Cues

Imagine if the Amazon Echo had no LED light ring: you’d shoot off a command, and seconds might go by with no response. Then, just as you go to ask it what’s taking so long, the device replies. That could be a bit frustrating. To ease that frustration, you really need to use some kind of visual cue like an LED light or a progress indicator in a graphical display to show that something is happening in the background.

The path of least resistance is to use an RGB LED, which has four pins: an anode each for red, green, and blue, and a long cathode pin for your standard ground (Figure 1-4). You can modulate any of the three RGB pins individually to get any one of those three colors or modulate them together to mix the colors and expand your palette to include over 16 million RGB-supported colors. This is done by essentially dimming some colors while brightening others. For instance, the value 0 means off and the value 255 means extremely bright. If we were to set R=80, G=0, and B=80, we would have the color purple.

Figure 1-4. RGB LED
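Modulating a pin usually means driving it with pulse-width modulation (PWM), where brightness is expressed as a duty cycle from 0 to 100 percent. The following Python sketch converts 0 to 255 color values into duty cycles. It assumes the LED wiring described above, with one anode per color and a shared cathode, so a higher duty cycle means a brighter channel. The rgb_to_duty_cycles function is a hypothetical helper, and the call that actually sets each pin depends on the PWM library you choose, so it is left out.

```python
def rgb_to_duty_cycles(red, green, blue):
    """Convert 0-255 channel values to PWM duty cycles in percent (0-100)."""
    duty_cycles = []
    for value in (red, green, blue):
        if not 0 <= value <= 255:
            raise ValueError("channel values must be between 0 and 255")
        duty_cycles.append(round(value / 255 * 100, 1))
    return tuple(duty_cycles)


# The purple from the text: red and blue partly on, green off.
print(rgb_to_duty_cycles(80, 0, 80))
```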

If your product has a screen display, the best option is to include some kind of progress indicator (e.g., a status bar or circle, or a glowing light), such as those used in mobile applications or websites when content is being fetched. Avoid making the indicator overbearing or fast—it should be subtle and slow.

In other cases, you might want to show the user an image but the device itself does not have a screen, as with the Amazon Echo. For cases like this, you can create a mobile app that shows images, send images by email or SMS, or simply display images on a nearby connected device. This isn’t necessarily a visual cue for process indication per se, but it is worth mentioning here since it can be used to enhance the experience.

Additional Design Considerations

In addition to the design considerations just outlined, you’ll want to put some thought into the design of the first-time setup and configuration process as well as the aesthetics of the product itself, its packaging, and documentation. Although you might not spend a lot of time on these pieces during the early R&D and prototyping stages, they are critical to deploying your product to production and on-boarding new users.

Also, a feedback loop with your early adopters will help you evolve and improve the product. For this you can log data such as the most requested intents and the requests that matched no known intent, then work on improving those intents and handling the unknown ones. In addition to automated logging, receiving verbal feedback directly through the device is a great way to capture what’s on your users’ minds as they use the product in near real time. You might program the device to respond to unknown intents with something like “Sorry, I didn’t understand your command. I have logged it for further analysis. Are there any other ways I can improve this service?” and allow the users to respond with their thoughts.
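A first pass at this kind of logging can be very simple. The Python sketch below assumes a hypothetical log with one entry per request, holding the matched intent name or None when nothing matched; CancelRideIntent is an invented name alongside the HailRideIntent from earlier. It reports the most requested intents and the share of requests that went unmatched.

```python
from collections import Counter

# Hypothetical log: the matched intent per request, or None for no match.
request_log = [
    "HailRideIntent", None, "HailRideIntent",
    "CancelRideIntent", None, "HailRideIntent",
]


def summarize(log):
    """Return (intents ordered by popularity, share of unmatched requests)."""
    counts = Counter(name for name in log if name is not None)
    unknown = sum(1 for name in log if name is None)
    unknown_share = unknown / len(log) if log else 0.0
    return counts.most_common(), unknown_share


top_intents, unknown_share = summarize(request_log)
print(top_intents)
print("unmatched: {:.0%}".format(unknown_share))
```

A rising unmatched share is a hint that your sample utterances need another round of iteration.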

Additionally, notifications can be sent to users where they will be prompted to start a survey, and if they agree, they will be asked a few questions designed to capture feedback on key aspects of your product. Then you can use this feedback to make improvements as you iterate on your product and voice designs. However, be careful with notifications: you don’t want to simply blurt out the message as users may not be around to receive it. Visual cues (e.g., an orange glowing LED or a message icon on a screen) are a great way to capture users’ attention so that they can listen to those notifications when they are ready.

This brings us full circle to empathy in design thinking. The main point to remember when designing your product is your audience. How will interacting with this device make your users feel? You’ll want to design a product that will improve your users’ lives (even if you are designing a product just for yourself) rather than making things more complicated.

Decisions, Decisions...

In the next chapter you will find many references to several currently available services and frameworks. In subsequent chapters, we will narrow our focus to Amazon’s Alexa Voice Service (AVS) and provide step-by-step instructions for integrating AVS into your own IoT device, specifically using rapid prototyping hardware such as Raspberry Pi. However, depending on your circumstances, AVS on a Raspberry Pi might not be the right choice for you. For example, your business rules may call for just STT, or require the use of Microsoft products. In some cases, you may want your own backend instead of sending your audio streams to a third party, all while running on your own custom-designed PCB.

While we have tried our best to show how to integrate just about any RESTful HTTP voice service into your own IoT device, ultimately you have the freedom to decide which voice service and hardware to go with. With that in mind, continue on to the next chapter to learn more about some of the great voice services available out in the wild today.

1 Not to be confused with speaker recognition or voice biometrics, which uses biometric signatures to identify who is speaking versus what they are saying.

2 This example is taken from IVONA Software’s “Signing POST Requests”.
