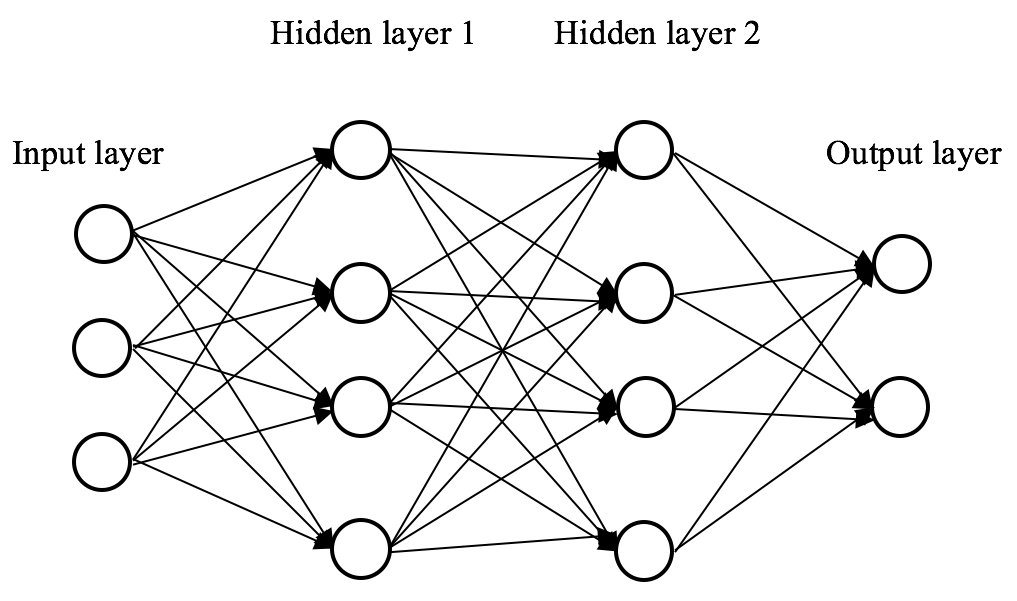

We have just achieved 91.3% accuracy with a single-layer neural network model. In theory, we can obtain a better model by using more than one hidden layer. As an example, we provide a deep neural network model with two hidden layers:
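To make the idea of two hidden layers concrete, here is a minimal NumPy sketch of such a network, including one backpropagation update. It is not the model behind the accuracy figure quoted above. The layer sizes, the sigmoid hidden activation, the softmax output, the learning rate, the toy data, and all function names (`init_params`, `forward`, `train_step`, and so on) are assumptions chosen only for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def init_params(n_in, n_h1, n_h2, n_out, seed=0):
    """Random weights and zero biases for input -> hidden1 -> hidden2 -> output."""
    rng = np.random.default_rng(seed)
    sizes = [n_in, n_h1, n_h2, n_out]
    return [(rng.normal(0.0, 0.5, (a, b)), np.zeros(b))
            for a, b in zip(sizes[:-1], sizes[1:])]

def forward(params, X):
    """Return the activations of every layer; the last entry holds softmax probabilities."""
    activations = [X]
    for i, (W, b) in enumerate(params):
        z = activations[-1] @ W + b
        if i < len(params) - 1:
            a = sigmoid(z)                        # hidden layers (assumed sigmoid)
        else:
            z = z - z.max(axis=1, keepdims=True)  # shift for numerical stability
            e = np.exp(z)
            a = e / e.sum(axis=1, keepdims=True)  # softmax output layer
        activations.append(a)
    return activations

def cross_entropy(probs, y):
    """Mean negative log-likelihood of the true class labels y."""
    return -np.mean(np.log(probs[np.arange(len(y)), y] + 1e-12))

def train_step(params, X, y, lr=0.5):
    """One full-batch gradient descent update computed with backpropagation."""
    acts = forward(params, X)
    n = len(y)
    # Gradient of the mean cross-entropy with respect to the output logits
    delta = acts[-1].copy()
    delta[np.arange(n), y] -= 1.0
    delta /= n
    new_params = []
    for i in reversed(range(len(params))):
        W, b = params[i]
        grad_W = acts[i].T @ delta
        grad_b = delta.sum(axis=0)
        if i > 0:
            # Push the error back through the sigmoid of the previous hidden layer
            delta = (delta @ W.T) * acts[i] * (1.0 - acts[i])
        new_params.append((W - lr * grad_W, b - lr * grad_b))
    return new_params[::-1]

# Toy binary classification data (synthetic, for illustration only)
rng = np.random.default_rng(1)
X = rng.normal(size=(200, 4))
y = (X[:, 0] + X[:, 1] > 0).astype(int)

params = init_params(n_in=4, n_h1=8, n_h2=8, n_out=2)
loss_before = cross_entropy(forward(params, X)[-1], y)
for _ in range(500):
    params = train_step(params, X, y)
loss_after = cross_entropy(forward(params, X)[-1], y)
print("loss before:", loss_before, "loss after:", loss_after)
```

The backward loop has the same structure as for a network with one hidden layer. It simply runs over one more weight matrix, which is why every additional layer adds computation to each update.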

Weights in feed-forward deep neural networks are also optimized with the backpropagation algorithm, exactly as in single-layer networks. However, the more layers a network has, the higher the computational complexity and the slower the model converges. One way to accelerate the weight optimization is to use a more computationally efficient activation ...