Chapter 1. Introduction

Web Real-Time Communication (WebRTC) is a new standard and industry effort that extends the web browsing model. For the first time, browsers are able to directly exchange real-time media with other browsers in a peer-to-peer fashion.

The World Wide Web Consortium (W3C) and the Internet Engineering Task Force (IETF) are jointly defining the JavaScript APIs (Application Programming Interfaces), the standard HTML5 tags, and the underlying communication protocols for the setup and management of a reliable communication channel between any pair of next-generation web browsers.

The standardization goal is to define a WebRTC API that enables a web application running on any device to securely access input peripherals (such as webcams and microphones) and to exchange real-time media and data with a remote party in a peer-to-peer fashion.

Web Architecture

The classic web architecture semantics are based on a client-server paradigm, where browsers send an HTTP (Hypertext Transfer Protocol) request for content to the web server, which replies with a response containing the information requested.

Each resource provided by a server is identified by a URI (Uniform Resource Identifier) or URL (Uniform Resource Locator).

In the web application scenario, the server can embed some JavaScript code in the HTML page it sends back to the client. Such code can interact with browsers through standard JavaScript APIs and with users through the user interface.

WebRTC Architecture

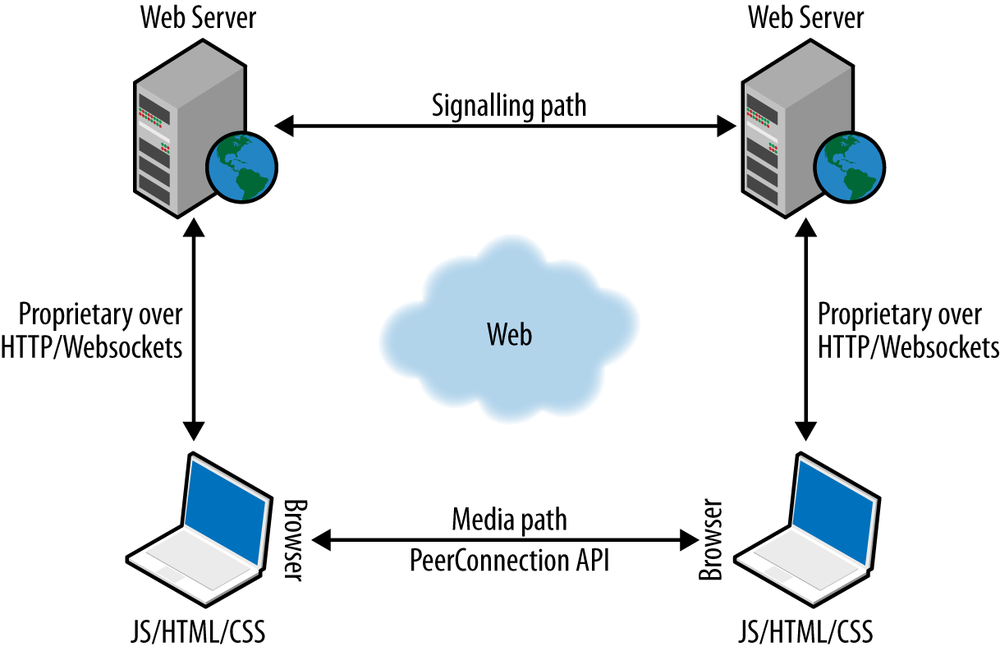

WebRTC extends the client-server semantics by introducing a peer-to-peer communication paradigm between browsers. The most general WebRTC architectural model (see Figure 1-1) draws its inspiration from the so-called SIP (Session Initiation Protocol) Trapezoid (RFC3261).

In the WebRTC Trapezoid model, each browser runs a web application downloaded from a different web server. Signaling messages are used to set up and terminate communications. They are transported by the HTTP or WebSocket protocol via web servers that can modify, translate, or manage them as needed. It is worth noting that the signaling between browser and server is not standardized in WebRTC, as it is considered to be part of the application (see Signaling). As for the data path, a PeerConnection allows media to flow directly between browsers without any intervening servers.

The two web servers can communicate using a standard signaling protocol such as SIP or Jingle (XEP-0166). Otherwise, they can use a proprietary signaling protocol.

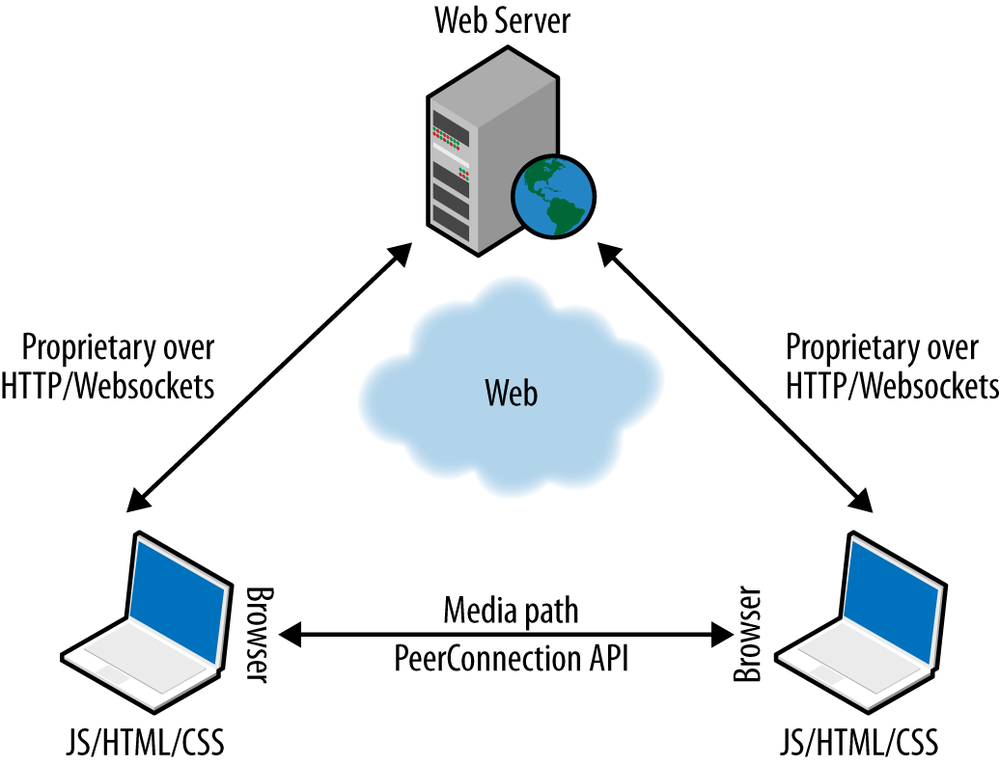

The most common WebRTC scenario is likely to be the one where both browsers are running the same web application, downloaded from the same web page. In this case the Trapezoid becomes a Triangle (see Figure 1-2).

WebRTC in the Browser

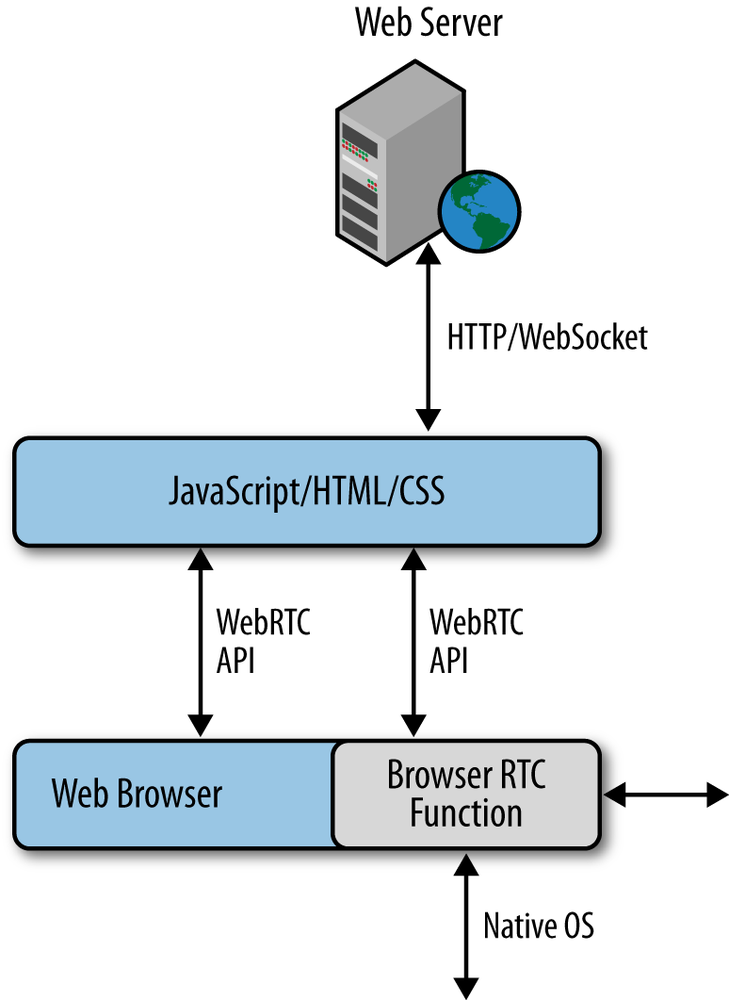

A WebRTC web application (typically written as a mix of HTML and JavaScript) interacts with web browsers through the standardized WebRTC API, allowing it to properly exploit and control the real-time browser function (see Figure 1-3). The WebRTC web application also interacts with the browser, using both WebRTC and other standardized APIs, both proactively (e.g., to query browser capabilities) and reactively (e.g., to receive browser-generated notifications).

The WebRTC API must therefore provide a wide set of functions, such as connection management (in a peer-to-peer fashion); negotiation, selection, and control of encoding/decoding capabilities; media control; and firewall and NAT traversal.

The design of the WebRTC API does represent a challenging issue. It envisages that a continuous, real-time flow of data is streamed across the network in order to allow direct communication between two browsers, with no further intermediaries along the path. This clearly represents a revolutionary approach to web-based communication.

Let us imagine a real-time audio and video call between two browsers. Communication, in such a scenario, might involve direct media streams between the two browsers, with the media path negotiated and instantiated through a complex sequence of interactions involving the following entities:

- The caller browser and the caller JavaScript application (i.e., through the JavaScript API mentioned above)

- The caller JavaScript application and the application provider (typically, a web server)

- The application provider and the callee JavaScript application

- The callee JavaScript application and the callee browser (again through the application-browser JavaScript API)

Signaling

The general idea behind the design of WebRTC has been to fully specify how to control the media plane, while leaving the signaling plane as much as possible to the application layer. The rationale is that different applications may prefer to use different standardized signaling protocols (e.g., SIP or eXtensible Messaging and Presence Protocol [XMPP]) or even something custom.

Session description represents the most important information that needs to be exchanged. It specifies the transport (and Interactive Connectivity Establishment [ICE]) information, as well as the media type, format, and all associated media configuration parameters needed to establish the media path.
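To make that list concrete, the following minimal sketch shows where those pieces sit in an SDP blob: the media type and payload formats on each m= line, codec names in a=rtpmap attributes, ICE transport candidates in a=candidate attributes, and the DTLS certificate fingerprint in a=fingerprint. It recognizes only those few line types and is not a complete SDP parser; the sample offer is hypothetical and abbreviated (its fingerprint is shortened).

```typescript
// One media section: an m= line plus the attributes that follow it.
interface MediaSection {
  kind: string;                   // "audio", "video" or "application"
  formats: string[];              // payload formats listed on the m= line
  codecs: Record<string, string>; // payload type -> "name/clock rate[/channels]"
  candidates: string[];           // ICE transport candidates (a=candidate values)
}

interface SdpSummary {
  fingerprint?: string;           // DTLS certificate fingerprint (hash + digest)
  media: MediaSection[];
}

// Sketch only: picks out a handful of line types and ignores everything else.
function summarizeSdp(sdp: string): SdpSummary {
  const summary: SdpSummary = { media: [] };
  let current: MediaSection | undefined = undefined;
  for (const rawLine of sdp.split(/\r?\n/)) {
    const line = rawLine.trim();
    if (line.startsWith("m=")) {
      // m=<media> <port> <proto> <fmt> ...
      const fields = line.slice(2).split(" ");
      current = { kind: fields[0], formats: fields.slice(3), codecs: {}, candidates: [] };
      summary.media.push(current);
    } else if (line.startsWith("a=fingerprint:")) {
      // May appear at session or media level; keep the first one seen.
      if (summary.fingerprint === undefined) {
        summary.fingerprint = line.slice("a=fingerprint:".length);
      }
    } else if (current !== undefined && line.startsWith("a=rtpmap:")) {
      // a=rtpmap:<payload type> <encoding name>/<clock rate>[/<channels>]
      const rest = line.slice("a=rtpmap:".length);
      const space = rest.indexOf(" ");
      if (space > 0) current.codecs[rest.slice(0, space)] = rest.slice(space + 1);
    } else if (current !== undefined && line.startsWith("a=candidate:")) {
      current.candidates.push(line.slice("a=candidate:".length));
    }
  }
  return summary;
}

// Hypothetical, abbreviated offer (address from the documentation range).
const sampleOffer = [
  "v=0",
  "o=- 0 0 IN IP4 127.0.0.1",
  "s=-",
  "t=0 0",
  "a=fingerprint:sha-256 AB:CD:EF:01",
  "m=audio 9 UDP/TLS/RTP/SAVPF 111 0",
  "a=rtpmap:111 opus/48000/2",
  "a=rtpmap:0 PCMU/8000",
  "a=candidate:1 1 udp 2130706431 192.0.2.10 54400 typ host",
].join("\r\n");
```

For the sample, summarizeSdp(sampleOffer) yields one audio section with formats 111 and 0, Opus and PCMU codec entries, one host candidate, and the sha-256 fingerprint.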

Since the original idea to exchange session description information in the form of Session Description Protocol (SDP) “blobs” presented several shortcomings, some of which turned out to be really hard to address, the IETF is now standardizing the JavaScript Session Establishment Protocol (JSEP). JSEP provides the interface needed by an application to deal with the negotiated local and remote session descriptions (with the negotiation carried out through whatever signaling mechanism might be desired), together with a standardized way of interacting with the ICE state machine.

The JSEP approach delegates entirely to the application the responsibility for driving the signaling state machine: the application must call the right APIs at the right times, and convert the session descriptions and related ICE information into the defined messages of its chosen signaling protocol, instead of simply forwarding to the remote side the messages emitted from the browser.
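A minimal sketch of the signaling state machine the application has to drive. The state names follow the W3C RTCSignalingState values; "local" and "remote" stand for applying a description with setLocalDescription() or setRemoteDescription(). Only the basic offer/answer transitions are modeled: provisional answers, rollback, and the closed state are deliberately left out.

```typescript
// Subset of the JSEP/W3C signaling states (pranswer, rollback, closed omitted).
type SignalingState = "stable" | "have-local-offer" | "have-remote-offer";
type DescriptionType = "offer" | "answer";
type Side = "local" | "remote";

// Returns the state after applying a description, or throws if the
// application called the API at the wrong time.
function nextSignalingState(state: SignalingState, side: Side, type: DescriptionType): SignalingState {
  if (state === "stable" && type === "offer") {
    return side === "local" ? "have-local-offer" : "have-remote-offer";
  }
  if (state === "have-local-offer" && side === "remote" && type === "answer") return "stable";
  if (state === "have-remote-offer" && side === "local" && type === "answer") return "stable";
  throw new Error(`cannot apply ${side} ${type} in state ${state}`);
}

// Caller: apply its own offer (then send it), later apply the received answer.
let callerState: SignalingState = "stable";
callerState = nextSignalingState(callerState, "local", "offer");   // "have-local-offer"
callerState = nextSignalingState(callerState, "remote", "answer"); // "stable"

// Callee: apply the received offer, then its own answer (which it sends back).
let calleeState: SignalingState = "stable";
calleeState = nextSignalingState(calleeState, "remote", "offer"); // "have-remote-offer"
calleeState = nextSignalingState(calleeState, "local", "answer"); // "stable"
```

Converting each description into messages of the chosen signaling protocol happens between these calls and is entirely up to the application.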

WebRTC API

The W3C WebRTC 1.0 API allows a JavaScript application to take advantage of the browser's novel real-time capabilities. The real-time browser function (see Figure 1-3) implemented in the browser core provides the functionality needed to establish the necessary audio, video, and data channels. All media and data streams are encrypted using DTLS.[1]

Note

The DTLS handshake performed between two WebRTC clients relies on self-signed certificates. As a result, the certificates themselves cannot be used to authenticate the peer, as there is no explicit chain of trust to verify.

To ensure a baseline level of interoperability between different real-time browser function implementations, the IETF is working on selecting a minimum set of mandatory-to-implement audio and video codecs. Opus (RFC6716) and G.711 have been selected as the mandatory-to-implement audio codecs. However, at the time of this writing, the IETF has not yet reached consensus on the mandatory-to-implement video codecs.
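A minimal sketch of why a mandatory-to-implement set matters: if each endpoint lists the codecs it supports, negotiation can pick a codec both sides share, and a common mandatory codec guarantees that such a codec exists. Codec names follow SDP encoding names (PCMU and PCMA are the G.711 μ-law and A-law variants). The selection rule used here, where the offerer's preference order wins, and the capability lists are illustrative assumptions.

```typescript
// Returns the first codec in the offerer's preference order that the
// answerer also supports, or undefined when they share no codec.
function chooseAudioCodec(offered: string[], supported: string[]): string | undefined {
  // Encoding names are media subtype names, which compare case-insensitively.
  const accepted = supported.map((codec) => codec.toLowerCase());
  return offered.find((codec) => accepted.includes(codec.toLowerCase()));
}

// Hypothetical capability lists: the optional codecs differ, but both sides
// implement the mandatory ones (opus, PCMU, PCMA), so negotiation succeeds.
const offererCodecs = ["ISAC", "opus", "PCMU", "PCMA"];
const answererCodecs = ["G722", "PCMA", "PCMU", "opus"];
const chosenCodec = chooseAudioCodec(offererCodecs, answererCodecs); // "opus"
```

Without a shared mandatory codec, two endpoints with disjoint optional codecs (for example ISAC only versus G722 only) would end up with undefined, meaning no call.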

The API is being designed around three main concepts: MediaStream, PeerConnection, and DataChannel.

MediaStream

A MediaStream is an abstract representation of an actual stream of data of audio and/or video. It serves as a handle for managing actions on the media stream, such as displaying the stream’s content, recording it, or sending it to a remote peer. A MediaStream may be extended to represent a stream that either comes from (remote stream) or is sent to (local stream) a remote node.

A LocalMediaStream represents a media stream from a local media-capture device (e.g., webcam, microphone, etc.). To create and use a local stream, the web application must request access from the user through the getUserMedia() function. The application specifies the type of media—audio or video—to which it requires access. The devices selector in the browser interface serves as the mechanism for granting or denying access. Once the application is done, it may revoke its own access by calling the stop() function on the LocalMediaStream.
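In browsers shipping today, LocalMediaStream has been folded into MediaStream: navigator.mediaDevices.getUserMedia() returns a promise for a MediaStream, and an application releases the capture devices by calling stop() on each of the stream's tracks. The sketch below assumes that current API. So that it runs outside a browser, it declares hypothetical minimal interfaces (TrackLike, StreamLike) that a real MediaStream and MediaStreamTrack satisfy structurally; mediaRequest() is a hypothetical helper.

```typescript
// Hypothetical minimal shapes; browser MediaStream/MediaStreamTrack fit them.
interface TrackLike {
  readonly kind: string;       // "audio" or "video"
  readonly readyState: string; // "live" while capturing, "ended" after stop()
  stop(): void;
}
interface StreamLike {
  getTracks(): TrackLike[];
}

// The application states which media types it needs (getUserMedia constraints).
function mediaRequest(audio: boolean, video: boolean): { audio: boolean; video: boolean } {
  if (!audio && !video) throw new Error("request at least audio or video");
  return { audio, video };
}

// In a browser, this prompts the user, who can grant or deny access:
//   const stream = await navigator.mediaDevices.getUserMedia(mediaRequest(true, true));

// Revoke access once the application is done: stop every track still live.
// Returns how many tracks were stopped.
function releaseLocalMedia(stream: StreamLike): number {
  let stopped = 0;
  for (const track of stream.getTracks()) {
    if (track.readyState === "live") {
      track.stop();
      stopped++;
    }
  }
  return stopped;
}
```

Stopping tracks individually also lets an application, for example, end the camera while keeping the microphone.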

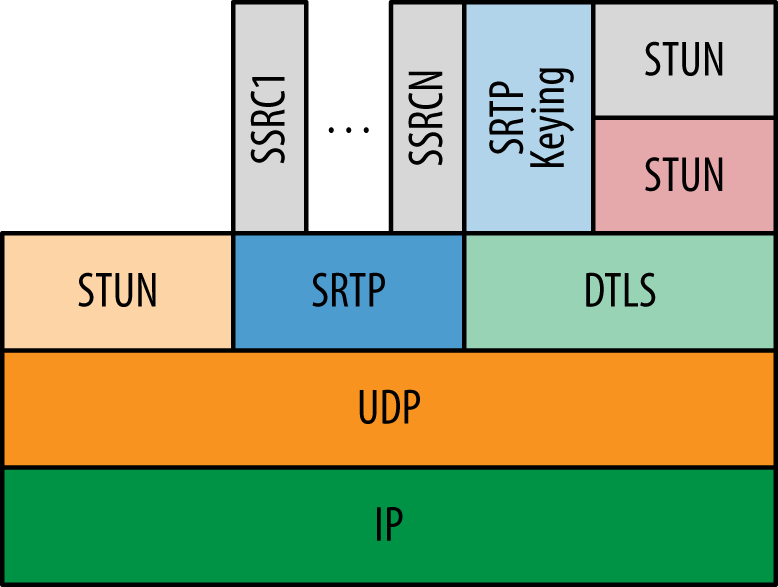

Media-plane signaling is carried out of band between the peers; the Secure Real-time Transport Protocol (SRTP) is used to carry the media data together with the RTP Control Protocol (RTCP) information used to monitor transmission statistics associated with data streams. DTLS is used for SRTP key and association management.
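Because SRTP, SRTCP, the DTLS handshake that keys them, and ICE's STUN connectivity checks typically arrive on the same UDP port, the receiver has to tell them apart. A minimal sketch, using the first-byte ranges of RFC 7983 (which updates RFC 5764): 0-3 STUN, 20-63 DTLS, 128-191 RTP/RTCP. The remaining ranges (ZRTP, TURN channel data) are simply reported as unknown here.

```typescript
// Traffic that may arrive on the single UDP port selected through ICE.
type PortTraffic = "stun" | "dtls" | "rtp-or-rtcp" | "unknown";

// Classify a datagram by its first byte, using the RFC 7983 ranges.
function classifyDatagram(packet: Uint8Array): PortTraffic {
  if (packet.length === 0) return "unknown";
  const first = packet[0];
  if (first <= 3) return "stun";                           // ICE connectivity checks
  if (first >= 20 && first <= 63) return "dtls";           // DTLS record, e.g. 22 = handshake
  if (first >= 128 && first <= 191) return "rtp-or-rtcp"; // SRTP/SRTCP, RTP version 2
  return "unknown";                                        // anything else (ZRTP, TURN, noise)
}

// A DTLS handshake record and an SRTP packet (version bits 10).
const handshakeKind = classifyDatagram(new Uint8Array([22, 0xfe, 0xfd])); // "dtls"
const srtpKind = classifyDatagram(new Uint8Array([0x80, 111]));           // "rtp-or-rtcp"
```

This matches the footnote's point: after the initial handshake, almost everything on the wire falls into the RTP/RTCP range.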

As Figure 1-4 shows, in a multimedia communication each medium is typically carried in a separate RTP session with its own RTCP packets. However, to overcome the issue of opening a new NAT hole for each stream used, the IETF is currently working on the possibility of reducing the number of transport layer ports consumed by RTP-based real-time applications. The idea is to combine (i.e., multiplex) multimedia traffic in a single RTP session.
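When RTP and RTCP, and several media types, share one session and one port, the receiver demultiplexes on header fields. A minimal sketch under these assumptions: RTCP is recognized by its packet type (192-223, the rule RFC 5761 relies on, which is why RTP payload types 64-95 must not be used when multiplexing), and RTP packets are routed to audio or video through a payload-type table. In practice that table comes from the negotiated session description; the values below are hypothetical.

```typescript
type MediaKind = "audio" | "video";
type Demuxed =
  | { kind: "rtcp"; packetType: number }
  | { kind: "rtp"; payloadType: number; ssrc: number; media: MediaKind | undefined };

// Hypothetical mapping; real values come from the negotiated SDP (m= and a=rtpmap).
const PAYLOAD_TYPE_MEDIA: Record<number, MediaKind> = { 0: "audio", 111: "audio", 96: "video" };

function demuxPacket(packet: Uint8Array): Demuxed {
  // Both RTP and RTCP carry version 2 in the two most significant bits.
  if (packet.length < 2 || (packet[0] >> 6) !== 2) throw new Error("not an RTP/RTCP packet");
  // Mask off the RTP marker bit: RTCP packet types 192-223 then fall into 64-95,
  // a payload-type range that multiplexed RTP must not use (RFC 5761).
  const low7 = packet[1] & 0x7f;
  if (low7 >= 64 && low7 <= 95) return { kind: "rtcp", packetType: packet[1] };
  if (packet.length < 12) throw new Error("truncated RTP header");
  // Bytes 8-11 of the fixed RTP header carry the SSRC in network byte order.
  const ssrc = ((packet[8] << 24) | (packet[9] << 16) | (packet[10] << 8) | packet[11]) >>> 0;
  return { kind: "rtp", payloadType: low7, ssrc, media: PAYLOAD_TYPE_MEDIA[low7] };
}
```

Routing by payload type is only one option; a receiver can equally route by SSRC once it knows which source carries which medium.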

PeerConnection

A PeerConnection allows two users to communicate directly, browser to browser. It represents an association with a remote peer, which is usually another instance of the same JavaScript application running at the remote end. Communications are coordinated via a signaling channel that scripting code in the page provides through the web server, e.g., using XMLHttpRequest or WebSocket. Once a peer connection is established, media streams (locally associated with ad hoc defined MediaStream objects) can be sent directly to the remote browser.

The PeerConnection mechanism uses the ICE protocol (see ICE Candidate Exchanging) together with the STUN and TURN servers to let UDP-based media streams traverse NAT boxes and firewalls. ICE allows the browsers to discover enough information about the topology of the network where they are deployed to find the best exploitable communication path. Using ICE also provides a security measure, as it prevents untrusted web pages and applications from sending data to hosts that are not expecting to receive them.
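ICE ranks the candidates it gathers with a priority formula, which is the input from which it orders its connectivity checks. A sketch of the recommended formula from RFC 8445 (the current ICE specification), using its recommended type preferences: host 126, peer-reflexive 110, server-reflexive 100, relayed 0. The default local preference of 65535 assumes a host with a single interface, and the list of gathered candidates is hypothetical.

```typescript
// Candidate types as they appear in SDP a=candidate lines ("typ host", ...).
type CandidateType = "host" | "prflx" | "srflx" | "relay";

// Type preferences recommended by RFC 8445.
const TYPE_PREFERENCE: Record<CandidateType, number> = {
  host: 126,  // address of a local interface: the most direct path
  prflx: 110, // peer-reflexive, discovered during connectivity checks
  srflx: 100, // server-reflexive: the NAT's public mapping, learned via STUN
  relay: 0,   // allocated on a TURN server: the least direct fallback
};

// priority = 2^24 * typePreference + 2^8 * localPreference + (256 - componentId)
function candidatePriority(type: CandidateType, localPreference = 65535, componentId = 1): number {
  if (localPreference < 0 || localPreference > 65535) throw new RangeError("local preference must be 0..65535");
  if (componentId < 1 || componentId > 256) throw new RangeError("component ID must be 1..256");
  return TYPE_PREFERENCE[type] * 16777216 + localPreference * 256 + (256 - componentId);
}

// Hypothetical gathered candidates, ranked best first.
const gathered: CandidateType[] = ["relay", "host", "srflx"];
const ranked = [...gathered].sort((a, b) => candidatePriority(b) - candidatePriority(a));
// ranked is ["host", "srflx", "relay"]; candidatePriority("host") is 2130706431
```

The type preference dominates the sum, so any host candidate outranks any reflexive one, which in turn outranks any TURN relay; this is how ICE favors the most direct usable path.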

Each signaling message is fed into the receiving PeerConnection upon arrival. The APIs send signaling messages that most applications will treat as opaque blobs, but which must be transferred securely and efficiently to the other peer by the web application via the web server.
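Because most applications treat the browser's signaling output as opaque, the web application mainly needs to wrap it for transport and check what comes back before feeding it to the PeerConnection. A minimal sketch, assuming a hypothetical JSON envelope; the field names from, to, kind, and payload are illustrative and not part of any standard.

```typescript
// Hypothetical envelope: routing fields for the web server plus an opaque payload.
const ENVELOPE_KINDS = ["offer", "answer", "candidate", "bye"] as const;
type EnvelopeKind = (typeof ENVELOPE_KINDS)[number];

interface SignalingEnvelope {
  from: string;
  to: string;
  kind: EnvelopeKind;
  payload: string; // browser-generated blob (e.g., SDP text), forwarded untouched
}

function isEnvelopeKind(value: string): value is EnvelopeKind {
  return (ENVELOPE_KINDS as readonly string[]).includes(value);
}

// Serialize for the wire (a WebSocket text frame or an XMLHttpRequest body).
function encodeEnvelope(envelope: SignalingEnvelope): string {
  return JSON.stringify(envelope);
}

// Parse and validate an incoming message before handing its payload
// to the local PeerConnection.
function decodeEnvelope(text: string): SignalingEnvelope {
  const parsed: unknown = JSON.parse(text);
  if (typeof parsed !== "object" || parsed === null) throw new Error("envelope must be a JSON object");
  const { from, to, kind, payload } = parsed as Record<string, unknown>;
  if (typeof from !== "string" || typeof to !== "string" || typeof payload !== "string" ||
      typeof kind !== "string" || !isEnvelopeKind(kind)) {
    throw new Error("malformed signaling envelope");
  }
  return { from, to, kind, payload };
}
```

The payload is never inspected: only the routing fields matter to the server, which keeps the application independent of the exact message format the browser emits.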

DataChannel

The DataChannel API is designed to provide a generic transport service allowing web browsers to exchange generic data in a bidirectional peer-to-peer fashion.

The standardization work within the IETF has reached a general consensus on the usage of the Stream Control Transmission Protocol (SCTP) encapsulated in DTLS to handle nonmedia data types (see Figure 1-4).

The encapsulation of SCTP over DTLS over UDP, together with ICE, provides a NAT traversal solution, as well as confidentiality, source authentication, and integrity-protected transfers. Moreover, this solution allows the data transport to interwork smoothly with the parallel media transports, and both can potentially share a single transport-layer port number.

SCTP was chosen because it natively supports multiple streams with either reliable or partially reliable delivery modes. It can open several independent streams within an SCTP association towards a peer SCTP endpoint. Each stream represents a unidirectional logical channel that provides in-sequence delivery. Messages can be sent either ordered or unordered, and delivery order is preserved only among ordered messages sent on the same stream. The DataChannel API, however, has been designed to be bidirectional, which means that each DataChannel is a bundle of an incoming and an outgoing SCTP stream.
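The ordering rules above can be illustrated with a small receive-side sketch: ordered messages carry a per-stream sequence number and are released strictly in sequence within their own stream, while unordered messages are released as soon as they arrive. This is a simplified, hypothetical model rather than SCTP itself: real Stream Sequence Numbers are 16-bit and wrap around, and fragmentation and reassembly are ignored.

```typescript
// One SCTP user message as seen by the receiver (fragmentation ignored).
interface SctpMessage {
  stream: number;   // stream identifier within the association
  ordered: boolean; // sent as ordered or unordered
  ssn?: number;     // stream sequence number, meaningful only for ordered messages
  data: string;
}

// Simplified receiver: SSNs start at 0 and never wrap.
class ReceiveQueue {
  private nextSsn: Record<number, number> = {};                 // next expected SSN per stream
  private pending: Record<number, Record<number, string>> = {}; // ordered messages held back

  // Returns the messages that can be handed to the application now, in order.
  receive(msg: SctpMessage): string[] {
    if (!msg.ordered) return [msg.data]; // unordered: delivered on arrival
    if (msg.ssn === undefined) throw new Error("ordered message without an SSN");
    const expected = this.nextSsn[msg.stream] ?? 0;
    if (msg.ssn < expected) return []; // duplicate of an already delivered message

    let held = this.pending[msg.stream];
    if (held === undefined) {
      held = {};
      this.pending[msg.stream] = held;
    }
    held[msg.ssn] = msg.data;

    // Release the contiguous run starting at the expected SSN. A gap blocks
    // later ordered messages on this stream only, never other streams.
    const deliverable: string[] = [];
    let next = expected;
    while (next in held) {
      deliverable.push(held[next]);
      delete held[next];
      next++;
    }
    this.nextSsn[msg.stream] = next;
    return deliverable;
  }
}
```

Independent streams are what make this useful: a lost message holds back only the ordered traffic of its own stream, so one DataChannel cannot stall another.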

The DataChannel setup is carried out (i.e., the SCTP association is created) when the createDataChannel() function is called for the first time on an instantiated PeerConnection object. Each subsequent call to createDataChannel() just creates a new DataChannel within the existing SCTP association.
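A sketch of this lazy setup, assuming the stream-identifier rule later standardized in RFC 8832 (the Data Channel Establishment Protocol): a DataChannel uses the incoming and the outgoing SCTP stream with the same identifier, and the endpoint acting as DTLS client picks even identifiers while the DTLS server picks odd ones, so both peers can open channels without colliding. PeerConnectionModel and DataChannelModel are hypothetical names; no SCTP packets are exchanged, and the DTLS role is passed in directly rather than negotiated.

```typescript
type DtlsRole = "client" | "server";

interface DataChannelModel {
  label: string;
  streamId: number; // same identifier for the outgoing and the incoming SCTP stream
}

class PeerConnectionModel {
  private association: { nextStreamId: number } | undefined = undefined; // created on first use

  constructor(private readonly role: DtlsRole) {}

  get hasAssociation(): boolean {
    return this.association !== undefined;
  }

  createDataChannel(label: string): DataChannelModel {
    let assoc = this.association;
    if (assoc === undefined) {
      // First call: set up the SCTP association (over DTLS) for this connection.
      assoc = { nextStreamId: this.role === "client" ? 0 : 1 };
      this.association = assoc;
    }
    // Later calls reuse the association and take the next free identifier of
    // this side's parity (even for the DTLS client, odd for the DTLS server).
    const channel = { label, streamId: assoc.nextStreamId };
    assoc.nextStreamId += 2;
    return channel;
  }
}
```

For example, a connection in the DTLS client role would give its first two channels stream identifiers 0 and 2, while its peer in the server role would use 1, 3, and so on.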

A Simple Example

Alice and Bob are both users of a common calling service. In order to communicate, they have to be simultaneously connected to the web server implementing the calling service. When they point their browsers to the calling service web page, they download an HTML page containing JavaScript code that keeps the browser connected to the server via a secure HTTP or WebSocket connection.

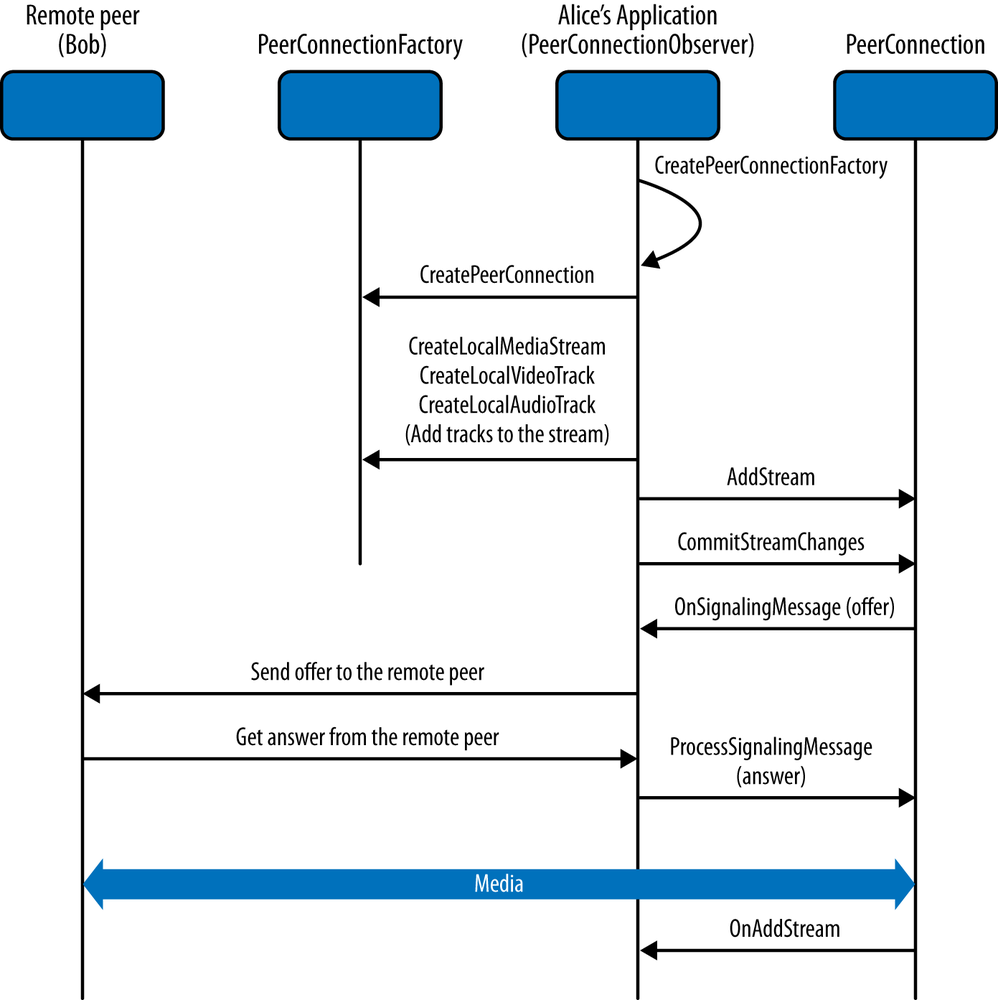

When Alice clicks on the web page button to start a call with Bob, the JavaScript instantiates a PeerConnection object. Once the PeerConnection is created, the calling service's JavaScript code needs to set up media, which it does through the MediaStream API. Alice must also grant the calling service permission to access both her camera and her microphone.

In the current W3C API, once some streams have been added, Alice’s browser, enriched with JavaScript code, generates a signaling message. The exact format of such a message has not been completely defined yet. We do know it must contain media channel information and ICE candidates, as well as a fingerprint attribute binding the communication to Alice’s public key. This message is then sent to the signaling server (e.g., by XMLHttpRequest or by WebSocket).

Figure 1-5 sketches a typical call flow associated with the setup of a real-time, browser-enabled communication channel between Alice and Bob.

The signaling server processes the message from Alice’s browser, determines that this is a call to Bob, and sends a signaling message to Bob’s browser.

The JavaScript on Bob's browser processes the incoming message and alerts Bob. Should Bob decide to answer the call, the JavaScript running in his browser would then instantiate a PeerConnection related to the message coming from Alice's side. Then, a process similar to that on Alice's browser would occur: Bob's browser verifies that the calling service is approved and the media streams are created; afterwards, a signaling message containing media information, ICE candidates, and a fingerprint is sent back to Alice via the signaling service.
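The exchange between Alice, the signaling server, and Bob can be simulated with an in-memory server that keeps one connection per logged-in user and forwards each message to its addressee. A minimal sketch: the SignalingServer class, the message fields, and the placeholder description strings are hypothetical, and delivery is a plain callback where the real service would use WebSocket or XMLHttpRequest.

```typescript
interface CallMessage {
  from: string;
  to: string;
  kind: "offer" | "answer";
  description: string; // media information, ICE candidates, fingerprint (opaque here)
}

type Deliver = (msg: CallMessage) => void;

class SignalingServer {
  private connected: Record<string, Deliver> = {};

  // A user's page connects to the service and stays connected.
  connect(user: string, deliver: Deliver): void {
    this.connected[user] = deliver;
  }

  // Determine the addressee and forward the message unchanged.
  send(msg: CallMessage): void {
    const deliver = this.connected[msg.to];
    if (deliver === undefined) throw new Error(`${msg.to} is not connected`);
    deliver(msg);
  }
}

const server = new SignalingServer();
const log: string[] = [];

// Bob's page: alert Bob and (assuming he answers) reply with his own description.
server.connect("bob", (msg) => {
  log.push(`bob received ${msg.kind} from ${msg.from}`);
  if (msg.kind === "offer") {
    server.send({ from: "bob", to: msg.from, kind: "answer", description: "<bob's media, candidates, fingerprint>" });
  }
});

// Alice's page: the call can proceed once the answer arrives.
server.connect("alice", (msg) => log.push(`alice received ${msg.kind} from ${msg.from}`));

// Alice clicks the call button: her page sends its offer through the server.
server.send({ from: "alice", to: "bob", kind: "offer", description: "<alice's media, candidates, fingerprint>" });
```

After the last call, log records the offer reaching Bob and then the answer reaching Alice; from that point, media flows directly between the two browsers rather than through the server.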

[1] DTLS is actually used for key derivation, while SRTP is used on the wire. So, the packets on the wire are not DTLS (except for the initial handshake).
