Chapter 4. Fake Data Gives Real Answers

What do you do when this happens?

Customer: We have sensitive data and a critical query that doesn’t run at scale. We need expert assistance. Can you help us?

Experts: We’d be happy to. Can we log into your machine?

Customer: No.

Experts: Can you show us your data?

Customer: No.

Experts: How about a stack trace?

Customer: No.

Customer: Can you help us?

At first glance, it may seem as though the customer in this scenario is being unreasonable, but they’re actually being smart and responsible. Their data and the details of their project may indeed be too sensitive to be shared with outside experts like you, and yet they do need help. What’s the solution?

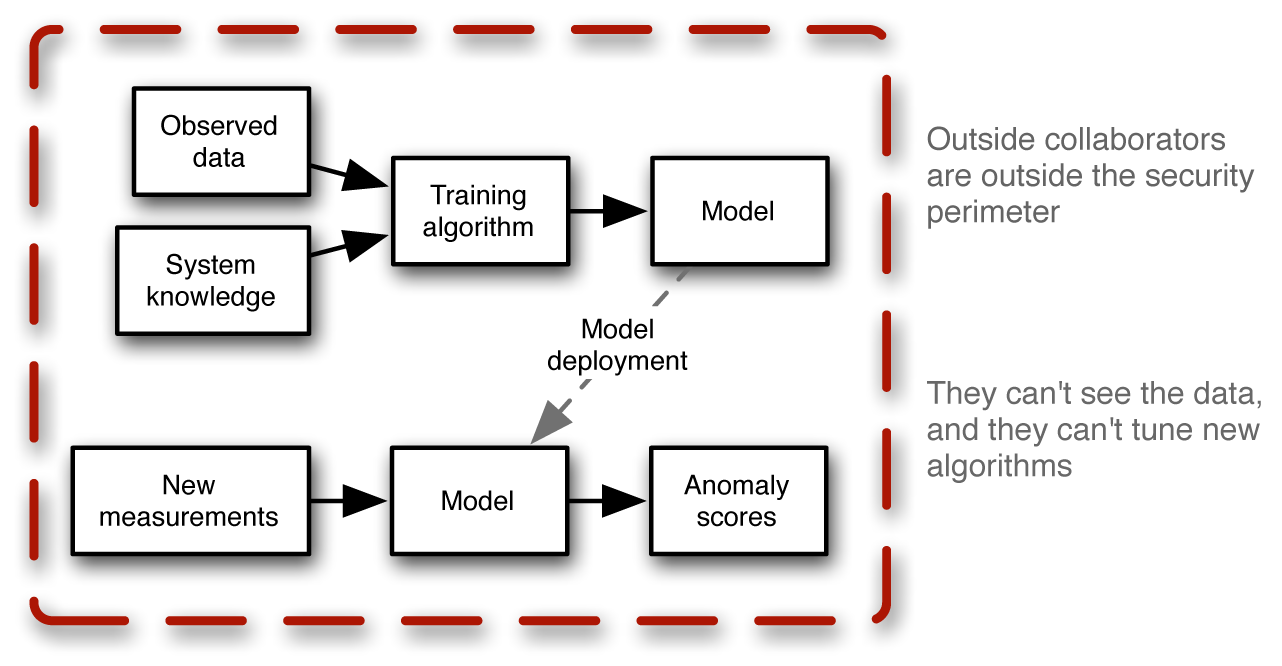

A similar problem arises when someone is trying to do secure development of a machine learning system. Machine learning requires an iterative approach that involves training a model, evaluating performance, tuning the model, and trying the process over again. It’s often not a straightforward cookbook process, but instead one that requires the data scientist to have a good understanding of the data. The data scientist must be able to interpret the initial results produced by the trained model and use this insight to tweak the knobs of the right algorithm to improve performance and better model reality. In these situations, the project can often benefit from the experience of outside collaborators, but getting this help can be challenging when there is a security perimeter protecting the system, as suggested in Figure 4-1.

Figure 4-1. The problem: secure development can be difficult. You may want to get help via collaboration with machine learning experts, but how do you do so effectively when they cannot be allowed to see sensitive data stored behind a security perimeter?

Business analysts, data scientists, and data modelers all face this type of problem. The challenge of safely getting outside help is basically the same whether the project involves sophisticated machine learning or much more basic data analytics and engineering. It’s a bit like the stories from ancient China in which a learned physician was called in to diagnose an aristocratic female patient. The doctor would enter her chamber and find that the patient was hidden behind an opaque bed curtain. Because the patient was a woman and of high status, the doctor would not have been allowed to see her, and yet he would be expected to make a diagnosis. Reports claim that in this situation the aristocratic lady might have extended one hand and wrist through the opening in the curtain and allowed the physician to take her pulse. He then had to formulate his diagnosis without ever seeing the patient directly or collecting other critical data. To a large extent, he had to guess.

Experts called in to advise a customer on how to fix a broken query or how to tune a model where secure data is involved may feel much like the physician from ancient times. The experts are charged with a task that is seemingly infeasible because of the opaque security curtain barring access to the data in question. They may not even be allowed a “wrist’s worth” of a glimpse at the data. But the good news is that they have another approach available to them that the physician did not have: the modern big data expert can make fake data that reveals what they need to know. Here’s how this approach works.

The Surprising Thing About Fake Data

Using synthetic data is not in itself a new idea—for instance, it’s a fairly standard practice among those who do machine learning to generate synthetic data to test out various algorithms or models before attempting to run them at scale or in production. It’s also common to use synthetic data for benchmarking. But synthetic data turns out to be much more valuable than is commonly thought: when used properly, it’s a powerful tool for dealing with analytics safely when the data of interest is sensitive and protected behind a security perimeter. What’s also surprising in what we’re suggesting here is the way we recommend that you generate the data and how you can tell if the synthetic data is a good match for your situation.

The idea in this situation is that instead of providing outsiders with access to sensitive, restricted data, you (or they) generate a substitute in the form of custom-built synthetic data. Intuitively, you likely would think that to do this effectively you would need to synthesize fake data that closely matches the characteristics of the real data being analyzed. That’s usually very hard to do. Here’s where the surprise comes in: exactly matching fake data to the characteristics of the real data usually turns out to be unnecessary.

Matching key performance indicators (KPIs) is better than matching data details when generating fake data.

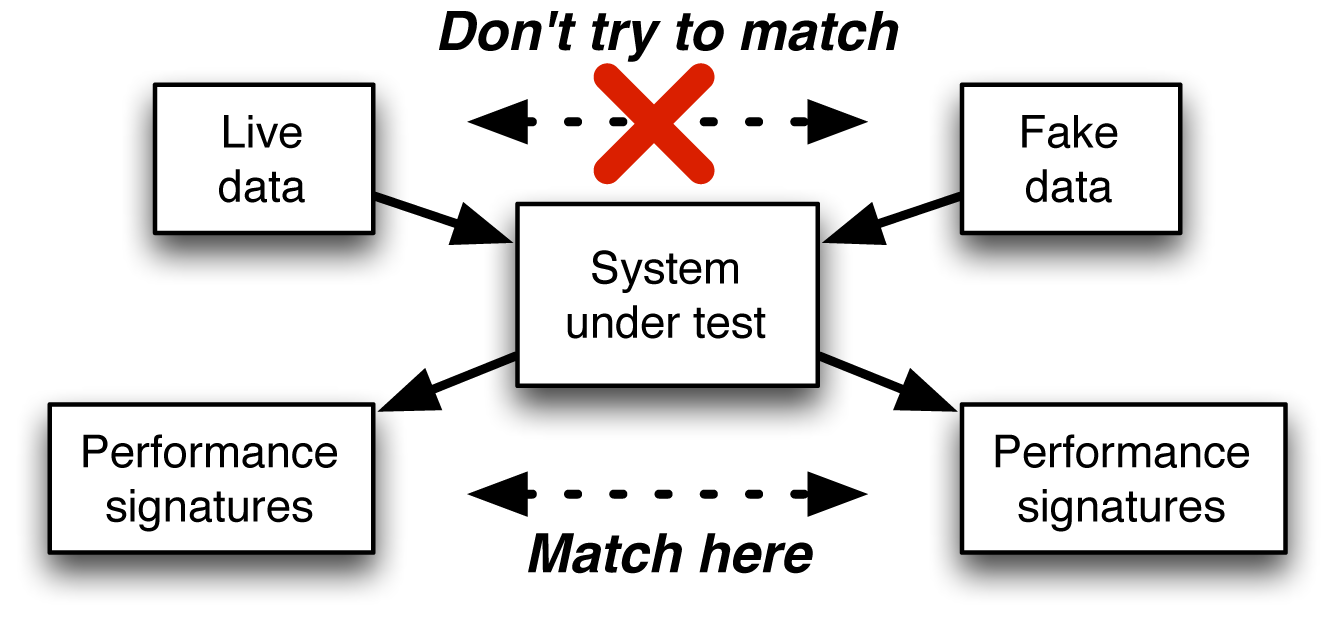

We first saw this pattern while working with a MapR customer, a very large financial company. They were seeking outside help to tune a machine learning model that was using a k-nearest neighbor algorithm. This type of machine learning model can be used for classification, in which you emulate human decisions to assign new data into pre-defined categories, or for fraud detection (perhaps using clustering). In the case of this financial customer, the data being analyzed could not be shown to an outside collaborator, so we generated synthetic data to use as a stand-in. And that led to a surprising observation: even though the synthetic data was not at all a realistic match for the characteristics of the original data, we were able to adjust the synthetic data so that the key performance indicators (KPIs) for the model were similar whether we used real or fake data, and that turned out to be all that was needed. Fake data that matched real data with regard to KPIs was good enough to be used for training and tuning the machine learning model, as illustrated in Figure 4-2.

Figure 4-2. When is the fake data you’ve generated good enough? When it behaves like real data according to the system under test. It’s not the match between specific characteristics of the real and fake data that you need to aim for. Instead, it’s the comparison of KPIs that matters.

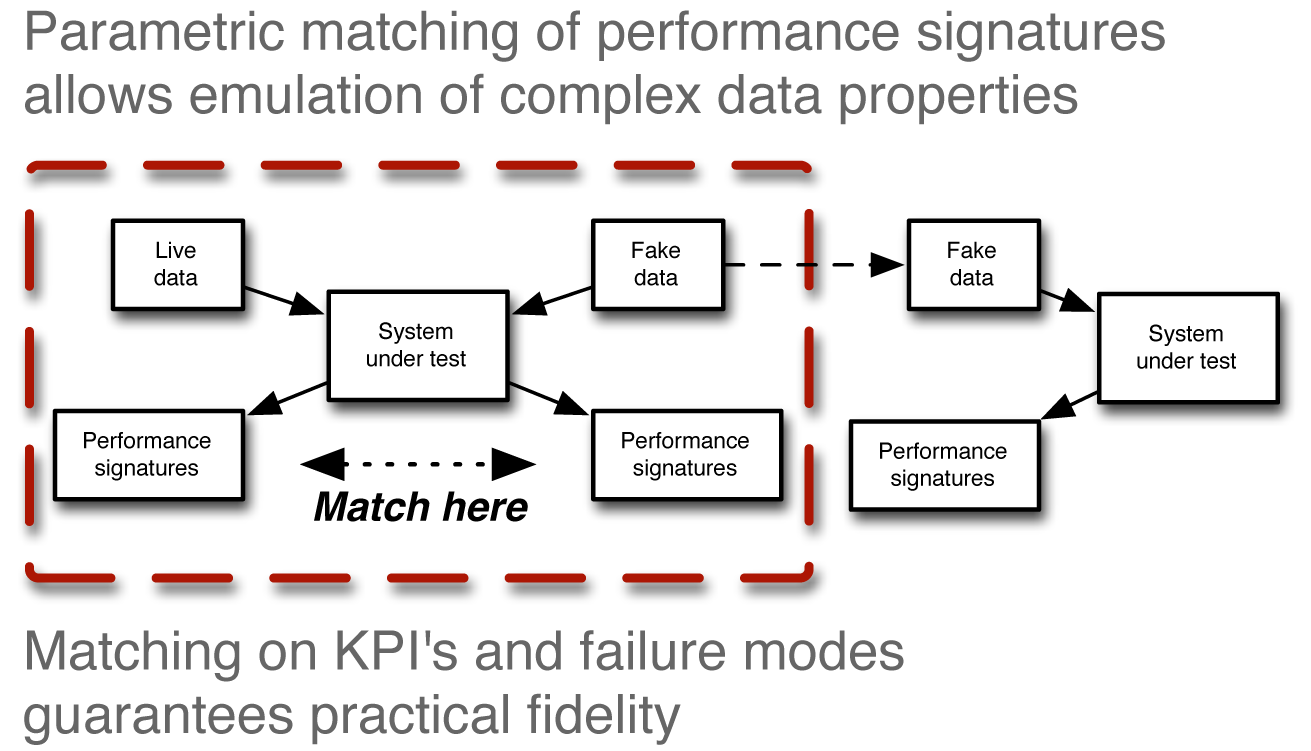

This approach of generating fake data with performance indicators similar to what the customer saw with real data made it possible for them to work with outside collaborators (that is, us) through iterative cycles of evaluation and tuning that are typical of effective machine learning. Once it was established inside the security perimeter that a particular version of synthetic data matched KPIs sufficiently well in the context of the current model training algorithms, the fake data was then used outside the security barrier to build new and improved versions of the model of interest. Those models in turn were subsequently trained and tested on the real data, within the security perimeter, in order to verify that the KPIs still matched well, as outlined in Figure 4-3. This approach allowed development of algorithms and software to proceed without outsiders having access to sensitive data but still with a very high degree of confidence that the algorithm would work the same on real data as it had on synthetic data.

Figure 4-3. When the KPIs match well enough for fake versus real data, the fake data can then be used for experimenting with the process of interest—in this case, tuning a machine-learning model.
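The acceptance test described above can be sketched in a few lines. This is a minimal illustration, not the code used in the project: the function name `kpis_match`, the KPI names, the 10% relative tolerance, and all of the numbers are assumptions made up for the example. The point it shows is that only KPI values need to cross the security perimeter, never the data.

```python
def kpis_match(real_kpis, fake_kpis, rel_tol=0.10):
    """Compare KPIs measured on real data with KPIs measured on fake data.

    Returns (ok, report). `report` maps each KPI name to its relative error,
    or to None if the fake-data run did not produce that KPI. `ok` is True
    only when every KPI is present and within `rel_tol` (an assumed threshold).
    """
    report = {}
    for name, real_value in real_kpis.items():
        if name not in fake_kpis:
            report[name] = None
            continue
        scale = max(abs(real_value), 1e-12)  # avoid dividing by zero
        report[name] = abs(fake_kpis[name] - real_value) / scale
    ok = all(err is not None and err <= rel_tol for err in report.values())
    return ok, report


# Illustrative values only. The first dict stands for KPIs measured inside the
# perimeter on real data; the second for the same training code run on a
# candidate synthetic dataset.
real = {"precision": 0.82, "recall": 0.64, "train_seconds": 310.0}
fake = {"precision": 0.80, "recall": 0.66, "train_seconds": 295.0}

ok, report = kpis_match(real, fake)
print("fake data usable as a stand-in:", ok)
for name, err in report.items():
    print(f"  {name}: relative error {err:.3f}")
```

If the check fails, the synthetic data generator is adjusted and the comparison repeated. Which KPIs to compare and how close is close enough depend on the specific model and problem.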

Keep It Simple: log-synth

The program used to generate simulated data in the preceding financial use case was fairly specialized, but this approach of using fake data as a way to work outside a security perimeter showed great promise. In order to make this method practical in a larger sense, what was needed was a convenient way to generate synthetic data for a variety of situations. For this purpose, Ted Dunning, co-author of this book, wrote a new program called log-synth and made it available as open source software. It is not a large project, so it’s just provided via GitHub.

Log-synth provides a safe way to get the benefits of data sharing: you never actually give out the real data.

As in the earlier example, the goal of using log-synth is to prepare a dataset that is sufficient to stand in for secure data you do not want to share directly with outsiders. Instead, you just provide specifications for synthesizing appropriate fake data. And as with the earlier data generator, to use log-synth effectively, you should look for a match with key performance indicators exhibited by original data and the process in question rather than a match with exact characteristics of the real data, as previously shown in Figure 4-2.

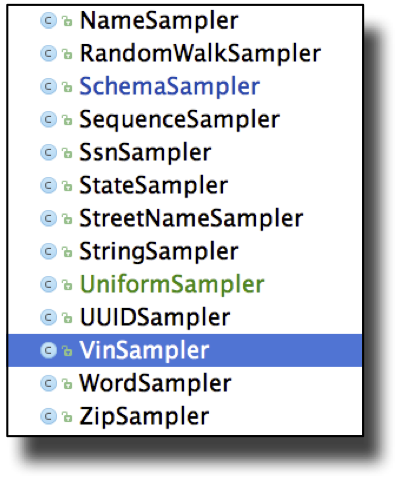

Log-synth is simple yet powerful, and it can be easily adjusted to suit your own needs. The program comes pre-packaged with the ability to simulate realistic data for a wide range of kinds of data. There are a number of different samplers that let you customize the fake data that you generate. Figure 4-4 shows some of the options.

Figure 4-4. Log-synth can be easily customized. The selected option here provides generation of realistic vehicle identification numbers (VINs). Other options include generation of realistic names of people, US-style social security numbers (SSNs), street names, and so on. For the VIN, Zip, and SSN samplers, a considerable amount of additional data is available with each sample beyond the basic number.

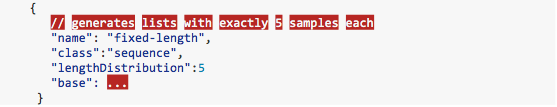

Figure 4-5 displays an excerpt of a log-synth schema as an example of how simply it can be configured. For specialized situations, log-synth is also extensible, as we show in Chapter 6.

Figure 4-5. The open source log-synth program can be easily adjusted to fit the particular situation of interest. Shown here is a partial schema that would be used to generate fake data with values that are sequences of a particular fixed length.
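To give a feel for what schema-driven generation means, here is a small stand-alone sketch in Python. It is not log-synth and does not use log-synth's schema syntax: the field kinds (`id`, `int`, `sequence`) and option names (`min`, `max`, `length`, `of`) are invented for this illustration. It only shows the general idea that a short declarative description of the fields is enough to drive a generator, including a field whose values are fixed-length sequences.

```python
import random

# Hypothetical schema: one dict per output field. The field kinds and option
# names are assumptions for this sketch, not log-synth's actual format.
SCHEMA = [
    {"name": "id", "kind": "id"},
    {"name": "age", "kind": "int", "min": 18, "max": 90},
    {"name": "codes", "kind": "sequence", "length": 3,
     "of": {"kind": "int", "min": 0, "max": 9}},
]


def sample(spec, rng, row_number):
    """Produce one value for a single field specification."""
    kind = spec["kind"]
    if kind == "id":
        return row_number  # consecutive integers starting at 0
    if kind == "int":
        return rng.randint(spec["min"], spec["max"])  # inclusive bounds
    if kind == "sequence":
        # A fixed-length list whose elements follow the nested spec.
        return [sample(spec["of"], rng, row_number)
                for _ in range(spec["length"])]
    raise ValueError(f"unknown field kind: {kind}")


def generate(schema, count, seed=0):
    """Generate `count` records; a fixed seed makes runs repeatable."""
    rng = random.Random(seed)
    return [{field["name"]: sample(field, rng, i) for field in schema}
            for i in range(count)]


rows = generate(SCHEMA, 5)
for row in rows:
    print(row)
```

Changing the shape of the fake data means editing the schema rather than the generator, which is what makes repeated adjustment toward a KPI match practical.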

Generating synthetic data can be useful even in certain types of in-house analyses. For instance, during testing, it is useful to have sample databases of different sizes, but to down-sample large relational datasets without losing meaningful connections can be difficult. Instead, synthesizing sample datasets from scratch with all necessary linkages can actually be much easier. But the main advantage of log-synth is for dealing with the safe management of data security when outsiders need to interact with sensitive data in complex ways. Log-synth makes this interaction possible even when you can’t share the real thing.
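As a rough illustration of why synthesizing linked tables from scratch can be easier than down-sampling them, consider the following sketch. The table and column names (`customers`, `orders`, `customer_id`, and so on) are hypothetical. Because each child row is created together with its parent key, referential integrity holds at any size, and the same function produces a small test database or a large one.

```python
import random


def make_dataset(n_customers, max_orders=6, seed=0):
    """Build two linked tables: every order points at an existing customer."""
    rng = random.Random(seed)
    customers = [
        {"customer_id": i, "region": rng.choice(["N", "S", "E", "W"])}
        for i in range(n_customers)
    ]
    orders = []
    for customer in customers:
        # Each customer gets between 0 and max_orders orders.
        for _ in range(rng.randint(0, max_orders)):
            orders.append({
                "order_id": len(orders),
                "customer_id": customer["customer_id"],  # the linkage
                "amount": round(rng.uniform(5, 500), 2),
            })
    return customers, orders


def orphan_orders(customers, orders):
    """Orders whose customer is missing; always empty for generated data."""
    known = {c["customer_id"] for c in customers}
    return [o for o in orders if o["customer_id"] not in known]


# The same generator yields test databases of very different sizes.
for size in (10, 1000):
    customers, orders = make_dataset(size)
    print(size, "customers,", len(orders), "orders,",
          len(orphan_orders(customers, orders)), "orphans")
```

Down-sampling real tables independently would typically leave orphaned rows of the kind `orphan_orders` detects, which is the broken-relationship problem described above.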

This approach is not just theoretical. It’s already proven useful in real-world use cases involving secure data. Before going on to learn exactly how to use log-synth in Chapters 5–7, look at the following two different ways that it has been used in the real world. The first use case is a basic approach, and the second use case employs log-synth with extensions.

Log-synth Use Case 1: Broken Large-Scale Hive Query

This first log-synth use case is a real-life version of the scenario described at the opening of this chapter. The MapR customer was a large insurance company. Naturally, they held sensitive data that could not be shared directly with outsiders. The situation involved a very long and complicated Hive query the customer needed to run against a large-scale dataset—the problem was, the query was broken.

Initially, the customer had tried to down-sample the data in order to make it easier to work on fixing the query, but in this case, down-sampling wasn’t a feasible course of action because of the number of tables and relationships in the data. They were working with complex relational data, and down-sampling messed up the relationships. At this point, the customer wanted to get outside help for dealing with the broken query, and they turned to MapR for advice. This is where log-synth came into play.

The customer explained that because of data security, they could not permit outsiders to log in to their system, nor could they provide access to real data or even stack traces that might have given clues to how and why the Hive query wasn’t running properly. Instead, we worked with them to create synthetic data that exhibited the same problematic behavior from Hive. In order to use log-synth effectively in this situation, the customer started off by providing a rough description of data size and gave us a copy of the database schema, but no sample of real data was provided.

The collaboration fell into these two stages:

- Stage 1: Prepare to collaborate: Generate appropriate fake data that could realistically emulate the behavior of the real data so that outsiders have a way to collaborate.

- Stage 2: Fix the broken query: Work outside the security perimeter using fake data in order to find the bug in the query and fix it.

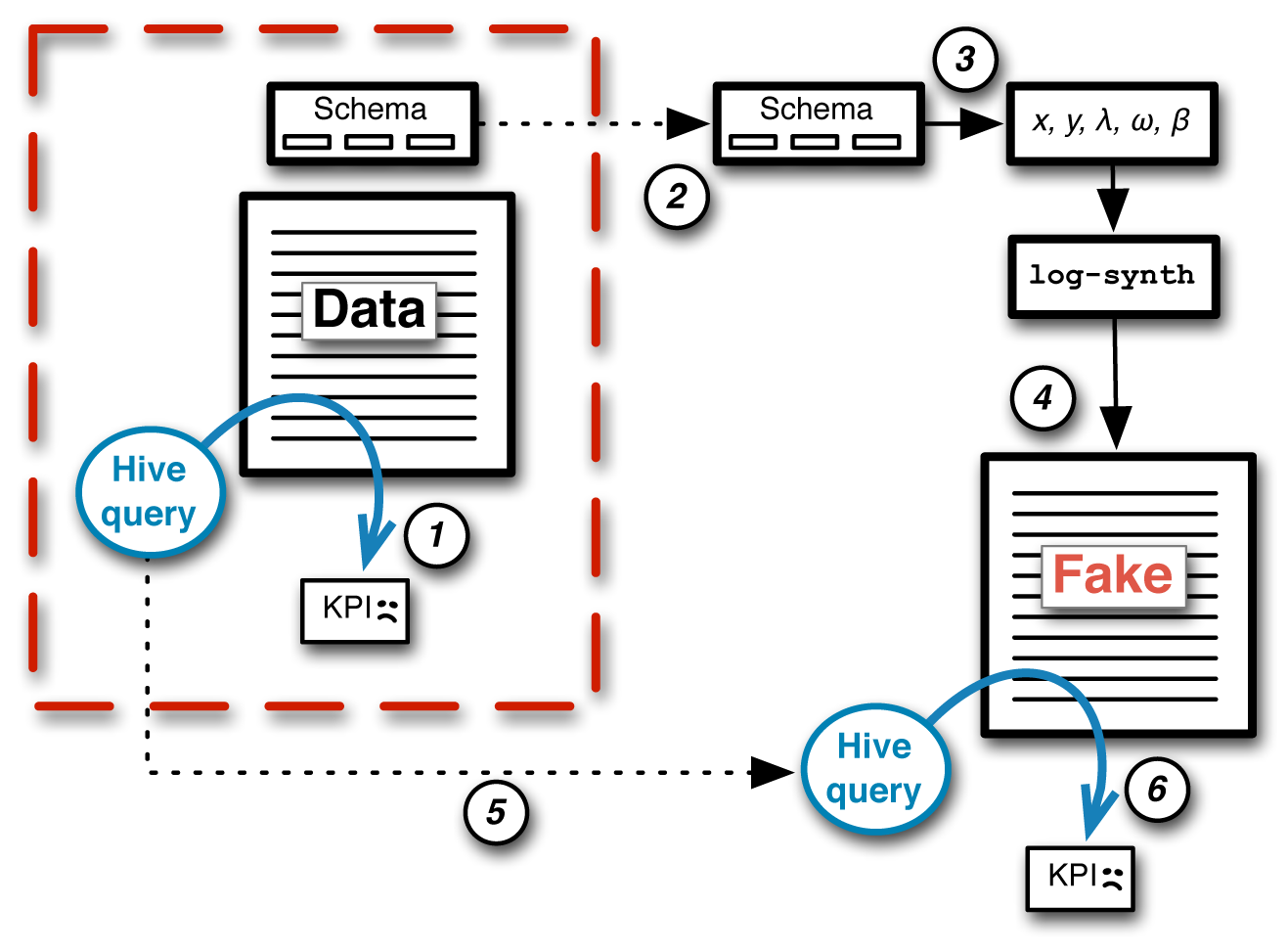

The steps in preparing to collaborate (Stage 1) are shown in Figure 4-6. The process started in Step 1 with an attempt to run the Hive query on real data in order to see the failure profile (in this case, the failure mode is the KPI). Next, in Step 2, the customer transferred the schema for this table outside the security perimeter to be available to us, the outside collaborators from MapR. In Step 3, we used this real schema information to design the log-synth-specific schema that was needed in order to synthesize an appropriate fake dataset. Step 4 used log-synth to generate a fake dataset.

Now we had synthetic material on which to work, but would this fake data provide an appropriate input for working on the query? Before starting that phase of the work, the usefulness of the fake data had to be verified. To do this, the customer provided us with the broken Hive query, as shown in Step 5 of the diagram in Figure 4-6. When the original Hive query was run against the newly generated fake data (Step 6), the result was the same failure signature as with the real data. In other words, we had found a match between the KPIs for the fake data and the real data. Put informally, if it breaks the same, it’s as good as the same.

Figure 4-6. A step-wise look at Stage 1 of collaboration to fix a complex Hive query for secured data. The goal was to set up a way for collaborators to work outside the security perimeter. To make this possible, the customer provided a portion of their schema to inform the process of generating fake data using log-synth. Because the original Hive query broke the same way on the fake data as it did with real data, the fake data could be used instead of the real data.

Keep in mind that the fake data didn’t match the real data in any way except that it had the same data types, sizes, and—most importantly—the same failure modes.
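The verification step in Stage 1, in which the failure mode serves as the KPI, can be sketched as follows. Everything here is a toy stand-in: `toy_query` is a made-up function with a made-up bug, not the customer's Hive query, and describing a failure by its exception type alone is an assumption chosen to keep the example small. The sketch only shows the shape of the check: run the same query on the fake data and compare how it fails with how the customer reported it failing on real data.

```python
def failure_profile(run_query, dataset):
    """Run a query and describe how it ended, without exposing data values."""
    try:
        run_query(dataset)
    except Exception as exc:
        return {"outcome": "failed", "error_type": type(exc).__name__}
    return {"outcome": "succeeded", "error_type": None}


def breaks_the_same(real_profile, fake_profile):
    """Fake data is a usable stand-in when the failure profiles agree."""
    return real_profile == fake_profile


def toy_query(rows):
    """Made-up query with a made-up bug: it averages over positive amounts
    and divides by zero when a group has none."""
    groups = {}
    for row in rows:
        groups.setdefault(row["group"], []).append(row["amount"])
    return {g: sum(v) / len([x for x in v if x > 0])
            for g, v in groups.items()}


# Profile as it might be reported from inside the perimeter (illustrative).
real_profile = {"outcome": "failed", "error_type": "ZeroDivisionError"}

# Two candidate synthetic datasets; only the second triggers the bug.
candidate_1 = [{"group": "a", "amount": 10.0}]
candidate_2 = [{"group": "a", "amount": 10.0}, {"group": "b", "amount": 0.0}]

for rows in (candidate_1, candidate_2):
    fake_profile = failure_profile(toy_query, rows)
    print(fake_profile, "match:", breaks_the_same(real_profile, fake_profile))
```

A candidate dataset that runs cleanly, like the first one here, is not yet a useful stand-in, and the generator would be adjusted until the failure is reproduced.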

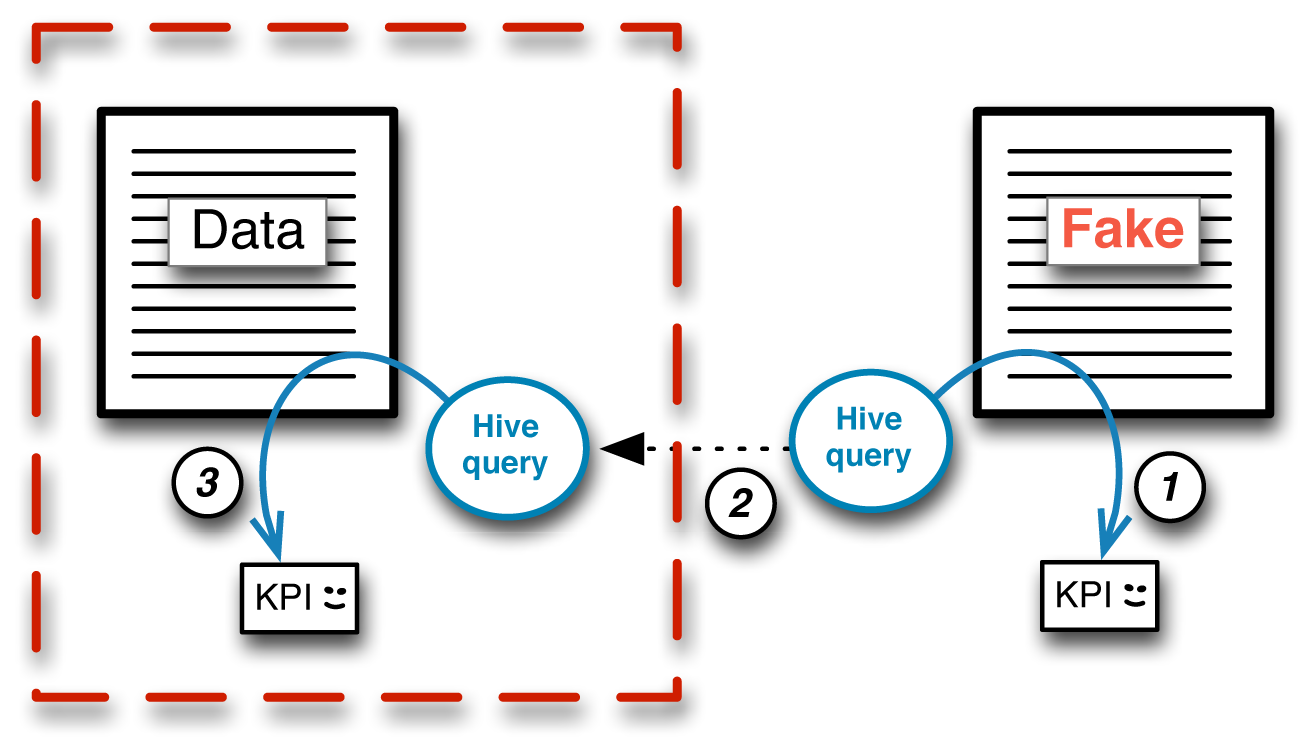

Stage 2 of the collaboration process involved having us (the outside collaborators) do the job the customer had brought us in to do. We were able to look at stack traces we had generated to track down the problem, which turned out to be a bug in Hive. We modified Hive and tested against the fake dataset to see that the query ran to completion on the fake data. The process up to this point is depicted as Step 1 of the diagram presented in Figure 4-7. Once the problem with Hive was fixed, the patched version of Hive was pulled back inside the security perimeter and tested on the real data (Steps 2 and 3). That test succeeded, so we knew we had a real fix.

Figure 4-7. Stage 2 of the collaboration between an insurance company and outside collaborators (MapR) to fix a bug in Hive found using a large-scale secure dataset. Because appropriate fake data was generated in Stage 1 of the process (Figure 4-6), in Stage 2 the outside experts could work outside the security perimeter to repair the bug in Hive so that the query could run properly on the secured real data back inside the perimeter.

The final result was that despite the need for the insurance company to keep their data unobserved behind the security perimeter, they were able to safely take advantage of outside expertise in order to find and fix the problem. Thanks to log-synth, they essentially shared access to the essence of what mattered in the data without outsiders ever having to actually see the real data.

This real-world use case demonstrates the usefulness of the open source log-synth program for safely carrying out large-scale data analytics in a secure environment. The same approach can be used in a wide variety of situations, especially because log-synth can be conveniently adjusted to produce customized fake data that behaves realistically relative to the secure system of interest. Chapter 5 provides a detailed technical description of how this particular use case was implemented, and Chapter 7 gives tips for putting log-synth to work in your own situation.

In addition to these basic ways to use log-synth, you also can extend it for use in secure environments on more complex problems. That was what happened in our next fake data use case.

Log-synth Use Case 2: Fraud Detection Model for Common Point of Compromise

Log-synth is written in Java, and it’s relatively easy for someone who is comfortable with Java to extend log-synth to work well in a wider scope of use cases. One real-world example of that type of extension was the use of log-synth to help a large financial institution improve their fraud detection—in particular, to identify a merchant that was a common point of compromise. Before we look at what happened with this use case, here’s some background on the type of fraud in question.

What Thieves Do

Thieves have developed a clever method for the particular type of fraud that is the focus of this use case. They steal financial account numbers such as credit or debit card numbers by breaching a merchant’s data security. They might do this by skimming credit card numbers using false readers, by a point of sale virus installed on cash registers, or through a variety of other techniques. The issue is, at some point in time, the bad guys get access to financial account information for many consumers and then they go to work making fraudulent purchases.

That’s where the thieves do something really clever. In the old style of financial card fraud, the thief would steal a card number and then quickly make really big purchases before the account breach was reported and the card was shut down. In the new style of card fraud, thieves steal account information, often from many consumers at one time, and then make a huge number of small fraudulent purchases. Even if each fraudulent transaction is for only a small amount, perhaps the equivalent of $10–$25 in US currency, the total amount stolen adds up to millions of dollars when it’s happening to thousands or even millions of accounts. The clever thing is that because each transaction is relatively small, consumers are much less likely to notice or report it, so it’s much harder to track down the point of compromise.

Why Machine Learning Experts Were Consulted

In this log-synth use case, the MapR customer asked for help improving their fraud detection model because they wanted to be able to identify more fraudulent transactions and to react faster than previously. This would help them identify the source of the breach and close down compromised accounts faster, thereby limiting losses. As with the previous example, the customer had sensitive data and could not provide access to the MapR experts (us again) they wanted to consult. Log-synth to the rescue.

The financial customer had large-scale behavioral data on merchant transactions for individual consumers. For this problem, we transformed the data the customer had into a timeline of purchases for each consumer. Each timeline showed every merchant with whom a particular consumer conducted a business transaction, as well as the time of the transaction. The latter information is important to be able to identify which merchants were visited before a reported fraudulent purchase. The model was built to look for one or more merchants who appeared in a transaction more often than expected anywhere upstream from known fraud.

There are several reasons why this case is a hard problem to solve. One reason is that many frauds are small and therefore often go unreported. Another difficulty is that there are other sources of fraud unrelated to the compromise that cause a steady background of fraud reports. In other words, there is a lot of background noise that must be dealt with.
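The idea of looking upstream from known fraud can be made concrete with a small sketch. This is not the model built for the customer, and the score below is not the breach score shown in the figures of this chapter. It is a simple lift ratio chosen for illustration, and the consumers, merchants, and times are made up. For each merchant, it compares how often the merchant appears in a fraud victim's history before the fraud with how often it appears in consumer histories overall.

```python
from collections import Counter


def upstream_lift(timelines, fraud_times):
    """Score merchants by how over-represented they are upstream of fraud.

    timelines:   {consumer: [(time, merchant), ...]}
    fraud_times: {consumer: time of first reported fraud}
    Returns {merchant: lift}; a lift well above 1 means the merchant shows
    up before fraud more often than its overall popularity would suggest.
    """
    upstream = Counter()  # victims who visited the merchant before the fraud
    overall = Counter()   # all consumers who visited the merchant at any time
    n_fraud = 0
    for consumer, events in timelines.items():
        overall.update({merchant for _, merchant in events})
        if consumer in fraud_times:
            n_fraud += 1
            cutoff = fraud_times[consumer]
            upstream.update({m for t, m in events if t < cutoff})
    if n_fraud == 0:
        return {}
    n_all = len(timelines)
    return {m: (upstream[m] / n_fraud) / (overall[m] / n_all)
            for m in overall}


# Tiny made-up example: six consumers, three of whom reported fraud.
timelines = {
    "c1": [(1, "cafe"), (2, "grocer"), (5, "gas")],
    "c2": [(1, "cafe"), (3, "books")],
    "c3": [(2, "grocer"), (4, "gas")],
    "c4": [(1, "cafe"), (2, "gas")],
    "c5": [(1, "grocer"), (3, "books")],
    "c6": [(2, "gas")],
}
fraud_times = {"c1": 4, "c2": 4, "c4": 3}

scores = upstream_lift(timelines, fraud_times)
top = max(scores, key=scores.get)
print("most suspicious merchant:", top)
```

With real data, background fraud and unreported fraud blur this picture considerably, which is why the noise issues described above matter and why a more robust statistic than a raw ratio is likely to be needed.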

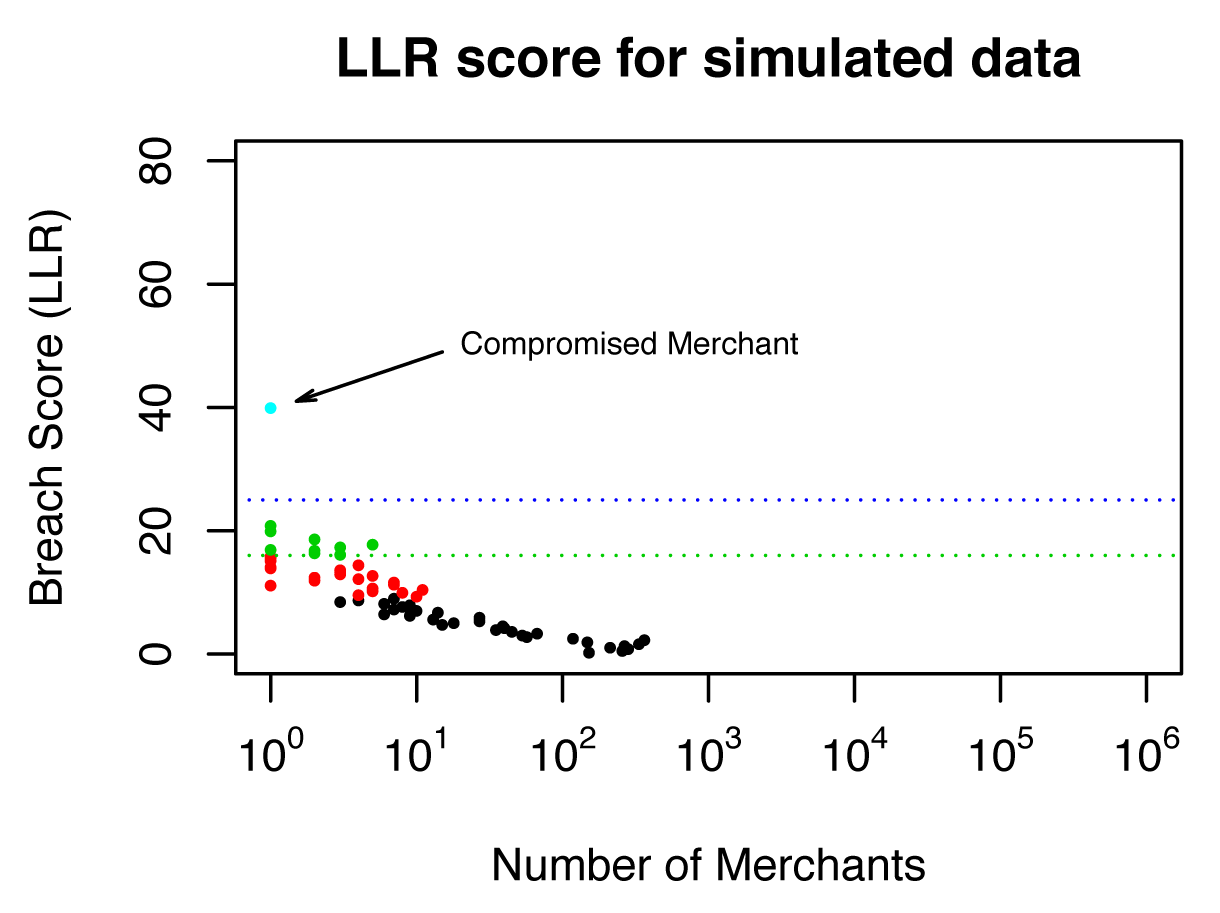

Using log-synth to Generate Fake User Histories

Back to the real-world use case. We wrote extensions to log-synth to enable it to generate fake user histories with made-up merchants so we could conduct common point of compromise simulations. The details of how this was done are discussed in Chapter 6. The results of the simulation were dramatic, as shown in Figure 4-8. Working with the fake data, the model identified a merchant with an exceptionally high breach score, which you can see marked in the figure. As it turned out, this merchant was exactly the one that was “compromised” in the simulations.

Figure 4-8. Fake data was generated using the log-synth program to match the performance signatures of the real data to find potential fraud in a merchant compromise scenario. Merchants were grouped by score. The group average score was graphed against the number of merchants in each group, and an extreme outlier popped out of the simulated data.
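A toy version of such a simulation is sketched below. It is not the log-synth extension, which is covered in Chapter 6. All parameters (number of consumers and merchants, the compromised merchant's ID, the exploit and background fraud probabilities) are assumptions picked for the example. Consumers visit made-up merchants at random times, one merchant is secretly compromised, and some unrelated background fraud is mixed in as noise.

```python
import random
from collections import Counter


def simulate(n_consumers=2000, n_merchants=50, visits=20, compromised=7,
             p_exploit=0.5, p_background=0.01, seed=1):
    """Generate fake purchase histories with one compromised merchant."""
    rng = random.Random(seed)
    timelines, fraud_times = {}, {}
    for consumer in range(n_consumers):
        # Each event is (time, merchant); merchants are just integer IDs.
        events = sorted((rng.uniform(0, 100), rng.randrange(n_merchants))
                        for _ in range(visits))
        timelines[consumer] = events
        hits = [t for t, m in events if m == compromised]
        if hits and rng.random() < p_exploit:
            # Stolen card is abused some time after the first visit.
            fraud_times[consumer] = hits[0] + rng.uniform(1, 20)
        elif rng.random() < p_background:
            # Unrelated fraud at a random time: the background noise.
            fraud_times[consumer] = rng.uniform(0, 100)
    return timelines, fraud_times


def upstream_counts(timelines, fraud_times):
    """Count, per merchant, the fraud victims who visited it before the fraud."""
    counts = Counter()
    for consumer, cutoff in fraud_times.items():
        counts.update({m for t, m in timelines[consumer] if t < cutoff})
    return counts


timelines, fraud_times = simulate()
counts = upstream_counts(timelines, fraud_times)
top, top_count = counts.most_common(1)[0]
print("fraud reports:", len(fraud_times))
print("merchant most often upstream of fraud:", top, "with", top_count, "victims")
```

Raw counts are enough to expose the compromised merchant here only because every merchant in this toy simulation is equally popular. With realistic merchant popularity, a normalized score would be needed to avoid simply flagging the busiest merchants.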

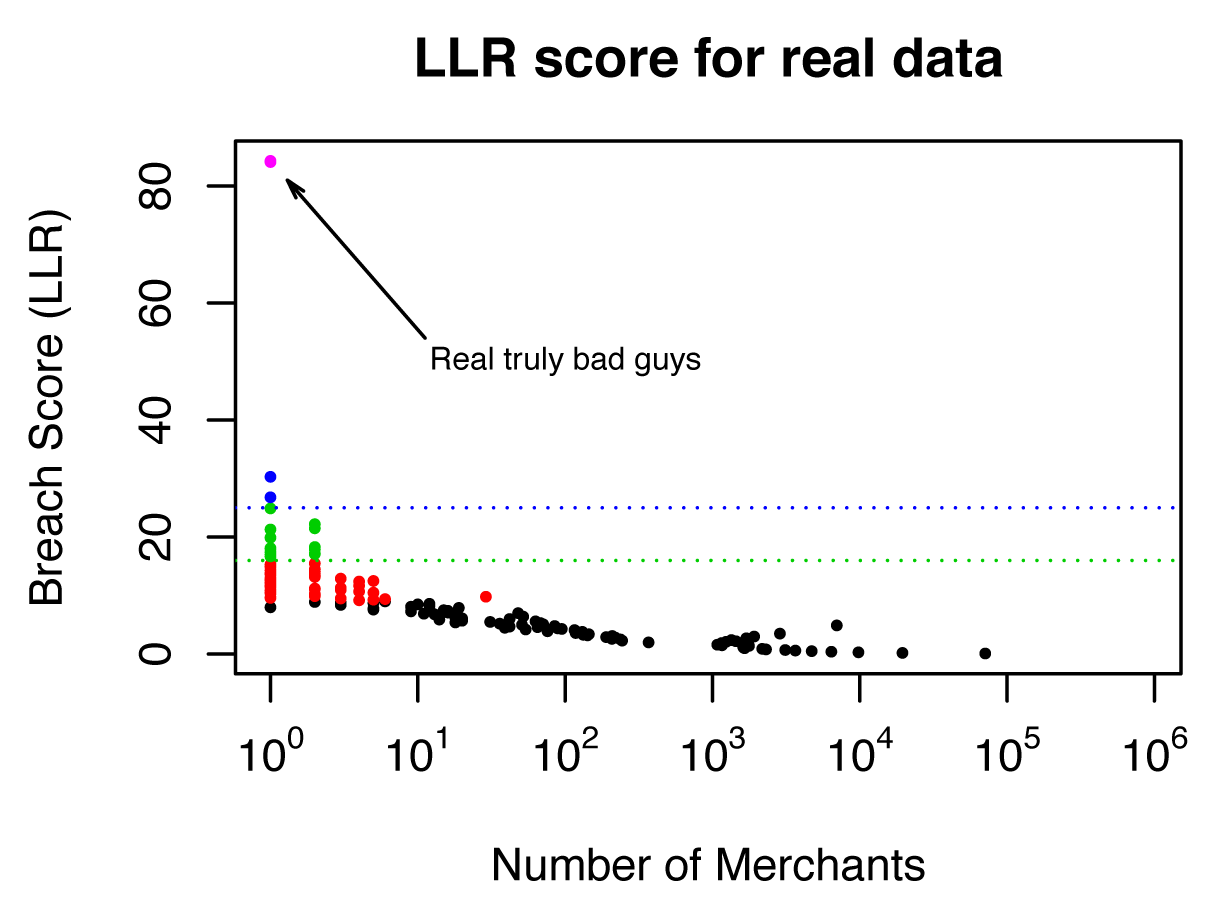

The use of fake data generated by log-synth let us build and tune a model appropriate for the customer’s goals without the customer ever having to show us any of the real data. Once the model’s potential had been demonstrated, the customer used it on data inside their security perimeter. The real results were even more dramatic: the model identified a real merchant with an exceptionally high breach score, one over 80, as you can see in the graphical results in Figure 4-9.

Figure 4-9. Once the model was shown to work with appropriately synthesized fake data, the analysis was conducted on real data inside the security perimeter. The results for this real-world use case are even more dramatic than with the simulated data: notice the single merchant that showed up with a breach score over 80. This merchant turned out to be a serious point of compromise.

This story is not a hypothetical exercise; it’s a real-world use case. Seeing the high breach score for the merchant in question, the financial institution notified the US Secret Service, and an investigation took place. The merchant, a restaurant, was in fact seriously compromised and was the source of considerable amounts of fraud. In an odd twist of fate, the restaurant happened to be close to the residence of an executive of the financial company conducting the fraud detection. Needless to say, the results of this project caught his attention!

Summary: Fake Data and log-synth to Safely Work with Secure Data

The methods described in this chapter give you a simple but powerful way to safely work with outsiders even when you have highly sensitive data that is kept under a security barrier. You can get the benefit of their help in a way that is specific to your project without ever having to show them your data. The key is to generate fake data that produces realistic results with the process or system in question.

The most surprising thing about this approach is that the fake data doesn’t need to match the characteristics of real data exactly. That data-to-data feature matching is very hard to build into synthetic data, so it’s good news that it’s generally not needed. Instead, you just have to match KPIs between fake and real data when used as input for the process of interest. Note that these KPIs are relative to the specific problem and models you are working with.

It’s also good news that you don’t need a highly sophisticated, fancy version of data generation to do this. We provide links to code for a simple but powerful open source data synthesizer called log-synth that was developed by one of the authors. This program is available to you via GitHub.

You can easily adjust the data you generate to fit your particular situation using convenient samplers that are pre-packaged with the base version of log-synth. In addition, log-synth can be used in an even wider range of settings by writing Java-based extensions to the original code. One such extension, a common point of compromise simulator, is already available with log-synth on GitHub.

One of the safest ways to share sensitive data publicly or with outside consultants is to provide a way for them to deal with the data without ever actually seeing it. That’s what the use of custom-generated fake data and log-synth lets you do.

Detailed explanations of the two real-world use cases based on log-synth that were mentioned here are provided in Chapters 5–7, as are implementation details for using log-synth in general.
