CHAPTER ONE

Goals, Issues, and Processes in Capacity Planning

This chapter is designed to help you assemble and use the wealth of tools and techniques presented in the following chapters. If you do not grasp the concepts introduced in this chapter, reading the remainder of this book will be like setting out on the open ocean without knowing how to use a compass, sextant, or GPS device—you can go around in circles forever.

Background

The first edition of this book was written when the use of public clouds was about to take off. Today, public clouds such as Amazon Web Services (AWS) and Microsoft Azure are businesses that generate more than $10 billion. In April 2016, IDC forecast that spending on IT infrastructure for cloud environments would grow to $57.8 billion in 2020. Further, the landscape of development and operations has undergone a sea change, as exemplified by the transition from monolithic applications to Service-Oriented Architectures (SOAs) to containerization (e.g., Docker, CoreOS, Rocket, and Open Container Initiative) of applications. Likewise, another paradigm that is gaining momentum is serverless architectures. As per Martin Fowler:

Serverless architectures refer to applications that significantly depend on third-party services (known as Backend as a Service or “BaaS”) or on custom code that’s run in ephemeral containers (Function as a Service or “FaaS”), the best-known vendor host of which currently is AWS Lambda. By using these ideas, and by moving much behavior to the frontend, such architectures remove the need for the traditional “always on” server system sitting behind an application. Depending on the circumstances, such systems can significantly reduce operational cost and complexity at a cost of vendor dependencies and (at the moment) immaturity of supporting services.

Further, the explosion of mobile traffic and the virality of social media since the first edition have also had ramifications with respect to capacity planning; as per Cisco, mobile data traffic will grow at a compound annual growth rate (CAGR) of 53 percent between 2015 and 2020, reaching 30.6 exabytes (EB) per month by 2020. The global footprint of today’s datacenters and public clouds, as exemplified by companies such as Amazon, Google, Microsoft, and Facebook, also has direct implications for capacity planning. Having said that, the concepts presented in the first edition of this book (for example, but not limited to, hardware selection, monitoring, forecasting, and deployment) are still applicable, not only in the context of datacenters but also in the cloud.

Preliminaries

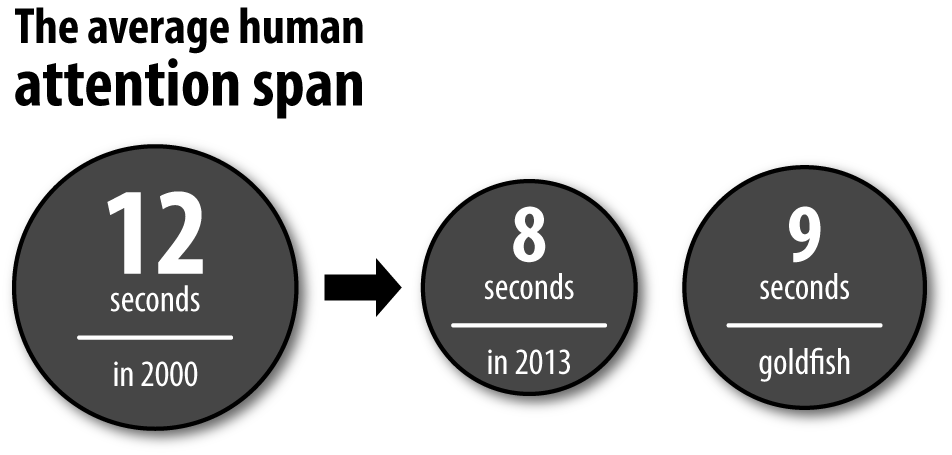

When we break them down, capacity planning and management—the steps taken to organize the resources a site needs to run properly—are, in fact, simple processes. You begin by asking the question: what performance and availability does my organization need from the website? The answer to the former is not static; in other words, the expected performance is ever increasing owing to the progressively decreasing attention span of the end user, as illustrated in Figure 1-1 (several studies have been carried out that underscore the impact of website performance on business; see “Resources”). Having said that, you should assess the performance expected by the end user in the short term going forward. Note that meeting the expected performance is a function of, say, the type (smartphones, smart watches, tablets, etc.) of next-generation devices and the availability and growth in volume of digital media and information. The expected availability also plays a key role in the capacity planning process. We can ascribe this, in part, to aspects such as Disaster Recovery (DR), failover, and so on.

Figure 1-1. Decreasing human attention span

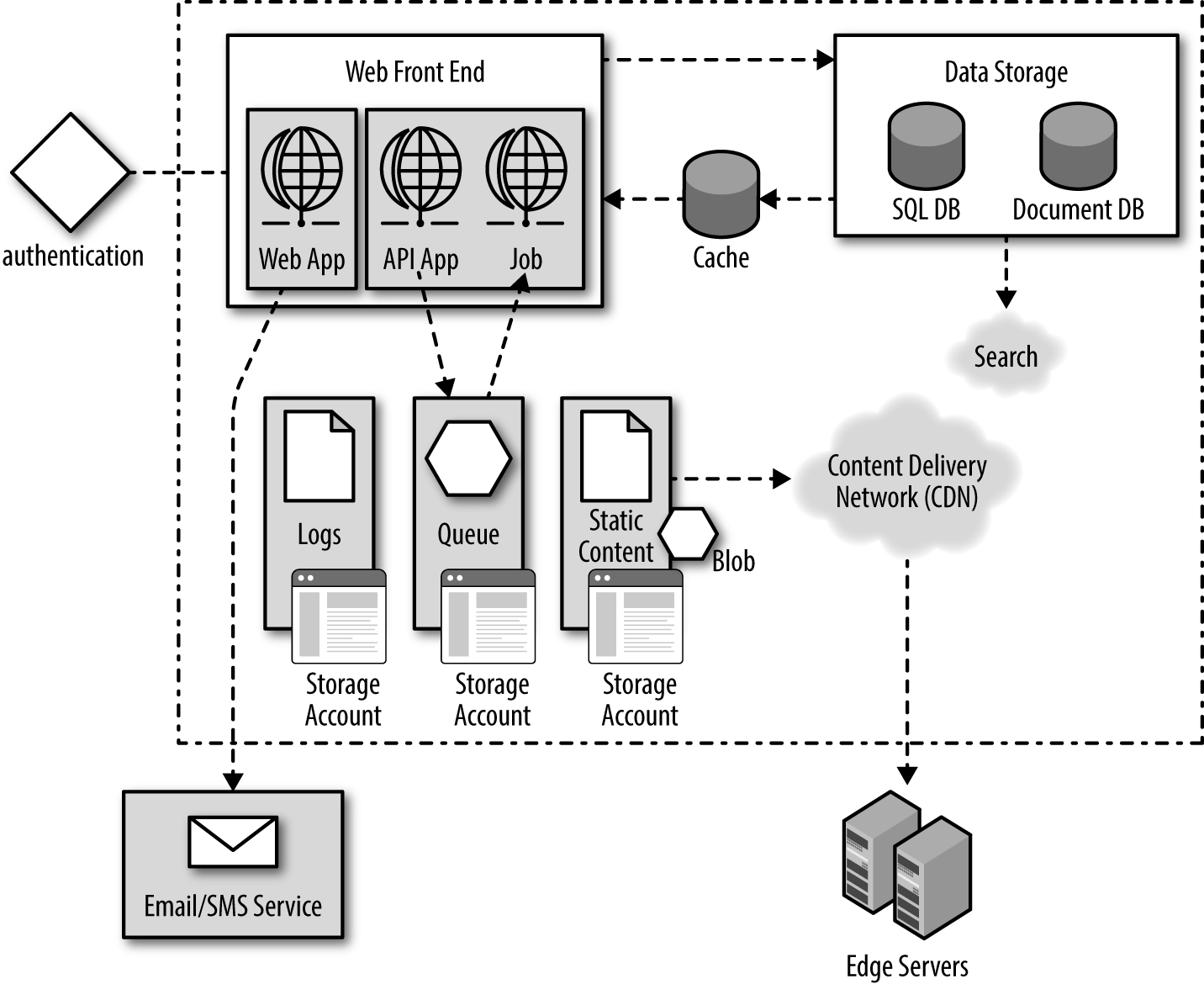

Figure 1-2 illustrates a typical modern web app, which could be a website, one or more RESTful web APIs, or a job running in the background. A web API can be consumed by browser clients, by native client applications, or by server-side applications. As the figure shows, there are many components on the backend, such as a cache, persistent data storage, a queue, a search service, and an authentication service. Providing high availability and performance calls for, amongst other things, systematic and robust capacity planning for each service/microservice on the backend.

Figure 1-2. Architecture of a typical modern web app

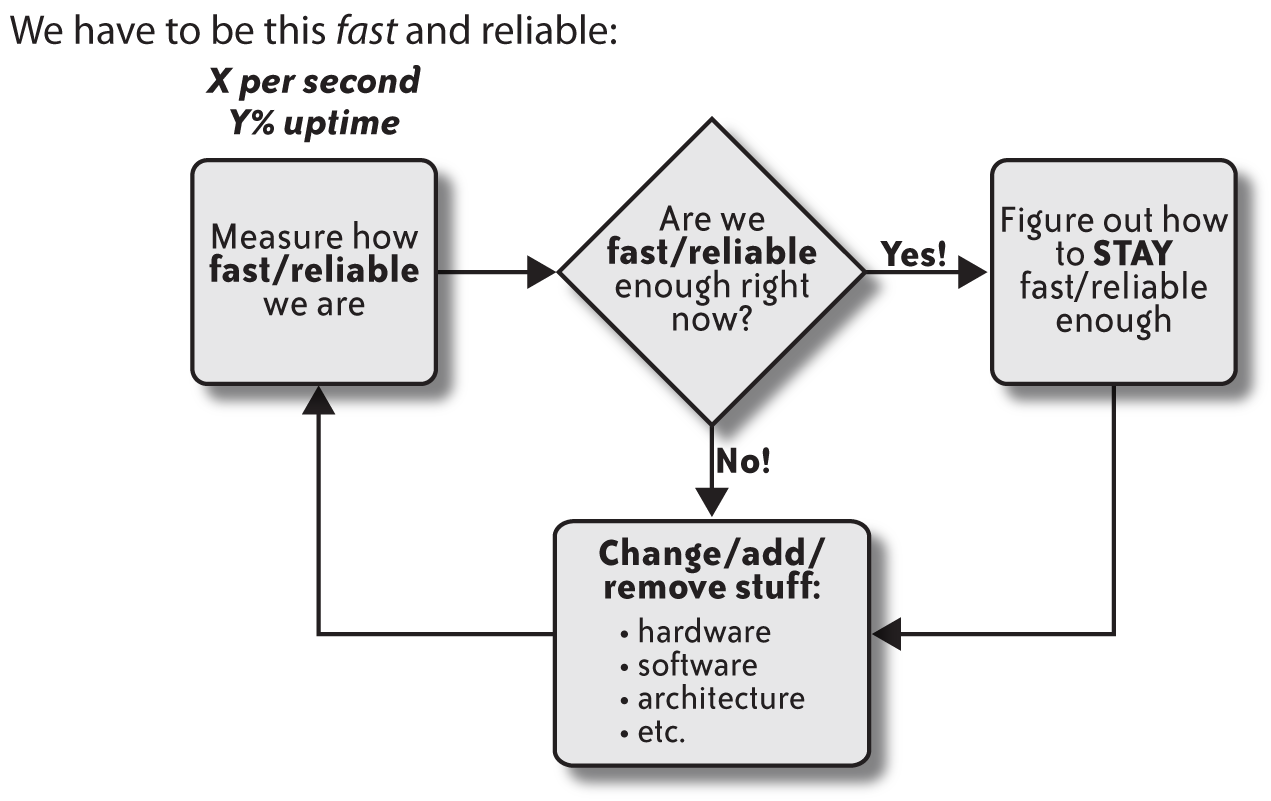

First, define the application’s overall load and capacity requirements using specific metrics, such as response times, consumable capacity, and peak-driven processing. Peak-driven processing is the workload experienced by an application’s resources (web servers, databases, etc.) during peak usage. For instance, peak usage is exemplified by the spike in the number of tweets/minute during the Super Bowl or by the launch of a new season of House of Cards on Netflix. The process for determining how much capacity is needed, illustrated in Figure 1-3, involves answering the following questions:

- How well is the current infrastructure working?

  Measure the characteristics of the workload for each piece of the architecture that makes up an application (web server, database server, network, and so on), be it in the datacenter or in the cloud, and compare them to what you came up with for the aforementioned performance requirements.

- What do you need in the future to maintain acceptable performance?

  Predict the future based on what you know about growth in traffic, past system performance (note that system performance does not scale linearly with increasing traffic), and the performance expected by the end user. Then, marry that prediction with what you can afford as well as a realistic timeline. Determine what you would need and when you would need it.

- How can you install and manage the resources after procurement?

  Deploy this new capacity with industry-proven tools and techniques.

- Rinse, repeat.

  Iterate and calibrate the capacity plan over time.

Figure 1-3. The process for determining how much capacity is needed
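As a rough illustration of the prediction step, the following sketch estimates how many months remain before peak traffic reaches a measured capacity ceiling. It assumes steady compound growth, which real traffic rarely follows so neatly; the function name and the sample numbers are hypothetical and chosen only for illustration.

```python
import math


def months_until_ceiling(current_peak, ceiling, monthly_growth):
    """Months until peak load reaches the ceiling under compound growth.

    current_peak and ceiling share a unit (for example, requests per second).
    monthly_growth is a fraction, so 0.10 means 10 percent growth per month.
    """
    if current_peak <= 0 or ceiling <= 0:
        raise ValueError("loads must be positive")
    if current_peak >= ceiling:
        return 0.0  # already at or past the ceiling
    if monthly_growth <= 0:
        return math.inf  # flat or shrinking traffic never reaches the ceiling
    # Solve current_peak * (1 + g) ** n == ceiling for n.
    return math.log(ceiling / current_peak) / math.log(1 + monthly_growth)


if __name__ == "__main__":
    # Hypothetical inputs: 4,000 requests/s today, an 8,000 requests/s
    # ceiling, and 10 percent growth per month.
    print(round(months_until_ceiling(4000, 8000, 0.10), 1))
```

The result is only as good as the growth assumption, which is one reason the plan needs to be iterated and recalibrated over time.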

The ultimate goal lies between not buying enough hardware and wasting money on too much hardware. As functionalities are moved from hardware to software, as exemplified by Software-Defined Networking (SDN), you should include such software components in the capacity-planning process.

Let’s suppose that you are a supermarket manager. One of your tasks is to manage the schedule of cashiers. A challenge in this regard is picking the appropriate number of cashiers that should be working at any moment. Assign too few, and the checkout lines will become long and the customers irate. Schedule too many at once, and you end up spending more money than necessary. The trick is finding the precise balance.

Now, think of the cashiers as servers, and the customers as client browsers. Be aware some cashiers might be better than others, and each day might bring a different number of customers. Then, you need to take into consideration that the supermarket is getting more and more popular. A seasoned supermarket manager intuitively knows these variables exist and attempts to strike a good balance between not frustrating the customers and not paying too many cashiers.

Welcome to the supermarket of web operations.
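For readers who want to put numbers on the cashier trade-off, here is a minimal sketch that uses the textbook Erlang C formula for a queue with several identical servers. This is our own illustration, not a method prescribed by this chapter: it assumes customers arrive at random (Poisson arrivals) and that checkout times are exponentially distributed, and the rates used are hypothetical.

```python
import math


def erlang_c_wait(arrival_rate, service_rate, cashiers):
    """Return (probability a customer waits, mean wait in queue) for M/M/c.

    arrival_rate: customers arriving per minute.
    service_rate: customers one cashier can serve per minute.
    """
    offered = arrival_rate / service_rate      # offered load in Erlangs
    utilization = offered / cashiers
    if utilization >= 1:
        return 1.0, math.inf                   # the line grows without bound
    # Erlang C: probability that an arriving customer has to queue.
    tail = offered ** cashiers / math.factorial(cashiers) / (1 - utilization)
    head = sum(offered ** k / math.factorial(k) for k in range(cashiers))
    p_wait = tail / (head + tail)
    mean_wait = p_wait / (cashiers * service_rate - arrival_rate)
    return p_wait, mean_wait


if __name__ == "__main__":
    # Hypothetical store: 2 customers arrive per minute and each cashier
    # serves 0.5 customers per minute, so at least 5 cashiers are needed.
    for cashiers in range(5, 9):
        p_wait, mean_wait = erlang_c_wait(2.0, 0.5, cashiers)
        print(cashiers, round(p_wait, 3), round(mean_wait, 2))
```

Each additional cashier shortens the wait by less than the previous one did, which is the balance the manager is trying to strike against payroll.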

Quick and Dirty Math

The ideas we’ve just presented are hardly new, innovative, or complex. Engineering disciplines have always employed back-of-the-envelope calculations; the field of web operations is no different. In this regard, a report1 from McKinsey reads as follows:

But just because information may be incomplete, based on conjecture, or notably biased does not mean that it should be treated as “garbage.” Soft information does have value. Sometimes, it may even be essential, especially when people try to “connect the dots” between more exact inputs or make a best guess for the emerging future.

Because we’re looking to make judgments and predictions on a quickly changing landscape, approximations will be necessary, and it’s important to realize what that means in terms of limitations in the process. Being aware of when detail is needed and when it’s not is crucial to forecasting budgets and cost models. Unnecessary detail can potentially delay the capacity planning process, thereby risking the website/mobile apps’ performance. Lacking the proper detail can be fatal—both from a capital expenditure (capex) and end-user experience perspective.

When operating in the cloud, you are particularly susceptible to lack of details owing to the ease of horizontal scaling and autoscaling (in Chapter 6, we detail why it is not trivial to set up autoscaling policies). Selection of “heavyweight” instance types and over-provisioning (a result of poor due diligence) are two common reasons for a fast burn rate. The article “The Three Infrastructure Mistakes Your Company Must Not Make” highlighted that using a public cloud service becomes very pricey at scale. In contrast to Netflix, many other big companies (such as, but not limited to, Dropbox) that had their infrastructure on a public cloud from the beginning have migrated to their own datacenter(s). The author of the article provides guidance on when to move from a public cloud to running your own infrastructure.

Even in the context of datacenters, errors made in capacity planning due to lack of detail can have severe implications. This, in part, stems from the long supply-chain cycles and the overhead associated with provisioning and configuration. In contrast, the elasticity of the cloud, independent of cost concerns, greatly reduces the reliability risks of under-provisioning for peak workloads.

If you’re interested in a more formal or academic treatment of the subject of capacity planning, refer to “Readings”.

Predicting When Systems Will Fail

Knowing when each piece of the infrastructure will fail (gracefully or not) is crucial to capacity planning. Failure in the current context can correspond to either violation of Service-Level Agreements (SLAs) (discussed further in Chapter 2), graceful degradation of performance, or a “true” system failure (determining the limits corresponding to the latter is discussed in detail in Chapter 3). Capacity planning for the web or a mobile app, more often than we would like to admit, looks like the approach shown in Figure 1-4.

Figure 1-4. Finding failure points

Including the information about the point of failure as part of the calculations is mandatory, not optional. However, determining the limits of each portion of a site’s backend can be tricky. An easily segmented architecture helps you to find the limits of the current hardware configurations. You then can use those capacity ceilings as a basis for predicting future growth.

For example, let’s assume that you have a database server that responds to queries from the frontend web servers. Planning for capacity means knowing the answers to questions such as these:

- Taking into account the specific hardware configuration, how many queries per second (QPS) can the database server manage?

- How many QPS can it serve before performance degradation affects end-user experience?

Adjusting for periodic spikes and subtracting some comfortable percentage of headroom (or safety factor, which we talk about later) will render a single number with which you can characterize that database configuration vis-à-vis the specific role. On finding that “red line” metric, you will know the following:

- The load that will cause the database to be unresponsive or, in the worst case, fail-over, which will allow you to set alert thresholds accordingly.

- What to expect from adding (or removing) similar database servers to the backend.

- When to begin sizing another order of new database capacity.
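The “red line” arithmetic described above can be sketched in a few lines. The function names, the 25 percent headroom, and the QPS figures are hypothetical; the sketch also assumes that load spreads evenly across identical servers and that capacity adds up linearly, which is an idealization.

```python
import math


def red_line_qps(measured_ceiling_qps, headroom_fraction):
    """Usable QPS per server after reserving a safety margin."""
    if not 0 <= headroom_fraction < 1:
        raise ValueError("headroom_fraction must be in [0, 1)")
    return measured_ceiling_qps * (1 - headroom_fraction)


def servers_needed(forecast_peak_qps, measured_ceiling_qps, headroom_fraction):
    """Identical servers required to keep the forecast peak under the red line.

    Assumes load spreads evenly and capacity adds up linearly, which real
    architectures often violate.
    """
    per_server = red_line_qps(measured_ceiling_qps, headroom_fraction)
    return math.ceil(forecast_peak_qps / per_server)


if __name__ == "__main__":
    # Hypothetical database server: degrades at 2,000 QPS, 25 percent headroom.
    print(red_line_qps(2000, 0.25))          # alerting threshold per server
    print(servers_needed(4501, 2000, 0.25))  # servers for a 4,501 QPS peak
```

The per-server red line doubles as an alert threshold, and the server count gives a first estimate of when the next order needs to be sized.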

We talk more about these last points in the coming chapters. One thing to note is the entire capacity planning process is going to be architecture-specific. This means that the calculations that you make to predict increasing capacity might have other constraints specific to a particular application. For example, most (if not all) large-scale internet services have an SOA or microservice architecture (MSA), each microservice being containerized. In an SOA setting, capacity planning for an individual service is influenced by other upstream and downstream services. Likewise, the use of virtual machine (VM) versus container instances or the adoption of a partial serverless system architecture has direct implications on capacity planning.

For example, to spread out the load, a LAMP application (LAMP standing for Linux, Apache, MySQL, PHP5) might utilize a MySQL server as a master database in which all live data is written and maintained. We might use a second, replicated slave database for read-only database operations. Adding more slave databases to scale the read-only traffic is generally an appropriate technique, but many large websites have been forthright about their experiences with this approach and the limits they’ve encountered. There is a limit to how many read-only slave databases you can add before you begin to realize diminishing returns as the rate and volume of changes to data on the master database might be more than the replicated slaves can sustain, no matter how many are added. This is just one example of how an architecture can have a large effect on your ability to add capacity.
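A deliberately simplified model shows why read replicas stop helping. It assumes every replica must apply the full write stream coming from the master, so only the remainder of each replica's capacity is left for reads. The function name and the throughput figures are hypothetical, and real replication behavior depends on many factors this sketch ignores.

```python
def total_read_capacity(replicas, per_server_ops, write_ops):
    """Read operations per second a set of replicas can serve.

    Simplified model: every replica must apply the full write stream from the
    master, so only the remainder of its capacity is left for reads.
    """
    spare_per_replica = max(0, per_server_ops - write_ops)
    return replicas * spare_per_replica


if __name__ == "__main__":
    # Hypothetical figures: each server sustains 10,000 operations/s.
    for write_ops in (2000, 6000, 9500, 10000):
        reads = [total_read_capacity(n, 10000, write_ops) for n in (1, 2, 4, 8)]
        print(write_ops, reads)
```

In this model, as the write rate approaches what a single server can sustain, each added replica contributes less read capacity, and at the limit it contributes none.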

Expanding database-driven web applications might take different paths in their evolution toward scalable maturity. Some might choose to federate data across many master databases. They might split the database into their own clusters, or choose to cache data in a variety of methods to reduce load on their database layer. Yet others might take a hybrid approach, using all of these methods of scaling. This book is not intended to be an advice column on database scaling; it’s meant to serve as a guide by which you can come up with your own planning and measurement process—one that is suitable for your environment.

Make System Stats Tell Stories

Server statistics paint only part of the picture of a system’s health. Unless you can tie them to actual site or mobile app metrics, server statistics don’t mean very much in terms of characterizing usage. And this is something you would need to know in order to track how capacity will change over time.

For example, knowing that web servers are processing X requests per second is handy, but it’s also good to know what those X requests per second actually mean in terms of number of users. Maybe X requests per second represents Y number of users employing the site simultaneously.

It would be even better to know that of those Y simultaneous users, A percent are live streaming an event, B percent are uploading photos/videos, C percent are making comments on a heated forum topic, and D percent are poking randomly around the site/mobile app while waiting for the pizza guy to arrive. Measuring those user metrics over time is a first step. Comparing and graphing the web server hits-per-second against those user interaction metrics will ultimately yield some of the cost of providing service to the users. In the preceding examples, the ability to generate a comment within the application might consume more resources than simply browsing the site, but it consumes less when compared to uploading a photo. Having some idea of which features tax the capacity more than others gives you the context in which to decide where you would want to focus priority attention in the capacity planning process. These observations can also help drive any technology procurement justifications. A classic example of this is the Retweet feature in Twitter. A retweet by a celebrity such as Lady Gaga strains the service severely owing to the large fan out (which corresponds to a large number of followers—as of January 21, 2017, Lady Gaga had 64,990,626 followers!) and the virality effect.
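One way to turn such a breakdown into something actionable is to weight each activity by a relative resource cost. The sketch below does that; the activity mix and the cost weights are hypothetical placeholders that you would replace with measured values from your own site or app.

```python
def capacity_share_by_feature(activity_mix, relative_cost):
    """Fraction of total resource consumption attributable to each feature.

    activity_mix: feature -> fraction of simultaneous users doing it.
    relative_cost: feature -> resource cost per user relative to browsing.
    """
    weighted = {f: activity_mix[f] * relative_cost[f] for f in activity_mix}
    total = sum(weighted.values())
    return {f: w / total for f, w in weighted.items()}


if __name__ == "__main__":
    # Hypothetical mix of simultaneous users and made-up cost weights.
    mix = {"streaming": 0.10, "uploading": 0.15,
           "commenting": 0.25, "browsing": 0.50}
    cost = {"streaming": 20, "uploading": 10, "commenting": 2, "browsing": 1}
    for feature, share in capacity_share_by_feature(mix, cost).items():
        print(feature, round(share, 3))
```

With these made-up weights, a small share of users accounts for most of the consumption, which is the kind of context that helps decide where to focus first.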

Spikes in traffic are not limited to social media. Traffic spikes are routinely observed by ecommerce platforms such as Amazon, eBay, Etsy, and Shopify during the holiday season, during the broadcasting of ads at events such as the Super Bowl, and at the launch of, say, a much-awaited music album. From a business perspective, it is critical to survive these bursts in traffic. At the same time, it is not financially sound to keep the capacity required to handle the traffic bursts always running. To this end, queuing and caching-based approaches have been employed to handle the traffic burst, thereby alleviating the capex overhead.
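To make the queuing idea concrete, here is a minimal sketch of a backend with a fixed processing rate that queues excess requests instead of dropping them. The traffic pattern and the service rate are hypothetical, and the model ignores timeouts, retries, and queue size limits.

```python
def simulate_queue(arrivals_per_second, service_rate):
    """Track the backlog when arrivals are queued instead of dropped.

    arrivals_per_second: list of request counts, one entry per second.
    service_rate: requests the backend can process per second.
    Returns (peak backlog, seconds needed to drain the remaining backlog,
    assuming no further arrivals).
    """
    backlog = 0
    peak_backlog = 0
    for arrivals in arrivals_per_second:
        backlog = max(0, backlog + arrivals - service_rate)
        peak_backlog = max(peak_backlog, backlog)
    # Ceiling division: seconds to work off what is still queued.
    drain_seconds = -(-backlog // service_rate)
    return peak_backlog, drain_seconds


if __name__ == "__main__":
    # Hypothetical burst: 3 seconds at 300 requests/s against a backend that
    # processes 100 requests/s, surrounded by quiet periods at 50 requests/s.
    traffic = [50] * 5 + [300] * 3 + [50] * 5
    print(simulate_queue(traffic, 100))
```

The peak backlog indicates how much queue depth the burst requires, and the drain time indicates how long users wait for the system to catch up.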

NOTE

For a reference to a talk about flash sales engineering at Shopify, refer to “Resources”.

Spikes in capacity usage during such cases should serve as a forcing function to revisit the design and architecture of a service. This is well exemplified by the famous tweet from Ellen DeGeneres during the 2014 Oscars (see Figure 1-5), which garnered a large number of views and, more important, a large number of retweets in a short timespan. The outage that followed Ellen’s Oscar tweet was due to a combination of a missing architecture component in one of the data stores as well as the search tier. Proper load testing of the scenario, in which more than a million people search for the same tweet and then retweet it, would have avoided the outage.

Quite often, the person approving expensive hardware and software requests is not the same person making the requests. Finance and business leaders must sometimes trust implicitly that their engineers are providing accurate information when they request capital for resources. Tying system statistics to business metrics helps bring the technology closer to the business units, and can help engineers understand what the growth means in terms of business success. Marrying these two metrics together can therefore help spread the awareness that technology costs shouldn’t automatically be considered a cost center, but rather a significant driver of revenue. It also means that future capital expenditure costs have some real context so that even those nontechnical folks will understand the value technology investment brings.

Figure 1-5. A tweet whose retweeting induced an outage

For example, when you’re presenting a proposal for an order of new database hardware, you should have the systems and application metrics on hand to justify the investment. If you had the pertinent supporting data, you could say something along the following lines:

...And if we get these new database servers, we’ll be able to serve our pages X percent faster, which means our pageviews—and corresponding ad revenues—have an opportunity to increase up to Y percent.

The following illustrates another way to make a business case for a capex request:

The A/B test results of product P demonstrate an X% uptick in click-through rate, which we expect to translate to an increase in Y dollars on a quarterly basis or an approximate Z% increase in revenue after worldwide launch. This justifies the expected capex of C dollars needed for the launch of P worldwide.

The cost of having extra capacity headroom should be justified by correlating it with avoidance of risk associated with not having the capacity ready to go if and when there is a spike in traffic. In the event of a pushback from the finance folks, you should highlight the severity of adverse impact on the user experience due to the lack of investment and the consequent impact on the bottom line of the business. Thus, capacity planning entails managing risk to the business.

Backing up the justifications in this way also can help the business development people understand what success means in terms of capacity management.

Be it capacity or any other context under the operations umbrella, it is not uncommon to observe localized efforts toward planning and optimization, which limits their impact on the overall business. In this regard, in their book titled The Goal: A Process of Ongoing Improvement (North River Press), Goldratt and Cox present a very compelling walkthrough, using a manufacturing plant as an example, of how to employ a business-centric approach toward developing a process of ongoing improvement. (Refer to “Readings” for other suggested references.) Following are the key pillars of business-centric capacity planning:

- What is the goal for capacity planning?
  - Deliver the best end-user experience because it is one of the key drivers for both user acquisition and user retention.
  - Meet well-defined performance, availability, and reliability targets (SLAs are discussed in Chapter 2).
  - Support organic growth and launch of new features or products.
  - Note that the goal in and of itself should not be to reduce the operational footprint or improve resource utilization (discussed further in Chapter 2 and Chapter 3). Datacenter or cloud efficiency is unquestionably important because it directly affects operating expense (opex); having said that, efforts geared toward optimizing opex should not adversely affect the aforementioned goal.

- Understanding the following concepts:
  - Dependent events
    - Chart out the interactions between the different components (for instance, services or microservices) of the underlying architecture.
    - Determine the key bottleneck(s) with respect to the high-level goal. Bottlenecks can arise for a wide variety of reasons, such as the presence of a critical section (for references to prior research work on the topic, refer to “Readings”). In a similar vein, bottleneck(s) can assume many different forms such as low throughput at high resource utilization,2 lack of fault tolerance, and small capacity headroom. When finding the bottlenecks, you should focus on the root cause, not the effects. It is important to keep in mind that the high-level goal is always subject to the bottleneck(s) only.

      Note that bottlenecks are not limited to one or more services or microservices. Suppliers in a supply chain also can be a bottleneck toward achieving the high-level goal. Thus, procurement and deployment are integral steps in the capacity planning process.
    - In practice, characteristics of an application evolve on an ongoing basis owing to an Agile development environment coupled with dynamic incoming user traffic. For instance, the dependencies between different microservices can change over time. This potentially can give rise to new bottleneck(s), and existing bottleneck(s) might be masked. This in turn would directly affect the capacity planning process. Exposing this to finance and business leaders is critical to having them appreciate the need for revising the capacity plan that was presented the last time.
    - Note that the higher the dependencies between the different components, the higher the complexity of the system and, consequently, the higher the probability of potential capacity issues cascading from one component to another.
  - Statistical fluctuations
    - How do endogenous and/or exogenous factors (such as a new release or loss of a server in the case of the former, or events such as the Olympics or Super Bowl in the case of the latter) affect the high-level goal and the capacity headroom of each component? For instance, typically, the capacity headroom of bottlenecks is highly susceptible to incoming traffic.
    - To what extent can the increase in input traffic be absorbed (for instance, via queuing) by each component? Further, given that not all the incoming traffic is of equal “importance” (for example, searching for a celebrity versus the list of survivors during an earthquake), it is important to support priority scheduling for the queues associated with the bottleneck(s).
    - How do you address the trade-off between capacity headroom and queuing for each component (for example, by setting up graceful degradation) without affecting the high-level goal? Note that consuming the capacity headroom helps to drain the queue; however, it risks not being able to serve high-volume, high-priority traffic going forward. In contrast, maintaining a healthy capacity headroom at the expense of queuing can potentially result in violation of SLAs.

- Being agile in the presence of conflicting requests and changing priorities
  - For instance, in the context of web search, improving freshness and ranking exerts pressure on the crawler and ranking components, respectively, of a web search engine. Given a fixed capex budget, the requirements of the aforementioned components for additional capacity might conflict with one another. This conflict, in and of itself, can potentially be de-prioritized in the wake of capacity required to, for instance, support the launch of a new product.
  - It’s not uncommon to have policies set up to address the aforementioned cases. However, more often than not, such policies do not serve their purpose. This can be attributed, in part, to the fact that a) policies are, by definition, not malleable to the constantly changing ground realities in production, and b) a bureaucratic framework inevitably grows around such policies, which in turn builds inertia and slows down the decision-making process.

- Working in close collaboration with product and engineering teams
  - This is critical so as to be able to assess the capacity requirements going forward. Given the long lead times in procurement, it is of utmost importance to be predictive, not reactive, about one’s capacity requirements going forward. Even in the context of cloud computing, wherein you can spin up new instances in a matter of a few minutes, it is important, for both startups and public companies, to keep tabs on one’s expected opex going forward. Further, an understanding of the product and engineering roadmaps can assist in hardware selection (we discuss this further in Chapter 3).
  - Given that in most cases the milestones on the roadmaps of different product and engineering teams are not aligned and that the capacity requirements differ from one team to another, procurement of different elements of the infrastructure should be pipelined in a capacity plan. In addition, you can go a step further by pipelining the procurement of different pieces of hardware (e.g., drives, memory, network switches, etc.).
  - A poor capacity plan can result in, for example, missing a deadline or a lost opportunity with respect to user acquisition or retention, which in turn has a direct impact on the bottom line of the business. As pointed out by Goldratt and Cox, “optimal” decision-making is a synchronized effort of people across (possibly) multiple teams, not driven by policies.
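The point made under dependent events, that the high-level goal is subject only to the bottleneck(s), can be illustrated with a small sketch. It assumes you know, for each service on the request path, its capacity and how many calls a single user request makes to it. The service names and figures are hypothetical, and the model ignores caching effects, retries, and traffic that bypasses some services.

```python
def max_user_request_rate(services):
    """Find the sustainable user request rate and the service that limits it.

    services: name -> (capacity in calls per second,
                       calls made to it per user request).
    """
    limits = {
        name: capacity / calls_per_request
        for name, (capacity, calls_per_request) in services.items()
        if calls_per_request > 0
    }
    bottleneck = min(limits, key=limits.get)
    return limits[bottleneck], bottleneck


if __name__ == "__main__":
    # Hypothetical request path: capacity and fan-out per user request.
    path = {
        "web": (5000, 1),
        "cache": (40000, 10),
        "database": (3000, 2),
        "search": (800, 0.25),
    }
    print(max_user_request_rate(path))
```

Adding capacity anywhere other than the limiting service leaves the sustainable rate unchanged, and a change in fan-out between services can move the bottleneck without any change in hardware.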

As businesses evolve in an increasingly connected world, the complexities of applications will grow as well. Coupled with an increasing rate of innovation, this means that being able to plan ahead well is going to be (as it is even now) a key contributor to the success and growth of a business. To this end, as argued by Goldratt and Cox, thinking processes that trigger new ideas to solve problems associated with emerging applications need to be developed. Broadly speaking, the thinking processes cater to the following three fundamental questions:

- What to change?

- What to change to?

- How to cause the change?

These questions provide a general framework to address an unforeseen problem. Having said that, as mentioned earlier, you should always relate the problem at hand with the high-level business goal. This ensures that you’re not mired in a local optimum and that every action or decision is geared toward achieving the goal.

Buying Stuff

After you have completed all the measurements, made estimations about usage, and sketched out predictions for future requirements, you need to actually buy things: bandwidth, storage or storage appliances, servers, maybe even instances of virtual servers or containers in the cloud. In each case, you would need to explain to the people with the checkbooks why your enterprise needs these things and when. (We talk more about predicting the future and presenting those findings in Chapter 4.)

In the cloud context, you can add capacity—be it compute and memory, network bandwidth, or storage—with the push of a button. You can use multicloud Continuous Delivery (CD) platforms such as Spinnaker for this. Having said that, proper due diligence is called for so as to avoid under-provisioning or over-provisioning. At Netflix, which is based entirely on AWS, engineers provision on-demand, putting in place effective cost reporting mechanisms at the team level to provide feedback on capacity growth. In general, this can potentially land you in—as put forward by Avi Freedman—a cloud jail, wherein you find yourself spending far too much money on infrastructure and are completely beholden to a cloud provider. The latter stems from the fact that you’re using the specific services and environments of the cloud provider. It’s not easy and is very expensive to switch after this happens.

Procurement is a process, and it should be treated as yet another part of capacity planning. Whether it’s a call to a hosting provider to bring new capacity online, a request for quotes from a vendor, or a trip to a local computer store, you need to take this important segment of time into account. Smaller companies, although usually a lot less “liquid” than their larger brethren, can really shine in this arena. Being small often goes hand-in-hand with being nimble. So, even though you might not be offered the same price on equipment as the big companies who buy in massive bulk, you would likely be able to get it faster, owing to a less cumbersome approval process.

The person you probably need to persuade is the CFO, who sits across the hall. In the early days of Flickr, we used to be able to get quotes from a vendor and simply walk over to the founder of the company (seated 20 feet away), who could cut and send a check. The servers would arrive in about a week, and we would rack them in the datacenter the day they came out of the box. Easy!

Amazon, Yahoo!, Twitter, Google, Microsoft, and other big companies with their own datacenters have a more involved cycle of vetting hardware requests that includes obtaining many levels of approval and coordinating delivery to various datacenters around the world. After purchases have been made, the local site operations teams in each datacenter then must assemble, rack, cable, and install operating systems on each of the boxes. This all takes more time than for a startup. Of course, the flip side is that at a large company, you can take advantage of buying power. By buying in bulk, organizations can afford more hardware, at a better price. In either case, the concern is the same: the procurement process should be baked into a larger planning exercise. It takes time and effort, just like all the other steps. (We discuss this more in Chapter 4.)
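Baking procurement into the plan can be as simple as working backward from the date on which the capacity is needed. The sketch below does exactly that; the function name, the target date, and the approval, delivery, and installation durations are all hypothetical (only the roughly one-week delivery for a small company echoes the anecdote above).

```python
from datetime import date, timedelta


def order_by_date(needed_on, lead_time_days, approval_days=0, install_days=0):
    """Latest date to start a purchase so capacity is live by needed_on."""
    total_days = lead_time_days + approval_days + install_days
    return needed_on - timedelta(days=total_days)


if __name__ == "__main__":
    needed = date(2024, 6, 1)  # hypothetical date when capacity must be live
    # Small company: delivery in about a week, racked the day it arrives.
    print(order_by_date(needed, lead_time_days=7))
    # Large company: hypothetical approval, delivery, and installation times.
    print(order_by_date(needed, lead_time_days=42, approval_days=21,
                        install_days=14))
```

Comparing the order-by date with the forecast date on which capacity runs out shows how much slack, if any, the plan actually has.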

Performance and Capacity: Two Different Animals

The relationship between performance tuning and capacity planning is often misunderstood. Although they affect each other, they have different goals. Performance tuning optimizes the existing system for better performance. Capacity planning determines what a system needs and when it needs it, using the current performance as a baseline.

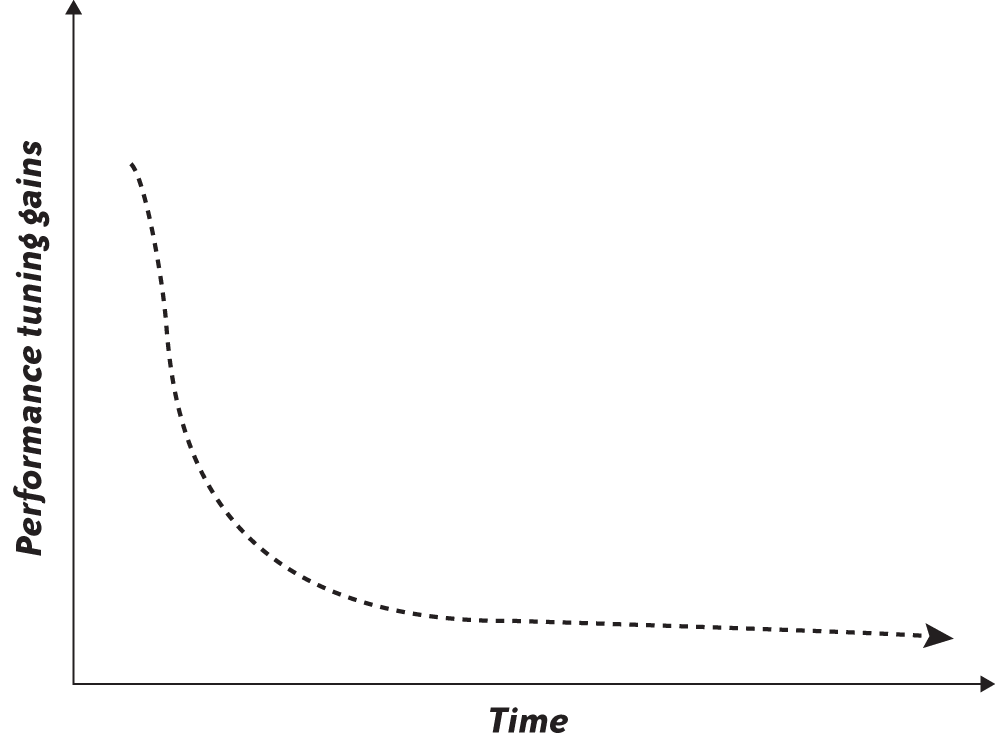

Let’s face it: tuning is fun and addictive. But after you spend some time tweaking values, testing, and tweaking some more, it can become an endless hole, sucking away time and energy for little or no gain. There are those rare and beautiful times when you stumble upon some obvious and simple parameter that can make everything faster: you come across the one MySQL configuration parameter that doubles the cache size, or realize after some testing that those TCP window sizes set in the kernel can really make a difference. Great! But, as illustrated in Figure 1-6, for each of those rare gems we discover, the number of obvious optimizations we find thereafter dwindles pretty rapidly. After claiming the low-hanging fruit, the marginal benefit of employing sophisticated techniques (compiler-driven code optimizations, profile-guided optimization, dynamic optimization, and so on) does not often merit the required investment of time. In such scenarios, high-level optimizations, which often entail system or algorithmic redesign, are warranted to squeeze out further performance gain, if any!

Figure 1-6. Decreasing returns from performance tuning

Capacity planning must happen without regard to what you might optimize. The first real step in the process is to accept the system’s current performance in order to estimate what you might need in the future. If at some point down the road you discover some tweak that frees up more resources, that’s a bonus.

Here’s a quick example of the difference between performance and capacity. Suppose that there is a butcher in San Francisco who prepares the most delectable bacon in the state of California. Let’s assume that the butcher shop has an arrangement with a store in San Jose to sell their great bacon there. Every day, the butcher needs to transport the bacon from San Francisco to San Jose using some number of trucks—and the bacon must get there within an hour. The butcher needs to determine what type of trucks he’ll need and how many of them to get the bacon to San Jose. The demand for the bacon in San Jose is increasing with time. It’s difficult having the best bacon in the state, but it’s a good problem to have.

The butcher has three trucks that suffice for the moment. But he knows that he might be doubling the amount of bacon he’ll need to transport over the next couple of months. At this point, he needs to do one of two things:

- Make the trucks go faster
- Get more trucks

You’re probably beginning to see the point here. Even though the butcher might squeeze some extra horsepower out of the trucks by having them tuned up—or by convincing the drivers to exceed the speed limit—he’s not going to achieve the same efficiency gain that would come from simply purchasing more trucks. He has no choice but to accept the performance of each truck and then work from there.
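
The butcher’s arithmetic can be sketched in a few lines of Python. The load figures below are hypothetical (the story only says that three trucks suffice today and that demand might double), and the 10 percent tune-up gain is likewise an assumption chosen for illustration:

```python
import math


def trucks_needed(daily_demand_kg: float, truck_capacity_kg: float) -> int:
    """Smallest whole number of trucks that can carry the daily demand."""
    if truck_capacity_kg <= 0:
        raise ValueError("truck capacity must be positive")
    return math.ceil(daily_demand_kg / truck_capacity_kg)


# Hypothetical figures: each truck carries 1,000 kg per run, and today's
# demand of 3,000 kg exactly fills the three trucks from the story.
TRUCK_CAPACITY_KG = 1000
current_demand_kg = 3000
doubled_demand_kg = 2 * current_demand_kg

current_fleet = trucks_needed(current_demand_kg, TRUCK_CAPACITY_KG)
future_fleet = trucks_needed(doubled_demand_kg, TRUCK_CAPACITY_KG)

# Assumption: a tune-up lets each truck move 10 percent more per run.
tuned_fleet = trucks_needed(doubled_demand_kg, TRUCK_CAPACITY_KG * 1.10)

print(f"trucks today: {current_fleet}")
print(f"trucks after demand doubles: {future_fleet}")
print(f"trucks after demand doubles, with tuned trucks: {tuned_fleet}")
```

Under these assumed numbers, the tuned fleet is the same size as the untuned one: a modest per-truck gain does not change how many trucks have to be bought.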

The moral of this little story? When faced with the question of capacity, try to ignore those urges to make existing gear faster; instead, focus on the topic at hand, which is finding out what your enterprise needs, and when.

One other note about performance tuning and capacity: there is no silver-bullet formula that we can pass on to you as to when tuning is appropriate and when it’s not. It might be that simply buying more hardware or spinning up new instances in the cloud is the correct thing to do when weighed against the engineering time spent on tuning the existing system. Examples include a sudden spike in the number of transactions on an ecommerce website or app owing to a sale, or a spike in the number of searches on Google after an incident such as an outbreak of a disease. Almost always, events that can adversely affect the end-user experience (which in turn affects the bottom line) warrant scaling up capacity immediately and forgoing performance optimization. On the other hand, performance tuning is of high importance for startups because they need to minimize their operating expenses (opex), which in part helps to keep their burn rate in check. Striking this balance between optimization and capacity deployment is a challenge and will differ from environment to environment.

The Effects of Social Websites and Open APIs

As more and more websites adopt Web 2.0 characteristics, web operations are becoming increasingly important, especially capacity management. If a site contains content generated by its users, utilization and growth aren’t completely under the control of the site’s creators; a large portion of that control is in the hands of the user community. In a similar vein, capacity requirements are also governed by the use of third-party vendors for key services such as, but not limited to, managed DNS, content delivery, content acceleration, ad serving, analytics, behavioral targeting, content optimization, and widgets. Further, in the age of social media, incoming traffic is also subject to virality effects. This can be scary for people accustomed to building sites with very predictable growth patterns, because it means capacity is difficult to predict and needs to be on the radar of everyone invested, both the business and the technology staff. The challenge for development and operations staff of a social website is to stay ahead of the growing usage by collecting enough data from that upward spiral to drive informed planning for the future.

Providing web services via open APIs (e.g., APIs exposed by ad exchanges, Twitter’s API, Facebook’s Atlas/Graph/Marketing APIs) introduces another ball of wax altogether, given that an application’s data will be accessed by yet more applications, each with its own usage and growth patterns. It also means users have a convenient way to abuse the system, which puts more uncertainty into the capacity equation. API usage needs to be monitored to watch for emerging patterns, usage edge cases, and rogue application developers bent on crawling the entire database tree. A classic example of the latter is the attempts to scrape member connections from LinkedIn’s website. Controls need to be in place to enforce the guidelines or Terms of Service (TOS), which should accompany any open API web service (more about that in Chapter 3).
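
As a rough illustration of what such monitoring might look like, the following Python sketch counts calls per API key over a sliding time window and flags keys that exceed a limit. The class name `ApiUsageMonitor`, its methods, and the limit values are all hypothetical and are not taken from any particular API gateway; a production system would more likely rely on the rate-limiting features of its load balancer or gateway.

```python
from collections import defaultdict, deque


class ApiUsageMonitor:
    """Hypothetical per-key call counter over a sliding time window."""

    def __init__(self, max_requests: int, window_seconds: float):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._calls = defaultdict(deque)  # api_key -> recent call timestamps

    def record(self, api_key: str, timestamp: float) -> bool:
        """Record one call; return True if the key is still within its limit."""
        calls = self._calls[api_key]
        calls.append(timestamp)
        # Drop calls that have aged out of the window.
        while calls and calls[0] <= timestamp - self.window_seconds:
            calls.popleft()
        return len(calls) <= self.max_requests

    def heavy_users(self) -> list:
        """Keys over the limit as of the last call each of them made."""
        return sorted(
            key for key, calls in self._calls.items()
            if len(calls) > self.max_requests
        )


# Assumed limit for the demo: 5 calls per 60 seconds per key.
monitor = ApiUsageMonitor(max_requests=5, window_seconds=60)
monitor.record("well-behaved-app", 0.0)
for second in range(10):  # ten calls in ten seconds from one key
    monitor.record("crawler", float(second))

print(monitor.heavy_users())
```

Keeping only the timestamps inside the window bounds the memory used per key, and the same per-key counts can be exported as a metric to watch for emerging usage patterns.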

In John’s first year working at Flickr, photo uploads grew from 60 per minute to 660. Flickr expanded from consuming 200 GB of disk space per day to 880 GB, and then ballooned from serving 3,000 images a second to 8,000. And that was just in the first year. Today, internet powerhouses have dozens of datacenters around the globe. In fact, as per Gartner, in 2016, the worldwide spending on datacenter systems is expected to top $175 billion and the overall IT spending is expected to be on the order of $3.4 trillion.
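
To put the Flickr figures in perspective, the short Python sketch below converts each start and end value into a growth multiple and an equivalent month-over-month rate. The monthly rate assumes smooth compounding over 12 months, which is a simplification made here for illustration; the text gives only the start and end values, and real growth is rarely that even.

```python
# First-year Flickr figures quoted in the text: (start, end) for each metric.
first_year = {
    "photo uploads per minute": (60, 660),
    "disk consumed per day (GB)": (200, 880),
    "images served per second": (3000, 8000),
}


def growth_multiple(start: float, end: float) -> float:
    """How many times larger the end value is than the start value."""
    return end / start


def monthly_rate(start: float, end: float, months: int = 12) -> float:
    """Constant month-over-month rate that would turn start into end.

    Assumes smooth compounding, which is a simplification.
    """
    return (end / start) ** (1 / months) - 1


for name, (start, end) in first_year.items():
    multiple = growth_multiple(start, end)
    rate = monthly_rate(start, end)
    print(f"{name}: {multiple:.1f}x over the year, {rate:.1%} per month")
```

The multiples work out to 11x for uploads, 4.4x for daily disk consumption, and roughly 2.7x for images served, which shows that different resources in the same system can grow at very different rates.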

Capacity planning can become very important, very quickly. But it’s not all that difficult; all you need to do is pay a little attention to the right factors. The rest of the chapters in this book will show you how to do this. We’ll split up this process into segments:

- Determining the goals (Chapter 2)
- Collecting metrics, finding the limits, and hardware selection (Chapter 3)
- Plotting out the trends and making robust (i.e., not susceptible to anomalies) forecasts based on those metrics and limits (Chapter 4)
- Deploying and managing the capacity (Chapter 5)
- Managing capacity in the cloud via autoscaling (Chapter 6)

Readings

- D. A. Menascé et al. Performance by Design: Computer Capacity Planning by Example.
- N. J. Gunther. Guerrilla Capacity Planning.
- R. Cammarota et al. (2014). Pruning Hardware Evaluation Space via Correlation-driven Application Similarity Analysis.
- K. Matthias and S. P. Kane. Docker: Up & Running: Shipping Reliable Containers in Production.
- A. Mouat. Using Docker: Developing and Deploying Software with Containers.
- K. Hightower. Kubernetes: Up and Running.
- E. M. Goldratt and J. Cox. The Goal: A Process of Ongoing Improvement.
- G. Kim et al. The Phoenix Project: A Novel About IT, DevOps, and Helping Your Business Win.

Critical Section

- E. W. Dijkstra. (1965). Solution of a problem in concurrent programming control.
- L. Lamport. (1974). A new solution of Dijkstra’s concurrent programming problem.
- G. L. Peterson and M. J. Fischer. (1977). Economical solutions for the critical section problem in a distributed system (Extended Abstract).
- M. Blasgen et al. (1977). The Convoy Phenomenon.
- H. P. Katseff. (1978). A new solution to the critical section problem.
- L. Lamport. (1986). The Mutual Exclusion Problem: Part I – A Theory of Interprocess Communication.
- L. Lamport. (1986). The Mutual Exclusion Problem: Part II – Statement and Solutions.

Resources

- “Cloud Environments Will Drive IT Infrastructure Spending Growth Across All Regional Markets in 2016, According to IDC.” (2016) http://bit.ly/idc-cloud-env.
- “Cisco Visual Networking Index: Forecast and Methodology, 2016–2021.” (2017) http://bit.ly/cisco-vis-net.
- M. Costigan. (2016). Risk-based Capacity Planning.
- “Risk-Based Capacity Planning.” (2016) http://ubm.io/2h85HKL.
- “You Now Have a Shorter Attention Span Than a Goldfish.” (2017) http://ti.me/2wnVOQv.
- L. Ridley. (2014). People swap devices 21 times an hour, says OMD.
- B. Koley. (2014). Software Defined Networking at Scale.
- “Scaling to exabytes and beyond.” (2016) https://blogs.dropbox.com/tech/2016/03/magic-pocket-infrastructure/.
- “Mitigating Risks through Capacity Planning to achieve Competitive Advantage.” (2013) http://india.cgnglobal.com/node/47.
- “The Epic Story of Dropbox’s Exodus from the Amazon Cloud Empire.” (2016) https://www.wired.com/2016/03/epic-story-dropboxs-exodus-amazon-cloud-empire/.
- “Speed Matters for Google Web Search.” (2009) http://services.google.com/fh/files/blogs/google_delayexp.pdf.
- “Cedexis Announces Impact, Connects Website Performance To Online Business Results.” (2015) http://www.cedexis.com/blog/cedexis-announces-impact-connects-website-performance-to-online-business-results/.
- “How Loading Time Affects Your Bottom Line.” (2011) https://blog.kissmetrics.com/loading-time/.
- “Speed Is A Killer – Why Decreasing Page Load Time Can Drastically Increase Conversions.” (2011) https://blog.kissmetrics.com/speed-is-a-killer/.
- “Why Web Performance Matters: Is Your Site Driving Customers Away?” (2010) http://bit.ly/why-web-perf.
- “Why You Need a Seriously Fast Website.” (2013) http://www.copyblogger.com/website-speed-matters/.
- “Monitor and Improve Web Performance Using RUM Data Visualization.” (2014) http://bit.ly/mon-improve-web-perf.
- “The Importance of Website Loading Speed & Top 3 Factors That Limit Website Speed.” (2014) http://bit.ly/importance-load-speed.
- “Seven Rules of Thumb for Web Site Experimenters.” (2014) http://stanford.io/2wsXzKJ.
- “SEO 101: How Important is Site Speed in 2014?” (2014) http://www.searchenginejournal.com/seo-101-important-site-speed-2014/111924/.
- “User Preference and Search Engine Latency.” http://bit.ly/user-pref-search.
- “Flash Sale Engineering.” (2016) https://www.usenix.org/conference/srecon16europe/program/presentation/stolarsky.
- “How micro services are breaking down the enterprise monoliths.” (2016) http://www.appstechnews.com/news/2016/nov/16/micro-services-breaking-down-monolith/.
- “Breaking Down a Monolithic Software: A Case for Microservices vs. Self-Contained Systems.” (2016) http://bit.ly/breaking-down-monolith.
- “Breaking a Monolithic API into Microservices at Uber.” (2016) https://www.infoq.com/news/2016/07/uber-microservices.
- “What’s Your Headroom?” (2016) http://akamai.me/2x5a3eR.

1 “Making data analytics work for you—instead of the other way around” (2016) http://bit.ly/making-analytics-work

2 A potential root cause can be ascribed to, but not limited to, poor selection of algorithm/data structure(s) or poor implementation.
