Chapter 5. Cross-Device Interactions and Interusability

In systems where functionality and interactions are distributed across more than one device, it’s not enough to design individual UIs in isolation. Designers need to create a coherent UX across all the devices with which the user interacts. That means thinking about how UIs work together to create a coherent understanding of the overall system, and how the user may move between using different devices.

This chapter explores interusability—the user experience of interconnected devices and cross-platform interactions—and how to make a bunch of diverse devices feel like they are working in concert.

This chapter introduces:

Cross-platform UX and the need to design for systems, not just devices (see “Cross-Platform UX and Usability”)

The concept of interusability (see “What Is Interusability?”)

The role of conceptual models in understanding what a system does, and why these are especially complex in IoT (see “Conceptual Models and Composition”)

Composition: distributing functionality between devices (see “Composition”)

Consistency across multiple UIs (see “Consistency”)

Continuity of data and interactions across devices (see “Consider the most likely combinations of devices”)

Applying interusability thinking to broader contexts (see “Broader contexts of interusability”)

This chapter addresses the following issues:

What makes a cross-device system feel coherent (see “Cross-Platform UX and Usability”)

Why it’s complicated to understand how an IoT system works, and how we might help users with this (see “Multidevice services are conceptually more complex”)

Deciding how best to allocate roles and functions to different devices in the system (see “Determining the right composition”)

Why terminology and platform conventions are especially important forms of consistency (see “Guidelines for Consistency Across Multiple Devices”)

Dealing with data and content synchronization issues in the UI (see “Consider the most likely combinations of devices”)

Designing interactions that require switching between devices (see “Handling Cross-Device Interactions and Task Migration”)

Cross-Platform UX and Usability

Many of the tools of UX design and HCI originate from a time when an interaction was usually a single user using a single device. This was almost always a desktop computer, which they’d be using to complete a work-like task, giving it more or less their full attention.

The reality of our digital lives moved on from this long ago. Many of us own multiple Internet-capable devices such as smartphones, tablets, and connected TVs, used for leisure as well as work. They have different form factors, may be used in different contexts and some of them come with specific sensing capabilities, such as mobile location.

Cross-platform UX is an area of huge interest to the practitioner community. But academic researchers have given little attention to defining the properties of good cross-platform UX. This has left a gap between practice and theory that needs addressing.

In industry practice, cross-platform UX has often proceeded device by device. Designers begin with a key reference device and subsequent interfaces are treated as adaptations. In the early days of smartphones, this reference device was often the desktop. In recent years, the “mobile first” approach1 has encouraged us to start with mobile web or apps as a way to focus on optimizing key functionality and minimize “featuritis.” Such services usually have overarching design guidelines spanning all platforms to ensure a degree of consistency. The aim is usually to make the different interfaces feel like a family, rather than to consider how the devices work together as a system.

This works when each device is delivering broadly the same functionality. Evernote, eBay, and Dropbox (see Figure 5-1) are typical examples: each offers more or less the same features via a responsive website and smartphone apps. The design is optimized for each device, but provides the same basic service functionality (bar a few admin functions that may only be available on the desktop).

Figure 5-1. Evernote offers broadly the same service functionality across different device types (image: Evernote)

But this approach breaks down when the system involves very diverse devices with different capabilities working in concert. In IoT, many devices do not even have screens, or an on-device user interface. Multiple devices may have UIs with very different forms or specialized functionality (see Figure 5-2). Even if the UI is only on one device, the service still depends on all the devices working together in concert.

Figure 5-2. The SmartThings ecosystem contains a range of specialized devices that complement each other (image: SmartThings)

It’s not possible to design a system like this by thinking about one device at a time: this is likely to create a disjointed experience.

In order to use it effectively, the user has to form a coherent mental image of the overall system. This includes its various parts, what each does and how different objectives can be achieved using the system as a whole. Traditional single-device usability doesn’t tell us very much about how to do this.

What Is Interusability?

Charles Denis and Laurent Karsenty first coined the term “inter-usability” in 2004 to describe UX across multiple devices.2 Conventional usability theory is under-equipped to cope with cross-platform design. However, one 2010 paper by Minna Wäljas, Katarina Segerståhl, Kaisa Väänänen-Vainio-Mattila, and Harri Oinas-Kukkonen proposes a practical model of interusability.3

Wäljas et al. propose that the ultimate goal of cross-platform design is that the experience should feel coherent. Does the service feel like the devices are working in concert, or does the UX feel fragmented?

They define three key concepts for cross-platform service UX, which together ensure a coherent experience:

Composition (how devices and functionality are organized)

Appropriate consistency of interfaces across different devices

Continuity of content and data to ensure smooth transitions between platforms

The paper was published in 2010 and the services evaluated (including Nike+ and Nokia Sportstracker) now inevitably feel a little dated. But we have found the model still holds up well in our own work designing IoT services, and it’s a key reference for the rest of this chapter.

Conceptual Models and Composition

In this section, we’ll look at two related concepts that help us design systems spanning multiple devices.

Conceptual models refer to the way humans understand the overall system (and its interfaces) to work. Users need some understanding of how the system works in order to figure out how to interact with it. As we just saw, composition is a dimension of interusability. It refers to the way user-facing functionality is distributed between different devices: which device does what. The two concepts are related in cross-platform design: understanding which device does what is part of forming an effective conceptual model.

Conceptual Models

The user model and the design model

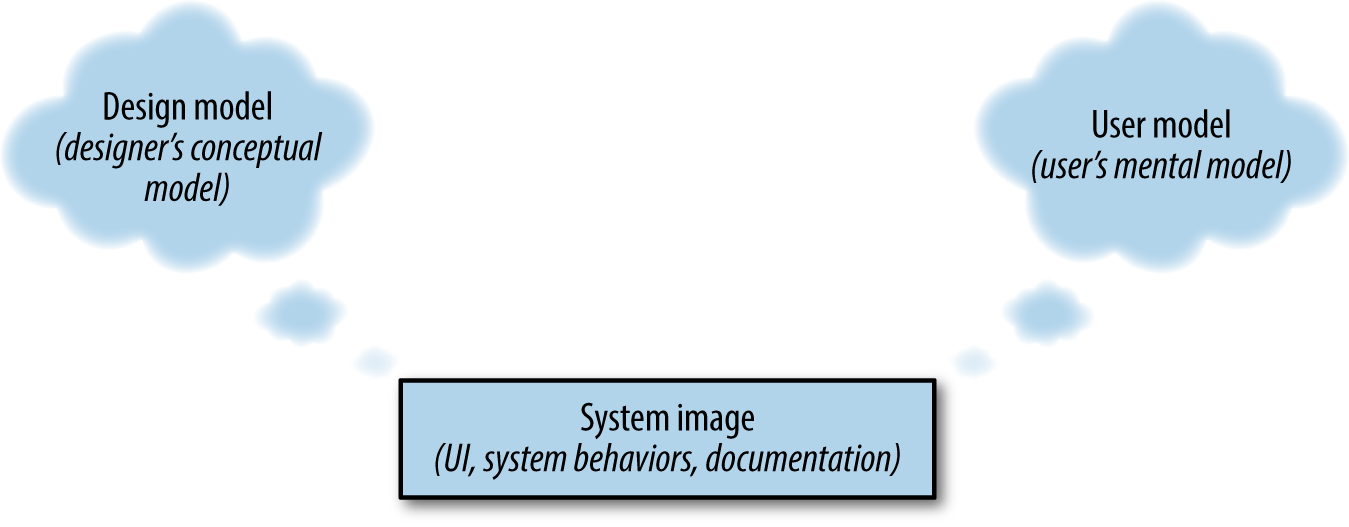

The conceptual model may refer to the way the user understands the system, or the way the designers (or engineers) think about the system. Users develop a mental model of the system (a user model) that enables them to understand what it does, how to interact with it, and how it will behave. At first, this will be based on prior experience of other systems or similar activities. Over time, they will develop the model through their experiences with the system itself. The way the designers or engineers think about the system will be reflected in the design model (this distinction was defined in Don Norman’s The Design of Everyday Things).4

As Norman puts it: “The problem is to design the system so that, first, it follows a consistent, coherent conceptualization—a design model—and second, so that the user can develop a mental model of the system—a user model—consistent with the design model.”

The similarity between the design model and the user’s mental model is a core determinant of usability in any system, not just IoT. How easy is it for the user to figure out how to achieve a particular goal using the system (which Norman refers to as bridging “the gulf of execution”5)? How easy is it for the user to understand what the system does in response (“the gulf of evaluation”)? (See Figure 5-3.)

Figure 5-3. The gulfs of evaluation and execution

Ideally, the user model maps closely onto the design model. But frequently, the design model may not be a good fit for what the user wants to do, or users may only partially understand it. If the system doesn’t conform to any prior expectations, users must develop a new mental model, based on trying to infer the design model. Users learn about the design model through the interface, behaviors of the system, and documentation—which Norman refers to as the system image (see Figure 5-4).

Figure 5-4. Diagram: the user, system, and design model (redrawn from http://www.jnd.org/dn.mss/design_as_communication.html)

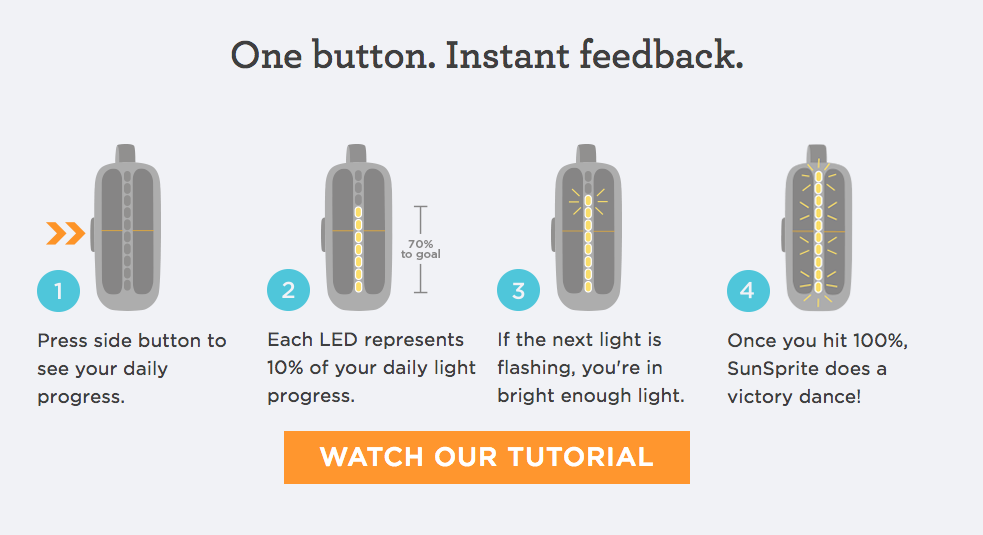

You can’t design the user’s mental model directly. But you can design the system image to convey the design model clearly (see Figure 5-5). You should also define your design model explicitly, to make sure it’s clear, consistent, and not overly technical or complex for your audience.

Figure 5-5. A system image: the SunSprite Tracklight helps users monitor their daylight exposure; the instructions explain what it does, how to interact, and how data is displayed using the LEDs (image: SunSprite)

Multidevice services are conceptually more complex

Back when Norman first wrote about conceptual models, a system was generally a software application running on a standalone computer. Multidevice services make conceptual models more complicated. There are not just more interfaces, but more places where processing and functionality can live and where data can be stored. Because there are more nodes and connections, there are more points of failure and ways to fail. This is often where complexity is exposed: when the system is working well, it may not matter where your data or preferences are stored. But when parts or connections fail, the user has to understand something about how the system works in order to understand what is happening and why.

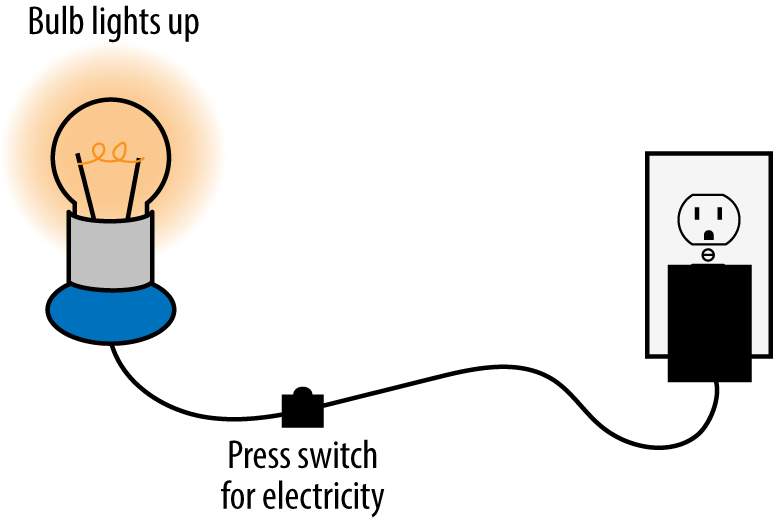

Take the example of a lighting system. The mental model of a lamp is simple: it has power, a switch, a fitting, and a bulb (see Figure 5-6). If the lamp doesn’t work, it’s probably because the power has failed or the bulb has blown.

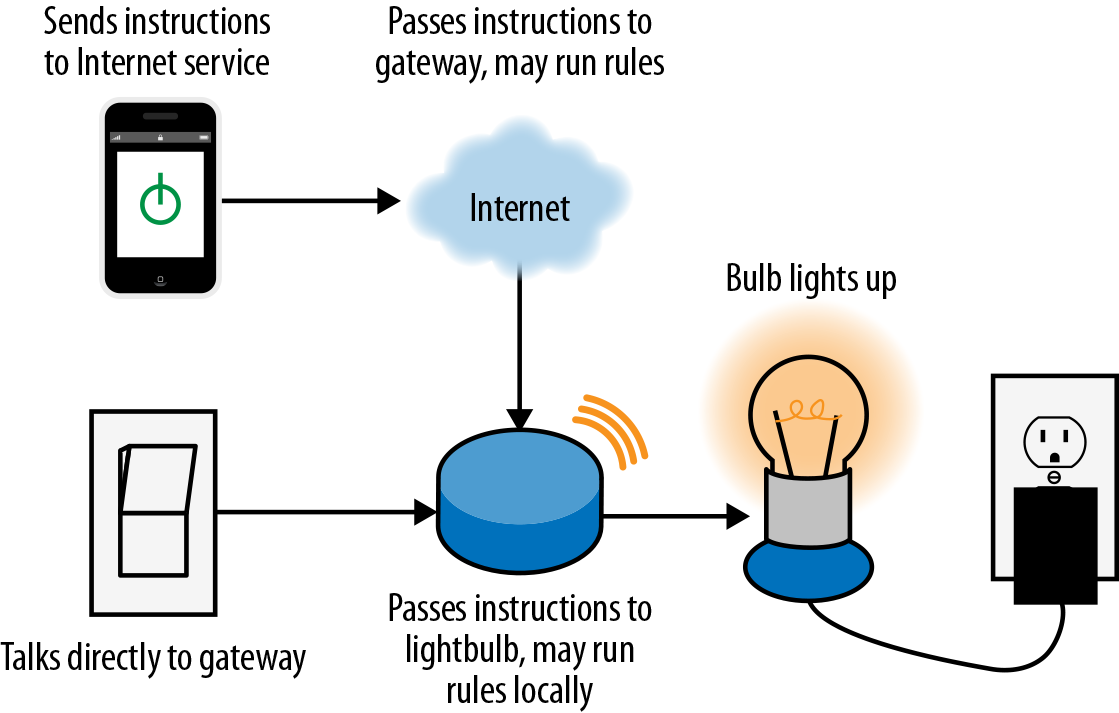

Figure 5-6. A conventional lamp has a simple conceptual model

A connected lighting system has bulbs, switches, fittings, and power too (typically either the bulbs or switches will be connected). It also has an Internet service, probably hosted remotely. It has a smartphone app and perhaps a web app, too. It probably also has a gateway device. It has more parts (see Figure 5-7) and more different kinds of part. It can also do more. It may run automated rules to turn lights on and off at certain times, or when certain trigger events happen (such as the security alarm being activated). The intelligence that controls the system may live in several places: in the bulb or switch itself, in the gateway, in the Internet service, or even in the smartphone app.

Figure 5-7. Connected lighting can do more than conventional lighting, but the conceptual model is more complex

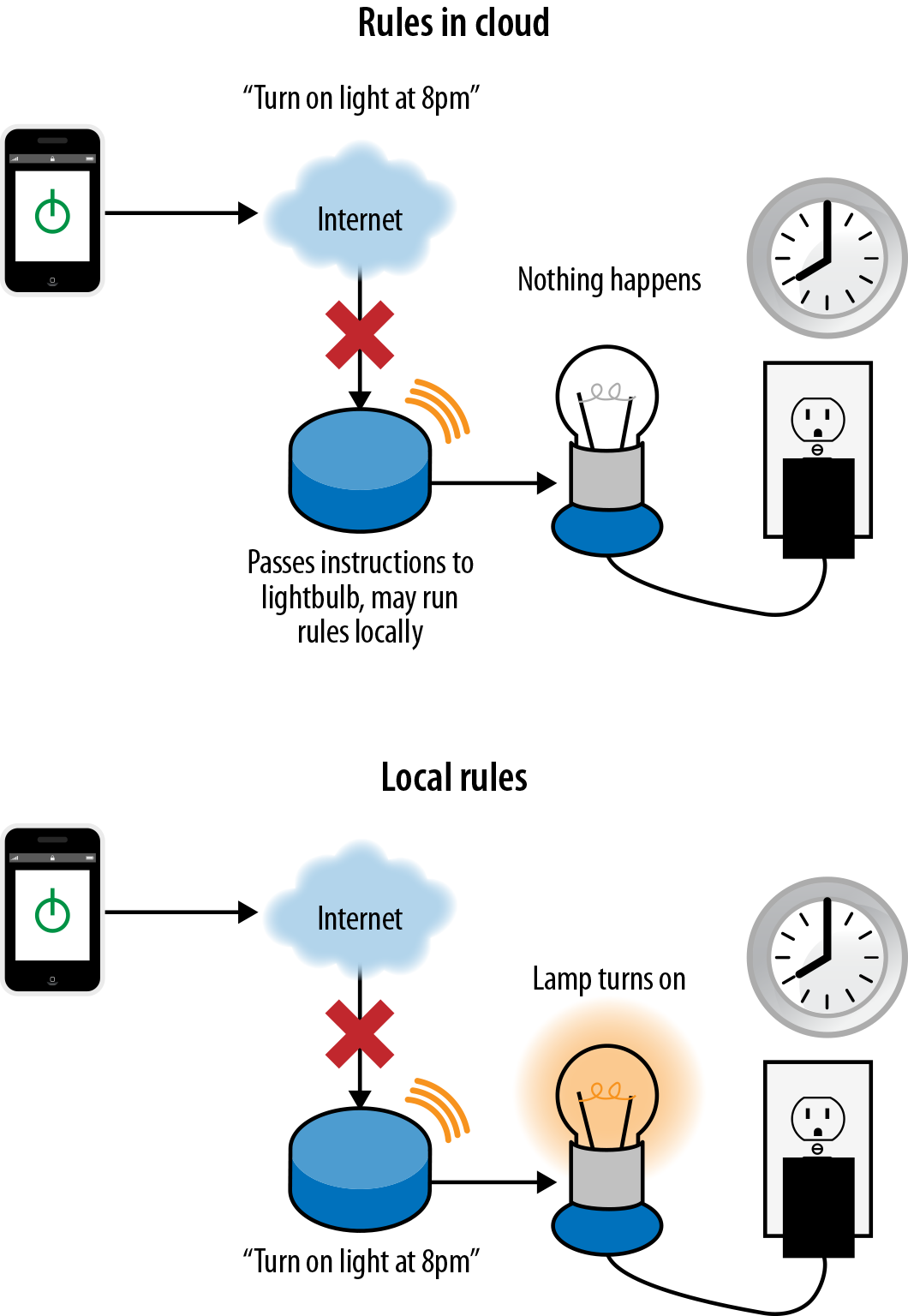

When everything is working well and connected, users don’t need to concern themselves with which code is running where. But if a part of the system develops a fault or loses connectivity or the network is slow, the impact will depend on what that device is doing. Will the lights (or intruder alarm, or heating) stop working if the user’s phone battery runs out or they have no signal? Or will they keep running even if the user cannot remotely access the home at that point? What if the home Internet connection goes down? Will lighting rules continue to work locally? If they are stored in the gateway or edge device, they will. If they are stored in the Internet service or smartphone, they will not (see Figure 5-8).

Figure 5-8. Home automation routines stored in the cloud will not run if the Internet connection goes down; if they are stored locally, they will continue to run, but the user won’t be able to see this or control devices remotely
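The dependency described above can be sketched in a few lines of code. This is a minimal illustration rather than any real product's logic: the component names, the `DEPENDENCIES` table, and the `rule_runs` helper are all assumptions made up for this example. In particular, it assumes that a rule stored on the phone reaches the home through the Internet service. The sketch shows which parts of the system must be working for an automated rule to run, depending on where that rule is stored.

```python
# Hypothetical sketch: which components a lighting rule depends on,
# based on where the rule is stored. All names are illustrative.

# For each storage location, the set of components that must be
# working for the rule to fire. Assumption: a phone-stored rule
# reaches the home via the Internet service.
DEPENDENCIES = {
    "device": {"device"},  # the bulb or switch itself
    "gateway": {"device", "gateway"},
    "cloud": {"device", "gateway", "home_internet", "cloud"},
    "phone": {"device", "gateway", "home_internet", "cloud", "phone"},
}


def rule_runs(stored_in, failed):
    """Return True if a rule stored in `stored_in` still runs when
    the components named in `failed` are unavailable."""
    return DEPENDENCIES[stored_in].isdisjoint(failed)


def remote_control_available(failed):
    """Remote control needs the whole chain from phone to device."""
    return DEPENDENCIES["phone"].isdisjoint(failed)
```

With the home Internet connection down, `rule_runs("gateway", {"home_internet"})` is true while `rule_runs("cloud", {"home_internet"})` is false, which is the difference a user would need to understand when something fails.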

How you choose to distribute system intelligence is a system architecture issue. What’s most appropriate for your system will depend on what it does and your users’ expectations. An intruder alarm should not fail completely because the Internet went down. But it’s not a disaster if your energy monitoring system is occasionally unavailable for short periods of time, as long as data is not lost.

The new challenge for UX is that this is a lot of complexity that users didn’t previously have to worry about (see the discussion of the “surprise package” in Chapter 4). There are two ways to deal with this complexity: you can explain it, or try to hide it.

Although BERG is now defunct, the BERG Cloud bridge, the gateway for Little Printer, was a simple example of a device that explained how the system was working. It had LEDs to show whether the device had power and an Ethernet connection, and upstream and downstream connectivity (see Figure 5-9). It was labeled to explain that upstream meant that the bridge could see the BERG Cloud Internet service, and downstream meant that the ZigBee network used to connect to local devices was running. The gateway was communicating the system image.

Figure 5-9. The BERG Cloud Bridge (image: BERG)

The more complex the system, the more overwhelming it may be to explain in detail. In that case, it would be better to allow the user to work from a simplified mental model. Automatic gearboxes are complex mechanical systems that require only a simplified mental model in order to use (see Figure 5-10). But this is a hard trick to pull off. The gearbox is mature technology and only performs one basic function. Also, most consumers can draw from the experience of driving cars. IoT technology is newer, and often does things that are less familiar to users. Many IoT systems also have multiple functions, so it can be harder to reduce them to a simplified conceptual model.

Figure 5-10. Automatic gearbox controls hide the complexity of the system behind a simplified conceptual model

One example of a product with a simplified conceptual model is Apple iBeacons. Imagine a user walks into a store for which they have an app installed, their location is detected using iBeacons, and they are sent a push notification about a special offer. It’s good enough for that user to understand that the store has sent them a message because they are physically there. They might well think that the beacons are beaming them the message (news articles even describe beacons as working in this way).6

That model isn’t accurate. What actually happens is this: the beacon broadcasts a data packet containing a unique ID and information about where it is based (e.g., the store). When the iOS device detects the broadcast, it wakes up the relevant app (e.g., the store’s loyalty app). That, in turn, triggers the app to display a notification: the app might check in with the backend Internet service to see what notifications are available. The beacon isn’t sending the notification; it’s just acting as a tripwire that tells the store’s app (and backend service) where the user is.
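The sequence above can be made concrete with a small simulation. This is only a sketch under stated assumptions: `Backend`, `StoreApp`, `Phone`, and `beacon_packet` are hypothetical stand-ins invented for illustration and do not correspond to any real iOS or beacon API. What it shows is that the beacon contributes nothing but an identifier and a location, while the app and the backend decide what the user sees.

```python
# Hypothetical simulation of the beacon flow described above.
# None of these classes correspond to a real iOS or backend API.

class Backend:
    """Stands in for the store's Internet service."""

    def __init__(self, offers):
        self.offers = offers  # maps location name -> offer text

    def notifications_for(self, location):
        offer = self.offers.get(location)
        return [offer] if offer else []


class StoreApp:
    """Stands in for the store's loyalty app on the phone."""

    def __init__(self, beacon_id, backend):
        self.beacon_id = beacon_id
        self.backend = backend
        self.shown = []  # notifications displayed to the user

    def on_beacon_detected(self, packet):
        # The app, not the beacon, decides what to show.
        for message in self.backend.notifications_for(packet["location"]):
            self.shown.append(message)


class Phone:
    """Stands in for the operating system's beacon monitoring."""

    def __init__(self):
        self.apps = {}

    def install(self, app):
        self.apps[app.beacon_id] = app

    def receive_broadcast(self, packet):
        app = self.apps.get(packet["id"])
        if app is not None:  # wake only the app registered for this ID
            app.on_beacon_detected(packet)


def beacon_packet(beacon_id, location):
    """The beacon only broadcasts an ID and where it is based."""
    return {"id": beacon_id, "location": location}
```

Broadcasting `beacon_packet("beacon-42", "High Street store")` to a phone with the matching app installed results in the backend's offer appearing in `app.shown`. A packet with an unknown ID is ignored, because no installed app is registered for it.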

That’s a lot more complicated. If the store tried to explain that to their customers, they’d probably just confuse them. In this situation, a simplified conceptual model is good enough to use the system perfectly well, even if it leads the user to some wrong assumptions.

Note that in this case, there’s nothing that can go wrong as a result of the user not understanding the system model. In other situations, a misunderstanding might have serious consequences. For example, if activating an emergency alarm, the user does not just need to know that pressing the button summons help. They may need to know whether pressing the button is guaranteed to get the message through, and whether they should wait for confirmation that someone has received it.

UX researchers at Ericsson have suggested that it is particularly difficult for users to understand networks of devices. Their informal research indicated that users currently think of connections between devices as being “invisible wires.”7 As Ann Light puts it: “Most people are disposed to think of things, not links; of nodes rather than relations.”8 But this way of understanding is not helpful in making sense of complex networks with many interconnections and interdependencies. To understand a system, users must understand the links as well as the nodes.

In 1983, the HCI specialist Larry Tesler (then at Apple) proposed the Law of Conservation of Complexity. Interviewed in Dan Saffer’s book Designing for Interaction, Tesler says: “I postulated that every application must have an inherent amount of irreducible complexity. The only question is who will have to deal with it.”9 His point was that shielding users from complexity would involve extra work from designers and developers.

Figure 5-11. iBeacons diagram: what the user needs to know, and what’s actually happening

User understanding will improve over time with familiarity, but only if we, as designers, help them with clear system images. We need to figure out what complexity users will need to deal with, and where products and tools can be simplified. As a general rule, if the task or activity the user wants to perform is complex or requires a high level of skill, it’s appropriate for the user to engage with that complexity. Or perhaps it’s a job for a professional. If the task or activity can be expressed simply but the technology is complicated, there’s a good case for designing around a simplified mental model.

We don’t yet know what that looks like for IoT. For starters, if you need to explain to the user that part of the system is not working, it’s important to explain why and what this means. For example, if you are alerting them that the security alarm has lost Internet connectivity, you might choose to tell them that the cameras and alarm sounder are still active but that they will not receive alerts. Or if the user is traveling in a different time zone, you might want to show the current time at home on the heating control app, to indicate that schedule changes are based on the local time zone of the controller, not where they are now.
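Both examples can be sketched as small helper functions. This is an illustrative sketch, not a recommended wording or a real product's code: the function names, the message text, and the sample values are assumptions. The first helper builds an alert that states what has failed, what still works, and what the user loses. The second formats the time at the controller's location so that a traveling user can see which clock the schedule follows.

```python
from datetime import datetime, timedelta, timezone


def alarm_offline_message(still_working, unavailable):
    """Build an alert that says what has failed, what still works,
    and what the user loses as a result."""
    return (
        "Your alarm has lost its Internet connection. "
        f"Still working: {', '.join(still_working)}. "
        f"Not available until it reconnects: {', '.join(unavailable)}."
    )


def home_time_label(now_utc, home_tz):
    """Format the current time at the controller's location.

    `home_tz` is any tzinfo object describing the home time zone.
    """
    home_now = now_utc.astimezone(home_tz)
    return f"Time at home: {home_now:%H:%M}"


# Example: the controller's clock is one hour ahead of UTC (assumed).
now = datetime(2024, 1, 15, 22, 30, tzinfo=timezone.utc)
home = timezone(timedelta(hours=1))
label = home_time_label(now, home)
message = alarm_offline_message(
    ["cameras", "alarm sounder"], ["alerts to your phone"]
)
```

A fixed offset keeps the example self-contained. A real app would more likely use a named time zone, so that daylight saving changes at home are handled correctly.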

Composition

Composition refers to the way the functionality of a service—especially the user-facing functionality—is distributed across devices.

Good composition distributes functionality between devices to make the most of the capabilities of each device. Designers should take into account the context in which each device will be used, and what users expect each to do.

Patterns of composition

There are some common patterns to composition. Web services delivered across smartphones, desktops, tablets, and connected TVs are often multichannel. Each device provides the same, or very similar, functionality (in other words, there is a high level of redundancy between devices). Kindle, Netflix (see Figure 5-12), BBC iPlayer, Facebook, and eBay are all examples. Devices with small screens or limited input capabilities may only provide a subset of key functionality. But each device offers a similar basic experience of the service. Many users won’t own or use all the possible devices on which the service could be used, and this doesn’t matter. It’s perfectly possible to use the service via a single device and still have a good experience.

However, many IoT services run on a mix of devices with different capabilities. Service functionality and user interactions will be distributed across different devices. Some functionality may be exclusive to a specific device. For example, in the Withings ecosystem, only the scale can measure body mass and only the blood pressure monitor can measure blood pressure. Some devices may be custom designed for the service. We’ve seen many examples of these already throughout the book, from connected door locks to smart watches and thermostats.

Figure 5-12. Netflix is a multichannel service (image: Netflix)

For reasons of cost, or practicality, these may have limited inputs and outputs that are quite different from a conventional “computer” UI. A door lock may have a keypad and handle and perhaps an LED to show whether it is connected or not. It probably doesn’t have a screen. In these systems, we have to figure out which device handles which functionality. Each device (and the Internet service itself) may have a different role in terms of providing user interactions, connectivity, information gathering, processing, or display. In the terminology of Wäljas et al., this is a cross-media system (Figure 5-13 is an example).

Figure 5-13. Withings is a cross-media ecosystem (images: Withings)

For example, a heating service as shown in Figure 5-14 may comprise:

A boiler that heats the water

An in-home controller/thermostat that tells the boiler when to switch on or off (this may be a separate thermostat and programmer, or just one device)

A gateway that provides a low power connection to the controller and bridge out to the Internet

A cloud service that stores user account information and provides remote access to the system

Smartphone and web apps that connect to the cloud service

Figure 5-14. System diagram of a heating system

Both the Tado and British Gas Hive systems work in this way, but user-facing functionality is distributed differently. The Tado thermostat/heating controller has almost no UI (see Figure 5-15). Users can view the current temperature and set or alter the setpoint, but most interactions are handled on the smartphone.10 This may keep manufacturing costs down. Smartphone interfaces are much cheaper to develop than physical interfaces, as components like screens and buttons are relatively expensive. It’s an elegant choice for a small household occupied mainly by smartphone owners, but there are trade-offs. If you don’t have your phone in hand, or the battery is dead, or you’re a guest in the house without access to the phone UI, you have limited control.

Figure 5-15. The Tado heating controller and smartphone app (image: Tado)

The Hive Active Thermostat heating controller is a standard thermostat with on-device controls (see Figure 5-16). The device is designed to be competitive on cost with nonconnected heating controllers. So interaction design has to work within the constraints of an LCD screen and limited number of buttons. However, heating can also be controlled by phone and web apps, which are probably easier for most people to use than the hardware. This means that heating controls are available to anyone in the house, whether they have access to the smartphone app or not.

Some devices may not support any user interactions at all (see Figure 5-17). Some may be simple sensors that only provide data to the service, as in an air quality monitoring system. In this case, you may hand off all functionality to a single mobile or web app. Although the overall service may be complex, the web or smartphone UI is in some ways simpler to design, as there is only one interface to consider.

Figure 5-16. The Hive Active Thermostat heating controller and smartphone app (images: British Gas)

Figure 5-17. The Bluespray garden irrigation controller is entirely controlled by a smartphone app (image: Bluespray)

When key tasks are available across multiple devices, users may still be able to use the service even when some devices are unavailable. For example, the Withings smartphone app can use the onboard accelerometer to measure activity, so even if the user has forgotten their dedicated activity monitor, they need not lose data (see Figure 5-18).

Figure 5-18. The Withings mobile app can use the phone’s onboard accelerometer to measure activity

Even where different devices support the same tasks, they may be used in different situations. For example, the key advantage of connected heating systems is that the smartphone app enables control from anywhere, whether that’s the other side of the world or the user’s bed.

However, this isn’t a recommendation to duplicate every piece of functionality across every device—redundancy isn’t necessarily a good thing. Too many functions on a single device can make the UI harder to use, especially if the device has limited input/output capabilities. And user interaction components, such as screens and buttons, add significantly to manufacturing costs of embedded devices. The right decision will balance the usefulness, cost, and usability of putting various features on different devices.

For many systems, it makes sense to use a network of devices that are specialized for particular functions. For example, the Lively elderly care service uses specialized sensors to monitor the pillbox, fridge, and the kitchen (see Figure 5-19). This is referred to as synergistic specificity:11 specialized components working together to deliver a service that is more than the sum of those components.

Figure 5-19. Lively elderly care system: safety watch for summoning help, with pedometer and medication reminders, hub, sensors for fridge and pillboxes, and a custom sensor containing an accelerometer to detect movement (image: Lively)

Users may also want to add (or remove) devices to suit their individual needs, or combine them in different ways to fulfill different purposes—this is a modular system. In some cases, different devices can be used to perform different functions as part of different services. For example, a home monitoring system may offer contact, temperature, moisture, smoke, and motion sensors. These could be used to detect occupancy for heating, lighting, and potential safety problems or intruders. A highly modular system can be very powerful. It fits well with the Web philosophy of “small pieces, loosely joined”: specialized components designed to be flexibly combined for many uses.

But systems of multiple devices will often be more complex for users to understand, configure, and use. To use the distinction from Chapter 4, it’s likely to be more of a tool for early adopters than a product that the majority of consumers will configure themselves. It’s also more complex for designers to communicate which devices are doing what at each point.

| Multichannel System | Cross-Media System |
|---|---|
| Same service functionality available across multiple devices | Specialized devices with different capabilities provide a service that is more than the sum of its components |

Determining the right composition

For any service, there is often more than one possible suite of devices that could be used to deliver the service. The decision as to which is most practical will be influenced by the following factors. You may wish to rate, for each task, which devices are optimal, which are acceptable, and which cannot support the task at all.
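One lightweight way to record this kind of prioritization is a task-by-device matrix. The sketch below is purely illustrative: the tasks, devices, and ratings are invented for a hypothetical heating service, and `Fit`, `RATINGS`, `devices_for`, and `tasks_without_fallback` are names made up for this example.

```python
from enum import Enum


class Fit(Enum):
    NOT_POSSIBLE = 0
    ACCEPTABLE = 1
    OPTIMAL = 2


# Hypothetical ratings for a connected heating service.
RATINGS = {
    "turn heating up now": {
        "thermostat": Fit.OPTIMAL,
        "phone app": Fit.OPTIMAL,
        "web app": Fit.ACCEPTABLE,
    },
    "edit weekly schedule": {
        "thermostat": Fit.ACCEPTABLE,
        "phone app": Fit.OPTIMAL,
        "web app": Fit.OPTIMAL,
    },
    "control from outside the home": {
        "thermostat": Fit.NOT_POSSIBLE,
        "phone app": Fit.OPTIMAL,
        "web app": Fit.ACCEPTABLE,
    },
}


def devices_for(task, minimum=Fit.ACCEPTABLE):
    """Devices rated at least `minimum` for the task, sorted by name."""
    return sorted(
        device
        for device, fit in RATINGS[task].items()
        if fit.value >= minimum.value
    )


def tasks_without_fallback():
    """Tasks that fewer than two devices can support at all."""
    return [task for task in RATINGS if len(devices_for(task)) < 2]
```

Here `devices_for("control from outside the home")` returns the phone and web apps only. A task that appears in `tasks_without_fallback()` would be lost whenever its single supporting device is unavailable, which is worth knowing before committing to a composition.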

What best fits the context of use?

The first consideration is what best fits the activity, situation, and user needs. Certain devices need to live in one place where only one function is required (e.g., blind/window shade controllers or light switches). Others are used for activities, or in environmental conditions, that place constraints on form factors and interaction modalities. For example, climbers need their hands free and are out in the open. Delivering altitude, weather, and location information to a weatherproofed wrist-top device makes more sense than using a smartphone. Using a mobile phone’s music controls while driving would be dangerous. Key functionality should be mirrored on the car dashboard in a way that minimizes demands on attention. Features that are essential to one device may be inessential or even inappropriate for others.

What connectivity and power issues do you need to consider?

The availability and reliability of the network connection is also key. If you have a good reliable connection, you can afford to centralize more functionality (e.g., putting your irrigation system controls on a smartphone).

It is simpler to handle information processing in the cloud, as with the Withings scales and fitness trackers, which simply take readings, display them in real time, but handle all other functionality in the online service. A weight and fitness service can handle temporary losses of connectivity gracefully, by storing data locally and syncing when the connection is available again.
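Storing data locally and syncing later is often called store and forward. The following is a minimal sketch of the idea, not a description of how Withings or any other product implements it: `ReadingBuffer` and its `upload` callback are hypothetical names, and the upload is assumed to return True only when the online service has accepted the reading.

```python
from collections import deque


class ReadingBuffer:
    """Keeps readings on the device until they have been uploaded."""

    def __init__(self, upload, max_readings=1000):
        # `upload` is a callable that returns True on success.
        self._upload = upload
        # With maxlen set, the oldest reading is dropped when the
        # buffer is full, so storage limits can still lose data.
        self._pending = deque(maxlen=max_readings)

    def record(self, reading):
        """Store a new reading, then try to send everything pending."""
        self._pending.append(reading)
        self.sync()

    def sync(self):
        """Upload pending readings oldest first; stop at the first
        failure. Returns the number of readings sent."""
        sent = 0
        while self._pending:
            if not self._upload(self._pending[0]):
                break
            self._pending.popleft()
            sent += 1
        return sent

    @property
    def pending(self):
        return list(self._pending)
```

A reading is removed from the buffer only after the upload succeeds, so a dropped connection delays the data rather than losing it. The size limit is the design trade-off: a device with little storage must decide which readings to discard during a long outage.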

However, for other types of service, this will not be acceptable (e.g., where safety or security is at stake). If a monitoring system for an elderly person loses connectivity, it might be acceptable for motion sensor data to be temporarily unavailable to the care giver, as long as it is clear that connectivity has been lost and live data is not available. But it would be completely unacceptable for the elderly person to be unable to use their emergency alarm during this time: the alarm should be able to fall back to using another form of connectivity.
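The fallback behavior for the emergency alarm can be sketched as trying each available connection in priority order until one confirms delivery. This is an assumption-laden illustration: `send_alarm` is a made-up helper, each channel is assumed to be a callable that returns True once the message has been confirmed as received, and the channel names in the usage note are examples only.

```python
def send_alarm(channels):
    """Try each (name, send) pair in priority order.

    Returns the name of the first channel that confirms delivery,
    or None if every channel fails.
    """
    for name, send in channels:
        try:
            if send():
                return name
        except ConnectionError:
            # This channel is down; fall through to the next one.
            continue
    return None
```

For example, `send_alarm([("broadband", try_broadband), ("cellular", try_cellular)])` would return `"cellular"` if the broadband attempt fails and the cellular one succeeds. A result of `None` is the case the interface must make obvious to the person who pressed the button, since they need to know that help has not been summoned.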

In other words, the designer has to make an informed call on which tasks need to be available in different conditions: offline? With no power? Does the system make no sense if connectivity is lost? Is a suitable fallback available? I should not have to worry about being unable to enter and leave my own house because the front door lock has lost connectivity or has no power—and many other household functions must also be taken for granted to be effective. If you can’t afford to lose access to functionality, you may need user controls (and perhaps more onboard intelligence) in the edge devices.

Can you work with preexisting devices?

What hardware can you assume your user base already has and is familiar with using? For example, if users all have smartphones you can use these to handle complex interactions, determine location and identity, and in some cases handle local and Internet connectivity.

Smartphones come with onboard sensors (such as accelerometers), which can be used for tasks such as activity tracking without additional hardware. However, custom form factors (such as wristbands) may provide a better experience in some contexts of use, such as the forthcoming clip-on fall sensor for the Lively safety watch for older adults. Specialist equipment may also tend to offer better performance through better quality parts, such as more powerful GPS chips or better battery life.

What interaction capabilities do the various devices have (or could you cost-effectively include on a custom device)?

You may be able to consider adding or removing interaction capabilities (like screens, buttons, audio beeps, or LEDs) to embedded devices. However, these typically add to production costs, so you will probably need to keep these to a minimum and offload more complex functionality onto a mobile or web UI. If you’re not able to influence the design of the embedded devices, you’ll have to work with the interaction capabilities you have.

You may also decide that just because a device could support a particular function, it does not have to. Keeping things simple may make the device interface easier to understand. For example, a heating controller with a low-resolution screen and limited buttons might be best used for status information and in-the-moment controls (turn the heating up now!). You could offload more complex tasks such as schedule setting onto a web or mobile interface. The bigger screen size and richer interaction capabilities will enable a better design. You can also provide a “good” way to do the task on a fuller featured device and a limited or compromised version on a less capable device that must occasionally work alone. For example, an intruder alarm system may provide an easy way to view which sensors triggered the alarm on a mobile interface. The task may also need to be possible on the alarm panel via a basic LCD screen, even though this is likely to involve many more button presses, perhaps navigating menus and modal states.

Does the system need to work if some devices are unavailable?

What happens if a device is unavailable (e.g., a smartphone is lost or the battery is dead)? Does it need to be used by third parties who may not have access to a web or mobile app, such as visitors to the home? Can the device work offline?

How accurate does sensing need to be?

If a service needs to know the rough location of its user, a smartphone can estimate this from GPS/cell tower signals. If it needs to know which room the user is in at home (e.g., to turn the lights on and off), a smartphone could be used via Bluetooth LE connections, but this will only be accurate if the user carries it with them at all times.

Do users have set expectations of devices?

Users may expect certain devices to conform to familiar form factors, or provide familiar functionality. For example, they are likely to expect a heating controller to have some way of turning the heating on, or up.

How do you balance cost, upgradeability, and flexibility?

User interface components, such as screens and buttons, are expensive to add to embedded devices. You may therefore decide to limit interactions on the embedded devices themselves and do most of the interaction “heavy lifting” via mobile apps or web interfaces.

It can be difficult to add new features to devices that are already out in the field, especially if this requires modifications to the interface. Again, offloading interactions to smartphone and web apps, which can be modified relatively cheaply and quickly, may make sense.

How central to the service are the devices?

Are devices central to the conceptual model, or not? This may not affect the distribution of functionality, but it will affect the way in which you communicate the composition of the system.

Consistency

Users should not have to wonder whether different words, situations, or actions mean the same thing. Follow platform conventions.

JAKOB NIELSEN, 199412

Consistency is well known as a general UI design heuristic. It’s a simple concept to grasp. But deciding what needs to be consistent, and what does not, can be tricky. You may have to trade off one type of consistency for another. Do you make all the buttons look the same so they are easy to identify as buttons? Or does that cause confusion by implying that certain functions are similar, when in fact they are not? Too much consistency, or consistency between the wrong things, can be as damaging as too little.

In the case of cross-platform systems, designers also need to consider consistency across different devices. Consistency works to create a sense of coherence of the overall system.

Words, data, and actions that are the same across devices should be understood to be the same. Words, data, and actions that are different should be understood to be different. This helps users form a clear mental model of the system and its capabilities. Knowledge that users have gained about the system from one device can be transferred to help them learn how to use other devices.

Other elements that may need to be consistent to some degree across devices include the following:

Aesthetic/visual design (to make the devices look, feel, and sound like a family)

Interaction architecture (how functionality is organized)

Interaction logic (how tasks are structured or the types of control used)

Guidelines for Consistency Across Multiple Devices

Determining what should, and what should not, be consistent across multiple devices is also a question of trade-offs. Should you try to make your UIs as consistent as possible across all devices in the system, or should you try to follow the conventions that may exist for individual platforms (such as mobile operating systems)? In this section we propose a set of guidelines to help you balance those decisions.

Use consistent terminology

As a rule of thumb, the highest priority is to use consistent wording across devices. This ensures that data and actions across different platforms are understood to be the same thing. Whatever the display capabilities of each device, you can always give functions or data the same label even if you can’t make them look the same.

For example, imagine you are working on a connected heating controller that offers three mode options: ON (heating is on continuously), AUTO (heating is running to a preprogrammed schedule), and OFF (see Figure 5-20). These are already set in the fixed segment LCD display and cannot be changed. You might think that ON and AUTO are not the clearest terms. You’d prefer to change them to CONTINUOUS and TIMER or SCHEDULE in the smartphone app interface. However, this would create a disconnect: users then have to understand that ON and CONTINUOUS are the same thing.

Figure 5-20. The Hive Active Heating controller is an example of a device with fixed segment LCD labels mapped to physical buttons (image: British Gas)

Users are often intimidated by heating controllers and expect them to be confusing. And they might not have a strong enough mental model of the system to infer that CONTINUOUS and ON are the same thing. Having tested systems with similar issues, we found it was more important that these options were consistently named across devices. The value of better terminology in the mobile app is undermined if users don’t understand that the functions are the same.
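One way to keep wording aligned is to define each label once and have every UI look it up, rather than letting each platform team choose its own terms. The following is a minimal sketch in Python; `HeatingMode`, `MODE_LABELS`, and `label_for` are hypothetical names used for illustration, not part of any real product.

```python
from enum import Enum


class HeatingMode(Enum):
    ON = "on"
    AUTO = "auto"
    OFF = "off"


# Hypothetical single source of truth. The label matches the fixed-segment
# LCD wording; the description is extra help text for richer screens.
MODE_LABELS = {
    HeatingMode.ON: ("ON", "Heating is on continuously"),
    HeatingMode.AUTO: ("AUTO", "Heating follows the programmed schedule"),
    HeatingMode.OFF: ("OFF", "Heating is off"),
}


def label_for(mode: HeatingMode, with_description: bool = False) -> str:
    """Return the label every device shows, optionally with help text."""
    label, description = MODE_LABELS[mode]
    return f"{label}: {description}" if with_description else label
```

With this arrangement, the smartphone app can explain ON and AUTO more fully without renaming them, so the terms still match what is printed on the controller.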

Follow platform conventions

The second priority is for each UI to be consistent with the platform conventions of the device.

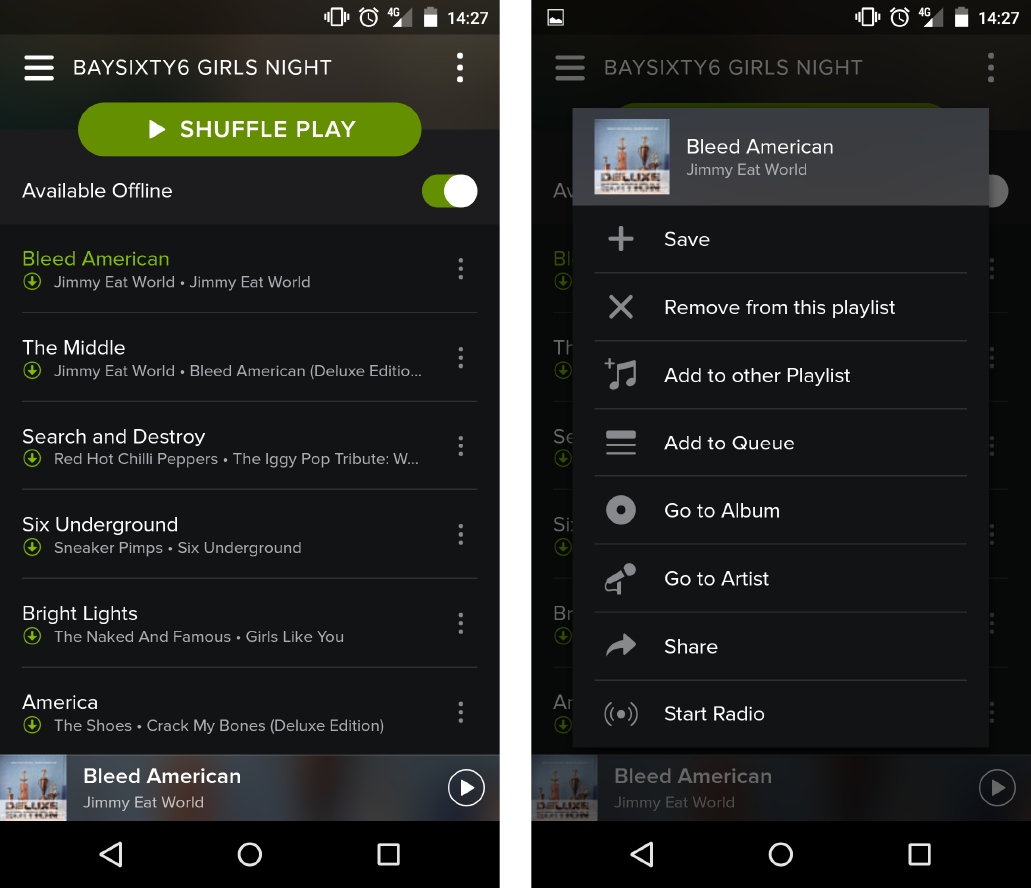

Mobile OS UI conventions are well documented in styleguides (e.g., the iOS Human Interface Guidelines13 and Android Design14). There are some key differences—for example, Android users may expect contextual menus on long press, a convention that does not exist on iOS, where the same menu would be displayed on another screen (see Figure 5-21 and Figure 5-22).

Figure 5-21. The Spotify Android app offers a contextual menu for each track

Figure 5-22. In the Spotify iOS app, the same menu is available on a separate screen

In general, for mobile devices and others that have established platform conventions, following these conventions will make it much easier for users to use your app even if it means some things are done in a different way than they are on any specialized connected devices. A heating control app for iOS must be a good iOS app as well as recognizably part of the heating service. It does not have to be a skeuomorphic representation of a heating controller. But this works both ways: a heating controller does not have to pretend to be an iPhone just because it has an iPhone app. The goal is to ensure that interfaces are appropriate to each device, yet also feel like parts of a coherent service.

Interaction elements such as buttons, menus, and switches should be recognizable according to the platform conventions of the device. An iOS button should generally follow conventions for button styles on iOS rather than trying to look like the physical button on an embedded device.

Most importantly, specific interaction controls should be optimized to the platform. If a physical control uses a wheel, slider, or handle, there is no need to replicate this on a small touchscreen device where it could be harder and more imprecise to use.

For example, the Nest thermostat incorporates a rotating bezel, which is used (among other things) to increase and decrease the temperature. The bezel makes a clicking noise as it is rotated. But the iOS app up/down controls are arrows. The designers could have shown a representation of a bezel onscreen for the user to tap and drag around. But the arrows are a much more efficient and precise control on a touchscreen (see Figure 5-23). Adjustments to domestic temperature are typically within a degree or two, so precision is important to avoid overshooting.

Aesthetic styling

A consistent visual and aesthetic style across all platforms reinforces the perception of a coherent service. Consistent fonts and colors across devices are nice but may not always be practical. For example, the Nest thermostat uses the same font and colors to indicate temperature on the wall thermostat as the iOS app. But this isn’t always going to be possible: a cheap monochrome LCD screen won’t support a choice of fonts anyway. Replicating the LCD font on a web or smartphone app may impact readability. It’s also a definite retro statement that may not be the look you’re after. It’s nearly always aesthetically clunky to make a screen design resemble a physical device.

Figure 5-23. The Nest thermostat and iOS app (showing Celsius temperatures; images: Nest)

Audio is another way to use aesthetic design to create a sense of coherence. Tapping the down/up arrows on the Nest smartphone app produces the same clicking noise per increment as the bezel on the wall thermostat. This is an elegant touch that adds a common aesthetic to each interaction without intruding on usage. It adds to the sense that the devices are a family and helps users form a conceptual model of how the system works.

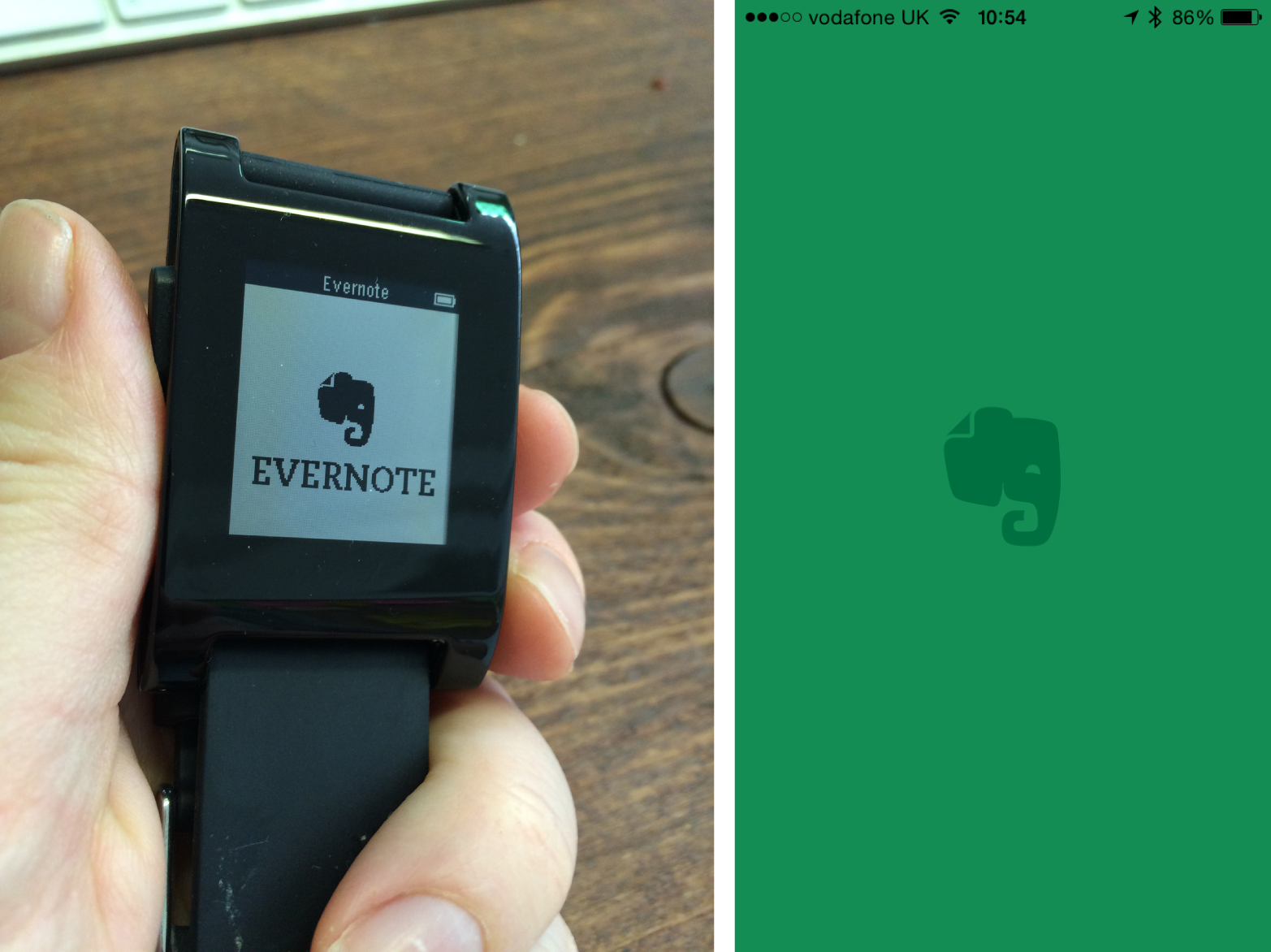

Where visual elements also convey meaning it is vital that they are used in the same way. This is called semantic consistency. To continue with the heating example, you may use red/orange/blue colors to indicate temperature. Or a particular icon might indicate that the water tank is heating up. The icon may be higher resolution on devices with better screens, but it must be recognizably the same thing (see Figure 5-24).

Figure 5-24. An Evernote icon shown on a smartphone screen and Pebble watch

Interaction architecture and functionality

Interaction architecture is the logical hierarchy (or other structure) of the UI as mapped to the controls. This is likely to be less consistent across devices and more platform-dependent. Devices may have different functions in the service. Even where there is an overlap between functions, they may be optimized for different purposes. A wall thermostat might be optimized for small adjustments and switching mode (e.g., turning on the hot water). It might need to support changing the heating schedule, but that’s always going to be a better experience on the mobile or web app. In optimizing the thermostat for quick adjustments, the designers might knowingly create a less-good UX for schedule changes. But they might view this as acceptable if users are likely to change schedules on a smartphone or website anyway.

As devices are used for different things, it’s not necessarily desirable to group functions in exactly the same way. For example, a mobile or tablet screen can provide one-touch access to many functions, facilitating a broad, shallow functional hierarchy. Fitting the same functions into a heating controller with an LCD screen plus three buttons may require a narrower, deeper hierarchy.

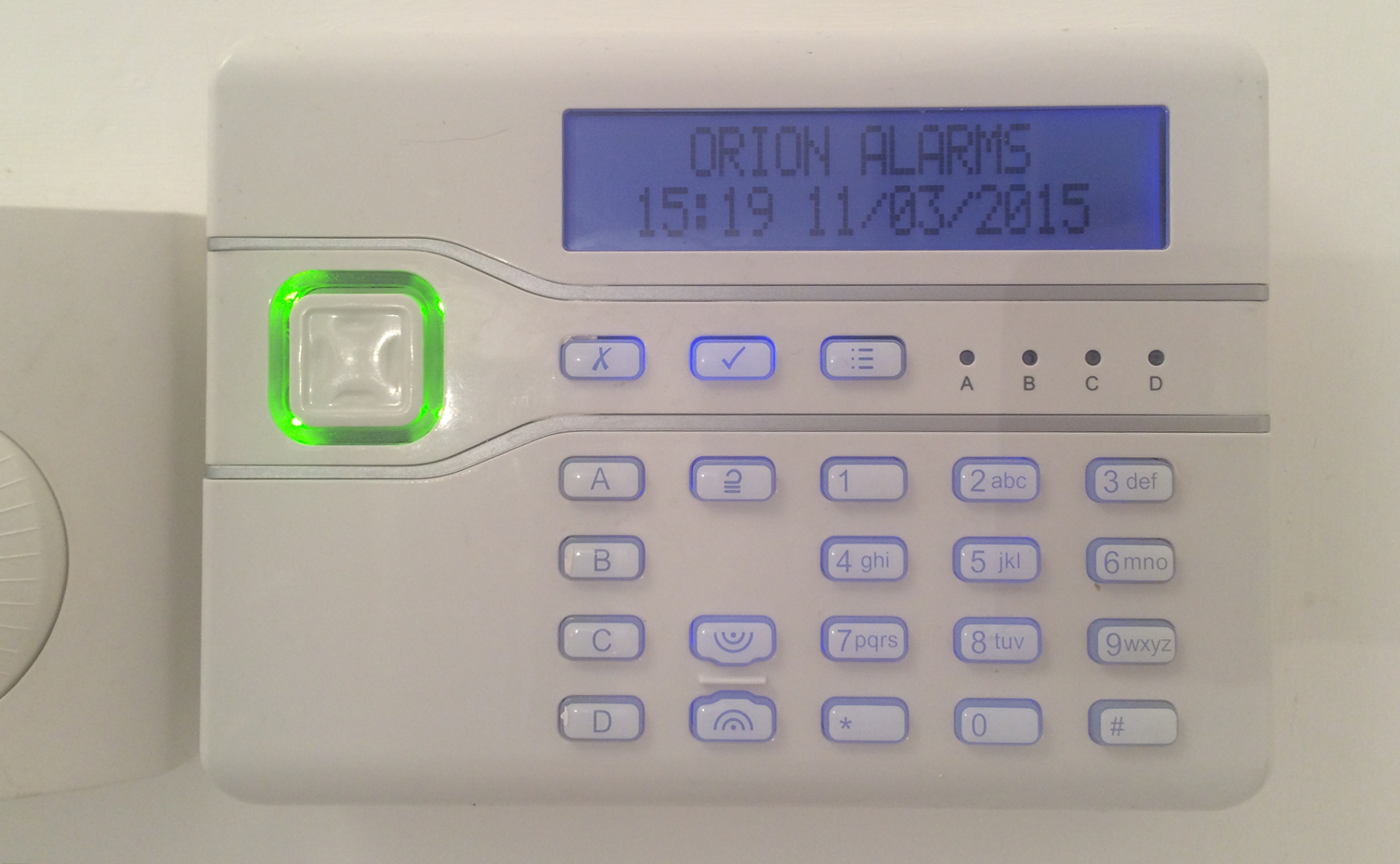

You may also need to use modes, in which the same buttons perform different actions in different states. Modes are typically more difficult to use, but they may be an essential compromise if you’re stuck with the hardware (see Figure 5-25). Structure your mobile or tablet app to be a great solution for that device, and don’t let it be constrained by the limitations of the embedded device.

Figure 5-25. This alarm panel has a modal UI. In its default state, the numeric keypad is used to set/unset the alarm with codes. When the menu button is pressed, the numeric keys correspond to different items in nested menus (corresponding to functions such as setting zones, adding/removing users, viewing the system log and changing configuration settings). There are no cues on the device itself as to which number corresponds to which function, or which menus are available, so the user has to cycle through buttons to find the option they want. Labeled buttons would have made functions easier to find, but would have added many more buttons and increased the bill of materials.

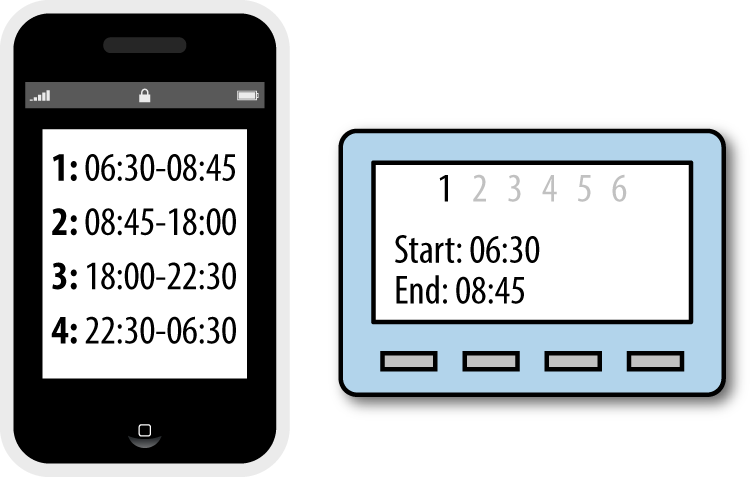

UIs on different devices don’t all have to have the same features, but where they do, the functionality should be consistent. For example, if a heating controller supports a six-phase schedule (six phases throughout the day) but the companion phone app only supports four, users will wonder what happened to the other two and the phone app will run into problems displaying settings that it does not support (see Figure 5-26).

Figure 5-26. When devices support functions inconsistently, confusion will result
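A mismatch like the six-phase versus four-phase schedule can be caught early by recording what each UI supports and checking settings against it. The sketch below is illustrative only; `DeviceCapabilities` and both functions are hypothetical names, and a real system would likely track many more capabilities than schedule length.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeviceCapabilities:
    name: str
    max_schedule_phases: int


def phases_hidden_on(schedule_phases: int, device: DeviceCapabilities) -> int:
    """Number of phases in a schedule that the device's UI could not show."""
    return max(0, schedule_phases - device.max_schedule_phases)


def find_inconsistencies(devices, schedule_phases):
    """List the devices whose UI would drop part of the schedule."""
    return [d.name for d in devices if phases_hidden_on(schedule_phases, d) > 0]
```

For a six-phase schedule, a controller that supports six phases passes the check, while a phone app limited to four is flagged with two phases it cannot display.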

Consider the most likely combinations of devices

As a designer, you may have to think about design across a large ecosystem of devices. Users may not have all of these. Focus your effort to achieve consistency on the combinations of devices users are most likely to have. To stick with the example of a heating system, all your users might have a controller and smartphone app, but few will regularly use both iOS and Android apps. So it’s important that the smartphone apps are both appropriately consistent with the controller. It’s less important that knowledge users acquire from using one smartphone app is transferable to the other. For example, the location of the menu button, or the way that system settings are grouped and accessed, need not be the same across mobile platforms, but should conform to the platform conventions (as discussed in “Follow platform conventions”). Few users will use both and those who do are likely to be familiar with both conventions.

Continuity

In the film industry, continuity editing ensures that different shots flow in a coherent sequence, even if they were filmed in a different order. It would be disruptive to the narrative if a character’s hairstyle changed within a scene, furniture moved around, or a broken window was suddenly intact again.15

In cross-platform interaction design, continuity refers to the flow of data and interactions in a coherent sequence across devices. The user should feel as if they are interacting with the service through the devices, not with a bunch of separate devices.

There are two key components of continuity in cross-device UX. Data and content must be synchronized, and cross-device interactions must be clearly signposted. In our experience, some of the biggest usability challenges in IoT are continuity issues.

Data and Content Synchronization

It sounds obvious that different device UIs should each give the same information on system state.

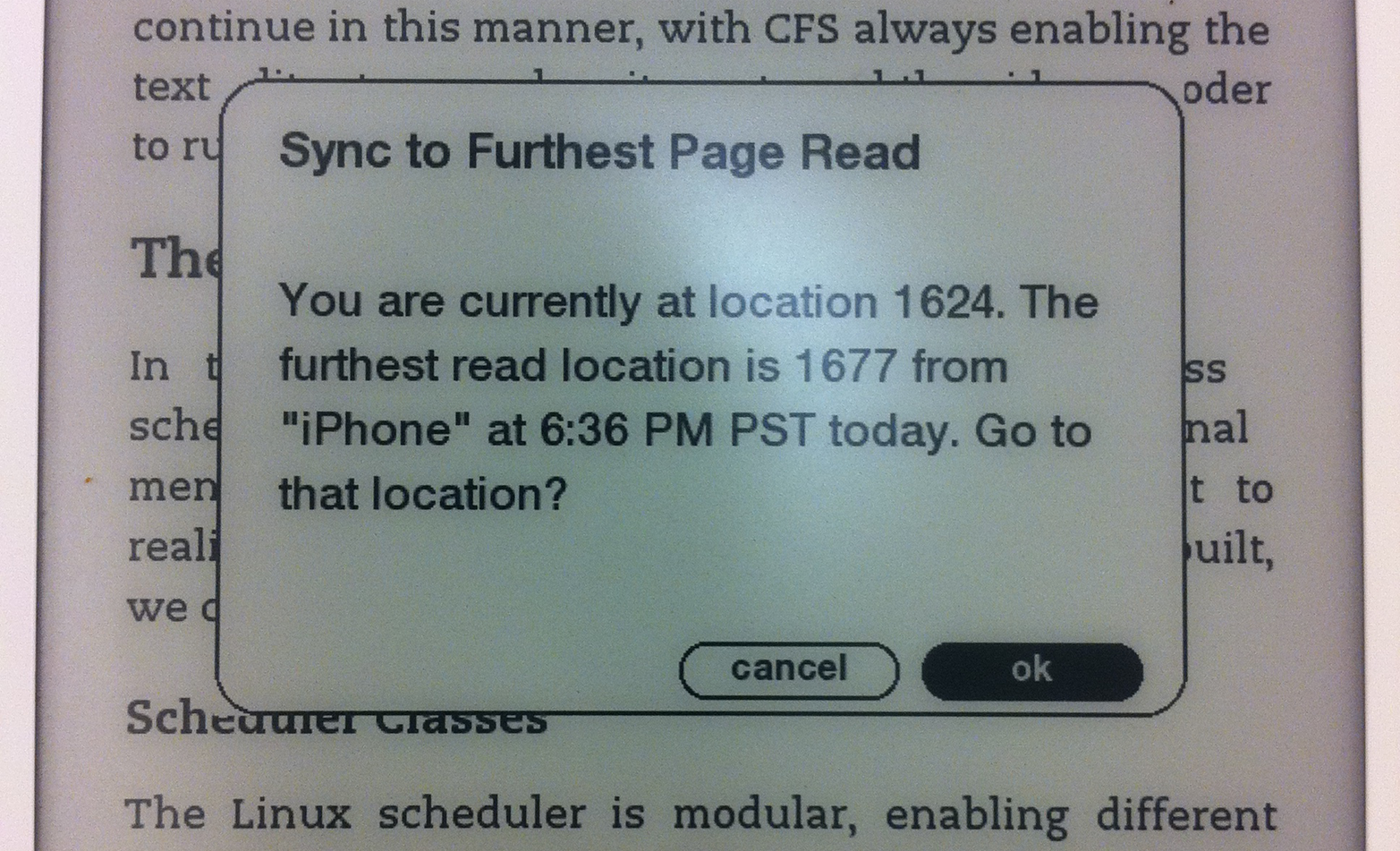

Kindle Whispersync is a great example of synchronization. You can switch between reading on different devices—even swapping between the book and the audiobook—and your place in the book is always up to date (see Figure 5-27).

Figure 5-27. Kindle Whispersync UI dialog (image: Kei Noguchi)

You’d expect this from any other connected device too. For example, say your wall thermostat says it’s 21° C and the heating is on. You’d therefore expect your smartphone heating app to say the same thing, and not to tell you that it’s actually 22° C and the heating is off.

If you turn the heating off from the wall, then you’d expect the smartphone app to reflect that change in state right away, right?

Unfortunately, in IoT this isn’t always possible. Devices that need to conserve power, such as those that run on batteries, often cannot maintain a constant network connection. Instead, they connect intermittently, checking in for new data. This can cause delays and result in situations where some interfaces do not reflect the “correct” state of the system. Network latency is also an issue: it’s possible for the user to know that something has worked before the UI does. For example, they may be physically sitting near a light that they have just turned on and have to wait for a smartphone app UI to tell them what they already know.

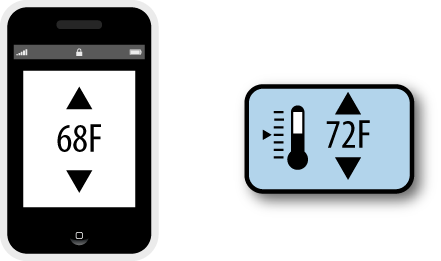

To return to the heating example: in the UK, it’s common for heating controllers to run off a battery.16 So a heating controller may need to connect via a low-powered network like ZigBee to a gateway, and only connect intermittently to check in for new instructions. There might be a delay of perhaps two minutes between a setting being changed on the smartphone app and the heating controller receiving that instruction.

This causes discontinuities in the UX. If a user changes the settings on the smartphone app (say, turning the temperature up from 19° C to 21° C), there may be a period of up to two minutes before the heating controller checks in to the service and receives the updated instruction. During this period, the phone UI could show that the system is set to 21° C, and the controller UI will show that it is set to 19° C. If the user is standing in front of the controller with the smartphone app, they will see two conflicting pieces of information about the current status of the system (see Figure 5-28). This violates one of the most fundamental of Nielsen’s usability heuristics, visibility of system status: “The system should always keep users informed about what is going on, through appropriate feedback within reasonable time.”17

Figure 5-28. In some situations, devices may temporarily report conflicting information about the state of the system

What can you do about this? You could fix this by making the controller check in more frequently. But that would run the battery down within days. Users don’t expect to have to change batteries in heating controllers several times a week. So that’s not practical.

Your next option is to consider how you can design the smartphone UI to account for this two-minute period. There are two possible approaches.

First, you could show the updated settings the user wanted to apply: the temperature setting of 21° C, even though it might (for a short time) give a misleading impression of the system state. If the instruction cannot be applied for some reason (e.g., temporary Internet outage at the property), you can alert the user and then revert the UI to the old state. In essence, you pretend that it has worked while you wait for confirmation from the controller.

Instagram employs similar “white lies” to make their mobile app feel more responsive. For example, Instagram registers likes and comments in the app UI while the request is still being sent to the service. The user is notified if the action fails. They call this “performing actions optimistically”18 (see Figure 5-29).

When everything works OK with our heating example, the responsiveness allows the user to feel as if they are interacting with the service, not just the phone UI. They have direct control of the heating.
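The optimistic approach amounts to keeping two values: what the UI displays and what the controller last confirmed. The following Python sketch shows one possible shape for that logic in the heating example. The class and method names are hypothetical, and it assumes the app is told separately when an instruction succeeds or fails.

```python
class OptimisticSetpoint:
    """Show the requested temperature at once; revert if the request fails."""

    def __init__(self, confirmed_c: float):
        self.confirmed_c = confirmed_c  # last value the controller confirmed
        self.displayed_c = confirmed_c  # what the phone UI shows
        self.alert = None               # message for the user, if any

    def request(self, target_c: float) -> None:
        # Update the UI immediately, before the controller has checked in.
        self.displayed_c = target_c
        self.alert = None

    def on_confirmed(self) -> None:
        # The controller applied the instruction, so the display is now true.
        self.confirmed_c = self.displayed_c

    def on_failed(self, reason: str) -> None:
        # Alert the user, then revert the UI to the last confirmed state.
        self.alert = f"Could not change temperature: {reason}"
        self.displayed_c = self.confirmed_c
```

Between `request` and `on_confirmed`, the displayed and confirmed values differ. That gap is the period during which the phone and the controller may show conflicting information.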

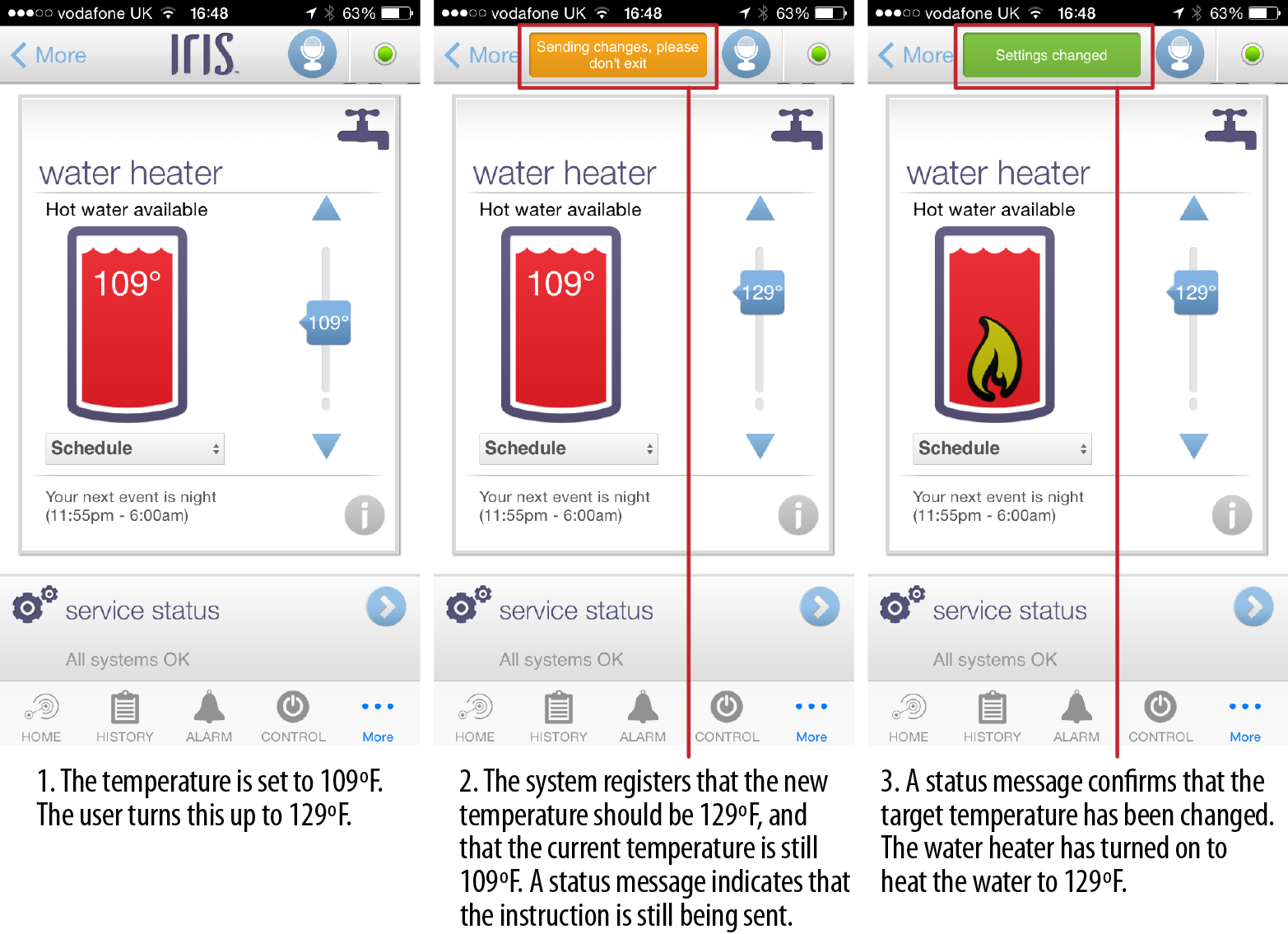

The second approach is to be more transparent about what is technically happening. You show the instruction as being in the process of being sent. This is the approach used by the Lowe’s Iris system: a status message at the top of the screen is shown to indicate that an instruction is being sent. When confirmation is received that the controller received the instruction, a confirmation message is displayed (see Figure 5-30).

Figure 5-29. Instagram registers likes or comments in the UI even while the status bar spinner (top right) shows the request is technically still being sent

Here, the UI is showing the data as being in the process of being sent. In essence, the system is saying to the user: “thanks for your instruction, let me see whether I can do that.” This requires the user to know a little more about how the system works in order to understand why the instruction isn’t just acted upon. It also introduces the possibility of failure to every interaction, successful or not.

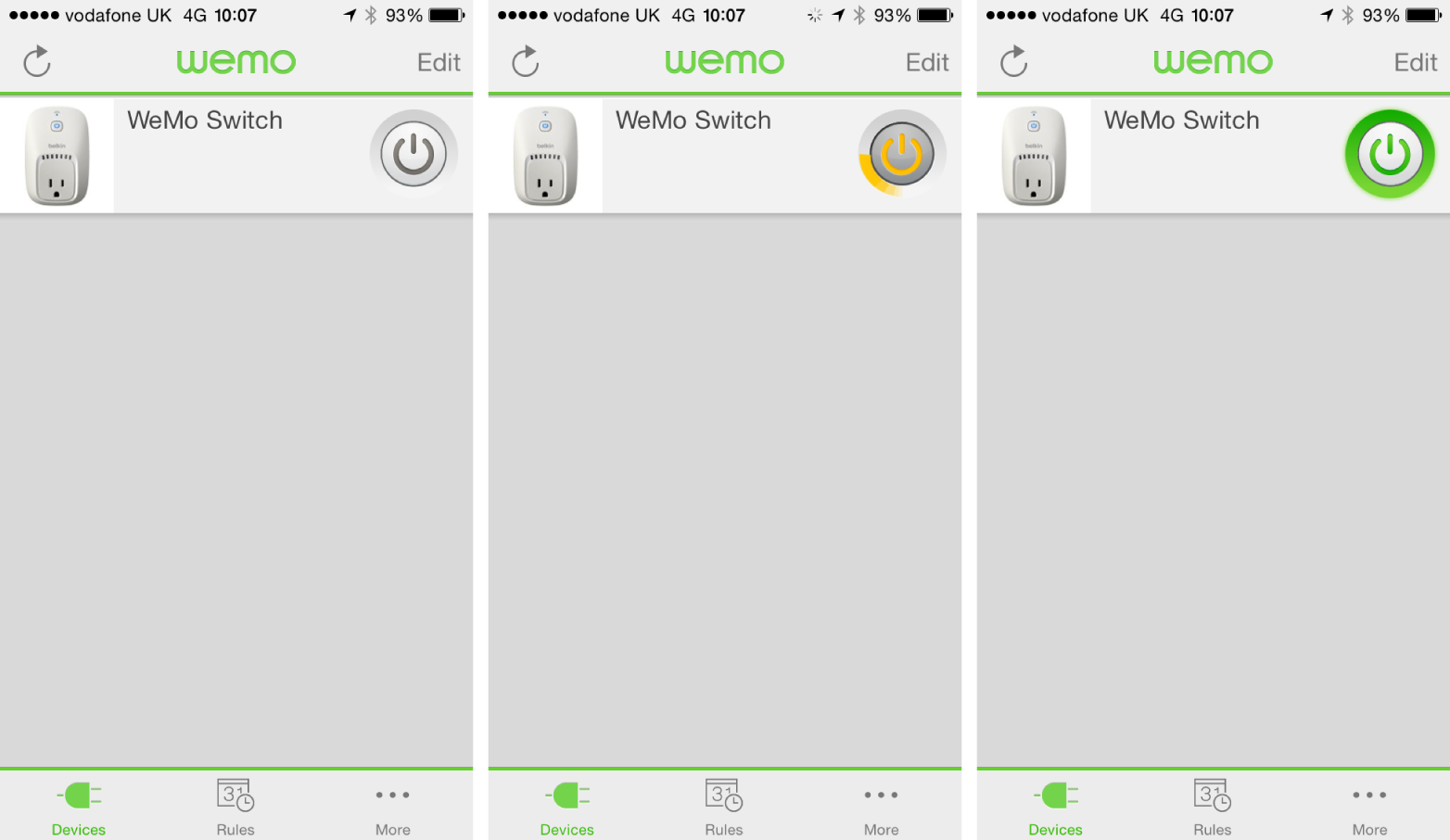

The Belkin WeMo Switch takes a similar but subtler approach (Figure 5-31). When the user turns on the plug, the switch goes from off state (gray) to an intermediate state (yellow with an animation around the switch icon), until it receives confirmation that the action was successful, when it shows as green.

Figure 5-30. The Lowe’s Iris water heater UI, showing the status message

Figure 5-31. The WeMo Switch, showing off, intermediate, and on states
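The intermediate state described for the WeMo Switch can be modeled as a small state machine in which the UI only shows "on" after the device replies. This is a sketch of the general pattern, not Belkin's implementation; the class names are hypothetical, and only the turn-on path is modeled.

```python
from enum import Enum


class SwitchState(Enum):
    OFF = "gray"
    PENDING = "yellow"  # instruction sent, no confirmation yet
    ON = "green"


class TransparentSwitch:
    def __init__(self):
        self.state = SwitchState.OFF

    def tap_on(self) -> None:
        # The user asks to turn on: show the intermediate state, not "on".
        if self.state is SwitchState.OFF:
            self.state = SwitchState.PENDING

    def on_device_reply(self, success: bool) -> None:
        # Leave the intermediate state only when the device answers.
        if self.state is SwitchState.PENDING:
            self.state = SwitchState.ON if success else SwitchState.OFF
```

Unlike the optimistic approach, the UI here never claims a state that the device has not confirmed, at the cost of exposing the wait to the user.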

There are no standards here yet, and no right or wrong answer for every situation. In our first example, the primary use case for the system is to support remote access. If the user is out and about and turns the heating on remotely using the phone app, the two-minute delay is not noticeable. The house will be warm when the user gets home. Even if the user is in, heating is the type of system that operates on a timescale of hours, so unlike a light switch, down-to-the-second responsiveness isn’t necessarily needed. If the user is standing in front of the heating controller with the smartphone app, it may be confusing, but the compromise may be acceptable.

In other situations, any delay or uncertainty about whether a command has been executed might be dangerous. For example, a person who presses an emergency alarm button must be absolutely confident their call for help has been sent and received. In this case, the UI should not make it appear that the system has received and acted on their command until it has definitely done so.

The frequency with which data is synchronized around the system can heavily shape the user value of the service. For example, dual fuel smart meters monitoring natural gas and electricity usage may report data at different frequencies for each fuel. The device monitoring electricity usage can run on mains power, so it can report data every few seconds. However, it would be dangerous to place a mains-powered electrical device on a gas pipe. So the gas monitoring device will be battery powered. To maintain acceptable battery life, gas data will be reported less frequently than electricity data, perhaps only every 30 minutes.

With live electricity data, users can turn devices on and off and use a display (see Figure 5-32) or smartphone to view almost immediately the energy impact each device had. The system can be used to understand the energy consumption of specific appliances and behaviors, such as boiling water in an electric kettle, or turning on a clothes dryer.

With gas data in 30-minute chunks, it’s harder for the user to relate consumption to specific gas-consuming activities in the home. You can’t see the immediate impact of turning on a gas cooker. Was the last half hour’s consumption high because the oven was on, or because the heating or hot water was in use? So the system data does not directly answer the question “how much gas does my oven consume?” As relatively few activities in the home run on gas as compared to electricity, it’s possible for users to make some rough guesses from the data. For example, if no one is at home but gas is being consumed, that might mean that the heating is on (and thus that the schedule should be changed). But for more detailed insights, the system would need to analyze longer-term patterns in gas consumption data and estimate likely usage by appliance.

Figure 5-32. An in-home display from Chameleon Technology (image: Chameleon Technology)

When it’s not possible for all system data to be perfectly synchronized or “live,” it’s important to indicate how old data or status information may be. For example, you might show a timestamp for a sensor reading, or the time that the latest status information was received.
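As a rough illustration, a UI could turn the age of a reading into a short label. The sketch below is in Python; the function name, the one-minute "Live" threshold, and the label wording are all assumptions chosen for the example and would need tuning for a real service.

```python
from datetime import datetime, timedelta


def age_label(reading_time: datetime, now: datetime) -> str:
    """Describe how old a reading is, for display next to the value."""
    age = now - reading_time
    if age < timedelta(minutes=1):
        return "Live"
    minutes = int(age.total_seconds() // 60)
    if minutes < 60:
        return f"Updated {minutes} min ago"
    # For older readings, show the time the data was received.
    return f"Updated at {reading_time:%H:%M}"
```

Passing `now` in explicitly, instead of reading the clock inside the function, keeps the label logic easy to test.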

In the energy monitoring example we just looked at, it’s important that users understand that the two energy readings are not equally “live.” You could display a timestamp for each reading, but you might also choose to display information in a different format. You might use a line graph for electricity (because you have near continuous readings), but a bar chart for gas, where readings are only intermittent.

It’s important to ensure that system status information is as accurate as it needs to be for the context of use. In a safety critical system, it should be clear when data may be out of date or an instruction may not yet have been received or acted on. A remote door locking system should not pretend that it has locked the door until proven otherwise! Perhaps the biggest challenge design-wise is how to design these behaviors for a system that needs to do multiple things with different responsiveness demands, such as heating, lighting, and safety alarms. It’s safest to err on the side of communicating what is actually happening, but in some circumstances that may feel inelegant.
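For a system that mixes functions with different demands, one option is to choose the feedback style per function and default to the transparent one. The Python sketch below illustrates the idea; the function names and the contents of `TOLERATES_OPTIMISTIC` are hypothetical, and deciding which functions belong there is a design judgment for each product.

```python
from enum import Enum


class FeedbackPolicy(Enum):
    OPTIMISTIC = "show the new state immediately, revert on failure"
    CONFIRMED = "show the instruction as sending until the device confirms"


# Hypothetical list of functions where a brief mismatch is acceptable.
TOLERATES_OPTIMISTIC = {"heating"}


def feedback_policy(function: str) -> FeedbackPolicy:
    """Pick a feedback style; anything not listed waits for confirmation."""
    if function in TOLERATES_OPTIMISTIC:
        return FeedbackPolicy.OPTIMISTIC
    return FeedbackPolicy.CONFIRMED
```

Defaulting to confirmation means a newly added function, such as a door lock, is never shown as done before the device has reported back.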

Handling Cross-Device Interactions and Task Migration

Cross-device interactions require users to switch between devices in order to achieve a goal. Examples might include syncing data from a wearable fitness tracker to a smartphone, or connecting home sensors to a gateway.

Transitions between devices should be smooth and well-signposted. The word “seamless” is often used in cross-platform UX, but it’s probably misleading. Where a task requires the user to interact with more than one device, they need to be aware of the seams: the different role of each device, and the point at which the handover happens. This is especially important to help reinforce the user’s mental model of the system, and what each part does. The less familiar they are with it (e.g., during setup when devices are new and unfamiliar), the more explanation is required. Next, we set out some key requirements for effective, usable cross-device interactions.

In the first place, the user needs to know that they need to switch to another device to complete their intended task. They may have to identify the correct device from among several: for example, there may be several identical light bulbs. Then they need to know what they’re being asked to do, and any information that’s needed to interact effectively with the other device. They also need to know why they’re being asked to switch. For example, are they transferring data, or pairing the devices?

For example, the Misfit Shine syncing process tells the user to place or tap the Shine on the iPhone screen (see Figure 5-33). (Data is transferred over Bluetooth LE but the sync is initiated by the phone recognizing the Shine on the touchscreen.)

The Bluetooth pairing process to connect a Jaguar car to a smartphone displays a four-digit code on the dashboard that needs to be entered in the phone (if not already displayed; see Figure 5-34).

Figure 5-33. Misfit Shine syncing (image: Misfit Wearables)

Figure 5-34. Pairing a Jaguar XF car to a smartphone with Bluetooth

The user also needs to know what reaction to expect from the other device. This is especially important if it has a very limited UI. For example, hitting a button on the web UI may make an LED flash for two seconds on a motion sensor to help you know which one it is, but you need to know where to look (see Figure 5-35).

Figure 5-35. Identifying a sensor from the AlertMe web interface (image: AlertMe)

If the interaction is not an integral part of a process (i.e., not something the user has to do), provide enough context/content to enable them to decide whether it’s important right now. For example, the Pebble Smartwatch can notify the user of new emails, texts, and Twitter alerts, and shows some of the content (see Figure 5-36). The user might not be able to see the whole message, but there’s usually enough information to decide whether it’s important to get out the phone and read the whole thing there and then. A wearable that only tells you if you have a message, and not who it is from or what it might be, would not offer much over the phone’s audio alert or vibrate function.

With a multidevice interaction, it is very easy to lose track of your progress in a task, or for one or more devices to lose connectivity. Where possible, design for interrupted use. Try to avoid locking users into lengthy processes (such as setup) that must be completed in one sitting or in a specific order. Provide some flexibility: if the user has to break off and return later, don’t lose their progress—allow them to resume part way through. Guide them back to the parts that need to be completed when they return. For example, a home automation system setup process might require users to associate a gateway with an online account and then pair devices. If the user is interrupted after creating the online account but before pairing the devices, make sure that when they log in there is a clear route to resume and add devices, not just a blank screen!
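Resumable setup depends on the service remembering which steps are done and pointing the user at what remains. The following is a minimal Python sketch of the home automation example; the step names and functions are hypothetical, and a real service would store the completed steps against the user's account.

```python
# Hypothetical setup steps, in the order they are suggested to the user.
SETUP_STEPS = ["create_account", "associate_gateway", "pair_devices"]


def next_step(completed):
    """Return the first unfinished step, or None when setup is complete."""
    for step in SETUP_STEPS:
        if step not in completed:
            return step
    return None


def resume_message(completed):
    """Text for the screen the user sees when they log back in."""
    step = next_step(completed)
    if step is None:
        return "Setup complete"
    return f"Continue setup: {step.replace('_', ' ')}"
```

Because the check is based on which steps are complete and not on a position in a wizard, the user can be guided back to any unfinished part regardless of where they stopped.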

Figure 5-36. A Pebble notification

Broader contexts of interusability

This chapter has focused on cross-device digital interactions. However, these principles can also be applied to more holistic service design thinking across both online and offline interactions. This broadens the focus of UX to include marketing and sales materials that set expectations of what the product does, packaging and setup guides that shape initial impressions of the UX, as well as customer support.

As with cross-device interaction design, the individual parts can be good, but if they don’t work together well the overall experience can still be unsatisfactory or confusing.

You might consider composition when figuring out which setup instructions to put onscreen and which in a print booklet. You would need to consider consistency of language, information graphics, and aesthetics across online and print materials. And you might also need to consider the continuity of any processes that require users to refer between materials. Again, setup is a key example: if your instruction booklet says “now the LED will blink for 2 seconds,” that’s a pointer to look at the other device. Setting user expectations accurately is also a form of continuity: if your marketing materials highlight a feature, it should be easy to find in the UI. If not, that’s a form of discontinuity.

The interusability model may not be complete for the broader service context, but we have found it useful for thinking about interactions that span digital and nondigital media.

Summary

Conventional usability/UX is concerned with interactions between a user and a single UI. Interusability deals with interactions across multiple devices. The aim is to create a coherent UX across the whole system even when devices have very different characteristics.

Users need to form a clear mental model of the overall system, although it can be challenging for them to understand the interconnections between devices.

Designers need to distribute functionality between devices, to suit the capabilities of each and context of use (composition).

They also need to determine which elements of the design should be consistent across which parts of the system (e.g., terminology, platform conventions, aesthetic styling, and interaction architecture).

Data and content can sometimes be out of sync around the system, causing continuity issues. Designers may need to find creative ways of dealing with this in the UI. When interactions begin on one device and switch to another, clear signposting is needed.

1 See Luke Wroblewski’s Mobile First (A Book Apart LLC, 2011), http://abookapart.com/products/mobile-first.

2 C. Denis and L. Karsenty, “Inter-Usability of Multi-Device Systems—A Conceptual Framework,” in Multiple User Interfaces: Cross-Platform Applications and Context-Aware Interfaces, eds. A. Seffah and H. Javahery (Hoboken, NJ: Wiley).

3 M. Wäljas, K. Segerståhl, K. Väänänen-Vainio-Mattila, and H. Oinas-Kukkonen, “Cross-Platform Service User Experience: A Field Study and an Initial Framework,” Proceedings of the 12th International Conference on Human Computer Interaction with Mobile Devices and Services, MobileHCI 2010, p. 219. ACM, New York (2010). Paper available at http://bugi.oulu.fi/~ksegerst/publications/p219-waljas.pdf. I’ll refer to this paper several times in the rest of this chapter as Wäljas et al., although I understand that Katarina Segerståhl was the primary researcher. Her PhD, available at http://herkules.oulu.fi/isbn9789514297274/isbn9789514297274.pdf, builds on the same concepts.

4 Donald Norman, The Design of Everyday Things (New York: Basic Books, 1988).

5 Donald Norman, “Cognitive Engineering,” in User Centered System Design: New Perspectives on Human-Computer Interaction, eds. D. A. Norman and S. W. Draper (Hillsdale, NJ: Lawrence Erlbaum Associates, 1986).

7 Joakim Formo, “The Internet of Things for Mere Mortals,” http://www.ericsson.com/uxblog/2012/04/the-internet-of-things-for-mere-mortals/.

8 In conversation.

9 Dan Saffer, Designing for Interaction (San Francisco: New Riders, 2006). Larry Tesler interview available at http://www.designingforinteraction.com/tesler.html.

10 The first-generation Tado controller had no onboard controls at all: all interactions were via the smartphone.

11 M. A. Schilling, “Toward a General Modular Systems Theory and Its Application to Interfirm Product Modularity,” Academy of Management Review, 25 (2000).

12 J. Nielsen, “Heuristic Evaluation,” in Usability Inspection Methods, eds. J. Nielsen and R.L. Mack (Hoboken, NJ: Wiley).

13 Apple, iOS Human Interface Guidelines, https://developer.apple.com/library/ios/documentation/UserExperience/Conceptual/MobileHIG/

14 Google, Android Design, https://developer.android.com/design/index.html

15 Spotting continuity errors in movies is a sport. Sharp-eyed viewers share the errors they have spotted on websites such as http://www.moviemistakes.com.

16 UK heating engineers prefer battery-powered wireless controllers, as they can be installed easily and sited anywhere without risk that rewiring will be needed. In the UK, mains power is 240V AC and any mains electrical work must be done by a qualified electrician. Even replacing an existing mains controller in the same location requires an electrician. This isn’t an issue in the United States, where HVAC controllers typically run on a special low-voltage circuit, making them safe for homeowners to install themselves. This means that in the United States, it’s feasible to offer a controller that maintains a constant connection to a WiFi network, and the system can always be in sync.

17 J. Nielsen, “Heuristic Evaluation,” in Usability Inspection Methods, eds. J. Nielsen and R.L. Mack (Hoboken, NJ: Wiley).

18 M. Krieger, “Secrets to Lightning-Fast Mobile Design,” Warm Gun Conference 2011, https://speakerdeck.com/mikeyk/secrets-to-lightning-fast-mobile-design.
