Chapter 1. The Problem with Distributed Tracing

I HAVE NO TOOLS BECAUSE I’VE DESTROYED MY TOOLS WITH MY TOOLS.

James Mickens1

The concept of tracing the execution of a computer program is not a new one in any sense. Being able to understand the call stack of a program is fairly critical, you might say, to all manner of profiling, debugging, and monitoring tasks. Indeed, stack traces are likely to be the second most utilized debugging tool in the world, right behind print statements liberally scattered throughout a codebase. Our tools, processes, and technologies have improved over the past two decades, though, and they demand new methodologies and patterns of thinking. As we recalled in the Introduction, modern architectures such as microservices have fundamentally broken these classic methods of profiling, debugging, and monitoring. Distributed tracing stands ready to alleviate these issues and fix the holes in our tools that we have destroyed with our tools.

There’s just one problem—distributed tracing can be hard. Why is this the case? Three fundamental problems generally occur when you’re trying to get started with distributed tracing.

First, you need to be able to generate trace data. Support for distributed tracing as a first-class citizen in your runtime may be spotty or nonexistent. Your software might not be structured to easily accept the instrumentation code required to emit tracing data. You may use patterns that are antithetical to the request-based style of most distributed tracing platforms. Often, distributed tracing initiatives are dead on arrival due to the challenges of instrumenting an existing codebase.

Another problem is how you collect and store the trace data generated by your software. Imagine hundreds or thousands of services, each emitting small chunks of trace data for each request, potentially millions of times per second. How do you capture that data and store it for analysis and retrieval? How do you decide what to keep, and how long to keep it? How do you scale the collection of your data in time with requests to your services?

Finally, once you’ve got all of this data, how do you actually derive value from it? How do you translate the raw trace data that you’re receiving into actionable insights? How do you use trace data to provide context to other service telemetry, reducing the time required to diagnose issues? Can you turn your trace data into value for other parts of the business, not just for engineers? These questions, and more, stymie and confuse many who are trying to get started with distributed tracing.

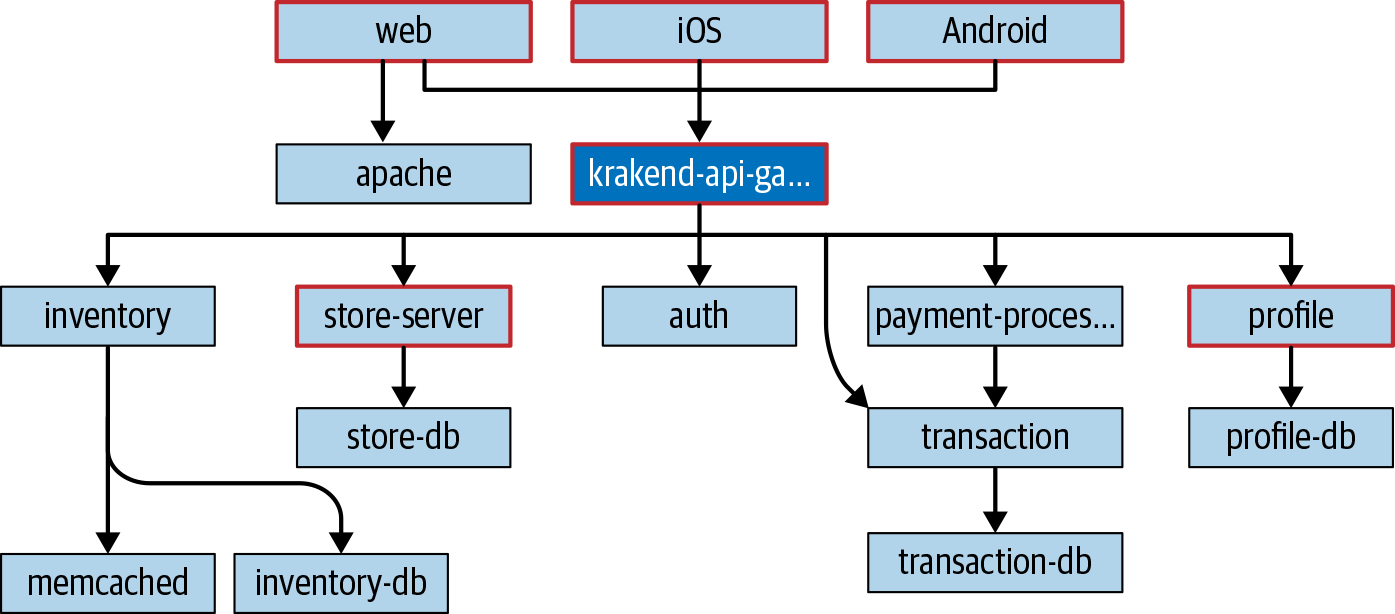

The result of a distributed tracing deployment is a tool that grants you visibility into your deep system and the ability to easily understand how individual services in a request contribute to the overall performance of each request. The trace data you’ll generate can be used not only to display the overall shape of your distributed system (see Figure 1-1), but also to view individual service performance inside a single request.

Figure 1-1. A service map generated from distributed trace data.

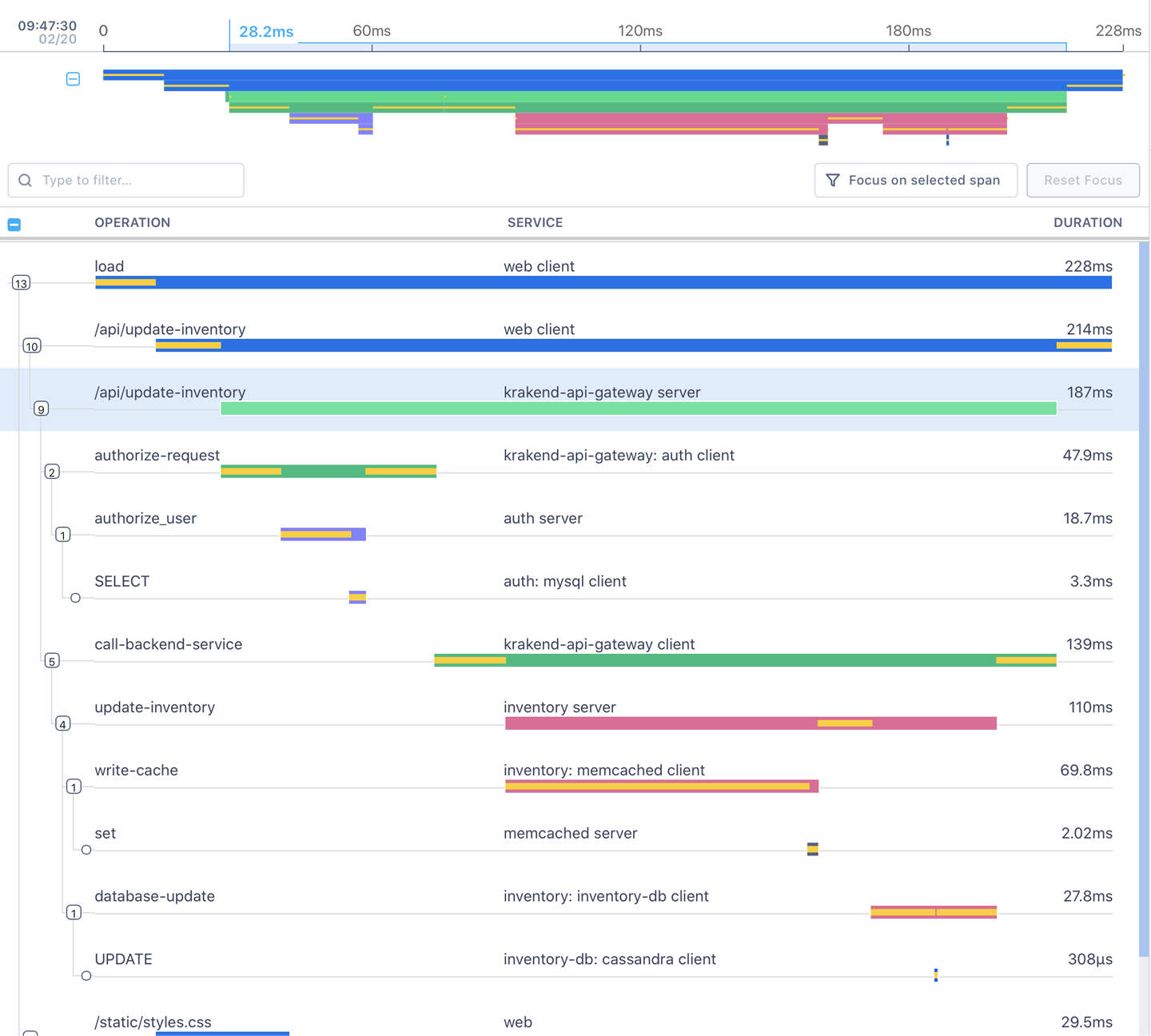

As Figure 1-2 shows, you’ll be able to inspect requests as they move from frontend clients into backend services and understand how—and why—latency or errors are occurring, and what impact they’re having on the entire request. These traces provide a wealth of information that you’ll find invaluable when troubleshooting problems in production, such as metadata that indicates which host or region a particular service is running on. You’ll have the ability to search, sort, filter, group, and generally slice and dice this trace data how you please in order to quickly troubleshoot problems or understand how different dimensions are impacting your service performance.

Figure 1-2. A sample trace demonstrating a request initiated by a frontend web client.

So, how do you get from here to there? What do you need to build a successful distributed tracing deployment?

The Pieces of a Distributed Tracing Deployment

To answer these questions and help you organize your thinking about the subject, we’ve broken down distributed tracing deployments into three main areas of focus, which is also how we’ve organized the book. These three pieces build off of each other, but may be generally useful to different people at different times—by no means do you need to be an expert on all three! Inside each section you’ll find helpful explanations, lessons, and examples of how to build and deliver a distributed tracing deployment at your organization that should help in building confidence in your systems and software.

- Instrumentation, Chapter 2

  Distributed tracing requires traces. Trace data can be generated through instrumentation of your service processes or by transforming existing telemetry data into trace data. In this section, you’ll learn about spans, the building blocks of request-based distributed traces, and how they may be generated by your services. We’ll discuss the state of the art in instrumentation frameworks such as OpenTelemetry, a widely supported open source project that offers an instrumentation API (and more) that allows for easy bootstrapping of distributed tracing into your software. In addition, we’ll discuss the best practices for instrumenting legacy code as well as greenfield development.

- Deployment, Chapter 5

  Once you’re generating trace data, you need to send it somewhere. Deploying tracing for your organization requires an understanding of where your software runs (for end users and their clients, as well as on servers) and how it’s operated. You’ll need to understand the security, privacy, and compliance implications of collecting and storing trace data. You may encounter trade-offs in overhead relating to how much data is kept, and how much is discarded through a process known as sampling. We’ll discuss best practices around all of these topics and help you figure out how to quickly deploy tracing infrastructure for your system.

- Delivering value, Chapter 7

  Once your services are generating trace data and you’ve deployed the necessary infrastructure to collect it, the real fun begins! How do you combine traces with your other observability tools and techniques such as metrics and logs? How do you measure what matters, and how do you define what matters to begin with? Distributed tracing provides the tools you’ll need to answer these questions, and we’ll help you figure it out in this section. You’ll learn how to use traces to improve your baseline performance, as well as how tracing assists you in getting back to that baseline when things catch on fire.
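To make the first two pieces a little more concrete, here is a minimal Python sketch of what a span and a sampling decision might look like. It is an illustration only, not the data model of OpenTelemetry or any other framework: the `Span` class, its field names, and the `keep_trace` helper are all hypothetical, and the hash-based sampling rule is just one simple way to make every service reach the same keep-or-discard decision for a given trace.

```python
import hashlib
import time
import uuid
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Span:
    """One timed unit of work inside a request (hypothetical model)."""

    name: str
    trace_id: str                      # shared by every span in one request
    span_id: str = field(default_factory=lambda: uuid.uuid4().hex[:16])
    parent_id: Optional[str] = None    # None marks the root span
    start: float = field(default_factory=time.time)
    end: Optional[float] = None
    attributes: dict = field(default_factory=dict)

    def child(self, name: str) -> "Span":
        """Start a span for work done on behalf of this one."""
        return Span(name=name, trace_id=self.trace_id, parent_id=self.span_id)

    def finish(self) -> None:
        """Record the end time of the span."""
        self.end = time.time()


def keep_trace(trace_id: str, sample_rate: float) -> bool:
    """Keep roughly `sample_rate` of all traces, deterministically.

    Hashing the trace ID (rather than rolling dice per service) means
    every service makes the same decision for the same request.
    """
    digest = hashlib.sha256(trace_id.encode()).digest()
    bucket = int.from_bytes(digest[:8], "big")   # uniform in [0, 2**64)
    return bucket < int(sample_rate * 2**64)


# A request enters the frontend and calls a database on its behalf.
root = Span(name="GET /checkout", trace_id=uuid.uuid4().hex)
query = root.child("SELECT cart")
query.attributes["db.rows"] = 3        # illustrative metadata
query.finish()
root.finish()

if keep_trace(root.trace_id, sample_rate=0.25):
    print("would export", [root.name, query.name])
```

The parent and trace identifiers are what let a backend reassemble individual spans into the tree shown in a trace view; the sampling function is where the trade-off between overhead and retained data would be tuned.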

All that said, there’s still an open question here: how does distributed tracing relate to microservices, and distributed architectures more generally? We touched on this in the Introduction (“What Is Distributed Tracing?”), but let’s digress for a moment to review the relationship between these things.

Distributed Tracing, Microservices, Serverless, Oh My!

There’s a certain line of thinking about microservices, now that we’re several years past them being the “hot thing” in every analyst’s portfolio of “Top Trends for 20XX”—namely, that the battle has been won. The exploding popularity of cloud computing, Kubernetes, containerization, and other development tools which enable rapid provisioning and deployment of hardware (or hardware-like abstractions) has transformed the industry, undoubtedly. These factors can make it feel like asking the question “Should I use microservices?” would be to out oneself as a fool or charlatan.

Take a step back here and we’ll look at some real-world data. First and foremost, there’s some evidence that containers aren’t exactly as popular in production as the hype may make them seem: only 25% of developers use them in production.2 Quite a few engineering organizations are still using traditional monoliths for a lot of their work. Why? One reason may be, ironically enough, the lack of accessible distributed tracing tools.

Developer and author Martin Fowler identifies three primary considerations for those adopting microservices: the ability to rapidly provision hardware, the ability to rapidly deploy software, and a monitoring regime that can detect serious problems quickly.3 The things we love about microservices (independence, idempotence, etc.) are also the things that make them difficult to understand, especially when things go wrong. Serverless technologies add further confusion to this equation by giving you less visibility into the runtime environment of a particular function and often being stubbornly resistant to monitoring through your favorite tools.

How, then, should we consider distributed tracing arrayed against these questions? First, distributed tracing solves the monitoring question raised by Fowler by providing visibility into the operation of your microservice architecture. It allows you to gain critical insights into the performance and status of individual services as part of a chain of requests in a way that would be difficult or time-consuming to do otherwise. Distributed tracing gives you the ability to understand exactly what a particular, individual service is doing as part of the whole, enabling you to ask and answer questions about the performance of your services and your distributed system.

Traditional metrics and logging alone simply can’t compare to the additional context provided by distributed tracing. Metrics, for example, will allow you to get an aggregate understanding of what’s happening to all instances of a given service, and even allow you to narrow your query to specific groups of services, but fail to account for infinite cardinality.4

Logs, on the other hand, provide extremely fine-grained detail on a given service, but have no built-in way to provide that detail in the context of a request. While you can use metrics and logs to discover and address problems in your distributed system, distributed tracing provides context that helps you narrow down the search space required to discover the root cause of an incident while it’s occurring (when every moment counts).
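As a small illustration of what "the context of a request" can mean for logs, the following sketch stamps every log line with the ID of the request being handled, using only the Python standard library. The variable `current_trace_id`, the logger name `checkout`, and the log format are assumptions made for this example; a tracing library would normally manage the identifier for you.

```python
import contextvars
import io
import logging

# Holds the ID of the request currently being handled (hypothetical name).
current_trace_id = contextvars.ContextVar("current_trace_id", default="-")


class TraceIdFilter(logging.Filter):
    """Copy the active trace ID onto every log record."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.trace_id = current_trace_id.get()
        return True  # never drop the record, only annotate it


def build_logger(stream) -> logging.Logger:
    """Create a logger whose output includes the trace ID."""
    handler = logging.StreamHandler(stream)
    handler.setFormatter(logging.Formatter("trace=%(trace_id)s %(message)s"))
    handler.addFilter(TraceIdFilter())
    logger = logging.getLogger("checkout")
    logger.handlers = [handler]
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


def handle_request(logger: logging.Logger, trace_id: str) -> None:
    """Handle one request; every log line inside carries its trace ID."""
    token = current_trace_id.set(trace_id)
    try:
        logger.info("charging card")
    finally:
        current_trace_id.reset(token)


buffer = io.StringIO()
log = build_logger(buffer)
handle_request(log, "4bf92f3577b34da6a3ce929d0e0e4736")
print(buffer.getvalue(), end="")
# prints: trace=4bf92f3577b34da6a3ce929d0e0e4736 charging card
```

With an identifier like this on each line, log entries from different services can be grouped by request, which is the kind of context a trace provides by design.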

As we mentioned in the Introduction, trying to manage and understand a complex, microservice-based distributed architecture can lead to stress and burnout. If you’re thinking about migrating to microservices, are in the middle of a transition from a monolith to microservices, or are already tasked with wrangling an immense microservice architecture, then you might be experiencing this stress too, when considering how to understand the health and performance of your software. Distributed tracing might not be a panacea, but as part of a larger observability strategy, it can become a critical component of how you operate reliable distributed systems.

The Benefits of Tracing

What are the specific benefits you can achieve with distributed tracing? We’ll talk about this throughout the rest of the book, but let’s review the high-level quick wins first:

- Distributed tracing can transform the way that you develop and deliver software, no doubt about it. It has benefits not only for software quality, but for your organization’s health.

- Distributed tracing can improve developer productivity and your development output. It is the best and easiest way for developers to understand the behavior of distributed systems in production. You will spend less time troubleshooting and debugging a distributed system by using distributed tracing than you would without it, and you’ll discover problems you wouldn’t otherwise realize you had.

- Distributed tracing supports modern polyglot development. Since distributed tracing is agnostic to your programming language, monitoring vendor, and runtime environment, you can propagate a single trace from an iOS-native client through a C++ high-performance proxy through a Java or C# backend to a web-scale database and back, all visualized in a single place, using a single tool. No other set of tools allows you this freedom and flexibility.

- Distributed tracing reduces the overhead required for deployments and rollbacks by quickly giving you visibility into changes. This not only reduces the mean time to resolution of incidents, but decreases the time to market for new features and the mean time to detection of performance regressions. This also improves communication and collaboration across teams because your developers aren’t siloed into a particular monitoring stack for their slice of the pie. Everyone, from frontend developers to database nerds, can look at the same data to understand how changes impact the overall system.
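The polyglot point above rests on services agreeing on how a trace identifier travels with a request. One common convention, which this chapter does not itself prescribe, is the W3C Trace Context `traceparent` HTTP header. The sketch below writes and reads that header in plain Python; the function names `inject` and `extract` are hypothetical, and the parser is deliberately minimal (it handles only version `00` and does not reject all-zero IDs as the full specification requires).

```python
import re
import secrets
from typing import Optional, Tuple

# version "00" - 32 hex trace ID - 16 hex parent span ID - 2 hex flags
TRACEPARENT = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def inject(headers: dict, trace_id: str, span_id: str, sampled: bool = True) -> None:
    """Write the trace context into outgoing request headers."""
    flags = "01" if sampled else "00"
    headers["traceparent"] = f"00-{trace_id}-{span_id}-{flags}"


def extract(headers: dict) -> Optional[Tuple[str, str, bool]]:
    """Read (trace_id, parent_span_id, sampled) from incoming headers.

    Returns None when the header is missing or malformed, in which case
    the receiving service would start a new trace.
    """
    match = TRACEPARENT.match(headers.get("traceparent", ""))
    if match is None:
        return None
    trace_id, parent_id, flags = match.groups()
    return trace_id, parent_id, (int(flags, 16) & 1) == 1


# Calling service: start a trace and attach it to the outgoing request.
outgoing = {}
inject(outgoing, trace_id=secrets.token_hex(16), span_id=secrets.token_hex(8))

# Receiving service (possibly written in another language): continue the trace.
context = extract(outgoing)
print(context)
```

Because the header is just a string with an agreed layout, a client in one language and a backend in another can take part in the same trace without sharing any code.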

Setting the Table

After all that, we hope that we have your attention! Let’s recap:

- Distributed tracing is a tool that allows for profiling and monitoring distributed systems by way of traces, data that represents requests as they flow through a system.

- Distributed tracing is agnostic to your programming language, runtime, or deployment environment and can be used with almost every type of application or service.

- Distributed tracing improves teamwork and coordination, and reduces time to detect and resolve performance issues with your application.

To realize these benefits, first you’ll need some trace data. Then you’ll need to collect it, and finally you’ll have to analyze it. Let’s start at the beginning, then, and talk about instrumenting your code for distributed tracing.
