Premature optimization is the root of all evil.

The design and construction of a computer program can involve thousands of decisions, each representing a trade-off. In difficult decisions, each alternative has significant positive and negative consequences. In trading off, we hope to obtain a near optimal good while minimizing the bad. Perhaps the ultimate trade-off is:

I want to go to heaven, but I don’t want to die.

More practically, the Project Triangle:

Fast. Good. Cheap. Pick Two.

predicts that even under ideal circumstances, it is not possible to obtain fast, good, and cheap. There must be a trade-off.

In computer programs, we see time versus memory trade-offs in the selection of algorithms. We also see expediency or time to market traded against code quality. Such trades can have a large impact on the effectiveness of incremental development.

Every time we touch the code, we are trading off the potential of improving the code against the possibility of injecting a bug. When we look at the performance of programs, we must consider all of these trade-offs.

When looking at optimization, we want to reduce the overall cost of the program. Typically, this cost is the perceived execution time of the program, although we could optimize on other factors. We then should focus on the parts of the program that contribute most significantly to its cost.

For example, suppose that by profiling we discover the cost of a program’s four modules.

| Module | A | B | C | D |
| --- | --- | --- | --- | --- |
| Cost | 54% | 4% | 30% | 12% |

If we could somehow cut the cost of Module B in half, we would reduce the total cost by only 2%. We would get a better result by cutting the cost of Module A by 10%, which would reduce the total cost by 5.4%. There is little benefit from optimizing components that do not contribute significantly to the cost.
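The arithmetic behind that comparison can be sketched in a few lines. The function name `totalSaving` and the object layout are illustrative assumptions; the percentages are the ones in the table.

```javascript
// Share of total cost per module, in percent, from the profiling table.
const moduleCost = { A: 54, B: 4, C: 30, D: 12 };

// Percentage points removed from the total cost when one module's own
// cost is reduced by `percent` (50 means "cut in half").
function totalSaving(costs, moduleName, percent) {
  return costs[moduleName] * percent / 100;
}

console.log(totalSaving(moduleCost, "B", 50)); // 2
console.log(totalSaving(moduleCost, "A", 10)); // 5.4
```

A modest improvement to the dominant module beats a dramatic improvement to a minor one, which is why the profile should decide where the effort goes.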

The analysis of applications is closely related to the analysis of algorithms. When looking at execution time, the place where programs spend most of their time is in loops. The return on optimization of code that is executed only once is negligible. The benefits of optimizing inner loops can be significant.

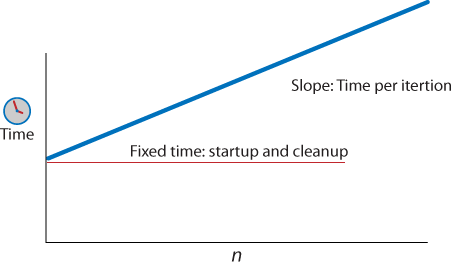

For example, if the cost of a loop is linear with respect to the number of iterations, then we can say it is O(n), and we can graph its performance as shown in Figure 1-1.

The execution time of each iteration is reflected in the slope of the line: the greater the cost, the steeper the slope. The fixed overhead of the loop determines the elevation of its starting point. There is usually little benefit in reducing the fixed overhead. Sometimes there is a benefit in increasing the fixed overhead if the cost of each iteration can be reduced. That can be a good trade-off.
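A small model makes the trade-off concrete. The timings below are hypothetical figures chosen for illustration, not measurements, and the names `plain`, `prepared`, `loopTime`, and `breakEven` are assumptions of this sketch.

```javascript
// Hypothetical timings in microseconds; these are not measurements.
const plain = { fixedOverhead: 1000, costPerIteration: 500 };
const prepared = { fixedOverhead: 20000, costPerIteration: 100 };

// A linear loop costs its fixed overhead plus n times the cost of one
// iteration: the starting elevation plus the slope times n.
function loopTime(loop, n) {
  return loop.fixedOverhead + loop.costPerIteration * n;
}

// Number of iterations at which the two lines cross.
function breakEven(a, b) {
  return (b.fixedOverhead - a.fixedOverhead) /
    (a.costPerIteration - b.costPerIteration);
}

console.log(loopTime(plain, 10), loopTime(prepared, 10));   // 6000 21000
console.log(loopTime(plain, 100), loopTime(prepared, 100)); // 51000 30000
console.log(breakEven(plain, prepared));                    // 47.5
```

Under these assumed numbers the extra setup pays for itself once the loop runs more than 47 times. Below that, the higher overhead is a loss.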

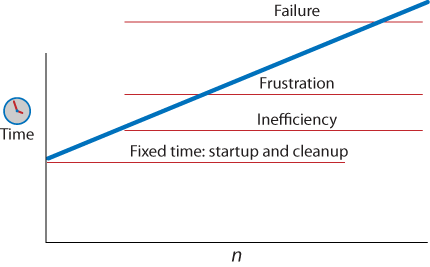

In addition to the plot of execution time, there are three lines—the Axes of Error—that our line must not intersect (see Figure 1-2). The first is the Inefficiency line. Crossing this line reduces the user’s ability to concentrate. This can also make people irritable. The second is the Frustration line. When this line is crossed, the user is aware that he is being forced to wait. This invites him to think about other things, such as the desirability of competing web applications. The third is the Failure line. This is when the user refreshes or closes the browser because the application appears to have crashed, or the browser itself produces a dialog suggesting that the application has failed and that the user should take action.

There are three ways to avoid intersecting the Axes of Error: reduce the cost of each iteration, reduce the number of iterations, or redesign the application.

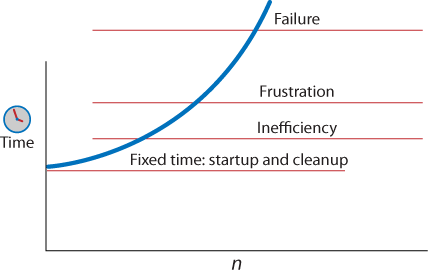

When loops become nested, your options are reduced. If the cost of the loop is O(n log n), O(n²), or worse, reducing the time per iteration is not effective (see Figure 1-3). The only effective options are to reduce n or to replace the algorithm. Fiddling with the cost per iteration will be effective only when n is very small.
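As one illustration of replacing the algorithm rather than tuning the iteration, consider checking a list for duplicates. The task and both function names are assumptions chosen for this sketch; the text does not name a specific problem.

```javascript
// O(n²): the nested loops compare every pair of items.
function hasDuplicateQuadratic(items) {
  for (let i = 0; i < items.length; i += 1) {
    for (let j = i + 1; j < items.length; j += 1) {
      if (items[i] === items[j]) {
        return true;
      }
    }
  }
  return false;
}

// O(n) on average: a Set remembers what has been seen, so each item
// is examined once. (A Set treats NaN as equal to itself, unlike ===.)
function hasDuplicateLinear(items) {
  const seen = new Set();
  for (const item of items) {
    if (seen.has(item)) {
      return true;
    }
    seen.add(item);
  }
  return false;
}

console.log(hasDuplicateQuadratic(["a", "b", "a"])); // true
console.log(hasDuplicateLinear(["a", "b", "c"]));    // false
```

No amount of tightening the comparison inside the nested version changes its shape. The second version changes the curve itself, at the price of extra memory for the Set.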

Programs must be designed to be correct. If the program isn’t right, it doesn’t matter if it is fast. However, it is important to determine whether it has performance problems as early as possible in the development cycle. In testing web applications, test with slow machines and slow networks that more closely mimic those of real users. Testing in developer configurations is likely to mask performance problems.

Refactoring the code can reduce its apparent complexity, making optimization and other transformations more likely to yield benefits. For example, adopting the YSlow rules can have a huge impact on the delivery time of web pages (see http://developer.yahoo.com/yslow/).

Even so, it is difficult for web applications to get under the Inefficiency line because of the size and complexity of web pages. Web pages are big, heavy, multipart things. Page replacement comes with a significant cost. For applications where the difference between successive pages is relatively small, use of Ajax techniques can produce a significant improvement.

Instead of requesting a replacement page as a result of a user action, a packet of data is sent to the server (usually encoded as JSON text) and the server responds with another packet (also typically JSON-encoded) containing data. A JavaScript program uses that data to update the browser’s display. The amount of data transferred is significantly reduced, and the time between the user action and the visible feedback is also significantly reduced. The amount of work that the server must do is reduced. The amount of work that the browser must do is reduced. The amount of work that the Ajax programmer must do, unfortunately, is likely to increase. That is one of the trade-offs.
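The exchange described above might look roughly like the following. The endpoint `/api/cart`, the message shapes, and the helper names `buildRequest` and `applyReply` are all hypothetical; `fetch` is the standard browser API for issuing the request.

```javascript
// Hypothetical endpoint and message shapes, for illustration only.
// Encode a user action as a small JSON packet for the server.
function buildRequest(action) {
  return {
    url: "/api/cart",
    options: {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify(action)
    }
  };
}

// Merge the server's small JSON reply into the data behind the display,
// returning a new object rather than replacing the whole page.
function applyReply(view, replyText) {
  return Object.assign({}, view, JSON.parse(replyText));
}

// In a browser the two halves might be joined like this:
//   const request = buildRequest({ add: "sku-42", quantity: 1 });
//   fetch(request.url, request.options)
//     .then((response) => response.text())
//     .then((text) => { view = applyReply(view, text); });
```

Only the action and the changed fields cross the network. The programmer now has to maintain both halves of the conversation, which is the extra work the paragraph above warns about.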

The architecture of an Ajax application is significantly different from most other sorts of applications because it is divided between two systems. Getting the division of labor right is essential if the Ajax approach is to have a positive impact on performance. The packets should be as small as possible. The application should be constructed as a conversation between the browser and the server, in which the two halves communicate in a concise, expressive, shared language. Just-in-time data delivery allows the browser side of the application to keep n small, which tends to keep the loops fast.

A common mistake in Ajax applications is to send all of the application’s data to the browser. This reintroduces the latency problems that Ajax is supposed to avoid. It also enlarges the volume of data that must be handled in the browser, increasing n and again compromising performance.

Ajax applications are challenging to write because the browser was not designed to be an application platform. The scripting language and the Document Object Model (DOM) were intended to support applications composed of simple forms. Surprisingly, the browser gets enough right that it is possible to use it to deliver sophisticated applications. Unfortunately, it didn’t get everything right, so the level of difficulty can be high. This can be mitigated with the use of Ajax libraries (e.g., http://developer.yahoo.com/yui/). An Ajax library uses the expressive power of JavaScript to raise the DOM to a practical level, as well as repairing many of the hazards that can prevent applications from running acceptably on the many brands of browsers.

Unfortunately, the DOM API is very inefficient and mysterious. The greatest cost in running programs tends to be the DOM, not JavaScript. At the Velocity 2008 conference, the Microsoft Internet Explorer 8 team shared this performance data on how time is spent in the Alexa 100 pages.[2]

| Activity | Layout | Rendering | HTML | Marshaling | DOM | Format | JScript | Other |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Cost | 43.16% | 27.25% | 2.81% | 7.34% | 5.05% | 8.66% | 3.23% | 2.5% |

The cost of running JavaScript is insignificant compared to the other things that the browser spends time on. The Microsoft team also gave an example of a more aggressive Ajax application, the opening of an email thread.

| Activity | Layout | Rendering | HTML | Marshaling | DOM | Format | JScript | Other |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Cost | 9.41% | 9.21% | 1.57% | 7.85% | 12.47% | 38.97% | 14.43% | 3.72% |

The cost of the script is still less than 15%. Now CSS processing (the Format column) is the greatest cost. Understanding the mysteries of the DOM and working to suppress its impact is clearly a better strategy than attempting to speed up the script. If you could heroically make the script run twice as fast, the total time would fall by only about 7%, and it would barely be noticed.
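One widely used way to limit the DOM's impact is to assemble new nodes off-document and attach them in a single step. The sketch below assumes that `doc` is the browser's `document` and that `list` is an element already on the page; the function name `appendItems` is hypothetical, and whether the technique helps in a given application should be confirmed by measurement.

```javascript
// doc is expected to be the browser's document object; list is an
// element that is already part of the page.
function appendItems(doc, list, labels) {
  const fragment = doc.createDocumentFragment();
  labels.forEach(function (label) {
    const item = doc.createElement("li");
    item.textContent = label;
    fragment.appendChild(item); // built off-document
  });
  list.appendChild(fragment);   // the only change to the live page
}

// In a browser:
//   appendItems(document, document.getElementById("inbox"), subjects);
```

The live page is touched once no matter how many items are added, instead of once per item.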

There is a tendency among application designers to add wow features to Ajax applications. These are intended to invoke a reaction such as, “Wow, I didn’t know browsers could do that.” When used badly, wow features can interfere with the productivity of users by distracting them or forcing them to wait for animated sequences to play out. Misused wow features can also cause unnecessary DOM manipulations, which can come with a surprisingly high cost.

Wow features should be used only when they genuinely improve the experience of the user. They should not be used to show off or to compensate for deficiencies in functionality or usability.

Design for things that the browser can do well. For example, viewing a database as an infinitely scrolling list requires that the browser hold on to and display a much larger set than it can manage efficiently. A better alternative is to have a very effective paginating display with no scrolling at all. This provides better performance and can be easier to use.
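A paginating display only ever needs one page of records at a time. The helper below is a minimal sketch with a hypothetical name and return shape; in practice the slicing would more likely happen on the server, so that only the rows for the current page reach the browser.

```javascript
// Return the rows for one page (pages are numbered from 1) along with
// the total number of pages.
function pageOf(records, pageNumber, pageSize) {
  const start = (pageNumber - 1) * pageSize;
  return {
    page: pageNumber,
    pageCount: Math.ceil(records.length / pageSize),
    rows: records.slice(start, start + pageSize)
  };
}

const sample = ["a", "b", "c", "d", "e"];
console.log(pageOf(sample, 2, 2).rows);      // [ 'c', 'd' ]
console.log(pageOf(sample, 3, 2).rows);      // [ 'e' ]
console.log(pageOf(sample, 1, 2).pageCount); // 3
```

The browser holds and renders at most `pageSize` rows, so n stays small however large the database grows.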

Most JavaScript engines were optimized for quick time to market, not performance, so it is natural to assume that JavaScript is always the bottleneck. Typically, however, the bottleneck is not JavaScript, but the DOM, so fiddling with scripts will have little effectiveness.

Fiddling should be avoided. Programs should be coded for correctness and clarity. Fiddling tends to work against clarity, which can increase the susceptibility of the program to attract bugs.

Fortunately, competitive pressure is forcing the browser makers to improve the efficiency of their JavaScript engines. These improvements will enable new classes of applications in the browser.

Avoid obscure idioms that might be faster unless you can prove that they will have a noticeable impact on your application. In most cases, they will have no noticeable impact except to degrade the quality of your code. Do not tune to the quirks of particular browsers. The browsers are still in development and may ultimately favor better coding practices.

If you feel you must fiddle, measure first. Our intuitions of the true costs of a program are usually wrong. Only by measuring can you have confidence that you are having a positive effect on performance.
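A measurement does not need elaborate tooling to be better than a guess. The helper below is a rough sketch with a hypothetical name: it uses `performance.now()` where it is available and falls back to `Date.now()`, and it reports an average over several runs because a single run is too noisy to trust.

```javascript
// Average wall-clock time of one call to task, in milliseconds.
function averageMillis(task, runs) {
  const clock = (typeof performance !== "undefined") ? performance : Date;
  const start = clock.now();
  for (let i = 0; i < runs; i += 1) {
    task();
  }
  return (clock.now() - start) / runs;
}

// Example: time a candidate before and after a change, then compare.
//   const before = averageMillis(currentVersion, 1000);
//   const after = averageMillis(tunedVersion, 1000);
```

Timings gathered this way vary between browsers and machines, so they are best treated as a comparison between two versions on the same setup rather than as absolute figures.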

Everything is a trade-off. When optimizing for performance, do not waste time trying to speed up code that does not consume a significant amount of the time. Measure first. Back out of any optimization that does not provide an enjoyable benefit.

Browsers tend to spend little time running JavaScript. Most of their time is spent in the DOM. Ask your browser maker to provide better performance measurement tools.

Code for quality. Clean, legible, well-organized code is easier to get right, easier to maintain, and easier to optimize. Avoid tricks except when they can be proven to substantially improve performance.

Ajax techniques, when used well, can make applications faster. The key is in establishing a balance between the browser and the server. Ajax provides an effective alternative to page replacement, turning the browser into a powerful application platform, but your success is not guaranteed. The browser is a challenging platform and your intuitions about performance are not reliable. The chapters that follow will help you understand how to make even faster web sites.
