Chapter 10. Spark Components and Packages

Spark has a large number of components designed to work together as an integrated system, and many of them are distributed as part of Spark itself. This differs from the Hadoop ecosystem, which has separate projects or systems for each task. You’ve already seen how to use the Spark Core, SQL, and ML components effectively; this chapter introduces Spark’s streaming components, as well as the external/community components (often referred to as packages). Having a largely integrated system gives Spark two advantages: with fewer dependencies and systems to keep track of, both deployment/cluster management and application development become simpler.

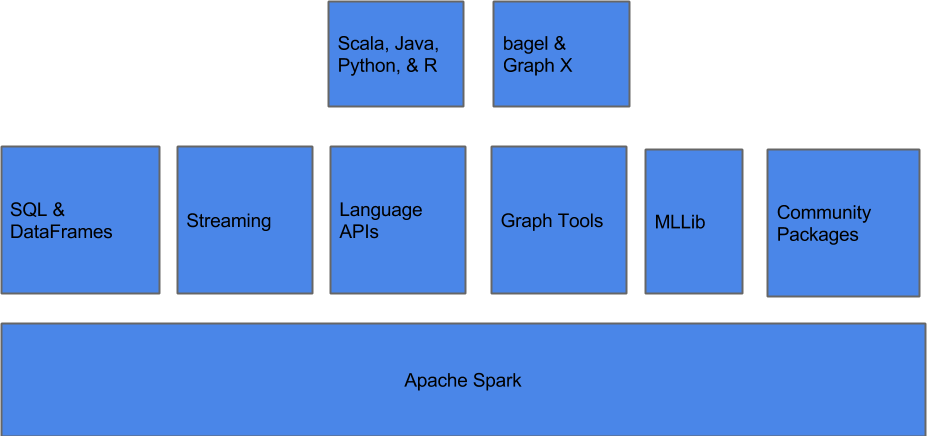

Even early versions of Spark provided tools that traditionally would have required the coordination of multiple systems, as illustrated in Figure 10-1.

Figure 10-1. Spark components diagram

As Datasets and the Spark SQL engine have become building blocks for other components inside Spark, the minor reorganization illustrated in Figure 10-2 gives a more up-to-date picture, including two of Spark’s newest components, Spark ML and Structured Streaming.

Much of your knowledge from working with core Spark and Spark SQL can be applied to the other components, although each one has some unique considerations.

Figure 10-2. Spark 2.0+ revised components diagram
