This book describes the jobs that I and other networking engineers have performed on client networks over the past few years. We are considered network warriors because of the way that we attack networking challenges and solve issues for our clients. Network warriors come from different backgrounds, including service provider routing, security, and the enterprise. They are experts on many different types of equipment: Cisco, Check Point, and Extreme, to name a few. A warrior may be a member of the client’s networking staff, drafted in for a period of time to be part of the solution, but more often than not, the warriors are transient engineers brought into the client’s location.

This book offers a glimpse into the workings of a Juniper Networks warrior. We work in tribes, groups of aligned warriors working with a client toward a set of common goals. Typically technical, commonly political, and almost always economic, these goals are our guides and our measures of success.

Note

To help you get the picture, a quote from the 1970 movie M*A*S*H is just about right for us network warriors: “We are the Pros from Dover and we figure to crack this kid’s chest and get off to the golf course before it gets dark.” Well, not really, but the sentiment is there. We are here to get the job done!

Over the past four years, I have been privileged to team with talented network engineers in a large number of engagements, using a tribal approach to problem solving and design implementation. It is a treat to witness when multiple network warriors put their heads together for a client. But alas, in some cases it’s not possible to muster a team, whether due to financial constraints, complexity, or timing, and the “tribe” for the engagement ends up being just you. Such was the case for the first domain we’ll look at in this book, deploying a corporate VPN.

While I used Juniper Networks design resources for this engagement, there were no other technical team members actively engaged, and I resigned myself to doing this job as a tribe of one (although with backup support only a phone call away; don’t you just love the promise of JTAC if needed?).

The project came to Proteus Networks (my employer) from a small value-added reseller (VAR) based in New England. “We just sold a half-dozen small Juniper Networks routers and the buyers need some help getting up and running.” I thrive on such a detailed statement of work. After a phone call to the VAR, and a couple of calls to the customer, I was able to determine the requirements for this lonely engagement.

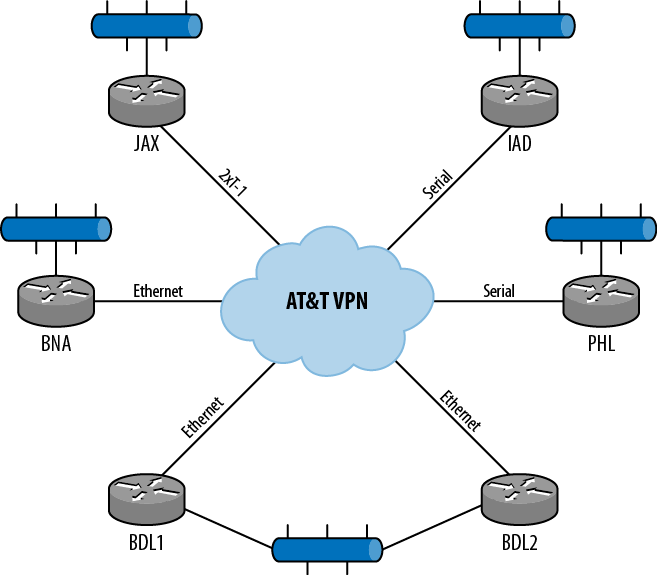

The company is an enterprise with five locations in the Eastern US (Figure 1-1). The headquarters are located in Hartford, CT (BDL). This location houses the management offices, the accounting and HR departments, the primary data center, and warehouse facilities. A backup data center is located in the Nashville, TN (BNA) area in a leased facility. There are three other warehouses scattered down the Eastern Seaboard, with the southernmost being in Florida.

The company has a CEO who is a techie (he was a Coast Guard radio technician, the kind that can make a radio with nothing more than a soldering iron and a handful of sand). He has kept up with developments in CRM (customer relationship management), inventory control, and web sales. He has grown his company to be a leader in his industry segment by being able to predict when his customers are going to need his product, often before the customers themselves know it.

Prior to the upgrade, the company was running on an Internet-based wide area network. All sites were connected to the Internet and had IPSec tunnels back to the headquarters, creating a virtual private network. The sites have DSL Internet connections from the local ISPs. Each location has a simple LAN/firewall network using static routes to send traffic to the Internet or the main location. At the main location, a series of static routes parse the traffic to its destinations.

In 2010, the company created a disaster recovery and business continuity site in Nashville. The original connectivity between the primary servers in Hartford and the backups in Nashville was a private line service running at 1.5 Mbps.

Local inventory control servers in each of the remote locations are updated to the main servers every evening using the IPSec VPN connection over the Internet. All sales transactions are performed over the Internet, either by customers on the web pages or by sales staff over a web portal. The web servers and other backend functions are performed at the main server location (Hartford).

The Hartford servers continually update the backup servers in Nashville. In the event of a failure of the Hartford servers, the traffic is directed to the Nashville servers. This changeover is currently a manual process.

Voice communication is provided by cellular service at all locations. A virtual PBX in the main location forwards incoming customer calls to the sales forces. Each employee has a smartphone, tablet, or laptop for instant messaging and email access.

The CEO realized that the use of nonsecured (reliability-wise) facilities for the core business functions would sooner or later cause an issue with the company. To avert a disaster, and to add services, he decided to install a provider-provisioned VPN (PPVPN) between all the sites. Each site would operate independently, as before, for Internet access and voice service, but all interoffice communications would now be handled by the PPVPN rather than the Internet.

This change would also allow the CEO to offer a series of how-to seminars to the customer base. The videos would be shot on location and uploaded to the main location for post-production work. They would be offered on the website and distributed in DVD format to the retail stores.

After talking to a number of service providers, the CEO settled on AT&T’s VPN service. It offered connectivity options that made sense for the locations and the bandwidth required from each location. An option with the VPN service is the class of service differentiation that can prioritize traffic as it passes over the infrastructure. The CEO thought that this might come in handy for the different traffic types found on the internal network.

The company looked at managed routers from AT&T and compared the price to that of purchasing new equipment. They decided on buying Juniper Networks routers for all the locations. They bought MX10s for the Hartford and Nashville locations, to take advantage of the growth opportunity and the Ethernet interfaces for the VPN. At the remote locations, private line connectivity to the AT&T POP was more economical than Ethernet, so an older J-series router (J2320) was chosen for the availability of the serial cards (T1 and RS-422).

What the CEO required for support was configurations for each of the routers that interconnected the existing LANs at each of the locations and offered a class of service for the different traffic types. He also wanted assistance during the installation of the routers at all the locations.

AT&T offers a variety of profiles for customers that wish to add class of service to their VPN connectivity. AT&T provides customers with a class of service planning document and a worksheet to be filled out if that customer will be using AT&T’s managed router service with the VPN. In this case, the CEO decided that the Multimedia Standard Profile #110 (reference Table 1-1) made the most sense for the company. The description of that profile from the AT&T planning document is as follows.

Multimedia Standard Profiles

Profiles in this category are recommended for high-speed connections or if the bandwidth demands of Real-time applications is small. Currently, the Multimedia profiles with Real-time bandwidth allocation are only available on private leased line access of 768K or greater. Ideal candidates are Branch sites or Remote locations that require Real-time as well as other application access. The maximum bandwidth allocated for the Real-time class is reserved but can be shared among non Real-time traffic classes in the configured proportions.

Table 1-1. Subset of AT&T CoS profiles

| Traffic class | Profile #108 | Profile #109 | Profile #110 | Profile #111 | Profile #112 | Profile #113 | Profile #114 | Profile #115 | Profile #116 |
|---|---|---|---|---|---|---|---|---|---|
| CoS1 (real-time) | 50% | 40% | 40% | 40% | 20% | 20% | 20% | 10% | 10% |
| CoS2 (critical data) | 0% | 48% | 36% | 24% | 64% | 48% | 32% | 54% | 54% |
| CoS3 (business data) | 0% | 6% | 18% | 18% | 8% | 24% | 24% | 27% | 27% |
| CoS4 (standard data) | 50% | 6% | 6% | 18% | 8% | 8% | 24% | 9% | 9% |

The CEO defined four classes of traffic that sort of fit into the AT&T classes (see the design trade-offs below for the mapping and parameters). They are:

- Multimedia traffic

The how-to videos, training seminars, and company-wide video meetings are all classified as multimedia traffic. This traffic, while not always present, represents the largest bandwidth hog.

- Inventory control and CRM traffic

This traffic represents a constant background of traffic on the network. Traffic is generated as customers order supplies, as inventory control tracks retail floats, and as materials are received and shipped. While this traffic amounts only to a few kilobits per second overall, it is the most important traffic on the network as far as business success is concerned.

- Office automation traffic

This is the normal email, IM, file transfer, and remote printing traffic that is seen in any office. This traffic is the lowest in bandwidth and has the lowest priority.

- Internet traffic

While each remote site maintains an Internet presence, the servers in the Hartford (or Nashville) location process all supply searches, orders, and queries. This traffic is backhauled from the remote sites to the main servers for processing, and then returned to the Internet at the remote locations. This traffic is a growing stream that is the future of the company. An effort in inventory control will give each member of the sales teams and the delivery force smartphones that can scan supplies and query the inventory server to locate the nearest item.

The design trade-offs for this project fell into three categories: routing between sites, class of service categories, and survivability. The first and the last are interrelated, so they are covered first.

The legacy network used static routes at the remote sites to connect users to the Internet and the IPSec VPN tunnels to the main location. The main site has static routes to each of the other locations. All remote locations have network address translation (NAT) for all outgoing traffic. All incoming Internet traffic that arrives at the main location is NATed to a private address and forwarded to the appropriate server.

The trade-off here is one of simplicity versus reliability. The existing system was created so that no knowledge was needed to set up the devices and have them operate. All traffic either went to the Internet or the IPSec tunnel. Once the VPN is added to the network, the simplicity of the single-path network disappears.

The installation of the VPN allows a secondary path for traffic to the Internet as well as from the Internet to the remote sites. The existing static routes could be retained and additional static routing could be used for the new equipment, but this approach requires knowledge of the possible routing outcomes, metrics, bandwidths, and outages. In the event of a failure, this knowledge would be crucial to determining the cause of the outage and getting the traffic back up and running.

The use of a dynamic routing protocol would allow redundancy and best-path routing without the need for a knowledgeable overseeing eye. It would provide a simple configuration for the routers while supporting survivability in the network. The routing protocol of choice is Open Shortest Path First (OSPF) between the router and the firewall in each site.

The use of OSPF could have negative effects as well. Some implementations of IPSec tunnels (for example, policy-based tunnels in SSG devices) cannot support routing protocols. Also, the interface between customer edge (CE) routers and AT&T’s VPN does not support OSPF (it supports only BGP or static routes).

A word about the technical staffing is required at this point. The company has no technical networking staff. It employs a software person to maintain the servers and keep the programs going, but there is no network person on staff. The CEO has made most of the previous configurations and arrangements, with help from the equipment vendors’ sales engineers. In light of this arrangement, anything that I set up has to be very easy to understand, troubleshoot, and fix. Fortunately, the remote office domain of Juniper Networks allows for hands-off operation, troubleshooting, and maintenance.

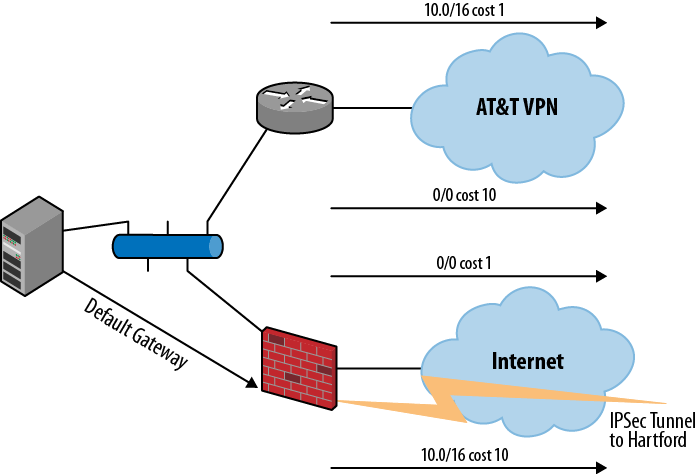

The decision was made to use both approaches. At the remote locations, including the new data center, static routing is used to reduce the complexity while still providing redundancy. The remote locations will retain their existing IPSec tunnels and ISP connections and add the VPN connection. The primary route to the main location is via the VPN for corporate traffic, with the IPSec tunnel as backup. Internet traffic will use the opposite approach: the local ISP connection is the primary route, with the backup being provided by the VPN. Simple route metrics (costs) are used to create the primary/secondary relationship. The routes for the remote locations look like those in Figure 1-2.

The impact on the existing firewall is minimal. An additional static route is added to the firewall, identifying the location of the alternate route to the main location via the VPN router with a preferred metric. The existing static route to the main location pointing to the IPSec tunnel is modified to have a higher metric (less preferred).
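The primary/secondary behavior of two static routes to the same destination can be sketched in a few lines. This is a simplified model rather than firewall code: the `NextHop` type, the metric values, and the `reachable` flag are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class NextHop:
    name: str
    metric: int             # lower metric is preferred
    reachable: bool = True   # illustrative stand-in for link or tunnel state


def select_next_hop(candidates: List[NextHop]) -> Optional[NextHop]:
    """Pick the reachable next hop with the lowest metric, or None if all are down."""
    usable = [nh for nh in candidates if nh.reachable]
    return min(usable, key=lambda nh: nh.metric, default=None)


# Corporate traffic from a remote site: VPN router preferred, IPSec tunnel as backup.
# The metric values 10 and 100 are hypothetical.
to_main = [NextHop("vpn-router", metric=10), NextHop("ipsec-tunnel", metric=100)]
```

Marking the VPN entry as unreachable makes `select_next_hop(to_main)` fall back to the tunnel, which is the relationship the two metrics are meant to create.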

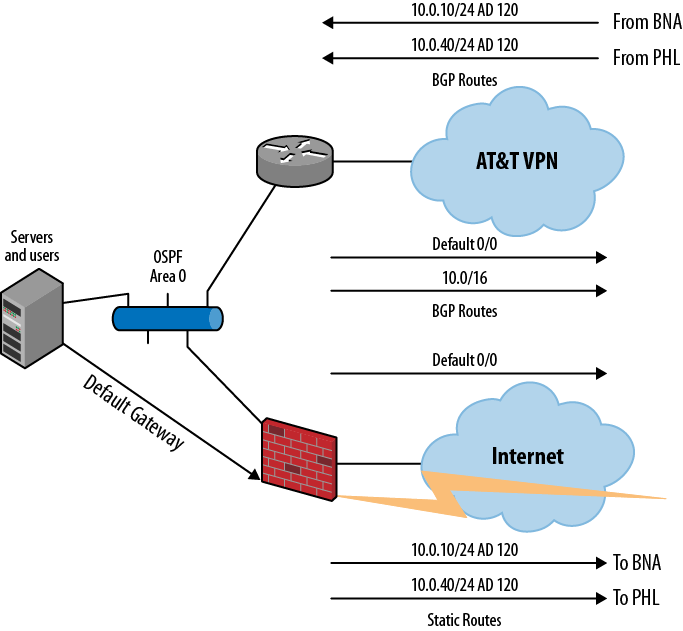

Due to the number of remote locations and the possibility of adding new locations, it was decided to use OSPF in the main location. This would allow the use of the IPSec tunnels as floating statics and the dynamic learning of the remote locations from the VPN CE router. While the main location firewall needed more changes than the remotes, the firewall was up to the task. This decision increased the survivability of the network and decreased the need for changes when more locations were added. It also put the complexity where the intelligence is located in the network (close to the CEO).

The existing static routes to the remote locations were modified to have a higher administrative distance (AD). Yes, they were Cisco devices; on Junos the equivalent change would have been to the route preference. OSPF was activated on the firewall and the static routes were redistributed into OSPF with a high metric. On the MX10, the same operation was performed, but this time the BGP routes from the AT&T VPN were redistributed into OSPF with a lower metric than the Cisco static routes. The arrangement required that each of the VPN tunnels’ addresses be a passive interface in OSPF (that way, the MX10 can use them); the same is true for the interface to the AT&T network. The last issue is that on the MX10, the default route preference of BGP (170) is numerically higher, and therefore less preferred, than that of the external OSPF routes (150) that carry the redistributed statics. In order for the router to choose the AT&T VPN for outgoing traffic, the OSPF external route preference must be raised above that of BGP.
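The preference change is easier to see with numbers. In Junos the route with the lowest preference value becomes active; 150 for OSPF external routes and 170 for BGP are the Junos defaults, while the function and variable names below are made up for this sketch.

```python
def active_protocol(preferences):
    """Return the protocol whose route would become active.

    `preferences` maps a protocol name to its route preference;
    the lowest preference value wins.
    """
    return min(preferences, key=preferences.get)


# Junos defaults: the redistributed statics (OSPF external) beat the AT&T VPN routes.
defaults = {"ospf-external": 150, "bgp": 170}

# With the OSPF external preference raised above 170, the BGP routes become active.
adjusted = {"ospf-external": 190, "bgp": 170}
```

Any value above 170 would do for the adjusted preference; 190 is simply the one that appears in the prototype configuration.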

The static route to the Internet is also redistributed to OSPF and offered to the AT&T VPN. This allows each of the remote locations to use this route as an alternative to the local Internet connectivity.

Note

The CEO recognized the critical nature of the local Internet access at the main location, but at this point, due to DNS and hosting issues, a true hot standby in Nashville was not possible. The development of this capability was left for another time and another engagement.

The routing arrangement at the main location is shown in Figure 1-3.

The issues for class of service center on how to assign the classes to the traffic types. The class definitions from the AT&T planning guide are:

- COS1

Is for real-time traffic (voice and video) that is given the highest priority in the network. It is guaranteed the lowest latency and the assigned bandwidth. If COS1 traffic does not run at the assigned levels, then other classes can use that bandwidth. The network discards traffic above the assigned bandwidth levels.

- COS2

Is for mission-critical data traffic. This traffic is given the next highest priority and guarantee. Traffic above the COS2 level is passed through the network at the noncompliant level.

- COS3

Is for office automation traffic. This traffic is offered a guarantee for delivery at the assigned rates. Traffic over these rates is passed as noncompliant traffic.

- COS4

This is for best-effort traffic. If class of service is not enabled, all traffic flows are handled as best-effort traffic.

Each of the traffic classes described by the CEO had to be mapped to these AT&T classes, and definitive descriptors had to be defined to create the filters to identify the traffic.

The multimedia traffic was a good match for the COS1 class. This traffic originated from a known set of servers at a known bandwidth (the codec for the MPEG4 format runs at 768 kbps). The CEO agreed that there would not be a need to have multiple streams running at a single time.

The CRM and inventory traffic had many sources but a very limited set of servers at the main (or backup) location. The applications used a number of ports and could burst traffic during backups and inventory reconciliation between warehouses. To meet the demands of the company, 100 kbps was stated as the expected flow rate for this traffic. This traffic was to be mapped to the COS2 AT&T traffic class.

Web traffic (http and https) has such a core responsibility in the company that it was given the COS3 level of priority. This traffic can be limited to 500 kbps for any link.

The COS4 traffic was the office automation traffic that remained in the network. This traffic class was the default traffic class for all additional traffic as well and would not be policed or shaped in any fashion. It also received a minimal bandwidth guarantee.
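Taken together, these four mappings behave like a first-match rule list. The sketch below models that logic only. The server addresses are hypothetical placeholders (the real ones are not given here), and treating web traffic as destination ports 80 and 443 is an assumption.

```python
from ipaddress import ip_address

# Hypothetical server addresses, for illustration only.
MULTIMEDIA_SERVERS = {ip_address("10.0.0.50")}
CRM_SERVERS = {ip_address("10.0.0.60"), ip_address("10.0.0.61")}
WEB_PORTS = {80, 443}  # http and https


def classify(src, dst, dst_port):
    """Map a packet to an AT&T class using the customer's four traffic types."""
    src, dst = ip_address(src), ip_address(dst)
    if src in MULTIMEDIA_SERVERS or dst in MULTIMEDIA_SERVERS:
        return "COS1"   # multimedia
    if src in CRM_SERVERS or dst in CRM_SERVERS:
        return "COS2"   # CRM and inventory control
    if dst_port in WEB_PORTS:
        return "COS3"   # web traffic (simplified: return traffic is not matched)
    return "COS4"       # office automation and everything else
```

The order of the tests matters: a web request to a CRM server lands in COS2 because the server match comes first.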

Note

You might have noticed that the traffic mapping is not as expected for this company—the priorities of the web traffic and office automation traffic are swapped. The Juniper Networks warrior needs to always listen to the customer, and change from the normal whenever needed. When working directly with a client, making assumptions can often have bad impacts on the company’s operation.

These levels are all well below the AT&T profile for the lowest-bandwidth interface (Jacksonville at 3 Mbps). Setting the router classes to match the customer’s specification assures that the network will not change the traffic priorities.
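As a quick sanity check on that claim, the sketch below works out what Profile #110 (Table 1-1) allots on a 3 Mbps link and compares it with the customer’s stated rates. The names are illustrative, and taking the Jacksonville access speed as exactly 3,000 kbps is an assumption.

```python
# Profile #110 shares from Table 1-1, in percent of the access bandwidth.
PROFILE_110 = {"COS1": 40, "COS2": 36, "COS3": 18, "COS4": 6}

# Rates the customer stated for each class, in kbps (COS4 is not policed).
CUSTOMER_KBPS = {"COS1": 768, "COS2": 100, "COS3": 500}


def allocation_kbps(link_kbps, profile):
    """Return the bandwidth each class is allotted on a link, in kbps."""
    return {cos: link_kbps * pct // 100 for cos, pct in profile.items()}


def headroom_kbps(link_kbps, profile, demand):
    """Return allotted minus stated rate for every class that has a stated rate."""
    alloc = allocation_kbps(link_kbps, profile)
    return {cos: alloc[cos] - rate for cos, rate in demand.items()}


if __name__ == "__main__":
    print(allocation_kbps(3000, PROFILE_110))
    print(headroom_kbps(3000, PROFILE_110, CUSTOMER_KBPS))
```

Under these assumptions every class fits inside its share, with the web class (COS3) the tightest of the three.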

Once the high-level design was created, reviewed, and approved by the CEO, the actual implementation of the new network was undertaken. The plan used a three-phase approach. In the first phase, a prototype network was created that verified the operation of the design. In the second phase, the equipment was installed onsite and interconnected. In the final phase, the new network was cut into the existing network.

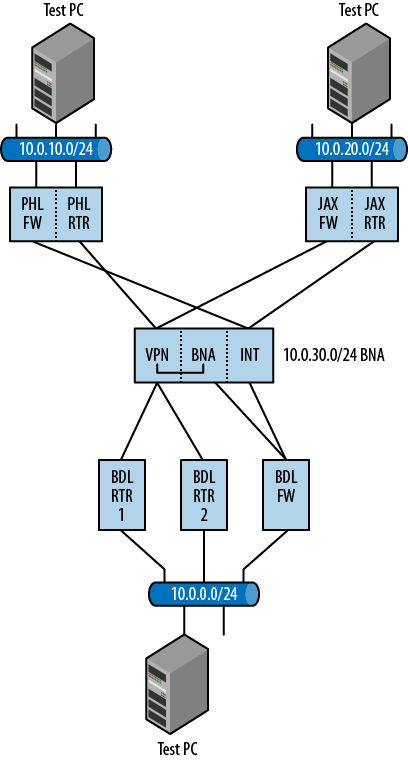

All the Juniper Networks equipment was delivered to the main location, unpacked, and powered up in a lab environment. With the use of routing instances, all the devices of the network were created and interconnected. The J-series routers are equipped with flow-based services and full stateful security services, so these were configured as the firewalls as well as the local routers (all in one box). The router for the backup servers (Nashville) was divided into the local router, the Internet, and the AT&T VPN. Finally, the main routers were configured as themselves. One of the remote locations was repurposed to act as the main location firewall.

This configuration was interconnected with Ethernet links rather than T1 and serial links, but other than that, all the other configuration aspects could be verified. The network diagram looked like that shown in Figure 1-4.

The configuration of the Juniper Networks routers for the firewalls with the IPSec tunnels was taking liberties when compared to the existing firewalls, but for the purposes of the prototype testing, it was acceptable. I did determine a few things that made me wonder, though:

The IPSec tunnels had to be configured from the default routing instance. They could be set up in a routing instance, but I could not get them to come up and pass traffic except in the default routing instance.

The four Ethernet ports on the J2320s were great for aggregating traffic to another device. A single port can act as both the VPN and the Internet ports. This saved cabling and trying to set up the T1 port adapter.

The BNA to VPN connection had to be created internally in the device, as I had used up all the external ports. A filter with a next-table entry and a static route worked just fine.

The relevant configuration of one of the remote locations was:

    [edit]
    lab@RIGHT_TEST# show
    interfaces {
        ge-0/0/0 {
            description "JAX-FW to LAN";
            unit 0 {
                family inet {
                    address 10.0.10.2/24;
                }
            }
        }
        ge-0/0/1 {
            vlan-tagging;
            unit 0 {
                description "JAX-RTR to VPN";
                vlan-id 100;
                family inet {
                    address 101.121.10.2/24;
                }
            }
            unit 1 {
                description "JAX-FW to Internet";
                vlan-id 101;
                family inet {
                    address 101.121.20.2/24;
                }
            }
        }
        ge-0/0/2 {
            description "JAX-RTR to LAN";
            unit 0 {
                family inet {
                    address 10.0.10.1/24;
                }
            }
        }
        st0 {
            description "Tunnel to BDL";
            unit 0 {
                family inet {
                    address 10.111.2.1/24;
                }
            }
        }
    }

While the interface addressing has been altered to preserve privacy, the descriptions of the interfaces give you an indication of their function in the test bed. The static routes used a qualified next hop for the secondary routes. These routes are the firewall routes that are shown in Figure 1-3:

    routing-options {
        static {
            route 10.0.0.0/16 {
                next-hop 10.0.10.1;
                qualified-next-hop 10.111.2.2 {
                    metric 100;
                }
            }
            route 0.0.0.0/0 {
                next-hop 101.121.20.1;
                qualified-next-hop 10.0.10.1 {
                    metric 100;
                }
            }
        }
    }

The security stanza was straightforward: a route-based IPSec tunnel with standard proposals connects to the main location. Because the J-series is either a router or a firewall for all interfaces, the router side has zones and security policies just like the firewall interfaces. For the purposes of the tests, all traffic was permitted and no NAT was performed. From a routing perspective, this does not alter the testing. The policies and the host-inbound services have been deleted from the configuration to save space:

    security {
        ike {
            policy ike {
                mode main;
                proposal-set standard;
                pre-shared-key ascii-text "$9$QriN3/t1RuO87-V4oz36/p0BIE";
            }
            gateway ike-JAX {
                ike-policy ike;
                address 101.121.30.2;
                external-interface ge-0/0/1.100;
            }
        }
        ipsec {
            policy vpn {
                proposal-set standard;
            }
            vpn JAX {
                bind-interface st0.0;
                ike {
                    gateway ike-JAX;
                    ipsec-policy vpn;
                }
                establish-tunnels immediately;
            }
        }
        policies {
            ...
        }
        zones {
            security-zone Internet {
                interfaces {
                    ge-0/0/1.1;
                }
            }
            security-zone VPN {
                interfaces {
                    ge-0/0/1.0;
                }
            }
            security-zone JAX-RTR {
                interfaces {
                    ge-0/0/2.0;
                }
            }
            security-zone JAX-FW {
                interfaces {
                    ge-0/0/0.0;
                    st0.0;
                }
            }
        }
    }

The routing instance stanza was the actual configuration that would be resident on the router when it was installed at the remote location. The routing options show the static routes between the two devices. In the event of an issue with one route, the qualified next hop would take over:

    routing-instances {
        JAX-RTR {
            instance-type virtual-router;
            interface ge-0/0/1.0;
            interface ge-0/0/2.0;
            routing-options {
                static {
                    route 10.0.0.0/16 {
                        next-hop 101.121.10.1;
                        qualified-next-hop 10.0.10.2 {
                            metric 100;
                        }
                    }
                    route 0.0.0.0/0 {
                        next-hop 10.0.10.2;
                        qualified-next-hop 101.121.10.1 {
                            metric 100;
                        }
                    }
                }
            }
        }
    }

The BDL main routers were set up identically to one another. Each was to be active for the routes that were received from the VPN and OSPF. The selection was to be based on a random choice between these two devices. If one of them failed, the other would take over the full load. The configuration for these devices is shown below. The interfaces have descriptions so that the reader can follow along on the prototype diagrams:

    interfaces {
        ge-0/0/0 {
            description "To the VPN";
            unit 0 {
                family inet {
                    address 101.121.30.2/24;
                }
            }
        }
        ge-0/0/1 {
            description "To the BDL LAN";
            unit 0 {
                family inet {
                    address 10.0.0.2/24;
                }
            }
        }
    }

Both OSPF and BGP were operational on these devices. The BGP protocol allowed remote sites to be learned by the main locations. If a new site was added to the network, only that site needed to be updated, and all the other sites would not need to be touched. BGP has an import policy and an export policy. The export policy allows the VPN to learn about the local addresses, and the import policy is just a safeguard, ensuring that if things go south with AT&T the local router is not going to be making bad decisions. OSPF has an export policy as well. This allows the BGP routes learned from the remote locations to be seen by all the devices at the main location. In all the policies, the internal addressing (10/8) is allowed, as well as the default route (0/0). The last piece is the external route preference that has been assigned to OSPF. This allows the BGP routes to be used as the primary and the IPSec tunnels to the remote locations to be used as a backup (learned via OSPF redistributed from the firewall):

    protocols {
        bgp {
            group VPN {
                type external;
                import Safe-BGP;
                export OSPF-to-VPN;
                peer-as 7018;
                local-as 65432;
                neighbor 101.121.30.1;
            }
        }
        ospf {
            external-preference 190;
            export BGP-to-OSPF;
            area 0.0.0.0 {
                interface ge-0/0/0.0 {
                    passive;
                }
                interface ge-0/0/1.0;
            }
        }
    }
    policy-options {
        policy-statement BGP-to-OSPF {
            term BGP {
                from {
                    protocol bgp;
                    route-filter 10.0.0.0/8 orlonger;
                    route-filter 0.0.0.0/0 exact;
                }
                then accept;
            }
            term other {
                then reject;
            }
        }
        policy-statement OSPF-to-VPN {
            term OSPF {
                from {
                    protocol ospf;
                    route-filter 0.0.0.0/0 exact;
                    route-filter 10.0.0.0/8 upto /24;
                }
                then accept;
            }
            term other {
                then reject;
            }
        }
        policy-statement Safe-BGP {
            term Valid-routes {
                from {
                    route-filter 10.0.0.0/8 orlonger;
                }
                then accept;
            }
            term default {
                from {
                    route-filter 0.0.0.0/0 exact;
                }
                then accept;
            }
            term Other {
                then reject;
            }
        }
    }

With the prototype up and running, here’s what the route table at the main location showed:

    lab@BDL-R1> show route
    inet.0: 10 destinations, 11 routes (9 active, 0 holddown, 1 hidden)
    + = Active Route, - = Last Active, * = Both
    0.0.0.0/0          *[OSPF/150] 00:22:04, metric 0, tag 0
                        > to 10.0.0.1 via ge-0/0/1.0
                        [BGP/170] 00:37:47, localpref 100
                          AS path: 7018 I

The default route is learned from the local firewall (OSPF) and from the remote location (BGP). This allows the users to access the Internet in the event of a failure of the local ISP:

    10.0.0.0/24        *[Direct/0] 01:04:07
                        > via ge-0/0/1.0
    10.0.0.2/32        *[Local/0] 01:14:12
                          Local via ge-0/0/1.0
    10.0.10.0/24       *[BGP/170] 00:50:51, localpref 100
                          AS path: 7018 I
                        > to 101.121.30.1 via ge-0/0/0.0
                        [OSPF/190] 00:04:13, metric 0, tag 0
                        > to 10.0.0.1 via ge-0/0/1.0
    10.0.20.0/24       *[BGP/170] 00:50:51, localpref 100
                          AS path: 7018 I
                        > to 101.121.30.1 via ge-0/0/0.0
                        [OSPF/190] 00:04:13, metric 0, tag 0
                        > to 10.0.0.1 via ge-0/0/1.0
    10.111.2.0/24      *[OSPF/190] 00:04:13, metric 0, tag 0
                        > to 10.0.0.1 via ge-0/0/1.0

The internal network addresses (10.0/16) were seen here as local addresses and learned addresses from the VPN (BGP) as well as the firewall (OSPF). The route preference of these routes was modified so that the BGP routes were preferred. The last local route was the local end of the IPSec tunnel. If the tunnels were ever to use a routing protocol instead of static routing, this address would be necessary to resolve the addresses that were learned over the tunnel. For our purposes, this address was really not necessary.

A careful eye will have noticed a hidden route in the display above: I added a bogus route to the routes advertised from the VPN to the main location. This verified that the import policies were working. The 12.0.0.0/24 route was hidden when received from the VPN:

    lab@BDL-R1> show route hidden
    inet.0: 10 destinations, 11 routes (9 active, 0 holddown, 1 hidden)
    + = Active Route, - = Last Active, * = Both
    12.0.0.0/24         [BGP ] 00:38:19, localpref 100
                          AS path: 7018 I
                        > to 101.121.30.1 via ge-0/0/0.0

Once the routing mechanisms had been defined and the primary/secondary arrangements had been worked out, it was time to look at the class of service configurations. Because the same traffic was seen on all the interfaces at all the locations, all the configurations were the same. The interface names changed, but the remaining parts of the configurations were exactly the same.
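Before moving on, the import policy test can be modeled in a few lines. This is a sketch of the route-filter match types used in the policies above (`exact`, `orlonger`, and `upto`), written with Python’s `ipaddress` module. The helper names are illustrative, and the model ignores everything else a Junos policy can match on.

```python
from ipaddress import ip_network


def matches(route, prefix, match_type, upto=None):
    """Mimic a route-filter match for the exact, orlonger, and upto types."""
    route, prefix = ip_network(route), ip_network(prefix)
    if match_type == "exact":
        return route == prefix
    if not route.subnet_of(prefix):
        return False              # route lies outside the filter prefix
    if match_type == "orlonger":
        return True
    if match_type == "upto":
        return route.prefixlen <= upto
    raise ValueError(f"unsupported match type: {match_type}")


def safe_bgp(route):
    """Model the Safe-BGP import policy: 10/8 and the default route, nothing else."""
    if matches(route, "10.0.0.0/8", "orlonger"):
        return "accept"
    if matches(route, "0.0.0.0/0", "exact"):
        return "accept"
    return "reject"   # a route rejected on import shows up as hidden
```

Feeding the bogus 12.0.0.0/24 prefix to `safe_bgp` falls through to the final reject, which corresponds to the hidden route in the output.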

Class of service configuration on Junos is not as simple as other elements, like routing protocols and interfaces—some even consider it to be a bear to configure. There are multiple components that have to be configured, and they all have to agree with one another to ensure that traffic is handled in a consistent manner throughout the network. During the initial talks with the CEO, it was observed that the existing firewalls would not meet the demands of the class of service requirements. It was further determined that these devices would only be used as backups for corporate traffic, so this shortfall was OK.

Note

Whenever I have the chance, I do push the One Junos concept. The same features on the MX can be ported to the SRXs. In this case, the CEO acknowledged the effort but stated that the firewalls that were in place would remain there. Hey, I tried!

Class of service is implemented as follows in the Junos environment:

Incoming traffic is policed and categorized into forwarding classes.

Internal traffic and egress traffic are handled based on the forwarding classes.

Egress traffic is shaped and marked based on the forwarding class.

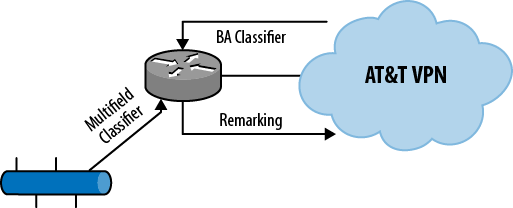

A filter on the ingress interface performs the categorization of incoming traffic. The filter is either a multifield classifier (input firewall filter) or a behavior aggregate (BA) classifier. Compliance with bandwidth limits is enforced with the multifield classifier. The difference between the two classification schemes is that the multifield classifier can perform its classification based on any “filterable” field in the packet, while the BA classifier only looks at the class of service marks on the packet.

AT&T’s classes of service are distinguished by the use of the differentiated services code points (DSCPs), or the IP precedence. These fields are part of the packets’ IP headers. AT&T prefers to use the DSCP marking, but will use the IP precedence if the customer’s equipment does not handle the DSCP marking. The classification that AT&T uses (from the class of service planning document) is shown in Table 1-2.

Table 1-2. AT&T class of service coding

| TOS (first 6 bits) | Standard per hop behavior | AT&T class |
|---|---|---|
| 101 110 | DSCP Expedited Forwarding (EF) | COS1 |
| 101 000 | IP Precedence 5 | COS1 |
| 011 010 | DSCP Assured Forwarding 31 (AF31) | COS2 compliant |
| 011 100 | DSCP Assured Forwarding 32 (AF32) | COS2 noncompliant |
| 011 000 | IP Precedence 3 | COS2 compliant |
| 010 010 | DSCP Assured Forwarding 21 (AF21) | COS3 compliant |
| 010 100 | DSCP Assured Forwarding 22 (AF22) | COS3 noncompliant |
| 010 000 | IP Precedence 2 | COS3 compliant |
| 000 000 | DSCP Best Effort (DEFAULT) | COS4 |
| 011 xxx | DSCP Assured Forwarding 3x (AF3x) | COS2 noncompliant |
| 110 xxx | Reserved for control and signaling | Highest class |
| 111 xxx | Reserved for control and signaling | Highest class |
| 010 xxx | DSCP Assured Forwarding 2x (AF2x) | COS3 noncompliant |
| 101 xxx | | COS4 |
| 001 xxx | DSCP Assured Forwarding 1x (AF1x) | COS4 |
| 100 xxx | DSCP Assured Forwarding 4x (AF4x) | COS4 |
| 000 xxx | | COS4 |

For the traffic to be handled by the appropriate class of service in the VPN network, it has to be marked appropriately in the customer’s network. Junos supports a default set of code points for both DSCP and IP precedence. Matching the AT&T classes of Table 1-2 to the output below shows that the two systems are in sync with each other:

    lab@BDL-R1> show class-of-service code-point-aliases inet-precedence
    Code point type: inet-precedence
      Alias              Bit pattern
      af11               001
      af21               010
      af31               011
      af41               100
      be                 000
      cs6                110
      cs7                111
      ef                 101
      nc1                110
      nc2                111

    lab@BDL-R1> show class-of-service code-point-aliases dscp
    Code point type: dscp
      Alias              Bit pattern
      af11               001010
      af12               001100
      af13               001110
      af21               010010
      af22               010100
      af23               010110
      af31               011010
      af32               011100
      af33               011110
      af41               100010
      af42               100100
      af43               100110
      be                 000000
      cs1                001000
      cs2                010000
      cs3                011000
      cs4                100000
      cs5                101000
      cs6                110000
      cs7                111000
      ef                 101110
      nc1                110000
      nc2                111000
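The "in sync" claim can be checked mechanically. The following is a minimal Python sketch, not anything that ran on the routers: it copies a handful of DSCP aliases from the output above and the exact-match rows of Table 1-2, then looks up the AT&T class for each alias. The dictionary and function names are hypothetical, chosen only for this illustration.

```python
# DSCP aliases copied from "show class-of-service code-point-aliases dscp".
JUNOS_DSCP_ALIASES = {
    "ef": "101110", "cs5": "101000",
    "af31": "011010", "af32": "011100", "cs3": "011000",
    "af21": "010010", "af22": "010100", "cs2": "010000",
    "be": "000000",
}

# Exact-match rows of Table 1-2 (spaces removed): bit pattern -> AT&T class.
ATT_CLASS_BY_BITS = {
    "101110": "COS1",
    "101000": "COS1",
    "011010": "COS2 compliant",
    "011100": "COS2 noncompliant",
    "011000": "COS2 compliant",
    "010010": "COS3 compliant",
    "010100": "COS3 noncompliant",
    "010000": "COS3 compliant",
    "000000": "COS4",
}


def att_class(alias: str) -> str:
    """Return the AT&T class for a Junos DSCP alias (hypothetical helper)."""
    return ATT_CLASS_BY_BITS[JUNOS_DSCP_ALIASES[alias]]


for alias in JUNOS_DSCP_ALIASES:
    print(f"{alias:5} {JUNOS_DSCP_ALIASES[alias]}  {att_class(alias)}")
```

The IP precedence rows of the table line up with the Junos class selector aliases (cs5, cs3, cs2) because a precedence value occupies the first three bits of the same field.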

The only piece that seems to be out of sync is the compliant and noncompliant classes. In effect, AT&T handles six classes of service, in the following order:

COS1

COS2 compliant

COS2 noncompliant

COS3 compliant

COS3 noncompliant

COS4

The noncompliant traffic may be discarded at the egress of the VPN if there is not enough bandwidth available for that service class. The compliant and noncompliant markings can be assigned prior to traffic entering the network, or by the network itself based on the profile chosen by the customer.

In this case, the marking is done on the customer’s edge for each class (see Figure 1-5). This configuration allows the router to shape the traffic as it enters the network. The classification of traffic is done at two points on the Juniper routers: traffic from the local LANs is classified by a multifield classifier and traffic from the VPN is classified by a BA classifier. Traffic shaping and remarking are done only on the interface to the VPN. The remarking is only necessary on the VPN interface because the other portions of the network do not participate in the class of service operations.

The first order of business was the creation of the multifield classifier. This firewall filter accepted the incoming traffic and marked each packet into a forwarding class. The filter was installed on the LAN-facing interfaces for incoming traffic:

ge-0/0/1 {

description "To the BDL LAN";

unit 0 {

family inet {

filter {

input CLASS-OF-SVC;

}

address 10.0.0.2/24;

}

}

}

The filter had four terms to divide traffic into the four forwarding classes. The calculation of the maximum transmission rate for each class was handled previously (see the section Design Trade-Offs), but the calculation of the maximum burst size was a point of discussion here: select too small a maximum burst size and the traffic would be restricted; select too high a value and no traffic would be policed. The recommended value is the amount of traffic (in bytes) that can be transmitted on an interface in 5 milliseconds, while the minimum value is 10 times the maximum transmission unit (MTU) of the interface. Considering that all the incoming interfaces in this environment were Fast Ethernet (100 Mbps), the recommended value can be calculated to be 62,500 bytes and the minimum value would be 15,000 bytes. The only traffic type that might have a problem with these values is the COS1 video traffic. To avoid any loss of multimedia traffic, the recommended value was doubled to 125,000 bytes.
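The burst-size arithmetic is easy to get wrong by a factor of eight, so here it is worked through as a short Python sketch. The link rate and the 5 ms window come from the text; the 1,500-byte MTU is an assumption implied by the 15,000-byte minimum, and the function names are hypothetical.

```python
LINK_RATE_BPS = 100_000_000  # Fast Ethernet, 100 Mbps (from the text)
BURST_WINDOW_MS = 5          # recommended burst window (from the text)
MTU_BYTES = 1500             # assumed; implied by the 15,000-byte minimum


def recommended_burst_bytes(rate_bps: int, window_ms: int = BURST_WINDOW_MS) -> int:
    """Bytes the interface can transmit during the burst window."""
    bits_in_window = rate_bps * window_ms // 1000
    return bits_in_window // 8


def minimum_burst_bytes(mtu_bytes: int) -> int:
    """Lower bound on the burst size: ten times the interface MTU."""
    return 10 * mtu_bytes


recommended = recommended_burst_bytes(LINK_RATE_BPS)
minimum = minimum_burst_bytes(MTU_BYTES)
cos1_burst = 2 * recommended  # doubled to protect the COS1 video traffic

print(recommended, minimum, cos1_burst)
```

Integer arithmetic is used throughout so the results are exact byte counts rather than rounded floating-point values.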

The first term of the filter looked for traffic destined for or originating from the multimedia servers (10.0.10.10 and 10.0.10.11). This might catch some non-video traffic, but would have a minimal impact on the network. This term had three parts. The prefix list identified the servers (we used the list rather than hardcoding the addresses in the filter to make things easier to modify later), the policer limited the traffic (all excess COS1 traffic is discarded), and the filter term put all the pieces together:

    lab@BDL-R1# show policy-options
    prefix-list MM-SVR {
        10.0.10.10/32;
        10.0.10.11/32;
    }

    [edit]
    lab@BDL-R1# show firewall
    family inet {
        filter CLASS-OF-SVC {
            term COS1 {
                from {
                    prefix-list {
                        MM-SVR;
                    }
                }
                then {
                    policer COS1;
                    forwarding-class COS1;
                    accept;
                }
            }
        }
    }
    policer COS1 {
        if-exceeding {
            bandwidth-limit 768k;
            burst-size-limit 125k;
        }
        then {
            discard;
        }
    }

The other terms were very similar to the first. For each, a prefix list and a policer complemented the term for traffic identification and handling. The last term was a catchall for any other traffic:

    [edit]
    lab@BDL-R1# show firewall
    family inet {
        filter CLASS-OF-SVC {
            term COS1 {
                from {
                    prefix-list {
                        MM-SVR;
                    }
                }
                then {
                    policer COS1;
                    forwarding-class COS1;
                    accept;
                }
            }
            term COS2 {
                from {
                    prefix-list {
                        CRM-SRV;
                    }
                }
                then {
                    policer COS2;
                    forwarding-class COS2;
                    accept;
                }
            }
            term COS3 {
                from {
                    protocol tcp;
                    port [ http https ];
                }
                then {
                    policer COS3;
                    forwarding-class COS3;
                    accept;
                }
            }
            term COS4 {
                then {
                    forwarding-class COS4;
                    accept;
                }
            }
        }
    }
    policer COS1 {
        if-exceeding {
            bandwidth-limit 768k;
            burst-size-limit 125k;
        }
        then discard;
    }
    policer COS2 {
        if-exceeding {
            bandwidth-limit 100k;
            burst-size-limit 15k;
        }
        then loss-priority high;
    }
    policer COS3 {
        if-exceeding {
            bandwidth-limit 500k;
            burst-size-limit 30k;
        }
        then loss-priority high;
    }

    [edit]
    lab@BDL-R1# show policy-options
    prefix-list MM-SVR {
        10.0.10.10/32;
        10.0.10.11/32;
    }
    prefix-list CRM-SRV {
        10.0.10.100/32;
        10.0.10.101/32;
        10.0.10.102/32;
        10.0.10.103/32;
        10.0.10.104/32;
    }

The only piece of this configuration that might make the reader wonder is the setting of the loss priority to high for traffic exceeding the limits. I chose this path rather than the creation of another forwarding class for nonconforming traffic. COS2 traffic with a loss priority of low is the COS2 conforming traffic, while COS2 traffic with a loss priority of high is the COS2 nonconforming traffic. This change in terminology is reflected in the BA classifiers and the rewrite rules that are presented in the following paragraphs.

The multifield classifier was copied to all the other router configurations and applied to the corporate LAN interface.
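To make the first-match behavior of the filter concrete, here is a rough Python model of the four terms. It is a sketch for illustration only: it ignores the policers, it relies on my understanding that the `prefix-list` and `port` match conditions apply to either the source or the destination, and every function and constant name in it is hypothetical.

```python
from ipaddress import ip_address, ip_network

# Prefix lists copied from the policy-options configuration.
MM_SVR = [ip_network("10.0.10.10/32"), ip_network("10.0.10.11/32")]
CRM_SRV = [ip_network(f"10.0.10.{host}/32") for host in range(100, 105)]

TCP = 6                 # IP protocol number for TCP
WEB_PORTS = {80, 443}   # http and https


def in_prefix_list(prefixes, *addresses):
    """True if any of the given addresses falls inside any listed prefix."""
    return any(ip_address(addr) in net for addr in addresses for net in prefixes)


def classify(src, dst, protocol, src_port=None, dst_port=None):
    """Return the forwarding class chosen by the first matching term."""
    if in_prefix_list(MM_SVR, src, dst):                          # term COS1
        return "COS1"
    if in_prefix_list(CRM_SRV, src, dst):                         # term COS2
        return "COS2"
    if protocol == TCP and {src_port, dst_port} & WEB_PORTS:      # term COS3
        return "COS3"
    return "COS4"                                                 # catchall


print(classify("10.0.0.50", "10.0.10.11", 17, 5004, 5004))
print(classify("10.0.0.50", "192.0.2.1", TCP, 51000, 443))
```

Because the terms are evaluated in order, web traffic to a multimedia server lands in COS1, not COS3, which matches the "might catch some non-video traffic" caveat above.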

The behavior aggregate classifiers were the next piece of the class of service configuration to be created. These looked at incoming traffic and placed the packets marked with the proper code points into the proper forwarding classes. The BA classifiers were assigned to interfaces facing the VPN. The base configuration for the BA classifiers was:

[edit class-of-service]

lab@BDL-R1# show

classifiers {

dscp ATT {

forwarding-class COS1 {

loss-priority low code-points [ ef cs5 ];

}

forwarding-class COS2 {

loss-priority low code-points [ af31 cs3 ];

loss-priority high code-points [ af32 af33 ];

}

forwarding-class COS3 {

loss-priority low code-points [ af21 cs2 ];

loss-priority high code-points [ af22 af23 ];

}

forwarding-class COS4 {

loss-priority low code-points [ be af11 af12 af13 af41

af42 af43 ];

}

forwarding-class network-control {

loss-priority low code-points [ nc1 nc2 ];

}

}

}

interfaces {

ge-0/0/0 {

unit 0 {

classifiers {

dscp ATT;

}

}

}

}

The information for this configuration was gleaned from Table 1-2 and the Junos default code point aliases. The rewrite rules for the routers were the opposite of the classifier rules, minus the duplicates. The configuration for the rewrite rules was:

    [edit class-of-service]
    lab@BDL-R1# show
    classifiers {
    ...
    interfaces {
        ge-0/0/0 {
            unit 0 {
                classifiers {
                    dscp ATT;
                }
                rewrite-rules {
                    dscp ATT;
                }
            }
        }
    }
    rewrite-rules {
        dscp ATT {
            forwarding-class COS1 {
                loss-priority low code-point ef;
            }
            forwarding-class COS2 {
                loss-priority low code-point af31;
                loss-priority high code-point af32;
            }
            forwarding-class COS3 {
                loss-priority low code-point af21;
                loss-priority high code-point af22;
            }
            forwarding-class COS4 {
                loss-priority low code-point be;
            }
            forwarding-class network-control {
                loss-priority low code-point nc2;
            }
        }
    }

In most cases, the rewrite rules and the classifier rules should match. I included the IP precedence code points (cs1–cs7) in the classifier just in case these arrive from the network. They should not, but I was making sure all bases were covered. The rewrite rules only show what is leaving the router to the VPN (only the use of DSCP code points for outgoing traffic).
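One way to sanity-check that the rewrite rules really are "the opposite of the classifier rules, minus the duplicates" is a round-trip test: every code point written on egress should classify back into the same forwarding class and loss priority on ingress. The Python sketch below does that with the values copied from the two configurations; the dictionary names are hypothetical.

```python
# BA classifier "ATT": code-point alias -> (forwarding class, loss priority).
CLASSIFIER = {
    "ef": ("COS1", "low"), "cs5": ("COS1", "low"),
    "af31": ("COS2", "low"), "cs3": ("COS2", "low"),
    "af32": ("COS2", "high"), "af33": ("COS2", "high"),
    "af21": ("COS3", "low"), "cs2": ("COS3", "low"),
    "af22": ("COS3", "high"), "af23": ("COS3", "high"),
    "be": ("COS4", "low"),
    "af11": ("COS4", "low"), "af12": ("COS4", "low"), "af13": ("COS4", "low"),
    "af41": ("COS4", "low"), "af42": ("COS4", "low"), "af43": ("COS4", "low"),
    "nc1": ("network-control", "low"), "nc2": ("network-control", "low"),
}

# Rewrite rule "ATT": (forwarding class, loss priority) -> code-point alias.
REWRITE = {
    ("COS1", "low"): "ef",
    ("COS2", "low"): "af31",
    ("COS2", "high"): "af32",
    ("COS3", "low"): "af21",
    ("COS3", "high"): "af22",
    ("COS4", "low"): "be",
    ("network-control", "low"): "nc2",
}

# Round trip: what one router marks, the next router must classify identically.
mismatches = [
    (key, alias) for key, alias in REWRITE.items() if CLASSIFIER[alias] != key
]
print("mismatches:", mismatches)
```

An empty mismatch list means traffic keeps its class and its conforming or nonconforming status as it crosses the VPN between two routers running this configuration.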

The next piece of the configuration was to define the actual forwarding classes that have been referenced in each of the other configured portions. The forwarding classes are the internal reference points for traffic handling in the router. Traffic is assigned to forwarding classes based on some criteria. There is no specific coding (in the packet header) that identifies the forwarding class except for the mapping defined in the classifiers (multifield and BA). The configuration for the forwarding classes associated one of the queues to each of the forwarding class names. In this case, the names and the queues were an ordered set. The configuration was:

[edit class-of-service]

lab@BDL-R1# show

classifiers {

...

forwarding-classes {

queue 0 network-control;

queue 1 COS1;

queue 2 COS2;

queue 3 COS3;

queue 4 COS4;

}

A fifth class of service was added to the mix for network control traffic (a.k.a. routing traffic); BGP had to have a place in the class of service scheme. The network control traffic was assigned its own DSCP codes (nc1 and nc2), as was seen in the rewrite rules and the BA classifier.

Up to now, everything seemed to fit together in a logical sense. The next configuration steps, however, were where the abstract concepts were added to the CoS configuration. The first concept was the schedulers. These associate a forwarding class (and its queue) to a priority for outgoing queuing; they can also identify drop profiles for weighted random early detection (WRED) traffic. In our case, the default drop profiles (linear) were adequate.

For each of the schedulers, a transmit rate and a priority were defined. The rates can be defined as exact (cannot exceed) or as allowing additional traffic to use other idle rates. In our case, the use of idle rates was allowed. The configuration for the schedulers was:

[edit class-of-service schedulers]

lab@BDL-R1# show

COS1 {

transmit-rate 768k;

buffer-size temporal 200k;

priority high;

}

COS2 {

transmit-rate 100k;

priority medium-high;

}

COS3 {

transmit-rate 500k;

priority medium-low;

}

COS4 {

priority low;

}

Network-Control {

transmit-rate percent 10;

priority high;

}

When we created the schedulers, we named them the same as the forwarding classes and the filters. This approach can be confusing, but it assures that all the elements are the same for all the classes. The schedulers are the inverse of the multifield classifiers, in the sense that traffic entering an interface is subjected to the classifier, while the traffic exiting the interfaces is subjected to the scheduler. The rates for both are often the same; if high-speed interfaces are mixed with low-speed interfaces the classifier and the scheduler might have different values (so as not to overrun the slower interface), but due to the traffic patterns in this engagement, that was not the case here.

Once the schedulers were defined, they had to be mapped to the forwarding classes with a scheduler map. The scheduler map brings all the elements together and is referenced on the interfaces that need egress queuing (all interfaces in our environment, LAN and VPN). It was configured as follows:

    [edit class-of-service]
    lab@BDL-R1# show
    classifiers {
    ...
    forwarding-classes {
    ...
    interfaces {
        ge-0/0/0 {
            scheduler-map ATT;
            unit 0 {
                classifiers {
                    dscp ATT;
                }
                rewrite-rules {
                    dscp ATT;
                }
            }
        }
        ge-0/0/1 {
            scheduler-map ATT;
        }
    }
    rewrite-rules {
    ...
    scheduler-maps {
        ATT {
            forwarding-class COS1 scheduler COS1;
            forwarding-class COS2 scheduler COS2;
            forwarding-class COS3 scheduler COS3;
            forwarding-class COS4 scheduler COS4;
            forwarding-class network-control scheduler Network-Control;
        }
    }

Once this configuration was checked and committed on one router, it was copied to all the routers in the network. The interface names were adjusted to match the locations, but all other aspects were copied wholesale. The class of service was verified on the assigned interfaces with the operational command:

    lab@BDL-R1> show interfaces ge-0/0/0 extensive
    Physical interface: ge-0/0/0, Enabled, Physical link is Up
    ...
      Traffic statistics:
    ...
      Egress queues: 8 supported, 5 in use
      Queue counters:       Queued packets  Transmitted pkts      Dropped pkts
        0 network-cont                1169              1169                 0
        1 COS1                           0                 0                 0
        2 COS2                           0                 0                 0
        3 COS3                           0                 0                 0
        4 COS4                           0                 0                 0
      Queue number:         Mapped forwarding classes
        0                   network-control
        1                   COS1
        2                   COS2
        3                   COS3
        4                   COS4
      Active alarms  : None
      Active defects : None
      MAC statistics:
    ...
      CoS information:
        Direction : Output
        CoS transmit queue            Bandwidth            Buffer     Priority     Limit
                                  %          bps     %       usec
        0 network-control        10     10000000     r          0         high      none
        1 COS1                    0       768000     0     200000         high      none
        2 COS2                    0       100000     r          0  medium-high      none
        3 COS3                    0       500000     r          0   medium-low      none
        4 COS4                    r            r     r          0          low      none
      Interface transmit statistics: Disabled

      Logical interface ge-0/0/0.0 (Index 68) (Generation 133)

The output here is truncated to save space, with the remaining portions showing the class of service information. The queues are shown first, with the stats for each queue, the number of dropped packets, and the relative queue names. The CoS information shows the scheduler information for each class (transmit queue). Note that the network control class has the highest bandwidth associated with it. This is the 10% that was assigned to the background traffic. As network bandwidth grows, this number can be reduced to smaller percentages (the default is 5% or 5 Mbps on a Fast Ethernet interface).
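The bandwidth column can be cross-checked with a little arithmetic. The Python sketch below uses only numbers from the output above. It assumes that the percentage is taken against the interface rate, so the rate can be inferred from the 10% and 10,000,000 bps pair, and it treats the `r` (remainder) shown for COS4 as simply whatever the other schedulers leave over, which is a simplification of how the scheduler behaves.

```python
# Values read from the "CoS information" section of the output.
NETWORK_CONTROL_PERCENT = 10
NETWORK_CONTROL_BPS = 10_000_000

# Assumption: percent is relative to the interface rate, so infer that rate.
interface_bps = NETWORK_CONTROL_BPS * 100 // NETWORK_CONTROL_PERCENT

# Transmit rates of the schedulers that have one configured.
committed_bps = {
    "network-control": NETWORK_CONTROL_BPS,
    "COS1": 768_000,
    "COS2": 100_000,
    "COS3": 500_000,
}

# Simplification: COS4 ("r") gets what the other classes do not claim.
remainder_bps = interface_bps - sum(committed_bps.values())

# The default network-control share quoted in the text, for comparison.
default_network_control_bps = interface_bps * 5 // 100

print(interface_bps, remainder_bps, default_network_control_bps)
```

The inferred rate works out to 100 Mbps, which agrees with the 5% or 5 Mbps default quoted for a Fast Ethernet interface.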

The class of service attributes showed each of the configured items. This display can be used to verify the configurations. The specific AT&T information was shown with the following commands:

    lab@BDL-R1> show class-of-service classifier name ATT
    Classifier: ATT, Code point type: dscp, Index: 7594
      Code point         Forwarding class                    Loss priority
      000000             COS4                                low
      001010             COS4                                low
      001100             COS4                                low
      001110             COS4                                low
      010000             COS3                                low
      010010             COS3                                low
      010100             COS3                                high
      010110             COS3                                high
      011000             COS2                                low
      011010             COS2                                low
      011100             COS2                                high
      011110             COS2                                high
      100010             COS4                                low
      100100             COS4                                low
      100110             COS4                                low
      101000             COS1                                low
      101110             COS1                                low
      110000             network-control                     low
      111000             network-control                     low

    lab@BDL-R1> show class-of-service rewrite-rule name ATT
    Rewrite rule: ATT, Code point type: dscp, Index: 7594
      Forwarding class                    Loss priority       Code point
      network-control                     low                 111000
      COS1                                low                 101110
      COS2                                low                 011010
      COS2                                high                011100
      COS3                                low                 010010
      COS3                                high                010100
      COS4                                low                 000000

    lab@BDL-R1> show class-of-service scheduler-map ATT
    Scheduler map: ATT, Index: 3797

      Scheduler: Network-Control, Forwarding class: network-control, Index: 40528
        Transmit rate: 10 percent, Rate Limit: none, Buffer size: remainder,
        Buffer Limit: none, Priority: high
        Excess Priority: unspecified
        Drop profiles:
        ...

      Scheduler: COS1, Forwarding class: COS1, Index: 46705
        Transmit rate: 768000 bps, Rate Limit: none, Buffer size: 200000 us,
        Buffer Limit: none, Priority: high
        Excess Priority: unspecified
        Drop profiles:
        ...

      Scheduler: COS2, Forwarding class: COS2, Index: 46706
        Transmit rate: 100000 bps, Rate Limit: none, Buffer size: remainder,
        Buffer Limit: none, Priority: medium-high
        Excess Priority: unspecified
        Drop profiles:
        ...

      Scheduler: COS3, Forwarding class: COS3, Index: 46707
        Transmit rate: 500000 bps, Rate Limit: none, Buffer size: remainder,
        Buffer Limit: none, Priority: medium-low
        Excess Priority: unspecified
        Drop profiles:
        ...

      Scheduler: COS4, Forwarding class: COS4, Index: 46708
        Transmit rate: unspecified, Rate Limit: none, Buffer size: remainder,
        Buffer Limit: none, Priority: low
        Excess Priority: unspecified
        Drop profiles:
        ...

The drop profiles have been deleted from each of the schedulers to save space.

Once the class of service was up and running, a series of traffic tests were conducted to verify that the traffic was mapped to the proper classes. The policing and shaping were not verified, due to the limits of the test lab. Once the CEO was satisfied that things were going to work as expected, the system implementation was planned, the equipment was staged and tested, and the system was cut over to the new network.

Once I completed the prototype testing, the configurations were scrubbed and each device was set up with its final configuration. A final test was performed to verify that each device could communicate with the LAN Ethernet port for management access. I double-checked all the addresses, subnet masks, and default routes.

The reason for all the caution was that local personnel were installing the remote locations, but all troubleshooting was going to be performed from the main location. We decided that the cut-over was going to be done over a period of evenings and that each remote location was going to be cut over in a separate maintenance window. This reduced the coordination effort for the remote personnel and also the overall stress level of the installation.

As a note, this also allowed us to learn from the initial cut-over. On the first evening, we had some issues with the firewall rules and patching that I had not anticipated, and dealing with them made the other evenings go a lot easier.

Note

Another of the lessons learned for this book: “What you did not think could happen will happen at the most inopportune time and bite you in the butt.”

The cut-over plan was to bring the main location up and make it operational with the VPN. When a remote site was brought online, the traffic for that site would be cut over to the VPN links. Testing and verification were performed on a site-by-site basis.

The installation of the routers at the main site went without a problem. The Ethernet links from AT&T connected to the routers, BGP came up, and routes were seen being exchanged over the interfaces. The internal BGP link between the routers came up and the routes were being exchanged between the two MX10s. We installed a test PC on the LAN switch for later testing (this PC had a default gateway of the MX10s). With the MX10 ready for traffic, only the BDL firewall needed to be altered to allow communications over the new VPN.

With this site installed, the remote sites were attacked. The first site to come online was the JAX site.

The initial cut-over started well enough but then went downhill fast. The equipment arrived at the remote site on time and in good condition. I walked the remote person through the process of installing the router in the proper rack and connecting the cables (power and Ethernet).

The first problem was that when the router was powered up, the lights came on green for the processor and the Ethernet interface, but remained red for the VPN interface. The first remote site was connected to the VPN with two T1 circuits, bundled together to form a 3 Mbps circuit. The configuration for the interfaces, the multilink point-to-point protocol, and the addressing were unique to this site. The cabling between the router and the T1 “smart jack” was supposed to be a straight-through cable, but things did not look good.

The next problem occurred when I attempted to SSH to the router via the existing infrastructure. No-Go, with a big N&G. The connections timed out for both SSH and Telnet. I was not getting a connection refused response, just a lost packet timeout. I checked with the CEO for the firewall access, gained access to that device, and tried again from there to the router. This operated OK, so I backed out and looked at the Cisco rules. I added a rule to allow SSH access through the device from the main addresses to the router, and vice versa.

Back to problem 1—once I had access to the router, the T1 interfaces showed down with the following output (both interfaces were the same):

lab@JAX> show interfaces t1-2/0/0

Physical interface: t1-2/0/0, Enabled, Physical link is Down

Interface index: 145, SNMP ifIndex: 524

Link-level type: Multilink-PPP, MTU: 1510, Clocking: External,

Speed: T1,

Loopback: None, FCS: 16, Framing: ESF

Device flags : Present Running Down

Interface flags: Hardware-Down Point-To-Point SNMP-Traps

Internal: 0x4000

Link flags : None

Keepalive settings: Interval 10 seconds, Up-count 1, Down-count 3

Keepalive: Input: 0 (never), Output: 0 (never)

LCP state: Down

CHAP state: Closed

PAP state: Closed

CoS queues : 8 supported, 8 maximum usable queues

Last flapped : 2012-02-13 23:14:13 UTC (05:49:51 ago)

Input rate : 0 bps (0 pps)

Output rate : 0 bps (0 pps)

DS1 alarms : LOF, LOS

DS1 defects : LOF, LOS

This indicates that the physical connection on the interface is not operational. I spoke to the AT&T technician working on the case, and she verified that the interface was showing down at their end.

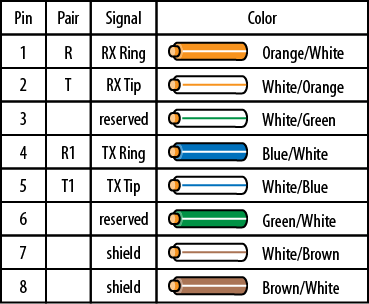

I went into Troubleshooting 101 mode, “Start at the Physical Layer.” The patch cables were new LAN cables, so the probability that they were both bad was low. A quick search showed that the T1 smart jack was wired as shown in Figure 1-6.

I walked the guy on the other end through the process of creating a loopback jack (twist wire 1 to 4 and wire 2 to 5), and we tested each interface.

Note

When in doubt, look it up! Warriors are looked to as walking references of data communications, but while I have seen a lot in the last 30 years, I cannot remember it all. I am the first to say I don’t know, but also the first to look it up and move forward.

All the interfaces transitioned to up with the loopback plug in place, so we knew the problem was in the patch cables. We traded out the patches for another set with no improvement. At this point, we searched for a store close to the site that had various patch cables in stock and located a T1 crossover cable. Once these were procured and installed, the interfaces all came up—for whatever reason, the patch cables needed to be rolled for these interfaces.

We checked whether the interfaces were up and operational with the AT&T technician, and she indicated that they all looked up and good.

Now it was time to see if the VPN was up and operational. We checked the BDL site and found that it showed that BGP was receiving the prefix for JAX. That was a good sign. We did a ping test from BDL and got a response from both ends of the CE to PE link at JAX, and vice versa.

After logging into the JAX router, we tried to ping the servers in BDL using the LAN address as the source address. This did not work because the return route was still through the IPSec tunnel. Oops! We satisfied ourselves with pings to the test PC on the LAN side of the BDL routers.

Once the JAX to BDL VPN link was verified as operational, it was time to make the plans for swapping traffic from the IPSec tunnel to the VPN. The steps for that transition were:

Change the existing static route for the 10/8 addresses at the JAX firewall to have a higher metric (10)—this would be the backup route in case the VPN failed.

Add a second static route on the firewall pointing to the VPN router, leaving the metric at the default value—traffic from the devices at the site have a default gateway pointing to the firewall that will redirect the traffic to the VPN router.

Install OSPF at the BDL firewall and redistribute the static routes into OSPF.

Change the administrative distance of the static routes to be higher than that of OSPF (110).

These changes were scheduled for the next maintenance window, and all went as planned (to everyone’s surprise after the issues of the initial install). The routing table on the BDL router showed that the JAX remote site was learned both from BGP and from the OSPF external routes (JAX and default):

lab@BDL1> show route

inet.0: 16 destinations, 26 routes (16 active, 0 holddown, 0 hidden)

+ = Active Route, - = Last Active, * = Both

0.0.0.0/0 *[OSPF/180] 00:00:10, metric 0, tag 0

> to 10.1.10.130 via ge-0/0/0.5

[BGP/190] 02:10:05, localpref 100

AS path: 1234 I

> to 11.2.111.5 via ge-0/0/2.0

10.1.10.4/30 ...

10.0.30.0/24 *[BGP/170] 01:53:02, localpref 100

AS path: 7018 I

> to 10.1.10.5 via ge-0/0/3.0

[OSPF/180] 00:10:44, metric 0, tag 0

> to 10.1.10.130 via ge-0/0/0.5

224.0.0.5/32 *[OSPF/10] 01:53:40, metric 1

MultiRecv

A couple of notes from the routing table. First, in Junos, the default route preference for external OSPF routes is 150, while the route preference for BGP is 170. In the BDL router, I wanted to prefer the BGP routes to the remote locations over the OSPF routes being redistributed from the firewall. To this end, the external OSPF routes are given a higher route preference in the configuration:

lab@BDL1> show configuration protocols ospf

external-preference 180;

area 0.0.0.0 {

interface ge-0/0/0.5;

interface lo0.0;

}

The default route received from the remote site allows alternate access to the Internet in the event that the local Internet access is lost. For the remote addresses, the BGP route is preferred over the OSPF route, but in this case, the local OSPF route (the redistributed static route from the firewall) must be preferred over the remote site’s default route. To accomplish this, a route policy had to be created that changed the route preference for the incoming BGP default route. The route policy looks like this:

lab@BDL1# show policy-options

policy-statement Modify-default {

term 1 {

from {

route-filter 0.0.0.0/0 exact;

}

then {

preference 190;

accept;

}

}

term 2 {

then accept;

}

}

All incoming BGP routes are accepted, but the default route receives a different route preference.
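The effect of the two preference changes is easier to see side by side. The short Python sketch below models only the first step of route selection, where the route with the lowest preference value becomes active; the prefixes and preference values are taken from the routing table shown earlier, and the `Route` type and `active_route` helper are hypothetical names for this illustration.

```python
from typing import NamedTuple


class Route(NamedTuple):
    prefix: str
    protocol: str
    preference: int


# Preferences as they appear in the BDL1 routing table after the changes.
TUNED = [
    Route("0.0.0.0/0", "OSPF", 180),     # external-preference 180
    Route("0.0.0.0/0", "BGP", 190),      # set by policy Modify-default
    Route("10.0.30.0/24", "BGP", 170),   # BGP default preference
    Route("10.0.30.0/24", "OSPF", 180),  # external-preference 180
]

# The same routes with the Junos defaults (OSPF external 150, BGP 170).
DEFAULTS = [
    Route("0.0.0.0/0", "OSPF", 150),
    Route("0.0.0.0/0", "BGP", 170),
    Route("10.0.30.0/24", "BGP", 170),
    Route("10.0.30.0/24", "OSPF", 150),
]


def active_route(prefix: str, routes: list) -> Route:
    """Pick the route with the lowest preference value for a prefix."""
    candidates = [r for r in routes if r.prefix == prefix]
    return min(candidates, key=lambda r: r.preference)


for prefix in ("0.0.0.0/0", "10.0.30.0/24"):
    print(prefix, active_route(prefix, DEFAULTS).protocol,
          "->", active_route(prefix, TUNED).protocol)
```

With the defaults, OSPF would win for both prefixes and JAX traffic would stay on the firewall tunnel. With the tuned values, the JAX prefix follows BGP over the VPN while the default route still follows the local firewall.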

When the routes were complete for the remote site, the traffic flows to the remote sites showed that the VPN route was being used for outgoing and incoming traffic. When the interface to the VPN on BDL1 was disabled, all traffic flowed over BDL2. When that link was taken down, the traffic reverted to the firewall tunnel. The use of the firewall tunnel caused most users to reinitiate their Internet sessions (the firewall does not like half-open sessions). Once the users reinitiated the Internet sessions, this secondary backup performed as expected. This scenario was acceptable to the CEO.

The other remote sites were cut in a similar manner to the JAX site. Each had a few little glitches (for example, static routes not having the right next-hop address), but all the sites came up and traffic was pushed to the new VPN connectivity.

As a sign that the architecture and the class of service settings were correct, nobody knew that the cut-over was complete. Nothing broke.

The remaining site to bring online was the backup data center location in BNA. With this site, like the others, the cut-over went off without a hitch. In fact, it was easier because there was no user traffic to interrupt. This site is used when a server crashes in the main data center. Traffic is rerouted to the backup site by the server load balancers; the switchover is totally transparent to the users.

Once the router was up and connected to the VPN, I altered the static routes and tested traffic on the VPN interfaces.

I started this engagement as a tribe of one, but when I got to the finish, I had adopted a new tribe; they were not network engineers or networking professionals, but salespeople and warehouse personnel. We worked together and completed the installation of a new communications backbone for the company that allowed it to grow and reach new heights.

Each engagement is different: you see different technologies and meet a world of different people. And often you find that folks who have no interest in data communications can be productive members of the tribe. The warehouse person in Jacksonville had no idea what an RJ48C connector was, but with a little instruction and patience, he was able to create a usable loopback jack for testing the T1 interfaces. I have no idea what he used to strip the patch cord or what he used to connect the wires together, but it worked and we got the silly thing up and running. I am sure that he does not consider himself part of the tribe, but I sure do.

The technology for this engagement is well baked, and the Juniper Networks routers that were involved were both state of the art (MX10) and legacy (J2320s), yet all are supported, all run the same code, and none brought any surprises to the game. That is the joy of Junos.
