11

OUR SKYNET MOMENT

ON SEPTEMBER 17, 2011, FED UP WITH GOVERNMENT BAILOUTS that had saved the banks despite the fact that they had brought the world to the brink of financial ruin with a toxic stew of complex derivatives based on aggressively marketed home mortgages, fed up that the banks had then foreclosed on the ordinary people who’d bought homes financed with those mortgages, fed up with crushing student loan debt, fed up with the cost of healthcare they couldn’t afford, fed up with wages that weren’t enough to live on, a group of protesters camped out in Zuccotti Park, a few blocks from Wall Street. Their movement, labeled with the Twitter hashtag #OccupyWallStreet or simply #Occupy, spread worldwide. By early October, Occupy protests had taken place in more than 951 cities, across 82 countries. Many of them were ongoing, with protesters camping out for months, until forcibly removed.

Two days after the protests began, I spent the afternoon at Zuccotti Park, studying the thousands of cardboard signs spread over the ground and surrounding buildings, each telling the story of a person or family failed by the current economy. I talked with the protesters to hear their stories firsthand; I participated in the “people’s microphone,” the clever technique used to get around the ban on amplified sound. Every speaker addressing the crowd paused at the end of each phrase, giving those nearby time to repeat it aloud, with the volume amplified by many voices so that those farther away could hear.

The rallying cry of the movement was “We are the 99%,” a slogan coined by two online activists to highlight the realization, which had recently penetrated the popular consciousness, that 1% of the US population now earned 25% of the national income and owned 40% of its wealth. They began a campaign on Tumblr, a short-form blogging site with hundreds of millions of users, asking people to post pictures of themselves holding a sign that described their economic situation, followed by the phrase “I am the 99%” and a pointer to the occupywallstreet.org site.

The messages were powerful and personal:

“My parents put themselves into debt so I could get a fancy degree. It cost over $100 grand, and I have no job prospects. I am the 99%.”

“I have a master’s degree, and I am a teacher, yet I can barely afford to feed my child because my husband lost his job due to missing too much work being hospitalized with a chronic illness. His meds alone are more than I make in a month. I am the 99%.”

“I have a master’s degree & a full time job in my field—and I have started SELLING MY BODY to pay off my debt. I am the 99%.”

“Single mom, grad student, unemployed, and I paid more tax last year than GE. I am the 99%.”

“I have not seen a dentist or doctor in over 6 years. I have long term injuries that I cannot afford the care for. Some days, I can barely walk. I am the 99%.”

“Single mom. Working part-time and getting food stamps to barely get by. I just want a future for my daughter. I am the 99%.”

“No medical. No dental. No vision. No raises. No 401K. Less than $30K a year before taxes. Less than $24K a year after taxes. I work for a Fortune 500 company. I am the 99%.”

“I have never been appreciated, in retail, for any potential other than selling other people crap, half of which they do not need, and most of which they probably cannot really afford. I hate being used like that, I want a useful job. I am the 99%.”

“We never chose irresponsibly. We were careful not to live outside our means. We bought a humble home and a responsible car; no McMansion, no Hummer. We were OK till my husband was laid off. . . . After six months of unemployment, he was fortunate enough to find work. However, it is 84 miles of commuting a day and it’s 30% less pay. . . . My husband’s fuel costs are almost one of his bi-weekly paychecks. We are in a loss mitigation and loan modification program with our mortgage lender, and struggling with everything we have to keep our little house. I got a 2% raise in June, but my paycheck actually got smaller because my health insurance costs went up. We are the 99%.”

“I have had no job for over 2½ years. Black men have a 20% unemployment rate. I am 33 years old. Born and raised in Watts. I am the 99%.”

“I am nineteen. I have wanted kids in my future for a long time. Now I am scared that the future will not be an OK place for my kids. I am the 99%.”

“I am retired. I live on savings, retirement, and social security. I’m OK. 50 million Americans are NOT OK: they are poor, have no health insurance, or both. But we are all the 99%.”

They go on, thousands of them, voices crying out their fear and pain and helplessness, the voices of people whose lives have been crushed by the machine.

From 2001’s HAL to The Terminator’s Skynet, it’s a science fiction trope: artificial intelligence run amok, created to serve human goals but now pursuing purposes that are inimical to its former masters.

Recently, a collection of scientific and Silicon Valley luminaries, including Stephen Hawking and Elon Musk, wrote an open letter recommending “expanded research aimed at ensuring that increasingly capable AI systems are robust and beneficial: our AI systems must do what we want them to do.” Groups such as the Future of Life Institute and OpenAI have been formed to study the existential risks of AI, and, as the OpenAI site puts it, “to advance digital intelligence in the way that is most likely to benefit humanity as a whole, unconstrained by a need to generate financial return.”

These are noble goals. But they may have come too late.

We are already in the thrall of a vast, world-spanning machine that, due to errors in its foundational programming, has developed a disdain for human beings, is working to make them irrelevant, and resists all attempts to bring it back under control. It is not yet intelligent or autonomous, and it still depends on its partnership with humans, but it grows more powerful and more independent every day. We are engaged in a battle for the soul of this machine, and we are losing. Systems we have built to serve us no longer do so, and we don’t know how to stop them.

If you think I’m talking about Google, or Facebook, or some shadowy program run by the government, you’d be wrong. I’m talking about something we refer to as “the market.”

To understand how the market, that cornerstone of capitalism, is on its way to becoming that long-feared rogue AI, enemy to humanity, we first need to review some things about artificial intelligence. And then we need to understand how financial markets (often colloquially, and inaccurately, referred to simply as “Wall Street”) have become a machine that its creators no longer fully understand, and how the goals and operation of that machine have become radically disconnected from the market of real goods and services that it was originally created to support.

THREE TYPES OF ARTIFICIAL INTELLIGENCE

As we’ve seen, when experts talk about artificial intelligence, they distinguish between “narrow artificial intelligence” and “general artificial intelligence,” also referred to as “weak AI” and “strong AI.”

Narrow AI burst into the public debate in 2011. That was the year that IBM’s Watson soundly trounced the best human Jeopardy players in a nationally televised match in February. In October of that same year, Apple introduced Siri, its personal agent, able to answer common questions spoken aloud in plain language. Siri’s responses, in a pleasing female voice, were the stuff of science fiction. Even when Siri’s attempts to understand human speech failed, it was remarkable that we were now talking to our devices and expecting them to respond. Siri even became the best friend of one autistic boy.

The year 2011 was also the year that Google announced that its self-driving car prototype had driven more than 100,000 miles in ordinary traffic, a mere seven years after the best-performing vehicle in the first DARPA Grand Challenge for self-driving cars, in 2004, had managed to go only about seven miles before getting stuck. Self-driving cars and trucks have now taken center stage, as the media wrestles with the possibility that they will eliminate millions of human jobs. This fear, that this next wave of automation will go much further than the first industrial revolution in making human labor superfluous, is what makes many say “this time is different” when contemplating technology and the future of the economy.

The boundary between narrow AI and other complex software able to take many factors into account and make decisions in microseconds is fuzzy. Autonomous or semiautonomous programs able to perform complex tasks have been part of the plumbing of our society for decades. We rely on automated switching systems to route our phone calls (which were once patched through, literally, by humans in a switchboard office, connecting cables to specific named locations), and passengers are routinely ferried thousands of miles by airplane autopilots while the human pilots ride along “just in case.” While these systems may once have seemed magical, no one now thinks of them as AI.

Personal agents like Siri, the Google Assistant, Cortana, and Amazon’s Alexa do strike us as “artificial intelligences” because they listen to us speak, and reply with a human voice, but even they are not truly intelligent. They are cleverly programmed systems, much of whose magic is possible because they have access to massive amounts of data that they can process far faster than any human.

But there is one key difference between traditional programming of even the most complex systems and deep learning and other techniques at the frontier of AI. Rather than spelling out every procedure, developers build a base program, such as an image recognizer or categorizer, and then train it by feeding it large amounts of data labeled by humans until it can recognize patterns in the data on its own. We teach the program what success looks like, and it learns to copy us. This leads to the fear that these programs will become increasingly independent of their creators.
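
To make that contrast concrete, here is a deliberately tiny sketch in Python. It uses a perceptron, a simple learning rule far older than deep learning, as a stand-in; the data and the labeler's rule (a point counts as 1 when x + y > 1) are hypothetical. Notice that nowhere does the training code state that rule: the program infers an approximation of it purely from the labeled examples.

```python
import random

def predict(weights, bias, point):
    """Classify a 2-D point as 1 or 0 using the learned weights."""
    score = weights[0] * point[0] + weights[1] * point[1] + bias
    return 1 if score > 0 else 0

def train_perceptron(examples, epochs=50, learning_rate=0.1):
    """Learn weights from (point, label) pairs.

    No classification rule is written by hand: each time the model
    disagrees with a human-supplied label, it nudges its weights
    toward the answer it was shown.
    """
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for point, label in examples:
            error = label - predict(weights, bias, point)  # -1, 0, or +1
            weights[0] += learning_rate * error * point[0]
            weights[1] += learning_rate * error * point[1]
            bias += learning_rate * error
    return weights, bias

# Hypothetical "human-labeled" data: the labeler marks a point 1 when x + y > 1.
random.seed(42)
points = [(random.random(), random.random()) for _ in range(200)]
examples = [(p, 1 if p[0] + p[1] > 1 else 0) for p in points]

weights, bias = train_perceptron(examples)
accuracy = sum(predict(weights, bias, p) == y for p, y in examples) / len(examples)
print(f"learned weights={weights}, bias={bias:.2f}, accuracy={accuracy:.0%}")
```

Deep learning stacks many layers of adjustable units and trains them on millions of examples with more sophisticated methods, but the underlying idea, adjusting parameters until the program's answers match the ones humans supplied, is the same.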

Artificial general intelligence (also sometimes referred to as “strong AI”) is still the stuff of science fiction. It is the product of a hypothetical future in which an artificial intelligence isn’t just trained to be smart about a specific task but learns entirely on its own and can effectively apply its intelligence to any problem that comes its way.

The fear is that an artificial general intelligence will develop its own goals and, because of its ability to learn on its own at superhuman speeds, will improve itself at a rate that soon leaves humans far behind. The dire prospect is that such a superhuman AI would have no use for humans, or at best might keep us in the way that we keep pets or domesticated animals. No one even knows what such an intelligence might look like, but people like Nick Bostrom, Stephen Hawking, and Elon Musk postulate that once it exists, it will rapidly outstrip humanity, with unpredictable consequences. Bostrom calls this hypothetical next step in strong AI “artificial superintelligence.”

Deep learning pioneers Demis Hassabis and Yann LeCun are skeptical. They believe we’re still a long way from artificial general intelligence. Andrew Ng, formerly the head of AI research for Chinese search giant Baidu, compared worrying about hostile AI of this kind to worrying about overpopulation on Mars.

Even if we never achieve artificial general intelligence or artificial superintelligence, though, I believe that there is a third form of AI, which I call hybrid artificial intelligence, in which much of the near-term risk resides.

When we imagine an artificial intelligence, we assume it will have an individual self, an individual consciousness, just like us. What if, instead, an AI were more like a multicellular organism, an evolution beyond our single-celled selves? What’s more, what if we were not even the cells of such an organism, but its microbiome, the vast ecology of microorganisms that inhabits our bodies? This notion is at best a metaphor, but I believe it is a useful one.

As the Internet speeds up the connection between human minds, as our collective knowledge, memory, and sensations are shared and stored in digital form, we are weaving a new kind of technology-mediated superorganism, a global brain consisting of all connected humans. This global brain is a human-machine hybrid. The senses of that global brain are the cameras, microphones, keyboards, and location sensors of every computer, smartphone, and “Internet of Things” device; the thoughts of that global brain are the collective output of billions of individual contributing intelligences, shaped, guided, and amplified by algorithms.

Digital services like Google, Facebook, and Twitter that connect hundreds of millions or even billions of people in near-real time are already primitive hybrid AIs. The fact that the intelligence of these systems is interdependent with the intelligence of the community of humans that makes it up is an echo of the way that we ourselves function. Each of us is a vast nation of trillions of differentiated cells, only some of which share our own DNA, while many are immigrants, the vast microbiome of microorganisms that colonize our guts, our skin, our circulatory systems. By recent estimates, the microorganisms in our bodies are at least as numerous as our human cells; they are not invaders but a functioning part of the whole. Without the microorganisms we host, we could not digest our food or turn it into useful energy. The bacteria in our guts have even been shown to change how we think and how we feel. A multicellular organism is the sum of the communications, the ecosystem, the platform or marketplace if you will, of all its participants. And when that marketplace gets out of balance, we fall ill or fail to live up to our potential.

Humans are living in the guts of an AI that is only now being born. Perhaps, like us, the global AI will not be an independent entity, but a symbiosis with the human consciousnesses living within it and alongside it.

Every day, we teach the global brain new skills. DeepMind began training its Go program, AlphaGo, by studying games played by humans. As its creators wrote in their January 2016 paper in Nature, “These deep neural networks are trained by a novel combination of supervised learning from human expert games, and reinforcement learning from games of self-play.” That is, the program began by observing humans playing the game, and then accelerated that learning by playing against itself millions of times, far outstripping the experience level of even the most accomplished human players. This pattern, by which algorithms are trained by humans, either explicitly or implicitly, is central to the explosion of AI-based services.

Explicit development of training data sets for AI is dwarfed, though, by the data that humans produce unasked on the Internet. Google Search, financial markets, and social media platforms like Facebook and Twitter gather data from trillions of human interactions, distilling that data into collective intelligence that can be acted on by narrow AI algorithms. As computational neuroscientist and AI entrepreneur Beau Cronin puts it, “In many cases, Google has succeeded by reducing problems that were previously assumed to require strong AI—that is, reasoning and problem-solving abilities generally associated with human intelligence—into narrow AI, solvable by matching new inputs against vast repositories of previously encountered examples.” Enough narrow AI infused with the data thrown off by billions of humans starts to look suspiciously like strong AI. In short, these are systems of collective intelligence that use algorithms to aggregate the collective knowledge and decisions of millions of individual humans.

And that, of course, is also the classical conception of “the market”—the system by which, without any central coordination, prices of goods and labor are set, buyers and sellers are found for all of the fruits of the earth and the products of human ingenuity, guided as if, as Adam Smith famously noted, “by an invisible hand.”

But is the invisible hand of a market of self-interested human merchants and human consumers the same as a market in which computer algorithms guide and shape those interests?

COLLECTIVE INTELLIGENCE GONE WRONG

Algorithms not only aggregate the intelligence and decisions of humans; they also influence and amplify them. As George Soros notes, the forces that shape our economy are not true or false; they are reflexive, based on what we collectively come to believe or know. We’ve already explored the effect of algorithms on news media.

The speed and scale of electronic networks are also changing the nature of financial market reflexivity in ways that we have not yet fully come to understand. Financial markets, which aggregate the opinions of millions of people in setting prices, are liable to biased design, algorithmically amplified errors, or manipulation, with devastating consequences. In the famous “Flash Crash” of May 6, 2010, high-frequency-trading algorithms responding to market manipulation by a rogue human trader drove the Dow down nearly 1,000 points (nearly a trillion dollars of market value) within minutes; roughly 600 of those points were recovered almost as quickly, and the whole episode lasted only about thirty-six minutes.

The Flash Crash highlights the role that the speed of electronic networks plays in amplifying the effects of misinformation or bad decisions. The price of goods from China was once known at the speed of clipper ships, then of telegrams. Now electronic stock and commodities traders place their computers as physically close as they can to exchanges and Internet points of presence (the endpoints of high-speed networks) to gain microseconds of advantage. And this need for speed has left human traders behind. More than 50% of all stock market trades are now made by programs rather than by human traders.

Unaided humans are at an immense disadvantage. Michael Lewis, the author of Flash Boys, a book about high-frequency trading, summarized that disadvantage in an interview with NPR Fresh Air host Terry Gross: “If I get price changes before everybody else, if I know a stock price is going up or going down before you do, I can act on it. . . . [I]t’s a bit like knowing the result of the horse race before it’s run. . . . The time advantage of a high-frequency trader is so small, it’s literally a millisecond. It takes 100 milliseconds to blink your eye, so it’s a fraction of a blink of an eye, but that for a computer is plenty of time.”

Lewis noted that this divides the market into two camps, prey and predator: the people who actually want to invest in companies, and the people who have figured out how to use their speed advantage to front-run them, buying the stock before ordinary traders can get to it and reselling it to them at a higher price. They are essentially parasites, adding no value to the market, only extracting it for themselves. “The stock market is rigged,” Lewis told Gross. “It’s rigged for the benefit for a handful of insiders. It’s rigged to . . . maximize the take of Wall Street, of banks, the exchanges and the high-frequency traders at the expense of ordinary investors.”

When Brad Katsuyama, one of the heroes of Lewis’s book, tried to create a new exchange “where every dollar stands the same chance,” by taking away the advantages of the speed traders, Lewis noted that “the banks and the brokers [who] are also paid a cut of what the high-frequency traders are taking out of investors’ orders . . . don’t want to send their orders on this fair exchange because there’s less money to be made.”

Derivatives, originally invented to hedge against risk, instead came to magnify it. The CDOs (collateralized debt obligations) that Wall Street sold to unsuspecting customers in the years leading up to the 2008 crash could only have been constructed with the help of machines. In a 2009 speech, John Thain, the former CEO of the New York Stock Exchange who’d become CEO of Merrill Lynch, admitted as much. “To model correctly one tranche of one CDO took about three hours on one of the fastest computers in the United States. There is no chance that pretty much anybody understood what they were doing with these securities. Creating things that you don’t understand is really not a good idea no matter who owns it.”

In short, both high-speed trading and complex derivatives tilt financial markets away from human control and understanding. But they do more than that. They have cut their anchor to the human economy of real goods and services. As Bill Janeway noted to me, the bursting of what he calls the “super-bubble” in 2008 “shattered the assumption that financial markets are necessarily efficient and that they will reliably generate prices for financial assets that are locked onto the fundamental value of the physical assets embedded in the nonfinancial, so-called real economy.”

The vast amounts of capital sloshing around the financial system in the years before the 2008 crisis fueled the growth of “shadow banking,” which used that capital to provide credit far in excess of the underlying real assets, credit that was secured by low-quality bonds based on ever-riskier mortgages. Financial capitalism had become a market in imaginary assets, made plausible only by the Wall Street equivalent of fake news.

THE DESIGN OF THE SYSTEM SETS ITS OUTCOMES

High-frequency trading, complex derivatives like CDOs, and shadow banking are only the tip of the iceberg, though, when thinking about how markets have become more and more infused with machinelike characteristics, and less and less friendly to the humans they were originally expected to serve. The fact that we are building financial products that no one understands is actually a reflection of the fundamental design of the modern financial system. What is the fitness function of the model behind its algorithms, and what is the biased data that we feed it?

Like the characters in the Terminator movies, if we are to stop Skynet, the global AI bent on the enslavement of humanity, we must first travel back in time to understand how it came to be.

According to political economist Mark Blyth, writing in Foreign Affairs, during the decades following World War II, government policy makers decided that “sustained mass unemployment was an existential threat to capitalism.” The guiding “fitness function” for Western economies thus became full employment.

This worked well for a time, Blyth notes, but eventually led to what was called “cost-push inflation.” That is, if everyone is employed, there is no barrier to moving from job to job, and the only way to hang on to employees is to pay them more; employers in turn compensate by raising prices, in a continuing spiral of higher wages and higher prices. As Blyth notes, every intervention is subject to Goodhart’s Law: “Targeting any variable long enough undermines the value of the variable.”

Coupled with the end of the Bretton Woods system, a gold exchange standard anchored to the US dollar, the commitment to full employment led to skyrocketing inflation. Inflation is good for debtors: it makes debt-financed purchases such as housing much cheaper in real terms, because you repay a fixed dollar amount of debt with future dollars that are worth much less. Meanwhile, you have more of those dollars, as your salary keeps going up. Ordinary goods cost more, though, which means that as a worker, you have to keep demanding higher wages. But inflation is very bad for the owners of capital, since it reduces the value of what they own.
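
The arithmetic behind “future dollars that are worth much less” can be sketched in a few lines of Python. The figures are hypothetical: a fixed $1,000 monthly mortgage payment due ten years from now, compared under 2% and 10% annual inflation. At 10% that payment is worth only about $386 in today’s money, versus about $820 at 2%. The sketch deliberately ignores interest rates; in practice lenders charge more when they expect inflation, so debtors gain most when inflation outruns what their fixed-rate loans anticipated.

```python
def real_value(nominal_dollars, annual_inflation, years):
    """Purchasing power, in today's dollars, of a fixed nominal sum
    paid `years` from now if prices rise by `annual_inflation` a year."""
    return nominal_dollars / (1 + annual_inflation) ** years

# Hypothetical: a fixed $1,000 monthly mortgage payment due in ten years.
for inflation in (0.02, 0.10):
    burden = real_value(1000, inflation, 10)
    print(f"{inflation:.0%} inflation: that payment costs ${burden:,.2f} in today's money")
```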

Starting in the late 1970s, keeping inflation low replaced full employment as the fitness function. Federal Reserve chairman Paul Volcker put strict limits on the growth of the money supply in an effort to bring inflation to a screeching halt. By the early 1980s, inflation was under control, but at the cost of sky-high interest rates and high unemployment.

The attempt to bring inflation under control was coupled with a series of supporting policy decisions. Labor organizing, which had helped to promote high wages and full employment, was made more difficult. The Taft-Hartley Act of 1947 had already weakened the power of unions and allowed the passage of state laws that limited it still further. By 2012, only 12% of the US labor force was unionized, down from a peak above 30%. But, perhaps most important, a bad idea took hold.

In September 1970, economist Milton Friedman penned an op-ed in the New York Times Magazine titled “The Social Responsibility of Business Is to Increase Its Profits,” which took ferocious aim at the idea that corporate executives had any obligation but to make money for their shareholders.

“I hear businessmen speak eloquently about the ‘social responsibilities of business in a free-enterprise system,’” Friedman wrote. “The businessmen believe that they are defending free enterprise when they declaim that business is not concerned ‘merely’ with profit but also with promoting desirable ‘social’ ends; that business has a ‘social conscience’ and takes seriously its responsibilities for providing employment, eliminating discrimination, avoiding pollution and whatever else may be the catchwords of the contemporary crop of reformers. In fact they are—or would be if they or anyone else took them seriously—preaching pure and unadulterated socialism.”

Friedman meant well. His concern was that by choosing social priorities, business leaders were making decisions on behalf of their shareholders that those shareholders might individually disagree with. Far better, he thought, to distribute the profits to the shareholders and let them make the choice of charitable act for themselves, if they so wished. But the seed was planted, and began to grow into a noxious weed.

The next step occurred in 1976 with an influential paper published in the Journal of Financial Economics by economists Michael Jensen and William Meckling, “Theory of the Firm: Managerial Behavior, Agency Costs, and Ownership Structure.” Jensen and Meckling made the case that professional managers, who work as agents for the owners of the firm, have incentives to look after themselves rather than the owners. Managers might, for instance, lavish perks on themselves that don’t directly benefit the business and its actual owners.

Jensen and Meckling also meant well. Unfortunately, their work was thereafter interpreted to suggest that the best way to align the interests of management and shareholders was to ensure that the bulk of management compensation was in the form of company stock. That would give management the primary objective of increasing the share price, aligning their interests with those of shareholders, and prioritizing those interests over all others.

Before long, the gospel of shareholder value maximization was taught in business schools and enshrined in corporate governance. In 1981, Jack Welch, then CEO of General Electric, at the time the world’s largest industrial company, announced in a speech called “Growing Fast in a Slow-Growth Economy” that GE would no longer tolerate low-margin or low-growth units. Any business owned by GE that wasn’t first or second in its market and wasn’t growing faster than the market as a whole would be sold or shuttered. Whether a unit provided useful jobs to a community or useful services to customers was no reason to keep it. Only its contribution to GE’s growth and profits, and hence its stock price, mattered.

That was our Skynet moment. The machine had begun its takeover.

Yes, the markets have become a hybrid of human and machine intelligence. Yes, the speed of trading has increased, so that a human trader not paired with that machine has become prey, not predator. Yes, the market is increasingly made of complex financial derivatives that no human can truly understand. But the key lesson is one we have seen again and again. The design of a system determines its outcomes. The robots did not force a human-hostile future upon us; we chose it ourselves.

The 1980s were the years of the “corporate raiders,” celebrated in the 1987 movie Wall Street by Michael Douglas’s character, Gordon Gekko, who so memorably declared that “greed, for lack of a better word, is good.” The theory was that by discovering and rooting out bad managers and finding efficiencies in underperforming businesses, these raiders were actually improving the operation of the capitalist system. It is certainly true that in some cases they played that role. But by elevating the single fitness function of increasing share price above all else, they hollowed out our overall economy.

The tool of choice has become stock buybacks, which, by reducing the number of shares outstanding, raise the earnings per share, and thus the stock price. As a means of returning cash to shareholders, stock buybacks are more tax-efficient than dividends, but they also send a very different message. Dividends traditionally signaled, “We have more cash than we need for the business, so we are returning it to you,” while stock buybacks signaled, “We believe our stock is undervalued by the market, which doesn’t understand the potential of our business as well as we do.” They were positioned as an investment the company was making in itself. This is clearly no longer the case.
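
A minimal Python sketch shows how a buyback manufactures per-share growth without any growth in the business. The company is hypothetical: $1 billion in annual earnings and 500 million shares. Retiring 10% of the shares lifts earnings per share from $2.00 to about $2.22, an 11.1% “gain” with zero earnings growth. The sketch assumes earnings stay flat and ignores the interest the spent cash would have earned (or the cost of debt taken on to fund the buyback), so the real-world effect would be somewhat smaller.

```python
def earnings_per_share(net_income, shares_outstanding):
    return net_income / shares_outstanding

# Hypothetical company: $1 billion in annual earnings, 500 million shares.
net_income = 1_000_000_000
shares = 500_000_000

before = earnings_per_share(net_income, shares)
# Buy back and retire 10% of the shares; earnings themselves don't change.
after = earnings_per_share(net_income, shares - shares // 10)

print(f"EPS before: ${before:.2f}, after: ${after:.2f}")
print(f"EPS 'growth' with zero earnings growth: {after / before - 1:.1%}")
```

If the market keeps valuing the stock at the same multiple of earnings, the share price rises by the same 11.1%, which is exactly the number that stock-based executive pay rewards.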

In his 2016 letter to Berkshire Hathaway shareholders, Warren Buffett, the world’s most successful financial investor for the past six decades, put his finger on the short-term thinking driving most buybacks: “The question of whether a repurchase action is value-enhancing or value-destroying for continuing shareholders is entirely purchase-price dependent. It is puzzling, therefore, that corporate repurchase announcements almost never refer to a price above which repurchases will be eschewed.”
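
Buffett’s point can be checked with simple arithmetic. The sketch below assumes a hypothetical firm of 100 shares whose true value is exactly $10 each ($1,000 in total, including the cash it will spend), a figure no real company can know with certainty. Buying back 10 shares below that value enriches the shareholders who stay; buying them back above it transfers value to the sellers.

```python
def value_per_share_after_buyback(total_value, shares, shares_bought, price):
    """Intrinsic value per remaining share after a repurchase.

    total_value: intrinsic value of the whole company, including the
    cash that will be spent (a hypothetical, knowable number here).
    """
    remaining_value = total_value - shares_bought * price
    return remaining_value / (shares - shares_bought)

# Hypothetical firm: 100 shares, each truly worth $10 (total $1,000).
for price in (8, 10, 12):
    v = value_per_share_after_buyback(1000, 100, 10, price)
    print(f"buy back at ${price}: each remaining share worth ${v:.2f}")
```

Buying at $8 raises each remaining share’s value to about $10.22, buying at $10 leaves it unchanged, and buying at $12 lowers it to about $9.78. That is why a buyback announcement with no price ceiling says nothing about whether it will help continuing shareholders.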

Larry Fink, the CEO of BlackRock, the world’s largest asset manager, with more than $5.1 trillion under management, also took aim at buybacks, noting in his 2017 annual letter to CEOs that for the twelve months ending in the third quarter of 2016, the amount spent on dividends and buybacks by companies that make up the S&P 500 was greater than the entire operating profit of those companies.

While Buffett believes that companies are spending the money on buybacks because they don’t see opportunities for productive capital investment, Fink points out that for long-term growth and sustainability, companies have to invest in R&D “and, critically, employee development and long-term financial well-being.” Rejecting the idea that companies or the economy can prosper solely by boosting short-term returns to shareholders, he continues: “The events of the past year have only reinforced how critical the well-being of a company’s employees is to its long-term success.”

Fink makes the case that instead of returning cash to shareholders, companies should be spending far more of their hoarded profits on improving the skills of their workers. “In order to fully reap the benefits of a changing economy—and sustain growth over the long-term—businesses will need to increase the earnings potential of the workers who drive returns, helping the employee who once operated a machine learn to program it,” he writes. They “must improve their capacity for internal training and education to compete for talent in today’s economy and fulfill their responsibilities to their employees.”

The Rise and Fall of American Growth, Robert J. Gordon’s magisterial history of the change in the US standard of living since the Civil War, makes a compelling case that after a century of extraordinary expansion, the growth of productivity in the US economy slowed substantially after 1970. Whether Gordon is right that the productivity-enhancing technologies of the previous century gave the economy a historically anomalous surge, or Fink and others are right that we simply aren’t making the investments we need, it is clear that companies are using stock buybacks to create the illusion of growth where real growth is lagging.

Stock prices are a map that should ideally describe the underlying prospects of companies; attempts to distort that map should be recognized for what they are. We need to add “fake growth” to “fake news” in our vocabulary to describe what is going on. Real growth improves people’s lives.

Apologists for buybacks argue that much of the benefit of rising stock prices goes to pension funds and thus, by extension, to a wide swath of society. However, even with the most generous interpretation, little more than half of all Americans are shareholders in any form, and of those who are, the proportional ownership is highly skewed toward a small segment of the population—the now-famous 1%. If companies were as eager to allocate shares to all their workers in simple proportion to their wages as they are to award them to top management, this argument might hold some water.

The evidence that companies constructed around a different model can be just as successful as their financialized peers is hiding in plain sight. The storied football franchise the Green Bay Packers is owned by its fans and uses that ownership to keep ticket prices low. Outdoors retailer REI, a member cooperative with $2.4 billion in revenue and six million members, returns profits to its members rather than to outside owners. Yet REI’s growth consistently outperforms both its publicly traded competitors and the entire S&P 500 retail index. Vanguard, the second-largest financial asset manager in the United States, with more than $4 trillion under management, is owned by the mutual funds it manages. John Bogle, its founder, invented the index fund as a way to keep fund management fees low, transferring much of the benefit of stock investing from money managers to its customers.

Despite these counterexamples, the idea that extracting the highest possible profits and then returning the money to company management, big investors, and other shareholders is good for society has become so deeply rooted that for too long it has been difficult to see the destructive effects on society when shareholders are prioritized over workers, over communities, over customers. This is a bad map that has led our economy deeply astray.

As former chair of the White House Council of Economic Advisers Laura Tyson impressed on me over dinner one night, though, the bulk of jobs are provided by small businesses, not by large public companies. She was warning me not to overstate the role of financial markets in economic malaise, but her comments instead reminded me that the true effect of “trickle-down economics” is the way that the ideal of maximizing profit, not shared prosperity, has metastasized from financial markets and now shapes our entire society.

Mistaking what is good for financial markets for what is good for jobs, wages, and the lives of actual people is a fatal flaw in so many of the economic choices business leaders, policy makers, and politicians make.

William Lazonick, a professor of economics at the University of Massachusetts Lowell and director of the Center for Industrial Competitiveness, notes that in the decade from 2004 to 2013, Fortune 500 companies spent an astonishing $3.4 trillion on stock buybacks, representing 51% of all corporate profits for those companies. Another 35% of profits were paid out to shareholders in dividends, leaving only 14% for reinvestment in the company. The 2016 figures cited by Larry Fink are the culmination of a decades-long trend. Companies like Amazon that are able to defy financial markets and sacrifice short-term profits in favor of long-term investment are all too rare.

The decline in corporate retained earnings is critical, because they are the most important source of funds for business investment. Despite the common idea that financial markets are used to fund business expansion, Lazonick notes that “the primary role of the stock market has been to permit owner-entrepreneurs and their private-equity associates to exit personally from investments that have already been made rather than to enable a corporation to raise funds for new investment in productive assets.”

Since the mid-1980s, Lazonick observes, “the resource-allocation regime at many, if not most, major U.S. business corporations has transitioned from ‘retain-and-reinvest’ to ‘downsize-and-distribute.’ Under retain-and-reinvest, the corporation retains earnings and reinvests them in the productive capabilities embodied in its labor force. Under downsize-and-distribute, the corporation lays off experienced, and often more expensive, workers, and distributes corporate cash to shareholders.”

One casualty of the shareholder value economy has been the decline of corporate scientific research. In a 1997 analysis for the US Federal Reserve Board of Governors, economists Charles Jones and John Williams calculate that the actual spending on R&D as a share of GDP is less than a quarter of the optimal rate, based on the “social rate of return” from innovation. And in a 2015 paper, economists Ashish Arora, Sharon Belenzon, and Andrea Patacconi document the decline since 1980 in the number of research papers published by scientists at large companies, curiously coupled with no decline in the number of patents filed. This is a shortsighted prioritization of value capture over value creation. “Large firms appear to value the golden eggs of science (as reflected in patents),” the authors write, “but not the golden goose itself (the scientific capabilities).”

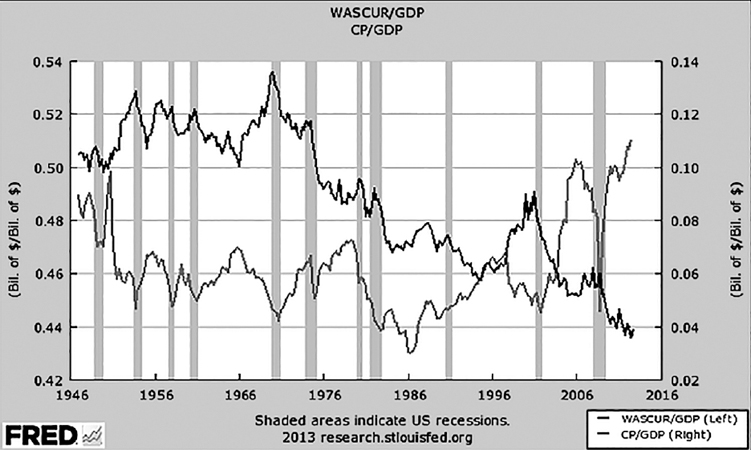

The biggest losers from this change in corporate reinvestment, though, have been workers, whose jobs have been eliminated and whose wages have been cut to fund increasing returns to shareholders. As the accompanying figure shows, the share of GDP going to wages has fallen from nearly 54% in 1970 to 44% in 2013, while the share going to corporate profits went from about 4% to nearly 11%. Wallace Turbeville, a former Goldman Sachs banker, aptly describes this as “something approaching a zero-sum game between financial wealth-holders and the rest of America.” Zero-sum games don’t end well. “The one percent in America right now is still a bit lower than the one percent in pre-revolutionary France but is getting closer,” says French economist Thomas Piketty, author of Capital in the Twenty-First Century.

Lazonick believes his research demonstrates that this trend “is in large part responsible for a national economy characterized by income inequity, employment instability, and diminished innovative capability—or the opposite of what I have called ‘sustainable prosperity.’”

Even stock options, so powerful a tool in the Silicon Valley innovation economy, have played a damaging role in turning the economy into a casino. Bill Janeway, who is a pioneering venture capitalist as well as an economist, likes to point out that when options began to be deployed by startups they were tickets to a lottery in which most holders would receive nothing. In 75% of VC-backed startups, the entrepreneur gets zero, and only 0.4% hit the proverbial jackpot. “The possible returns had to be abnormally high,” Janeway wrote to me in an email, “given how rarely it was reasonable to expect such success to be realized.”

Options were designed to encourage innovation and risk taking. “But then,” Janeway wrote, “this innovation in compensation, mobilized to lure executives out of the safe harbors of HP and IBM, was hijacked. The established companies began using stock options when there was essentially no risk of company failure. It reached the destructive extreme when the CEOs of banks, whose liabilities are guaranteed by taxpayers, began getting most of their compensation in options.”

In 1993, a well-intentioned law pushed by President Clinton capped at $1 million the salary that companies could deduct from their taxes for each top executive, with the unintended consequence that even more of the compensation moved to stock. Congress also initially allowed a huge loophole in the accounting treatment of options: unlike ordinary income paid to employees, options had to be disclosed, but not valued. Because the value of options did not need to be charged against company earnings, options became a kind of “free money” for companies, invisibly paid for by dilution of public market shareholders (of whom a large percentage are pension funds and other institutional shareholders representing ordinary people) rather than out of the profits of the company.

Meanwhile, there is an incentive to cut income for ordinary workers. Cutting wages drives up net income and thus the price of the stock in which executives are increasingly paid. Those executives who are not motivated by cupidity are held hostage. Any CEO who doesn’t keep growing the share price or who considers interests other than those of shareholders is liable to lose his or her job or be subject to lawsuits. Even Silicon Valley firms whose founders retain controlling positions in their companies are not immune from pressure. Because so much of the compensation of their employees is now in stock, they can continue to hire the best talent only as long as the stock price continues to rise.

It isn’t Wall Street per se that is becoming hostile to humanity. It is the master algorithm of shareholder capitalism, whose fitness function both motivates and coerces companies to pursue short-term profit above all else. What are humans in that system but a cost to be eliminated?

Why would you employ workers in a local community when you could improve corporate profits by outsourcing the work to people paid far less in emerging economies? Why would you pay a living wage if you could instead use the government social safety net to make up the difference? After all, that safety net is funded by other people’s taxes—because of course it is only efficient to minimize your own.

Why invest in basic research, or a new factory, or training that might make your workforce more competitive, or a risky new line of business that might not contribute meaningfully to earnings for many years, when you can get a quick pop in the stock price by using your cash to buy back your stock instead, reducing the number of shares outstanding, pleasing investors, and enriching yourself?

For that matter, why would you provide the best goods or services if you could improve profits by cutting corners? This is the era of what business strategist Umair Haque, director of the Havas Media Lab, calls “thin value, profit extracted through harm to others.” Thin value is the value of tobacco marketed even after its purveyors knew it contributed to cancer; the value of climate change denial by oil companies, who have retained the same disinformation firms used by the tobacco industry. This is the value that we experience when food is adulterated with high-fructose corn syrup or other additives that make us sick and obese; the value we experience when we buy shoddy products designed to be replaced prematurely.

If profit is the measure of all things, why not “manage your earnings,” as Welch, the CEO of GE, came to do, so that the business appears better than it is to investors? Why not actively trade against your customers, as investment banks began to do? Why not dip into outright fraud, selling those customers complicated financial instruments that were designed to fail? And when they do fail, why not ask the taxpayers to bail you out, because government regulators, drawn heavily from your own ranks, believe you are so systemically important to the world economy that you are untouchable?

Government—or perhaps more accurately, the lack of it—has become deeply complicit in the problem. Economists George Akerlof and Paul Romer fingered the nexus between corporate malfeasance and political power in their 1994 paper “Looting: The Economic Underworld of Bankruptcy for Profit.” “Bankruptcy for profit will occur if poor accounting, lax regulation, or low penalties for abuse give owners an incentive to pay themselves more than their firms are worth and then default on their debt obligations,” they wrote. “The normal economics of maximizing economic value is replaced by the topsy-turvy economics of maximizing current extractable value, which tends to drive the firm’s economic net worth deeply negative. . . . A dollar in increased dividends today is worth a dollar to owners, but a dollar in increased future earnings of the firm is worth nothing because future payments accrue to the creditors who will be left holding the bag.”

This was the game plan of many corporate raiders, who laid off workers and stripped firms of their assets, even taking them through bankruptcy to eliminate their pension plans. It was also at the heart of a series of booms and busts in real estate and finance that decimated the economy while enormously enriching a tiny group of economic looters and lucky bystanders.

It is the Bizarro World inverse of my maxim that companies must create more value than they capture. Instead, companies seek to capture more value than they create.

This is the tragedy of the commons writ large. It is also the endgame of what Milton Friedman called for in 1970, a bad idea that took hold in the global mind, whose consequences took decades to unfold.

There is an alternative view, which crystallized for me in 2012, even though I’d been living it all my life, when I heard a talk at the TED conference by technology investor Nick Hanauer. Nick is a billionaire capitalist, heir to a small family manufacturing business, who had the great good fortune to become the first nonfamily investor in Amazon, and who later was a major investor in aQuantive, an ad-targeting firm sold to Microsoft for $6 billion. What Nick said made a lot of sense to me. As with open source and Web 2.0, his talk was one more piece of a puzzle that slipped into place, helping me see the outlines of what I would come to call “the Next Economy.”

As I remember the talk, its central argument went something like this: “I’m a successful capitalist, but I’m tired of hearing that people like me create jobs. There’s only one thing that creates jobs, and that’s customers. And we’ve been screwing workers so long that they can no longer afford to be our customers.”

In making this point, Nick was echoing the arguments of Peter F. Drucker in his 1955 book, The Practice of Management: “There is only one valid definition of business purpose: to create a customer. . . . It is the customer who determines what a business is. It is the customer alone whose willingness to pay for a good or for a service converts economic resources into wealth, things into goods. . . . The customer is the foundation of a business and keeps it in existence.”

In this view, business exists to serve human needs. Corporations and profits are a means to that end, not an end in themselves. Free trade, outsourcing, and technology are tools not for reducing costs and improving share price, but for increasing the wealth of the world. Even handicapped by the shareholder value theory, the world is better off because of the dynamism of a capitalist economy, but how much better could we have done had we taken a different path?

I don’t think anyone but the looters believes that making money for shareholders is the ultimate end of economic activity. But many economists and corporate leaders are confused about the role it plays in helping us achieve that end. Milton Friedman, Meckling and Jensen, and Jack Welch were well-meaning. All believed that aligning the interests of corporate management with shareholders would actually produce the greatest good for society as well as for business. But they were wrong. They were following a bad map. By 2009 Welch had changed his mind, calling the shareholder value hypothesis “a dumb idea.”

But by then Welch had retired with a fortune close to $900 million, most of it earned via stock options, and the machine ground on, bigger than any CEO, bigger than any company. Author Douglas Rushkoff told me the story of one Fortune 100 CEO who broke down in tears as she told him how her attempts to inject social value into decision making at her company had resulted in quick punishment by “the market,” forcing her to reverse course.

Who is the market? It is algorithmic traders who pop in and out of companies at millisecond speed, turning what was once a vehicle for capital investment in the real economy into a casino where the rules always favor the house. It is corporate raiders like Carl Icahn (now rebranded as a “shareholder activist”) who buy large blocks of shares and demand that companies that wish to remain independent instead put themselves up for sale, or that a company like Apple disgorge its cash into their pockets rather than using it to lower prices for customers or raise wages for workers. It is also pension funds, desperate for higher returns to fund the promises that they have made, who outsource their money to professional managers who must then do their best to match the market or lose the funds they manage. It is venture capitalists and entrepreneurs dreaming of vast disruption leading to vast fortunes. It is every company executive who makes decisions based on increasing the stock price rather than on serving customers.

But these classes of investor are just the most obvious features of a system of reflexive collective intelligence far bigger even than Google and Facebook, a system bigger than all of us, that issues relentless demands because it is, at bottom, driven by a master algorithm gone wrong.

This is what financial industry critics like Rana Foroohar, author of the book Makers and Takers, are referring to when they say that the economy has become financialized. “The single biggest unexplored reason for long-term slower growth,” she writes, “is that the financial system has stopped serving the real economy and now serves mainly itself.”

It isn’t just that the financial industry employs only 4% of Americans but takes in more than 25% of all corporate profits (down from a 2007 peak of nearly 40%). It isn’t just that Americans born in 1980 are far less likely than those born in 1940 to be better off financially than their parents, or that 1% of the population now owns nearly half of all global wealth, and that nearly all the income gains since the 1980s have gone to the top tenth of 1%. It isn’t just that people around the world are electing populist leaders, convinced that the current elites have rigged the system against them.

These are symptoms. The root problem is that the financial market, once a helpful handmaiden to human exchanges of goods and services, has become the master. Even worse, it is the master of all the other collective intelligences. Google, Facebook, Amazon, Twitter, Uber, Airbnb, and all the other unicorn companies shaping the future are in its thrall as much as any one of us.

It is this hybrid artificial intelligence of today, not some fabled future artificial superintelligence, that we must bring under control.
