Chapter 4. Auto-Scaling in the Cloud

What Is Auto-Scaling?

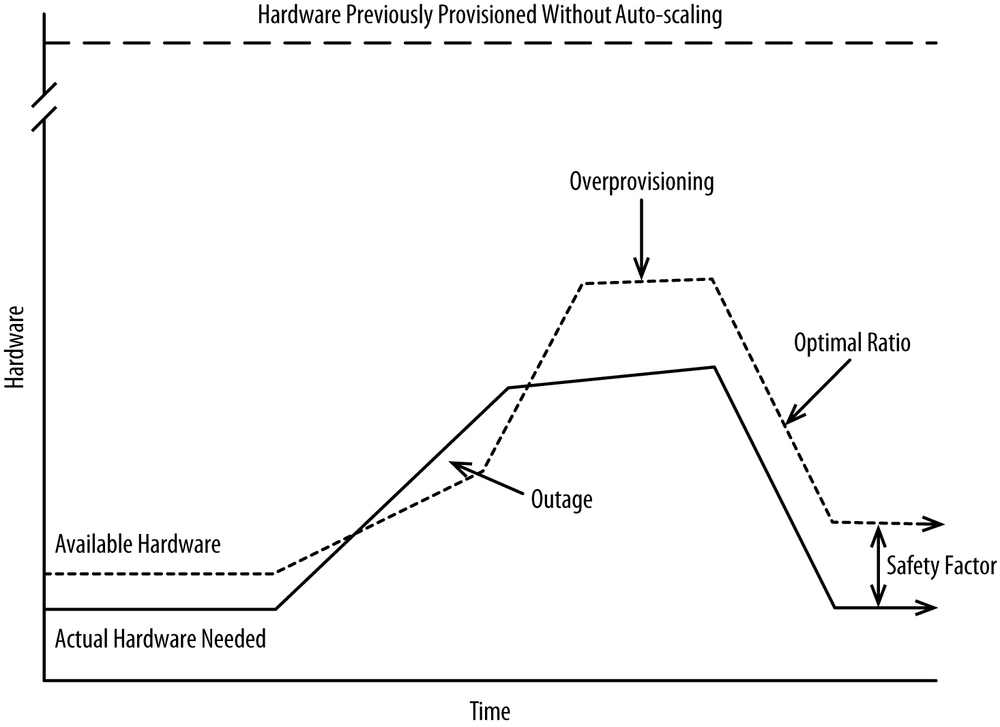

Auto-scaling, also called provisioning, is central to cloud. Without the elasticity provided by auto-scaling, you’re back to provisioning year-round for annual peaks. Every ecommerce platform deployed in a cloud should have a solution in place to scale up and down each of the various layers based on real-time demand, as shown in Figure 4-1.

An auto-scaling solution is not to be confused with initial provisioning. Initial provisioning is all about getting your environments set up properly, which includes setting up load balancers, setting up firewalls, configuring initial server images, and a number of additional one-time activities. Auto-scaling, on the other hand, is focused on taking an existing environment and adding or reducing capacity based on real-time needs. Provisioning and scaling may ultimately use the same provisioning mechanisms, but the purpose and scope of the two are entirely different.

The goal with auto-scaling is to provision enough hardware to support your traffic, while adhering to service-level agreements. If you provision too much, you waste money. If you provision too little, you suffer outages. A good solution will help you provision just enough, but not so much that you’re wasting money.

In this chapter, we’ll cover what needs to be provisioned, when to provision, and how to provision.

What Needs to Be Provisioned

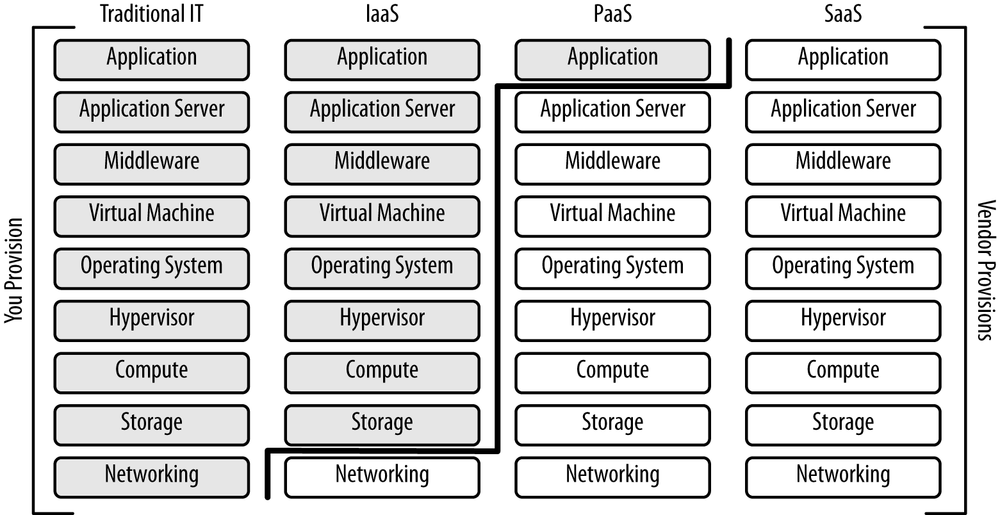

The focus of this chapter is provisioning hardware from an Infrastructure-as-a-Service platform because that has the least amount of provisioning built in, as shown in Figure 4-2.

When you’re using Infrastructure-as-a-Service, your lowest level of abstraction is infrastructure, typically a virtual server. You have to provision computing and storage capacity and then install your software on top of it. We’ll discuss the software installation in the next chapter. IaaS vendors handle the provisioning of lower-level resources, like network and firewalls. Infrastructure-as-a-Service vendors invest substantial resources to ensure that provisioning is as easy as possible, but given that you’re dealing with plain infrastructure, it’s hard to provision as intelligently as you can with Platform-as-a-Service.

Moving up the stack, Platform-as-a-Service offerings usually have tightly integrated provisioning tools as a part of their core value proposition. Your lowest level of abstraction is typically the application server, with the vendor managing the application server and everything below it. You define when you want more application servers provisioned, and your Platform-as-a-Service vendor will provision more application servers and everything below it in tandem. Provisioning is inherently difficult, and by doing much of it for you, these vendors offer value that you may be willing to pay extra for.

Finally, there’s Software-as-a-Service. Most Software-as-a-Service vendors offer nearly unlimited capacity. Think of common software offered as Software-as-a-Service: DNS, Global Server Load Balancing, Content Delivery Networks, and ratings and reviews. You just consume these services, and it’s up to the vendor to scale up their entire backend infrastructure to be able to handle your demands. That’s a big part of the value of Software-as-a-Service and is conceptually similar to the public utility example from the prior chapter. You just pull more power from the grid when you need it. This differs from Platform-as-a-Service and Infrastructure-as-a-Service, where you need to tell your vendor when you need more and they meter more out to you.

The downside of your vendors handling provisioning for you is that you inherently lose some control. The vendor-provided provisioning tools are fairly flexible and getting better, but your platform will always have unique requirements that may not be exactly met by the vendor-provided provisioning tools. The larger and more complicated your deployment, the more likely you’ll want to build something custom, as shown in Table 4-1.

| Criteria | IaaS | PaaS | SaaS |
| --- | --- | --- | --- |
| Need to provision | Yes | Yes | No |
| What you provision | Computing, storage | Platform (application) | N/A |
| Who’s responsible | You | Mostly vendor | Vendor |
| Flexibility of provisioning | Complete to limited | Limited | None |

Your goal in provisioning is to match the quantity of each resource to the level that is required, plus any safety factor you have in place. A safety factor is how much extra capacity you provision in order to avoid outages. This value is typically represented in the auto-scaling rules you define. If your application is CPU bound, you could decide to provision more capacity at 25%, 50%, 75%, or 95% aggregate CPU utilization. The longer you wait to provision, the higher the likelihood of suffering an outage due to an unanticipated burst of traffic.

What Can’t Be Provisioned

It’s far easier to provision more resources for an existing environment than it is to build out a new environment from scratch. Each environment you build out has fixed overhead—you need to configure a load balancer, DNS, a database, and various management consoles for your applications. You then need to seed your database and any files that your applications and middleware require. It is theoretically possible to script this all out ahead of time, but it’s not very practical.

Then there are a few resources that must be fully provisioned and scaled for peak ahead of time. For example, if you’re deploying your own relational database, it needs to be built out ahead of time. It’s hard to add database nodes for relational databases in real time, as entire database restarts and other configuration changes are often required. A database node for a relational database isn’t like an application server or web server, where you can just add another one and register it with the load balancer. Of course, you can use your vendor’s elastic database solutions, but some may be uncomfortable with sensitive data being in a multitenant database. Nonrelational databases, like NoSQL databases, can typically be scaled out on the fly because they have a shared-nothing architecture that doesn’t require whole database restarts.

Each environment should contain at least one of every server type. Think of each environment as being the size of a development environment to start. Then you can define auto-scaling policies for each tier. The fixed capacity for each environment can all be on dedicated hardware, as opposed to the hourly fees normally charged. Dedicated hardware is often substantially cheaper than per-hour pricing, but hardware requires up-front payment for a fixed term, usually a year. Again, this should be a fairly small footprint, consisting of only a handful of servers. The cost shouldn’t be much.

When to Provision

Ideally, you’d like to perfectly match the resources you’ve provisioned to the amount of traffic the system needs to support. It’s never that simple.

The problem with provisioning is that it takes time for each resource to become functional. It can take at least several minutes for the vendor to give you a functioning server with your image installed and the operating system booted. Then you have to install your software, which takes even more time. The installation of your software on newly provisioned hardware is covered in the next chapter.

Once you provision a resource, you can’t just make it live immediately. Some resources have dependencies and must be provisioned in a predefined order, or you’ll end up with an outage. For example, if you provision application servers before your messaging servers, you probably wouldn’t have enough messaging capacity and would suffer an outage. In this example, you’d have to add your application servers to the load balancer only after the messaging servers have been fully provisioned. The trick here is to provision in parallel, and then install your software in parallel, but add your application servers to the load balancer as a last step.
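The ordering described above can be sketched in a few lines of Python. This is only an illustration: `provision` is a hypothetical stand-in for whatever vendor call creates a server and installs your software, and the tier names are placeholders. The point is that the tiers are built in parallel, while registration with the load balancer happens only after every tier has reported ready.

```python
from concurrent.futures import ThreadPoolExecutor


def provision(tier: str) -> str:
    """Hypothetical stand-in for creating a server and installing software on it."""
    return f"{tier}-server"


def scale_out(tiers: list[str], register_last: str) -> list[str]:
    """Provision every tier in parallel, then register one tier with the load balancer."""
    events: list[str] = []
    with ThreadPoolExecutor() as pool:
        # map() yields results in input order and blocks until each tier is done.
        for server in pool.map(provision, tiers):
            events.append(f"ready:{server}")
    # Traffic reaches the new servers only after all of their dependencies exist.
    events.append(f"registered:{register_last}-server")
    return events


print(scale_out(["messaging", "application"], register_last="application"))
```

Keeping registration as a separate final step means a slow messaging server delays new capacity rather than causing an outage.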

Provisioning takes two forms:

- Proactive provisioning: provisioning ahead of time when you expect there will be traffic

- Reactive provisioning: provisioning in reaction to traffic

Reactive provisioning is what you should strive for, though it comes with the risk of outages if you can’t provision quickly enough to meet a rapid spike in traffic. The way to guard against that is to overprovision (start provisioning at, say, 50% CPU utilization, assuming your application is CPU bound) but that leads to waste. Likewise, you could provision at 95% CPU utilization, but you’ll incur costs there, too, because you’ll suffer periodic outages due to not being able to scale fast enough. It’s a balancing act that’s largely a function of how quickly you can provision and how quickly you’re hit with new traffic.
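A back-of-the-envelope calculation can make this balancing act concrete. The sketch below assumes utilization climbs linearly during a spike, which is a simplification, and both parameter names are made up for illustration. Under that assumption, the highest safe trigger is whatever leaves enough room for provisioning to finish before utilization reaches 100%.

```python
def max_trigger_threshold(growth_points_per_min: float, provision_minutes: float) -> float:
    """Highest utilization (%) at which scaling can start and still finish in time.

    Assumes utilization rises by a constant number of percentage points per minute.
    """
    threshold = 100.0 - growth_points_per_min * provision_minutes
    # A negative result means reactive provisioning alone cannot keep up.
    return max(threshold, 0.0)


print(max_trigger_threshold(growth_points_per_min=5, provision_minutes=10))
print(max_trigger_threshold(growth_points_per_min=2, provision_minutes=10))
```

With these illustrative numbers, a tier that takes 10 minutes to provision and gains 5 points of utilization per minute has to start scaling at 50%. If provisioning is faster or traffic grows more slowly, the trigger can be higher.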

Proactive Provisioning

In proactive provisioning, you provision resources in anticipation of increased traffic. You can estimate traffic based on the following:

Cyclical trends

- Daily

- Monthly

- Seasonally

Active marketing

- Promotions

- Promotional emails

- Social media campaigns

- Deep price discounts

- Flash sales

If you know traffic is coming and you know you’ll get hit with more traffic than you can provision for reactively, it makes sense to proactively add more capacity. For example, you could make sure that you double your capacity an hour before any big promotional emails are sent out.

To be able to proactively provision, you need a system, preferably a single one, that looks at both incoming traffic and how that traffic maps back to the utilization of each tier. Then you can put together a table mapping out each tier you have and how many units of that resource (typically uniformly sized servers) are required for various levels, as shown in Table 4-2.

| Resource | 10,000 concurrent customers | 20,000 concurrent customers | 30,000 concurrent customers |
| --- | --- | --- | --- |
| Application servers | 5 | 10 | 15 |
| Cache grid servers | 3 | 6 | 9 |
| Messaging servers | 2 | 4 | 6 |
| NoSQL database servers | 2 | 4 | 6 |

Ideally, your system will scale linearly. So if your last email advertising a 30% off promotion resulted in 30,000 concurrent customers, you know you’ll need to provision 15 application servers, 9 cache grid servers, 6 messaging servers, and 6 NoSQL database servers prior to that email going out again. A lot of this comes down to process. Unless you can reliably and quickly reactively provision, you’ll need to put safeguards in place to ensure that marketers aren’t driving an unexpectedly large amount of traffic without the system being ready for it.
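If the system really does scale linearly, the table lookup can be reduced to a small calculation. The following sketch takes the 10,000-customer column of Table 4-2 as its baseline; the function and constant names are illustrative, and the linear model is only as good as your own measurements.

```python
# Servers needed per 10,000 concurrent customers, taken from Table 4-2.
SERVERS_PER_10K = {
    "application": 5,
    "cache grid": 3,
    "messaging": 2,
    "nosql database": 2,
}


def servers_needed(concurrent_customers: int) -> dict[str, int]:
    """Scale the baseline linearly, rounding up to whole servers."""
    return {
        # Integer ceiling division avoids floating-point rounding surprises.
        tier: (per_10k * concurrent_customers + 9_999) // 10_000
        for tier, per_10k in SERVERS_PER_10K.items()
    }


print(servers_needed(30_000))
print(servers_needed(25_000))
```

Rounding up matters for forecasts that fall between columns: 25,000 concurrent customers works out to 12.5 application servers, so the sketch provisions 13.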

The costs of proactive provisioning are high because the whole goal is to add more capacity than is actually needed. Traffic must be forecasted, and the system must be scaled manually. All of this forecasting is time-consuming, but compared to the cost of an outage and the waste before the cloud, the costs are minuscule.

Reactive Provisioning

Reactive provisioning has substantial benefits over proactive provisioning and is what you should strive for. In today’s connected world, a link to your website can travel across the world to millions of people in a matter of minutes. Celebrities and thought leaders have 50 million or more followers on popular social networks. All it takes is for somebody with 50 million followers to broadcast a link to your website, and you’re in trouble. You can forecast most traffic but not all of it. The trend of social media–driven spikes in traffic will only accelerate as social media continues to proliferate.

In addition to avoiding outages, reactive provisioning has substantial cost savings. You provision only exactly what you need, when you need it.

Reactive provisioning is built on the premise of being able to accurately interrogate the health of each tier and then taking action if the reported data warrants it. For example, you could define rules as shown in Table 4-3.

| Tier | Metric | Threshold for action | Action to be taken |
| --- | --- | --- | --- |
| Application servers | CPU utilization | 50% | Add 5 more |
| Cache grid servers | Memory | 50% | Add 3 more |
| Messaging servers | Messages per second | 1,000 | Add 3 more |
| NoSQL database servers | CPU utilization | 50% | Add 2 more |

By adding more capacity at 50% utilization, you should always have roughly half of your capacity held in reserve, which is a healthy safety factor. We’ll get into the how part of provisioning shortly.
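To show how rules like those in Table 4-3 might be expressed as data, here is a minimal sketch. The `Rule` class, the metric names, and the choice to fire at or above the threshold are all assumptions made for illustration, not any vendor's format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    tier: str
    metric: str
    threshold: float
    add_servers: int


# Rules transcribed from Table 4-3 (metric names are illustrative).
RULES = [
    Rule("application", "cpu_percent", 50, 5),
    Rule("cache grid", "memory_percent", 50, 3),
    Rule("messaging", "messages_per_second", 1000, 3),
    Rule("nosql database", "cpu_percent", 50, 2),
]


def servers_to_add(readings: dict[str, float]) -> dict[str, int]:
    """Given one tier-wide reading per tier, return the servers to add per tier."""
    return {
        rule.tier: rule.add_servers
        for rule in RULES
        # Tiers with no reading are treated as idle (an assumption of this sketch).
        if readings.get(rule.tier, 0) >= rule.threshold
    }


print(servers_to_add({"application": 62, "cache grid": 40, "messaging": 1200}))
```

Keeping the rules as plain data makes them easy to review and to change without touching the logic that evaluates them.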

Sometimes your software’s limits will be expressed best by a custom metric. For example, a single messaging server may be able to handle only 1,000 messages per second. But how can you represent that by using off-the-shelf metrics, like network utilization and CPU utilization? Your monitoring tool will most likely allow you to define custom metrics that plug in to hooks you define.
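As a rough illustration of a custom metric, the sketch below counts messages over a sliding time window. The class name and interface are hypothetical. A real monitoring tool will have its own way of accepting custom values, so treat this only as the calculation you might feed into it.

```python
from collections import deque


class MessageRateMeter:
    """Tracks messages per second over a sliding window (hypothetical custom metric)."""

    def __init__(self, window_seconds: float = 1.0) -> None:
        self.window_seconds = window_seconds
        self._timestamps: deque[float] = deque()

    def record(self, timestamp: float) -> None:
        """Record one handled message; timestamps must arrive in increasing order."""
        self._timestamps.append(timestamp)

    def rate(self, now: float) -> float:
        """Messages per second over the window that ends at `now`."""
        cutoff = now - self.window_seconds
        # Drop messages that have aged out of the window.
        while self._timestamps and self._timestamps[0] <= cutoff:
            self._timestamps.popleft()
        return len(self._timestamps) / self.window_seconds


meter = MessageRateMeter(window_seconds=2.0)
for t in (0.0, 0.5, 1.0, 1.5):
    meter.record(t)
print(meter.rate(now=2.0))
```

Timestamps are passed in explicitly rather than read from the clock, which keeps the calculation easy to test.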

Auto-Scaling Solutions

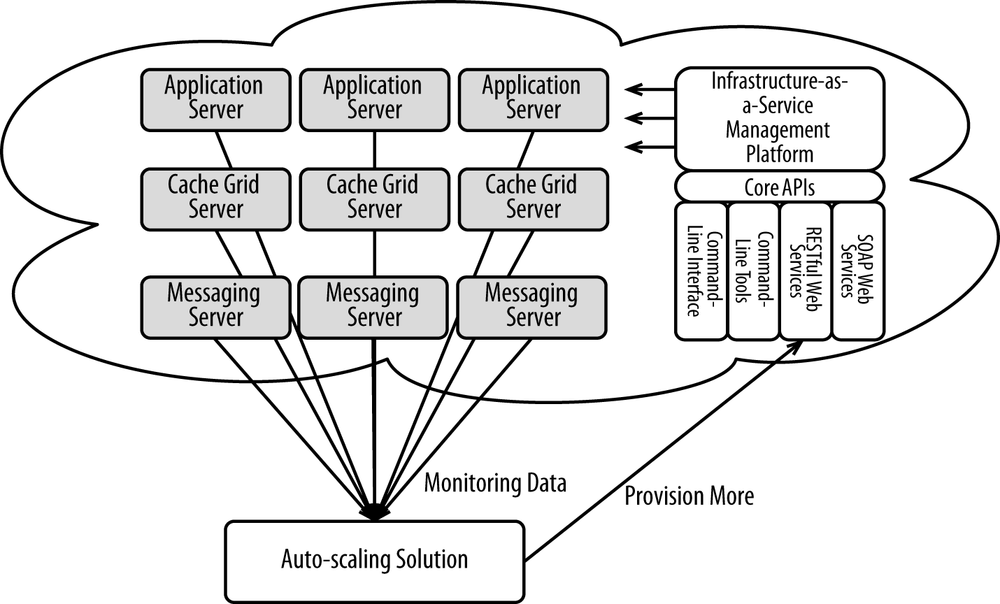

There are many auto-scaling solutions available, ranging from custom-developed solutions to solutions baked into the core of cloud vendors’ offerings to third-party solutions. All of them need to do basically the same thing as Figure 4-3 shows.

While these solutions all do basically the same thing, their goals, approach, and implementation all vary.

Any solution that’s used must be highly available and preferably deployed outside the cloud(s) that you’re using for your platform. You must provide full high availability within each data center this solution operates from, as well as high availability across data centers. If auto-scaling fails for any reason, your platform could suffer an outage. It’s important that all measures possible are employed to prevent outages.

Requirements for a Solution

The following sections show broadly what you need to do in order to auto-scale.

Define each tier that needs to be scaled

To begin with, you need to identify each tier that needs to be scaled out. Common tiers include application servers, cache grid servers, messaging servers, and NoSQL database servers. Within each tier exist numerous instance types, some of which can be scaled and some of which cannot. For example, most tiers have a single admin server that cannot and should not be scaled out. As mentioned earlier, some tiers are fixed and cannot be dynamically scaled out. For example, your relational database tier is fairly static and cannot be scaled out dynamically.

Define the dependencies between tiers

Once you identify each tier, you then have to define the dependencies between them. Some tiers require that other tiers are provisioned first. An earlier example used in this chapter was the addition of more application servers without first adding more messaging servers. There might be intricate dependencies between the tiers and between the components within each tier. Dependencies can cascade. For example, adding an application server may require the addition of a messaging server that may itself require another NoSQL database node.
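Cascading dependencies like these form a graph, and a topological sort gives a safe provisioning order. The sketch below uses `graphlib` from the Python standard library (Python 3.9 or later); the dependency map mirrors the example in the text and is illustrative only.

```python
from graphlib import TopologicalSorter

# Each tier maps to the set of tiers that must be provisioned before it.
DEPENDENCIES = {
    "application": {"messaging"},
    "messaging": {"nosql database"},
    "nosql database": set(),
}


def provisioning_order(dependencies: dict[str, set[str]]) -> list[str]:
    """Return the tiers in an order that satisfies every dependency."""
    return list(TopologicalSorter(dependencies).static_order())


print(provisioning_order(DEPENDENCIES))
```

If two tiers accidentally depend on each other, `static_order()` raises `graphlib.CycleError`, which is a useful early warning that the dependency definitions need another look.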

Define ratios between tiers

Once you’ve identified the dependencies between each tier, you’ll need to define the ratios between each tier if you ever want to be able to proactively provision capacity. For example, you may find that you need a cache grid server for every two application servers. When you proactively provision capacity, you need to make sure you provision each of the tiers in the right quantities. See Proactive Provisioning for more information.
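Ratios can be kept as exact fractions so that rounding always errs on the side of extra capacity. In this sketch, the cache grid ratio comes from the example above, while the messaging ratio is a made-up value for illustration.

```python
import math
from fractions import Fraction

# Servers required per application server.
RATIOS = {
    "cache grid": Fraction(1, 2),  # one cache grid server per two application servers
    "messaging": Fraction(2, 5),   # hypothetical ratio, for illustration only
}


def tier_counts(app_servers: int) -> dict[str, int]:
    """Derive the size of each dependent tier from the application server count."""
    counts = {"application": app_servers}
    for tier, ratio in RATIOS.items():
        # Round up: half a server is not something you can provision.
        counts[tier] = math.ceil(ratio * app_servers)
    return counts


print(tier_counts(5))
```

Using `Fraction` instead of floating-point numbers avoids cases where a value such as 2.0000000000000004 would round up to an unnecessary extra server.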

Define what to monitor

Next, for each tier you need to define the metrics that will trigger a scale up or scale down. For application servers, it may be CPU (if your app is CPU bound) or memory (if your app is memory bound). Other metrics include disk utilization, storage utilization, and network utilization. But, as discussed earlier, metrics may be entirely custom for each bit of software. What matters is that you know the bottlenecks of each tier and can accurately predict when that tier will begin to fail.

Monitor each server and aggregate data across each tier

Next, you need to monitor each server in that tier and report back to a centralized controller that is capable of aggregating that data so you know what’s going on across the whole tier, as opposed to what’s going on within each server. The utilization of any given server may be very high, but the tier overall may be OK. Not every server will have perfectly uniform utilization.
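The difference between per-server and tier-wide views is easy to see in a small sketch. The server names and readings below are invented, and a simple average is only one possible way to aggregate.

```python
from statistics import mean


def tier_utilization(cpu_by_server: dict[str, float]) -> float:
    """Average CPU utilization (%) across every server in one tier."""
    return mean(cpu_by_server.values())


def hot_servers(cpu_by_server: dict[str, float], limit: float) -> list[str]:
    """Servers whose own utilization exceeds the limit, sorted by name."""
    return sorted(name for name, cpu in cpu_by_server.items() if cpu > limit)


readings = {"app-1": 90.0, "app-2": 30.0, "app-3": 30.0}
print(tier_utilization(readings))
print(hot_servers(readings, limit=80.0))
```

Here one server is running hot, but the tier as a whole averages 50%, so a tier-wide rule would treat this as a load-balancing question rather than a capacity shortage.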

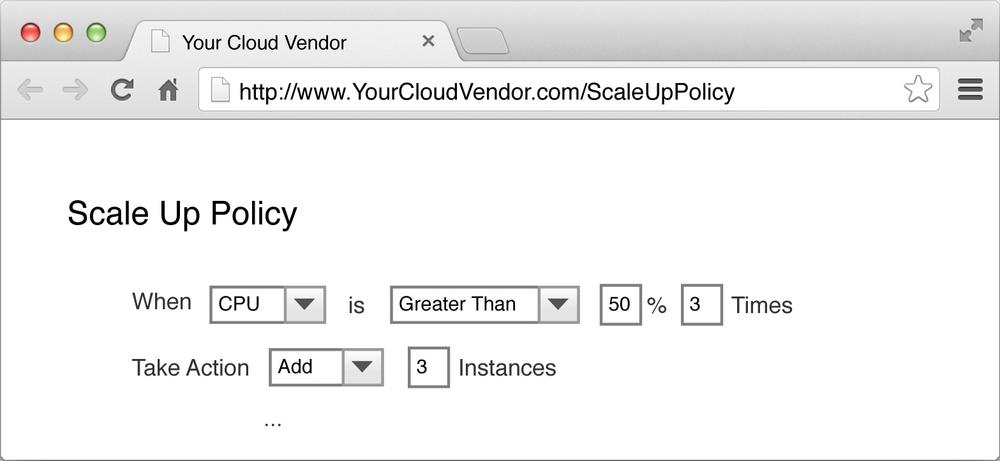

Define rules for scaling each tier

Now that all of the dependencies are in place, you need to define rules for scaling each tier. Rules are defined for each tier and follow standard if/then logic. The if should be tied back to tier-wide metrics, like CPU and memory utilization. Lower-utilization triggers increase safety but come at the expense of overprovisioning. The then clause could take any number and any combination of the following:

- Add capacity

- Reduce capacity

- Send an email notification

- Drop a message onto a queue

- Make an HTTP request

Usually, you’ll have a minimum of two rules for each tier:

- Add more capacity

- Reduce capacity

Figure 4-4 shows an example of how you define a scale-up rule.

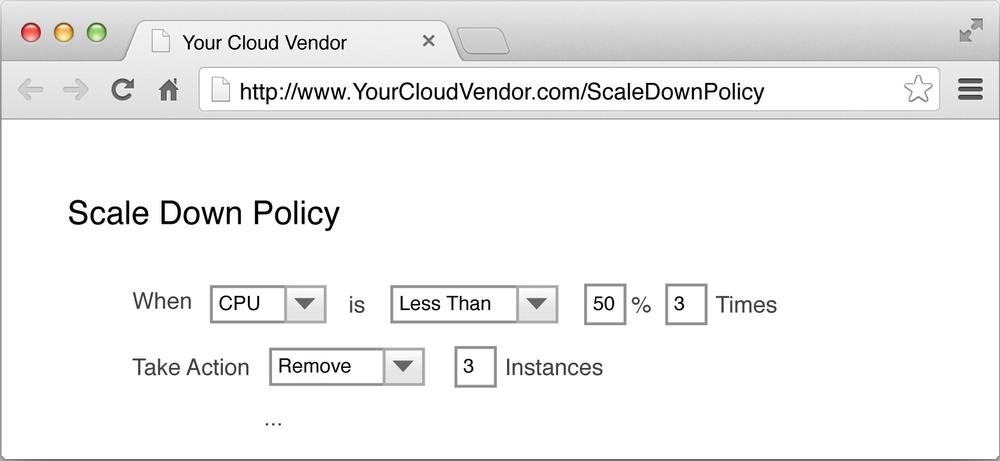

And the corresponding scale-down rule is shown in Figure 4-5.

If there are any exceptions, you should be notified by email or text message, so you can take corrective action.

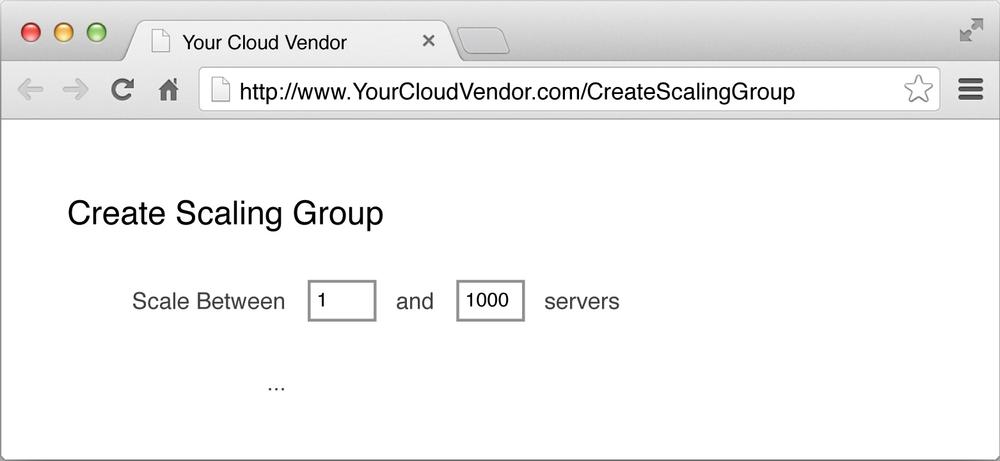

Your solution should offer safeguards to ensure that you don’t provision indefinitely. If the application you’ve deployed has a bug, such as a race condition, that immediately spikes CPU utilization, every newly added server will trip the same rule and trigger yet more provisioning. Always make sure to set limits as to how many servers can be deployed, as shown in Figure 4-6.

Revisit this periodically to make sure you don’t run into this limit as you grow.
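A scale-up rule, a scale-down rule, and a hard limit can be combined in one small function. All of the default values below (thresholds, step size, minimum, and maximum) are placeholders chosen for illustration and should be replaced with numbers from your own testing.

```python
def next_server_count(
    current: int,
    utilization: float,
    scale_up_at: float = 50.0,
    scale_down_at: float = 20.0,
    step: int = 1,
    minimum: int = 1,
    maximum: int = 20,
) -> int:
    """Apply scale-up and scale-down rules, then clamp to [minimum, maximum]."""
    if utilization >= scale_up_at:
        desired = current + step
    elif utilization <= scale_down_at:
        desired = current - step
    else:
        desired = current
    # The clamp is the safeguard: a runaway metric can never exceed `maximum`.
    return max(minimum, min(desired, maximum))


print(next_server_count(current=4, utilization=75.0))
print(next_server_count(current=20, utilization=99.0))
```

Leaving a gap between the scale-up and scale-down thresholds helps keep the tier from repeatedly adding and removing the same server when utilization hovers near a single cutoff.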

Building an Auto-Scaling Solution

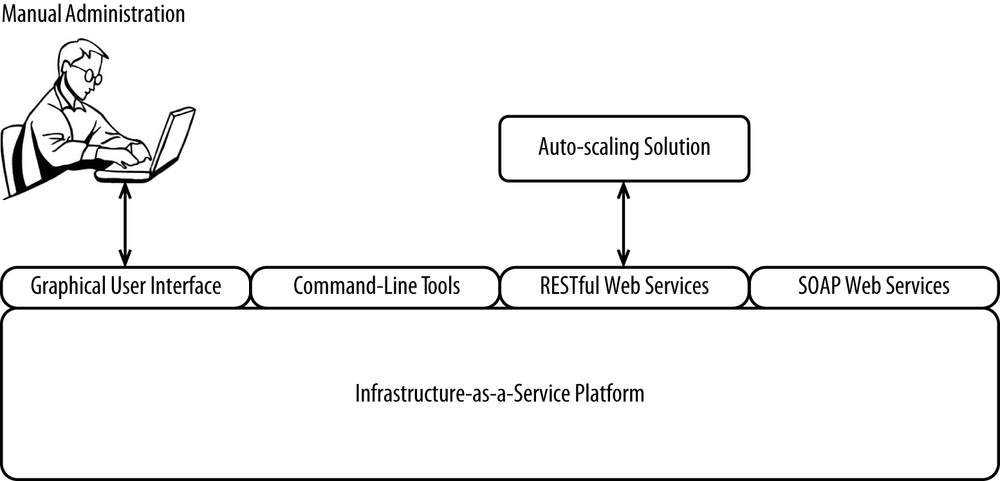

Whether you build or buy one of these solutions, you’ll be using the same APIs to do things like provision new servers, de-provision underutilized servers, register servers with load balancers, and apply security policies. Cloud vendors have made exposing these APIs and making them easy to use a cornerstone of their offerings.

Means of interfacing with the APIs often include the following:

- Graphical user interface

- Command-line tools

- RESTful web services

- SOAP web services

These APIs are what everybody uses to interface with clouds in much the same way that APIs are powering the move to omnichannel. An interface (whether it’s a graphical user interface, command-line tool, or some flavor of web service) is a more or less disposable means to interface with a core set of APIs, shown in Figure 4-7.

OpenStack is a popular open source cloud management stack that has a set of APIs at its core. All cloud vendors offer APIs of this nature. Here are some examples of how you would perform some common actions:

Create machine image (snapshot of filesystem)

    // HTTP POST to /images
    {
      "id": "production-ecommerce-application-page-server",
      "name": "Production eCommerce Application Page Server"
    }

Write machine image

    // HTTP PUT to /images/{image_id}/file
    Content-Type must be 'application/octet-stream'

List available flavors (hardware configurations for servers)

    // HTTP GET to /flavors
    {
      "flavors": [
        {"id": "1", "name": "m1.tiny"},
        {"id": "2", "name": "m1.small"},
        {"id": "3", "name": "m1.medium"},
        {"id": "4", "name": "m1.large"},
        {"id": "5", "name": "m1.xlarge"}
      ]
    }

Provision hardware

    // HTTP POST to /{tenant_id}/servers
    {
      "server": {
        "flavorRef": "/flavors/1",
        "imageRef": "/images/production-ecommerce-application-page-server",
        "metadata": {"JNDIName": "CORE"},
        "name": "eCommerce Server 221"
      }
    }

De-provision hardware

    // HTTP DELETE to /{tenant_id}/servers/{server_id}

You can string together these APIs and marry them with a monitoring tool to form a fairly comprehensive auto-scaling solution. Provided your vendor exposes all of the appropriate APIs, it’s not all that challenging to build a custom application to handle provisioning. You can also just use the solution your cloud vendor offers.
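As a starting point for a custom tool, the sketch below builds the provisioning request shown earlier using only the Python standard library. The base URL is a hypothetical placeholder, authentication is omitted, and the request is constructed but deliberately not sent. Check your vendor's API reference for the exact paths and headers it expects.

```python
import json
from urllib.request import Request

BASE_URL = "https://cloud.example.com/v2"  # hypothetical endpoint


def provision_request(tenant_id: str, name: str, flavor_id: str, image_id: str) -> Request:
    """Build (but do not send) the HTTP POST that asks for a new server."""
    body = {
        "server": {
            "flavorRef": f"/flavors/{flavor_id}",
            "imageRef": f"/images/{image_id}",
            "name": name,
        }
    }
    return Request(
        url=f"{BASE_URL}/{tenant_id}/servers",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = provision_request(
    tenant_id="tenant-1",
    name="eCommerce Server 221",
    flavor_id="1",
    image_id="production-ecommerce-application-page-server",
)
print(req.get_method(), req.full_url)
```

Passing the result to `urllib.request.urlopen` would actually send it. A monitoring loop that evaluates your scaling rules and then issues requests like this one is the core of a home-grown solution.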

Building versus Buying an Auto-Scaling Solution

Like most software, auto-scaling solutions can be built or bought, and you have to decide which direction you want to go. Generally speaking, if you can’t differentiate yourself by building something custom, you should choose a prebuilt solution of some sort, whether that’s a commercial solution sold by a third-party vendor or an integrated solution built into your cloud. What matters is that you have an extremely reliable, robust solution that can grow to meet your future needs.

Table 4-4 shows some reasons you would want to build or buy an auto-scaling solution.

| Build | Buy |
| --- | --- |
| Your IaaS vendor doesn’t offer an auto-scaling solution. | You want to get to market quickly. |
| You want more functionality and control than what a pre-built solution can offer. | Your IaaS vendor offers a solution that meets your needs. |
| You want to be able to provision across multiple clouds. | A third-party vendor offers a solution that meets your needs. |
| You have the resources (finances, people, time) to implement something custom. | You don’t have the resources to implement something custom. |

Generally speaking, it’s best to buy one of these solutions rather than build one. Find what works for you and adopt it. Anything is likely to be better than what you have today.

For more information on auto-scaling, read Cloud Architecture Patterns by Bill Wilder (O’Reilly).
